Notes: PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes

PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes

(Robotics: Science and Systems 2018)

Paper: https://arxiv.org/abs/1711.00199

Code and dataset: https://rse-lab.cs.washington.edu/projects/posecnn/

GitHub: https://github.com/yuxng/PoseCNN

Abstract: Estimating the 6D pose of known objects is important for robots to interact with the real world. The problem is challenging due to the variety of objects as well as the complexity of a scene caused by clutter and occlusions between objects. In this work, we introduce PoseCNN, a new Convolutional Neural Network for 6D object pose estimation. PoseCNN estimates the 3D translation of an object by localizing its center in the image and predicting its distance from the camera. The 3D rotation of the object is estimated by regressing to a quaternion representation. We also introduce a novel loss function that enables PoseCNN to handle symmetric objects. In addition, we contribute a large-scale video dataset for 6D object pose estimation named the YCB-Video dataset. Our dataset provides accurate 6D poses of 21 objects from the YCB dataset observed in 92 videos with 133,827 frames. We conduct extensive experiments on our YCB-Video dataset and the OccludedLINEMOD dataset to show that PoseCNN is highly robust to occlusions, can handle symmetric objects, and provides accurate pose estimation using only color images as input. When using depth data to further refine the poses, our approach achieves state-of-the-art results on the challenging OccludedLINEMOD dataset. Our code and dataset are available at https://rse-lab.cs.washington.edu/projects/posecnn/.

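1. Introduces PoseCNN, a new convolutional neural network for 6D object pose estimation.

2. PoseCNN estimates an object's 3D translation by localizing the object's center in the image and predicting its distance from the camera.

3. The object's 3D rotation is estimated by regressing to a quaternion representation.

4. A new loss function is also introduced that enables PoseCNN to handle symmetric objects.

5. Datasets: the YCB-Video dataset and the OccludedLINEMOD dataset.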

I. INTRODUCTION

Recognizing objects and estimating their poses in 3D has a wide range of applications in robotic tasks. For instance, recognizing the 3D location and orientation of objects is important for robot manipulation. It is also useful in human-robot interaction tasks such as learning from demonstration.

However, the problem is challenging due to the variety of objects in the real world. They have different 3D shapes, and their appearances on images are affected by lighting conditions, clutter in the scene and occlusions between objects.

Traditionally, the problem of 6D object pose estimation is tackled by matching feature points between 3D models and images [20, 25, 8]. However, these methods require rich textures on the objects in order to detect feature points for matching. As a result, they are unable to handle texture-less objects. With the emergence of depth cameras, several methods have been proposed for recognizing texture-less objects using RGB-D data [13, 3, 2, 26, 15]. For template-based methods [13, 12], occlusions significantly reduce the recognition performance. Alternatively, methods that learn to regress image pixels to 3D object coordinates in order to establish 2D-3D correspondences for 6D pose estimation [3, 4] cannot handle symmetric objects.

In this work, we propose a generic framework for 6D object pose estimation in which we attempt to overcome the limitations of existing methods. We introduce a novel Convolutional Neural Network (CNN) for end-to-end 6D pose estimation named PoseCNN. A key idea behind PoseCNN is to decouple the pose estimation task into different components, which enables the network to explicitly model the dependencies and independencies between them. Specifically, PoseCNN performs three related tasks, as illustrated in Fig. 1. First, it predicts an object label for each pixel in the input image. Second, it estimates the 2D pixel coordinates of the object center by predicting a unit vector from each pixel towards the center. Using the semantic labels, image pixels associated with an object vote on the object center location in the image. In addition, the network estimates the distance of the object center from the camera. Assuming known camera intrinsics, the estimated 2D object center and its distance let us recover the object's 3D translation T. Finally, the 3D rotation R is estimated by regressing convolutional features extracted inside the bounding box of the object to a quaternion representation of R. As we will show, 2D center voting followed by rotation regression to estimate R and T can be applied to both textured and texture-less objects. It is also robust to occlusions, since the network is trained to vote on object centers even when they are occluded.

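Fig. 1. We propose PoseCNN, a new network for 6D object pose estimation, which is trained to perform three tasks: semantic labeling, 3D translation estimation, and 3D rotation regression.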

Handling symmetric objects is another challenge for pose estimation, since different object orientations may generate identical observations. For instance, it is not possible to uniquely estimate the orientation of the red bowl or the wood block shown in Fig. 5. While pose benchmark datasets such as the OccludedLINEMOD dataset [17] consider a special symmetric evaluation for such objects, symmetries are typically ignored during network training. However, this can result in bad training performance since a network receives inconsistent loss signals, such as a high loss on an object orientation even though the estimation from the network is correct with respect to the symmetry of the object. Inspired by this observation, we introduce ShapeMatch-Loss, a new loss function that focuses on matching the 3D shape of an object. We will show that this loss function produces superior estimation for objects with shape symmetries.

We evaluate our method on the OccludedLINEMOD dataset [17], a benchmark dataset for 6D pose estimation. On this challenging dataset, PoseCNN achieves state-of-the-art results for both color-only and RGB-D pose estimation (we use depth images in the Iterative Closest Point (ICP) algorithm for pose refinement). To thoroughly evaluate our method, we additionally collected a large-scale RGB-D video dataset named YCB-Video, which contains 6D poses of 21 objects from the YCB object set [5] in 92 videos with a total of 133,827 frames. Objects in the dataset exhibit different symmetries and are arranged in various poses and spatial configurations, generating severe occlusions between them.

In summary, our work has the following key contributions:

• We propose a novel convolutional neural network for 6D object pose estimation named PoseCNN. Our network achieves end-to-end 6D pose estimation and is very robust to occlusions between objects.

• We introduce ShapeMatch-Loss, a new training loss function for pose estimation of symmetric objects.

• We contribute a large scale RGB-D video dataset for 6D object pose estimation, where we provide 6D pose annotations for 21 YCB objects.

This paper is organized as follows. After discussing related work, we introduce PoseCNN for 6D object pose estimation, followed by experimental results and a conclusion.

II. RELATED WORK

6D object pose estimation methods in the literature can be roughly classified into template-based methods and feature-based methods. In template-based methods, a rigid template is constructed and used to scan different locations in the input image. At each location, a similarity score is computed, and the best match is obtained by comparing these similarity scores [12, 13, 6]. In 6D pose estimation, a template is usually obtained by rendering the corresponding 3D model. Recently, 2D object detection methods have been used as template matching and augmented for 6D pose estimation, especially with deep learning-based object detectors [28, 23, 16, 29]. Template-based methods are useful in detecting texture-less objects. However, they cannot handle occlusions between objects very well, since the template will have a low similarity score if the object is occluded.

In feature-based methods, local features are extracted from either points of interest or every pixel in the image and matched to features on the 3D models to establish 2D-3D correspondences, from which 6D poses can be recovered [20, 25, 30, 22]. Feature-based methods are able to handle occlusions between objects. However, they require sufficient texture on the objects in order to compute the local features. To deal with texture-less objects, several methods have been proposed to learn feature descriptors using machine learning techniques [32, 10]. A few approaches directly regress each pixel to its 3D object coordinate to establish the 2D-3D correspondences [3, 17, 4]. However, 3D coordinate regression encounters ambiguities when dealing with symmetric objects.

In this work, we combine the advantages of both template-based methods and feature-based methods in a deep learning framework, where the network combines bottom-up pixel-wise labeling with top-down object pose regression. Recently, the 6D object pose estimation problem has received more attention thanks to the competition in the Amazon Picking Challenge (APC). Several datasets and approaches have been introduced for the specific setting in the APC [24, 35]. Our network has the potential to be applied to the APC setting as long as the appropriate training data is provided.

III. POSECNN

Given an input image, the task of 6D object pose estimation is to estimate the rigid transformation from the object coordinate system O to the camera coordinate system C. We assume that the 3D model of the object is available and that the object coordinate system is defined in the 3D space of the model. The rigid transformation here is an SE(3) transform consisting of a 3D rotation R and a 3D translation T. R specifies the rotation angles around the X-axis, Y-axis and Z-axis of the object coordinate system O, and T is the coordinate of the origin of O in the camera coordinate system C. In the imaging process, T determines the object's location and scale in the image, while R affects the object's image appearance according to its 3D shape and texture.

Since these two parameters have distinct visual properties, we propose a convolutional neural network architecture that internally decouples the estimation of R and T.
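The following is a minimal sketch in plain Python, with no external libraries, that makes the roles of R and T concrete. It applies the rigid transform to a model point as x_C = R x_O + T. The helper name `transform_point` and the example pose are hypothetical and are not part of PoseCNN.

```python
from typing import List, Sequence

Matrix3 = Sequence[Sequence[float]]


def transform_point(R: Matrix3, T: Sequence[float], x_obj: Sequence[float]) -> List[float]:
    """Map a 3D point from the object frame O to the camera frame C: x_C = R * x_O + T."""
    # Row i of R dotted with x_obj, then shifted by the translation component T[i].
    return [sum(R[i][j] * x_obj[j] for j in range(3)) + T[i] for i in range(3)]


# Hypothetical pose: 90-degree rotation about the object's Z-axis,
# with the object origin 1 m in front of the camera.
R_z90 = [[0.0, -1.0, 0.0],
         [1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0]]
T = [0.0, 0.0, 1.0]

print(transform_point(R_z90, T, [0.1, 0.0, 0.0]))  # [0.0, 0.1, 1.0]
print(transform_point(R_z90, T, [0.0, 0.0, 0.0]))  # [0.0, 0.0, 1.0], i.e. T itself
```

Transforming the object origin returns T unchanged, which matches the definition of T as the origin of O expressed in C.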

A. Overview of the Network

Fig. 2 illustrates the architecture of our network for 6D object pose estimation. The network contains two stages. The first stage consists of 13 convolutional layers and 4 max-pooling layers, which extract feature maps with different resolutions from the input image. This stage is the backbone of the network, since the extracted features are shared across all the tasks performed by the network. The second stage consists of an embedding step that embeds the high-dimensional feature maps generated by the first stage into low-dimensional, task-specific features. Then, the network performs three different tasks that lead to the 6D pose estimation, i.e., semantic labeling, 3D translation estimation, and 3D rotation regression, as described next.

B. Semantic Labeling

In order to detect objects in images, we resort to semantic labeling, where the network classifies each image pixel into an object class. Compared to recent 6D pose estimation methods that resort to object detection with bounding boxes [23, 16, 29], semantic labeling provides richer information about the objects and handles occlusions better.

The embedding step of the semantic labeling branch, as shown in Fig. 2, takes as inputs two feature maps with channel dimension 512 generated by the feature extraction stage. The resolutions of the two feature maps are 1/8 and 1/16 of the original image size, respectively. The network first reduces the channel dimension of both feature maps to 64 using two convolutional layers. Then it doubles the resolution of the 1/16 feature map with a deconvolutional layer. After that, the two feature maps are summed, and another deconvolutional layer increases the resolution by a factor of 8 to obtain a feature map with the original image size. Finally, a convolutional layer operates on this feature map and generates semantic labeling scores for the pixels. The output of this layer has n channels, where n is the number of semantic classes. In training, a softmax cross-entropy loss is applied to train the semantic labeling branch. In testing, a softmax function is used to compute the class probabilities of the pixels. The design of the semantic labeling branch is inspired by the fully convolutional network for semantic labeling in [19]. It is also used in our previous work on scene labeling [34].
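The resolution and channel bookkeeping described above can be traced with a small shape-only sketch. It contains no learned weights and assumes the input height and width are divisible by 16. The function name and the example class count of 22 (assumed here to be 21 YCB objects plus a background class) are illustrative assumptions.

```python
def semantic_branch_shapes(height: int, width: int, num_classes: int) -> list:
    """Trace (channels, height, width) through the semantic labeling branch (shapes only)."""
    assert height % 16 == 0 and width % 16 == 0, "assumes sizes divisible by 16"
    trace = []
    f8 = (512, height // 8, width // 8)      # backbone feature map at 1/8 resolution
    f16 = (512, height // 16, width // 16)   # backbone feature map at 1/16 resolution
    trace += [("conv feature 1/8", f8), ("conv feature 1/16", f16)]
    e8 = (64, f8[1], f8[2])                  # conv layer: 512 -> 64 channels
    e16 = (64, f16[1], f16[2])               # conv layer: 512 -> 64 channels
    up16 = (64, e16[1] * 2, e16[2] * 2)      # deconv: double the 1/16 map to 1/8
    assert up16 == e8, "maps must have equal shapes before element-wise summation"
    trace.append(("sum at 1/8", e8))
    full = (64, e8[1] * 8, e8[2] * 8)        # deconv: upsample 8x to full resolution
    trace.append(("upsampled x8", full))
    trace.append(("class scores", (num_classes, full[1], full[2])))  # conv: 64 -> n channels
    return trace


for name, shape in semantic_branch_shapes(480, 640, num_classes=22):
    print(f"{name:18s} {shape}")
```

For a 640x480 image, the two maps meet at 60x80 before the final 8x upsampling restores 480x640.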

C. 3D Translation Estimation

As illustrated in Fig. 3, the 3D translation $T = (T_x, T_y, T_z)^\mathsf{T}$ is the coordinate of the object origin in the camera coordinate system. A naive way of estimating T is to directly regress the image features to T. However, this approach does not generalize, since objects can appear at any location in the image. It also cannot handle multiple object instances of the same category. Therefore, we propose to estimate the 3D translation by localizing the 2D object center in the image and estimating the object's distance from the camera. To see why this works, suppose the projection of T on the image is $c = (c_x, c_y)^\mathsf{T}$. If the network can localize c in the image and estimate the depth $T_z$, then we can recover $T_x$ and $T_y$ from the following projection equation, assuming a pinhole camera:

$$
c_x = f_x \frac{T_x}{T_z} + p_x, \qquad c_y = f_y \frac{T_y}{T_z} + p_y, \tag{1}
$$

where $f_x$ and $f_y$ denote the focal lengths of the camera, and $(p_x, p_y)^\mathsf{T}$ is the principal point. If the object origin O is the centroid of the object, we call c the 2D center of the object.
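Inverting the pinhole projection $c_x = f_x T_x / T_z + p_x$, $c_y = f_y T_y / T_z + p_y$ gives $T_x = (c_x - p_x) T_z / f_x$ and $T_y = (c_y - p_y) T_z / f_y$. The sketch below shows this step. The function name and the intrinsic values are hypothetical and are chosen only for illustration.

```python
from typing import Tuple


def recover_translation(center: Tuple[float, float], t_z: float,
                        fx: float, fy: float, px: float, py: float) -> Tuple[float, float, float]:
    """Invert c_x = fx * T_x / T_z + p_x and c_y = fy * T_y / T_z + p_y for T_x and T_y."""
    cx, cy = center
    t_x = (cx - px) * t_z / fx  # horizontal pixel offset from the principal point, scaled by depth
    t_y = (cy - py) * t_z / fy  # vertical pixel offset from the principal point, scaled by depth
    return t_x, t_y, t_z


# Hypothetical intrinsics for a 640x480 camera (illustrative values, not from the dataset).
fx, fy, px, py = 500.0, 500.0, 320.0, 240.0
print(recover_translation((420.0, 190.0), 0.8, fx, fy, px, py))  # (0.16, -0.08, 0.8)
```

The depth $T_z$ is only carried through. This is why the network has to predict it separately from the 2D center.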

A straightforward way to localize the 2D object center is to directly detect the center point, as in existing keypoint detection methods [22, 7]. However, these methods would not work if the object center is occluded. Inspired by the traditional Implicit Shape Model (ISM), in which image patches vote for the object center for detection [18], we design our network to regress to the center direction for each pixel in the image. Specifically, for a pixel $p = (x, y)^\mathsf{T}$ on the image, it regresses to three variables:

$$
(x, y) \rightarrow (n_x, n_y, T_z). \tag{2}
$$

Note that instead of directly regressing to the displacement vector $c - p$, we design the network to regress to the unit-length vector $n = (n_x, n_y)^\mathsf{T} = \frac{c - p}{\lVert c - p \rVert}$, i.e., the 2D center direction. This vector is scale-invariant and therefore easier to train (as we verified experimentally).
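The sketch below computes the unit center-direction target from a pixel and a known 2D center. It illustrates the scale invariance: pixels at different distances along the same ray receive the same target. Giving a zero target to the pixel that coincides with the center is an assumption made here to avoid dividing by zero, and the function name is hypothetical.

```python
import math
from typing import Tuple


def center_direction(pixel: Tuple[float, float], center: Tuple[float, float]) -> Tuple[float, float]:
    """Unit vector n = (c - p) / ||c - p|| pointing from pixel p towards the 2D center c."""
    dx, dy = center[0] - pixel[0], center[1] - pixel[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return 0.0, 0.0  # assumption: the pixel at the center itself gets a zero target
    return dx / norm, dy / norm


# Pixels 5 and 300 pixels away from their centers, along the same ray direction.
print(center_direction((100.0, 100.0), (103.0, 104.0)))  # (0.6, 0.8)
print(center_direction((100.0, 100.0), (280.0, 340.0)))  # (0.6, 0.8)
```

A raw displacement target would be (3, 4) in one case and (180, 240) in the other. The unit vector removes that dependence on object size and distance.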

The center regression branch of our network (Fig. 2) uses the same architecture as the semantic labeling branch, except that the channel dimensions of the convolutional and deconvolutional layers are different. We embed the high-dimensional features into a 128-dimensional space instead of a 64-dimensional one, since this branch needs to regress to three variables for each object class. The last convolutional layer in this branch has channel dimension 3 × n, where n is the number of object classes. In training, a smoothed L1 loss function is applied for regression, as in [11].
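The next sketch shows the smoothed L1 loss on a single pixel's 3 × n output vector. The smoothed L1 form with beta = 1 is the one used in Fast R-CNN. The per-class channel layout, in which each class's [n_x, n_y, T_z] values are contiguous, and the choice to penalize only the ground-truth class's three channels are assumptions for illustration. They are not taken from the paper's code.

```python
from typing import Sequence


def smooth_l1(pred: float, target: float, beta: float = 1.0) -> float:
    """Smoothed L1 loss: quadratic for |error| < beta, linear beyond (beta = 1 is the Fast R-CNN form)."""
    diff = abs(pred - target)
    if diff < beta:
        return 0.5 * diff * diff / beta
    return diff - 0.5 * beta


def center_regression_loss(pred: Sequence[float], target: Sequence[float], class_id: int) -> float:
    """Loss for one pixel whose ground-truth class is `class_id`.

    Hypothetical channel layout: the 3 x n outputs are grouped per class as
    [n_x, n_y, T_z] for class 0, then class 1, and so on; only the ground-truth class is penalized.
    """
    start = 3 * class_id
    return sum(smooth_l1(p, t) for p, t in zip(pred[start:start + 3], target))


# Two classes; the pixel belongs to class 1, and only its T_z prediction is off by 0.5.
print(center_regression_loss([0.0, 0.0, 0.0, 0.6, 0.8, 1.5], [0.6, 0.8, 1.0], class_id=1))  # 0.125
```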

In order to find the 2D center c of an object, a Hough voting layer is designed and integrated into the network. The Hough voting layer takes the pixel-wise semantic labeling results and the center regression results as inputs. For each object class, it first computes a voting score for every location in the image. The voting score indicates how likely it is that the corresponding image location is the center of an object of that class. Specifically, each pixel of the object class adds votes for image locations along the ray predicted by the network (see Fig. 4). After processing all the pixels of the object class, we obtain the voting scores for all image locations. The object center is then selected as the location with the maximum score. When multiple instances of the same object class may appear in the image, we apply non-maximum suppression to the voting scores and then select locations with scores larger than a certain threshold.
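The voting procedure can be sketched on the CPU as follows, for a single object instance and without non-maximum suppression. The paper's layer runs on the GPU and is not this code. Stepping one pixel at a time with rounding is a simplifying assumption, and the names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]


def hough_vote(pixels: List[Pixel], directions: List[Tuple[float, float]],
               width: int, height: int) -> Tuple[Pixel, Dict[Pixel, int]]:
    """Each labeled pixel votes for every image location along its predicted center ray.

    Returns the location with the most votes and the full vote map (single instance, no NMS).
    """
    votes: Dict[Pixel, int] = defaultdict(int)
    max_steps = width + height  # enough unit steps for any ray to leave the image
    for (x, y), (nx, ny) in zip(pixels, directions):
        visited = set()
        for s in range(max_steps):
            u, v = round(x + s * nx), round(y + s * ny)
            if not (0 <= u < width and 0 <= v < height):
                break  # the ray has left the image
            if (u, v) not in visited:  # one vote per pixel per location
                visited.add((u, v))
                votes[(u, v)] += 1
    best = max(votes, key=votes.get)
    return best, dict(votes)


# Four object pixels around an (unlabeled, e.g. occluded) center at (10, 10).
pixels = [(0, 10), (20, 10), (10, 0), (10, 20)]
directions = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]
center, votes = hough_vote(pixels, directions, width=21, height=21)
print(center, votes[center])  # (10, 10) 4
```

The winning location (10, 10) is not itself one of the voting pixels. This is the property that lets the voting layer localize centers that are occluded.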

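Fig. 4. Illustration of Hough voting for object center localization: each pixel casts votes for image locations along the ray predicted by the network.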

After generating a set of object centers, we consider the pixels that vote for an object center to be the inliers of that center. The depth prediction of the center, $T_z$, is then simply computed as the mean of the depths predicted by the inliers. Finally, using Eq. 1, we can estimate the 3D translation T. In addition, the network generates the bounding box of the object as the 2D rectangle that bounds all the inliers, and this bounding box is used for 3D rotation regression.
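The inlier step can be sketched as follows. The text does not define exactly when a pixel "votes for" a center, so this sketch assumes a hypothetical angular tolerance between the predicted direction and the true direction to the center. The mean depth and the tight bounding box then follow the description above.

```python
import math


def center_inliers(pixels, directions, depths, center, max_angle_deg=10.0):
    """Keep pixels whose predicted direction points at `center` within an angular tolerance.

    The tolerance is a hypothetical choice for illustration; each inlier keeps its predicted depth.
    """
    cos_tol = math.cos(math.radians(max_angle_deg))
    inliers = []
    for (x, y), (nx, ny), z in zip(pixels, directions, depths):
        dx, dy = center[0] - x, center[1] - y
        dist = math.hypot(dx, dy)
        # Cosine between the predicted unit direction and the true direction to the center.
        if dist == 0.0 or (nx * dx + ny * dy) / dist >= cos_tol:
            inliers.append(((x, y), z))
    return inliers


def depth_and_box(inliers):
    """T_z = mean predicted depth of the inliers; box = tightest (x_min, y_min, x_max, y_max) around them."""
    t_z = sum(z for _, z in inliers) / len(inliers)
    xs = [p[0] for p, _ in inliers]
    ys = [p[1] for p, _ in inliers]
    return t_z, (min(xs), min(ys), max(xs), max(ys))


pixels = [(0, 10), (20, 10), (10, 0), (3, 17)]
directions = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]  # the last pixel points away
depths = [0.9, 1.0, 1.1, 5.0]
inliers = center_inliers(pixels, directions, depths, center=(10, 10))
print(depth_and_box(inliers))
```

The outlier with the implausible depth of 5.0 does not point at the center. It therefore affects neither $T_z$ nor the bounding box.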

D. 3D Rotation Regression

The lowest part of Fig. 2 shows the 3D rotation regression branch. Using the object bounding boxes predicted by the Hough voting layer, we utilize two RoI pooling layers [11] to "crop and pool" the visual features generated by the first stage of the network for 3D rotation regression. The pooled feature maps are added together and fed into three Fully-Connected (FC) layers. The first two FC layers have dimension 4096, and the last FC layer has dimension 4 × n, where n is the number of object classes. For each class, the last FC layer outputs a 3D rotation represented by a quaternion.
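The sketch below shows how one quaternion could be read out of the 4 × n output. It makes two assumptions that are not stated in the text. First, the four values for each class are contiguous. Second, the raw output is rescaled to unit length so that it is a valid rotation quaternion. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def select_quaternion(fc_output: Sequence[float], class_id: int) -> Tuple[float, ...]:
    """Take the 4 outputs belonging to `class_id` from the 4 x n vector and normalize them.

    Assumptions (hypothetical): outputs are grouped per class, and the raw regression output is
    rescaled to unit length so it is a valid rotation quaternion.
    """
    q = fc_output[4 * class_id: 4 * class_id + 4]
    norm = math.sqrt(sum(v * v for v in q))
    return tuple(v / norm for v in q)


# n = 2 classes; the detected object belongs to class 1.
print(select_quaternion([9.0, 9.0, 9.0, 9.0, 0.0, 0.0, 3.0, 4.0], class_id=1))  # (0.0, 0.0, 0.6, 0.8)
```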

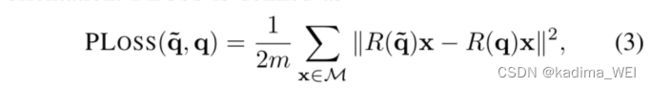

To train the quaternion regression, we propose two loss functions, one of which is specifically designed to handle symmetric objects. The first loss, called PoseLoss (PLOSS), operates in the 3D model space. It measures the average squared distance between points on the model in the correct pose and their corresponding points on the model in the estimated orientation. PLOSS is defined as

$$
\mathrm{PLOSS}(\tilde{q}, q) = \frac{1}{2m} \sum_{x \in \mathcal{M}} \lVert R(\tilde{q})\,x - R(q)\,x \rVert^2, \tag{3}
$$

where $\mathcal{M}$ denotes the set of 3D model points and $m$ is the number of points. $R(\tilde{q})$ and $R(q)$ denote the rotation matrices computed from the estimated quaternion and the ground truth quaternion, respectively. This loss has its unique minimum when the estimated orientation is identical to the ground truth orientation. Unfortunately, PLOSS does not handle symmetric objects appropriately, since a symmetric object can have multiple correct 3D rotations. Using such a loss function on symmetric objects unnecessarily penalizes the network for regressing to one of the alternative 3D rotations, which can produce inconsistent training signals.
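Below is a small pure-Python sketch of PLOSS, PLOSS = 1/(2m) Σ_x ||R(q̃)x − R(q)x||², applied to a toy point set that looks identical after a 180-degree turn about Z. The scalar-first quaternion order (w, x, y, z) and all names are assumptions for illustration. The example reproduces the problem described above: a rotation that is visually correct still receives a nonzero loss.

```python
from typing import List, Sequence

Vec = Sequence[float]


def quat_to_rot(q: Vec) -> List[List[float]]:
    """Rotation matrix of a unit quaternion q = (w, x, y, z); scalar-first order is an assumption."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def rotate(R, p: Vec) -> List[float]:
    """Matrix-vector product R p."""
    return [sum(R[i][j] * p[j] for j in range(3)) for i in range(3)]


def sq_dist(a: Vec, b: Vec) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def ploss(q_est: Vec, q_gt: Vec, points: Sequence[Vec]) -> float:
    """PLOSS = 1/(2m) * sum over model points x of ||R(q_est) x - R(q_gt) x||^2."""
    R_est, R_gt = quat_to_rot(q_est), quat_to_rot(q_gt)
    return sum(sq_dist(rotate(R_est, x), rotate(R_gt, x)) for x in points) / (2 * len(points))


# Toy model that looks identical after a 180-degree turn about Z: (x, y, z) -> (-x, -y, z).
points = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 0.5, 0.2), (0.0, -0.5, 0.2)]
identity = (1.0, 0.0, 0.0, 0.0)
flip_z = (0.0, 0.0, 0.0, 1.0)  # 180 degrees about Z
print(ploss(identity, identity, points))  # 0.0
print(ploss(flip_z, identity, points))    # 1.25, although the two poses look the same
```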

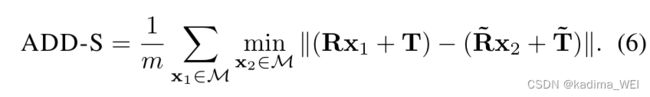

PLOSS could potentially be modified to handle symmetric objects by manually specifying object symmetries and then treating all correct orientations as ground truth options. Instead, we introduce ShapeMatch-Loss (SLOSS), a loss function that does not require the specification of symmetries. SLOSS is defined as

$$
\mathrm{SLOSS}(\tilde{q}, q) = \frac{1}{2m} \sum_{x_1 \in \mathcal{M}} \min_{x_2 \in \mathcal{M}} \lVert R(\tilde{q})\,x_1 - R(q)\,x_2 \rVert^2. \tag{4}
$$

As we can see, just like ICP, this loss measures the offset between each point on the model in the estimated orientation and the closest point on the ground truth model. SLOSS is minimized when the two 3D models match each other. In this way, SLOSS does not penalize rotations that are equivalent with respect to the 3D shape symmetry of the object.
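Here is a brute-force sketch of SLOSS that takes rotation matrices directly and performs an O(m²) closest-point search. This is fine for a toy point set, but it is not how a real training implementation would scale. The point set and names are illustrative assumptions. A symmetry-preserving rotation scores zero, while a non-symmetric rotation is still penalized.

```python
from typing import List, Sequence

Vec = Sequence[float]


def rotate(R, p: Vec) -> List[float]:
    """Matrix-vector product R p."""
    return [sum(R[i][j] * p[j] for j in range(3)) for i in range(3)]


def sq_dist(a: Vec, b: Vec) -> float:
    """Squared Euclidean distance between two 3D points."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def sloss(R_est, R_gt, points: Sequence[Vec]) -> float:
    """SLOSS = 1/(2m) * sum over x1 of min over x2 of ||R_est x1 - R_gt x2||^2.

    Brute-force O(m^2) closest-point search, only meant for small illustrative point sets.
    """
    gt = [rotate(R_gt, x) for x in points]
    total = 0.0
    for x1 in points:
        p = rotate(R_est, x1)
        total += min(sq_dist(p, g) for g in gt)  # match to the closest ground-truth point
    return total / (2 * len(points))


points = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 0.5, 0.2), (0.0, -0.5, 0.2)]
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
R_flip = [[-1, 0, 0], [0, -1, 0], [0, 0, 1]]  # 180 degrees about Z: a symmetry of this toy model
R_z90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]    # 90 degrees about Z: not a symmetry
print(sloss(R_flip, I3, points))  # 0.0
print(sloss(R_z90, I3, points) > 0.0)  # True
```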

IV. THE YCB-VIDEO DATASET

Object-centric datasets providing ground-truth annotations for object poses and/or segmentations are limited in size by the fact that the annotations are typically provided manually. For example, the popular LINEMOD dataset [13] provides manual annotations for around 1,000 images for each of the 15 objects in the dataset. While such a dataset is useful for evaluation of model-based pose estimation techniques, it is orders of magnitude smaller than a typical dataset for training state-of-the-art deep neural networks. One solution to this problem is to augment the data with synthetic images. However, care must be taken to ensure that performance generalizes between real and rendered scenes.

A. 6D Pose Annotation

To avoid annotating all the video frames manually, we manually specify the poses of the objects only in the first frame of each video. Using Signed Distance Function (SDF) representations of each object, we refine the pose of each object in the first depth frame. Next, the camera trajectory is initialized by fixing the object poses relative to one another and tracking the object configuration through the depth video. Finally, the camera trajectory and relative object poses are refined in a global optimization step.

B. Dataset Characteristics

The objects we used are a subset of 21 of the YCB objects [5] as shown in Fig. 5, selected due to high-quality 3D models and good visibility in depth. The videos are collected using an Asus Xtion Pro Live RGB-D camera in fast-cropping mode, which provides RGB images at a resolution of 640x480 at 30 FPS by capturing a 1280x960 image locally on the device and transmitting only the center region over USB. This results in higher effective resolution of RGB images at the cost of a lower FOV, but given the minimum range of the depth sensor this was an acceptable trade-off. The full dataset comprises 133,827 images, two full orders of magnitude larger than the LINEMOD dataset. For more statistics relating to the dataset, see Table I. Fig. 6 shows one annotation example in our dataset where we render the 3D models according to the annotated ground truth pose. Note that our annotation accuracy suffers from several sources of error, including the rolling shutter of the RGB sensor, inaccuracies in the object models, slight asynchrony between RGB and depth sensors, and uncertainties in the intrinsic and extrinsic parameters of the cameras.

V. EXPERIMENTS

A. Datasets

In our YCB-Video dataset, we use 80 videos for training and test on 2,949 key frames extracted from the remaining 12 test videos. We also evaluate our method on the OccludedLINEMOD dataset [17]. The authors of [17] selected one video with 1,214 frames from the original LINEMOD dataset [13] and annotated ground truth poses for eight objects in that video: Ape, Can, Cat, Driller, Duck, Eggbox, Glue and Holepuncher. There are significant occlusions between objects in this video sequence, which makes this dataset challenging. For training, we use the eight sequences from the original LINEMOD dataset corresponding to these eight objects. In addition, we generate 80,000 synthetic images for training on both datasets by randomly placing objects in a scene.

B. Evaluation Metrics

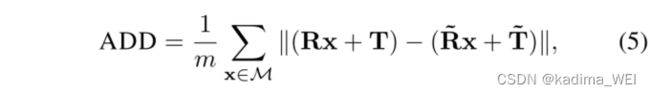

We adopt the average distance (ADD) metric proposed in [13] for evaluation. Given the ground truth rotation R and translation T and the estimated rotation $\tilde{R}$ and translation $\tilde{T}$, the average distance is the mean of the pairwise distances between the 3D model points transformed by the ground truth pose and by the estimated pose:

$$
\mathrm{ADD} = \frac{1}{m} \sum_{x \in \mathcal{M}} \lVert (Rx + T) - (\tilde{R}x + \tilde{T}) \rVert, \tag{5}
$$

where $\mathcal{M}$ denotes the set of 3D model points and $m$ is the number of points. The 6D pose is considered correct if the average distance is smaller than a predefined threshold. In the OccludedLINEMOD dataset, the threshold is set to 10% of the 3D model diameter. For symmetric objects such as the Eggbox and Glue, the matching between points is ambiguous for some views. Therefore, the average distance is computed using the closest point distance:

$$
\mathrm{ADD\text{-}S} = \frac{1}{m} \sum_{x_1 \in \mathcal{M}} \min_{x_2 \in \mathcal{M}} \lVert (Rx_1 + T) - (\tilde{R}x_2 + \tilde{T}) \rVert. \tag{6}
$$
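Both metrics and the 10%-of-diameter correctness test can be sketched in plain Python as follows. `math.dist` requires Python 3.8 or later. The toy point set, with a diameter of 2, and the function names are illustrative assumptions. For a pose that is rotated by a symmetry of the object, ADD reports a large error while the closest-point variant reports zero.

```python
import math


def transform(R, T, x):
    """Apply the pose (R, T) to a model point: R x + T."""
    return [sum(R[i][j] * x[j] for j in range(3)) + T[i] for i in range(3)]


def add_metric(R, T, R_est, T_est, points):
    """ADD: mean distance between corresponding model points under the GT and estimated poses."""
    return sum(math.dist(transform(R, T, x), transform(R_est, T_est, x)) for x in points) / len(points)


def add_s_metric(R, T, R_est, T_est, points):
    """Closest-point variant: each GT-posed point is matched to the nearest estimated-pose point."""
    gt = [transform(R, T, x) for x in points]
    est = [transform(R_est, T_est, x) for x in points]
    return sum(min(math.dist(g, e) for e in est) for g in gt) / len(points)


def is_correct(distance, diameter, fraction=0.1):
    """OccludedLINEMOD criterion: correct if the average distance is below 10% of the model diameter."""
    return distance < fraction * diameter


points = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 0.5, 0.2), (0.0, -0.5, 0.2)]  # diameter 2
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
R_flip = [[-1, 0, 0], [0, -1, 0], [0, 0, 1]]  # a symmetry of the toy model
T = (0.0, 0.0, 1.0)
add = add_metric(I3, T, R_flip, T, points)
add_s = add_s_metric(I3, T, R_flip, T, points)
print(add, add_s)  # 1.5 0.0
print(is_correct(add, 2.0), is_correct(add_s, 2.0))  # False True
```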

Our design of the loss functions for rotation regression is motivated by these two evaluation metrics. A fixed threshold in computing pose accuracy cannot reveal how a method performs on the poses that are incorrect with respect to that threshold. Therefore, we vary the distance threshold in evaluation. This lets us plot an accuracy-threshold curve and compute the area under the curve for pose evaluation.
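The accuracy-threshold curve and its area can be sketched as follows. Per-pose average distances are swept over thresholds from 0 up to a maximum, and the area is computed with the trapezoidal rule and normalized to [0, 1]. The maximum threshold and the number of steps are hypothetical parameters, and this is not the paper's evaluation code.

```python
def accuracy_threshold_auc(distances, max_threshold, num_steps=100):
    """Area under the accuracy-vs-threshold curve, normalized to [0, 1] (trapezoidal rule).

    `distances` are per-pose average distances (e.g. ADD values); a pose counts as correct
    at threshold t when its distance is strictly below t.
    """
    thresholds = [max_threshold * i / num_steps for i in range(num_steps + 1)]
    accuracy = [sum(d < t for d in distances) / len(distances) for t in thresholds]
    area = sum((accuracy[i] + accuracy[i + 1]) / 2 * (thresholds[i + 1] - thresholds[i])
               for i in range(num_steps))
    return area / max_threshold


# Hypothetical ADD values in meters for three test poses, swept up to 0.1 m.
print(accuracy_threshold_auc([0.0, 0.02, 0.2], max_threshold=0.1))
```

Unlike a single fixed threshold, a pose with error 0.02 contributes more area than one with error 0.09, even though both pass a 0.1 cutoff.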

Instead of computing distances in 3D space, we can project the transformed points onto the image and then compute the pairwise distances in image space. This metric, called the reprojection error, is widely used for 6D pose estimation when only color images are available.
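The reprojection error can be sketched as follows. Model points are projected with the pinhole model under the ground truth pose and under the estimated pose, and the mean 2D distance in pixels is reported. The intrinsics and the function names are hypothetical.

```python
import math


def project(R, T, x, fx, fy, px, py):
    """Pinhole projection of model point x under pose (R, T) into pixel coordinates."""
    Xc = [sum(R[i][j] * x[j] for j in range(3)) + T[i] for i in range(3)]  # camera-frame point
    return fx * Xc[0] / Xc[2] + px, fy * Xc[1] / Xc[2] + py


def reprojection_error(R, T, R_est, T_est, points, intrinsics):
    """Mean 2D distance (pixels) between points projected with the GT and estimated poses."""
    total = 0.0
    for x in points:
        u1, v1 = project(R, T, x, *intrinsics)
        u2, v2 = project(R_est, T_est, x, *intrinsics)
        total += math.hypot(u1 - u2, v1 - v2)
    return total / len(points)


I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
intrinsics = (500.0, 500.0, 320.0, 240.0)  # hypothetical (fx, fy, px, py)
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0)]
# A 1 cm sideways translation error at 1 m depth shifts every point by about 5 pixels.
err = reprojection_error(I3, (0.0, 0.0, 1.0), I3, (0.01, 0.0, 1.0), points, intrinsics)
print(round(err, 6))  # 5.0
```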

C. Implementation Details

PoseCNN is implemented using the TensorFlow library [1]. The Hough voting layer is implemented on the GPU, as in [31]. In training, the parameters of the first 13 convolutional layers in the feature extraction stage and the first two FC layers in the 3D rotation regression branch are initialized with the VGG16 network [27] trained on ImageNet [9]. No gradient is back-propagated through the Hough voting layer. Stochastic Gradient Descent (SGD) with momentum is used for training.

D. Baselines

3D object coordinate regression network. Since state-of-the-art 6D pose estimation methods mostly rely on regressing image pixels to 3D object coordinates [3, 4, 21], we implement a variation of our network for 3D object coordinate regression for comparison. In this network, instead of regressing to the center direction and depth as in Fig. 2, we regress each pixel to its 3D coordinate in the object coordinate system. We can use the same architecture, since each pixel still regresses to three variables for each class. We then remove the 3D rotation regression branch. Using the semantic labeling results and the 3D object coordinate regression results, the 6D pose is recovered using pre-emptive RANSAC as in [4].

三维物体坐标回归网络。由于最先进的6D姿态估计方法主要依赖于将图像像素回归到3D对象坐标[3,4,21],因此我们实现了用于比较的3D对象坐标回归网络的变体。在这个网络中,我们没有像图2那样回归到中心方向和深度,而是将每个像素回归到物体坐标系中的3D坐标。我们可以使用相同的架构,因为每个像素仍然回归到每个类的三个变量。然后我们移除3D旋转回归分支。使用语义标记结果和3D对象坐标回归结果,如[4]所示,使用先发制人的RANSAC恢复6D姿态。

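The pre-emptive RANSAC schedule used by this baseline works in two stages. First, it generates many pose hypotheses from minimal sets of correspondences. Second, it repeatedly scores the surviving hypotheses on a fresh random batch of pixels and discards the worse half until one remains. The sketch below illustrates this schedule. To keep it self-contained, it swaps in 3D-3D correspondences (regressed object coordinates paired with observed camera-frame points) solved with the Kabsch algorithm. With color images only, hypotheses would instead come from 2D-3D correspondences and a PnP solver. All names and hyperparameters here are hypothetical.

```python
import numpy as np

def kabsch(src, dst):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, cd - R @ cs

def preemptive_ransac(obj_coords, cam_points, num_hyp=64, batch=32,
                      inlier_thresh=0.01, seed=None):
    """Pre-emptive RANSAC over 3D-3D correspondences (hypothetical sketch)."""
    rng = np.random.default_rng(seed)
    n = len(obj_coords)
    # 1) Hypotheses from minimal sets of 3 correspondences
    hyps = []
    for _ in range(num_hyp):
        idx = rng.choice(n, size=3, replace=False)
        hyps.append(kabsch(obj_coords[idx], cam_points[idx]))
    scores = np.zeros(num_hyp)
    alive = np.arange(num_hyp)
    # 2) Score survivors on a fresh batch, keep the better half, repeat
    while len(alive) > 1:
        sample = rng.choice(n, size=min(batch, n), replace=False)
        for h in alive:
            R, t = hyps[h]
            pred = obj_coords[sample] @ R.T + t
            resid = np.linalg.norm(pred - cam_points[sample], axis=1)
            scores[h] += np.count_nonzero(resid < inlier_thresh)
        ranked = alive[np.argsort(-scores[alive], kind="stable")]
        alive = ranked[: max(1, len(alive) // 2)]
    return hyps[alive[0]]
```

The appeal of the pre-emptive schedule is that most hypotheses are discarded after being scored on only a small batch, so the total scoring cost stays bounded. A single bad coordinate in a minimal set, however, produces a hypothesis that can be far from the true pose.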
Pose refinement. The 6D pose estimated by our network can be refined when depth is available. We use the Iterative Closest Point (ICP) algorithm to refine the 6D pose. Specifically, we employ ICP with projective data association and a point-to-plane residual term. We render a predicted point cloud given the 3D model and an estimated pose, and assume that each observed depth value is associated with the predicted depth value at the same pixel location. The residual for each pixel is then the smallest distance from the observed 3D point to the plane defined by the rendered 3D point and its normal. Points with residuals above a specified threshold are rejected, and the remaining residuals are minimized using gradient descent. Semantic labels from the network are used to crop the observed points from the depth image. Since ICP is prone to getting stuck in local minima, we refine multiple poses obtained by perturbing the pose estimated by the network, and then select the best refined pose using the alignment metric proposed in [33].

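Here is a minimal sketch of one point-to-plane refinement step. It assumes projective data association has already paired each observed point with the rendered point and normal at the same pixel. The residual is the signed distance `n·(p - q)` from observed point `p` to the plane through rendered point `q`. Associations above a threshold are rejected. Plain gradient descent then finds a linearized rigid motion (small rotation vector `w`, translation `t`) applied to the observed points. The function name, threshold and iteration count are hypothetical. A full implementation would re-render, re-associate and iterate, and would start from several perturbed poses as described above.

```python
import numpy as np

def point_to_plane_gd(observed, rendered, normals, reject_thresh=0.02, iters=100):
    """Linearized point-to-plane alignment solved by gradient descent.

    observed, rendered, normals: (N, 3) arrays; row i of each comes from the
    same pixel (projective data association). Returns a small rotation vector
    w and translation t that move the observed points onto the rendered
    planes: p -> p + w x p + t.
    """
    # Signed point-to-plane residual for each pixel
    r0 = np.einsum("ij,ij->i", normals, observed - rendered)
    keep = np.abs(r0) < reject_thresh          # reject bad associations
    if not np.any(keep):
        return np.zeros(3), np.zeros(3)
    p, n, r0 = observed[keep], normals[keep], r0[keep]
    # d r_i / d(w, t) = [p_i x n_i, n_i], since n.(w x p) = w.(p x n)
    J = np.hstack([np.cross(p, n), n])         # (M, 6) Jacobian
    x = np.zeros(6)
    # Step 1/L with L = 2 * largest eigenvalue of J^T J keeps descent monotone
    step = 1.0 / (2.0 * np.linalg.eigvalsh(J.T @ J).max())
    for _ in range(iters):
        grad = 2.0 * J.T @ (J @ x + r0)        # gradient of the sum of squares
        x -= step * grad
    return x[:3], x[3:]
```

The motion is applied to the observed cloud, so the correction to the object pose is its inverse.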
E. Analysis on the Rotation Regression Losses

We first conduct experiments to analyze the effect of the two loss functions for rotation regression on symmetric objects. Fig. 7 shows rotation error histograms for two symmetric objects in the YCB-Video dataset, the wood block and the large clamp, for each of the two training losses. With the PLOSS, the rotation errors for both objects span the full range from 0 to 180 degrees. These two histograms indicate that the network is confused by the symmetric objects. In contrast, the SLOSS histograms concentrate on 180 degrees for the wood block and on 0 and 180 degrees for the large clamp. Both objects are symmetric with respect to a 180-degree rotation around their coordinate axes.

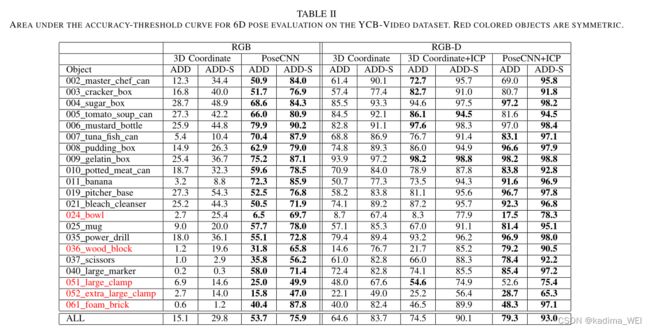

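The effect in Fig. 7 can be reproduced on a toy object. The sketch uses the loss definitions from the paper's method section:

- PLOSS = 1/(2m) · sum over x of ||R(q_est) x - R(q) x||^2
- SLOSS = 1/(2m) · sum over x1 of min over x2 of ||R(q_est) x1 - R(q) x2||^2

The toy object is the 8 corners of a box, which is symmetric under a 180-degree rotation about its z-axis. The (w, x, y, z) quaternion convention and the function names are assumptions.

```python
import numpy as np

def quat_to_rot(q):
    """Rotation matrix from a quaternion q = (w, x, y, z) (normalized here)."""
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y)],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)],
    ])

def ploss(q_est, q_gt, points):
    """PLOSS: half the mean squared distance between corresponding points."""
    diff = points @ quat_to_rot(q_est).T - points @ quat_to_rot(q_gt).T
    return 0.5 * np.mean(np.sum(diff ** 2, axis=1))

def sloss(q_est, q_gt, points):
    """SLOSS: each estimated point is matched to its closest ground-truth point."""
    a = points @ quat_to_rot(q_est).T
    b = points @ quat_to_rot(q_gt).T
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=2)  # (m, m)
    return 0.5 * np.mean(d2.min(axis=1))

def rotation_error_deg(q_est, q_gt):
    """Angle of the relative rotation between two quaternions, in degrees."""
    dot = abs(np.dot(q_est, q_gt)) / (np.linalg.norm(q_est) * np.linalg.norm(q_gt))
    return np.degrees(2 * np.arccos(np.clip(dot, -1.0, 1.0)))
```

For the 180-degree flip, the rotation error is 180 degrees and PLOSS is large. SLOSS is zero, because every rotated corner lands exactly on another corner. A network trained with PLOSS is therefore penalized for predictions that look identical, which is consistent with the spread-out PLOSS histograms.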
F. Results on the YCB-Video Dataset

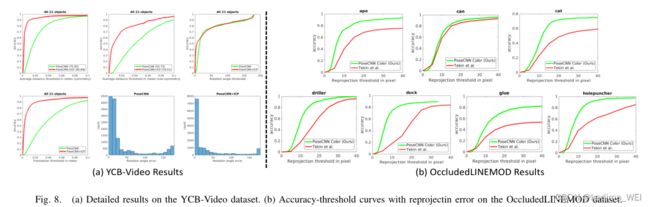

Table II and Fig. 8(a) present a detailed evaluation of all 21 objects in the YCB-Video dataset. We report the area under the accuracy-threshold curve for both the ADD and ADD-S metrics. To obtain the curve, we vary the threshold on the average distance and compute the pose accuracy at each threshold. The maximum threshold is set to 10 cm.

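The two distance metrics behind Table II can be written as below, following the paper's definitions. ADD averages the distance between corresponding model points under the estimated and ground-truth poses. ADD-S matches each ground-truth-transformed point to its closest estimated point, so symmetric ambiguities are not penalized. The function names are hypothetical. The brute-force pairwise distance is only practical for small point sets.

```python
import numpy as np

def transform(points, R, t):
    """Apply pose (R, t) to (N, 3) model points."""
    return points @ R.T + t

def add_metric(points, R_est, t_est, R_gt, t_gt):
    """ADD: mean distance between corresponding transformed model points."""
    diff = transform(points, R_est, t_est) - transform(points, R_gt, t_gt)
    return np.linalg.norm(diff, axis=1).mean()

def add_s_metric(points, R_est, t_est, R_gt, t_gt):
    """ADD-S: mean distance from each ground-truth point to its closest estimated point."""
    gt = transform(points, R_gt, t_gt)
    est = transform(points, R_est, t_est)
    dists = np.linalg.norm(gt[:, None, :] - est[None, :, :], axis=2)  # (m, m)
    return dists.min(axis=1).mean()
```

These per-frame values are what gets thresholded, from 0 up to 10 cm, to build the accuracy-threshold curves described above.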
We can see that:

i) Using only color images, our network significantly outperforms the 3D coordinate regression network combined with the pre-emptive RANSAC algorithm for 6D pose estimation. When the 3D coordinate regression results contain errors, the estimated 6D pose can drift far from the ground truth pose. In our network, by contrast, center localization helps constrain the 3D translation estimate even when the object is occluded.

ii) Refining the poses with ICP significantly improves performance. When depth images are used, PoseCNN with ICP outperforms the 3D coordinate regression network. The initial pose is critical for ICP convergence, and PoseCNN provides better initial 6D poses for ICP refinement.

iii) Some objects are more difficult to handle, such as the tuna fish can, which is small and has little texture. The network is also confused by the large clamp and the extra large clamp, since they have the same appearance. The 3D coordinate regression network does not handle symmetric objects such as the banana and the bowl very well.

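Center localization constrains translation because of the pinhole model used by PoseCNN. The inputs are:

- the 2D center (c_x, c_y)
- the predicted center distance T_z
- the focal lengths (f_x, f_y)
- the principal point (p_x, p_y)

From these, T_x = (c_x - p_x) · T_z / f_x and T_y = (c_y - p_y) · T_z / f_y. In the minimal sketch below, the function name is hypothetical and `K` is assumed to be a standard 3×3 intrinsic matrix.

```python
import numpy as np

def translation_from_center(center_uv, depth, K):
    """Recover T = (T_x, T_y, T_z) from the 2D center and predicted depth T_z.

    Inverts c_x = f_x * T_x / T_z + p_x and c_y = f_y * T_y / T_z + p_y.
    """
    fx, fy = K[0, 0], K[1, 1]
    px, py = K[0, 2], K[1, 2]
    cx, cy = center_uv
    return np.array([(cx - px) * depth / fx, (cy - py) * depth / fy, depth])
```

A center error of Δ pixels shifts T_x by only Δ · T_z / f_x. With illustrative values f_x = 500 and T_z = 0.8 m, one pixel corresponds to 1.6 mm. So an accurate center, even one recovered through occlusion, pins down the lateral translation well.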
Fig. 9 displays some 6D pose estimation results on the YCB-Video dataset. The center prediction is quite accurate even when the center is occluded by another object. Using color only, our network already provides good 6D pose estimates, and ICP refinement further improves their accuracy.

G. Results on the OccludedLINEMOD Dataset

The OccludedLINEMOD dataset is challenging due to significant occlusions between objects. We first conduct experiments using color images only. Fig. 8(b) shows the accuracy-threshold curves with reprojection error for 7 objects in the dataset. There we compare PoseCNN with [29], which achieves the current state-of-the-art result on this dataset using color images as input. Our method outperforms [29] by a large margin, especially when the reprojection error threshold is small. These results show that PoseCNN is able to correctly localize the target object even under severe occlusions.

By refining the poses with depth images in ICP, our method also outperforms state-of-the-art methods that use RGB-D data as input. Table III summarizes the pose estimation accuracy on the OccludedLINEMOD dataset. The largest improvement comes from the two symmetric objects “Eggbox” and “Glue”. By using our ShapeMatch-Loss for training, PoseCNN is able to correctly estimate the 6D poses of these two objects up to their symmetry. We also present the results of PoseCNN using color only in Table III. These accuracies are much lower, since the threshold here is usually smaller than 2 cm. It is very challenging for color-based methods to obtain 6D poses within such a small threshold when there are occlusions between objects. Fig. 9 shows two examples of 6D pose estimation results on the OccludedLINEMOD dataset.

VI. CONCLUSIONS

In this work, we introduce PoseCNN, a convolutional neural network for 6D object pose estimation. PoseCNN decouples the estimation of 3D rotation and 3D translation. It estimates the 3D translation by localizing the object center and predicting the center distance. By regressing each pixel to a unit vector pointing toward the object center, the center can be estimated robustly, independent of scale. More importantly, pixels vote for the object center even when it is occluded by other objects. The 3D rotation is predicted by regressing to a quaternion representation. Two new loss functions are introduced for rotation estimation, with the ShapeMatch-Loss designed for symmetric objects. As a result, PoseCNN is able to handle occlusions and symmetric objects in cluttered scenes. We also introduce a large-scale video dataset for 6D object pose estimation.

Our results are extremely encouraging in that they indicate that it is feasible to accurately estimate the 6D pose of objects in cluttered scenes using vision data only. This opens the path to using cameras whose resolution and field of view go far beyond those of currently used depth camera systems. We note that the SLOSS sometimes results in local minima in the pose space, similar to ICP. It would be interesting to explore more efficient ways to handle symmetric objects in 6D pose estimation in the future.