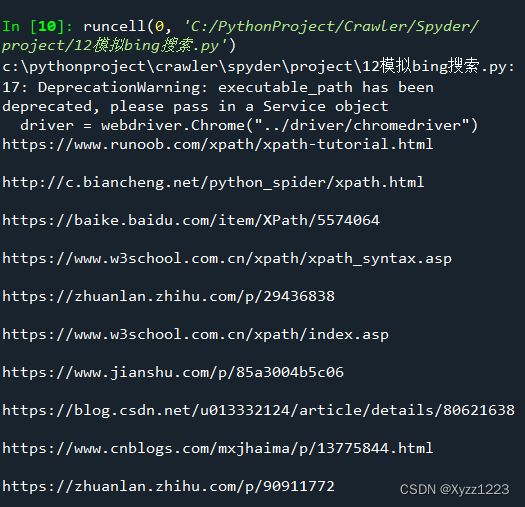

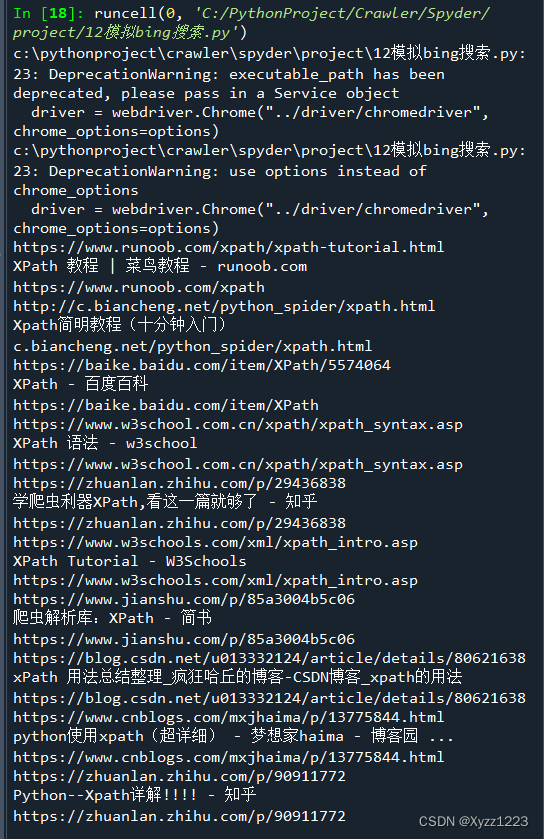

Notes on fixing pages where Selenium (Python) cannot retrieve part of the content when driving a browser

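This can happen when the site detects and blocks crawlers. Adding browser options to the webdriver, such as a language setting and a different User-Agent, may work around it.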

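from selenium import webdriver

from selenium.webdriver.chrome.service import Service

# Create the browser options

options = webdriver.ChromeOptions()

# Set the browser language to Chinese

options.add_argument('--lang=zh-CN')

# Replace the User-Agent header (no inner quotes, so they cannot end up in the header value)

options.add_argument('--user-agent=Mozilla/5.0 (iPod; U; CPU iPhone OS 2_1 like Mac OS X; ja-jp) AppleWebKit/525.18.1 (KHTML, like Gecko) Version/3.1.1 Mobile/5F137 Safari/525.20')

# Selenium 4 takes the driver path via Service and the options via options=

driver = webdriver.Chrome(service=Service("../driver/chromedriver"), options=options)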
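To check that the options actually took effect, it can help to ask the browser which User-Agent it reports. The following is a minimal sketch, not part of the original code: the helper names `build_chrome_arguments`, `reported_user_agent`, and `user_agent_applied` are hypothetical, and the only Selenium call assumed is `driver.execute_script`, which runs JavaScript in the current page.

```python
def build_chrome_arguments(lang, user_agent):
    """Return Chrome command-line switches for the UI language and User-Agent.

    Hypothetical helper: it only formats strings, so it can be checked
    without starting a browser.
    """
    # The value is written without surrounding quotes so that quotes cannot
    # become part of the header value sent to the site.
    return [f"--lang={lang}", f"--user-agent={user_agent}"]


def reported_user_agent(driver):
    """Ask the page's JavaScript engine which User-Agent the browser reports."""
    return driver.execute_script("return navigator.userAgent")


def user_agent_applied(driver, expected_user_agent):
    """Return True if the browser reports exactly the User-Agent we asked for."""
    return reported_user_agent(driver) == expected_user_agent
```

Each string returned by `build_chrome_arguments` would be passed to `options.add_argument(...)` before the driver is created. After `driver.get(url)`, calling `user_agent_applied(driver, ua)` gives a quick sanity check that the site sees the intended header.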

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

# Create the browser options

options = webdriver.ChromeOptions()

# Set the browser language to Chinese

options.add_argument('--lang=zh-CN')

# Replace the User-Agent header (no inner quotes, so they cannot end up in the header value)

options.add_argument('--user-agent=Mozilla/5.0 (iPod; U; CPU iPhone OS 2_1 like Mac OS X; ja-jp) AppleWebKit/525.18.1 (KHTML, like Gecko) Version/3.1.1 Mobile/5F137 Safari/525.20')

# Make get() return immediately instead of waiting for the page to finish loading

options.page_load_strategy = "none"

# Selenium 4 takes the driver path via Service and the options via options=

driver = webdriver.Chrome(service=Service("../driver/chromedriver"), options=options)

driver.implicitly_wait(10)

url = "https://cm.bing.com"

driver.get(url)

keyword = driver.find_element(By.XPATH, "//input[@id='sb_form_q']")

keyword.send_keys("xpath")

keyword.send_keys(Keys.ENTER)

items = driver.find_elements(By.XPATH, "//li[@class='b_algo']")

for item in items:

    a = item.find_element(By.TAG_NAME, "a")

    print(a.get_attribute("href"))

    print(a.text)

driver.quit()
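Because the page load strategy is set to "none", `get()` returns before the page has loaded, and the script relies on `implicitly_wait(10)` to make element lookups retry. The same idea can be made explicit with a small polling helper. This is a sketch under stated assumptions: `wait_for` is a hypothetical name that is not part of Selenium, and it uses only the standard library. Selenium's own `WebDriverWait` in `selenium.webdriver.support.ui` serves a similar purpose.

```python
import time


def wait_for(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds
    have passed without a truthy result.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        # Sleep briefly so the loop does not hammer the browser.
        time.sleep(poll_interval)
```

With the script above, the result list could be collected as `items = wait_for(lambda: driver.find_elements(By.XPATH, "//li[@class='b_algo']"))`. An empty list is falsy, so the helper keeps polling until at least one result appears or the timeout expires.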