【SLAM基础入门】贝叶斯滤波、卡尔曼滤波、粒子滤波笔记(3)

文章目录

-

-

- 第五部分:扩展卡尔曼滤波EKF

- 第六部分:无迹卡尔曼滤波

-

第五部分:扩展卡尔曼滤波EKF

-

扩展卡尔曼滤波推导:对 f ( X k − 1 ) f(X_{k-1}) f(Xk−1)和 h ( X k ) h(X_k) h(Xk)进行线性化。

-

设 X k − 1 ∼ N ( x ^ k − 1 + , P k − 1 + ) X_{k-1}\sim N(\hat x_{k-1}^+,P^+_{k-1}) Xk−1∼N(x^k−1+,Pk−1+),这也是卡尔曼滤波的前提。

-

预测步: X k = f ( X k − 1 ) + Q k X_k = f(X_{k-1})+Q_k Xk=f(Xk−1)+Qk,对 f ( X k − 1 ) f(X_{k-1}) f(Xk−1)在 x ^ k − 1 + \hat x_{k-1}^+ x^k−1+进行一阶泰勒展开,

f ( X k − 1 ) = f ( x ^ k − 1 + ) + f ′ ( x ^ k − 1 + ) ( X k − 1 − x ^ k − 1 + ) ≈ [ f ′ ( x ^ k − 1 + ) ] X k − 1 + [ f ( x ^ k − 1 + ) − x ^ k − 1 + f ′ ( x ^ k − 1 + ) ] = A X k − 1 + B f(X_{k-1})=f(\hat x_{k-1}^+)+f'(\hat x_{k-1}^+)(X_{k-1}-\hat x_{k-1}^+)\approx [f'(\hat x_{k-1}^+)]X_{k-1}+[f(\hat x_{k-1}^+)-\hat x_{k-1}^+f'(\hat x_{k-1}^+)]=AX_{k-1}+B f(Xk−1)=f(x^k−1+)+f′(x^k−1+)(Xk−1−x^k−1+)≈[f′(x^k−1+)]Xk−1+[f(x^k−1+)−x^k−1+f′(x^k−1+)]=AXk−1+B

则预测方程改写为

X k = A X k − 1 + B + Q k , A = f ′ ( x ^ k − 1 + ) , B = f ( x ^ k − 1 + ) − f ′ ( x ^ k − 1 + ) x ^ k − 1 + X_k = AX_{k-1}+B+Q_k,A= f'(\hat x_{k-1}^+),B=f(\hat x_{k-1}^+)-f'(\hat x_{k-1}^+)\hat x_{k-1}^+ Xk=AXk−1+B+Qk,A=f′(x^k−1+),B=f(x^k−1+)−f′(x^k−1+)x^k−1+,即 f ( v ) = A v + B f(v) = Av+B f(v)=Av+B

因此

f k − ( x ) = N ( A x ^ k − 1 + + B , A 2 P k − 1 + + Q ) = N ( f ( x ^ k − 1 + ) , A 2 P k − 1 + + Q ) f^-_k(x)=N(A\hat x_{k-1}^++B,A^2P_{k-1}^++Q)=N(f(\hat x_{k-1}^+),A^2P^+_{k-1}+Q) fk−(x)=N(Ax^k−1++B,A2Pk−1++Q)=N(f(x^k−1+),A2Pk−1++Q),即 X k − ∼ N ( f ( x ^ k − 1 + ) , A 2 P k − 1 + + Q ) X_k^-\sim N(f(\hat x_{k-1}^+),A^2P^+_{k-1}+Q) Xk−∼N(f(x^k−1+),A2Pk−1++Q)

x ^ k − = f ( x ^ k − 1 + ) , P k − = A 2 P k − 1 + + Q \hat x_{k}^-=f(\hat x_{k-1}^+),P_k^-=A^2P^+_{k-1}+Q x^k−=f(x^k−1+),Pk−=A2Pk−1++Q,不用算B

-

更新步: Y k = h ( X k ) + R k Y_k = h(X_k)+R_k Yk=h(Xk)+Rk,对 h ( X k ) h(X_k) h(Xk)在 x ^ k − \hat x_{k}^- x^k−点进行一阶泰勒展开,

h ( X k ) ≈ h ( x ^ k − ) + h ′ ( x ^ k − ) ( X k − x ^ k − ) = C X k + D h(X_k)\approx h(\hat x_{k}^-)+h'(\hat x_{k}^-)(X_k-\hat x_{k}^-)=CX_k+D h(Xk)≈h(x^k−)+h′(x^k−)(Xk−x^k−)=CXk+D,其中 C = h ′ ( x ^ k − ) , D = h ( x ^ k − ) − h ′ ( x ^ k − ) x ^ k − C=h'(\hat x_{k}^-),D=h(\hat x_{k}^-)-h'(\hat x_{k}^-)\hat x_{k}^- C=h′(x^k−),D=h(x^k−)−h′(x^k−)x^k−

因此

f k + = N ( R x ^ k − + C P k − ( y k − D ) R + C 2 P k − , ( 1 − C 2 P k − R + C 2 P k − ) P k − ) f_k^+ = N(\frac{R\hat x_{k}^-+CP_k^-(y_k-D)}{R+C^2P_k^-},(1-\frac{C^2P_k^-}{R+C^2P_k^-})P_k^-) fk+=N(R+C2Pk−Rx^k−+CPk−(yk−D),(1−R+C2Pk−C2Pk−)Pk−)

定义卡尔曼增益 K = C P k − R + C 2 P k − K = \frac{CP_k^-}{R+C^2P_k^-} K=R+C2Pk−CPk−,则 X k + ∼ N ( x ^ k − + K [ y k − h ( x ^ k − ) ] , ( 1 − K C ) P k − ) X_k^+\sim N(\hat x_{k}^- +K[y_k-h(\hat x_{k}^-)],(1-KC)P_k^-) Xk+∼N(x^k−+K[yk−h(x^k−)],(1−KC)Pk−)

x ^ k + = x ^ k − + K [ y k − h ( x ^ k − ) ) ] \hat x_{k}^+ = \hat x_{k}^-+K[y_k-h(\hat x_{k}^-))] x^k+=x^k−+K[yk−h(x^k−))], P k + = ( 1 − K C ) P k − P_k^+ = (1-KC)P_k^- Pk+=(1−KC)Pk−,不用算D

-

-

扩展卡尔曼滤波算法流程

- 设 X k − 1 ∼ N ( x ^ k − 1 + , P k − 1 + ) X_{k-1}\sim N(\hat x_{k-1}^+,P^+_{k-1}) Xk−1∼N(x^k−1+,Pk−1+)

- 预测步

- A = f ′ ( x ^ k − 1 + ) A = f'(\hat x_{k-1}^+) A=f′(x^k−1+)

- x ^ k − = f ( x ^ k − 1 + ) \hat x_k^-=f(\hat x_{k-1}^+) x^k−=f(x^k−1+)

- P k − = A 2 P k − 1 + + Q P_k^-=A^2P_{k-1}^++Q Pk−=A2Pk−1++Q

- 更新步

- C = h ′ ( x ^ k − ) C= h'(\hat x_k^-) C=h′(x^k−)

- K = C P k − C 2 P k − + R K = \frac{CP_k^-}{C^2P_k^-+R} K=C2Pk−+RCPk−

- x ^ k + = x ^ k − + K [ y k − h ( x ^ k − ) ] \hat x_k^+ = \hat x_k^-+K[y_k-h(\hat x_k^-)] x^k+=x^k−+K[yk−h(x^k−)]

- P k + = ( 1 − K C ) P k − P_k^+ = (1-KC)P_k^- Pk+=(1−KC)Pk−

矩阵形式: { X ⃗ k = f ( X ⃗ k − 1 ) + Q ⃗ k 预 测 Y ⃗ k = h ( X ⃗ k ) + R ⃗ k 观 测 \left\{\begin{array}{l} \begin{aligned} \vec X_k &= f(\vec X_{k-1})+\vec Q_k 预测\\ \vec Y_k &= h(\vec X_k)+\vec R_{k}观测 \end{aligned} \end{array}\right. {XkYk=f(Xk−1)+Qk预测=h(Xk)+Rk观测

-

设 X ⃗ k − 1 ∼ N ( x ⃗ ^ k − 1 + , Σ k − 1 + ) \vec X_{k-1}\sim N(\hat{\vec x}_{k-1}^+ ,\Sigma^+_{k-1}) Xk−1∼N(x^k−1+,Σk−1+)

-

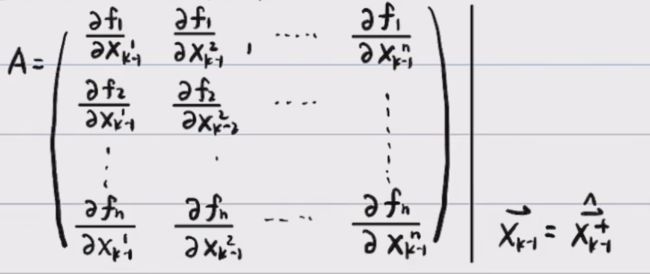

预测步:

- A A A是f对向量 x ⃗ ^ k − 1 + \hat{\vec x}_{k-1}^+ x^k−1+的雅可比矩阵

- x ⃗ ^ k − 1 − = f ( x ⃗ ^ k − 1 + ) \hat{\vec x}_{k-1}^- = f(\hat{\vec x}_{k-1}^+) x^k−1−=f(x^k−1+)

- Σ k − = A Σ k − 1 + A T + Q \Sigma_k^- = A\Sigma_{k-1}^+ A^T+Q Σk−=AΣk−1+AT+Q, Q Q Q为 Q ⃗ k \vec Q_k Qk的协方差矩阵

-

观测步:

- C是h对向量 x ⃗ ^ k − 1 − \hat{\vec x}_{k-1}^- x^k−1−的雅可比矩阵,注意可能不是方阵,因为观测维数可能少于状态。

-

K = Σ k − C T ( C Σ k − C T + R ) − 1 K = \Sigma_k^- C^T(C\Sigma_k^-C^T+R)^{-1} K=Σk−CT(CΣk−CT+R)−1

-

观测到一组数据 Y ⃗ \vec{Y} Y, x ⃗ ^ k + = x ⃗ ^ k − + K [ Y ⃗ − h ( x ⃗ ^ k − ) ] \hat{\vec x}_{k}^+ = \hat{\vec x}_{k}^- + K[\vec Y -h(\hat{\vec x}_{k}^-)] x^k+=x^k−+K[Y−h(x^k−)]

-

Σ k + = ( I − K C ) Σ k − \Sigma _k^+=(I-KC)\Sigma _k^- Σk+=(I−KC)Σk−

第六部分:无迹卡尔曼滤波

-

无迹卡尔曼滤波:处理非线性问题

思路:设 X ∼ N ( 0 , 1 ) X\sim N(0,1) X∼N(0,1), Y = f ( X ) Y = f(X) Y=f(X)。

- EKF:对函数线性化近似, f ( X ) = f ( 0 ) + f ′ ( 0 ) ( X − 0 ) f(X) = f(0)+f'(0)(X-0) f(X)=f(0)+f′(0)(X−0)

- UKF:对PDF近似 Y = f ( X ) ⇒ E ( Y ) = E ( f ( x ) ) , E ( Y 2 ) = E ( [ f ( x ) ] 2 ) Y = f(X)\Rightarrow E(Y) = E(f(x)),E(Y^2) = E([f(x)]^2) Y=f(X)⇒E(Y)=E(f(x)),E(Y2)=E([f(x)]2)。若能通过某种方式直接求出Y的期望和方差,然后用一个期望和方差与之相同的正态分布去近似它,则能将非线性问题转化为普通卡尔曼滤波问题。

期望与方差的求法:设 X ∼ N ( 0 , 1 ) , Y = g ( X ) , X\sim N(0,1),Y = g(X), X∼N(0,1),Y=g(X), 求 E ( Y ) , D ( Y ) E(Y),D(Y) E(Y),D(Y)

-

法1 精确解:不好算

E ( Y ) = E [ g ( X ) ] = ∫ − ∞ ∞ g ( x ) 1 2 π e x p ( − x 2 / 2 ) d x E ( Y 2 ) = ∫ − ∞ ∞ [ g ( x ) ] 2 1 2 π e x p ( − x 2 / 2 ) d x D ( Y ) = E ( Y 2 ) − [ E ( Y ) ] 2 E(Y) = E[g(X)]=\int_{-\infin}^\infin g(x)\frac{1}{\sqrt{2\pi}}exp(-x^2/2)dx\\ E(Y^2)=\int _{-\infin}^\infin [g(x)]^2 \frac{1}{\sqrt{2\pi}}exp(-x^2/2)dx\\ D(Y) = E(Y^2)-[E(Y)]^2 E(Y)=E[g(X)]=∫−∞∞g(x)2π1exp(−x2/2)dxE(Y2)=∫−∞∞[g(x)]22π1exp(−x2/2)dxD(Y)=E(Y2)−[E(Y)]2 -

法2 无迹变换 unscented transform, UT:正态分布特征 μ , σ 2 \mu,\sigma^2 μ,σ2,对称性,因此取3个带权重粒子近似代替 N ( 0 , 1 ) N(0,1) N(0,1),求取Y的期望和方差进而近似出Y的正态分布。

x 1 = E ( X ) , x 2 = E ( X ) − ( 1 + λ ) D ( X ) , x 3 = E ( X ) + ( 1 + λ ) D ( X ) x_1 = E(X),x_2 = E(X)-\sqrt{(1+\lambda)D(X)},x_3= E(X)+\sqrt{(1+\lambda)D(X)} x1=E(X),x2=E(X)−(1+λ)D(X),x3=E(X)+(1+λ)D(X)

w 1 = λ 1 + λ , w 2 = 1 2 ( 1 + λ ) , w 3 = 1 2 ( 1 + λ ) w_1 =\frac{\lambda}{1+\lambda},w_2= \frac{1}{2(1+\lambda)},w_3 = \frac{1}{2(1+\lambda)} w1=1+λλ,w2=2(1+λ)1,w3=2(1+λ)1, λ \lambda λ为大于零的参数任取。

例:设 X ∼ N ( 0 , 1 ) X\sim N(0,1) X∼N(0,1),取 x 1 = 0 , x 2 = 1 + λ , x 3 = − 1 + λ x_1= 0,x_2=\sqrt{1+\lambda},x_3 = -\sqrt{1+\lambda} x1=0,x2=1+λ,x3=−1+λ,$\lambda $越大,粒子散得越开。

则 ∑ i = 1 3 w i x i = 0 , ∑ i = 1 3 w i ( x i − 0 ) 2 = 1 \sum \limits_{i=1}^3 w_ix_i=0,\sum \limits_{i=1}^3 w_i(x_i-0)^2=1 i=1∑3wixi=0,i=1∑3wi(xi−0)2=1

Y = f ( X ) ⇒ y i = f ( x i ) , y 1 = f ( x 1 ) = f ( 0 ) , y 2 = f ( x 2 ) = f ( 1 + λ ) , y 3 = f ( x 3 ) = f ( − 1 + λ ) Y=f(X)\Rightarrow y_i=f(x_i),y_1=f(x_1)=f(0),y_2=f(x_2)=f(\sqrt{1+\lambda}),y_3=f(x_3)=f(-\sqrt{1+\lambda}) Y=f(X)⇒yi=f(xi),y1=f(x1)=f(0),y2=f(x2)=f(1+λ),y3=f(x3)=f(−1+λ)

E ( Y ) = ∑ i w i y i E(Y)=\sum \limits_i w_iy_i E(Y)=i∑wiyi, D ( Y ) = ∑ i w i ( y i − E ( Y ) ) 2 D(Y) = \sum \limits_i w_i(y_i-E(Y))^2 D(Y)=i∑wi(yi−E(Y))2

精度问题:EKF具有一阶精度,UKF具有二阶精度

- 设 X ∼ N ( 0 , 1 ) , Y = X 2 X\sim N(0,1),Y=X^2 X∼N(0,1),Y=X2,则 Y ∼ χ ( 1 ) Y\sim \chi(1) Y∼χ(1)自由度为1的卡方分布 E ( Y ) = 1 , D ( Y ) = 2 E(Y)=1,D(Y)=2 E(Y)=1,D(Y)=2

- EKF:若 Y = f ( X ) Y=f(X) Y=f(X)是二阶以上函数,EKF不能还原 E ( Y ) , D ( Y ) E(Y),D(Y) E(Y),D(Y)

- UKF:若 f ( X ) = a X 2 + b X + C f(X) = aX^2+bX+C f(X)=aX2+bX+C,则UT可以还原 E ( Y ) E(Y) E(Y),但不能还原 D ( Y ) D(Y) D(Y),即 X → x 1 , x 2 , x 3 → y 1 , y 2 , y 3 → E ( Y ) = ∑ w i y i X\to x_1,x_2,x_3 \to y_1,y_2,y_3 \to E(Y)=\sum w_iy_i X→x1,x2,x3→y1,y2,y3→E(Y)=∑wiyi,若 f ( x ) f(x) f(x)高于二阶,则 E ( Y ) , D ( Y ) E(Y),D(Y) E(Y),D(Y)均不能还原。

无迹卡尔曼滤波思想:对核心方程 { X k = f ( X k − 1 ) + Q k 预 测 Y k = h ( X k ) + R k 观 测 \left\{\begin{array}{l} \begin{aligned} X_k &= f(X_{k-1})+Q_k 预测\\ Y_k &= h(X_k)+R_{k}观测 \end{aligned} \end{array}\right. {XkYk=f(Xk−1)+Qk预测=h(Xk)+Rk观测

- 预测方程: X k − 1 X_{k-1} Xk−1是正态分布, f ( X k − 1 ) f(X_{k-1}) f(Xk−1)不一定是正态分布 → 无 迹 变 换 U T f ( X k − 1 ) \mathop{\to} \limits^{无迹变换UT} f(X_{k-1}) →无迹变换UTf(Xk−1)近似为正态。又因为 Q k Q_k Qk是正态分布,则 X k X_k Xk也是正态分布。

- 观测方程: X k X_k Xk是正态分布, h ( X k ) h(X_k) h(Xk)不一定是正态分布 → 无 迹 变 换 U T h ( X k ) \mathop{\to} \limits^{无迹变换UT} h(X_{k}) →无迹变换UTh(Xk)也近似为正态分布。

- 所有随机变量都是正态分布,即可以用kalman Filter处理。

-

无迹卡尔曼滤波算法

-

设 X k − 1 ∼ N ( μ k − 1 + , σ k − 1 2 + ) X_{k-1}\sim N(\mu _{k-1}^+,\sigma_{k-1}^{2+}) Xk−1∼N(μk−1+,σk−12+)

-

将 f ( X k − 1 ) f(X_{k-1}) f(Xk−1)正态化:

x 1 = μ k − 1 + , x 2 = μ k − 1 + + ( 1 + λ ) σ k − 1 2 + , x 3 = μ k − 1 + − ( 1 + λ ) σ k − 1 2 + w 1 = 1 1 + λ , w 2 = w 3 = 1 2 ( 1 + λ ) x k − ( i ) = f ( x k − 1 + ( i ) ) , x ^ k − ( i ) = ∑ i = 1 3 w i f ( x k + ( i ) ) , P k − = ∑ i = 1 3 w i ( x k − ( i ) − x ^ k − ( i ) ) 2 认 为 f ( X k − 1 ) ∼ N ( x ^ k − , P k − ) x_1=\mu_{k-1}^+,\ x_2 = \mu_{k-1}^++\sqrt{(1+\lambda)\sigma_{k-1}^{2+}},\ x_3 = \mu_{k-1}^+-\sqrt{(1+\lambda)\sigma_{k-1}^{2+}} \\ w_1=\frac{1}{1+\lambda},w_2=w_3=\frac{1}{2(1+\lambda)}\\ x_k^{-(i)}=f(x_{k-1}^{+(i)}),\ \hat x_k^{-(i)}=\sum \limits_{i=1}^3w_if(x_k^{+(i)}),\ P_k^-=\sum \limits_{i=1}^3w_i(x_k^{-(i)}-\hat x_k^{-(i)})^2\\ 认为 f(X_{k-1})\sim N(\hat x_k^{-},P_k^-) x1=μk−1+, x2=μk−1++(1+λ)σk−12+, x3=μk−1+−(1+λ)σk−12+w1=1+λ1,w2=w3=2(1+λ)1xk−(i)=f(xk−1+(i)), x^k−(i)=i=1∑3wif(xk+(i)), Pk−=i=1∑3wi(xk−(i)−x^k−(i))2认为f(Xk−1)∼N(x^k−,Pk−) -

预测步: X k = f ( X k − 1 ) + Q k X_k=f(X_{k-1})+Q_k Xk=f(Xk−1)+Qk, f ( X k − 1 ) ∼ N ( x ^ k − , P k − ) f(X_{k-1})\sim N(\hat x_k^{-},P_k^-) f(Xk−1)∼N(x^k−,Pk−), Q k ∼ N ( 0 , Q ) Q_k\sim N(0,Q) Qk∼N(0,Q), ⇒ X k ∼ N ( x ^ k − , P k − + Q ) \Rightarrow X_k\sim N(\hat x_k^{-},P_k^-+Q) ⇒Xk∼N(x^k−,Pk−+Q)

-

将 h ( X k ) h(X_k) h(Xk)正态化: ∼ N ( x ^ k − , P k − + Q ) \sim N(\hat x_k^{-},P_k^-+Q) ∼N(x^k−,Pk−+Q)

x k ( 1 ) = x ^ k − , x 2 = x ^ k − + ( 1 + λ ) ( P k − + Q ) , x 3 = x ^ k − − ( 1 + λ ) ( P k − + Q ) w 1 = 1 1 + λ , w 2 = w 3 = 1 2 ( 1 + λ ) y k ( i ) = h ( x k ( i ) ) , y ^ k = ∑ i = 1 3 w i y k ( i ) , P y − = ∑ i = 1 3 w i ( y k ( i ) − y ^ k ) 2 认 为 h ( X k ) ∼ N ( y ^ k , P y ) x_k^{(1)}=\hat x_{k}^-,\ x_2 =\hat x_{k}^-+\sqrt{(1+\lambda)(P_k^-+Q)},\ x_3 = \hat x_{k}^--\sqrt{(1+\lambda)(P_k^-+Q)} \\ w_1=\frac{1}{1+\lambda},w_2=w_3=\frac{1}{2(1+\lambda)}\\ y_k^{(i)}=h(x_{k}^{(i)}),\hat y_k=\sum \limits_{i=1}^3w_i y_k^{(i)},\ P_y^-=\sum \limits_{i=1}^3w_i(y_k^{(i)}-\hat y_k)^2\\ 认为 h(X_{k})\sim N(\hat y_k,P_y) xk(1)=x^k−, x2=x^k−+(1+λ)(Pk−+Q), x3=x^k−−(1+λ)(Pk−+Q)w1=1+λ1,w2=w3=2(1+λ)1yk(i)=h(xk(i)),y^k=i=1∑3wiyk(i), Py−=i=1∑3wi(yk(i)−y^k)2认为h(Xk)∼N(y^k,Py)

-

更新步:

P x y = ∑ i w i ( x k ( i ) − x ^ k − ) ( y k ( i ) − y ^ ) , K = P x y ( P y + R ) − 1 观 测 到 一 个 数 据 y m 后 x ^ k + = x ^ k − + K ( y m − y ^ ) P k + = P k − + Q − K 2 ( P y + R ) P_{xy}=\sum \limits_i w_i(x_k^{(i)}-\hat x^-_k)(y_k^{(i)}-\hat y),\ K = P_{xy}(P_y+R)^{-1}\\观测到一个数据y_m后 \\ \hat x_k^+ = \hat x_k^- +K(y_m-\hat y)\\ P_k^+ =P_k^-+Q-K^2(P_y+R) Pxy=i∑wi(xk(i)−x^k−)(yk(i)−y^), K=Pxy(Py+R)−1观测到一个数据ym后x^k+=x^k−+K(ym−y^)Pk+=Pk−+Q−K2(Py+R)

-

-

矩阵形式无迹卡尔曼滤波算法

-

X ⃗ k − 1 ∼ N ( μ ⃗ k − 1 , Σ k − 1 + ) \vec X_{k-1}\sim N(\vec \mu_{k-1},\Sigma_{k-1}^+) Xk−1∼N(μk−1,Σk−1+)

-

f ( X k − 1 ) f(X_{k-1}) f(Xk−1)正态化

-

x ⃗ k − 1 ( 1 ) = μ ⃗ k − 1 \vec x_{k-1}^{(1)} = \vec \mu_{k-1} xk−1(1)=μk−1

-

矩阵的 L L T LL^T LLT分解, Σ k − 1 + = L L T \Sigma_{k-1}^+ =LL^T Σk−1+=LLT,因此协方差矩阵 Σ k − 1 + \Sigma_{k-1}^+ Σk−1+必须正定,否则会崩溃。

-

for i=1:n

x ⃗ k − 1 ( i + 1 ) = μ ⃗ k − 1 + + ( n + λ ) L ( i ) \vec x_{k-1}^{(i+1)}=\vec \mu_{k-1}^+ +\sqrt{(n+\lambda)}L_{(i)} xk−1(i+1)=μk−1++(n+λ)L(i), L ( i ) L_{(i)} L(i) 表示L的第i列

x ⃗ k − 1 ( i + 1 + n ) = μ ⃗ k − 1 + − ( n + λ ) L ( i ) \vec x_{k-1}^{(i+1+n)}=\vec \mu_{k-1}^+ -\sqrt{(n+\lambda)}L_{(i)} xk−1(i+1+n)=μk−1+−(n+λ)L(i)

end

w k ( 1 ) = λ n + λ , w k ( 2......2 n + 1 ) = 1 2 ( n + λ ) w_k^{(1)}=\frac{\lambda}{n+\lambda},w_k^{(2......2n+1)}=\frac{1}{2(n+\lambda)} wk(1)=n+λλ,wk(2......2n+1)=2(n+λ)1

-

x ⃗ k − ( i ) = f ( x ⃗ k − 1 ( i ) ) \vec x_k^{-(i)}=f(\vec x_{k-1}^{(i)}) xk−(i)=f(xk−1(i)),不需要加随机数

-

x ^ ⃗ k − = ∑ i = 1 2 n + 1 w i x ⃗ k − ( i ) \vec {\hat x} _k^- = \sum \limits_{i=1}^{2n+1} w_i \vec x_k^{-(i)} x^k−=i=1∑2n+1wixk−(i)

-

P k − = ∑ i = 1 2 n + 1 w i ( x ⃗ k − ( i ) − x ^ ⃗ k − ) ( x ⃗ k − ( i ) − x ^ ⃗ k − ) T + Q P_k^-=\sum \limits_{i=1}^{2n+1} w_i(\vec x_k^{-(i)}-\vec {\hat x}_k^-)(\vec x_k^{-(i)}-\vec {\hat x}_k^-)^T+Q Pk−=i=1∑2n+1wi(xk−(i)−x^k−)(xk−(i)−x^k−)T+Q

-

-

预测步

- 得到 X k − X_k^- Xk−的近似pdf, ∼ N ( x ^ ⃗ k − , P k − ) \sim N(\vec {\hat x}_k^{-},P_k^-) ∼N(x^k−,Pk−)

-

h ( X k ) h(X_k) h(Xk)正态化

-

x ⃗ k ( 1 ) = x ^ ⃗ k − \vec x_k^{(1)} = \vec {\hat x}_k^{-} xk(1)=x^k−

-

P k − = L 1 L 1 T P_k^- = L_1L_1^T Pk−=L1L1T

-

for i = 1:n

x ⃗ k ( i + 1 ) = x ⃗ k − + n + λ L 1 ( i ) \vec x_k^{(i+1)}=\vec x_k^- + \sqrt{n+\lambda} L_{1(i)} xk(i+1)=xk−+n+λL1(i)

x ⃗ k ( i + 1 + n ) = x ⃗ k − − n + λ L 1 ( i ) \vec x_k^{(i+1+n)}=\vec x_k^- - \sqrt{n+\lambda} L_{1(i)} xk(i+1+n)=xk−−n+λL1(i)

end

-

y ⃗ k ( i ) = h ( x ⃗ k ( i ) ) \vec y_k^{(i)} = h(\vec x_k^{(i)}) yk(i)=h(xk(i))

-

y ⃗ ^ k = ∑ i = 1 2 n = 1 w i y k ( i ) \hat {\vec y}_k = \sum \limits_{i=1}^{2n=1} w_iy_k^{(i)} y^k=i=1∑2n=1wiyk(i)

-

P y = ∑ i = 1 2 n + 1 w i ( y ⃗ k ( i ) − y ⃗ ^ k ) ( y ⃗ k ( i ) − y ⃗ ^ k ) T + R P_y =\sum \limits_{i=1}^{2n+1} w_i(\vec y_k^{(i)}-\hat{\vec y}_k)(\vec y_k^{(i)}-\hat{\vec y}_k)^T+R Py=i=1∑2n+1wi(yk(i)−y^k)(yk(i)−y^k)T+R

-

P x y = ∑ i = 1 2 n + 1 w i ( x ⃗ k ( i ) − x ⃗ ^ k − ) ( x ⃗ k ( i ) − x ⃗ ^ k − ) T P_{xy} = \sum \limits_{i=1}^{2n+1} w_i(\vec x_k^{(i)}-\hat {\vec x}_k^-)(\vec x_k^{(i)}-\hat {\vec x}_k^-)^T Pxy=i=1∑2n+1wi(xk(i)−x^k−)(xk(i)−x^k−)T

-

K = P x y ( P y ) − 1 K = P_{xy}(P_y)^{-1} K=Pxy(Py)−1

-

-

更新步

-

观测到一组数据 y ⃗ m \vec y_m ym

-

x ⃗ ^ k − = x ⃗ ^ k + + K ( y ⃗ m − y ⃗ ^ k ) \hat {\vec x}_k^- = \hat {\vec x}_k^+ + K(\vec y_m -\hat {\vec y}_k) x^k−=x^k++K(ym−y^k)

-

P k + = P k − − K P y K T P_k^+ = P_k^- - KP_yK^T Pk+=Pk−−KPyKT

-

-