基于Faster R-CNN-X射线图像缺陷检测使用MindStudio进行MindX SDK应用开发

bilibili视频链接:使用MindStudio开发基于MindX SDK的X射线图像缺陷检测应用

文章目录

-

-

- bilibili视频链接:[使用MindStudio开发基于MindX SDK的X射线图像缺陷检测应用](https://www.bilibili.com/video/BV12R4y1C7Z6/)

- 一、MindStudio

-

- 1、MindStudio介绍

- 二、MindX SDK

-

- 1、MindX SDK介绍

- 三、可视化流程编排介绍

-

- 1、SDK基础概念

- 2、可视化流程编排

- 四、项目开发(Python)

-

- 1、MindStudio安装

- 2、新建一个项目

- 3、MindX SDK安装

- 4、工程目录结构介绍

- 5、文件同步(可选)

- 6、Faster R-CNN模型转换

- 7、编写后处理插件并编译

-

- 1、头文件FasterRcnnMindsporePost.h

- 2、源文件FasterRcnnMindsporePost.cpp

- 3、CMakeLists.txt编译脚本

- 4、配置编译环境

- 5、执行编译

- 8、pipeline文件编排

- 9、本地编写python文件

-

- 1、main.py

- 2、infer.py

- 3、postprocess.py

- 10、代码运行

- 五、常见问题

-

- 1、CANN 连接错误

- 2、后处理插件权限问题

-

一、MindStudio

1、MindStudio介绍

MindStudio简介:MindStudio 提供您在 AI 开发所需的一站式开发环境,支持模型开发、算子开发以及应用开发三个主流程中的开发任务。依靠模型可视化、算力测试、IDE 本地仿真调试等功能,MindStudio 能够帮助您在一个工具上就能高效便捷地完 成 AI 应用开发。MindStudio 采用了插件化扩展机制,开发者可以通过开发插件来扩展已有功能。

功能简介

- 针对安装与部署,MindStudio 提供多种部署方式,支持多种主流操作系统, 为开发者提供最大便利。

- 针对网络模型的开发,MindStudio 支持 TensorFlow、PyTorch、MindSpore 框 架的模型训练,支持多种主流框架的模型转换。集成了训练可视化、脚本转换、模型转换、精度比对等工具,提升了网络模型移植、分析和优化的效率。

- 针对算子开发,MindStudio 提供包含 UT 测试、ST 测试、TIK 算子调试等的全套算子开发流程。支持 TensorFlow、PyTorch、MindSpore 等多种主流框架 的 TBE 和 AI CPU 自定义算子开发。

- 针对应用开发,MindStudio 集成了 Profiling 性能调优、编译器、MindX SDK 的应用开发、可视化 pipeline 业务流编排等工具,为开发者提供了图形化 的集成开发环境,通过 MindStudio 能够进行工程管理、编译、调试、性能分析等全流程开发,能够很大程度提高开发效率。

功能框架

MindStudio功能框架如下图所示,目前含有的工具链包括:模型转换工具、模型训练工具、自定义算子开发工具、应用开发工具、工程管理工具、编译工具、流程编排工具、精度比对工具、日志管理工具、性能分析工具、设备管理工具等多种工具。

场景介绍

- 开发场景:在非昇腾AI设备(如windosw平台)上安装MindStudio和Ascend-cann-toolkit开发套件包。在该开发场景下,我们仅用于代码开发、编译等不依赖昇腾设备的活动,如果要运行应用程序或者模型训练等,需要通过MindStudio远程连接(SSH)已经部署好运行环境所需要软件包(CANN、MindX SDK等)的昇腾AI设备。

- 开发运行场景:在昇腾AI设备(昇腾AI服务器)上安装MindStudio、Ascend-cann-toolkit开发套件包等安装包和AI框架(进行模型训练时需要安装)。在该开发环境下,开发人员可以进行代码编写、编译、程序运行、模型训练等操作。

软件包介绍

- MindStudio:提供图形化开发界面,支持应用开发、调试和模型转换功能, 同时还支持网络移植、优化和分析等功能,可以安装在linux、windows平台。

- Ascend-cann-toolkit:开发套件包。为开发者提供基于昇腾 AI 处理器的相关算法开发工具包,旨在帮助开发者进行快速、高效的模型、算子和应用的开发。**开发套件包只能安装在 Linux 服务器上,**开发者可以在安装开发套件包后,使用 MindStudio 开发工具进行快速开发。

注:由于Ascend-cann-toolkit只能安装在linux服务器上,所以在Windows场景下代码开发时,需先安装MindStudio软件,再远程连接同步Linux服务器的CANN和MindX SDK到本地。

二、MindX SDK

1、MindX SDK介绍

MindX SDK 提供昇腾 AI 处理器加速的各类 AI 软件开发套件(SDK),提供极简易用的 API,加速 AI 应用的开发。

应用开发旨在使用华为提供的 SDK 和应用案例快速开发并部署人工智能应用,是基于现有模型、使用pyACL 提供的 Python 语言 API 库开发深度神经网络 应用,用于实现目标识别、图像分类等功能。

mxManufacture & mxVision 关键特性:

- 配置文件快速构建 AI 推理业务。

- 插件化开发模式,将整个推理流程“插件化”,每个插件提供一种功能,通过组装不同的插件,灵活适配推理业务流程。

- 提供丰富的插件库,用户可根据业务需求组合 Jpeg 解码、抠图、缩放、模型推理、数据序列化等插件。

- 基于 Ascend Computing Language(ACL),提供常用功能的高级 API,如模型推理、解码、预处理等,简化 Ascend 芯片应用开发。

- 支持自定义插件开发,用户可快速地将自己的业务逻辑封装成插件,打造自己的应用插件。

三、可视化流程编排介绍

1、SDK基础概念

通过 stream(业务流)配置文件,Stream manager(业务流管理模块)可识别需要构建的 element(功能元件)以及 element 之间的连接关系,并启动业务流程。Stream manager 对外提供接口,用于向 stream 发送数据和获取结果,帮助用户实现业务对接。

Plugin(功能插件)表示业务流程中的基础模块,通过 element 的串接构建成一个 stream。Buffer(插件缓存)用于内部挂载解码前后的视频、图像数据, 是 element 之间传递的数据结构,同时也允许用户挂载 Metadata(插件元数据), 用于存放结构化数据(如目标检测结果)或过程数据(如缩放后的图像)

2、可视化流程编排

MindX SDK 实现功能的最小粒度是插件,每一个插件实现特定的功能,如图片解码、图片缩放等。流程编排是将这些插件按照合理的顺序编排,实现负责的功能。可视化流程编排是以可视化的方式,开发数据流图,生成 pipeline 文件供应用框架使用。

下图为推理业务流 Stream 配置文件 pipeline 样例。配置文件以 json 格式编写,用户必须指定业务流名称、元件名称和插件名称,并根据需要,补充元件属性和下游元件名称信息。

四、项目开发(Python)

本项目主要介绍在Windows场景下使用MindStudio软件,连接远程服务器配置的MindX SDK、CANN环境,采用Faster R-CNN模型对GDxray焊接缺陷数据集进行焊接缺陷检测的应用开发。

项目参考模型地址:Faster R-CNN

项目代码地址:contrib/Faster_R-CNN · Ascend/mindxsdk-referenceapps

GDXray是一个公开X射线数据集,其中包括一个关于X射线焊接图像(Welds)的数据,该数据由德国柏林的BAM联邦材料研究和测试研究所收集。Welds集中W0003 包括了68张焊接公司的X射线图像。本项目基于W0003数据集并在焊接专家的帮助下将焊缝和其内部缺陷标注。

数据集下载地址:http://dmery.sitios.ing.uc.cl/images/GDXray/Welds.zip

1、MindStudio安装

点击超链接下载MindStudio安装包

MindStudio安装包下载

点击超链接,进入MindStudio用户手册,在安装指南下安装操作中可以看见MindStudio具体的安装操作。

MindStudio用户手册

2、新建一个项目

点击Ascend App,新建一个项目,在D:\Codes\python\Ascend\MyApp位置下创建自己的项目。

点击 Change 安装CANN,进入 Remote CANN Setting 界面,如下图所示,远程连接需要配置SSH连接,点击**“+”**,进入SSH连接界面。

| 参数 | 解释 |

|---|---|

| Remote Connection | 远程服务器 IP |

| Remote CANN location | 远程服务器中 CANN 路径 |

下图为SSH连接界面中,ssh远程连接需配置远程终端账号,点击**“+”**后,进入SSH连接配置界面。

下图为SSH配置界面,配置好后点击Test Connection,出现 ”Sucessfully connected!“即配置成功。

返回到 Remote CANN Setting 界面,输入远程CANN路径完成 CANN 安装,点击 OK。

接着,选择MindX SDK Project(Python),如下图所示,被圈出来的4个项目,上面两个是空模板,在这里面创建我们自己的工程项目,因为我们要创建Python版的应用,所以被单独框出来的这个;下面两个是官方给出的样例项目,如果对目录结构和应该写哪些代码不太熟悉的话,也可以创建一个样例项目先学习一下。

选择完成后,点击Finish完成项目的创建进入项目,项目创建完成后,可根据自己需要新建文件、文件夹。

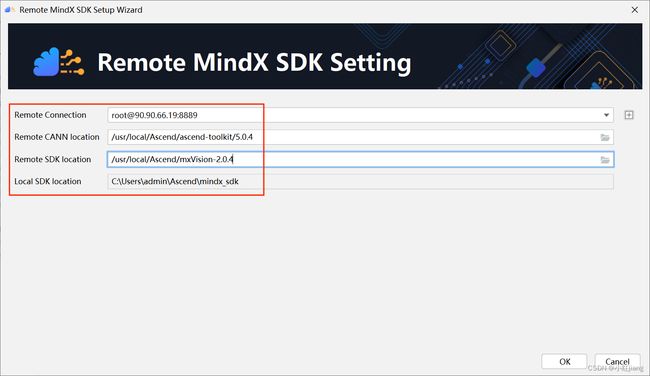

3、MindX SDK安装

步骤 1 : Windows 场景下基于 MindStuido 的 SDK 应用开发(本地远程连接服务器端MindX SDK),请先确保远端环境上 MindX SDK 软件包已安装完成。(远程安装MindX SDK开发套件)

步骤 2 :在 Windows 本地进入工程创建页面,工具栏点击 File > Settings > Appearance & Behavior> System Settings > MindX SDK 进入 MindX SDK 管理界面(只有安装CANN后才会出现MindX SDK按钮)。界面中 MindX SDK Location 为软件包的默认安装路径,默认安装路径为 “C:\Users\用户\Ascend\mindx_sdk”。单击 Install SDK 进入Installation settings 界面。

如图所示,为 MindX SDK 的安装界面,各参数选择如下:

- Remote Connection:远程连接的用户及 IP。

- Remote CANN location:远端环境上 CANN 开发套件包的路径,请配置到版 本号一级。

- Remote SDK location:远端环境上 SDK 的路径,请配置到版本号一级。IDE 将同步该层级下的include、opensource、python、samples 文件夹到本地 Windows 环境,层级选择错误将导致安装失败。

- Local SDK location:同步远端环境上 SDK 文件夹到本地的路径。默认安装路径为“C:\Users\用户名\Ascend\mindx_sdk”。

步骤 3 :单击 OK 结束,返回 SDK 管理界面如下图,可查看安装后的 SDK 的信息,可单击 OK结束安装流程。

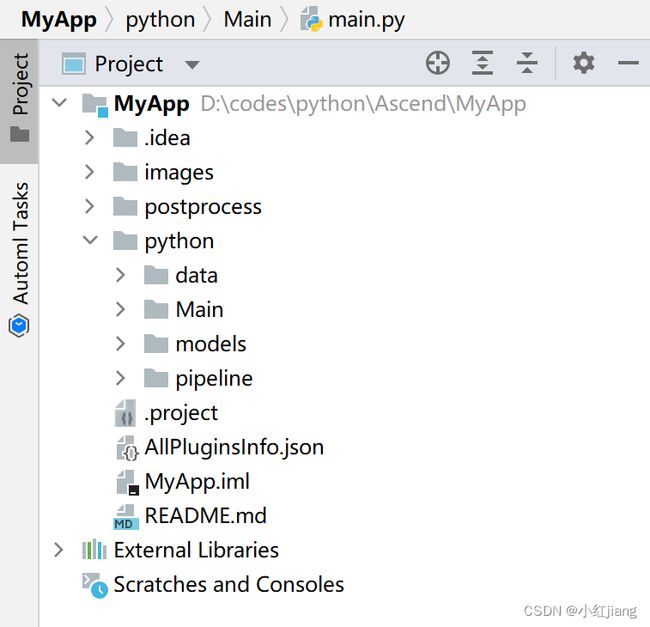

4、工程目录结构介绍

在实际开发中,需要在./postprocess下编写后处理插件,在./python/Main下编写需要运行的python文件,在./python/models下放置模型相关配置文件,在./python/pipeline下编写工作流文件,本项目工程开发结束后的目录如下图所示。

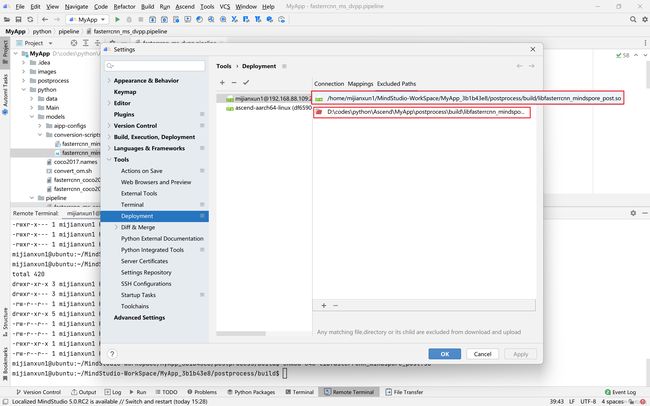

5、文件同步(可选)

本地文件与远程文件同步一般自行决定是否需要该功能,若不进行文件同步,在后续编译文件或者运行应用时,MindStudio也会自行将项目文件同步到远端用户目录下MindStudio-WorkSpace文件夹中。

在顶部菜单栏中选择 Tools > Deployment > Configuration ,如图:

点击已连接的远程环境后,点击Mappings可添加需要同步的文件夹,点击Excluded Paths可添加同步的文件下不需要同步的文件。

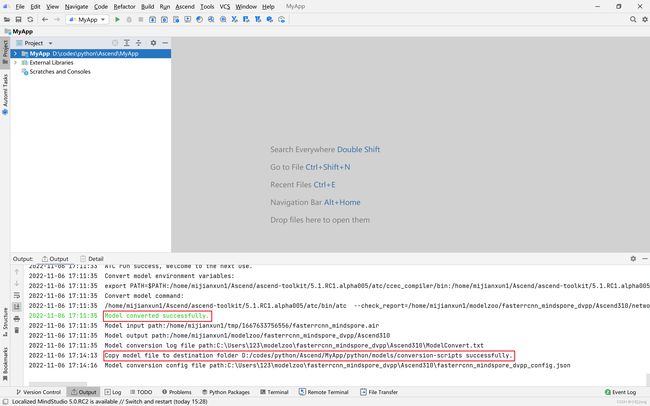

6、Faster R-CNN模型转换

用户使用 Caffe/TensorFlow 等框架训练好的第三方模型,可通过 ATC 工具将其转换为昇腾 AI 处理器支持的离线模型(*.om 文件),模型转换过程中可以实现算子调度的优化、权重数据重排、内存使用优化等,可以脱离设备完成模型的预处理,详细架构如下图。

在本项目中,要将 mindspore 框架下训练好的模型(.air 文件),转换为昇腾 AI 处理器支持的离线模型(.om 文件),具体步骤如下:

步骤 1: 点击 Ascend > Model Converter,进入模型转换界面,参数配置如图所示,若没有CANN Machine,请参见第四章第二节 CANN 安装。

各参数解释如下表所示:

| 参数 | 解释 |

|---|---|

| CANN Machine | CANN 的远程服务器 |

| Model File | *.air 文件的路径(可以在本地,也可以在服务器上) |

| Target SoC Version | 模型转换时指定芯片型号 |

| Model Name | 生成的 om 模型名字 |

| Output Path | 生成的 om 模型保存在本地的路径 |

| Input Format | 输入数据格式 |

| Input Nodes | 模型输入节点信息 |

步骤 2: 配置完成后,点击Next,进行数据预处理设置,配置完成后点击Next,如图:

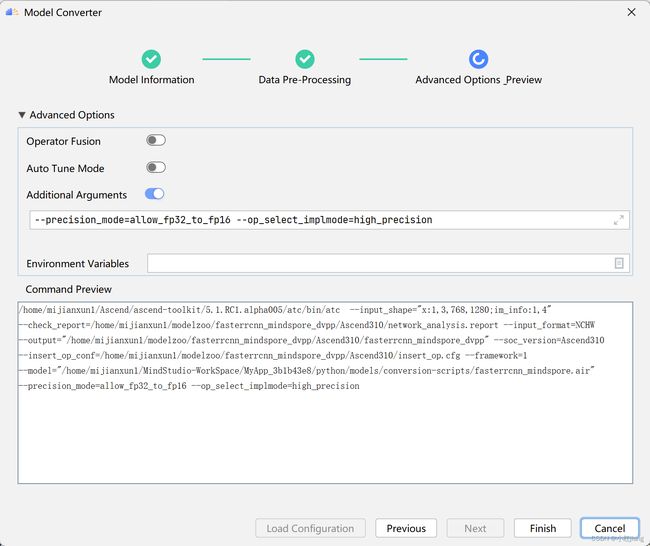

步骤 3: 进行模型转换命令及环境变量设置,该项目配置示例如图:

各参数解释如下表所示:

| 参数 | 解释 |

|---|---|

| Additional Arguments | 执行命令时需要添加的其他参数配置 |

| Environment Variables | 环境变量设置 |

| Command Preview | 查看经过前面一系列配置后最终的命名形式 |

步骤 4: 配置完成后,点击Finish进行模型转换。

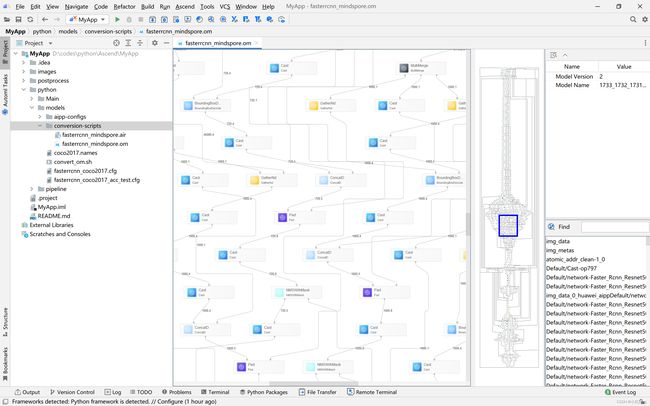

步骤 6:双击转换成功的 om 模型文件,可以查看网络结构。如下图所示。

7、编写后处理插件并编译

以下需要编写的文件均在./postprocess/目录下

1、头文件FasterRcnnMindsporePost.h

FasterRcnnMindsporePost.h头文件包含了类的声明(包括类里面的成员和方法的声明)、函数原型、#define 常数等。其中,#include 类及#define 常数如代码所示;定义的初始化参数结构体如代码所示;类里面的成员和方法的声明如代码所示。

/*

* Copyright (c) 2022. Huawei Technologies Co., Ltd. All rights reserved.

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

#ifndef FASTERRCNN_MINSPORE_PORT_H

#define FASTERRCNN_MINSPORE_PORT_H

#include

#include

#include 2、源文件FasterRcnnMindsporePost.cpp

这里我们主要是实现在头文件中定义的函数,接下来做一个简要的概括

- ReadConfigParams函数用来读取目标检测类别信息、以及一些超参数如scoreThresh、iouThresh

- Init函数用来进行目标检测后处理中常用的初始化

- IsValidTensors函数用来判断输出结果是否有效

- GetValidDetBoxes函数用来获取有效的推理信息

- ConvertObjInfoFromDetectBox函数用来将推理信息转为标注框信息

- ObjectDetectionOutput函数用来输出得到的推理结果

- Process函数用来做预处理

/*

* Copyright (c) 2022. Huawei Technologies Co., Ltd. All rights reserved.

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

#include

#include

#include

#include "acl/acl.h"

#include "FasterRcnnMindsporePost.h"

#include "MxBase/CV/ObjectDetection/Nms/Nms.h"

namespace {

// Output Tensor

const int OUTPUT_TENSOR_SIZE = 3;

const int OUTPUT_BBOX_SIZE = 3;

const int OUTPUT_BBOX_TWO_INDEX_SHAPE = 5;

const int OUTPUT_BBOX_INDEX = 0;

const int OUTPUT_CLASS_INDEX = 1;

const int OUTPUT_MASK_INDEX = 2;

const int BBOX_INDEX_LX = 0;

const int BBOX_INDEX_LY = 1;

const int BBOX_INDEX_RX = 2;

const int BBOX_INDEX_RY = 3;

const int BBOX_INDEX_PROB = 4;

const int BBOX_INDEX_SCALE_NUM = 5;

} // namespace

namespace MxBase {

FasterRcnnMindsporePost &FasterRcnnMindsporePost::operator=(const FasterRcnnMindsporePost &other) {

if (this == &other) {

return *this;

}

ObjectPostProcessBase::operator=(other);

return *this;

}

APP_ERROR FasterRcnnMindsporePost::ReadConfigParams() {

APP_ERROR ret = configData_.GetFileValue("CLASS_NUM", classNum_);

if (ret != APP_ERR_OK) {

LogWarn << GetError(ret) << "No CLASS_NUM in config file, default value(" << classNum_ << ").";

}

ret = configData_.GetFileValue("SCORE_THRESH", scoreThresh_);

if (ret != APP_ERR_OK) {

LogWarn << GetError(ret) << "No SCORE_THRESH in config file, default value(" << scoreThresh_ << ").";

}

ret = configData_.GetFileValue("IOU_THRESH", iouThresh_);

if (ret != APP_ERR_OK) {

LogWarn << GetError(ret) << "No IOU_THRESH in config file, default value(" << iouThresh_ << ").";

}

ret = configData_.GetFileValue("RPN_MAX_NUM", rpnMaxNum_);

if (ret != APP_ERR_OK) {

LogWarn << GetError(ret) << "No RPN_MAX_NUM in config file, default value(" << rpnMaxNum_ << ").";

}

ret = configData_.GetFileValue("MAX_PER_IMG", maxPerImg_);

if (ret != APP_ERR_OK) {

LogWarn << GetError(ret) << "No MAX_PER_IMG in config file, default value(" << maxPerImg_ << ").";

}

LogInfo << "The config parameters of post process are as follows: \n"

<< " CLASS_NUM: " << classNum_ << " \n"

<< " SCORE_THRESH: " << scoreThresh_ << " \n"

<< " IOU_THRESH: " << iouThresh_ << " \n"

<< " RPN_MAX_NUM: " << rpnMaxNum_ << " \n"

<< " MAX_PER_IMG: " << maxPerImg_ << " \n";

}

APP_ERROR FasterRcnnMindsporePost::Init(const std::map> &postConfig) {

LogInfo << "Begin to initialize FasterRcnnMindsporePost.";

APP_ERROR ret = ObjectPostProcessBase::Init(postConfig);

if (ret != APP_ERR_OK) {

LogError << GetError(ret) << "Fail to superinit in ObjectPostProcessBase.";

return ret;

}

ReadConfigParams();

LogInfo << "End to initialize FasterRcnnMindsporePost.";

return APP_ERR_OK;

}

APP_ERROR FasterRcnnMindsporePost::DeInit() {

LogInfo << "Begin to deinitialize FasterRcnnMindsporePost.";

LogInfo << "End to deinitialize FasterRcnnMindsporePost.";

return APP_ERR_OK;

}

bool FasterRcnnMindsporePost::IsValidTensors(const std::vector &tensors) const {

if (tensors.size() < OUTPUT_TENSOR_SIZE) {

LogError << "The number of tensor (" << tensors.size() << ") is less than required (" << OUTPUT_TENSOR_SIZE

<< ")";

return false;

}

auto bboxShape = tensors[OUTPUT_BBOX_INDEX].GetShape();

if (bboxShape.size() != OUTPUT_BBOX_SIZE) {

LogError << "The number of tensor[" << OUTPUT_BBOX_INDEX << "] dimensions (" << bboxShape.size()

<< ") is not equal to (" << OUTPUT_BBOX_SIZE << ")";

return false;

}

uint32_t total_num = classNum_ * rpnMaxNum_;

if (bboxShape[VECTOR_SECOND_INDEX] != total_num) {

LogError << "The output tensor is mismatched: " << total_num << "/" << bboxShape[VECTOR_SECOND_INDEX] << ").";

return false;

}

if (bboxShape[VECTOR_THIRD_INDEX] != OUTPUT_BBOX_TWO_INDEX_SHAPE) {

LogError << "The number of bbox[" << VECTOR_THIRD_INDEX << "] dimensions (" << bboxShape[VECTOR_THIRD_INDEX]

<< ") is not equal to (" << OUTPUT_BBOX_TWO_INDEX_SHAPE << ")";

return false;

}

auto classShape = tensors[OUTPUT_CLASS_INDEX].GetShape();

if (classShape[VECTOR_SECOND_INDEX] != total_num) {

LogError << "The output tensor is mismatched: (" << total_num << "/" << classShape[VECTOR_SECOND_INDEX]

<< "). ";

return false;

}

auto maskShape = tensors[OUTPUT_MASK_INDEX].GetShape();

if (maskShape[VECTOR_SECOND_INDEX] != total_num) {

LogError << "The output tensor is mismatched: (" << total_num << "/" << maskShape[VECTOR_SECOND_INDEX] << ").";

return false;

}

return true;

}

static bool CompareDetectBoxes(const MxBase::DetectBox &box1, const MxBase::DetectBox &box2) {

return box1.prob > box2.prob;

}

static void GetDetectBoxesTopK(std::vector &detBoxes, size_t kVal) {

std::sort(detBoxes.begin(), detBoxes.end(), CompareDetectBoxes);

if (detBoxes.size() <= kVal) {

return;

}

LogDebug << "Total detect boxes: " << detBoxes.size() << ", kVal: " << kVal;

detBoxes.erase(detBoxes.begin() + kVal, detBoxes.end());

}

void FasterRcnnMindsporePost::GetValidDetBoxes(const std::vector &tensors, std::vector &detBoxes,

uint32_t batchNum) {

LogInfo << "Begin to GetValidDetBoxes.";

auto *bboxPtr = (aclFloat16 *)GetBuffer(tensors[OUTPUT_BBOX_INDEX], batchNum); // 1 * 80000 * 5

auto *labelPtr = (int32_t *)GetBuffer(tensors[OUTPUT_CLASS_INDEX], batchNum); // 1 * 80000 * 1

auto *maskPtr = (bool *)GetBuffer(tensors[OUTPUT_MASK_INDEX], batchNum); // 1 * 80000 * 1

// mask filter

float prob = 0;

size_t total = rpnMaxNum_ * classNum_;

for (size_t index = 0; index < total; ++index) {

if (!maskPtr[index]) {

continue;

}

size_t startIndex = index * BBOX_INDEX_SCALE_NUM;

prob = aclFloat16ToFloat(bboxPtr[startIndex + BBOX_INDEX_PROB]);

if (prob <= scoreThresh_) {

continue;

}

MxBase::DetectBox detBox;

float x1 = aclFloat16ToFloat(bboxPtr[startIndex + BBOX_INDEX_LX]);

float y1 = aclFloat16ToFloat(bboxPtr[startIndex + BBOX_INDEX_LY]);

float x2 = aclFloat16ToFloat(bboxPtr[startIndex + BBOX_INDEX_RX]);

float y2 = aclFloat16ToFloat(bboxPtr[startIndex + BBOX_INDEX_RY]);

detBox.x = (x1 + x2) / COORDINATE_PARAM;

detBox.y = (y1 + y2) / COORDINATE_PARAM;

detBox.width = x2 - x1;

detBox.height = y2 - y1;

detBox.prob = prob;

detBox.classID = labelPtr[index];

detBoxes.push_back(detBox);

}

GetDetectBoxesTopK(detBoxes, maxPerImg_);

}

void FasterRcnnMindsporePost::ConvertObjInfoFromDetectBox(std::vector &detBoxes,

std::vector &objectInfos,

const ResizedImageInfo &resizedImageInfo) {

for (auto &detBoxe : detBoxes) {

if (detBoxe.classID < 0) {

continue;

}

ObjectInfo objInfo = {};

objInfo.classId = (float)detBoxe.classID;

objInfo.className = configData_.GetClassName(detBoxe.classID);

objInfo.confidence = detBoxe.prob;

objInfo.x0 = std::max(detBoxe.x - detBoxe.width / COORDINATE_PARAM, 0);

objInfo.y0 = std::max(detBoxe.y - detBoxe.height / COORDINATE_PARAM, 0);

objInfo.x1 = std::max(detBoxe.x + detBoxe.width / COORDINATE_PARAM, 0);

objInfo.y1 = std::max(detBoxe.y + detBoxe.height / COORDINATE_PARAM, 0);

objInfo.x0 = std::min(objInfo.x0, resizedImageInfo.widthOriginal - 1);

objInfo.y0 = std::min(objInfo.y0, resizedImageInfo.heightOriginal - 1);

objInfo.x1 = std::min(objInfo.x1, resizedImageInfo.widthOriginal - 1);

objInfo.y1 = std::min(objInfo.y1, resizedImageInfo.heightOriginal - 1);

LogDebug << "Find object: "

<< "classId(" << objInfo.classId << "), confidence(" << objInfo.confidence << "), Coordinates("

<< objInfo.x0 << ", " << objInfo.y0 << "; " << objInfo.x1 << ", " << objInfo.y1 << ").";

objectInfos.push_back(objInfo);

}

}

void FasterRcnnMindsporePost::ObjectDetectionOutput(const std::vector &tensors,

std::vector> &objectInfos,

const std::vector &resizedImageInfos) {

LogDebug << "FasterRcnnMindsporePost start to write results.";

auto shape = tensors[OUTPUT_BBOX_INDEX].GetShape();

uint32_t batchSize = shape[0];

for (uint32_t i = 0; i < batchSize; ++i) {

std::vector detBoxes;

std::vector objectInfo;

GetValidDetBoxes(tensors, detBoxes, i);

LogInfo << "DetBoxes size: " << detBoxes.size() << " iouThresh_: " << iouThresh_;

NmsSort(detBoxes, iouThresh_, MxBase::MAX);

ConvertObjInfoFromDetectBox(detBoxes, objectInfo, resizedImageInfos[i]);

objectInfos.push_back(objectInfo);

}

LogDebug << "FasterRcnnMindsporePost write results successed.";

}

APP_ERROR FasterRcnnMindsporePost::Process(const std::vector &tensors,

std::vector> &objectInfos,

const std::vector &resizedImageInfos,

const std::map> &configParamMap) {

LogDebug << "Begin to process FasterRcnnMindsporePost.";

auto inputs = tensors;

APP_ERROR ret = CheckAndMoveTensors(inputs);

if (ret != APP_ERR_OK) {

LogError << "CheckAndMoveTensors failed, ret=" << ret;

return ret;

}

ObjectDetectionOutput(inputs, objectInfos, resizedImageInfos);

LogInfo << "End to process FasterRcnnMindsporePost.";

return APP_ERR_OK;

}

extern "C" {

std::shared_ptr GetObjectInstance() {

LogInfo << "Begin to get FasterRcnnMindsporePost instance.";

auto instance = std::make_shared();

LogInfo << "End to get FasterRcnnMindsporePost Instance";

return instance;

}

}

} // namespace MxBase

3、CMakeLists.txt编译脚本

在编译脚本中,需要指定 CMake最低版本要求、项目信息、编译选项等参数,并且需要指定特定头文件和特定库文件的搜索路径。除此之外,要说明根据FasterRcnnMindsporePost.cpp源文件生成libfasterrcnn_mindspore_post.so可执行文件,同时需要指定可执行文件的安装位置,通常为{MX_SDK_HOME}/lib/modelpostprocessors/

cmake_minimum_required(VERSION 3.5.2)

project(fasterrcnnpost)

add_definitions(-D_GLIBCXX_USE_CXX11_ABI=0)

set(PLUGIN_NAME "fasterrcnn_mindspore_post")

set(TARGET_LIBRARY ${PLUGIN_NAME})

set(ACL_LIB_PATH $ENV{ASCEND_HOME}/ascend-toolkit/latest/acllib)

include_directories(${CMAKE_CURRENT_BINARY_DIR})

include_directories($ENV{MX_SDK_HOME}/include)

include_directories($ENV{MX_SDK_HOME}/opensource/include)

include_directories($ENV{MX_SDK_HOME}/opensource/include/opencv4)

include_directories($ENV{MX_SDK_HOME}/opensource/include/gstreamer-1.0)

include_directories($ENV{MX_SDK_HOME}/opensource/include/glib-2.0)

include_directories($ENV{MX_SDK_HOME}/opensource/lib/glib-2.0/include)

link_directories($ENV{MX_SDK_HOME}/lib)

link_directories($ENV{MX_SDK_HOME}/opensource/lib/)

add_compile_options(-std=c++11 -fPIC -fstack-protector-all -pie -Wno-deprecated-declarations)

add_compile_options("-DPLUGIN_NAME=${PLUGIN_NAME}")

add_compile_options("-Dgoogle=mindxsdk_private")

add_definitions(-DENABLE_DVPP_INTERFACE)

message("ACL_LIB_PATH:${ACL_LIB_PATH}.")

include_directories(${ACL_LIB_PATH}/include)

add_library(${TARGET_LIBRARY} SHARED ./FasterRcnnMindsporePost.cpp ./FasterRcnnMindsporePost.h)

target_link_libraries(${TARGET_LIBRARY} glib-2.0 gstreamer-1.0 gobject-2.0 gstbase-1.0 gmodule-2.0)

target_link_libraries(${TARGET_LIBRARY} plugintoolkit mxpidatatype mxbase)

target_link_libraries(${TARGET_LIBRARY} -Wl,-z,relro,-z,now,-z,noexecstack -s)

install(TARGETS ${TARGET_LIBRARY} LIBRARY DESTINATION $ENV{MX_SDK_HOME}/lib/modelpostprocessors/)

4、配置编译环境

步骤一:指定“CMakeLists.txt”编译配置文件

在工程界面左侧目录找到“CMakeLists.txt”文件,右键弹出并单击如所示“Load CMake Project”,即可指定此配置文件进行工程编译。

注:本项目编译文件CMakeLists.txt在目录./postprocess/下,上图仅作为功能展示

步骤二:编译配置

在MindStudio工程界面,依次选择“Build > Edit Build Configuration…”,进入编译配置页面,如图,配置完成后单击“OK”保存编译配置。

5、执行编译

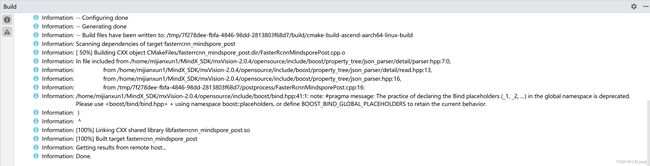

单击“Build”编译工程。如果在编译过程中无错误提示,且编译进度到“100%”,则表示编译成功,如图。

编译成功后,会在项目目录下生成build文件夹,里面有我们需要的可执行文件如图,也可在CMakeLists.txt中最后一行指定可执行文件安装的位置。

8、pipeline文件编排

pipeline文件编排是python版SDK最主要的推理开发步骤,作为一个目标检测任务,主要包括以下几个步骤: 图片获取 → 图片解码 → 图像缩放 → 目标检测 → 序列化 → 结果发送,以下介绍pipeline文件流程编排步骤:

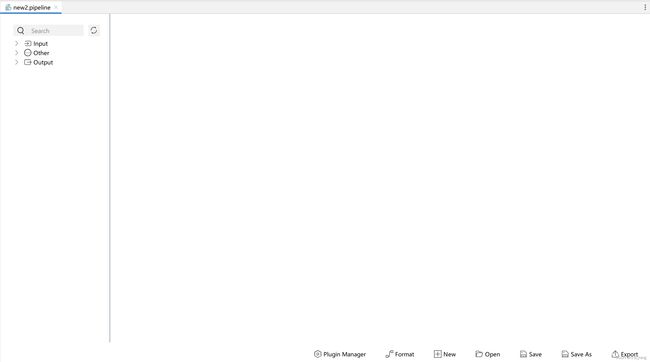

步骤一:在顶部菜单栏中选择“Ascend>MindX SDK Pipeline”,打开空白的pipeline绘制界面,如图:

步骤二:从左方插件库中拖动所需插件,放入编辑区,如图:

以下介绍本项目中,各个插件的功能:

| 插件名称 | 插件功能 |

|---|---|

| appsrc0 | 第一个输入张量,包含了图像数据 |

| appsrc1 | 第二个输入张量,包含了图像元数据,主要是图像原始尺寸和图像缩放比 |

| mxpi_imagedecoder0 | 用于图像解码,当前只支持JPG/JPEG/BMP格式 |

| mxpi_imageresize0 | 对解码后的YUV格式的图像进行指定宽高的缩放,暂时只支持YUV格式的图像 |

| mxpi_tensorinfer0 | 对输入的两个张量进行推理 |

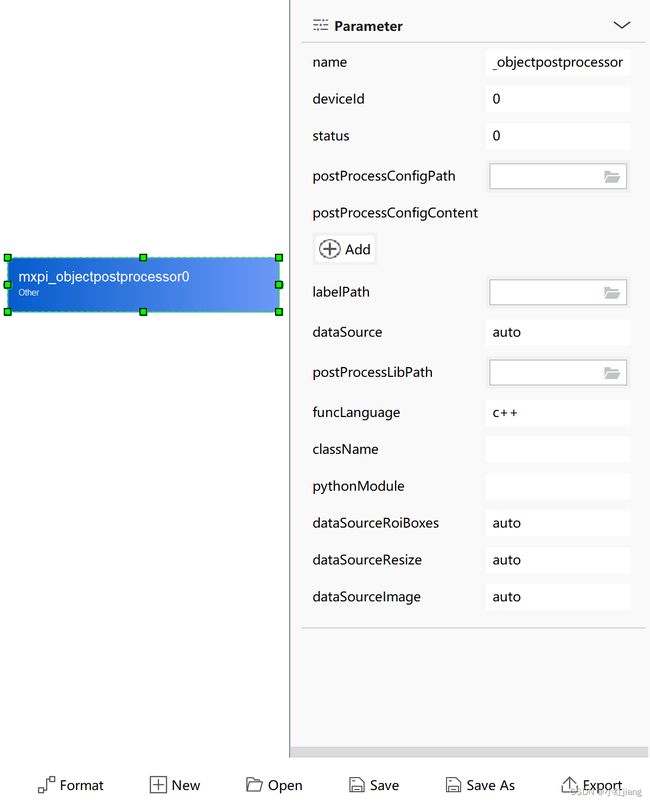

| mxpi_objectpostprocessor0 | 继承图像后处理基类,用于对目标检测模型推理的输出张量进行后处理 |

| mxpi_dataserialize0 | 将stream结果组装成json字符串输出 |

| appsink0 | 从stream中获取数据 |

步骤三:单击选中编辑区内的插件,在插件属性展示区自定义配置(如插件属性中的路径参数),如图:

步骤四:单击编辑区空白区域,插件两端出现接口,按照一定顺序用鼠标连接以上插件,然后点击编辑区下方Format进行格式化,最后点击编辑区下方Save保存pipeline文件,编写好的pipeline文件可视化结果如下图所示:

接下来展示文本代码:

{

"im_fasterrcnn": {

"stream_config": {

"deviceId": "0"

},

"appsrc0": {

"props": {

"blocksize": "409600"

},

"factory": "appsrc",

"next": "mxpi_imagedecoder0"

},

"mxpi_imagedecoder0": {

"factory": "mxpi_imagedecoder",

"next": "mxpi_imageresize0"

},

"mxpi_imageresize0": {

"props": {

"parentName": "mxpi_imagedecoder0",

"resizeHeight": "768",

"resizeWidth": "1280",

"resizeType": "Resizer_KeepAspectRatio_Fit"

},

"factory": "mxpi_imageresize",

"next": "mxpi_tensorinfer0:0"

},

"appsrc1": {

"props": {

"blocksize": "409600"

},

"factory": "appsrc",

"next": "mxpi_tensorinfer0:1"

},

"mxpi_tensorinfer0": {

"props": {

"dataSource": "mxpi_imageresize0,appsrc1",

"modelPath": "../models/conversion-scripts/fasterrcnn_mindspore.om"

},

"factory": "mxpi_tensorinfer",

"next": "mxpi_objectpostprocessor0"

},

"mxpi_objectpostprocessor0": {

"props": {

"dataSource": "mxpi_tensorinfer0",

"postProcessConfigPath": "../models/fasterrcnn_coco2017.cfg",

"labelPath": "../models/coco2017.names",

"postProcessLibPath": "../../postprocess/build/libfasterrcnn_mindspore_post.so"

},

"factory": "mxpi_objectpostprocessor",

"next": "mxpi_dataserialize0"

},

"mxpi_dataserialize0": {

"props": {

"outputDataKeys": "mxpi_objectpostprocessor0"

},

"factory": "mxpi_dataserialize",

"next": "appsink0"

},

"appsink0": {

"factory": "appsink"

}

}

}

9、本地编写python文件

1、main.py

main.py主要进行一些推理前的操作和调用infer.py进行推理,以及调用postprocess.py进行推理结果后处理。

步骤二:编写各个函数

-

parser_args函数用于读入执行改文件时所需的一些参数

def parser_args(): parser = argparse.ArgumentParser(description="FasterRcnn inference") parser.add_argument("--img_path", type=str, required=False, default="../data/test/crop/", help="image directory.") parser.add_argument( "--pipeline_path", type=str, required=False, default="../pipeline/fasterrcnn_ms_dvpp.pipeline", help="image file path. The default is 'config/maskrcnn_ms.pipeline'. ") parser.add_argument( "--model_type", type=str, required=False, default="dvpp", help= "rgb: high-precision, dvpp: high performance. The default is 'dvpp'.") parser.add_argument( "--infer_mode", type=str, required=False, default="infer", help= "infer:only infer, eval: accuracy evaluation. The default is 'infer'.") parser.add_argument( "--infer_result_dir", type=str, required=False, default="../data/test/infer_result", help= "cache dir of inference result. The default is '../data/test/infer_result'.") parser.add_argument("--ann_file", type=str, required=False, help="eval ann_file.") arg = parser.parse_args() return arg -

get_img_metas函数用于记录图像缩放比例

def get_img_metas(file_name): img = Image.open(file_name) img_size = img.size org_width, org_height = img_size resize_ratio = cfg.MODEL_WIDTH / org_width if resize_ratio > cfg.MODEL_HEIGHT / org_height: resize_ratio = cfg.MODEL_HEIGHT / org_height img_metas = np.array([img_size[1], img_size[0]] + [resize_ratio, resize_ratio]) return img_metas -

process_img函数用于对图像进行预处理

def process_img(img_file): img = cv2.imread(img_file) model_img = mmcv.imrescale(img, (cfg.MODEL_WIDTH, cfg.MODEL_HEIGHT)) if model_img.shape[0] > cfg.MODEL_HEIGHT: model_img = mmcv.imrescale(model_img, (cfg.MODEL_HEIGHT, cfg.MODEL_HEIGHT)) pad_img = np.zeros( (cfg.MODEL_HEIGHT, cfg.MODEL_WIDTH, 3)).astype(model_img.dtype) pad_img[0:model_img.shape[0], 0:model_img.shape[1], :] = model_img pad_img.astype(np.float16) return pad_img -

crop_on_slide函数用于对图片进行滑窗裁剪,因为输入的图片尺寸大多都为4000*1000左右,不利于缺陷的识别和推理,对其进行滑窗裁剪后,得到的多张小图片更利于缺陷识别和推理

def crop_on_slide(cut_path, crop_path, stride): if not os.path.exists(crop_path): os.mkdir(crop_path) else: remove_list = os.listdir(crop_path) for filename in remove_list: os.remove(os.path.join(crop_path, filename)) output_shape = 600 imgs = os.listdir(cut_path) for img in imgs: if img.split('.')[1] != "jpg" and img.split('.')[1] != "JPG": raise ValueError("The file {} is not jpg or JPG image!".format(img)) origin_image = cv2.imread(os.path.join(cut_path, img)) height = origin_image.shape[0] width = origin_image.shape[1] x = 0 newheight = output_shape newwidth = output_shape while x < width: y = 0 if x + newwidth <= width: while y < height: if y + newheight <= height: hmin = y hmax = y + newheight wmin = x wmax = x + newwidth else: hmin = height - newheight hmax = height wmin = x wmax = x + newwidth y = height # test crop_img = os.path.join(crop_path, ( img.split('.')[0] + '_' + str(wmax) + '_' + str(hmax) + '_' + str(output_shape) + '.jpg')) cv2.imwrite(crop_img, origin_image[hmin: hmax, wmin: wmax]) y = y + stride if y + output_shape == height: y = height else: while y < height: if y + newheight <= height: hmin = y hmax = y + newheight wmin = width - newwidth wmax = width else: hmin = height - newheight hmax = height wmin = width - newwidth wmax = width y = height # test crop_img = os.path.join(crop_path, ( img.split('.')[0] + '_' + str(wmax) + '_' + str(hmax) + '_' + str( output_shape) + '.jpg')) cv2.imwrite(crop_img, origin_image[hmin: hmax, wmin: wmax]) y = y + stride x = width x = x + stride if x + output_shape == width: x = width -

image_inference函数用于流的初始化,推理所需文件夹的创建、图片预处理、推理时间记录、推理后处理、推理结果可视化

def image_inference(pipeline_path, s_name, img_dir, result_dir, rp_last, model_type): sdk_api = SdkApi(pipeline_path) if not sdk_api.init(): exit(-1) if not os.path.exists(result_dir): os.makedirs(result_dir) img_data_plugin_id = 0 img_metas_plugin_id = 1 logging.info("\nBegin to inference for {}.\n\n".format(img_dir)) file_list = os.listdir(img_dir) total_len = len(file_list) if total_len == 0: logging.info("ERROR\nThe input directory is EMPTY!\nPlease place the picture in '../data/test/cut'!") for img_id, file_name in enumerate(file_list): if not file_name.lower().endswith((".jpg", "jpeg")): continue file_path = os.path.join(img_dir, file_name) save_path = os.path.join(result_dir, f"{os.path.splitext(file_name)[0]}.json") if not rp_last and os.path.exists(save_path): logging.info("The infer result json({}) has existed, will be skip.".format(save_path)) continue try: if model_type == 'dvpp': with open(file_path, "rb") as fp: data = fp.read() sdk_api.send_data_input(s_name, img_data_plugin_id, data) else: img_np = process_img(file_path) sdk_api.send_img_input(s_name, img_data_plugin_id, "appsrc0", img_np.tobytes(), img_np.shape) # set image data img_metas = get_img_metas(file_path).astype(np.float32) sdk_api.send_tensor_input(s_name, img_metas_plugin_id, "appsrc1", img_metas.tobytes(), [1, 4], cfg.TENSOR_DTYPE_FLOAT32) start_time = time.time() result = sdk_api.get_result(s_name) end_time = time.time() - start_time if os.path.exists(save_path): os.remove(save_path) flags = os.O_WRONLY | os.O_CREAT | os.O_EXCL modes = stat.S_IWUSR | stat.S_IRUSR with os.fdopen(os.open((save_path), flags, modes), 'w') as fp: fp.write(json.dumps(result)) logging.info( "End-2end inference, file_name: {}, {}/{}, elapsed_time: {}.\n".format(file_path, img_id + 1, total_len, end_time)) draw_label(save_path, file_path, result_dir) except Exception as ex: logging.exception("Unknown error, msg:{}.".format(ex)) post_process()

步骤三:main方法编写

if __name__ == "__main__":

args = parser_args()

REPLACE_LAST = True

STREAM_NAME = cfg.STREAM_NAME.encode("utf-8")

CUT_PATH = "../data/test/cut/"

CROP_IMG_PATH = "../data/test/crop/"

STRIDE = 450

crop_on_slide(CUT_PATH, CROP_IMG_PATH, STRIDE)

image_inference(args.pipeline_path, STREAM_NAME, args.img_path,

args.infer_result_dir, REPLACE_LAST, args.model_type)

if args.infer_mode == "eval":

logging.info("Infer end.\nBegin to eval...")

get_eval_result(args.ann_file, args.infer_result_dir)

2、infer.py

infer.py中是主要的sdk推理步骤,包括流的初始化到流的销毁,编写完成后在main.py中调用。

步骤二:编写各个函数

-

init魔法属性用来构造sdk实例化对象

def __init__(self, pipeline_cfg): self.pipeline_cfg = pipeline_cfg self._stream_api = None self._data_input = None self._device_id = None -

del魔法属性用来销毁实例化对象

def __del__(self): if not self._stream_api: return self._stream_api.DestroyAllStreams() -

_convert_infer_result函数用来将推理结果输出

def _convert_infer_result(infer_result): data = infer_result.get('MxpiObject') if not data: logging.info("The result data is empty.") return infer_result for bbox in data: if 'imageMask' not in bbox: continue mask_info = json_format.ParseDict(bbox["imageMask"], MxpiDataType.MxpiImageMask()) mask_data = np.frombuffer(mask_info.dataStr, dtype=np.uint8) bbox['imageMask']['data'] = "".join([str(i) for i in mask_data]) bbox['imageMask'].pop("dataStr") return infer_result -

init函数用来stream manager的初始化

def init(self): try: with open(self.pipeline_cfg, 'r') as fp: self._device_id = int( json.loads(fp.read())[self.STREAM_NAME]["stream_config"] ["deviceId"]) logging.info("The device id: {}.".format(self._device_id)) # create api self._stream_api = StreamManagerApi() # init stream mgr ret = self._stream_api.InitManager() if ret != 0: logging.info("Failed to init stream manager, ret={}.".format(ret)) return False # create streams with open(self.pipeline_cfg, 'rb') as fp: pipe_line = fp.read() ret = self._stream_api.CreateMultipleStreams(pipe_line) if ret != 0: logging.info("Failed to create stream, ret={}.".format(ret)) return False self._data_input = MxDataInput() except Exception as exe: logging.exception("Unknown error, msg:{}".format(exe)) return False return True -

send_data_input函数用来传输推理数据

def send_data_input(self, stream_name, plugin_id, input_data): data_input = MxDataInput() data_input.data = input_data unique_id = self._stream_api.SendData(stream_name, plugin_id, data_input) if unique_id < 0: logging.error("Fail to send data to stream.") return False return True -

send_img_input函数用来传入图片

def send_img_input(self, stream_name, plugin_id, element_name, input_data, img_size): vision_list = MxpiDataType.MxpiVisionList() vision_vec = vision_list.visionVec.add() vision_vec.visionInfo.format = 1 vision_vec.visionInfo.width = img_size[1] vision_vec.visionInfo.height = img_size[0] vision_vec.visionInfo.widthAligned = img_size[1] vision_vec.visionInfo.heightAligned = img_size[0] vision_vec.visionData.memType = 0 vision_vec.visionData.dataStr = input_data vision_vec.visionData.dataSize = len(input_data) buf_type = b"MxTools.MxpiVisionList" return self._send_protobuf(stream_name, plugin_id, element_name, buf_type, vision_list) -

send_tensor_input函数用来传入张量数据

def send_tensor_input(self, stream_name, plugin_id, element_name, input_data, input_shape, data_type): tensor_list = MxpiDataType.MxpiTensorPackageList() tensor_pkg = tensor_list.tensorPackageVec.add() # init tensor vector tensor_vec = tensor_pkg.tensorVec.add() tensor_vec.deviceId = self._device_id tensor_vec.memType = 0 tensor_vec.tensorShape.extend(input_shape) tensor_vec.tensorDataType = data_type tensor_vec.dataStr = input_data tensor_vec.tensorDataSize = len(input_data) buf_type = b"MxTools.MxpiTensorPackageList" return self._send_protobuf(stream_name, plugin_id, element_name, buf_type, tensor_list) -

get_result函数用来获得推理结果

def get_result(self, stream_name, out_plugin_id=0): infer_res = self._stream_api.GetResult(stream_name, out_plugin_id, self.INFER_TIMEOUT) if infer_res.errorCode != 0: logging.info("GetResultWithUniqueId error, errorCode={}, errMsg={}".format(infer_res.errorCode, infer_res.data.decode())) return None res_dict = json.loads(infer_res.data.decode()) return self._convert_infer_result(res_dict) -

_send_protobuf函数用来对目标检测结果进行序列化

def _send_protobuf(self, stream_name, plugin_id, element_name, buf_type, pkg_list): protobuf = MxProtobufIn() protobuf.key = element_name.encode("utf-8") protobuf.type = buf_type protobuf.protobuf = pkg_list.SerializeToString() protobuf_vec = InProtobufVector() protobuf_vec.push_back(protobuf) err_code = self._stream_api.SendProtobuf(stream_name, plugin_id, protobuf_vec) if err_code != 0: logging.error( "Failed to send data to stream, stream_name:{}, plugin_id:{}, " "element_name:{}, buf_type:{}, err_code:{}.".format( stream_name, plugin_id, element_name, buf_type, err_code)) return False return True

3、postprocess.py

对经过滑窗裁剪后的小图片进行推理,最后得到的推理结果也是在小图片上,因此需要对推理结果进行后处理,将小图片上的推理结果还原到未经过滑窗裁剪的图片上。

步骤二:编写各个函数

-

json_to_txt函数用来将得到的json格式推理结果转为txt格式

def json_to_txt(infer_result_path, savetxt_path): if os.path.exists(savetxt_path): shutil.rmtree(savetxt_path) os.mkdir(savetxt_path) files = os.listdir(infer_result_path) for file in files: if file.endswith(".json"): json_path = os.path.join(infer_result_path, file) with open(json_path, 'r') as fp: result = json.loads(fp.read()) if result: data = result.get("MxpiObject") txt_file = file.split(".")[0] + ".txt" flags = os.O_WRONLY | os.O_CREAT | os.O_EXCL modes = stat.S_IWUSR | stat.S_IRUSR with os.fdopen(os.open(os.path.join(savetxt_path, txt_file), flags, modes), 'w') as f: if file.split('_')[0] == "W0003": temp = int(file.split("_")[2]) - 600 else: temp = int(file.split("_")[1]) - 600 for bbox in data: class_vec = bbox.get("classVec")[0] class_id = int(class_vec["classId"]) confidence = class_vec.get("confidence") xmin = bbox["x0"] ymin = bbox["y0"] xmax = bbox["x1"] ymax = bbox["y1"] if xmax - xmin >= 5 and ymax - ymin >= 5: f.write( str(xmin + temp) + ',' + str(ymin) + ',' + str(xmax + temp) + ',' + str( ymax) + ',' + str( round(confidence, 2)) + ',' + str(class_id) + '\n') -

hebing_txt函数用来将滑窗裁剪后的小图片推理结果还原到原始图片上

def hebing_txt(txt_path, save_txt_path, remove_txt_path, cut_path): if not os.path.exists(save_txt_path): os.makedirs(save_txt_path) if not os.path.exists(remove_txt_path): os.makedirs(remove_txt_path) fileroot = os.listdir(save_txt_path) remove_list = os.listdir(remove_txt_path) for filename in remove_list: os.remove(os.path.join(remove_txt_path, filename)) for filename in fileroot: os.remove(os.path.join(save_txt_path, filename)) data = [] for file in os.listdir(cut_path): data.append(file.split(".")[0]) txt_list = os.listdir(txt_path) flags = os.O_WRONLY | os.O_CREAT | os.O_EXCL modes = stat.S_IWUSR | stat.S_IRUSR for image in data: fw = os.fdopen(os.open(os.path.join(save_txt_path, image + '.txt'), flags, modes), 'w') for txtfile in txt_list: if image.split('_')[0] == "W0003": if image.split('_')[1] == txtfile.split('_')[1]: for line in open(os.path.join(txt_path, txtfile), "r"): fw.write(line) else: if image.split('_')[0] == txtfile.split('_')[0]: for line in open(os.path.join(txt_path, txtfile), "r"): fw.write(line) fw.close() fileroot = os.listdir(save_txt_path) for file in fileroot: oldname = os.path.join(save_txt_path, file) newname = os.path.join(remove_txt_path, file) shutil.copyfile(oldname, newname) -

py_cpu_nms、plot_bbox、nms_box函数用来对还原后的推理结果进行nms去重处理,在进行滑窗裁剪时,为了不在裁剪时将缺陷切断从而保留所有缺陷,所以设置的滑窗步长小于小图片尺寸,因此得到的推理结果会有重复,需进行nms去重处理

def py_cpu_nms(dets, thresh): x1 = dets[:, 0] y1 = dets[:, 1] x2 = dets[:, 2] y2 = dets[:, 3] areas = (y2 - y1 + 1) * (x2 - x1 + 1) scores = dets[:, 4] keep = [] index = scores.argsort()[::-1] while index.size > 0: i = index[0] # every time the first is the biggst, and add it directly keep.append(i) x11 = np.maximum(x1[i], x1[index[1:]]) # calculate the points of overlap y11 = np.maximum(y1[i], y1[index[1:]]) x22 = np.minimum(x2[i], x2[index[1:]]) y22 = np.minimum(y2[i], y2[index[1:]]) w = np.maximum(0, x22 - x11 + 1) # the weights of overlap h = np.maximum(0, y22 - y11 + 1) # the height of overlap overlaps = w * h ious = overlaps / (areas[i] + areas[index[1:]] - overlaps) idx = np.where(ious <= thresh)[0] index = index[idx + 1] # because index start from 1 return keep def plot_bbox(dets, c='k'): x1 = dets[:, 0] y1 = dets[:, 1] x2 = dets[:, 2] y2 = dets[:, 3] plt.plot([x1, x2], [y1, y1], c) plt.plot([x1, x1], [y1, y2], c) plt.plot([x1, x2], [y2, y2], c) plt.plot([x2, x2], [y1, y2], c) plt.title(" nms") def nms_box(image_path, image_save_path, txt_path, thresh, obj_list): if not os.path.exists(image_save_path): os.makedirs(image_save_path) remove_list = os.listdir(image_save_path) for filename in remove_list: os.remove(os.path.join(image_save_path, filename)) txt_list = os.listdir(txt_path) for txtfile in tqdm.tqdm(txt_list): boxes = np.loadtxt(os.path.join(txt_path, txtfile), dtype=np.float32, delimiter=',') if boxes.size > 5: if os.path.exists(os.path.join(txt_path, txtfile)): os.remove(os.path.join(txt_path, txtfile)) flags = os.O_WRONLY | os.O_CREAT | os.O_EXCL modes = stat.S_IWUSR | stat.S_IRUSR fw = os.fdopen(os.open(os.path.join(txt_path, txtfile), flags, modes), 'w') keep = py_cpu_nms(boxes, thresh=thresh) img = cv.imread(os.path.join(image_path, txtfile[:-3] + 'jpg'), 0) for label in boxes[keep]: fw.write(str(int(label[0])) + ',' + str(int(label[1])) + ',' + str(int(label[2])) + ',' + str( int(label[3])) + ',' + str(round((label[4]), 2)) + ',' + str(int(label[5])) + '\n') x_min = int(label[0]) y_min = int(label[1]) x_max = int(label[2]) y_max = int(label[3]) color = (0, 0, 255) if x_max - x_min >= 5 and y_max - y_min >= 5: cv.rectangle(img, (x_min, y_min), (x_max, y_max), color, 1) font = cv.FONT_HERSHEY_SIMPLEX cv.putText(img, (obj_list[int(label[5])] + str(round((label[4]), 2))), (x_min, y_min - 7), font, 0.4, (6, 230, 230), 1) cv.imwrite(os.path.join(image_save_path, txtfile[:-3] + 'jpg'), img) fw.close() -

post_process函数用来调用以上编写的函数,最后在main.py中被调用

def post_process(): infer_result_path = "../data/test/infer_result" txt_save_path = "../data/test/img_txt" json_to_txt(infer_result_path, txt_save_path) txt_path = "../data/test/img_txt" all_txt_path = "../data/test/img_huizong_txt" nms_txt_path = "../data/test/img_huizong_txt_nms" cut_path = "../data/test/cut" hebing_txt(txt_path, all_txt_path, nms_txt_path, cut_path) cut_path = "../data/test/cut" image_save_path = "../data/test/draw_result" nms_txt_path = "../data/test/img_huizong_txt_nms" obj_lists = ['qikong', 'liewen'] nms_box(cut_path, image_save_path, nms_txt_path, thresh=0.1, obj_list=obj_lists)

10、代码运行

前面的步骤完成之后,我们就可以进行代码的运行了,本项目中,图片的输入输出位置都是用的相对路径,因此不需要修改路径参数,按以下步骤进行模型推理:

步骤一:放置待检测图片

本项目中,将图片放置在./python/data/test/cut目录下,例如我放的图片:

步骤二:在main.py中设置好初始图片所在位置和结果图片保存位置。

步骤三:设置运行脚本运行应用

五、常见问题

在使用 MindStudio 时,遇到问题,可以登陆华为云论坛云计算论坛开发者论坛技术论坛-华为云 (huaweicloud.com)进行互动,提出问题,会有专家老师为你解答。

1、CANN 连接错误

点击OK,点击Show Error Details,查看报错信息:

问题:权限不够,无法连接。

解决方案:在远程环境自己的工作目录下重新下载CANN后,再连接CANN即可。

2、后处理插件权限问题

两种解决方案:

方案一:在远程终端中找到后处理插件所在位置,修改其权限为640,如图:

方案二:在远程终端环境中找到后处理插件所在位置,将其复制到MindX SDK自带的后处理插件库文件夹下,并修改其权限为640,然后修改pipeline文件中后处理插件所在位置。

注:MindX SDK自带的后处理插件库文件夹一般为${MX_SDK_HOME}/lib/modelpostprocessors/