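VGG: Theory Overview, with PyTorch and TensorFlow Implementations

VGGNet

After AlexNet was published in 2012, the research community did a great deal of work to improve it. The model was steadily refined, and its performance on ImageNet in particular improved significantly. Two important improvements on AlexNet are VGG and GoogLeNet.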

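4.1 Where the name VGGNet comes from, and the authors

The paper is titled "Very Deep Convolutional Networks for Large-Scale Image Recognition" [1]. All of its authors were at the Visual Geometry Group (VGG) at the University of Oxford, so the model it proposes is called the VGG network. At the time this article was written, the paper had been cited about 14,000 times.

First, a brief look at the authors.

The first author is Karen Simonyan, who was a PhD student at the University of Oxford when the paper was published. He later joined Google, working at DeepMind, where he continued his research on deep learning.

The second author is Andrew Zisserman, a professor at the University of Oxford and a leading authority in computer vision. He has won the Marr Prize, one of the most prestigious awards in computer vision, three times.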

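4.2 Main contributions of the VGG paper

What are the paper's main contributions? A key one is a study of how to make earlier models (such as AlexNet) deeper, to obtain better generalization and ultimately a lower classification error rate.

To understand this contribution, recall AlexNet's architecture. AlexNet has 8 layers: 5 convolutional layers and 3 fully connected layers. It could be trained effectively thanks to the rectified linear unit (ReLU), data augmentation, and Dropout. These techniques are what allowed AlexNet to reach 8 layers.

The research community had long believed that, in theory, a neural network with more layers should generalize better. In principle, an 8-layer network could be extended to 18 or even 80 layers. In practice, however, vanishing gradients and overfitting made deeper networks very hard to train. In this paper, the VGG authors start from AlexNet and test whether adding more layers yields a better model.

So how does VGG make the network deeper? In short, it changes the convolutional filters, which lets it reach a 19-layer structure. Compared with AlexNet, VGG roughly halves the error rate on ImageNet. It can be regarded as the first network that is "deep" in a real sense.

What exactly changes in the filters? Put simply, the convolutional layers use only 3×3 receptive fields, so each layer's filters are very small. Intuitively, the result is a very "thin" architecture. The convolutional layers are followed by three fully connected layers and a final softmax layer that performs classification. One detail: VGG drops Local Response Normalization (LRN), which AlexNet introduced, because it did not actually improve model performance.

A key point in training VGG is to start from a smaller structure. You can think of this as first training a shallower, AlexNet-like network (configuration A in the paper) and then using its trained weights to initialize the deeper networks. The authors found that this pre-training approach trains more stably than initializing the deeper model entirely at random.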

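4.3 Going deeper: VGGNet

After AlexNet's breakout success in the 2012 ImageNet competition, convolutional networks entered a period of rapid development. VGGNet (Visual Geometry Group Network), the runner-up architecture in the 2014 ImageNet competition, improved on convolutional networks by exploring the relationship between network depth and performance. By using smaller convolution kernels and a deeper structure, it achieved good results and became one of the important networks in the history of convolutional architectures.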

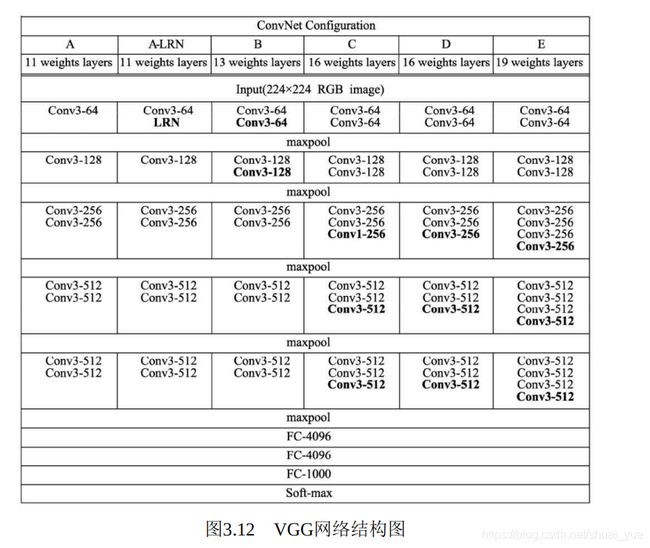

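Figure 3.12 shows the structure of VGGNet. There are 6 different versions, of which VGG16 is the most commonly used. As Figure 3.12 shows, VGGNet uses five groups of convolutions and three fully connected layers, and finally applies Softmax for classification. A notable feature of VGGNet is that each max-pooling layer halves the spatial size of the feature map. The number of channels, meanwhile, doubles in the following block up to a maximum of 512, so the fourth and fifth blocks both use 512 channels.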
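To make the halving/doubling pattern concrete, the sketch below traces the output shape of each VGG16 block for a 224×224 input. It uses the standard output-size formula for convolution and pooling layers. It is an illustration written for this article (the helper names `out_size` and `vgg16_stage_shapes` are hypothetical), not code from the paper.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output size of a conv/pool layer: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def vgg16_stage_shapes(size=224):
    # (number of 3x3 conv layers, output channels) for the five VGG16 blocks
    blocks = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
    shapes = []
    for n_convs, channels in blocks:
        for _ in range(n_convs):
            size = out_size(size, kernel=3, stride=1, padding=1)  # 3x3 conv, padding 1: size unchanged
        size = out_size(size, kernel=2, stride=2)                 # 2x2 max-pool, stride 2: size halved
        shapes.append((channels, size, size))
    return shapes


for stage, shape in enumerate(vgg16_stage_shapes(), start=1):
    print(f"after block {stage}: {shape}")
# after block 1: (64, 112, 112)
# after block 2: (128, 56, 56)
# after block 3: (256, 28, 28)
# after block 4: (512, 14, 14)
# after block 5: (512, 7, 7)
```

The final (512, 7, 7) map is why the first fully connected layer of VGG16 takes 512 × 7 × 7 = 25088 inputs.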

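AlexNet uses 5×5 convolution kernels (and an 11×11 kernel in its first layer). In VGGNet, by contrast, almost all kernels are 3×3, and several 3×3 layers are often stacked. This design has several advantages. First, in terms of receptive field, two stacked 3×3 convolutions cover the same region as one 5×5 convolution. Second, for the same receptive field, the 3×3 stack has fewer parameters. More importantly, two 3×3 layers are more nonlinear than a single 5×5 layer because they include two activation functions, which greatly increases the network's learning capacity.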
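Both claims can be checked numerically. The sketch below assumes PyTorch is installed, and C = 64 channels is an arbitrary illustrative choice. It compares parameter counts with biases disabled, so only the kernels are counted. It then measures the receptive field empirically: it back-propagates from the center output pixel and measures the extent of the input region that receives a nonzero gradient. The helper names `n_params` and `receptive_field` are hypothetical.

```python
import torch
from torch import nn

torch.manual_seed(0)
C = 64  # input and output channels (arbitrary choice for illustration)


def n_params(module):
    return sum(p.numel() for p in module.parameters())


one_5x5 = nn.Conv2d(C, C, kernel_size=5, padding=2, bias=False)
two_3x3 = nn.Sequential(
    nn.Conv2d(C, C, kernel_size=3, padding=1, bias=False), nn.ReLU(),
    nn.Conv2d(C, C, kernel_size=3, padding=1, bias=False), nn.ReLU(),
)
print(n_params(one_5x5), n_params(two_3x3))  # 102400 73728


def receptive_field(net, channels, size=15):
    """Height of the input region whose pixels influence the center output pixel."""
    x = torch.randn(1, channels, size, size, requires_grad=True)
    y = net(x)
    y[0, :, size // 2, size // 2].sum().backward()
    influenced = x.grad.abs().sum(dim=(0, 1)) > 1e-6  # H x W mask of pixels with nonzero gradient
    rows = influenced.any(dim=1).nonzero()
    return int(rows.max() - rows.min() + 1)


print(receptive_field(one_5x5, C), receptive_field(two_3x3, C))  # 5 5
```

The two designs see the same 5×5 input window, but the 3×3 stack uses 18C² instead of 25C² weights and applies two ReLUs instead of one.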

Below we build the classic VGG16 network in PyTorch. Create a file named vgg.py with the following contents:

```python
from torch import nn


class VGG(nn.Module):
    def __init__(self, num_classes=1000):
        super(VGG, self).__init__()
        layers = []
        in_dim = 3
        out_dim = 64
        # Build the convolutional layers in a loop: 13 conv layers in total
        for i in range(13):
            layers += [nn.Conv2d(in_dim, out_dim, 3, 1, 1), nn.ReLU(inplace=True)]
            in_dim = out_dim
            # Add a max-pooling layer after the 2nd, 4th, 7th, 10th and 13th conv layers
            if i == 1 or i == 3 or i == 6 or i == 9 or i == 12:
                layers += [nn.MaxPool2d(2, 2)]
                # After each pooling the channel count doubles, except after the 10th conv,
                # where it stays at 512 (the last block also uses 512 channels)
                if i != 9:
                    out_dim *= 2
        self.features = nn.Sequential(*layers)
        # VGGNet's 3 fully connected layers, with ReLU and Dropout in between
        self.classifier = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(True),
            nn.Dropout(),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        # Flatten the feature map from [N, 512, 7, 7] to [N, 512*7*7]
        x = x.view(x.size(0), -1)
        x = self.classifier(x)
        return x
```

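In a terminal, change to the directory that contains vgg.py, run python3 to start an interactive session, and call the module as follows. The example moves the model to the GPU; drop the `.cuda()` calls to run on the CPU.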

```python
>>> import torch
>>> from vgg import VGG
# Instantiate VGG with 21 output classes and move it to the GPU
>>> vgg = VGG(21).cuda()
>>> input = torch.randn(1, 3, 224, 224).cuda()
>>> input.shape
torch.Size([1, 3, 224, 224])
# Call VGG to get scores for the 21 classes
>>> scores = vgg(input)
>>> scores.shape
torch.Size([1, 21])
# The convolutional part can also be called on its own to get the last feature map
>>> features = vgg.features(input)
>>> features.shape
torch.Size([1, 512, 7, 7])
# Print VGGNet's convolutional part: 5 conv blocks, 31 modules (13 conv + 13 ReLU + 5 max-pool)
# (module reprs below come from an older PyTorch release; newer releases print ReLU(inplace=True))
>>> vgg.features
Sequential(
  (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (1): ReLU(inplace)
  (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (3): ReLU(inplace)
  (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (6): ReLU(inplace)
  (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (8): ReLU(inplace)
  (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (11): ReLU(inplace)
  (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (13): ReLU(inplace)
  (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (15): ReLU(inplace)
  (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (18): ReLU(inplace)
  (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (20): ReLU(inplace)
  (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (22): ReLU(inplace)
  (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (25): ReLU(inplace)
  (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (27): ReLU(inplace)
  (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (29): ReLU(inplace)
  (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
)
# Print VGGNet's 3 fully connected layers
>>> vgg.classifier
Sequential(
  (0): Linear(in_features=25088, out_features=4096, bias=True)
  (1): ReLU(inplace)
  (2): Dropout(p=0.5)
  (3): Linear(in_features=4096, out_features=4096, bias=True)
  (4): ReLU(inplace)
  (5): Dropout(p=0.5)
  (6): Linear(in_features=4096, out_features=21, bias=True)
)
```

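VGGNet is simple, flexible, and easy to extend, and it generalizes well when transferred to other datasets. For these reasons, many detection and segmentation algorithms still use VGGNet as their backbone.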

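General design

- The input is a fixed-size 224×224 RGB image.
- The only preprocessing is subtracting the mean RGB value, computed on the training set, from each pixel.
- Filters with a very small receptive field are used: 3×3, the smallest size that captures the notions of left/right, up/down, and center. One configuration also uses 1×1 filters, which can be viewed as a linear transformation of the input channels (followed by a nonlinearity). The convolution stride is 1.
- 3×3 convolutions use a padding of 1, so the spatial resolution is preserved.
- Spatial pooling is done by five max-pooling layers, each with a 2×2 window and stride 2.
- The stack of convolutional layers is followed by three fully connected layers: the first two have 4096 channels each, and the third performs 1000-way classification.
- The final layer is a softmax.
- All hidden layers use ReLU.
- No local response normalization is used: it does not improve performance but increases memory consumption and computation time.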
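The mean-subtraction step can be sketched with NumPy. This minimal illustration assumes the training images are already loaded as a uint8 array of shape (N, H, W, 3). Here `train_images` is random placeholder data, not a real dataset, and `preprocess` is a hypothetical helper name.

```python
import numpy as np

rng = np.random.default_rng(0)
# Placeholder for a training set: N RGB images of size 224x224, stored as uint8
train_images = rng.integers(0, 256, size=(8, 224, 224, 3), dtype=np.uint8)

# Mean RGB value over all training pixels: one number per channel, shape (3,)
mean_rgb = train_images.reshape(-1, 3).mean(axis=0)


def preprocess(images, mean=mean_rgb):
    """Subtract the per-channel training-set mean from every pixel."""
    return images.astype(np.float32) - mean.astype(np.float32)


x = preprocess(train_images)
print(mean_rgb.shape, x.mean(axis=(0, 1, 2)))  # per-channel means are now close to 0
```

The PyTorch scripts later in this article use `transforms.Normalize` with the ImageNet mean and standard deviation instead. Besides subtracting the mean, that step also divides by the per-channel standard deviation.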

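Configurations evaluated in detail

The paper evaluates six models:

- A: 11 weight layers (8 convolutional + 3 fully connected).
- A-LRN: A with an LRN layer added after the first convolutional layer.
- B: 13 weight layers; adds one 3×3 convolution to each of the 1st and 2nd blocks.
- C: 16 weight layers; adds one 1×1 convolution to each of the 3rd, 4th, and 5th blocks. The extra nonlinearity helps performance.
- D: 16 weight layers (VGG16); the 1×1 convolutions of C in blocks 3, 4, and 5 become 3×3 convolutions.
- E: 19 weight layers (VGG19); adds one more 3×3 convolution to each of blocks 3, 4, and 5.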
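These configurations can be written compactly as lists, in the spirit of how torchvision builds its VGG models. In the sketch below, numbers are 3×3 convolution widths and `"M"` marks a 2×2 max-pooling layer. The names `CFGS`, `make_features` and `count_weight_layers` are hypothetical. A-LRN and C, which mixes in 1×1 convolutions, are left out for brevity.

```python
from torch import nn

CFGS = {
    "A": [64, "M", 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"],
    "B": [64, 64, "M", 128, 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"],
    "D": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
          512, 512, 512, "M", 512, 512, 512, "M"],
    "E": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
          512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}


def make_features(cfg, in_channels=3):
    """Build the convolutional part of a VGG configuration."""
    layers = []
    for v in cfg:
        if v == "M":
            layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
        else:
            layers += [nn.Conv2d(in_channels, v, kernel_size=3, padding=1), nn.ReLU(inplace=True)]
            in_channels = v
    return nn.Sequential(*layers)


def count_weight_layers(cfg):
    n_conv = sum(isinstance(m, nn.Conv2d) for m in make_features(cfg))
    return n_conv + 3  # plus the three fully connected layers


for name, cfg in CFGS.items():
    print(name, count_weight_layers(cfg))
# A 11
# B 13
# D 16
# E 19
```

Writing the architecture as data makes the depth experiments in the paper a matter of editing one list.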

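The paper uses small convolution kernels (3×3, stride 1):

- Two stacked 3×3 convolutions have the same receptive field as one 5×5 convolution. A single 5×5 kernel costs 25/9 ≈ 2.78 times as much as a single 3×3 kernel.
- Three stacked 3×3 convolutions have the same receptive field as one 7×7 convolution. A 7×7 layer has 81% more parameters than three 3×3 layers.

(1) Compared with one large kernel, three 3×3 layers incorporate three nonlinear activations instead of one, which increases nonlinearity and the ability to extract abstract features.

(2) They reduce the number of parameters.

(3) 1×1 convolutions can also be used: they add nonlinearity without changing the receptive field.

Together, these points show both the benefit of small kernels and how 1×1 convolutions can increase nonlinearity.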
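Point (3) rests on the fact that a 1×1 convolution is simply a linear map applied independently to the channel vector at each pixel. A quick PyTorch check of this equivalence (the tensor sizes are arbitrary, illustrative choices):

```python
import torch
from torch import nn

torch.manual_seed(0)
x = torch.randn(2, 16, 8, 8)                 # [N, C_in, H, W]
conv1x1 = nn.Conv2d(16, 32, kernel_size=1)   # C_in = 16 -> C_out = 32

# The same computation as a matrix multiply over the channel dimension at every pixel
W = conv1x1.weight.view(32, 16)              # [C_out, C_in]
b = conv1x1.bias                             # [C_out]
manual = torch.einsum("oc,nchw->nohw", W, x) + b.view(1, -1, 1, 1)
print(torch.allclose(conv1x1(x), manual, atol=1e-5))  # True

# Followed by a ReLU, the layer adds nonlinearity; each output pixel still depends on one input pixel
y = torch.relu(conv1x1(x))
print(y.shape)  # torch.Size([2, 32, 8, 8])
```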

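VGG's innovations are:

(1) Stacking small convolution kernels to deepen the network.

(2) Scale jittering during training.

(3) At test time, multi-scale evaluation combined with multi-crop and dense evaluation.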

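VGG has been reported to outperform GoogLeNet on several transfer-learning tasks.

Its main drawback is its size: about 140M parameters, which take up a lot of storage.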
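The roughly 140M figure can be reproduced by counting weights and biases layer by layer for VGG16 (configuration D), with a 224×224 input and 1000 classes. The plain-Python sketch below applies k·k·C_in·C_out + C_out for each convolution and in·out + out for each fully connected layer.

```python
# VGG16 (configuration D): output channels of the 13 3x3 conv layers, in order
CONV_CHANNELS = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
FC_SIZES = [512 * 7 * 7, 4096, 4096, 1000]  # flattened features -> 4096 -> 4096 -> 1000


def conv_params(c_in, c_out, k=3):
    return k * k * c_in * c_out + c_out      # weights + biases


def fc_params(n_in, n_out):
    return n_in * n_out + n_out              # weights + biases


conv_total, c_in = 0, 3
for c_out in CONV_CHANNELS:
    conv_total += conv_params(c_in, c_out)
    c_in = c_out

fc_total = sum(fc_params(a, b) for a, b in zip(FC_SIZES, FC_SIZES[1:]))
total = conv_total + fc_total
print(f"conv: {conv_total:,}  fc: {fc_total:,}  total: {total:,}")
# conv: 14,714,688  fc: 123,642,856  total: 138,357,544
print(f"float32 storage: {total * 4 / 2**20:.0f} MiB")  # about 528 MiB
```

The count also shows where the weight goes: about 89% of the parameters sit in the three fully connected layers, and about 74% in the first one alone.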

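Earlier networks used large kernels such as 7×7 and 11×11. Does switching entirely to 3×3 have any downside?

A stack of several small-filter convolutional layers is better than a single large-filter layer:

First, a structure that alternates several convolutional layers with nonlinear activation layers extracts deeper and better features than a single convolutional layer. Second, if the input and output both have $C$ channels, a single 7×7 convolutional layer has $7 \times 7 \times C \times C = 49C^2$ parameters, whereas three 3×3 layers have $3 \times (3 \times 3 \times C \times C) = 27C^2$.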
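Written out for layers with $C$ input and $C$ output channels (biases ignored), the parameter counts and receptive fields compare as follows. Here $r_n$ is the receptive field of $n$ stacked 3×3 convolutions with stride 1:

$$
\begin{aligned}
\text{one } 5\times5 &: 5^2C^2 = 25C^2, &\quad \text{two } 3\times3 &: 2\cdot 3^2C^2 = 18C^2, &\quad \tfrac{25}{18} &\approx 1.39\\
\text{one } 7\times7 &: 7^2C^2 = 49C^2, &\quad \text{three } 3\times3 &: 3\cdot 3^2C^2 = 27C^2, &\quad \tfrac{49}{27} &\approx 1.81
\end{aligned}
$$

$$
r_n = 1 + n\,(3 - 1) = 2n + 1, \qquad r_2 = 5, \quad r_3 = 7
$$

The 2.78 figure quoted earlier is the per-kernel ratio $25/9$. The 81% figure is $49/27 - 1 \approx 0.81$.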

Overall, this makes VGG somewhat better than AlexNet.

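VGG16 on CIFAR-10 with TensorFlow 2 (a CIFAR-sized variant with batch normalization, Dropout, and 512-unit fully connected layers)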

```python
import os

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense

np.set_printoptions(threshold=np.inf)  # print full arrays when dumping the weights below

cifar10 = tf.keras.datasets.cifar10
(x, y), (x_test, y_test) = cifar10.load_data()
x, x_test = x / 255., x_test / 255.  # scale pixel values to [0, 1]


class VGG16(Model):
    def __init__(self):
        super(VGG16, self).__init__()
        # Block 1: 2 x conv(64)
        self.c1 = Conv2D(filters=64, kernel_size=(3, 3), padding='same')
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')
        self.c2 = Conv2D(filters=64, kernel_size=(3, 3), padding='same')
        self.b2 = BatchNormalization()
        self.a2 = Activation('relu')
        self.p1 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d1 = Dropout(0.2)

        # Block 2: 2 x conv(128)
        self.c3 = Conv2D(filters=128, kernel_size=(3, 3), padding='same')
        self.b3 = BatchNormalization()
        self.a3 = Activation('relu')
        self.c4 = Conv2D(filters=128, kernel_size=(3, 3), padding='same')
        self.b4 = BatchNormalization()  # BN layer
        self.a4 = Activation('relu')  # activation layer
        self.p2 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d2 = Dropout(0.2)

        # Block 3: 3 x conv(256)
        self.c5 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')
        self.b5 = BatchNormalization()
        self.a5 = Activation('relu')
        self.c6 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')
        self.b6 = BatchNormalization()
        self.a6 = Activation('relu')
        self.c7 = Conv2D(filters=256, kernel_size=(3, 3), padding='same')
        self.b7 = BatchNormalization()
        self.a7 = Activation('relu')
        self.p3 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d3 = Dropout(0.2)

        # Block 4: 3 x conv(512)
        self.c8 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b8 = BatchNormalization()
        self.a8 = Activation('relu')
        self.c9 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b9 = BatchNormalization()
        self.a9 = Activation('relu')
        self.c10 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b10 = BatchNormalization()
        self.a10 = Activation('relu')
        self.p4 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d4 = Dropout(0.2)

        # Block 5: 3 x conv(512)
        self.c11 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b11 = BatchNormalization()
        self.a11 = Activation('relu')
        self.c12 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b12 = BatchNormalization()
        self.a12 = Activation('relu')
        self.c13 = Conv2D(filters=512, kernel_size=(3, 3), padding='same')
        self.b13 = BatchNormalization()
        self.a13 = Activation('relu')
        self.p5 = MaxPool2D(pool_size=(2, 2), strides=2, padding='same')
        self.d5 = Dropout(0.2)

        # Classifier: 3 fully connected layers
        self.flatten = Flatten()
        self.f1 = Dense(512, activation='relu')
        self.d6 = Dropout(0.2)
        self.f2 = Dense(512, activation='relu')
        self.d7 = Dropout(0.2)
        self.f3 = Dense(10, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.b1(x)
        x = self.a1(x)
        x = self.c2(x)
        x = self.b2(x)
        x = self.a2(x)
        x = self.p1(x)
        x = self.d1(x)

        x = self.c3(x)
        x = self.b3(x)
        x = self.a3(x)
        x = self.c4(x)
        x = self.b4(x)
        x = self.a4(x)
        x = self.p2(x)
        x = self.d2(x)

        x = self.c5(x)
        x = self.b5(x)
        x = self.a5(x)
        x = self.c6(x)
        x = self.b6(x)
        x = self.a6(x)
        x = self.c7(x)
        x = self.b7(x)
        x = self.a7(x)
        x = self.p3(x)
        x = self.d3(x)

        x = self.c8(x)
        x = self.b8(x)
        x = self.a8(x)
        x = self.c9(x)
        x = self.b9(x)
        x = self.a9(x)
        x = self.c10(x)
        x = self.b10(x)
        x = self.a10(x)
        x = self.p4(x)
        x = self.d4(x)

        x = self.c11(x)
        x = self.b11(x)
        x = self.a11(x)
        x = self.c12(x)
        x = self.b12(x)
        x = self.a12(x)
        x = self.c13(x)
        x = self.b13(x)
        x = self.a13(x)
        x = self.p5(x)
        x = self.d5(x)

        x = self.flatten(x)
        x = self.f1(x)
        x = self.d6(x)
        x = self.f2(x)
        x = self.d7(x)
        y = self.f3(x)
        return y


model = VGG16()

model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['sparse_categorical_accuracy'])

# Checkpoint format for tf.keras with Keras 2 (TF < 2.16); Keras 3 requires a filename ending in '.weights.h5'
checkpoint_save_path = './checkpoint/VGG16.ckpt'
if os.path.exists(checkpoint_save_path + '.index'):
    print("------------load the model -----------")
    model.load_weights(checkpoint_save_path)

cp_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_save_path,
                                                 save_weights_only=True,
                                                 save_best_only=True)

history = model.fit(x, y, batch_size=32, epochs=5, validation_data=(x_test, y_test),
                    validation_freq=1, callbacks=[cp_callback])

# Dump every trainable variable (name, shape, values) to a text file
with open('./VGG16weights.txt', 'w') as file:
    for v in model.trainable_variables:
        file.write(str(v.name) + '\n')
        file.write(str(v.shape) + '\n')
        file.write(str(v.numpy()) + '\n')

# ########################### show ###########################
# Plot the training and validation accuracy/loss curves
acc = history.history['sparse_categorical_accuracy']
val_acc = history.history['val_sparse_categorical_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']

plt.subplot(1, 2, 1)
plt.plot(acc, label='training acc')
plt.plot(val_acc, label='validation acc')
plt.title('train and validation acc')
plt.legend()

plt.subplot(1, 2, 2)
plt.plot(loss, label='training loss')
plt.plot(val_loss, label='validation loss')
plt.title('train and validation loss')
plt.legend()
plt.show()
```

PyTorch code: inference and training

```python
# -*- coding: utf-8 -*-
"""
# @file name : vgg_inference.py
# @author    : TingsongYu https://github.com/TingsongYu
# @date      : 2020-04-28
# @brief     : inference demo
"""
import os
os.environ['NLS_LANG'] = 'SIMPLIFIED CHINESE_CHINA.UTF8'
import time
import json
import torch.nn as nn
import torch
import torchvision.transforms as transforms
from PIL import Image
from matplotlib import pyplot as plt
import torchvision.models as models
from tools.common_tools import get_vgg16

BASE_DIR = os.path.dirname(os.path.abspath(__file__))
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def img_transform(img_rgb, transform=None):
    """
    Convert an image into the form the model expects.
    :param img_rgb: PIL Image
    :param transform: torchvision.transform
    :return: tensor
    """
    if transform is None:
        raise ValueError("No transform found! A transform is required to process img")
    img_t = transform(img_rgb)
    return img_t


def process_img(path_img):
    # hard code: ImageNet mean and std
    norm_mean = [0.485, 0.456, 0.406]
    norm_std = [0.229, 0.224, 0.225]
    inference_transform = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize(norm_mean, norm_std),
    ])

    # path --> img
    img_rgb = Image.open(path_img).convert('RGB')

    # img --> tensor
    img_tensor = img_transform(img_rgb, inference_transform)
    img_tensor.unsqueeze_(0)  # chw --> bchw
    img_tensor = img_tensor.to(device)

    return img_tensor, img_rgb


def load_class_names(p_clsnames, p_clsnames_cn):
    """
    Load the class names.
    :param p_clsnames: path to the JSON file of English class names
    :param p_clsnames_cn: path to the text file of Chinese class names, one per line
    :return: (class_names, class_names_cn)
    """
    with open(p_clsnames, "r") as f:
        class_names = json.load(f)
    with open(p_clsnames_cn, encoding='UTF-8') as f:
        class_names_cn = f.readlines()
    return class_names, class_names_cn


if __name__ == "__main__":

    # config
    path_state_dict = os.path.join(BASE_DIR, "..", "data", "vgg16-397923af.pth")
    # path_img = os.path.join(BASE_DIR, "..", "..", "Data", "Golden Retriever from baidu.jpg")
    path_img = os.path.join(BASE_DIR, "..", "..", "Data", "tiger cat.jpg")
    path_classnames = os.path.join(BASE_DIR, "..", "..", "Data", "imagenet1000.json")
    path_classnames_cn = os.path.join(BASE_DIR, "..", "..", "Data", "imagenet_classnames.txt")

    # load class names
    cls_n, cls_n_cn = load_class_names(path_classnames, path_classnames_cn)

    # 1/5 load img
    img_tensor, img_rgb = process_img(path_img)

    # 2/5 load model
    vgg_model = get_vgg16(path_state_dict, device, True)

    # 3/5 inference tensor --> vector
    with torch.no_grad():
        time_tic = time.time()
        outputs = vgg_model(img_tensor)
        time_toc = time.time()

    # 4/5 index to class names
    _, pred_int = torch.max(outputs.data, 1)
    _, top5_idx = torch.topk(outputs.data, 5, dim=1)

    pred_idx = pred_int.item()  # single image in the batch -> Python int
    pred_str, pred_cn = cls_n[pred_idx], cls_n_cn[pred_idx]
    print("img: {} is: {}\n{}".format(os.path.basename(path_img), pred_str, pred_cn))
    print("time consuming:{:.2f}s".format(time_toc - time_tic))

    # 5/5 visualization
    plt.imshow(img_rgb)
    plt.title("predict:{}".format(pred_str))
    top5_num = top5_idx.cpu().numpy().squeeze()
    text_str = [cls_n[t] for t in top5_num]
    for idx in range(len(top5_num)):
        plt.text(5, 15 + idx * 30, "top {}:{}".format(idx + 1, text_str[idx]), bbox=dict(fc='yellow'))
    plt.show()
```

```python
# -*- coding: utf-8 -*-
"""
# @file name : train_vgg.py
# @author    : TingsongYu https://github.com/TingsongYu
# @date      : 2020-04-28
# @brief     : vgg training
"""
import os
import numpy as np
import torch.nn as nn
import torch
from torch.utils.data import DataLoader
import torchvision.transforms as transforms
import torch.optim as optim
from matplotlib import pyplot as plt
import torchvision.models as models
from tools.my_dataset import CatDogDataset
from tools.common_tools import get_vgg16
from datetime import datetime

BASE_DIR = os.path.dirname(os.path.abspath(__file__))
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# log dir
now_time = datetime.now()
time_str = datetime.strftime(now_time, '%m-%d_%H-%M')
log_dir = os.path.join(BASE_DIR, "..", "results", time_str)
if not os.path.exists(log_dir):
    os.makedirs(log_dir)

if __name__ == "__main__":

    # config
    data_dir = os.path.join(BASE_DIR, "../data/train")
    print(data_dir)
    path_state_dict = os.path.join(BASE_DIR, "../data/vgg16-397923af.pth")
    print(path_state_dict)

    num_classes = 2

    MAX_EPOCH = 3
    BATCH_SIZE = 8  # 16 / 8 / 4 / 2 / 1
    LR = 0.001
    log_interval = 2
    val_interval = 1
    classes = 2
    start_epoch = -1
    lr_decay_step = 1

    # ============================ step 1/5 data ============================
    norm_mean = [0.485, 0.456, 0.406]
    norm_std = [0.229, 0.224, 0.225]

    train_transform = transforms.Compose([
        transforms.Resize((256)),  # shorter side -> 256, aspect ratio kept (unlike Resize((256, 256)))
        transforms.CenterCrop(256),
        transforms.RandomCrop(224),
        transforms.RandomHorizontalFlip(p=0.5),
        transforms.ToTensor(),
        transforms.Normalize(norm_mean, norm_std),
    ])

    normalizes = transforms.Normalize(norm_mean, norm_std)
    valid_transform = transforms.Compose([
        transforms.Resize((256, 256)),
        transforms.TenCrop(224, vertical_flip=False),  # 4 corner crops + center crop, plus their horizontal flips
        transforms.Lambda(lambda crops: torch.stack([normalizes(transforms.ToTensor()(crop)) for crop in crops])),
    ])

    # Build the dataset instances
    train_data = CatDogDataset(data_dir=data_dir, mode="train", transform=train_transform)
    valid_data = CatDogDataset(data_dir=data_dir, mode="valid", transform=valid_transform)

    # Build the DataLoaders
    train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
    valid_loader = DataLoader(dataset=valid_data, batch_size=4)

    # ============================ step 2/5 model ============================
    vgg16_model = get_vgg16(path_state_dict, device, False)

    # Replace the last fully connected layer: 1000 ImageNet classes -> num_classes
    num_ftrs = vgg16_model.classifier[6].in_features
    vgg16_model.classifier[6] = nn.Linear(num_ftrs, num_classes)

    vgg16_model.to(device)

    # ============================ step 3/5 loss function ============================
    criterion = nn.CrossEntropyLoss()

    # ============================ step 4/5 optimizer ============================
    # Optionally train the conv layers with a 10x smaller learning rate (set it to 0 to freeze them)
    flag = 0
    # flag = 1
    if flag:
        fc_params_id = list(map(id, vgg16_model.classifier.parameters()))  # ids (memory addresses) of the classifier parameters
        base_params = filter(lambda p: id(p) not in fc_params_id, vgg16_model.parameters())
        optimizer = optim.SGD([
            {'params': base_params, 'lr': LR * 0.1},  # 0 to freeze
            {'params': vgg16_model.classifier.parameters(), 'lr': LR}], momentum=0.9)
    else:
        optimizer = optim.SGD(vgg16_model.parameters(), lr=LR, momentum=0.9)  # choose the optimizer

    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=lr_decay_step, gamma=0.1)  # learning-rate decay schedule
    # scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, patience=5)

    # ============================ step 5/5 training ============================
    train_curve = list()
    valid_curve = list()

    for epoch in range(start_epoch + 1, MAX_EPOCH):

        loss_mean = 0.
        correct = 0.
        total = 0.

        vgg16_model.train()
        for i, data in enumerate(train_loader):

            # forward
            inputs, labels = data
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = vgg16_model(inputs)

            # backward
            optimizer.zero_grad()
            loss = criterion(outputs, labels)
            loss.backward()

            # update weights
            optimizer.step()

            # classification statistics
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).squeeze().cpu().sum().numpy()

            # print training info
            loss_mean += loss.item()
            train_curve.append(loss.item())
            if (i + 1) % log_interval == 0:
                loss_mean = loss_mean / log_interval
                print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%} lr:{}".format(
                    epoch, MAX_EPOCH, i + 1, len(train_loader), loss_mean, correct / total, scheduler.get_last_lr()))
                loss_mean = 0.

        scheduler.step()  # update the learning rate

        # validate the model
        if (epoch + 1) % val_interval == 0:

            correct_val = 0.
            total_val = 0.
            loss_val = 0.
            vgg16_model.eval()
            with torch.no_grad():
                for j, data in enumerate(valid_loader):
                    inputs, labels = data
                    inputs, labels = inputs.to(device), labels.to(device)

                    # inputs: [bs, ncrops, c, h, w], e.g. [4, 10, 3, 224, 224]
                    bs, ncrops, c, h, w = inputs.size()
                    # fold the crops into the batch dimension: [bs*ncrops, c, h, w]
                    outputs = vgg16_model(inputs.view(-1, c, h, w))
                    # [bs*ncrops, num_classes] -> [bs, ncrops, num_classes] -> average over the crops
                    outputs_avg = outputs.view(bs, ncrops, -1).mean(1)

                    loss = criterion(outputs_avg, labels)

                    _, predicted = torch.max(outputs_avg.data, 1)
                    total_val += labels.size(0)
                    correct_val += (predicted == labels).squeeze().cpu().sum().numpy()

                    loss_val += loss.item()

                loss_val_mean = loss_val / len(valid_loader)
                valid_curve.append(loss_val_mean)
                print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                    epoch, MAX_EPOCH, j + 1, len(valid_loader), loss_val_mean, correct_val / total_val))
            vgg16_model.train()

    train_x = range(len(train_curve))
    train_y = train_curve

    train_iters = len(train_loader)
    # valid_curve holds one loss per epoch, so convert its x positions to iteration counts
    valid_x = np.arange(1, len(valid_curve) + 1) * train_iters * val_interval
    valid_y = valid_curve

    plt.plot(train_x, train_y, label='Train')
    plt.plot(valid_x, valid_y, label='Valid')

    plt.legend(loc='upper right')
    plt.ylabel('loss value')
    plt.xlabel('Iteration')
    plt.savefig(os.path.join(log_dir, "loss_curve.png"))
    # plt.show()
```
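The training script also imports `CatDogDataset` from `tools/my_dataset.py`, which is not shown. Below is a hypothetical sketch that matches how the script calls it, and every detail of it is an assumption. It assumes the Kaggle Dogs vs. Cats naming scheme (`cat.0.jpg`, `dog.0.jpg`, ...) inside `data_dir` and labels of 0 for cat and 1 for dog. It also assumes a seeded 90/10 shuffle-and-split into train and valid subsets; the seed value is arbitrary.

```python
import os
import random

from PIL import Image
from torch.utils.data import Dataset


class CatDogDataset(Dataset):
    """Hypothetical stand-in for tools.my_dataset.CatDogDataset."""

    def __init__(self, data_dir, mode="train", split_ratio=0.9, rng_seed=620, transform=None):
        self.data_dir = data_dir
        self.mode = mode
        self.split_ratio = split_ratio
        self.rng_seed = rng_seed  # arbitrary seed; train and valid must use the same one
        self.transform = transform
        self.data_info = self._get_img_info()  # list of (image path, label)

    def __getitem__(self, index):
        path_img, label = self.data_info[index]
        img = Image.open(path_img).convert("RGB")
        if self.transform is not None:
            img = self.transform(img)
        return img, label

    def __len__(self):
        return len(self.data_info)

    def _get_img_info(self):
        names = sorted(n for n in os.listdir(self.data_dir) if n.endswith(".jpg"))
        random.Random(self.rng_seed).shuffle(names)  # same shuffle for both modes
        split = int(len(names) * self.split_ratio)
        names = names[:split] if self.mode == "train" else names[split:]
        # label 0 for "cat.*.jpg", 1 for "dog.*.jpg"
        return [(os.path.join(self.data_dir, n), 0 if n.startswith("cat") else 1) for n in names]
```

With the script's `valid_transform`, each item comes back as a stacked [10, 3, 224, 224] tensor. This is what produces the five-dimensional batches handled in the validation loop.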