NanoDet built-in data augmentation

1. My own data augmentation

```python
# Written against an older albumentations release: the IAA* (imgaug-based)
# transforms and RandomBrightness were deprecated in later versions.
from albumentations import (
    BboxParams, Blur, Compose, GaussianBlur, GaussNoise, HorizontalFlip,
    HueSaturationValue, IAAAdditiveGaussianNoise, IAAEmboss, IAASharpen,
    MotionBlur, OneOf, RandomBrightness, RandomBrightnessContrast,
    RandomScale, RandomSizedBBoxSafeCrop, Rotate, ShiftScaleRotate,
    ToSepia, VerticalFlip,
)


# 0.5
def strong_aug(p=0.9):
    """Photometric augmentation only: pixel values change, object positions do not."""
    return Compose([
        OneOf([
            IAAAdditiveGaussianNoise(),  # add Gaussian noise to the input image
            GaussNoise(),                # apply Gaussian noise to the input image
        ], p=0.2),
        OneOf([
            MotionBlur(p=0.25),          # motion blur with a kernel of random size
            GaussianBlur(p=0.5),
            Blur(blur_limit=3, p=0.25),  # blur with a kernel of random size
        ], p=0.2),
        HueSaturationValue(p=0.2),
        OneOf([
            RandomBrightness(),
            IAASharpen(),
            IAAEmboss(),
            RandomBrightnessContrast(),
        ], p=0.6),
        ToSepia(p=0.1),                  # sepia filter
    ], p=p)


# 0.7
def aug_with_bbox(p=0.7):
    """Geometric augmentation: objects move, so the boxes are transformed too."""
    return Compose(
        [
            HorizontalFlip(p=0.6),       # horizontal flip
            VerticalFlip(p=0.6),         # vertical flip
            RandomScale(scale_limit=0.1, interpolation=1, always_apply=False, p=0.5),  # 1 = cv2.INTER_LINEAR
            RandomSizedBBoxSafeCrop(width=320, height=320, erosion_rate=0.2, p=0.5),
            Rotate(limit=90, p=0.5),
            # random affine transform (shift + scale + rotate)
            ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.50, rotate_limit=45, p=0.75),
        ],
        p=p,
        bbox_params=BboxParams(format='pascal_voc', min_area=1024, min_visibility=0.1,
                               label_fields=['class_labels']),
    )


def aug_all(p=0.7):
    """strong_aug and aug_with_bbox merged into one pipeline."""
    return Compose(
        [
            OneOf([
                IAAAdditiveGaussianNoise(),  # add Gaussian noise to the input image
                GaussNoise(),                # apply Gaussian noise to the input image
            ], p=0.2),
            OneOf([
                MotionBlur(p=0.25),          # motion blur with a kernel of random size
                GaussianBlur(p=0.5),
                Blur(blur_limit=3, p=0.25),  # blur with a kernel of random size
            ], p=0.2),
            HueSaturationValue(p=0.2),
            OneOf([
                RandomBrightness(),
                IAASharpen(),
                IAAEmboss(),
                RandomBrightnessContrast(),
            ], p=0.6),
            ToSepia(p=0.1),                  # sepia filter
            HorizontalFlip(p=0.6),           # horizontal flip
            VerticalFlip(p=0.6),             # vertical flip
            RandomScale(scale_limit=0.1, interpolation=1, always_apply=False, p=0.5),
            RandomSizedBBoxSafeCrop(width=320, height=320, erosion_rate=0.2, p=0.5),
            Rotate(limit=90, p=0.5),
            # random affine transform (shift + scale + rotate)
            ShiftScaleRotate(shift_limit=0.0625, scale_limit=0.50, rotate_limit=90, p=0.5),
        ],
        p=p,
        bbox_params=BboxParams(format='pascal_voc', min_area=1025, min_visibility=0.1,
                               label_fields=['class_labels']),
    )
```

The third function is a merge of the first two. The first applies photometric augmentations such as Gaussian noise, blur and color changes. These alter pixel values but leave every object where it was. The second applies geometric augmentations such as flips, crops and rotations, which move objects. It therefore also declares bbox_params, so that the pascal_voc boxes ([x_min, y_min, x_max, y_max] in pixels) are transformed together with the image.

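```python
import numpy as np

# Build the pipeline once, e.g. in the dataset's __init__ (assumed location).
self.transform = aug_all()  # or aug_with_bbox(); both declare bbox_params

# Per training sample; meta is the sample dict holding the image, boxes and labels.
transformed = self.transform(image=meta["img"], bboxes=meta["gt_bboxes"], class_labels=meta["gt_labels"])
meta["img"] = transformed["image"]
# reshape(-1, 4) keeps an (N, 4) shape even when every box was filtered out
meta["gt_bboxes"] = np.array(transformed["bboxes"], dtype=np.float32).reshape(-1, 4)
meta["gt_labels"] = np.array(transformed["class_labels"], dtype=np.int64)
```

This is how the functions are used.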
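The BboxParams(min_area=..., min_visibility=...) used by aug_with_bbox and aug_all can remove boxes after a geometric transform. Below is a plain-Python sketch of that keep/drop rule as I understand it from the albumentations documentation. The helper filter_box is hypothetical and is not albumentations' own code. In this sketch a box is clipped to the image and kept only if enough of it is still visible.

```python
def filter_box(box, width, height, min_area=1024, min_visibility=0.1):
    """Hypothetical helper: simplified keep/drop rule for one pascal_voc box.

    The box is clipped to the image; it is kept only if the clipped area is at
    least min_area pixels and at least min_visibility of the unclipped area.
    Returns the clipped box, or None if the box is dropped.
    """
    x1, y1, x2, y2 = box
    full_area = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    cx1, cy1 = max(0.0, x1), max(0.0, y1)
    cx2, cy2 = min(float(width), x2), min(float(height), y2)
    clipped_area = max(0.0, cx2 - cx1) * max(0.0, cy2 - cy1)
    if full_area == 0 or clipped_area < min_area:
        return None  # too small (in pixels) after clipping
    if clipped_area / full_area < min_visibility:
        return None  # most of the box lies outside the image
    return cx1, cy1, cx2, cy2


# Boxes as they might look after a geometric transform on a 320x320 image
boxes = [(10, 10, 60, 60), (300, 300, 420, 420), (0, 0, 20, 20)]
kept = [b for b in (filter_box(b, 320, 320) for b in boxes) if b is not None]
print(kept)
```

With these defaults, two boxes are dropped: one is mostly pushed out of the frame, and the other is a small 20x20 box. If that happens to every box in an image, the returned box list is empty.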

2. NanoDet's data augmentation

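The augmentation settings in the pipeline section of the NanoDet config file:

```yaml
pipeline:
  perspective: 0.0
  scale: [0.6, 1.4]
  stretch: [[0.8, 1.2], [0.8, 1.2]]
  rotation: 0
  shear: 0
  translate: 0.2
  flip: 0.5
  brightness: 0.2
  contrast: [0.6, 1.4]
  saturation: [0.5, 1.2]
  normalize: [[103.53, 116.28, 123.675], [57.375, 57.12, 58.395]]  # per-channel mean, std (BGR)
```

2.1 perspective (perspective transform)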

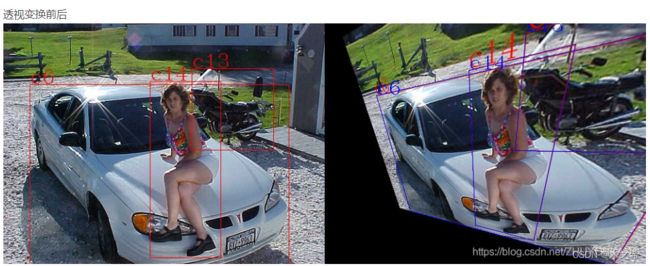

This figure is taken from a CSDN blog post by 之子无裳, "Thoughts prompted by YOLOv5 data augmentation: constructing the perspective transform matrix".

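As the figure shows, a perspective transform distorts the annotated box so that it is no longer an axis-aligned rectangle. Replacing the warped quadrilateral with its enclosing rectangle gives a box that is looser than the object, which is bad for training. This augmentation is therefore generally not used; just set the value to 0.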
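The sketch below shows why boxes stop being rectangles. It is plain Python, not NanoDet's code, and the matrix value is made up for illustration. It warps the four corners of an axis-aligned box with a 3x3 perspective matrix. Each point is divided by its own w = p*x + 1, so the corners move by different amounts and the box edges are no longer horizontal or vertical.

```python
def warp_point(m, x, y):
    """Apply a 3x3 homogeneous matrix m (nested lists) to the point (x, y)."""
    u = m[0][0] * x + m[0][1] * y + m[0][2]
    v = m[1][0] * x + m[1][1] * y + m[1][2]
    w = m[2][0] * x + m[2][1] * y + m[2][2]
    return u / w, v / w  # the division by w is what makes the warp projective


def warp_box(m, box):
    """Warp the corners of box = (x1, y1, x2, y2); return them and their enclosing rectangle."""
    x1, y1, x2, y2 = box
    corners = [warp_point(m, x, y) for x, y in ((x1, y1), (x2, y1), (x2, y2), (x1, y2))]
    xs = [c[0] for c in corners]
    ys = [c[1] for c in corners]
    return corners, (min(xs), min(ys), max(xs), max(ys))


# Hypothetical perspective matrix: identity plus a small term in the bottom row
p = 0.001
m = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [p, 0.0, 1.0]]
corners, rect = warp_box(m, (100, 100, 200, 200))
# The top edge is no longer horizontal: its two corners end up at different y.
print(corners[0], corners[1], rect)
```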

2.2 scale (scaling)
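scale: [0.6, 1.4] presumably zooms the image by a factor drawn uniformly between 0.6 and 1.4. This reading is an assumption, and NanoDet's own warp code is not reproduced here. The minimal sketch below shows what such a zoom does to a box when the image is scaled about its centre.

```python
import random


def sample_scale(low=0.6, high=1.4, rng=random):
    """Draw one zoom factor, assuming scale: [low, high] is a uniform range."""
    return rng.uniform(low, high)


def scale_box(box, s, cx, cy):
    """Scale box = (x1, y1, x2, y2) by s about the image centre (cx, cy)."""
    x1, y1, x2, y2 = box
    return (cx + s * (x1 - cx), cy + s * (y1 - cy),
            cx + s * (x2 - cx), cy + s * (y2 - cy))


s = sample_scale(rng=random.Random(0))
print(s, scale_box((100, 100, 200, 200), s, 160, 160))
```

Width and height are scaled by the same factor, so boxes stay rectangular and keep their aspect ratio.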

2.3 stretch (stretching)
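stretch: [[0.8, 1.2], [0.8, 1.2]] looks like two independent ranges, the first for x (width) and the second for y (height). That ordering is an assumption. Unlike scale, stretching changes a box's aspect ratio, as this hypothetical sketch shows.

```python
import random


def sample_stretch(x_range=(0.8, 1.2), y_range=(0.8, 1.2), rng=random):
    """Assume stretch: [[x_low, x_high], [y_low, y_high]]: independent x and y factors."""
    return rng.uniform(*x_range), rng.uniform(*y_range)


def stretch_box(box, sx, sy, cx, cy):
    """Scale x by sx and y by sy about (cx, cy); the aspect ratio changes."""
    x1, y1, x2, y2 = box
    return (cx + sx * (x1 - cx), cy + sy * (y1 - cy),
            cx + sx * (x2 - cx), cy + sy * (y2 - cy))


sx, sy = sample_stretch(rng=random.Random(1))
x1, y1, x2, y2 = stretch_box((100, 100, 200, 200), 1.2, 0.8, 160, 160)
print((x2 - x1) / (y2 - y1))  # a square box becomes wider than it is tall
```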

2.4 rotation
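rotation: 0 in the config above turns rotation off. A rotated box can only be stored as the enclosing rectangle of its rotated corners, which is larger than the object. The plain-Python sketch below is not NanoDet's code, and it assumes the value is an angle in degrees. It shows a 45° turn doubling the area of a square box.

```python
import math


def rotate_box(box, degrees, cx, cy):
    """Rotate the corners of box about (cx, cy); return their enclosing rectangle."""
    a = math.radians(degrees)
    cos_a, sin_a = math.cos(a), math.sin(a)
    x1, y1, x2, y2 = box
    xs, ys = [], []
    for x, y in ((x1, y1), (x2, y1), (x2, y2), (x1, y2)):
        dx, dy = x - cx, y - cy
        xs.append(cx + cos_a * dx - sin_a * dy)
        ys.append(cy + sin_a * dx + cos_a * dy)
    return min(xs), min(ys), max(xs), max(ys)


x1, y1, x2, y2 = rotate_box((100, 100, 200, 200), 45, 150, 150)
area_ratio = (x2 - x1) * (y2 - y1) / (100 * 100)
print(area_ratio)  # the enclosing box is about twice the original area
```

This looseness is one reason large rotation angles are usually avoided for box-annotated detection data.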

2.5 shear (shearing)
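shear: 0 disables shearing. Shear slants the image, turning a rectangle into a parallelogram. In the hypothetical sketch below, a nonzero value is treated as a shear angle in degrees; that is an assumption. As with rotation, the slanted box has to be replaced by its enclosing rectangle.

```python
import math


def shear_box(box, deg_x, deg_y, cx, cy):
    """Shear the corners of box about (cx, cy); return their enclosing rectangle.

    x' = x + tan(deg_x) * y and y' = y + tan(deg_y) * x, in coordinates centred on (cx, cy).
    """
    kx = math.tan(math.radians(deg_x))
    ky = math.tan(math.radians(deg_y))
    x1, y1, x2, y2 = box
    xs, ys = [], []
    for x, y in ((x1, y1), (x2, y1), (x2, y2), (x1, y2)):
        dx, dy = x - cx, y - cy
        xs.append(cx + dx + kx * dy)
        ys.append(cy + dy + ky * dx)
    return min(xs), min(ys), max(xs), max(ys)


# A 45 degree horizontal shear turns a 100x100 square into a parallelogram
# whose enclosing rectangle is 200 px wide and still 100 px tall.
x1, y1, x2, y2 = shear_box((100, 100, 200, 200), 45, 0, 150, 150)
print(x1, y1, x2, y2)
```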

2.6 translate (translation)
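translate: 0.2 plausibly means the image is shifted by up to ±20% of its width and height. That is an assumption; NanoDet's exact convention is not reproduced here. A shift can push part of a box, or all of it, out of the frame, so in this hypothetical sketch the shifted box is clipped to the image.

```python
import random


def sample_shift(width, height, translate=0.2, rng=random):
    """Assume translate is a fraction of the image size: shift by up to +/- translate * size."""
    return (rng.uniform(-translate, translate) * width,
            rng.uniform(-translate, translate) * height)


def shift_box(box, dx, dy, width, height):
    """Shift box = (x1, y1, x2, y2) by (dx, dy) and clip it to the image.

    Returns None when the box ends up completely outside the image.
    """
    x1, y1, x2, y2 = box
    x1, x2 = max(0.0, x1 + dx), min(float(width), x2 + dx)
    y1, y2 = max(0.0, y1 + dy), min(float(height), y2 + dy)
    if x2 <= x1 or y2 <= y1:
        return None
    return x1, y1, x2, y2


dx, dy = sample_shift(320, 320, rng=random.Random(0))
print(dx, dy, shift_box((100, 100, 200, 200), dx, dy, 320, 320))
```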

2.7 flip (flipping)
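flip: 0.5 reads as a 50% chance of a horizontal flip; that reading is assumed here. Flipping keeps boxes exactly rectangular. Only the x coordinates change, and x_min and x_max swap roles. A minimal sketch:

```python
import random


def hflip_box(box, width):
    """Mirror box = (x1, y1, x2, y2) left-right in an image of the given width."""
    x1, y1, x2, y2 = box
    return width - x2, y1, width - x1, y2  # old right edge becomes the new left edge


def maybe_hflip(box, width, prob=0.5, rng=random):
    """Flip with probability prob (assumed meaning of flip: 0.5)."""
    return hflip_box(box, width) if rng.random() < prob else box


print(hflip_box((10, 20, 50, 60), 320))  # (270, 20, 310, 60)
```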

2.8 brightness
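Brightness, contrast and saturation are photometric: they change pixel values, not boxes. In the sketch below, brightness: 0.2 is assumed to mean a random offset in [-0.2, 0.2] added to an image scaled to [0, 1]. The image is a tiny hypothetical list of RGB tuples. The result is clipped to [0, 1] here; an implementation that works on float images right before normalization may skip the clip.

```python
import random


def adjust_brightness(pixels, delta):
    """Add delta to every channel of every pixel (values in [0, 1]) and clip to [0, 1]."""
    return [tuple(min(1.0, max(0.0, c + delta)) for c in px) for px in pixels]


def random_brightness(pixels, max_delta=0.2, rng=random):
    """Assume brightness: 0.2 means an offset drawn uniformly from [-0.2, 0.2]."""
    return adjust_brightness(pixels, rng.uniform(-max_delta, max_delta))


img = [(0.5, 0.9, 0.1), (0.0, 0.2, 1.0)]  # a tiny two-pixel "image"
print(adjust_brightness(img, 0.2))
```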

2.9 contrast
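contrast: [0.6, 1.4] is assumed to give a factor alpha drawn from that range. The sketch below uses the textbook definition: values are pushed away from the mean level (alpha > 1) or pulled toward it (alpha < 1). A simpler variant that some pipelines use just multiplies every value by alpha. Which one NanoDet uses is not shown here.

```python
def adjust_contrast(pixels, alpha):
    """Stretch (alpha > 1) or squeeze (alpha < 1) values around their mean level."""
    values = [c for px in pixels for c in px]
    mean = sum(values) / len(values)
    return [tuple(mean + alpha * (c - mean) for c in px) for px in pixels]


img = [(0.2, 0.2, 0.2), (0.8, 0.8, 0.8)]  # a dark and a bright pixel, mean 0.5
low = adjust_contrast(img, 0.6)   # lower end of contrast: [0.6, 1.4]
high = adjust_contrast(img, 1.4)  # upper end
print(low, high)
```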

2.10 saturation
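saturation: [0.5, 1.2] is assumed to give a factor alpha that blends each pixel with its own gray level. The sketch assumes RGB channel order and the ITU-R BT.601 luma weights. Images loaded with OpenCV, as in NanoDet, are BGR, so the weights would need reversing there. Alpha below 1 fades colors toward gray; alpha above 1 intensifies them and can leave [0, 1].

```python
def adjust_saturation(pixels, alpha):
    """Blend each RGB pixel with its own gray level.

    alpha = 0 gives grayscale, alpha = 1 leaves the pixel unchanged,
    alpha > 1 pushes colors further away from gray.
    """
    out = []
    for r, g, b in pixels:
        gray = 0.299 * r + 0.587 * g + 0.114 * b  # ITU-R BT.601 luma weights
        out.append(tuple(alpha * c + (1.0 - alpha) * gray for c in (r, g, b)))
    return out


img = [(0.9, 0.2, 0.1), (0.5, 0.5, 0.5)]  # a reddish pixel and a gray pixel
print(adjust_saturation(img, 0.5))  # lower end of saturation: [0.5, 1.2]
```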