Implementing a Multilayer Perceptron with Backpropagation in Python Using NumPy

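A Few Words First

- Implementing a multilayer perceptron with backpropagation in Python and NumPy has been done countless times. A homework assignment required it, though, so I wrote my own version using a layered (modular) design. This post is just a record of it.

- The layer design implements only two activation functions: Sigmoid and Tanh.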
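The backward pass of the layer relies on two derivative identities, both written in terms of the layer output $Y$: $\sigma'(z)=\sigma(z)(1-\sigma(z))$ and $\tanh'(z)=1-\tanh^2(z)$. The snippet below is a quick sanity check and was not part of the original assignment. It compares both identities against a central finite difference. `sigmoid` and `numeric_derivative` are hypothetical helpers introduced only for this check.

```python
import numpy as np


def sigmoid(z):
    # Logistic function 1 / (1 + e^{-z})
    return 1.0 / (1.0 + np.exp(-z))


def numeric_derivative(f, z, h=1e-6):
    # Central finite-difference approximation of f'(z)
    return (f(z + h) - f(z - h)) / (2 * h)


z = np.linspace(-3.0, 3.0, 7)

# Derivatives expressed through the activation output Y, as in layer.backward
Y_sig = sigmoid(z)
d_sig = Y_sig * (1 - Y_sig)
Y_tanh = np.tanh(z)
d_tanh = 1 - Y_tanh * Y_tanh

print(np.allclose(d_sig, numeric_derivative(sigmoid, z), atol=1e-6))   # True
print(np.allclose(d_tanh, numeric_derivative(np.tanh, z), atol=1e-6))  # True
# np.tanh agrees with the explicit (e^z - e^-z) / (e^z + e^-z) form
print(np.allclose(Y_tanh, (np.exp(z) - np.exp(-z)) / (np.exp(z) + np.exp(-z))))  # True
```

Because the derivatives only need $Y$, the layer stores its output during a training forward pass instead of the pre-activation.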

The Model

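```python
import numpy as np
from matplotlib import pyplot as plt


class layer():
    def __init__(self, inputdim, outputdim, act, bias=True):
        self.bias = bias
        # With a bias, W gets one extra input row that holds the bias weights
        if bias:
            self.input = inputdim + 1
        else:
            self.input = inputdim
        self.output = outputdim
        self.W = np.random.randn(self.input, self.output)
        if act not in ['sigmoid', 'tanh']:
            raise ValueError("act must be 'sigmoid' or 'tanh'")
        self.act = act

    def __call__(self, x, train=False):
        return self.forward(x, train)

    def forward(self, x, train):
        # Accept an n x d batch, or a single d-dim sample (reshaped to 1 x d)
        if x.ndim == 1:
            x = x.reshape(1, -1)
        elif x.ndim != 2:
            raise ValueError('x must be a 1-D or 2-D array')
        if self.bias:
            # Append a column of ones so the last row of W acts as the bias b
            x = np.concatenate([x, np.ones((x.shape[0], 1))], axis=1)
        y = x @ self.W
        if self.act == 'sigmoid':
            Y = 1 / (1 + np.exp(-y))
        elif self.act == 'tanh':
            # Same as (e^y - e^-y) / (e^y + e^-y), but does not overflow
            Y = np.tanh(y)
        if train:
            # backward() needs these, so record the (augmented) input and the output
            self.Y = Y
            self.x = x
        return Y

    def backward(self, error_Y, eta):
        # error_Y is dloss/dY for this layer's output Y
        if self.act == 'sigmoid':
            error_y = self.Y * (1 - self.Y) * error_Y
        elif self.act == 'tanh':
            error_y = (1 - self.Y * self.Y) * error_Y
        # An earlier uploaded version of this code mistakenly wrote error_y as
        # error_Y here; thanks to "镇长1998" for pointing out the error.
        error_W = (self.x).T @ error_y
        # Compute the gradient w.r.t. the input before W is updated
        error_x = error_y @ (self.W.T)
        self.W = self.W - error_W * eta
        if self.bias:
            # Drop the gradient of the constant-1 bias column
            return error_x[:, :-1]
        else:
            return error_x
```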

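This implements a single layer $y=\sigma(Wx+b)$. In the code each sample is a row of `x`, so the product is computed as `x @ W`. With `bias=True`, the bias $b$ is stored as the last row of `W`. For example:

```python
L = layer(inputdim, outputdim, act='sigmoid', bias=True)
```

initializes a layer that uses the sigmoid activation and includes a bias term.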
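With `bias=True`, the layer does not keep a separate vector $b$. Instead, `forward` appends a column of ones to the input, and `W` has one extra row that plays the role of $b$. The minimal sketch below, using arbitrary random shapes, checks that this "folded" form gives the same result as computing $xW + b$ explicitly.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d_in, d_out = 4, 3, 5
x = rng.standard_normal((n, d_in))
W = rng.standard_normal((d_in + 1, d_out))  # last row plays the role of b

# Append a column of ones, as layer.forward does when bias=True
x_aug = np.concatenate([x, np.ones((n, 1))], axis=1)
y_folded = x_aug @ W

# Equivalent explicit form: x @ (weights without the bias row) + b
y_explicit = x @ W[:-1] + W[-1]

print(x_aug.shape)                         # (4, 4)
print(np.allclose(y_folded, y_explicit))   # True
```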

Forward pass

```python
y = L(x, train=True)  # or train=False for prediction
```

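where:

- `x` is an n×d `numpy.ndarray` holding n samples with d features each. A 1-D array is treated as a single sample.

- `train=True` is used during training. After computing its output, each layer records its input and output for use in backpropagation.

- `train=False` is used for prediction. Layers do not record their inputs or outputs.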
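To make the shape conventions and the role of `train` concrete, here is a standalone sketch of the forward logic for a sigmoid layer with a bias. `forward_sketch` and its `cache` dictionary are hypothetical stand-ins. They play the roles of `layer.forward` and of the `self.x`/`self.Y` attributes that `layer.forward` sets when `train=True`.

```python
import numpy as np


def forward_sketch(x, W, cache=None):
    # Hypothetical standalone version of layer.forward (sigmoid, bias=True)
    if x.ndim == 1:
        x = x.reshape(1, -1)  # a single sample becomes a 1 x d batch
    elif x.ndim != 2:
        raise ValueError("x must be a 1-D or 2-D array")
    # Append the constant-1 column that carries the bias
    x = np.concatenate([x, np.ones((x.shape[0], 1))], axis=1)
    Y = 1.0 / (1.0 + np.exp(-(x @ W)))
    if cache is not None:  # plays the role of train=True
        cache["x"] = x
        cache["Y"] = Y
    return Y


W = np.zeros((3 + 1, 2))  # d = 3 input features, 2 outputs, plus a bias row
batch = forward_sketch(np.ones((5, 3)), W)     # no cache: like train=False
single = forward_sketch(np.ones(3), W)
cache = {}
_ = forward_sketch(np.ones((5, 3)), W, cache)  # like train=True

print(batch.shape, single.shape)  # (5, 2) (1, 2)
print(sorted(cache))              # ['Y', 'x']
```

Note that the recorded input includes the appended bias column, which is why `backward` later drops the last column of the input gradient.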

Backward pass

```python
grad_output = L.backward(grad_input, eta)
```

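where:

- `eta` is the learning rate.

- `grad_input` is the partial derivative of the loss with respect to $y$ in $y=\sigma(Wx+b)$, i.e. $\frac{\partial loss}{\partial y}$. If $y$ is an n×m matrix, `grad_input` is also an n×m matrix whose $(i, j)$ element is $\frac{\partial loss}{\partial y_{ij}}$.

- `grad_output` is the partial derivative of the loss with respect to $x$ in $y=\sigma(Wx+b)$, i.e. $\frac{\partial loss}{\partial x}$. If $x$ is an n×d matrix, `grad_output` is also an n×d matrix whose $(i, j)$ element is $\frac{\partial loss}{\partial x_{ij}}$. The bias column is not included.

- `backward` can only be called after a forward pass with `train=True`, because it uses the recorded input and output. Calling a layer's `backward` also updates the layer's parameters with the learning rate: $W \leftarrow W - \eta\,\frac{\partial loss}{\partial W}$.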
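A finite-difference gradient check can confirm that `backward` returns $\partial loss/\partial x$ and uses the correct $\partial loss/\partial W$. The sketch below reimplements the tanh branch of `backward` on random data. It assumes a sum-of-squares loss against a random `target`. `add_bias`, `loss_of` and `numeric_grad` are hypothetical helpers, and no parameters are updated.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d_in, d_out = 4, 3, 2
x = rng.standard_normal((n, d_in))
W = rng.standard_normal((d_in + 1, d_out))   # last row holds the bias
target = rng.standard_normal((n, d_out))     # assumed regression target


def add_bias(a):
    # Append the constant-1 column, as layer.forward does when bias=True
    return np.concatenate([a, np.ones((a.shape[0], 1))], axis=1)


def loss_of():
    # Sum-of-squares loss of a tanh layer, read from the current x and W
    Y = np.tanh(add_bias(x) @ W)
    return np.sum((Y - target) ** 2)


def numeric_grad(f, A, h=1e-6):
    # Central differences, perturbing A in place one entry at a time
    G = np.zeros_like(A)
    for idx in np.ndindex(A.shape):
        old = A[idx]
        A[idx] = old + h
        f_plus = f()
        A[idx] = old - h
        f_minus = f()
        A[idx] = old
        G[idx] = (f_plus - f_minus) / (2 * h)
    return G


# Analytic gradients, following the tanh branch of layer.backward
x_aug = add_bias(x)
Y = np.tanh(x_aug @ W)
error_Y = 2 * (Y - target)          # grad_input: dloss/dY
error_y = (1 - Y * Y) * error_Y     # through the tanh derivative
error_W = x_aug.T @ error_y         # dloss/dW
error_x = (error_y @ W.T)[:, :-1]   # grad_output: dloss/dx, bias column dropped

num_W = numeric_grad(loss_of, W)
num_x = numeric_grad(loss_of, x)
print(np.allclose(error_W, num_W, atol=1e-5))  # True
print(np.allclose(error_x, num_x, atol=1e-5))  # True
```

If a formula in `backward` were wrong, the two gradients would no longer agree. One example is using `error_Y` instead of `error_y` when computing `error_W`.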

Usage example

(This is really just the homework.)

Defining the model structure

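```python
class Model():
    def __init__(self, d1, d2, d3):
        # Two layers: the first uses tanh, the second uses sigmoid
        # The loss function is the sum of squared errors
        self.L1 = layer(d1, d2, 'tanh', bias=True)
        self.L2 = layer(d2, d3, 'sigmoid', bias=True)
        self.lossf = lambda x, y: np.sum((x - y) * (x - y))

    def __call__(self, x, train):
        # Forward pass
        h = self.L1(x, train)
        Y = self.L2(h, train)
        return Y

    def loss(self, y, Y):
        # Compute the loss between y and Y
        return self.lossf(y, Y)

    def backward(self, error, eta):
        # Layer-by-layer backpropagation.
        # Takes the gradient of the final loss w.r.t. the model output.
        # Backpropagate through the last layer first; the gradient it returns
        # is passed to the first layer for its own backward computation.
        error = self.L2.backward(error, eta)
        _ = self.L1.backward(error, eta)
```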
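`Model.backward` is the chain rule applied layer by layer. The gradient returned by `L2.backward` is $\partial loss/\partial h$, and `L1.backward` uses it as its own incoming gradient. The sketch below writes out the same computation for a 3-5-3 tanh/sigmoid network with a bias in both layers. The inputs and targets are random values assumed for illustration. The sketch compares the backpropagated gradient of the first layer's weights with a numerical estimate. The helpers `add_bias` and `forward` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n, d1, d2, d3 = 6, 3, 5, 3
x = rng.standard_normal((n, d1))
t = rng.standard_normal((n, d3))           # assumed random targets
W1 = rng.standard_normal((d1 + 1, d2))     # tanh layer, bias in last row
W2 = rng.standard_normal((d2 + 1, d3))     # sigmoid layer, bias in last row


def add_bias(a):
    # Append the constant-1 column used for the bias term
    return np.concatenate([a, np.ones((a.shape[0], 1))], axis=1)


def forward(w1, w2):
    h = np.tanh(add_bias(x) @ w1)                     # layer 1: tanh
    out = 1.0 / (1.0 + np.exp(-(add_bias(h) @ w2)))   # layer 2: sigmoid
    return h, out


h, out = forward(W1, W2)

# Backward pass in the same order as Model.backward: L2 first, then L1
g_out = 2 * (out - t)               # dloss/dout for the sum of squared errors
g_z2 = out * (1 - out) * g_out      # through the sigmoid derivative
g_h = (g_z2 @ W2.T)[:, :-1]         # what L2.backward would return: dloss/dh
g_z1 = (1 - h * h) * g_h            # through the tanh derivative
g_W1 = add_bias(x).T @ g_z1         # gradient L1.backward uses to update W1

# Central-difference estimate of dloss/dW1 for comparison
eps = 1e-6
num_W1 = np.zeros_like(W1)
for idx in np.ndindex(W1.shape):
    W_plus, W_minus = W1.copy(), W1.copy()
    W_plus[idx] += eps
    W_minus[idx] -= eps
    loss_plus = np.sum((forward(W_plus, W2)[1] - t) ** 2)
    loss_minus = np.sum((forward(W_minus, W2)[1] - t) ** 2)
    num_W1[idx] = (loss_plus - loss_minus) / (2 * eps)

print(np.allclose(g_W1, num_W1, atol=1e-5))  # True
```

`g_h` uses `W2` before it is updated. This matches `layer.backward`, which computes `error_x` before modifying `self.W`.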

Input data and training

```python
# Feature values of the class 1 samples
x1=np.array([[1.58,2.32,-5.8],[0.67,1.58,-4.78],[1.04,1.01,-3.63],[-1.49,2.18,-3.39],[-0.41,1.21,-4.73],[1.39,3.16,2.87],[1.20,1.40,-1.89],[-0.92,1.44,-3.22],[0.45,1.33,-4.38],[-0.76,0.84,-1.96]])
# Feature values of the class 2 samples
x2=np.array([[0.21,0.03,-2.21],[0.37,0.28,-1.8],[0.18,1.22,0.16],[-0.24,0.93,-1.01],[-1.18,0.39,-0.39],[0.74,0.96,-1.16],[-0.38,1.94,-0.48],[0.02,0.72,-0.17],[0.44,1.31,-0.14],[0.46,1.49,0.68]])
# Feature values of the class 3 samples
x3=np.array([[-1.54,1.17,0.64],[5.41,3.45,-1.33],[1.55,0.99,2.69],[1.86,3.19,1.51],[1.68,1.79,-0.87],[3.51,-0.22,-1.39],[1.40,-0.44,-0.92],[0.44,0.83,1.97],[0.25,0.68,-0.99],[0.66,-0.45,0.08]])

# One-hot labels of the class 1 samples
y1 = np.zeros([10, 3])
y1[:, 0] = 1
# One-hot labels of the class 2 samples
y2 = np.zeros([10, 3])
y2[:, 1] = 1
# One-hot labels of the class 3 samples
y3 = np.zeros([10, 3])
y3[:, 2] = 1

# Stack everything into one dataset
x = np.concatenate([x1, x2, x3], axis=0)
y = np.concatenate([y1, y2, y3], axis=0)
```
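The labels above are one-hot vectors built by hand, one class at a time. As an aside, the same 30×3 matrix can be built by indexing into `np.eye`. This is an alternative construction, not what the original code does.

```python
import numpy as np

# Class index of each of the 30 samples, in the same order as x1, x2, x3
labels = np.repeat(np.arange(3), 10)   # ten 0s, then ten 1s, then ten 2s
y_onehot = np.eye(3)[labels]           # row i is the one-hot vector for labels[i]

# Equivalent to building y1, y2, y3 by hand and concatenating them
y_manual = np.zeros((30, 3))
for k in range(3):
    y_manual[10 * k:10 * (k + 1), k] = 1

print(y_onehot.shape)                      # (30, 3)
print(np.array_equal(y_onehot, y_manual))  # True
```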

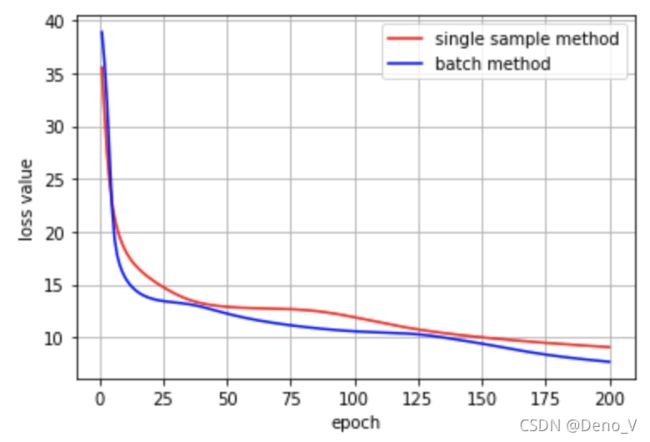

```python
# Full-batch training: every epoch uses all 30 samples
model = Model(3, 5, 3)
eta = 0.05
epoch = 200
for i in range(epoch):
    pred = model(x, train=True)
    lossval = model.loss(pred, y)
    # The loss is the sum of squared errors,
    # so the gradient of the loss w.r.t. the model output pred is 2*(pred-y)
    model.backward(2 * (pred - y), eta)
    if i % 35 == 0:
        acc = np.sum(np.argmax(pred, axis=1) == np.argmax(y, axis=1)) / y.shape[0]
        print('epoch: {:>4} | loss: {:>10.6f} | acc: {:.3f}'.format(i + 1, lossval, acc))
```
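The comment in the loop says that the gradient of the sum-of-squares loss with respect to `pred` is `2*(pred-y)`. This follows from $\frac{\partial}{\partial p_{ij}}\sum_{k,l}(p_{kl}-y_{kl})^2 = 2(p_{ij}-y_{ij})$. The sketch below checks this numerically on random stand-in predictions, not outputs of the trained model. It also shows that the accuracy expression in the loop equals the mean of a boolean array. `sse` and the targets are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
pred = rng.random((5, 3))          # stand-in for model outputs
y = np.eye(3)[[0, 1, 2, 1, 0]]     # hypothetical one-hot targets


def sse(p):
    # Sum of squared errors, same formula as Model.lossf
    return np.sum((p - y) * (p - y))


analytic = 2 * (pred - y)
numeric = np.zeros_like(pred)
h = 1e-6
for idx in np.ndindex(pred.shape):
    p_plus, p_minus = pred.copy(), pred.copy()
    p_plus[idx] += h
    p_minus[idx] -= h
    numeric[idx] = (sse(p_plus) - sse(p_minus)) / (2 * h)

# Accuracy as written in the training loop, and the same value as a mean
acc = np.sum(np.argmax(pred, axis=1) == np.argmax(y, axis=1)) / y.shape[0]
acc_mean = np.mean(np.argmax(pred, axis=1) == np.argmax(y, axis=1))

print(np.allclose(analytic, numeric, atol=1e-6))  # True
print(np.isclose(acc, acc_mean))                  # True
```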