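O2O Coupon Usage Prediction: Project Summary

Notes on O2O Coupon Usage Prediction

- Foreword

- Project overview

  - Data

  - Evaluation

  - Problem analysis

- Basic approach

  - Dataset splitting

  - Feature engineering

  - Model selection

- Process and code

  - Importing Python libraries

  - Loading and splitting the data

  - Feature engineering

  - Model training and tuning

  - Predicting the test set

- Summary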

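Foreword

This post summarizes my work on the Alibaba Tianchi O2O coupon usage prediction competition, as part of an ongoing effort to get better at applying data analysis and data mining skills to real problems.

I worked largely without borrowing from other competitors' solutions. This attempt scored 0.74, which ranked within the top 5%, and I believe further optimization could push the score higher.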

项目介绍

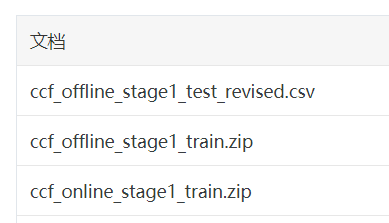

数据

赛题提供的数据为用户在2016年1月1日至2016年6月30日之间真实线上线下消费行为,用以预测用户在2016年7月领取优惠券后15天以内的使用情况,用户及商家隐私信息已做脱敏处理。

数据集主要分为三个部分,依次为用户线下领券及消费行为-测试集、用户线下领券及消费行为-训练集、用户线上领券及消费行为-训练集:

用户线下领券及消费行为-测试集的字段说明如下:

用户线下领券及消费行为-训练集的字段说明如下:

用户线上领券及消费行为-训练集的字段说明如下:

评价方式

赛题以使用优惠券核销预测的平均AUC(ROC曲线下面积)作为评价标准,即对每个优惠券coupon_id单独计算核销预测的AUC值,再对所有优惠券的AUC值求平均作为最终的评价标准。

可见该评分方式与 roc_auc_score 直接计算的AUC评分结果将存在一定的差异。
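To make the difference concrete, here is a minimal pure-Python sketch of a per-coupon average AUC. The function names `pairwise_auc` and `mean_coupon_auc` are hypothetical, and skipping coupons whose labels contain only one class is my assumption; the official scorer's handling of that case is not described here.

```python
from collections import defaultdict

def pairwise_auc(labels, scores):
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None  # AUC is undefined when only one class is present
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def mean_coupon_auc(coupon_ids, labels, scores):
    """Average of the per-coupon AUC values (assumption: undefined ones are skipped)."""
    groups = defaultdict(lambda: ([], []))
    for c, y, s in zip(coupon_ids, labels, scores):
        groups[c][0].append(y)
        groups[c][1].append(s)
    aucs = [pairwise_auc(ys, ss) for ys, ss in groups.values()]
    aucs = [a for a in aucs if a is not None]
    return sum(aucs) / len(aucs) if aucs else None

# Two coupons: the pooled AUC and the per-coupon average disagree.
ids = ['a', 'a', 'a', 'b', 'b', 'b', 'b']
y = [1, 0, 0, 1, 1, 0, 0]
p = [0.9, 0.2, 0.1, 0.3, 0.8, 0.5, 0.1]
print(pairwise_auc(y, p), mean_coupon_auc(ids, y, p))
```

The pairwise loop is quadratic in the group size, which is fine for illustration; for full-size data a rank-based AUC per group would be the practical choice.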

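Problem analysis

After studying the datasets and field descriptions, I noted the following:

- Datasets:

The offline and online training sets may be related. A user who habitually shops online may shop offline less often. In addition, if a merchant sells through both online and offline channels, a user with online shopping habits who receives that merchant's coupon is less likely to redeem it through the offline channel.

- Fields:

The data provides very few feature dimensions. The only ones that look directly useful are Discount_rate (different discount levels motivate users differently), Distance (the farther a user is from the merchant, the higher the cost of visiting the store to redeem a coupon), and Date_received (a user who receives a coupon on a holiday may have more time and energy to visit the store). Feature engineering should therefore construct cross features to enrich the feature space and improve prediction accuracy.

- Other:

In theory, a user's consumption habits and coupon preferences do not change much over a short period, so a user's past coupon and consumption behavior is likely to help the prediction. Because the test set contains July 2016 data, historical behavior features can be built from all or part of the training data from January to June 2016.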

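Basic approach

Dataset splitting

Based on the initial analysis, I wanted to build users' historical behavior features from the training data. The training data therefore has to be split into several parts, some used for feature extraction and some for model training.

The baseline used the following split:

| Dataset | Training/test period | Feature extraction period |
|---|---|---|
| Test set | 20160701-20160731 | 20160201-20160630 |
| Training set | 20160601-20160630 | 20160101-20160531 |

The baseline score was mediocre, so I eventually adopted a split based on the one used by the competitor wepe. The optimized split is:

| Dataset | Training/test period | Feature extraction period |
|---|---|---|
| Test set | 20160701-20160731 | 20160315-20160630 |
| Training set 1 | 20160515-20160615 | 20160201-20160514 |
| Training set 2 | 20160414-20160514 | 20160101-20160413 |

The optimized split has two main advantages over the baseline:

- The training set is now the union of training sets 1 and 2, which raises the sample count to about 400,000 rows; more training data usually helps the model learn better.

- The feature extraction period for historical behavior shrinks from five months to roughly three and a half months, which fits the assumption that user habits do not change much over a short period.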
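The optimized split can be written down as data plus one small helper. This is only a sketch: the `WINDOWS` dictionary and the `split_window` function are hypothetical names, and it assumes `Date_received` is stored as a number in YYYYMMDD form with NaN for rows without a coupon.

```python
import pandas as pd

# (label_start, label_end, feature_start, feature_end), all inclusive, YYYYMMDD
WINDOWS = {
    'train1': (20160515, 20160615, 20160201, 20160514),
    'train2': (20160414, 20160514, 20160101, 20160413),
}

def split_window(df, label_start, label_end, feat_start, feat_end):
    """Return (label rows, feature-extraction rows) selected by Date_received."""
    received = df['Date_received']
    # Series.between is inclusive on both ends; NaN never matches.
    label_part = df[received.between(label_start, label_end)]
    feature_part = df[received.between(feat_start, feat_end)]
    return label_part, feature_part

demo = pd.DataFrame({'Date_received': [20160101, 20160420, 20160520, float('nan')]})
labels2, feats2 = split_window(demo, *WINDOWS['train2'])
print(len(labels2), len(feats2))
```

Keeping the windows in one table makes it harder for a label period and its feature period to overlap by accident.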

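Feature engineering

As noted in the problem analysis, the data provides few raw features. To capture more information about user behavior, merchant behavior, and coupon characteristics, I enumerated as many features as I could from business understanding and common sense, and then kept only those whose contribution to the prediction exceeded a threshold.

Features are extracted along the following dimensions:

- Historical offline user features (user dimension)

- Historical offline merchant features (merchant dimension)

- Historical offline coupon features (coupon dimension)

- Historical offline user-merchant cross features (user-merchant dimension)

- Historical offline user-coupon cross features (user-coupon dimension)

- Historical offline merchant-coupon cross features (merchant-coupon dimension)

- Historical online features

- Current-period features

The specific features in each dimension are: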

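- Historical offline user features (by user)

A. Number of coupons each user received

B. Number of coupons each user received but did not redeem

C. Number of coupons each user received and redeemed

D. Redemption rate of each user

E. Mean/min/max user-merchant distance over the coupons each user redeemed

F. Average number of coupons each user redeemed per merchant

G. Number of merchants at which each user redeemed coupons, and its share of all merchants

H. Number of coupon types each user redeemed, and its share of all coupon types

- Historical offline merchant features (by merchant)

A. Number of times each merchant's coupons were received

B. Number of times each merchant's coupons were received but not redeemed

C. Number of times each merchant's coupons were received and redeemed

D. Redemption rate of each merchant's coupons

E. Mean/min/max user-merchant distance over the coupons redeemed at each merchant

F. Average time until each merchant's coupons were redeemed

G. Average number of coupons redeemed per user at each merchant

H. Number of users who redeemed each merchant's coupons, and its share of all users

I. Number of coupon types redeemed at each merchant as a share of all coupon types

- Historical offline coupon features (by type; in the code, a coupon type is a Discount_rate value)

A. Number of times each coupon type was received

B. Number of times each coupon type was received but not redeemed

C. Number of times each coupon type was received and redeemed

D. Redemption rate of each coupon type

E. Average time until each coupon type was redeemed

F. Day of the week on which each coupon was received

- Historical offline user-merchant cross features (by user & by merchant)

A. Number of coupons each user received from each merchant

B. Number of coupons each user received from each merchant and did not redeem

C. Number of coupons each user received from each merchant and redeemed

D. Each user's redemption rate for each merchant's coupons

E. A user's non-redemptions at a merchant as a share of the user's total non-redemptions

F. A user's redemptions at a merchant as a share of the user's total redemptions

G. A user's non-redemptions at a merchant as a share of the merchant's total non-redemptions

H. A user's redemptions at a merchant as a share of the merchant's total redemptions

- Historical offline user-coupon cross features (by user & by type)

A. Number of times each user received each coupon type

B. Number of times each user received each coupon type and did not redeem it

C. Number of times each user received each coupon type and redeemed it

D. Each user's redemption rate for each coupon type

E. A user's non-redemptions of a coupon type as a share of the user's total non-redemptions

F. A user's redemptions of a coupon type as a share of the user's total redemptions

G. A user's non-redemptions of a coupon type as a share of that type's total non-redemptions

H. A user's redemptions of a coupon type as a share of that type's total redemptions

- Historical offline merchant-coupon cross features (by merchant & by type)

A. Number of coupons of each type received from each merchant

B. Number of coupons of each type received from each merchant and not redeemed

C. Number of coupons of each type received from each merchant and redeemed

D. Redemption rate of each coupon type at each merchant

E. A merchant's non-redemptions of a coupon type as a share of the merchant's total non-redemptions

F. A merchant's redemptions of a coupon type as a share of the merchant's total redemptions

G. A merchant's non-redemptions of a coupon type as a share of that type's total non-redemptions

H. A merchant's redemptions of a coupon type as a share of that type's total redemptions

- Historical online user features (by user; see the note at the end of this post)

A. Number of coupons each user received online

B. Number of purchases each user made online

- Current-period features

A. Number of coupons each user received in the period

B. Number of coupons of each type each user received in the period

C. Number of coupons each user received on the same day

D. Number of coupons of each type each user received on the same day

E. Number of coupons each user received before/after this one

F. Number of coupons of the same type each user received before/after this one

G. Number of coupons each user received from each merchant

H. Number of merchants from which each user received coupons

I. Number of coupon types each user received

J. Number of coupons received from each merchant

K. Number of coupons of each type received from each merchant

L. Number of coupon types received from each merchant

M. Number of distinct users who received coupons from each merchant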
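Current-period features E and F (coupons received before and after the current one) can be computed with a cumulative count. The sketch below is one possible way to do it, not the implementation used later in this post: it orders each user's coupons by `Date_received`, and the column names `n_before` and `n_after` are hypothetical.

```python
import pandas as pd

def add_before_after_counts(df):
    """Per user, count coupons received before and after each row (ordered by date)."""
    out = df.sort_values(['User_id', 'Date_received']).copy()
    grouped = out.groupby('User_id')
    out['n_before'] = grouped.cumcount()                 # 0, 1, 2, ... within a user
    out['n_after'] = grouped.cumcount(ascending=False)   # ..., 2, 1, 0 within a user
    return out.sort_index()                              # restore the original row order

demo = pd.DataFrame({
    'User_id': [1, 2, 1, 1],
    'Date_received': [20160703, 20160701, 20160701, 20160710],
})
print(add_before_after_counts(demo))
```

Coupons received by the same user on the same day are ordered arbitrarily relative to each other here; grouping by `['User_id', 'Discount_rate']` instead gives the per-type variant (feature F).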

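Model selection

I used XGBoost with decision trees as the weak learners, for the following reasons:

- The active users in any period include both new and returning users. New users have no history, so building historical features for the training and prediction sets produces many missing values. Compared with logistic regression (LR), XGBoost is far less demanding about how missing values are handled.

- The training set is large, which mitigates XGBoost's tendency to overfit. Compared with random forests (RF), XGBoost has more tunable parameters, which helps improve the fit.

- XGBoost has performed well in many competitions.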

Process and code

This section implements and tests the analysis above in Python.

Importing Python libraries

# Import libraries
import pandas as pd
import numpy as np
import os
import xgboost as xgb
from xgboost import XGBClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.metrics import roc_auc_score
import datetime as dt

Loading and splitting the data

# Load the offline data used for the training sets and the feature-extraction windows
def get_data(path):
    df=pd.read_csv(path,encoding='gbk')
    return df

path=os.getcwd()
# os.path.join keeps the path portable across operating systems
df=get_data(os.path.join(path,'ccf_offline_stage1_train.csv'))

# Training set 1: Date_received in 20160515-20160615
df_0515_0615=df[(df['Date_received']>=20160515) & (df['Date_received']<=20160615)]
# Feature-extraction window for training set 1: Date_received in 20160201-20160514
df_0201_0514=df[(df['Date_received']>=20160201) & (df['Date_received']<=20160514)]
# Training set 2: Date_received in 20160414-20160514
df_0414_0514=df[(df['Date_received']>=20160414) & (df['Date_received']<=20160514)]
# Feature-extraction window for training set 2: Date_received in 20160101-20160413
df_0101_0413=df[(df['Date_received']>=20160101) & (df['Date_received']<=20160413)]
# Feature-extraction window for the test set: Date_received in 20160315-20160630
df_0315_0630=df[(df['Date_received']>=20160315) & (df['Date_received']<=20160630)]

# Cleaning: keep only the rows in which a coupon was received
def clean_df(df):
    df_c=df[df['Coupon_id'].notnull()]
    # reset_index() keeps the old row label as an 'index' column, used later as a per-row key
    return df_c.reset_index()

df_0515_0615_c=clean_df(df_0515_0615)
df_0201_0514_c=clean_df(df_0201_0514)
df_0414_0514_c=clean_df(df_0414_0514)
df_0101_0413_c=clean_df(df_0101_0413)
df_0315_0630_c=clean_df(df_0315_0630)

Feature engineering

- Historical offline user features (by user)

# Offline user features, by user
def GetFeatureByUser(df):
    # Number of coupons each user received
    df_times_byuser=df.groupby(['User_id']).agg({'Coupon_id':'count'}).rename(columns={'Coupon_id':'Times_received_byuser'}).reset_index()
    # Number of coupons each user received but did not redeem
    df_times_notused_byuser=df[df['Date'].isnull()].groupby(['User_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_notused_byuser'}).reset_index()
    # Number of coupons each user received and redeemed
    df_times_used_byuser=df[df['Date'].notnull()].groupby(['User_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_used_byuser'}).reset_index()
    # Redemption rate of each user
    df_tem1=pd.merge(df_times_byuser,df_times_notused_byuser,how='left',on='User_id')
    df_tem1=pd.merge(df_tem1,df_times_used_byuser,how='left',on='User_id').fillna(0)
    df_tem1.loc[:,'ConsumeRate_byuser']=df_tem1.loc[:,'Times_received_used_byuser']/df_tem1.loc[:,'Times_received_byuser']
    # Mean/min/max user-merchant distance over the coupons each user redeemed
    # The aggregation yields two-level column names; join each pair, e.g. ('Distance','mean') -> 'Distance_byuser_mean'
    df_distance_byuser=df[df['Date'].notnull()].groupby(['User_id']).agg({'Distance':['mean','min','max']})
    df_distance_byuser.columns=['_byuser_'.join(x) for x in df_distance_byuser.columns]
    df_distance_byuser=df_distance_byuser.reset_index()
    # Average number of coupons redeemed per merchant the user received coupons from
    df_merchant_number_byuser=df.groupby(['User_id']).agg({'Merchant_id':'nunique'})\
        .rename(columns={'Merchant_id':'Number_merchant_byuser'}).reset_index()
    df_tem2=pd.merge(df_tem1,df_merchant_number_byuser,how='left',on='User_id')
    df_tem2.loc[:,'AvgUse_byuser']=df_tem2.loc[:,'Times_received_used_byuser']/df_tem2.loc[:,'Number_merchant_byuser']
    # Combine the results into one table and attach them to the raw rows
    df_merge=pd.merge(df_tem2,df_distance_byuser,how='left',on='User_id')
    df_merge=pd.merge(df,df_merge,how='left',on='User_id')
    # Number of distinct merchants at which each user redeemed coupons, and its share of all merchants
    df_number_byuser_onmerchant=df[df['Date'].notnull()].groupby(['User_id']).agg({'Merchant_id':'nunique'})\
        .rename(columns={'Merchant_id':'Number_used_byuser_onmerchant'}).reset_index()
    df_merge=pd.merge(df_merge,df_number_byuser_onmerchant,how='left',on='User_id')
    # Assign the result back; an in-place fillna on a .loc selection may not modify df_merge
    df_merge['Number_used_byuser_onmerchant']=df_merge['Number_used_byuser_onmerchant'].fillna(0)
    df_merge.loc[:,'Percent_used_byuser_onmerchant']=df_merge.loc[:,'Number_used_byuser_onmerchant']/\
        df_merge.loc[:,'Merchant_id'].nunique()
    # Number of distinct coupon types each user redeemed, and its share of all coupon types
    df_number_byuser_ontype=df[df['Date'].notnull()].groupby(['User_id']).agg({'Discount_rate':'nunique'})\
        .rename(columns={'Discount_rate':'Number_used_byuser_ontype'}).reset_index()
    df_merge=pd.merge(df_merge,df_number_byuser_ontype,how='left',on='User_id')
    df_merge['Number_used_byuser_ontype']=df_merge['Number_used_byuser_ontype'].fillna(0)
    df_merge.loc[:,'Percent_used_byuser_ontype']=df_merge.loc[:,'Number_used_byuser_ontype']/\
        df_merge.loc[:,'Discount_rate'].nunique()
    return df_merge

df_0201_0514_c1=GetFeatureByUser(df_0201_0514_c)
df_0101_0413_c1=GetFeatureByUser(df_0101_0413_c)
df_0315_0630_c1=GetFeatureByUser(df_0315_0630_c)
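The received / redeemed / rate pattern repeats in every feature function. As a compact alternative, and only as a sketch rather than the code used in this post, the same three counts can come from a single `groupby` with pandas named aggregation. The function name `user_history_features` and the output column names are hypothetical.

```python
import numpy as np
import pandas as pd

def user_history_features(hist):
    """Per-user received/redeemed counts and redemption rate from one groupby."""
    feats = (
        hist.assign(used=hist['Date'].notna())   # True where the coupon was redeemed
            .groupby('User_id')
            .agg(received=('Coupon_id', 'count'), used=('used', 'sum'))
            .reset_index()
    )
    feats['not_used'] = feats['received'] - feats['used']
    feats['use_rate'] = feats['used'] / feats['received']
    return feats

demo = pd.DataFrame({
    'User_id': [1, 1, 2],
    'Coupon_id': [10, 11, 12],
    'Date': [20160105.0, np.nan, np.nan],
})
print(user_history_features(demo))
```

Because every count comes from the same grouped frame, no intermediate merges or `fillna(0)` calls are needed for users with zero redemptions.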

- Historical offline merchant features (by merchant)

# Offline merchant features, by merchant
def GetFeatureByMerchant(df):
    # Number of times each merchant's coupons were received
    df_times_bymerchant=df.groupby(['Merchant_id']).agg({'Coupon_id':'count'}).rename(columns={'Coupon_id':'Times_received_bymerchant'}).reset_index()
    # Number of times each merchant's coupons were received but not redeemed
    df_times_notused_bymerchant=df[df['Date'].isnull()].groupby(['Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_notused_bymerchant'}).reset_index()
    # Number of times each merchant's coupons were received and redeemed
    df_times_used_bymerchant=df[df['Date'].notnull()].groupby(['Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_used_bymerchant'}).reset_index()
    # Redemption rate of each merchant's coupons
    df_tem1=pd.merge(df_times_bymerchant,df_times_notused_bymerchant,how='left',on='Merchant_id')
    df_tem1=pd.merge(df_tem1,df_times_used_bymerchant,how='left',on='Merchant_id').fillna(0)
    df_tem1.loc[:,'ConsumeRate_bymerchant']=df_tem1.loc[:,'Times_received_used_bymerchant']/df_tem1.loc[:,'Times_received_bymerchant']
    # Mean/min/max user-merchant distance over the coupons redeemed at each merchant
    # Join the two-level column names, e.g. ('Distance','mean') -> 'Distance_bymerchant_mean'
    df_distance_bymerchant=df[df['Date'].notnull()].groupby(['Merchant_id']).agg({'Distance':['mean','min','max']})
    df_distance_bymerchant.columns=['_bymerchant_'.join(x) for x in df_distance_bymerchant.columns]
    df_distance_bymerchant=df_distance_bymerchant.reset_index()
    # Average number of coupons redeemed per user at each merchant
    df_user_number_bymerchant=df.groupby(['Merchant_id']).agg({'User_id':'nunique'})\
        .rename(columns={'User_id':'Number_user_bymerchant'}).reset_index()
    df_tem2=pd.merge(df_tem1,df_user_number_bymerchant,how='left',on='Merchant_id')
    df_tem2.loc[:,'AvgUse_bymerchant']=df_tem2.loc[:,'Times_received_used_bymerchant']/df_tem2.loc[:,'Number_user_bymerchant']
    # Merge the features and attach them to the raw rows
    df_merge=pd.merge(df_tem2,df_distance_bymerchant,how='left',on='Merchant_id')
    df_merge=pd.merge(df,df_merge,how='left',on='Merchant_id')
    # Number of distinct users who redeemed each merchant's coupons, and its share of all users
    df_number_bymerchant_onuser=df[df['Date'].notnull()].groupby(['Merchant_id']).agg({'User_id':'nunique'})\
        .rename(columns={'User_id':'Number_used_bymerchant_onuser'}).reset_index()
    df_merge=pd.merge(df_merge,df_number_bymerchant_onuser,how='left',on='Merchant_id')
    # Assign the result back; an in-place fillna on a .loc selection may not modify df_merge
    df_merge['Number_used_bymerchant_onuser']=df_merge['Number_used_bymerchant_onuser'].fillna(0)
    df_merge.loc[:,'Percent_used_bymerchant_onuser']=df_merge.loc[:,'Number_used_bymerchant_onuser']/\
        df_merge.loc[:,'User_id'].nunique()
    # Number of distinct coupon types redeemed at each merchant, and its share of all coupon types
    df_number_bymerchant_ontype=df[df['Date'].notnull()].groupby(['Merchant_id']).agg({'Discount_rate':'nunique'})\
        .rename(columns={'Discount_rate':'Number_used_bymerchant_ontype'}).reset_index()
    df_merge=pd.merge(df_merge,df_number_bymerchant_ontype,how='left',on='Merchant_id')
    df_merge['Number_used_bymerchant_ontype']=df_merge['Number_used_bymerchant_ontype'].fillna(0)
    df_merge.loc[:,'Percent_used_bymerchant_ontype']=df_merge.loc[:,'Number_used_bymerchant_ontype']/\
        df_merge.loc[:,'Discount_rate'].nunique()
    # Average number of days between receipt and redemption for each merchant's coupons
    # (rows without a redemption get NaN through index alignment)
    df_merge.loc[:,'Interval']=(pd.to_datetime(df[df['Date'].notnull()].loc[:,'Date'],format='%Y%m%d')-\
        pd.to_datetime(df[df['Date'].notnull()].loc[:,'Date_received'],format='%Y%m%d')).apply(lambda x:x.days)
    df_interval_bymerchant=df_merge[df_merge['Date'].notnull()].groupby(['Merchant_id']).agg({'Interval':'mean'})\
        .rename(columns={'Interval':'Interval_avg_bymerchant'}).reset_index()
    df_merge1=pd.merge(df_merge,df_interval_bymerchant,how='left',on='Merchant_id')
    return df_merge1

df_0201_0514_c2=GetFeatureByMerchant(df_0201_0514_c1)
df_0101_0413_c2=GetFeatureByMerchant(df_0101_0413_c1)
df_0315_0630_c2=GetFeatureByMerchant(df_0315_0630_c1)

- Historical offline coupon features (by type)

# Offline coupon features, by type (Discount_rate)
def GetFeatureByType(df):
    # Number of times each coupon type was received
    df_times_bytype=df.groupby(['Discount_rate']).agg({'Coupon_id':'count'}).rename(columns={'Coupon_id':'Times_received_bytype'}).reset_index()
    # Number of times each coupon type was received but not redeemed
    df_times_notused_bytype=df[df['Date'].isnull()].groupby(['Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_notused_bytype'}).reset_index()
    # Number of times each coupon type was received and redeemed
    df_times_used_bytype=df[df['Date'].notnull()].groupby(['Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_used_bytype'}).reset_index()
    # Redemption rate of each coupon type
    df_tem1=pd.merge(df_times_bytype,df_times_notused_bytype,how='left',on='Discount_rate')
    df_tem1=pd.merge(df_tem1,df_times_used_bytype,how='left',on='Discount_rate').fillna(0)
    df_tem1.loc[:,'ConsumeRate_bytype']=df_tem1.loc[:,'Times_received_used_bytype']/df_tem1.loc[:,'Times_received_bytype']
    # Average number of days until each coupon type was redeemed ('Interval' comes from GetFeatureByMerchant)
    df_interval_bytype=df.groupby(['Discount_rate']).agg({'Interval':'mean'}).rename(columns={'Interval':'Interval_avg_bytype'}).reset_index()
    df_merge=pd.merge(df,df_tem1,how='left',on='Discount_rate')
    df_merge=pd.merge(df_merge,df_interval_bytype,how='left',on='Discount_rate')
    # Day of the week on which the coupon was received (Monday=0)
    df_merge.loc[:,'Datetime']=pd.to_datetime(df_merge.loc[:,'Date_received'],format='%Y%m%d')
    df_merge.loc[:,'Dateofweek']=df_merge.loc[:,'Datetime'].dt.dayofweek
    return df_merge

df_0201_0514_c3=GetFeatureByType(df_0201_0514_c2)
df_0101_0413_c3=GetFeatureByType(df_0101_0413_c2)
df_0315_0630_c3=GetFeatureByType(df_0315_0630_c2)

- Historical offline user-merchant cross features (by user & by merchant)

# User-merchant cross features
def GetFeatureByUserByMerchant(df):
    # Number of coupons each user received from each merchant
    df_times_byuser_bymerchant=df.groupby(['User_id','Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_bymerchant'}).reset_index()
    # Number of coupons each user received from each merchant and did not redeem
    df_times_notused_byuser_bymerchant=df[df['Date'].isnull()].groupby(['User_id','Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_notused_byuser_bymerchant'}).reset_index()
    # Number of coupons each user received from each merchant and redeemed
    df_times_used_byuser_bymerchant=df[df['Date'].notnull()].groupby(['User_id','Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_used_byuser_bymerchant'}).reset_index()
    # Each user's redemption rate for each merchant's coupons
    df_tem1=pd.merge(df_times_byuser_bymerchant,df_times_used_byuser_bymerchant,how='left',on=['User_id','Merchant_id'])
    df_tem1=pd.merge(df_tem1,df_times_notused_byuser_bymerchant,how='left',on=['User_id','Merchant_id']).fillna(0)
    df_tem1.loc[:,'ConsumeRate_byuser_bymerchant']=df_tem1.loc[:,'Times_received_used_byuser_bymerchant']/\
        df_tem1.loc[:,'Times_received_byuser_bymerchant']
    # Attach the results to the main table
    df_merge=pd.merge(df,df_tem1,how='left',on=['User_id','Merchant_id'])
    # A user's non-redemptions at a merchant as a share of the user's total non-redemptions
    df_merge.loc[:,'NotuseRate_bymerchant_byuser']=df_merge.loc[:,'Times_received_notused_byuser_bymerchant']/\
        df_merge.loc[:,'Times_received_notused_byuser']
    # A user's redemptions at a merchant as a share of the user's total redemptions
    df_merge.loc[:,'UseRate_bymerchant_byuser']=df_merge.loc[:,'Times_received_used_byuser_bymerchant']/\
        df_merge.loc[:,'Times_received_used_byuser']
    # A user's non-redemptions at a merchant as a share of the merchant's total non-redemptions
    df_merge.loc[:,'NotuseRate_byuser_bymerchant']=df_merge.loc[:,'Times_received_notused_byuser_bymerchant']/\
        df_merge.loc[:,'Times_received_notused_bymerchant']
    # A user's redemptions at a merchant as a share of the merchant's total redemptions
    df_merge.loc[:,'UseRate_byuser_bymerchant']=df_merge.loc[:,'Times_received_used_byuser_bymerchant']/\
        df_merge.loc[:,'Times_received_used_bymerchant']
    return df_merge

df_0201_0514_c4=GetFeatureByUserByMerchant(df_0201_0514_c3)
df_0101_0413_c4=GetFeatureByUserByMerchant(df_0101_0413_c3)
df_0315_0630_c4=GetFeatureByUserByMerchant(df_0315_0630_c3)

- Historical offline user-coupon cross features (by user & by type)

# User-coupon cross features
def GetFeatureByUserByType(df):
    # Number of times each user received each coupon type
    df_times_byuser_bytype=df.groupby(['User_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_bytype'}).reset_index()
    # Number of times each user received each coupon type and did not redeem it
    df_times_notused_byuser_bytype=df[df['Date'].isnull()].groupby(['User_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_notused_byuser_bytype'}).reset_index()
    # Number of times each user received each coupon type and redeemed it
    df_times_used_byuser_bytype=df[df['Date'].notnull()].groupby(['User_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_used_byuser_bytype'}).reset_index()
    # Each user's redemption rate for each coupon type
    df_tem1=pd.merge(df_times_byuser_bytype,df_times_notused_byuser_bytype,how='left',on=['User_id','Discount_rate'])
    df_tem1=pd.merge(df_tem1,df_times_used_byuser_bytype,how='left',on=['User_id','Discount_rate']).fillna(0)
    df_tem1.loc[:,'ConsumeRate_byuser_bytype']=df_tem1.loc[:,'Times_received_used_byuser_bytype']/df_tem1.loc[:,'Times_received_byuser_bytype']
    # Attach the results to the main table
    df_merge=pd.merge(df,df_tem1,how='left',on=['User_id','Discount_rate'])
    # A user's non-redemptions of a coupon type as a share of the user's total non-redemptions
    df_merge.loc[:,'NotuseRate_bytype_byuser']=df_merge.loc[:,'Times_received_notused_byuser_bytype']/\
        df_merge.loc[:,'Times_received_notused_byuser']
    # A user's redemptions of a coupon type as a share of the user's total redemptions
    df_merge.loc[:,'UseRate_bytype_byuser']=df_merge.loc[:,'Times_received_used_byuser_bytype']/\
        df_merge.loc[:,'Times_received_used_byuser']
    # A user's non-redemptions of a coupon type as a share of that type's total non-redemptions
    df_merge.loc[:,'NotuseRate_byuser_bytype']=df_merge.loc[:,'Times_received_notused_byuser_bytype']/\
        df_merge.loc[:,'Times_received_notused_bytype']
    # A user's redemptions of a coupon type as a share of that type's total redemptions
    df_merge.loc[:,'UseRate_byuser_bytype']=df_merge.loc[:,'Times_received_used_byuser_bytype']/\
        df_merge.loc[:,'Times_received_used_bytype']
    return df_merge

df_0201_0514_c5=GetFeatureByUserByType(df_0201_0514_c4)
df_0101_0413_c5=GetFeatureByUserByType(df_0101_0413_c4)
df_0315_0630_c5=GetFeatureByUserByType(df_0315_0630_c4)

- Historical offline merchant-coupon cross features (by merchant & by type)

# Merchant-coupon cross features
def GetFeatureByMerchantByType(df):
    # Number of coupons of each type received from each merchant
    df_times_bymerchant_bytype=df.groupby(['Merchant_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_bymerchant_bytype'}).reset_index()
    # Number of coupons of each type received from each merchant and not redeemed
    df_times_notused_bymerchant_bytype=df[df['Date'].isnull()].groupby(['Merchant_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_notused_bymerchant_bytype'}).reset_index()
    # Number of coupons of each type received from each merchant and redeemed
    df_times_used_bymerchant_bytype=df[df['Date'].notnull()].groupby(['Merchant_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_used_bymerchant_bytype'}).reset_index()
    # Redemption rate of each coupon type at each merchant
    df_tem1=pd.merge(df_times_bymerchant_bytype,df_times_notused_bymerchant_bytype,how='left',on=['Merchant_id','Discount_rate'])
    df_tem1=pd.merge(df_tem1,df_times_used_bymerchant_bytype,how='left',on=['Merchant_id','Discount_rate']).fillna(0)
    df_tem1.loc[:,'ConsumeRate_bymerchant_bytype']=df_tem1.loc[:,'Times_received_used_bymerchant_bytype']/\
        df_tem1.loc[:,'Times_received_bymerchant_bytype']
    # Attach the results to the main table
    df_merge=pd.merge(df,df_tem1,how='left',on=['Merchant_id','Discount_rate'])
    # A merchant's non-redemptions of a coupon type as a share of the merchant's total non-redemptions
    df_merge.loc[:,'NotuseRate_bytype_bymerchant']=df_merge.loc[:,'Times_received_notused_bymerchant_bytype']/\
        df_merge.loc[:,'Times_received_notused_bymerchant']
    # A merchant's redemptions of a coupon type as a share of the merchant's total redemptions
    df_merge.loc[:,'UseRate_bytype_bymerchant']=df_merge.loc[:,'Times_received_used_bymerchant_bytype']/\
        df_merge.loc[:,'Times_received_used_bymerchant']
    # A merchant's non-redemptions of a coupon type as a share of that type's total non-redemptions
    # (the numerator is the merchant-level count; the original code used the user-level column here)
    df_merge.loc[:,'NotuseRate_bymerchant_bytype']=df_merge.loc[:,'Times_received_notused_bymerchant_bytype']/\
        df_merge.loc[:,'Times_received_notused_bytype']
    # A merchant's redemptions of a coupon type as a share of that type's total redemptions
    df_merge.loc[:,'UseRate_bymerchant_bytype']=df_merge.loc[:,'Times_received_used_bymerchant_bytype']/\
        df_merge.loc[:,'Times_received_used_bytype']
    return df_merge

df_0201_0514_c6=GetFeatureByMerchantByType(df_0201_0514_c5)
df_0101_0413_c6=GetFeatureByMerchantByType(df_0101_0413_c5)
df_0315_0630_c6=GetFeatureByMerchantByType(df_0315_0630_c5)

- Current-period features

# Other features: current period
def GetFeatureByothers(df):
    # Number of coupons each user received
    df_times_byuser_n=df.groupby('User_id').agg({'Coupon_id':'count'}).rename(columns={'Coupon_id':'Times_received_byuser_n'}).reset_index()
    # Number of coupons of each type each user received
    df_times_byuser_bytype_n=df.groupby(['User_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_bytype_n'}).reset_index()
    # Number of coupons each user received on the same day
    df_times_byuser_bydate_n=df.groupby(['User_id','Date_received']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_bydate_n'}).reset_index()
    # Number of coupons of each type each user received on the same day
    df_times_byuser_bydate_bytype_n=df.groupby(['User_id','Date_received','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_bydate_bytype_n'}).reset_index()
    # Number of coupons the user received before/after this one
    # ('before' and 'after' follow row order via the 'index' column, not Date_received)
    df_times_byuser_byindex_n=df.groupby(['User_id','index']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_byindex_n'}).reset_index()
    df_times_byuser_byindex_n.loc[:,'Times_received_byuser_byindex_n_before']=df_times_byuser_byindex_n.groupby(['User_id'])\
        ['Times_received_byuser_byindex_n'].cumsum()-1
    df_tem1=pd.merge(df_times_byuser_byindex_n,df_times_byuser_n,how='left',on='User_id')
    df_tem1.loc[:,'Times_received_byuser_byindex_n_after']=df_tem1.loc[:,'Times_received_byuser_n']\
        -df_tem1.loc[:,'Times_received_byuser_byindex_n_before']-1
    # Number of coupons of the same type the user received before/after this one
    df_times_byuser_bytype_byindex_n=df.groupby(['User_id','Discount_rate','index']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Times_received_byuser_bytype_byindex_n'}).reset_index()
    df_times_byuser_bytype_byindex_n.loc[:,'Times_received_byuser_bytype_byindex_n_before']=df_times_byuser_bytype_byindex_n.groupby(['User_id','Discount_rate'])\
        ['Times_received_byuser_bytype_byindex_n'].cumsum()-1
    df_tem2=pd.merge(df_times_byuser_bytype_byindex_n,df_times_byuser_bytype_n,how='left',on=['User_id','Discount_rate'])
    df_tem2.loc[:,'Times_received_byuser_bytype_byindex_n_after']=df_tem2.loc[:,'Times_received_byuser_bytype_n']\
        -df_tem2.loc[:,'Times_received_byuser_bytype_byindex_n_before']-1
    # Number of coupons each user received from each merchant
    df_number_byuser_bymerchant_n=df.groupby(['User_id','Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Number_received_byuser_bymerchant_n'}).reset_index()
    # Number of distinct merchants each user received coupons from
    df_number_byuser_onmerchant_n=df.groupby(['User_id']).agg({'Merchant_id':'nunique'})\
        .rename(columns={'Merchant_id':'Number_received_byuser_onmerchant_n'}).reset_index()
    # Number of distinct coupon types each user received
    df_number_byuser_ontype_n=df.groupby(['User_id']).agg({'Discount_rate':'nunique'})\
        .rename(columns={'Discount_rate':'Number_received_byuser_ontype_n'}).reset_index()
    # Number of coupons received from each merchant
    df_number_bymerchant_n=df.groupby(['Merchant_id']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Number_received_bymerchant_n'}).reset_index()
    # Number of coupons of each type received from each merchant
    df_number_bymerchant_bytype_n=df.groupby(['Merchant_id','Discount_rate']).agg({'Coupon_id':'count'})\
        .rename(columns={'Coupon_id':'Number_received_bymerchant_bytype_n'}).reset_index()
    # Number of distinct users who received coupons from each merchant
    df_number_bymerchant_onuser_n=df.groupby(['Merchant_id']).agg({'User_id':'nunique'})\
        .rename(columns={'User_id':'Number_received_bymerchant_onuser_n'}).reset_index()
    # Number of distinct coupon types issued by each merchant
    df_number_bymerchant_ontype_n=df.groupby(['Merchant_id']).agg({'Discount_rate':'nunique'})\
        .rename(columns={'Discount_rate':'Number_received_bymerchant_ontype_n'}).reset_index()
    # Merge all current-period features
    df_merge=pd.merge(df,df_tem1,how='left',on=['User_id','index'])
    df_merge=pd.merge(df_merge,df_tem2,how='left',on=['User_id','Discount_rate','index'])
    df_merge=pd.merge(df_merge,df_times_byuser_bydate_n,how='left',on=['User_id','Date_received'])
    df_merge=pd.merge(df_merge,df_times_byuser_bydate_bytype_n,how='left',on=['User_id','Date_received','Discount_rate'])
    df_merge=pd.merge(df_merge,df_number_byuser_bymerchant_n,how='left',on=['User_id','Merchant_id'])
    df_merge=pd.merge(df_merge,df_number_byuser_onmerchant_n,how='left',on=['User_id'])
    df_merge=pd.merge(df_merge,df_number_byuser_ontype_n,how='left',on=['User_id'])
    df_merge=pd.merge(df_merge,df_number_bymerchant_n,how='left',on=['Merchant_id'])
    df_merge=pd.merge(df_merge,df_number_bymerchant_bytype_n,how='left',on=['Merchant_id','Discount_rate'])
    df_merge=pd.merge(df_merge,df_number_bymerchant_onuser_n,how='left',on=['Merchant_id'])
    df_merge=pd.merge(df_merge,df_number_bymerchant_ontype_n,how='left',on=['Merchant_id'])
    # Day of the week on which the user received the coupon (Monday=0)
    df_merge.loc[:,'Datetime_received_n']=pd.to_datetime(df_merge.loc[:,'Date_received'],format='%Y%m%d')
    df_merge.loc[:,'Dayofweek_received_n']=df_merge.loc[:,'Datetime_received_n'].dt.dayofweek
    df_merge1=df_merge.drop(['Datetime_received_n'],axis=1)
    return df_merge1

df_0515_0615_c1=GetFeatureByothers(df_0515_0615_c)
df_0414_0514_c1=GetFeatureByothers(df_0414_0514_c)

- Merging the historical and current-period features of the training sets

# Collect the column names belonging to each feature dimension
# (1) Column names after each feature-extraction step
columns_c0=df_0101_0413_c.columns.values.tolist()
columns_c1=df_0101_0413_c1.columns.values.tolist()
columns_c2=df_0101_0413_c2.columns.values.tolist()
columns_c3=df_0101_0413_c3.columns.values.tolist()
columns_c4=df_0101_0413_c4.columns.values.tolist()
columns_c5=df_0101_0413_c5.columns.values.tolist()
columns_c6=df_0101_0413_c6.columns.values.tolist()

# (2) Columns added by each step, excluding the helper columns 'Interval' and 'Datetime'
columns_c1_c0=list(set(columns_c1)-set(columns_c0))
columns_c2_c1=list(set(columns_c2)-set(columns_c1)-set(['Interval']))
columns_c3_c2=list(set(columns_c3)-set(columns_c2)-set(['Interval','Datetime']))
columns_c4_c3=list(set(columns_c4)-set(columns_c3)-set(['Interval','Datetime']))
columns_c5_c4=list(set(columns_c5)-set(columns_c4)-set(['Interval','Datetime']))
columns_c6_c5=list(set(columns_c6)-set(columns_c5)-set(['Interval','Datetime']))

# (3) Add the join keys for each dimension
columns_c1_c0.extend(['User_id'])
columns_c2_c1.extend(['Merchant_id'])
columns_c3_c2.extend(['Discount_rate'])
columns_c4_c3.extend(['User_id','Merchant_id'])
columns_c5_c4.extend(['User_id','Discount_rate'])
columns_c6_c5.extend(['Merchant_id','Discount_rate'])

# Merge the historical features into the current-period table
def GetHistoryFeature(df_history,df_prediction,col1,col2,col3,col4,col5,col6):
    # (1) One row of historical features per key
    df_byuser=df_history.loc[:,col1].drop_duplicates(subset=['User_id'],keep='first',inplace=False)
    df_bymerchant=df_history.loc[:,col2].drop_duplicates(subset=['Merchant_id'],keep='first',inplace=False)
    df_bytype=df_history.loc[:,col3].drop_duplicates(subset=['Discount_rate'],keep='first',inplace=False)
    df_byuser_bymerchant=df_history.loc[:,col4].drop_duplicates(subset=['User_id','Merchant_id'],keep='first',inplace=False)
    df_byuser_bytype=df_history.loc[:,col5].drop_duplicates(subset=['User_id','Discount_rate'],keep='first',inplace=False)
    df_bymerchant_bytype=df_history.loc[:,col6].drop_duplicates(subset=['Merchant_id','Discount_rate'],keep='first',inplace=False)
    # (2) Left-join the historical features onto the current-period rows
    df_merge1=pd.merge(df_prediction,df_byuser,how='left',on=['User_id'])
    df_merge2=pd.merge(df_merge1,df_bymerchant,how='left',on=['Merchant_id'])
    df_merge3=pd.merge(df_merge2,df_bytype,how='left',on=['Discount_rate'])
    df_merge4=pd.merge(df_merge3,df_byuser_bymerchant,how='left',on=['User_id','Merchant_id'])
    df_merge5=pd.merge(df_merge4,df_byuser_bytype,how='left',on=['User_id','Discount_rate'])
    df_merge6=pd.merge(df_merge5,df_bymerchant_bytype,how='left',on=['Merchant_id','Discount_rate'])
    return df_merge6

df_train_0515_0615=GetHistoryFeature(df_0201_0514_c6,df_0515_0615_c1,columns_c1_c0,columns_c2_c1,columns_c3_c2\
    ,columns_c4_c3,columns_c5_c4,columns_c6_c5)
df_train_0414_0514=GetHistoryFeature(df_0101_0413_c6,df_0414_0514_c1,columns_c1_c0,columns_c2_c1,columns_c3_c2\
    ,columns_c4_c3,columns_c5_c4,columns_c6_c5)

# Concatenate the two training sets
df_train=pd.concat([df_train_0414_0514,df_train_0515_0615],axis=0)

- Building labels, handling missing values, and encoding categorical features

# Build the label: 1 if the coupon was redeemed (Date is present), otherwise 0
def create_flag(df):
    df.loc[:,'Flag']=df['Date'].apply(lambda x: 0 if np.isnan(x) else 1)
    df.drop(['Date','index','Coupon_id','Date_received'],axis=1,inplace=True)
    return df

df_train=create_flag(df_train)

# Fill missing values in the training set
df_train_fillna=df_train.fillna(-999)

# One-hot encode the categorical feature
def dummy(df):
    dummies_type=pd.get_dummies(df['Discount_rate'],prefix='Discount_rate')
    df_dummy=pd.concat([df,dummies_type],axis=1)
    df_dummy.drop(['Discount_rate'],axis=1,inplace=True)
    return df_dummy

df_train_fillna=dummy(df_train_fillna)
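The label above marks any redemption as positive, while the task statement asks about redemption within 15 days of receipt. The following is a hedged sketch of a label that follows the 15-day rule; it is not the labeling used for the score reported in this post. The helper names are hypothetical, and treating day 15 itself as "within 15 days" is an assumption.

```python
import numpy as np
import pandas as pd

def parse_yyyymmdd(col):
    """Parse numbers such as 20160105 or 20160105.0 into dates; missing values become NaT."""
    nums = pd.to_numeric(col, errors='coerce')
    text = nums.dropna().astype('int64').astype(str)
    parsed = pd.to_datetime(text, format='%Y%m%d')
    return parsed.reindex(col.index)  # assumes a unique index

def label_within_days(df, days=15):
    """1 if the coupon was redeemed no more than `days` days after receipt, else 0."""
    gap = (parse_yyyymmdd(df['Date']) - parse_yyyymmdd(df['Date_received'])).dt.days
    # Comparisons with NaN are False, so unredeemed coupons get label 0.
    return ((gap >= 0) & (gap <= days)).astype(int)

demo = pd.DataFrame({
    'Date_received': [20160501, 20160501, 20160501, 20160501],
    'Date': [20160510.0, 20160620.0, np.nan, 20160516.0],
})
print(label_within_days(demo).tolist())
```

Such a label would have to be computed before `create_flag` drops the `Date` and `Date_received` columns.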

- Selecting features whose importance share is at least 0.01

# Train an XGBoost model to rank the features
X_train=df_train_fillna.drop(['Flag'],axis=1)
y_train=df_train_fillna.loc[:,'Flag']

def get_xgb(feature,flag):
    train = xgb.DMatrix(data = feature, label = flag)
    Trate = 0.25
    # The number of trees is set by num_boost_round in xgb.train below;
    # 'n_estimators' belongs to the sklearn wrapper and is not a native parameter
    params = {'booster':'gbtree', # type of base learner
              'eval_metric':'auc', # evaluation metric
              'eta':0.1, # learning rate; shrinks the contribution of each new tree
              'gamma':0, # minimum loss reduction required to make a split
              'max_depth':4, # tree depth; deeper trees overfit more easily
              'min_child_weight':1,
              'max_delta_step':0,
              'subsample':0.7, # fraction of rows sampled per tree; smaller values reduce overfitting, too small underfits
              'colsample_bytree':0.7, # fraction of features sampled per tree
              'colsample_bylevel':1, # fraction of features sampled at each tree level
              'base_score':Trate,
              'objective':'binary:logistic', # objective; different objectives handle classification, regression, etc.
              'lambda':5, # L2 regularization weight; larger is more conservative
              'alpha':8, # L1 regularization weight; larger is more conservative
              'seed':100 # random seed, so results can be reproduced during tuning
              }
    xgb_model = xgb.train(params, train, num_boost_round=200, maximize = True,verbose_eval= True )
    return xgb_model

xgb_model=get_xgb(X_train,y_train)

# Importance of each feature, measured by gain and normalized to sum to 1
imp_dict=xgb_model.get_score(importance_type='gain')
imp_gain=pd.Series(imp_dict).sort_values(ascending=False)
importance=imp_gain/imp_gain.sum()

# Keep the features whose importance share reaches the threshold
importance_index=importance.index.tolist()
importance_values=importance.values.tolist()
df_importance=pd.DataFrame({'Feature':importance_index,'Imp':importance_values})
col_select=df_importance[df_importance['Imp']>=0.01].loc[:,'Feature'].values.tolist()

Model training and tuning

- Retrain the model on the features selected during feature engineering (col_select).

# Train the model with XGBClassifier
X_train_select=df_train_fillna.loc[:,col_select]

model=XGBClassifier(
    base_score=0.25,
    booster='gbtree',
    learning_rate=0.1,
    n_estimators=600,
    max_depth=4,
    min_child_weight = 1,
    gamma=0.,
    subsample=0.3,
    colsample_bytree=0.7,  # the original spelling 'colsample_btree' is not a valid parameter name
    objective='binary:logistic',
    scale_pos_weight=1,
    reg_lambda=7,
    reg_alpha=8,
    random_state=2,
    n_jobs=-1,
)

model.fit(X_train_select,y_train)

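- Use GridSearchCV from sklearn for coarse parameter tuning. The steps are:

(1) Set the model's initial parameters.

(2) Choose a range of candidate values for the parameter being tuned.

(3) Let GridSearchCV find the best value within that range.

(4) Put the best value into the model and retrain.

(5) Pick the next parameter to tune and repeat the steps above.

Tuning one parameter at a time like this usually reaches only a local optimum. Because the training set is large and training is expensive, I did not follow up with fine tuning.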
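The five steps amount to a coordinate-wise search. A minimal, library-free sketch of that loop is shown below; `coordinate_search` and `score_fn` are hypothetical names, and in practice `score_fn` would be a cross-validated score such as the one GridSearchCV reports.

```python
def coordinate_search(score_fn, initial, grids):
    """Tune one parameter at a time, keeping the best value before moving on.

    score_fn: maps a parameter dict to a score (higher is better)
    initial:  starting parameter dict
    grids:    {parameter name: iterable of candidate values}, tuned in this order
    """
    best = dict(initial)
    best_score = score_fn(best)
    for name, values in grids.items():
        for value in values:
            trial = dict(best, **{name: value})  # change only the current parameter
            score = score_fn(trial)
            if score > best_score:
                best, best_score = trial, score
    return best, best_score

# Toy score with its maximum at a=3, b=2 (a stand-in for a cross-validated AUC).
def toy_score(params):
    return -(params['a'] - 3) ** 2 - (params['b'] - 2) ** 2

print(coordinate_search(toy_score, {'a': 0, 'b': 0}, {'a': range(6), 'b': range(5)}))
```

The toy score is separable, so the one-pass search finds the global optimum; with interacting parameters the result depends on the tuning order, which is why the procedure is described above as reaching only a local optimum.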

# Tuning: best n_estimators (the author's run selected 600)
# scoring='roc_auc' matches the competition metric; without it GridSearchCV
# falls back to the classifier's default score, which is accuracy
CV_params={'n_estimators':np.linspace(100, 1000, 10, dtype=int)}
gs=GridSearchCV(model,CV_params,scoring='roc_auc',verbose=2,refit=True,cv=5,n_jobs=-1)
gs.fit(X_train_select,y_train)
print('Best parameter value:', gs.best_params_)
print('Best model score:', gs.best_score_)

# Tuning: best max_depth (the author's run selected 4)
CV_params={'max_depth':np.linspace(1,6,6,dtype=int)}
gs=GridSearchCV(model,CV_params,scoring='roc_auc',verbose=2,refit=True,cv=3,n_jobs=-1)
gs.fit(X_train_select,y_train)
print('Best parameter value:', gs.best_params_)
print('Best model score:', gs.best_score_)

# Tuning: best subsample (the author's run selected 0.3)
# subsample must be greater than 0, so the grid starts at 0.1
CV_params={'subsample':np.linspace(0.1,1.0,10)}
gs=GridSearchCV(model,CV_params,scoring='roc_auc',verbose=2,refit=True,cv=3,n_jobs=-1)
gs.fit(X_train_select,y_train)
print('Best parameter value:', gs.best_params_)
print('Best model score:', gs.best_score_)

# Tuning: best colsample_bytree (also must be greater than 0)
CV_params={'colsample_bytree':np.linspace(0.1,1.0,10)}
gs=GridSearchCV(model,CV_params,scoring='roc_auc',verbose=2,refit=True,cv=3,n_jobs=-1)
gs.fit(X_train_select,y_train)
print('Best parameter value:', gs.best_params_)
print('Best model score:', gs.best_score_)

# Tuning: best reg_lambda (the author's run selected 7)
CV_params={'reg_lambda':np.linspace(0,10,11,dtype=int)}
gs=GridSearchCV(model,CV_params,scoring='roc_auc',verbose=2,refit=True,cv=3,n_jobs=-1)
gs.fit(X_train_select,y_train)
print('Best parameter value:', gs.best_params_)
print('Best model score:', gs.best_score_)

# Tuning: best reg_alpha (the author's run selected 8)
CV_params={'reg_alpha':np.linspace(0,10,11,dtype=int)}
gs=GridSearchCV(model,CV_params,scoring='roc_auc',verbose=2,refit=True,cv=3,n_jobs=-1)
gs.fit(X_train_select,y_train)
print('Best parameter value:', gs.best_params_)
print('Best model score:', gs.best_score_)

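- Scoring the training-set predictions with roc_auc_score gives about 0.88. I did not build a scorer that matches the competition's per-coupon definition, so this number differs from the submitted score. It is also measured on the data the model was trained on, so it is optimistic.

# Predict the training set with the XGBClassifier
prediction_train_proba=model.predict_proba(X_train_select)

# Probability of the positive class for each training row
prediction_train_proba=prediction_train_proba[:,1]

# AUC on the training set
print('train_AUC_xgbclassified',roc_auc_score(y_train,prediction_train_proba))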

Predicting the test set

# Load the test set and apply the same initial cleaning
df_t=get_data(os.path.join(path,'ccf_offline_stage1_test_revised.csv'))
df_t=clean_df(df_t)

# Current-period features of the test set
df_t_0701_0731_c=GetFeatureByothers(df_t)

# Merge the test set's current-period features with its historical features
df_test=GetHistoryFeature(df_0315_0630_c6,df_t_0701_0731_c,columns_c1_c0,columns_c2_c1,columns_c3_c2,columns_c4_c3\
    ,columns_c5_c4,columns_c6_c5)

# Fill missing values in the test set
df_test_fillna=df_test.fillna(-999)

# One-hot encode the categorical feature in the test set
df_test_dummy=dummy(df_test_fillna)
# Align to the training columns; a dummy column absent from the test set is filled with 0
X_test=df_test_dummy.reindex(columns=X_train_select.columns.values.tolist(),fill_value=0)

# Predicted redemption probability for the test set
prediction_test=model.predict_proba(X_test)[:,1]

# Save the predictions to a file
def save_prediction(df_test,prediction_test):
    df_test.loc[:,'Probability']=prediction_test
    df_test_final=df_test[['User_id','Coupon_id','Date_received','Probability']]
    df_test_final.to_csv('submission.csv',index=False,header=False)

save_prediction(df_test,prediction_test)

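Summary

Coupon usage prediction is a very practical project. The experience transfers to predicting the effect of e-commerce marketing and of game operations campaigns (especially top-up campaigns built around item promotions), where it can help lower marketing costs and raise user conversion.

I also think the approach can be improved in the following ways to get better predictions, although I did not explore them for lack of time:

- Missing value handling:

Missing values arise during feature extraction for two main reasons: (1) a returning user never performed the relevant action; (2) a new user's behavior cannot be observed from the historical data.

Before:

Missing values from both causes are filled with -999, so both cases are flagged as the same kind of user trait.

After:

In the first case, a returning user not performing an action should be treated as a habit or trait, and either 0 or -999 works as a marker. In the second case, the value should be filled from the distribution of returning users on that feature (mean, median, mode, and so on), or predicted from the other features with an algorithm such as RF.

- Feature selection:

Before:

Embedded method: train an XGBoost model, obtain a weight for each feature, rank the features by weight, and keep those above a threshold of 0.01.

After:

(1) Improve the embedded method by training several algorithms in parallel and keeping the features that rank high in all of them; also vary the threshold and observe the effect on training.

(2) Combine the embedded and filter methods: on top of the embedded selection, score each feature by its variance or its correlation, and drop the features below or above a threshold, in order to improve predictions on the test set.

- Model ensembling:

Ensembling usually improves the final prediction, because different learning algorithms work differently and perform differently on different problems. In the same way, a well-reasoned judgment by many people tends to be right more often than the judgment of a few.

Before:

A single model (XGBoost) predicts the test set.

After:

Introduce algorithms such as RF and LightGBM, train them in parallel, assign each a weight (for example, a higher weight for a higher validation score), and use the weighted average of the models' predictions as the final value.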
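The weighted average described for model ensembling can be sketched in a few lines of plain Python. The function name `blend` is hypothetical, and the weights in the example are made up for illustration; they are not results from this project.

```python
def blend(predictions, weights):
    """Weighted average of several models' probability predictions.

    predictions: list of equal-length lists, one list of probabilities per model
    weights:     one non-negative weight per model (for example, validation scores)
    """
    if len(predictions) != len(weights):
        raise ValueError('need exactly one weight per model')
    total = sum(weights)
    n_rows = len(predictions[0])
    return [
        sum(w * preds[i] for preds, w in zip(predictions, weights)) / total
        for i in range(n_rows)
    ]

# Two hypothetical models; the second is trusted three times as much as the first.
print(blend([[0.2, 0.8], [0.4, 0.6]], [1, 3]))
```

Because AUC depends only on the ranking of the scores, some practitioners average ranks instead of raw probabilities when the models' outputs are on different scales.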

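Note on the historical online features: they ranked near the bottom of the feature importance ranking. The main reason is that some users may not shop online, so joining the online features with the offline ones produced a large share of missing values (over 85%). Their contribution to the prediction was therefore low, and they were removed from the feature set.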