【Transformer】7、TNT: Transformer iN Transformer

文章目录

-

- 一、背景

- 二、动机

- 三、方法

-

- 3.1 Transformer in Transformer

- 3.2 Network Architecture

- 四、效果

- 五、代码

论文链接: Transformer in Transformer

代码链接:https://github.com/huawei-noah/CV-Backbones/tree/master/tnt_pytorch

一、背景

Transformer 是一种主要基于注意力机制的网络结构,能提取输入数据的特征。计算机视觉中的 Transformer 首先将输入图像均分为多个图像块,然后提取其特征和关系。因为图像数据有很多的细节纹理和颜色等信息,所以目前方法所分的图像块的粒度还不够细,以至于难以挖掘不同尺度和位置特征。

二、动机

计算机视觉使用的是图像作为输入,所以输入和输出在语义上有很大的 gap,所以 ViT 将图像分成了几块组成一个 sequence 作为输入,然后使用 attention 计算不同 patch 之间的关系,来生成图像特征表达。

虽然 ViT 及其变体能够较好的提取图像特征来实现识别的任务,但数据集中的图像是很多样的,虽然将图像分块能够很好的找到不同 patch 之间的关系和相似性,但不同图像块内的小图像块也存在很高的相似性。

作者受此启发,提出了一个更加精细的图像划分方法,来生成 visual sequence 并且提升效果。

三、方法

方法:

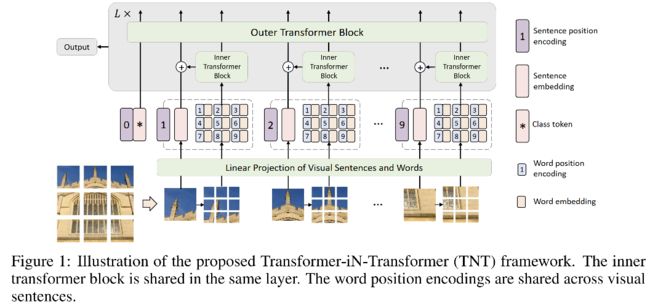

- 先将图像进行 patch 划分(sentence),每个 patch 加上位置编码,同样使用一个 cls_token

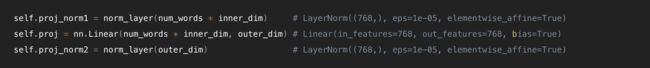

- 在每个 patch 内部,再次进行 words 的划分,给每个 words 也加上位置编码,patch 内部不需要使用 cls_token,得到了 patch 内部 words 的特征后,进行映射 LN → Linear 映射 → LN

本文中,作者指出,每个 patch 内部的 attention 对网络效果的影响是非常重要的,所以提出了一种新的结构 TNT。

- 将 patch(16x16)看做 “visual sentence”

- 将 patch 再切分成更细的 patch(4x4),看做 “visual words”

- 会计算 sentence 中每个 words 和其他 words 的 attention

- 然后把 sentences 和 words 的特征进行聚合来提高网络特征的表达能力

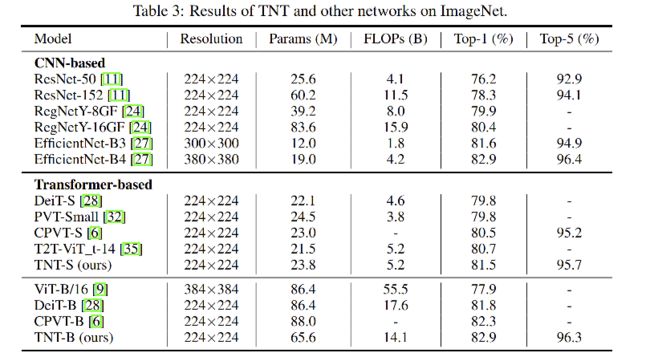

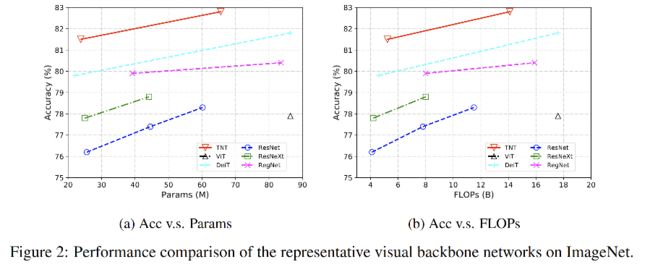

- TNT 在 ImageNet 上达到了 81.5% 的 top-1 acc,比当前 SOTA 提升了 1.7%

3.1 Transformer in Transformer

1、输入 2D 图像,切分成 n n n 个 patches

![]()

2、将每个 patch 切分成 m m m 个 sub-patches, x i , j x^{i,j} xi,j 是第 i 个 visual sentence 中的第 j 个word

3、对 visual sentence 和 visual word 分别进行处理

inner transformer block 用来对 word 之间建模,outer transformer block 捕捉 sentence 之间的关系。

通过对 TNT block 的 L 次堆叠,得到 transformer-in-transformer network。类似于 ViT,classification token 在这里也作为图像整体特征的表达。

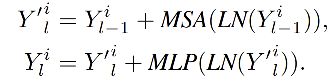

① 对于 word embedding

使用线性映射将 visual word 进行 embedding,得到如下的 Y Y Y

使用 transformer 来抽取不同 words 之间的关系,L 是 block 的总数,第一个 block Y 0 i Y_0^i Y0i 的输入是上面的 Y i Y^i Yi。

经过 transformer 之后,图像中的所有 word embedding 可以表示为 Y l = [ Y l 1 , Y l 2 , . . . , Y l n ] Y_l=[Y_l^1, Y_l^2, ..., Y_l^n] Yl=[Yl1,Yl2,...,Yln],这也可以看成一个内部 transformer block,叫做 T i n T_{in} Tin,这个过程给每个 sentence 内部的所有 word 两两之间建立了关系特征。

举例来说,就是在一个人脸图分割的patch中,一个眼睛对应的 word 和另外一个眼睛对应的 word 关联更高,而与前额关联更少一些。

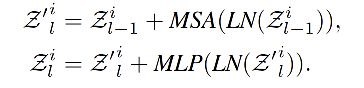

② 对于 sentence embedding

作者建立了一个 sentence embedding memories 来存储 sentence 层面的序列表达, Z c l a s s Z_{class} Zclass 是 class token,用来分类:

在每一层 sentence embedding,会将 word embedding 的序列结果经过线性变换后加到 sentence embedding上, W W W 和 b b b 分别为权重和偏置

之后,使用标准的 transformer 来进行特征提取,即使用 outer transformer T o u t T_{out} Tout 来对不同的 sentence embedding 进行关系建模。

总之,TNT block 的输入和输出都包括了 word embedding 和 sentence embedding,如图1b所示,所以 TNT 总体表达如下:

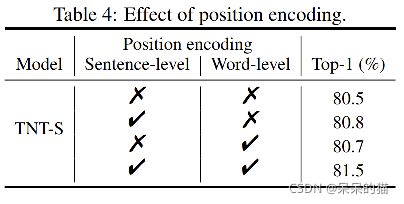

3、position encoding

对于 word 和 sentence,分别都要使用位置编码来保持空间信息。作者在这里使用的是可学习的一维位置编码。

对于 sentence,每个 sentence 都被分配了一个位置编码, E s e n t e n c e ∈ R ( n + 1 ) × d E_{sentence}\in R^{(n+1)\times d} Esentence∈R(n+1)×d 是 sentence 位置编码。

对于sentence 中的 word,也给每个都加上了位置编码, E w o r d E_{word} Eword 是 word 位置编码,所有 sentence 内的 word 编码是共享的。

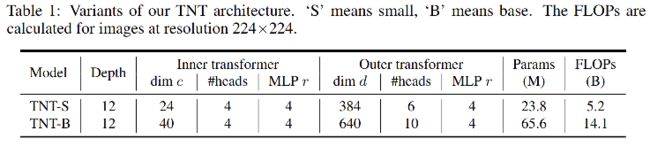

3.2 Network Architecture

- patch size:16x16

- sun-patch size:m=4x4=16

- TNT-S(Small, 23.8M) 和 TNT-B(Base, 65.6M) 的结构

四、效果

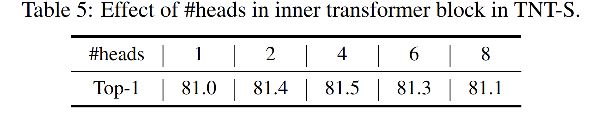

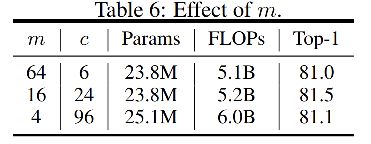

消融实验:

可视化:

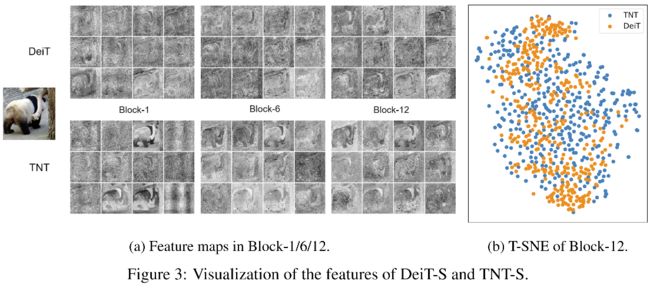

1、特征图可视化

① DeiT 和 TNT 学习到的特征图可视化如下,第 1、6、12 个block 的特征图如图3a所示,TNT 的位置信息保存的更好。

图3b 使用 t-SNE 可视化了第 12 个 block 的所有 384 个 feature map,可以看出来 TNT 的特征图更加多样,保存了更丰富的信息,这可以归功于 inner transformer 对局部特征的建模能力。

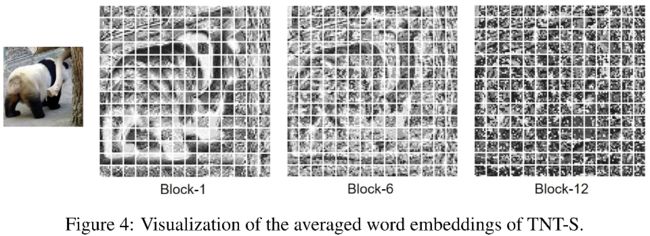

② 除了 patch-level 外,作者还可视化了 pixel-level 的 embedding 如图4,对于每个 patch,作者根据空间位置进行了 reshape,然后把所有通道进行了平均。平均后的特征图大小为 14x14,可以看出在浅层局部特征保存的较好,深层特征越来越抽象。

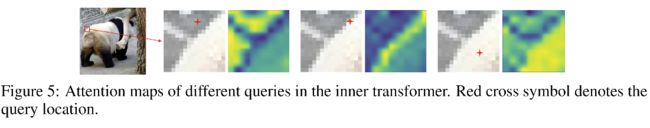

2、Attention map 的可视化

在 TNT block 里边有两个不同的 self-attention,inner self-attention 和 outer self-attention,图5展示了 inner transformer 的不同 query 的 attention map。

① visual word 可视化

对于一个给定的 visual word,和该 visual word 想外观越相似的 word 的 attention value 越高,这也表示他们的特征将和 query 进行更密切的交互,但 ViT 和 DeiT 就没有这种特性。

图6展示了某个 patch 对其他所有 patch 的 attention map,随着层的加深,更多的 patch 会有响应。这是因为在越深的层,patch 之间的信息会更好的被关联起来。

在 block-12,TNT 能够关注到有用的 patch,而 DeiT 仍然会关注到和 panda 无关的 patch 上去。

3、class token 和 patch 之间的 attention

图7可视化了 class token 和图像中的所有 patches 之间的关系,可以看出输出特征会更加注意到和目标位置关联的patch

五、代码

import torch

from tnt import TNT

tnt = TNT()

tnt.eval()

inputs = torch.randn(1, 3, 224, 224)

logits = tnt(inputs)

tnt.py 代码如下:

import torch

import torch.nn as nn

from functools import partial

import math

from timm.data import IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD

from timm.models.helpers import load_pretrained

from timm.models.layers import DropPath, to_2tuple, trunc_normal_

from timm.models.resnet import resnet26d, resnet50d

from timm.models.registry import register_model

def _cfg(url='', **kwargs):

return {

'url': url,

'num_classes': 1000, 'input_size': (3, 224, 224), 'pool_size': None,

'crop_pct': .9, 'interpolation': 'bicubic',

'mean': IMAGENET_DEFAULT_MEAN, 'std': IMAGENET_DEFAULT_STD,

'first_conv': 'patch_embed.proj', 'classifier': 'head',

**kwargs

}

default_cfgs = {

'tnt_s_patch16_224': _cfg(

mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5),

),

'tnt_b_patch16_224': _cfg(

mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5),

),

}

def make_divisible(v, divisor=8, min_value=None):

min_value = min_value or divisor

new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)

# Make sure that round down does not go down by more than 10%.

if new_v < 0.9 * v:

new_v += divisor

return new_v

class Mlp(nn.Module):

def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.):

super().__init__()

out_features = out_features or in_features

hidden_features = hidden_features or in_features

self.fc1 = nn.Linear(in_features, hidden_features)

self.act = act_layer()

self.fc2 = nn.Linear(hidden_features, out_features)

self.drop = nn.Dropout(drop)

def forward(self, x):

x = self.fc1(x)

x = self.act(x)

x = self.drop(x)

x = self.fc2(x)

x = self.drop(x)

return x

class SE(nn.Module):

def __init__(self, dim, hidden_ratio=None):

super().__init__()

hidden_ratio = hidden_ratio or 1

self.dim = dim

hidden_dim = int(dim * hidden_ratio)

self.fc = nn.Sequential(

nn.LayerNorm(dim),

nn.Linear(dim, hidden_dim),

nn.ReLU(inplace=True),

nn.Linear(hidden_dim, dim),

nn.Tanh()

)

def forward(self, x):

a = x.mean(dim=1, keepdim=True) # B, 1, C

a = self.fc(a)

x = a * x

return x

class Attention(nn.Module):

def __init__(self, dim, hidden_dim, num_heads=8, qkv_bias=False, qk_scale=None, attn_drop=0., proj_drop=0.):

super().__init__()

self.hidden_dim = hidden_dim

self.num_heads = num_heads

head_dim = hidden_dim // num_heads

self.head_dim = head_dim

# NOTE scale factor was wrong in my original version, can set manually to be compat with prev weights

self.scale = qk_scale or head_dim ** -0.5

self.qk = nn.Linear(dim, hidden_dim * 2, bias=qkv_bias)

self.v = nn.Linear(dim, dim, bias=qkv_bias)

self.attn_drop = nn.Dropout(attn_drop, inplace=True)

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(proj_drop, inplace=True)

def forward(self, x):

B, N, C = x.shape

qk = self.qk(x).reshape(B, N, 2, self.num_heads, self.head_dim).permute(2, 0, 3, 1, 4)

q, k = qk[0], qk[1] # make torchscript happy (cannot use tensor as tuple)

v = self.v(x).reshape(B, N, self.num_heads, -1).permute(0, 2, 1, 3)

attn = (q @ k.transpose(-2, -1)) * self.scale

attn = attn.softmax(dim=-1)

attn = self.attn_drop(attn)

x = (attn @ v).transpose(1, 2).reshape(B, N, -1)

x = self.proj(x)

x = self.proj_drop(x)

return x

class Block(nn.Module):

""" TNT Block

"""

def __init__(self, outer_dim, inner_dim, outer_num_heads, inner_num_heads, num_words, mlp_ratio=4.,

qkv_bias=False, qk_scale=None, drop=0., attn_drop=0., drop_path=0., act_layer=nn.GELU,

norm_layer=nn.LayerNorm, se=0):

super().__init__()

self.has_inner = inner_dim > 0

if self.has_inner:

# Inner

self.inner_norm1 = norm_layer(inner_dim)

self.inner_attn = Attention(

inner_dim, inner_dim, num_heads=inner_num_heads, qkv_bias=qkv_bias,

qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

self.inner_norm2 = norm_layer(inner_dim)

self.inner_mlp = Mlp(in_features=inner_dim, hidden_features=int(inner_dim * mlp_ratio),

out_features=inner_dim, act_layer=act_layer, drop=drop)

self.proj_norm1 = norm_layer(num_words * inner_dim)

self.proj = nn.Linear(num_words * inner_dim, outer_dim, bias=False)

self.proj_norm2 = norm_layer(outer_dim)

# Outer

self.outer_norm1 = norm_layer(outer_dim)

self.outer_attn = Attention(

outer_dim, outer_dim, num_heads=outer_num_heads, qkv_bias=qkv_bias,

qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

self.outer_norm2 = norm_layer(outer_dim)

self.outer_mlp = Mlp(in_features=outer_dim, hidden_features=int(outer_dim * mlp_ratio),

out_features=outer_dim, act_layer=act_layer, drop=drop)

# SE

self.se = se

self.se_layer = None

if self.se > 0:

self.se_layer = SE(outer_dim, 0.25)

def forward(self, inner_tokens, outer_tokens):

if self.has_inner:

inner_tokens = inner_tokens + self.drop_path(self.inner_attn(self.inner_norm1(inner_tokens))) # B*N, k*k, c

inner_tokens = inner_tokens + self.drop_path(self.inner_mlp(self.inner_norm2(inner_tokens))) # B*N, k*k, c

B, N, C = outer_tokens.size()

outer_tokens[:,1:] = outer_tokens[:,1:] + self.proj_norm2(self.proj(self.proj_norm1(inner_tokens.reshape(B, N-1, -1)))) # B, N, C

if self.se > 0:

outer_tokens = outer_tokens + self.drop_path(self.outer_attn(self.outer_norm1(outer_tokens)))

tmp_ = self.outer_mlp(self.outer_norm2(outer_tokens))

outer_tokens = outer_tokens + self.drop_path(tmp_ + self.se_layer(tmp_))

else:

outer_tokens = outer_tokens + self.drop_path(self.outer_attn(self.outer_norm1(outer_tokens)))

outer_tokens = outer_tokens + self.drop_path(self.outer_mlp(self.outer_norm2(outer_tokens)))

return inner_tokens, outer_tokens

class PatchEmbed(nn.Module):

""" Image to Visual Word Embedding

"""

def __init__(self, img_size=224, patch_size=16, in_chans=3, outer_dim=768, inner_dim=24, inner_stride=4):

super().__init__()

img_size = to_2tuple(img_size)

patch_size = to_2tuple(patch_size)

num_patches = (img_size[1] // patch_size[1]) * (img_size[0] // patch_size[0])

self.img_size = img_size

self.patch_size = patch_size

self.num_patches = num_patches

self.inner_dim = inner_dim

self.num_words = math.ceil(patch_size[0] / inner_stride) * math.ceil(patch_size[1] / inner_stride)

self.unfold = nn.Unfold(kernel_size=patch_size, stride=patch_size)

self.proj = nn.Conv2d(in_chans, inner_dim, kernel_size=7, padding=3, stride=inner_stride)

def forward(self, x):

B, C, H, W = x.shape

# FIXME look at relaxing size constraints

assert H == self.img_size[0] and W == self.img_size[1], \

f"Input image size ({H}*{W}) doesn't match model ({self.img_size[0]}*{self.img_size[1]})."

# self.unfold = Unfold(kernel_size=(16, 16), dilation=1, padding=0, stride=(16, 16))

x = self.unfold(x) # B, Ck2, N [1, 768, 196] # 输出行数为卷积核横纵大小相乘,每列为每次卷积核卷过的元素

x = x.transpose(1, 2).reshape(B * self.num_patches, C, *self.patch_size) # B*N, C, 16, 16 [196, 3, 16, 16]

x = self.proj(x) # [196, 48, 4, 4]

x = x.reshape(B * self.num_patches, self.inner_dim, -1).transpose(1, 2) # [196, 16, 48]

return x

class TNT(nn.Module):

""" TNT (Transformer in Transformer) for computer vision

"""

def __init__(self, img_size=224, patch_size=16, in_chans=3, num_classes=1000, outer_dim=768, inner_dim=48,

depth=12, outer_num_heads=12, inner_num_heads=4, mlp_ratio=4., qkv_bias=False, qk_scale=None,

drop_rate=0., attn_drop_rate=0., drop_path_rate=0., norm_layer=nn.LayerNorm, inner_stride=4, se=0):

super().__init__()

self.num_classes = num_classes # 1000

self.num_features = self.outer_dim = outer_dim # num_features for consistency with other models

self.patch_embed = PatchEmbed(

img_size=img_size, patch_size=patch_size, in_chans=in_chans, outer_dim=outer_dim,

inner_dim=inner_dim, inner_stride=inner_stride)

self.num_patches = num_patches = self.patch_embed.num_patches # 196

num_words = self.patch_embed.num_words # 16

self.proj_norm1 = norm_layer(num_words * inner_dim) # LayerNorm((768,), eps=1e-05, elementwise_affine=True)

self.proj = nn.Linear(num_words * inner_dim, outer_dim) # Linear(in_features=768, out_features=768, bias=True)

self.proj_norm2 = norm_layer(outer_dim) # LayerNorm((768,), eps=1e-05, elementwise_affine=True)

self.cls_token = nn.Parameter(torch.zeros(1, 1, outer_dim)) # [1, 1, 768]

self.outer_tokens = nn.Parameter(torch.zeros(1, num_patches, outer_dim), requires_grad=False) # [1, 196, 768]

self.outer_pos = nn.Parameter(torch.zeros(1, num_patches + 1, outer_dim)) # [1, 196, 768]

self.inner_pos = nn.Parameter(torch.zeros(1, num_words, inner_dim)) # [1, 16, 48]

self.pos_drop = nn.Dropout(p=drop_rate)

dpr = [x.item() for x in torch.linspace(0, drop_path_rate, depth)] # stochastic depth decay rule

vanilla_idxs = []

blocks = []

for i in range(depth):

if i in vanilla_idxs:

blocks.append(Block(

outer_dim=outer_dim, inner_dim=-1, outer_num_heads=outer_num_heads, inner_num_heads=inner_num_heads,

num_words=num_words, mlp_ratio=mlp_ratio, qkv_bias=qkv_bias, qk_scale=qk_scale, drop=drop_rate,

attn_drop=attn_drop_rate, drop_path=dpr[i], norm_layer=norm_layer, se=se))

else:

blocks.append(Block(

outer_dim=outer_dim, inner_dim=inner_dim, outer_num_heads=outer_num_heads, inner_num_heads=inner_num_heads,

num_words=num_words, mlp_ratio=mlp_ratio, qkv_bias=qkv_bias, qk_scale=qk_scale, drop=drop_rate,

attn_drop=attn_drop_rate, drop_path=dpr[i], norm_layer=norm_layer, se=se))

self.blocks = nn.ModuleList(blocks)

self.norm = norm_layer(outer_dim)

# NOTE as per official impl, we could have a pre-logits representation dense layer + tanh here

#self.repr = nn.Linear(outer_dim, representation_size)

#self.repr_act = nn.Tanh()

# Classifier head

self.head = nn.Linear(outer_dim, num_classes) if num_classes > 0 else nn.Identity() # Linear(in_features=768, out_features=1000, bias=True)

trunc_normal_(self.cls_token, std=.02)

trunc_normal_(self.outer_pos, std=.02)

trunc_normal_(self.inner_pos, std=.02)

self.apply(self._init_weights)

# import pdb; pdb.set_trace()

def _init_weights(self, m):

if isinstance(m, nn.Linear):

trunc_normal_(m.weight, std=.02)

if isinstance(m, nn.Linear) and m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.LayerNorm):

nn.init.constant_(m.bias, 0)

nn.init.constant_(m.weight, 1.0)

@torch.jit.ignore

def no_weight_decay(self):

return {'outer_pos', 'inner_pos', 'cls_token'}

def get_classifier(self):

return self.head

def reset_classifier(self, num_classes, global_pool=''):

self.num_classes = num_classes

self.head = nn.Linear(self.outer_dim, num_classes) if num_classes > 0 else nn.Identity()

def forward_features(self, x):

# import pdb; pdb.set_trace()

# x.shape=[1, 3, 224, 224]

B = x.shape[0] # 1

inner_tokens = self.patch_embed(x) + self.inner_pos # self.patch_embed(x) = [196, 16, 48], self.inner_pos=[1, 16, 48]

outer_tokens = self.proj_norm2(self.proj(self.proj_norm1(inner_tokens.reshape(B, self.num_patches, -1)))) # [1, 196, 768]

outer_tokens = torch.cat((self.cls_token.expand(B, -1, -1), outer_tokens), dim=1) # [1, 197, 768]

outer_tokens = outer_tokens + self.outer_pos # [1, 197, 768]

outer_tokens = self.pos_drop(outer_tokens) # [1, 197, 768]

for blk in self.blocks:

inner_tokens, outer_tokens = blk(inner_tokens, outer_tokens) # inner_tokens.shape=[196, 16, 48] outer_tokens.shape=[1, 197, 768]

outer_tokens = self.norm(outer_tokens)

return outer_tokens[:, 0] # [1, 768]

def forward(self, x):

x = self.forward_features(x) # [1, 768]

x = self.head(x)

return x

def _conv_filter(state_dict, patch_size=16):

""" convert patch embedding weight from manual patchify + linear proj to conv"""

out_dict = {}

for k, v in state_dict.items():

if 'patch_embed.proj.weight' in k:

v = v.reshape((v.shape[0], 3, patch_size, patch_size))

out_dict[k] = v

return out_dict

@register_model

def tnt_s_patch16_224(pretrained=False, **kwargs):

patch_size = 16

inner_stride = 4

outer_dim = 384

inner_dim = 24

outer_num_heads = 6

inner_num_heads = 4

outer_dim = make_divisible(outer_dim, outer_num_heads)

inner_dim = make_divisible(inner_dim, inner_num_heads)

model = TNT(img_size=224, patch_size=patch_size, outer_dim=outer_dim, inner_dim=inner_dim, depth=12,

outer_num_heads=outer_num_heads, inner_num_heads=inner_num_heads, qkv_bias=False,

inner_stride=inner_stride, **kwargs)

model.default_cfg = default_cfgs['tnt_s_patch16_224']

if pretrained:

load_pretrained(

model, num_classes=model.num_classes, in_chans=kwargs.get('in_chans', 3), filter_fn=_conv_filter)

return model

@register_model

def tnt_b_patch16_224(pretrained=False, **kwargs):

patch_size = 16

inner_stride = 4

outer_dim = 640

inner_dim = 40

outer_num_heads = 10

inner_num_heads = 4

outer_dim = make_divisible(outer_dim, outer_num_heads)

inner_dim = make_divisible(inner_dim, inner_num_heads)

model = TNT(img_size=224, patch_size=patch_size, outer_dim=outer_dim, inner_dim=inner_dim, depth=12,

outer_num_heads=outer_num_heads, inner_num_heads=inner_num_heads, qkv_bias=False,

inner_stride=inner_stride, **kwargs)

model.default_cfg = default_cfgs['tnt_b_patch16_224']

if pretrained:

load_pretrained(

model, num_classes=model.num_classes, in_chans=kwargs.get('in_chans', 3), filter_fn=_conv_filter)

return model

self.blocks

ModuleList(

(0): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(1): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(2): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(3): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(4): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(5): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(6): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(7): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(8): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(9): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(10): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

(11): Block(

(inner_norm1): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_attn): Attention(

(qk): Linear(in_features=48, out_features=96, bias=False)

(v): Linear(in_features=48, out_features=48, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=48, out_features=48, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(inner_norm2): LayerNorm((48,), eps=1e-05, elementwise_affine=True)

(inner_mlp): Mlp(

(fc1): Linear(in_features=48, out_features=192, bias=True)

(act): GELU()

(fc2): Linear(in_features=192, out_features=48, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

(proj_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(proj): Linear(in_features=768, out_features=768, bias=False)

(proj_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_norm1): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_attn): Attention(

(qk): Linear(in_features=768, out_features=1536, bias=False)

(v): Linear(in_features=768, out_features=768, bias=False)

(attn_drop): Dropout(p=0.0, inplace=True)

(proj): Linear(in_features=768, out_features=768, bias=True)

(proj_drop): Dropout(p=0.0, inplace=True)

)

(drop_path): Identity()

(outer_norm2): LayerNorm((768,), eps=1e-05, elementwise_affine=True)

(outer_mlp): Mlp(

(fc1): Linear(in_features=768, out_features=3072, bias=True)

(act): GELU()

(fc2): Linear(in_features=3072, out_features=768, bias=True)

(drop): Dropout(p=0.0, inplace=False)

)

)

)