PyTorch: Object Detection Networks - FPN

PyTorch: Object Detection - Feature Pyramid Network (FPN)

Copyright: Jingmin Wei, Pattern Recognition and Intelligent System, School of Artificial and Intelligence, Huazhong University of Science and Technology


Contents

- Reference
- FPN Network Structure
- Code Implementation

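This tutorial is not for commercial use; it is intended only for study, reference, and discussion. To repost it, please contact the author.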

Reference

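- Feature Pyramid Networks for Object Detection (the FPN paper)

- 《深度学习之 PyTorch 物体检测实战》 (a book on object detection in practice with PyTorch)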

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

FPN Network Structure

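To obtain stronger semantics, traditional object detectors usually run all subsequent operations on only the last feature map of a deep convolutional network. The downsampling rate of that layer (the factor by which the image is shrunk) is typically large, e.g. $16$ or $32$, so small objects retain little useful information on the feature map and detection performance on them drops sharply. This is known as the multi-scale problem.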
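To make the effect of the downsampling rate concrete, the short calculation below estimates how many feature-map cells an object spans at different strides. The object sizes and the helper name `cells_covered` are illustrative assumptions, not values from the text.

```python
# Hypothetical helper: approximate side length (in feature-map cells)
# of a square object after downsampling by `stride`.
def cells_covered(object_side_px: int, stride: int) -> float:
    return object_side_px / stride


for side in (32, 128):          # illustrative object sizes in pixels
    for stride in (4, 16, 32):  # typical backbone downsampling rates
        cells = cells_covered(side, stride)
        print(f"{side}px object at stride {stride}: {cells:.1f} cells per side")
```

At stride $32$ a $32$-pixel object collapses to a single cell, which leaves very little signal for a detection head to work with.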

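The key to the multi-scale problem is extracting features at multiple scales. The traditional approach is the image pyramid: the input image is resized to several scales, and each scale produces features at a corresponding scale. The method is simple and effective and has been widely used in competitions such as COCO, but it is very time-consuming and computationally expensive.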
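As a rough sketch of the image-pyramid idea, the snippet below rescales one input to several sizes with `F.interpolate`. The function name `image_pyramid`, the scale factors, and the choice of bilinear resizing are assumptions made for illustration.

```python
import torch
import torch.nn.functional as F


def image_pyramid(image: torch.Tensor, scales=(1.0, 0.5, 0.25)):
    """Return rescaled copies of `image`, which has shape (N, C, H, W)."""
    levels = []
    for s in scales:
        if s == 1.0:
            levels.append(image)
        else:
            # Bilinear resizing is an illustrative choice, not prescribed by the text.
            levels.append(F.interpolate(image, scale_factor=s,
                                        mode="bilinear", align_corners=False))
    return levels


image = torch.randn(1, 3, 224, 224)
pyramid = image_pyramid(image)
for level in pyramid:
    print(tuple(level.shape))
```

Each level would then have to pass through the whole detector separately, which is where the extra time and computation come from.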

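As the torch.nn chapter showed, the layers of a convolutional network differ in spatial size and in semantic content, so the network itself already resembles a pyramid. FPN (Feature Pyramid Network), proposed in $2017$, fuses features from different layers and considerably improves multi-scale detection.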

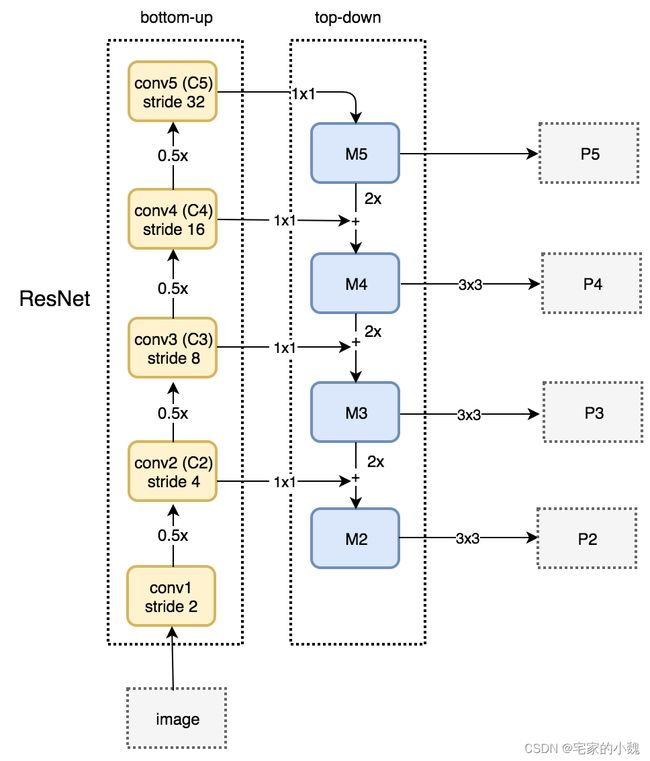

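The figure shows the overall FPN architecture, which consists of $4$ parts: the bottom-up pathway, the top-down pathway, lateral connections, and convolutional fusion.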

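- Bottom-up: the leftmost column is an ordinary convolutional network, ResNet by default, used to extract semantic information. $C_1$ denotes the first convolution and pooling layers of ResNet, while $C_2$ to $C_5$ are the successive ResNet stages. Each stage contains several Bottleneck blocks; feature maps have the same size within a stage and shrink from one stage to the next.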

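- Top-down: first apply a $1\times1$ convolution to $C_5$ to reduce its channel count, giving $M_5$. Then upsample step by step to obtain $M_4, M_3, M_2$, so that each has the same height and width as $C_4, C_3, C_2$ and can be added element-wise in the next step. The upsampling is $2\times$ nearest-neighbor, i.e. neighboring elements are simply copied rather than linearly interpolated.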

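- Lateral connection: fuses the upsampled high-level semantic features with the fine localization detail of the shallow layers. After upsampling, the high-level features have the same height and width as the corresponding shallow features, and their channel count is fixed at $256$. The bottom-up features $C_2$ to $C_4$ therefore go through a $1\times1$ convolution that brings them to $256$ channels, and the two are added element-wise to give $M_4, M_3, M_2$. $C_1$ is left out of the lateral connections because its feature map is large and carries little semantic information.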

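- Convolutional fusion: after the addition, a $3\times3$ convolution is applied to each of $M_2$ to $M_4$ to fuse the features further, while $M_5$ is left unchanged. This reduces the aliasing effect introduced by upsampling and produces the final feature maps $P_2$ to $P_5$.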
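The four parts can be traced on a single level. The sketch below performs one top-down merge from $C_5$ to $P_4$ using random stand-in tensors; the tensor shapes assume a ResNet-50 backbone on a $224\times224$ input, and the variable names are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Stand-in bottom-up features (assumed shapes for ResNet-50 at 224x224).
c5 = torch.randn(1, 2048, 7, 7)
c4 = torch.randn(1, 1024, 14, 14)

top = nn.Conv2d(2048, 256, kernel_size=1)               # C5 -> M5, channel reduction
lateral = nn.Conv2d(1024, 256, kernel_size=1)           # lateral connection for C4
smooth = nn.Conv2d(256, 256, kernel_size=3, padding=1)  # 3x3 fusion

m5 = top(c5)
# Nearest-neighbor upsampling: each value is copied into a 2x2 block.
m5_up = F.interpolate(m5, size=c4.shape[-2:], mode="nearest")
m4 = m5_up + lateral(c4)  # element-wise addition of the two 256-channel maps
p4 = smooth(m4)           # final feature map for this level

print(m5.shape, m4.shape, p4.shape)
```

Repeating the same merge with $C_3$ and $C_2$ gives the remaining levels.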

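A practical detector needs to extract RoIs (Regions of Interest) from the feature maps. Since FPN outputs $4$ feature maps, one has to decide which of them to use. FPN's answer is to pick the feature map according to RoI size: large RoIs are extracted from deep maps such as $P_5$, and small RoIs from shallow maps such as $P_2$. See the FPN paper for the exact assignment rule.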
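For reference, the assignment rule in the FPN paper (its Eq. 1) is $k = \lfloor k_0 + \log_2(\sqrt{wh}/224) \rfloor$ with $k_0 = 4$, where $w$ and $h$ are the RoI width and height on the input image. The sketch below follows that formula; the function name `roi_to_fpn_level` and the clamping to $P_2$ through $P_5$ are assumptions added for illustration.

```python
import math


def roi_to_fpn_level(w: float, h: float, k0: int = 4,
                     k_min: int = 2, k_max: int = 5) -> int:
    """Map an RoI of width w and height h (input-image pixels) to a level k."""
    k = math.floor(k0 + math.log2(math.sqrt(w * h) / 224))
    # Clamp to the levels that actually exist (assumed here: P2..P5).
    return max(k_min, min(k_max, k))


for side in (32, 112, 224, 448):
    print(f"{side}x{side} RoI -> P{roi_to_fpn_level(side, side)}")
```

A $224\times224$ RoI maps to $P_4$, and each halving of the side length moves the RoI one level shallower until the clamp takes effect.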

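FPN passes deep semantic information down to the lower levels to enrich the semantics of the shallow features, yielding features that are both high-resolution and semantically strong. It performs very well in small-object detection, instance segmentation, and related tasks.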

Code Implementation

First, implement the residual block:

    # ResNet Bottleneck block
    class Bottleneck(nn.Module):
        expansion = 4  # class attribute, not an instance attribute

        def __init__(self, in_channels, channels, stride=1, downsample=None):
            super(Bottleneck, self).__init__()
            # The stacked layers are 3 convolutions, each followed by BatchNorm
            self.bottleneck = nn.Sequential(
                nn.Conv2d(in_channels, channels, 1, stride=1, bias=False),
                nn.BatchNorm2d(channels),
                nn.ReLU(inplace=True),
                nn.Conv2d(channels, channels, 3, stride=stride, padding=1, bias=False),
                nn.BatchNorm2d(channels),
                nn.ReLU(inplace=True),
                nn.Conv2d(channels, channels * self.expansion, 1, stride=1, bias=False),
                nn.BatchNorm2d(channels * self.expansion)
            )
            self.relu = nn.ReLU(inplace=True)
            # The downsample branch is a 1x1 convolution followed by BatchNorm
            self.downsample = downsample

        def forward(self, x):
            identity = x
            output = self.bottleneck(x)
            if self.downsample is not None:
                identity = self.downsample(x)
            # Add the shortcut (identity mapping or its projection) to the stacked-layer output
            output += identity
            output = self.relu(output)
            return output
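The `downsample` argument exists because the shortcut can only be added when its shape matches the output of the stacked layers. The sketch below shows the mismatch for the first block of a stage and how a $1\times1$ projection resolves it; the concrete shapes ($256 \to 512$ channels, stride $2$) and the random stand-in for the main branch are illustrative assumptions.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 256, 56, 56)  # illustrative input to the first block of a stage

# Stand-in for the main-branch output with channels=128, expansion=4, stride=2.
main_out = torch.randn(1, 512, 28, 28)

# Projection shortcut: 1x1 convolution with the same stride, then BatchNorm.
downsample = nn.Sequential(
    nn.Conv2d(256, 512, kernel_size=1, stride=2, bias=False),
    nn.BatchNorm2d(512),
)

identity = downsample(x)
print(x.shape, "->", identity.shape)   # the shortcut now matches the main branch
out = torch.relu(main_out + identity)  # the addition is valid only after projection
```

For the remaining blocks of a stage the input and output shapes already agree, so `downsample` stays `None` and the shortcut is a plain identity.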

Next, build the FPN from the residual blocks. If this part is unfamiliar, see the ResNet tutorial.

    # FPN class; `layers` is a list giving the number of Bottleneck blocks in each ResNet stage
    class FPN(nn.Module):
        def __init__(self, layers):
            super(FPN, self).__init__()
            self.in_channels = 64
            # C1 module that processes the input
            self.conv1 = nn.Conv2d(3, 64, 7, stride=2, padding=3, bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(3, stride=2, padding=1)
            # Bottom-up stages C2, C3, C4, C5
            self.layer1 = self._make_layer(64, layers[0])      # stride=1
            self.layer2 = self._make_layer(128, layers[1], 2)  # stride=2
            self.layer3 = self._make_layer(256, layers[2], 2)  # stride=2
            self.layer4 = self._make_layer(512, layers[3], 2)  # stride=2
            # Reduce the channels of C5 to obtain M5
            self.toplayer = nn.Conv2d(2048, 256, 1, stride=1, padding=0)
            # 3x3 convolutions that fuse the merged features
            self.smooth1 = nn.Conv2d(256, 256, 3, 1, 1)
            self.smooth2 = nn.Conv2d(256, 256, 3, 1, 1)
            self.smooth3 = nn.Conv2d(256, 256, 3, 1, 1)
            # Lateral connections: bring C4, C3, C2 to the same channel count
            self.latlayer1 = nn.Conv2d(1024, 256, 1, 1, 0)
            self.latlayer2 = nn.Conv2d(512, 256, 1, 1, 0)
            self.latlayer3 = nn.Conv2d(256, 256, 1, 1, 0)

        # Protected helper that builds one of the stages C2-C5.
        # Same idea as in ResNet; note the difference between stride=1 and stride=2.
        def _make_layer(self, channels, blocks, stride=1):
            downsample = None
            # When the stride is not 1 or the channel count changes, the shortcut
            # cannot be a plain identity and needs a 1x1 projection
            if stride != 1 or self.in_channels != Bottleneck.expansion * channels:
                downsample = nn.Sequential(
                    nn.Conv2d(self.in_channels, Bottleneck.expansion * channels, 1, stride, bias=False),
                    nn.BatchNorm2d(Bottleneck.expansion * channels)
                )
            layers = []
            layers.append(Bottleneck(self.in_channels, channels, stride, downsample))
            self.in_channels = channels * Bottleneck.expansion
            for _ in range(1, blocks):
                layers.append(Bottleneck(self.in_channels, channels))
            return nn.Sequential(*layers)

        # Top-down step: upsample x to the size of y (nearest-neighbor) and add
        def _upsample_add(self, x, y):
            _, _, H, W = y.shape
            return F.interpolate(x, size=(H, W), mode='nearest') + y

        def forward(self, x):
            # Bottom-up
            c1 = self.maxpool(self.relu(self.bn1(self.conv1(x))))
            c2 = self.layer1(c1)
            c3 = self.layer2(c2)
            c4 = self.layer3(c3)
            c5 = self.layer4(c4)
            # Top-down
            m5 = self.toplayer(c5)
            m4 = self._upsample_add(m5, self.latlayer1(c4))
            m3 = self._upsample_add(m4, self.latlayer2(c3))
            m2 = self._upsample_add(m3, self.latlayer3(c2))
            # Convolutional fusion (smoothing)
            p5 = m5
            p4 = self.smooth1(m4)
            p3 = self.smooth2(m3)
            p2 = self.smooth3(m2)
            return p2, p3, p4, p5

    def FPN50():
        return FPN([3, 4, 6, 3])   # FPN50

    def FPN101():
        return FPN([3, 4, 23, 3])  # FPN101

    def FPN152():
        return FPN([3, 8, 36, 3])  # FPN152

    # Build an FPN
    net_fpn = FPN50()  # FPN50
    x = torch.randn(1, 3, 224, 224)
    output = net_fpn(x)
    # Inspect the feature-map shapes: same channel count, decreasing spatial size
    print(output[0].shape)  # p2
    print(output[1].shape)  # p3
    print(output[2].shape)  # p4
    print(output[3].shape)  # p5

torch.Size([1, 256, 56, 56])

torch.Size([1, 256, 28, 28])

torch.Size([1, 256, 14, 14])

torch.Size([1, 256, 7, 7])
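The printed sizes follow from the cumulative stride of each level: the C1 module downsamples by $4$ (a stride-2 convolution followed by a stride-2 max pool), `layer1` keeps the size, and each later stage halves it. The check below reproduces the numbers; the dictionary names are illustrative, and the exact division holds only because $224$ is a multiple of $32$.

```python
input_size = 224
# Cumulative downsampling factor of each output level.
strides = {"p2": 4, "p3": 8, "p4": 16, "p5": 32}

expected = {name: input_size // s for name, s in strides.items()}
print(expected)
```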

    from torchsummary import summary
    # input_size is (channels, height, width)
    summary(net_fpn, input_size=(3, 224, 224), device='cpu')

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 112, 112] 9,408

BatchNorm2d-2 [-1, 64, 112, 112] 128

ReLU-3 [-1, 64, 112, 112] 0

MaxPool2d-4 [-1, 64, 56, 56] 0

Conv2d-5 [-1, 64, 56, 56] 4,096

BatchNorm2d-6 [-1, 64, 56, 56] 128

ReLU-7 [-1, 64, 56, 56] 0

Conv2d-8 [-1, 64, 56, 56] 36,864

BatchNorm2d-9 [-1, 64, 56, 56] 128

ReLU-10 [-1, 64, 56, 56] 0

Conv2d-11 [-1, 256, 56, 56] 16,384

BatchNorm2d-12 [-1, 256, 56, 56] 512

Conv2d-13 [-1, 256, 56, 56] 16,384

BatchNorm2d-14 [-1, 256, 56, 56] 512

ReLU-15 [-1, 256, 56, 56] 0

Bottleneck-16 [-1, 256, 56, 56] 0

Conv2d-17 [-1, 64, 56, 56] 16,384

BatchNorm2d-18 [-1, 64, 56, 56] 128

ReLU-19 [-1, 64, 56, 56] 0

Conv2d-20 [-1, 64, 56, 56] 36,864

BatchNorm2d-21 [-1, 64, 56, 56] 128

ReLU-22 [-1, 64, 56, 56] 0

Conv2d-23 [-1, 256, 56, 56] 16,384

BatchNorm2d-24 [-1, 256, 56, 56] 512

ReLU-25 [-1, 256, 56, 56] 0

Bottleneck-26 [-1, 256, 56, 56] 0

Conv2d-27 [-1, 64, 56, 56] 16,384

BatchNorm2d-28 [-1, 64, 56, 56] 128

ReLU-29 [-1, 64, 56, 56] 0

Conv2d-30 [-1, 64, 56, 56] 36,864

BatchNorm2d-31 [-1, 64, 56, 56] 128

ReLU-32 [-1, 64, 56, 56] 0

Conv2d-33 [-1, 256, 56, 56] 16,384

BatchNorm2d-34 [-1, 256, 56, 56] 512

ReLU-35 [-1, 256, 56, 56] 0

Bottleneck-36 [-1, 256, 56, 56] 0

Conv2d-37 [-1, 128, 56, 56] 32,768

BatchNorm2d-38 [-1, 128, 56, 56] 256

ReLU-39 [-1, 128, 56, 56] 0

Conv2d-40 [-1, 128, 28, 28] 147,456

BatchNorm2d-41 [-1, 128, 28, 28] 256

ReLU-42 [-1, 128, 28, 28] 0

Conv2d-43 [-1, 512, 28, 28] 65,536

BatchNorm2d-44 [-1, 512, 28, 28] 1,024

Conv2d-45 [-1, 512, 28, 28] 131,072

BatchNorm2d-46 [-1, 512, 28, 28] 1,024

ReLU-47 [-1, 512, 28, 28] 0

Bottleneck-48 [-1, 512, 28, 28] 0

Conv2d-49 [-1, 128, 28, 28] 65,536

BatchNorm2d-50 [-1, 128, 28, 28] 256

ReLU-51 [-1, 128, 28, 28] 0

Conv2d-52 [-1, 128, 28, 28] 147,456

BatchNorm2d-53 [-1, 128, 28, 28] 256

ReLU-54 [-1, 128, 28, 28] 0

Conv2d-55 [-1, 512, 28, 28] 65,536

BatchNorm2d-56 [-1, 512, 28, 28] 1,024

ReLU-57 [-1, 512, 28, 28] 0

Bottleneck-58 [-1, 512, 28, 28] 0

Conv2d-59 [-1, 128, 28, 28] 65,536

BatchNorm2d-60 [-1, 128, 28, 28] 256

ReLU-61 [-1, 128, 28, 28] 0

Conv2d-62 [-1, 128, 28, 28] 147,456

BatchNorm2d-63 [-1, 128, 28, 28] 256

ReLU-64 [-1, 128, 28, 28] 0

Conv2d-65 [-1, 512, 28, 28] 65,536

BatchNorm2d-66 [-1, 512, 28, 28] 1,024

ReLU-67 [-1, 512, 28, 28] 0

Bottleneck-68 [-1, 512, 28, 28] 0

Conv2d-69 [-1, 128, 28, 28] 65,536

BatchNorm2d-70 [-1, 128, 28, 28] 256

ReLU-71 [-1, 128, 28, 28] 0

Conv2d-72 [-1, 128, 28, 28] 147,456

BatchNorm2d-73 [-1, 128, 28, 28] 256

ReLU-74 [-1, 128, 28, 28] 0

Conv2d-75 [-1, 512, 28, 28] 65,536

BatchNorm2d-76 [-1, 512, 28, 28] 1,024

ReLU-77 [-1, 512, 28, 28] 0

Bottleneck-78 [-1, 512, 28, 28] 0

Conv2d-79 [-1, 256, 28, 28] 131,072

BatchNorm2d-80 [-1, 256, 28, 28] 512

ReLU-81 [-1, 256, 28, 28] 0

Conv2d-82 [-1, 256, 14, 14] 589,824

BatchNorm2d-83 [-1, 256, 14, 14] 512

ReLU-84 [-1, 256, 14, 14] 0

Conv2d-85 [-1, 1024, 14, 14] 262,144

BatchNorm2d-86 [-1, 1024, 14, 14] 2,048

Conv2d-87 [-1, 1024, 14, 14] 524,288

BatchNorm2d-88 [-1, 1024, 14, 14] 2,048

ReLU-89 [-1, 1024, 14, 14] 0

Bottleneck-90 [-1, 1024, 14, 14] 0

Conv2d-91 [-1, 256, 14, 14] 262,144

BatchNorm2d-92 [-1, 256, 14, 14] 512

ReLU-93 [-1, 256, 14, 14] 0

Conv2d-94 [-1, 256, 14, 14] 589,824

BatchNorm2d-95 [-1, 256, 14, 14] 512

ReLU-96 [-1, 256, 14, 14] 0

Conv2d-97 [-1, 1024, 14, 14] 262,144

BatchNorm2d-98 [-1, 1024, 14, 14] 2,048

ReLU-99 [-1, 1024, 14, 14] 0

Bottleneck-100 [-1, 1024, 14, 14] 0

Conv2d-101 [-1, 256, 14, 14] 262,144

BatchNorm2d-102 [-1, 256, 14, 14] 512

ReLU-103 [-1, 256, 14, 14] 0

Conv2d-104 [-1, 256, 14, 14] 589,824

BatchNorm2d-105 [-1, 256, 14, 14] 512

ReLU-106 [-1, 256, 14, 14] 0

Conv2d-107 [-1, 1024, 14, 14] 262,144

BatchNorm2d-108 [-1, 1024, 14, 14] 2,048

ReLU-109 [-1, 1024, 14, 14] 0

Bottleneck-110 [-1, 1024, 14, 14] 0

Conv2d-111 [-1, 256, 14, 14] 262,144

BatchNorm2d-112 [-1, 256, 14, 14] 512

ReLU-113 [-1, 256, 14, 14] 0

Conv2d-114 [-1, 256, 14, 14] 589,824

BatchNorm2d-115 [-1, 256, 14, 14] 512

ReLU-116 [-1, 256, 14, 14] 0

Conv2d-117 [-1, 1024, 14, 14] 262,144

BatchNorm2d-118 [-1, 1024, 14, 14] 2,048

ReLU-119 [-1, 1024, 14, 14] 0

Bottleneck-120 [-1, 1024, 14, 14] 0

Conv2d-121 [-1, 256, 14, 14] 262,144

BatchNorm2d-122 [-1, 256, 14, 14] 512

ReLU-123 [-1, 256, 14, 14] 0

Conv2d-124 [-1, 256, 14, 14] 589,824

BatchNorm2d-125 [-1, 256, 14, 14] 512

ReLU-126 [-1, 256, 14, 14] 0

Conv2d-127 [-1, 1024, 14, 14] 262,144

BatchNorm2d-128 [-1, 1024, 14, 14] 2,048

ReLU-129 [-1, 1024, 14, 14] 0

Bottleneck-130 [-1, 1024, 14, 14] 0

Conv2d-131 [-1, 256, 14, 14] 262,144

BatchNorm2d-132 [-1, 256, 14, 14] 512

ReLU-133 [-1, 256, 14, 14] 0

Conv2d-134 [-1, 256, 14, 14] 589,824

BatchNorm2d-135 [-1, 256, 14, 14] 512

ReLU-136 [-1, 256, 14, 14] 0

Conv2d-137 [-1, 1024, 14, 14] 262,144

BatchNorm2d-138 [-1, 1024, 14, 14] 2,048

ReLU-139 [-1, 1024, 14, 14] 0

Bottleneck-140 [-1, 1024, 14, 14] 0

Conv2d-141 [-1, 512, 14, 14] 524,288

BatchNorm2d-142 [-1, 512, 14, 14] 1,024

ReLU-143 [-1, 512, 14, 14] 0

Conv2d-144 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-145 [-1, 512, 7, 7] 1,024

ReLU-146 [-1, 512, 7, 7] 0

Conv2d-147 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-148 [-1, 2048, 7, 7] 4,096

Conv2d-149 [-1, 2048, 7, 7] 2,097,152

BatchNorm2d-150 [-1, 2048, 7, 7] 4,096

ReLU-151 [-1, 2048, 7, 7] 0

Bottleneck-152 [-1, 2048, 7, 7] 0

Conv2d-153 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-154 [-1, 512, 7, 7] 1,024

ReLU-155 [-1, 512, 7, 7] 0

Conv2d-156 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-157 [-1, 512, 7, 7] 1,024

ReLU-158 [-1, 512, 7, 7] 0

Conv2d-159 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-160 [-1, 2048, 7, 7] 4,096

ReLU-161 [-1, 2048, 7, 7] 0

Bottleneck-162 [-1, 2048, 7, 7] 0

Conv2d-163 [-1, 512, 7, 7] 1,048,576

BatchNorm2d-164 [-1, 512, 7, 7] 1,024

ReLU-165 [-1, 512, 7, 7] 0

Conv2d-166 [-1, 512, 7, 7] 2,359,296

BatchNorm2d-167 [-1, 512, 7, 7] 1,024

ReLU-168 [-1, 512, 7, 7] 0

Conv2d-169 [-1, 2048, 7, 7] 1,048,576

BatchNorm2d-170 [-1, 2048, 7, 7] 4,096

ReLU-171 [-1, 2048, 7, 7] 0

Bottleneck-172 [-1, 2048, 7, 7] 0

Conv2d-173 [-1, 256, 7, 7] 524,544

Conv2d-174 [-1, 256, 14, 14] 262,400

Conv2d-175 [-1, 256, 28, 28] 131,328

Conv2d-176 [-1, 256, 56, 56] 65,792

Conv2d-177 [-1, 256, 14, 14] 590,080

Conv2d-178 [-1, 256, 28, 28] 590,080

Conv2d-179 [-1, 256, 56, 56] 590,080

================================================================

Total params: 26,262,336

Trainable params: 26,262,336

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 302.71

Params size (MB): 100.18

Estimated Total Size (MB): 403.47

----------------------------------------------------------------
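The parameter counts of the FPN-specific layers at the end of the table can be checked by hand: a $k\times k$ convolution has $C_{in} \cdot C_{out} \cdot k^2$ weights plus $C_{out}$ biases. The helper `conv2d_params` below is a hypothetical name used only for this check.

```python
def conv2d_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Number of learnable parameters in a k x k Conv2d layer."""
    return c_in * c_out * k * k + (c_out if bias else 0)


print(conv2d_params(2048, 256, 1))          # toplayer:  524544
print(conv2d_params(1024, 256, 1))          # latlayer1: 262400
print(conv2d_params(256, 256, 3))           # smooth1-3: 590080
print(conv2d_params(3, 64, 7, bias=False))  # conv1:     9408
```

These values match the rows Conv2d-173, Conv2d-174, Conv2d-177 to Conv2d-179, and Conv2d-1 in the summary.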

    # Inspect the first layer of the FPN, i.e. C2
    net_fpn.layer1

Sequential(

(0): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

)

)

    # Inspect the second layer of the FPN, i.e. C3, which contains 4 Bottleneck blocks
    net_fpn.layer2

Sequential(

(0): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

(downsample): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

)

(2): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

)

(3): Bottleneck(

(bottleneck): Sequential(

(0): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(7): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(relu): ReLU(inplace=True)

)

)