'''

SVM分类:最优超参数GridSearchCV优化后的SVM分类

'''

import numpy as np

import sklearn.model_selection as ms

import sklearn.svm as svm

import sklearn.metrics as sm

import matplotlib.pyplot as plt

'''***************************数据集:start************************************'''

x, y = [], []

with open('multiple2.txt', 'r') as f:

for line in f.readlines():

data = [float(substr) for substr in line.split(',')]

x.append(data[:-1])

y.append(data[-1])

x = np.array(x)

y = np.array(y, dtype=int)

train_x, test_x, train_y, test_y = ms.train_test_split(x, y, test_size=0.25, random_state=7)

'''***************************数据集:end**************************************'''

'''***************************超参数优化及GridSearchCV属性:start*******************************'''

params = [

{'kernel': ['linear'], 'C': [1, 10, 100, 100]},

{'kernel': ['poly'], 'C': [1], 'degree': [2, 3]},

{'kernel': ['rbf'], 'C': [1, 10, 100, 100], 'gamma':[1, 0.1, 0.01, 0.001]}

]

model = ms.GridSearchCV(svm.SVC(probability=True),

params,

refit=True,

return_train_score=True,

cv=5)

model.fit(train_x, train_y)

print('Attrabutes:vvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvvv')

print('cv_results_:',model.cv_results_.keys())

print('Desc:',model.cv_results_['params'][2], model.cv_results_['mean_train_score'][2],

model.cv_results_['mean_test_score'][2],model.cv_results_['rank_test_score'][2])

print('best_estimator_:',model.best_estimator_)

print('best_params_:',model.best_params_)

print('best_params_:', model.cv_results_['params'][model.best_index_])

print('best_score_:',model.best_score_)

print('scorer_:',model.scorer_)

print('n_splits_:',model.n_splits_)

'''

# params mean_train_score mean_test_score rank_test_score

# {'C': 100, 'kernel': 'linear'} 0.6877777777777778 0.6577777777777778 17

# cv_results_: 类似如下output

# | {'param_kernel': masked_array(data = ['poly', 'poly', 'rbf', 'rbf'],

# | mask = [False False False False]...)

# | 'param_gamma': masked_array(data = [-- -- 0.1 0.2],

# | mask = [ True True False False]...),

# | 'param_degree': masked_array(data = [2.0 3.0 -- --],

# | mask = [False False True True]...),

# | 'split0_test_score' : [0.8, 0.7, 0.8, 0.9],

# | 'split1_test_score' : [0.82, 0.5, 0.7, 0.78],

# | 'mean_test_score' : [0.81, 0.60, 0.75, 0.82],

# | 'std_test_score' : [0.02, 0.01, 0.03, 0.03],

# | 'rank_test_score' : [2, 4, 3, 1],

# | 'split0_train_score' : [0.8, 0.9, 0.7],

# | 'split1_train_score' : [0.82, 0.5, 0.7],

# | 'mean_train_score' : [0.81, 0.7, 0.7],

# | 'std_train_score' : [0.03, 0.03, 0.04],

# | 'mean_fit_time' : [0.73, 0.63, 0.43, 0.49],

# | 'std_fit_time' : [0.01, 0.02, 0.01, 0.01],

# | 'mean_score_time' : [0.007, 0.06, 0.04, 0.04],

# | 'std_score_time' : [0.001, 0.002, 0.003, 0.005],

# | 'params' : [{'kernel': 'poly', 'degree': 2}, ...],

# | }

# best_estimator_:

#SVC(C=1, cache_size=200, class_weight=None, coef0=0.0,

# decision_function_shape='ovr', degree=3, gamma=1, kernel='rbf',

# max_iter=-1, probability=True, random_state=None, shrinking=True,

# tol=0.001, verbose=False)

# model.cv_results_['mean_test_score'] 与 model.cv_results_['params']对应数据的平均值

for param, score in zip(model.cv_results_['params'],model.cv_results_['mean_test_score']):

print(param, score)

#{'C': 1, 'kernel': 'linear'} 0.6577777777777778

# ...

#{'C': 1, 'gamma': 1, 'kernel': 'rbf'} 0.9511111111111111

# ...

'''

'''***************************超参数优化及GridSearchCV属性:start*******************************'''

'''**************************优化模型的分类预测:start**************************'''

model_best = model.best_estimator_

pred_test_y = model_best.predict(test_x)

prob_x = np.array([[2, 1.5],[8, 9], [4.8, 5.2], [4,4],[2.5,7],[7.6,2],[5.4, 5.9]])

pred_prob_y = model_best.predict(prob_x)

probs = model_best.predict_proba(prob_x)

print(probs)

print(model_best.decision_function(prob_x))

'''**************************优化模型的分类预测:end****************************'''

'''******************************绘图区:start**********************************'''

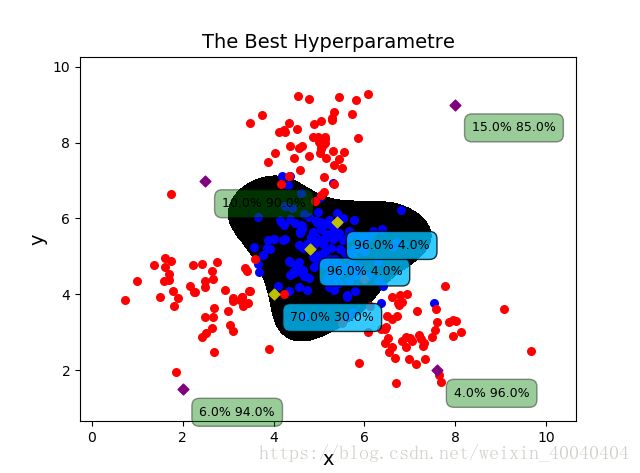

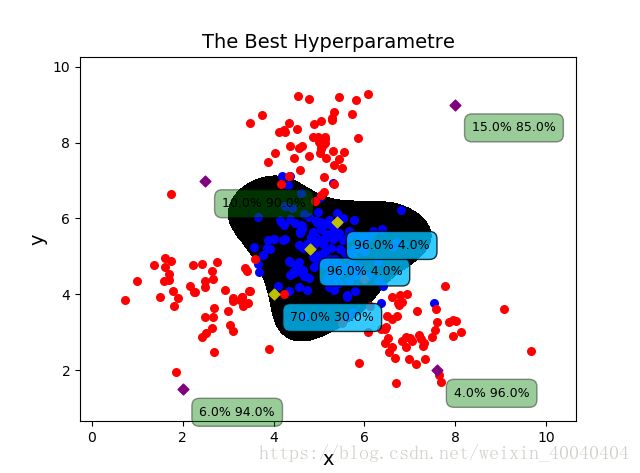

plt.figure('The Best HyperParametre', facecolor='lightgray')

plt.title('The Best Hyperparametre', fontsize=14)

plt.xlabel('x', fontsize=14)

plt.ylabel('y', fontsize=14)

plt.tick_params(labelsize=10)

l, r, h = x[:, 0].min() - 1, x[:, 0].max() + 1, 0.005

b, t, v = x[:, 1].min() - 1, x[:, 1].max() + 1, 0.005

grid_x = np.meshgrid(np.arange(l, r, h), np.arange(b, t, v))

flat_x = np.c_[grid_x[0].ravel(), grid_x[1].ravel()]

flat_y = model_best.predict(flat_x)

grid_y = flat_y.reshape(grid_x[0].shape)

plt.pcolormesh(grid_x[0], grid_x[1], grid_y, cmap='gray')

c0, c1 = y==0, y==1

plt.scatter(x[c0][:, 0], x[c0][:, 1], c='b', s=30)

plt.scatter(x[c1][:, 0], x[c1][:, 1], c='r', s=30)

C0, C1 = pred_prob_y==0, pred_prob_y==1

plt.scatter(prob_x[C0][:, 0], prob_x[C0][:, 1], c='y', s=30, marker='D')

plt.scatter(prob_x[C1][:, 0], prob_x[C1][:, 1], c='purple', s=30, marker='D')

for i in range(len(probs[C0])):

plt.annotate(

'{}% {}%'.format(round(probs[C0][:, 0][i],2)*100, round(probs[C0][:, 1][i],2)*100),

xy=(prob_x[C0][:, 0][i], prob_x[C0][:, 1][i]),

xytext=(12, -12),textcoords='offset points',

horizontalalignment='left',

verticalalignment='top',

fontsize=9,

bbox={'boxstyle':'round, pad=0.6','fc':'deepskyblue', 'alpha':0.8})

for i in range(len(probs[C1])):

plt.annotate(

'{}% {}%'.format(round(probs[C1][:, 0][i], 2)*100, round(probs[C1][:, 1][i], 2)*100),

xy=(prob_x[C1][:, 0][i], prob_x[C1][:, 1][i]),

xytext=(12, -12),textcoords='offset points',

horizontalalignment='left',

verticalalignment='top',

fontsize=9,

bbox={'boxstyle':'round, pad=0.6','fc':'green', 'alpha':0.4})

plt.show()

'''******************************绘图区:end************************************'''