XGBoost and LightGBM Algorithms

The code and datasets in this article come from the book 《Python大数据分析与机器学习商业案例实战》.

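1. The XGBoost Algorithm

XGBoost can, to some extent, be regarded as an improved version of GBDT: both are built on the Boosting idea of fitting residuals. For details, see the official XGBoost documentation.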

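As an efficient implementation of GBDT, XGBoost is optimized in the following two respects.

- Optimization of the algorithm itself: in addition to the loss term, the XGBoost objective adds a regularization term, which helps prevent overfitting and improves generalization. XGBoost approximates the loss with a second-order Taylor expansion, whereas GBDT uses only the negative gradient (a first-order Taylor expansion), so the XGBoost approximation is more accurate.

- Optimization of runtime efficiency: while each weak learner (for example, a decision tree) is being built, the search for the best split feature and split value is parallelized, which speeds up training.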
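The regularized, second-order objective described in the first point can be written out as follows. This is the standard form from the XGBoost derivation rather than something stated in the text above, so the symbols are assumptions introduced here: $f_t$ is the tree added at round $t$, $g_i$ and $h_i$ are the first and second derivatives of the loss $l$ with respect to the previous prediction $\hat{y}_i^{(t-1)}$, $T$ is the number of leaves, $w_j$ is the weight of leaf $j$, and $\gamma$, $\lambda$ are regularization strengths.

```latex
\mathrm{Obj}^{(t)} \approx \sum_{i=1}^{n} \Big[\, l\big(y_i, \hat{y}_i^{(t-1)}\big)
    + g_i\, f_t(x_i) + \tfrac{1}{2}\, h_i\, f_t(x_i)^2 \Big] + \Omega(f_t),
\qquad
\Omega(f_t) = \gamma T + \tfrac{1}{2}\,\lambda \sum_{j=1}^{T} w_j^2
```

Dropping the $h_i$ term and the penalty $\Omega$ leaves only the first-order (negative-gradient) approximation that the text attributes to GBDT.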

Case 1: Classification Model

# XGBoost classification model

# **Model building**

# 1. Load the data

import pandas as pd

df = pd.read_excel('信用卡交易数据.xlsx')

# 2. Extract the feature variables and the target variable

X = df.drop(columns='欺诈标签')

y = df['欺诈标签']

# 3. Split into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

# 4. Build and train the model

from xgboost import XGBClassifier

clf = XGBClassifier(n_estimators=100, learning_rate=0.05)

clf.fit(X_train, y_train)

# **Model prediction and evaluation**

y_pred = clf.predict(X_test)

print(y_pred)

a = pd.DataFrame() # create an empty DataFrame to compare predictions with actual values

a['预测值'] = list(y_pred)

a['实际值'] = list(y_test)

a.head()

from sklearn.metrics import accuracy_score

score = accuracy_score(y_test, y_pred) # scikit-learn's argument order is (y_true, y_pred)

print(score)

print(clf.score(X_test, y_test))

y_pred_proba = clf.predict_proba(X_test)

print(y_pred_proba[0:5]) # predicted class probabilities for the first 5 samples

from sklearn.metrics import roc_curve

fpr, tpr, thres = roc_curve(y_test, y_pred_proba[:,1])

import matplotlib.pyplot as plt

plt.plot(fpr, tpr)

plt.show()

from sklearn.metrics import roc_auc_score

score = roc_auc_score(y_test, y_pred_proba[:,1])

print(score)

print(clf.feature_importances_)

features = X.columns # feature names

importances = clf.feature_importances_ # feature importances

importances_df = pd.DataFrame()

importances_df['特征名称'] = features

importances_df['特征重要性'] = importances

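# Note: sort_values returns a sorted copy and does not modify importances_df,
# so the print below shows the features in their original order.
importances_df.sort_values('特征重要性', ascending=False)

print(importances_df)

Output: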

特征名称 特征重要性

0 换设备次数 0.399616

1 支付失败次数 0.195023

2 换IP次数 0.053074

3 换IP国次数 0.323595

4 交易金额 0.028692

# **Hyperparameter tuning**

from sklearn.model_selection import GridSearchCV

parameters = {'max_depth': [1, 3, 5], 'n_estimators': [50, 100, 150], 'learning_rate': [0.01, 0.05, 0.1, 0.2]} # candidate values for each hyperparameter

clf = XGBClassifier() # build the model

grid_search = GridSearchCV(clf, parameters, scoring='roc_auc', cv=5)

grid_search.fit(X_train, y_train) # run the grid search on the training data

print(grid_search.best_params_) # print the best parameter combination

clf = XGBClassifier(max_depth=1, n_estimators=100, learning_rate=0.05)

clf.fit(X_train, y_train)

y_pred_proba = clf.predict_proba(X_test)

from sklearn.metrics import roc_auc_score

score = roc_auc_score(y_test, y_pred_proba[:,1])

print(score)

Output: the score is 0.8648648648648649.

Case 2: Regression Model

# XGBoost regression model

# 1. Load the data

import pandas as pd

df = pd.read_excel('信用评分卡模型.xlsx')

# 2. Extract the feature variables and the target variable

X = df.drop(columns='信用评分')

y = df['信用评分']

# 3. Split into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=123)

# 4. Build and train the model

from xgboost import XGBRegressor

model = XGBRegressor()

model.fit(X_train, y_train)

# 5. Model prediction and evaluation

y_pred = model.predict(X_test)

print(y_pred[0:10])

a = pd.DataFrame() # create an empty DataFrame to compare predictions with actual values

a['预测值'] = list(y_pred)

a['实际值'] = list(y_test)

a.head()

from sklearn.metrics import r2_score

r2 = r2_score(y_test, model.predict(X_test))

print(r2)

print(model.score(X_test, y_test))

# 6. Inspect feature importances

features = X.columns

importances = model.feature_importances_

importances_df = pd.DataFrame()

importances_df['特征名称'] = features

importances_df['特征重要性'] = importances

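# Note: sort_values returns a sorted copy and does not modify importances_df,
# so the print below shows the features in their original order.
importances_df.sort_values('特征重要性', ascending=False)

print(importances_df)

Output: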

特征名称 特征重要性

0 月收入 0.324461

1 年龄 0.098869

2 性别 0.066339

3 历史授信额度 0.202864

4 历史违约次数 0.307467

Score: 0.5715437436791975

# **Supplement 1: Hyperparameter tuning for the XGBoost regression model**

from sklearn.model_selection import GridSearchCV

parameters = {'max_depth': [1, 3, 5], 'n_estimators': [50, 100, 150], 'learning_rate': [0.01, 0.05, 0.1, 0.2]}

model = XGBRegressor() # build a fresh regression model to pass to the grid search

grid_search = GridSearchCV(model, parameters, scoring='r2', cv=5)

grid_search.fit(X_train, y_train)

print(grid_search.best_params_ )

# {'learning_rate': 0.1, 'max_depth': 3, 'n_estimators': 50}

model = XGBRegressor(max_depth=3, n_estimators=50, learning_rate=0.1)

model.fit(X_train, y_train)

from sklearn.metrics import r2_score

r2 = r2_score(y_test, model.predict(X_test))

print(r2)

# 0.688

Score: 0.6884486054771359, clearly better than before tuning (0.5715).

# **Supplement 2: Does an XGBoost model need much data preprocessing?**

from sklearn.preprocessing import StandardScaler

X_new = StandardScaler().fit_transform(X)

print(X_new)

# 3. Split into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X_new, y, test_size=0.2, random_state=123)

# 4. Build and train the model

from xgboost import XGBRegressor

model = XGBRegressor()

model.fit(X_train, y_train)

from sklearn.metrics import r2_score

r2 = r2_score(y_test, model.predict(X_test))

print(r2)

Output:

[[-0.88269208 -1.04890243 -1.01409939 -0.60873764 0.63591822]

[-0.86319167 0.09630122 0.98609664 -1.55243002 1.27956013]

[-1.39227834 -1.46534013 -1.01409939 -0.83867808 -0.0077237 ]

…

[ 1.44337605 0.61684833 0.98609664 1.01172301 -0.0077237 ]

[ 0.63723633 -0.21602705 0.98609664 -0.32732239 -0.0077237 ]

[ 1.57656755 0.61684833 -1.01409939 1.30047599 -0.0077237 ]]

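Score: 0.5716150813375576. The result is essentially the same as without standardization (0.5716 versus 0.5715). This supports the point that tree models do not need feature standardization. Tree models are also insensitive to collinearity.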
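The reason standardization makes no difference can be illustrated with a small sketch. It is not the XGBoost implementation: `best_split_mask` is a hypothetical helper written for this example, and the data are synthetic. It finds the best single split on one feature by squared error and checks that the samples are partitioned identically before and after standardization, because standardization preserves the ordering of the feature values.

```python
import numpy as np

def best_split_mask(x, y):
    """Return a boolean mask (True = left child) for the best single split on x."""
    order = np.argsort(x)
    xs, ys = x[order], y[order]
    best_sse, best_thr = np.inf, None
    for i in range(1, len(xs)):
        if xs[i] == xs[i - 1]:
            continue  # no threshold can separate equal values
        left, right = ys[:i], ys[i:]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if sse < best_sse:
            best_sse, best_thr = sse, (xs[i - 1] + xs[i]) / 2
    return x <= best_thr

rng = np.random.default_rng(0)
x = rng.uniform(20, 60, size=200)                       # synthetic feature
y = np.where(x > 35, 700.0, 600.0) + rng.normal(0, 10, size=200)

x_std = (x - x.mean()) / x.std()                        # standardized copy of the feature

mask_raw = best_split_mask(x, y)
mask_std = best_split_mask(x_std, y)
same_partition = bool(np.array_equal(mask_raw, mask_std))
print(same_partition)
```

The threshold value changes with the scale of the feature, but the set of samples sent to each child does not, so the fitted tree makes the same predictions.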

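Common Hyperparameters of XGBoost Models

As for the XGBoost regression model, most of its parameters are the same as the XGBoost classification parameters listed in the table above. The main difference is the objective parameter: it takes the value 'reg:linear' for regression (renamed 'reg:squarederror' in newer XGBoost releases), usually 'binary:logistic' for binary classification, and usually 'multi:softmax' for multiclass classification. When you choose the XGBoost regression model this parameter is switched automatically, so there is no need to set it by hand.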

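2. The LightGBM Algorithm

LightGBM is a newer member of the Boosting family, developed by Microsoft. Like XGBoost, it is an efficient implementation of GBDT, and in principle it is similar to both GBDT and XGBoost: each new decision tree is fitted to the negative gradient of the loss function, which serves as an approximation of the current residuals. For details, see the official LightGBM documentation.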

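- Leaf-wise tree growth strategy

Most decision tree algorithms grow trees level-wise: all leaf nodes on the same level are split together, as shown in the figure below. In practice, some of those leaves have a low split gain, so splitting them adds considerable overhead for little benefit.

LightGBM instead grows trees leaf-wise: at each step it finds, among the current leaf nodes, the one with the largest split gain and splits only that leaf rather than all of them, as shown in the figure below. This can improve accuracy. However, the leaf-wise strategy tends to overfit when the sample size is small; LightGBM lets you limit tree depth with the max_depth parameter to guard against this.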
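The leaf-wise strategy described above can be sketched in a few lines of NumPy. This is a toy illustration on one synthetic feature with a squared-error gain, not LightGBM's implementation; `best_split` and `grow_leaf_wise` are hypothetical helpers written for this example.

```python
import numpy as np

def best_split(x, y):
    """Best threshold on a 1-D feature by squared-error reduction: (gain, threshold)."""
    if len(x) < 2:
        return 0.0, None
    order = np.argsort(x)
    xs, ys = x[order], y[order]
    total_sse = ((ys - ys.mean()) ** 2).sum()
    best_gain, best_thr = 0.0, None
    for i in range(1, len(xs)):
        if xs[i] == xs[i - 1]:
            continue
        left, right = ys[:i], ys[i:]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        gain = total_sse - sse
        if gain > best_gain:
            best_gain, best_thr = gain, (xs[i - 1] + xs[i]) / 2
    return best_gain, best_thr

def grow_leaf_wise(x, y, max_leaves):
    """Repeatedly split only the leaf whose best split has the largest gain."""
    leaves = [np.arange(len(x))]              # each leaf holds its sample indices
    while len(leaves) < max_leaves:
        candidates = [best_split(x[idx], y[idx]) for idx in leaves]
        k = int(np.argmax([gain for gain, _ in candidates]))
        _, thr = candidates[k]
        if thr is None:                       # no leaf can be split any further
            break
        idx = leaves.pop(k)
        leaves.append(idx[x[idx] <= thr])
        leaves.append(idx[x[idx] > thr])
    return leaves

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, size=300)
y = np.floor(x / 2.5) * 10 + rng.normal(0, 1, size=300)   # four noisy plateaus

leaves = grow_leaf_wise(x, y, max_leaves=4)
leaf_sizes = [len(idx) for idx in leaves]
print(leaf_sizes)
```

Here `max_leaves` plays the role that `num_leaves` plays in LightGBM: the tree stops growing once the leaf budget is used up, regardless of depth, which is why a separate depth limit such as `max_depth` is useful on small datasets.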

- Histogram algorithm

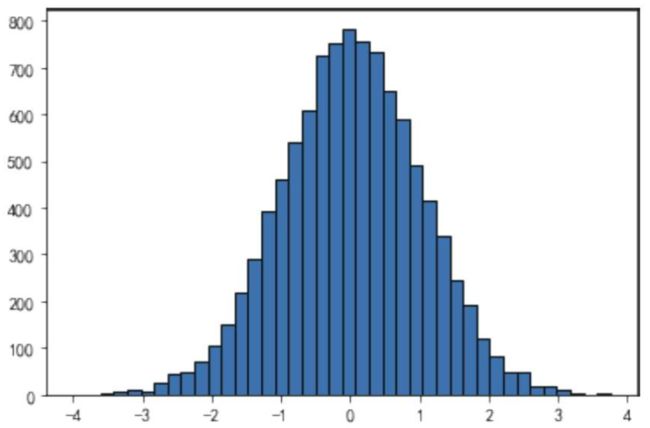

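The histogram algorithm, put simply, first bins the feature values: continuous floating-point values are discretized into k integers, forming bins, and a histogram of width k is constructed. While scanning the data, the discretized value is used as the index under which statistics are accumulated in the histogram (so this is a frequency histogram). After one pass over the data, the histogram holds the required statistics, and the best split point is found by iterating over the histogram's discrete values.

For continuous features, binning is the same as discretization in feature engineering: for example, values in [0,10) are all mapped to 0, values in [10,20) are all mapped to 1, and so on, so that a large number of distinct values fall into a limited number of bins. LightGBM uses 256 bins by default (in the library, the max_bin parameter defaults to 255).

For example, suppose there are 10000 customers and therefore 10000 height values. After the heights are binned into 256 bins (for instance, every customer with a height of 180 to 180.2 cm is assigned to bin 200), the data reduce to 256 distinct values, and the frequency of each value is counted (for instance, if 100 customers are 180 to 180.2 cm tall, the count for bin 200 is 100). When a node is split, there is then no need to evaluate each feature 10000 times (the number of samples), as the pre-sorted algorithm does; only 256 evaluations (the number of bins) are needed, which greatly speeds up training.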
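The height example can be reproduced with a short NumPy sketch. The heights and gradients are synthetic, and equal-width bins are an assumption made for simplicity; LightGBM's actual bin construction is more elaborate.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_bins = 10_000, 256

heights = rng.normal(170, 8, size=n_samples)   # synthetic heights in cm
grad = rng.normal(0, 1, size=n_samples)        # stand-in per-sample gradients

# Equal-width bin edges (an assumption for this sketch).
edges = np.linspace(heights.min(), heights.max(), n_bins + 1)
# Map each height to a bin index in 0..255 using the 255 interior edges.
bin_idx = np.digitize(heights, edges[1:-1])

# One pass over the data accumulates the per-bin statistics.
counts = np.bincount(bin_idx, minlength=n_bins)
grad_sum = np.bincount(bin_idx, weights=grad, minlength=n_bins)

# Candidate splits lie between adjacent bins: 255 candidates instead of ~10,000.
left_count = np.cumsum(counts)[:-1]
left_grad = np.cumsum(grad_sum)[:-1]

print(len(counts), len(left_count), counts.sum())
```

Each candidate split only needs the cumulative statistics on its left side (the right side is the total minus the left), so the cost of searching for a split depends on the number of bins rather than the number of samples.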

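For categorical features, each distinct value is placed in its own bin, and when the number of distinct values exceeds the maximum number of bins, rarely occurring values are ignored. For example, the nationality of 10000 customers can be binned by country name; if the number of countries exceeds the maximum number of bins (say 256), the countries that appear only rarely are ignored.

- Parallel learning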

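LightGBM supports two modes of parallel learning: feature parallelism and data parallelism. The main idea of traditional feature parallelism is to search for the best split point in parallel across machines; it is hard to speed up when the data volume is large, and the split results must be communicated and merged. LightGBM instead keeps the full dataset on every machine, which removes the communication overhead between machines. Traditional data parallelism, in turn, builds local histograms, merges them, and then searches the global histogram for the best split point. LightGBM uses Reduce-Scatter to distribute the histogram-merging work across machines, lowering both communication and computation costs, and it further reduces overhead by using histograms to accelerate training.

Beyond the principles above, LightGBM also includes several important algorithmic ideas, such as Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB). In GOSS, samples with larger gradients play a more important role in computing the information gain, so when the samples are down-sampled, the large-gradient samples are kept and small-gradient samples are randomly dropped. EFB bundles mutually exclusive features together to reduce the feature dimension.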
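The GOSS sampling step can be sketched as follows. This is a minimal illustration rather than LightGBM's code: `goss_sample` is a hypothetical helper, the gradients are synthetic, and the rates `a` (fraction of large-gradient samples kept) and `b` (fraction of all samples drawn at random from the remainder) are illustrative values. The re-weighting factor `(1 - a) / b` for the sampled small-gradient points follows the description in the LightGBM paper and is not mentioned in the text above.

```python
import numpy as np

def goss_sample(grad, a=0.2, b=0.1, seed=0):
    """Keep the top a*n samples by |gradient|, randomly draw b*n from the rest."""
    rng = np.random.default_rng(seed)
    n = len(grad)
    top_n, rand_n = int(a * n), int(b * n)

    order = np.argsort(-np.abs(grad))          # largest |gradient| first
    top_idx, rest_idx = order[:top_n], order[top_n:]
    sampled_idx = rng.choice(rest_idx, size=rand_n, replace=False)

    idx = np.concatenate([top_idx, sampled_idx])
    weights = np.ones(len(idx))
    # Up-weight the sampled small-gradient points to compensate for the dropped ones.
    weights[top_n:] = (1 - a) / b
    return idx, weights

rng = np.random.default_rng(1)
grad = rng.normal(0, 1, size=1000)             # synthetic gradients

idx, weights = goss_sample(grad)
print(len(idx), weights[0], weights[-1])
```

With these rates, each boosting round works with 30% of the samples, while the up-weighting keeps the gradient statistics of the small-gradient group approximately unbiased.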

Case 1: Classification Model

# LightGBM classification model

# **Model building**

# 1. Load the data

import pandas as pd

df = pd.read_excel('客户信息及违约表现.xlsx')

df.head()

# 2. Extract the feature variables and the target variable

X = df.drop(columns='是否违约')

Y = df['是否违约']

# 3. Split into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=0.2, random_state=123)

# 4. Build and train the model

from lightgbm import LGBMClassifier

model = LGBMClassifier()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print(y_pred)

a = pd.DataFrame()

a['预测值'] = list(y_pred)

a['实际值'] = list(y_test)

a.head()

from sklearn.metrics import accuracy_score

score = accuracy_score(y_test, y_pred) # scikit-learn's argument order is (y_true, y_pred)

print(score)

print(model.score(X_test, y_test))

y_pred_proba = model.predict_proba(X_test)

from sklearn.metrics import roc_curve

fpr, tpr, thres = roc_curve(y_test, y_pred_proba[:,1])

import matplotlib.pyplot as plt

plt.plot(fpr, tpr)

plt.show()

from sklearn.metrics import roc_auc_score

score = roc_auc_score(y_test, y_pred_proba[:,1])

print(score)

print(model.feature_importances_)

features = X.columns

importances = model.feature_importances_

importances_df = pd.DataFrame()

importances_df['特征名称'] = features

importances_df['特征重要性'] = importances

importances_df.sort_values('特征重要性', ascending=False)

# **Hyperparameter tuning**

from sklearn.model_selection import GridSearchCV

parameters = {'num_leaves': [10, 15, 31], 'n_estimators': [10, 20, 30], 'learning_rate': [0.05, 0.1, 0.2]}

model = LGBMClassifier() # build the classifier

grid_search = GridSearchCV(model, parameters, scoring='roc_auc', cv=5)

grid_search.fit(X_train, y_train)

print(grid_search.best_params_)

model = LGBMClassifier(num_leaves=15, n_estimators=20,learning_rate=0.1)

model.fit(X_train, y_train)

y_pred_proba = model.predict_proba(X_test)

from sklearn.metrics import roc_curve

fpr, tpr, thres = roc_curve(y_test, y_pred_proba[:,1])

import matplotlib.pyplot as plt

plt.plot(fpr, tpr)

plt.show()

y_pred_proba = model.predict_proba(X_test)

from sklearn.metrics import roc_auc_score

score = roc_auc_score(y_test, y_pred_proba[:, 1])

print(score)

Case 2: Regression Model

# LightGBM regression model

# **Model building**

# 1. Load the data

import pandas as pd

df = pd.read_excel('广告收益数据.xlsx')

df.head()

# 2. Extract the feature variables and the target variable

X = df.drop(columns='收益')

y = df['收益']

# 3. Split into training and test sets

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=123)

# 4. Build and train the model

from lightgbm import LGBMRegressor

model = LGBMRegressor()

model.fit(X_train, y_train)

# **Model prediction and evaluation**

y_pred = model.predict(X_test)

print(y_pred[0:5])

a = pd.DataFrame()

a['预测值'] = list(y_pred)

a['实际值'] = list(y_test)

a.head()

X_new = [[71, 11, 2]] # a single new sample; renamed so the feature DataFrame X is not overwritten

print(model.predict(X_new))

from sklearn.metrics import r2_score

r2 = r2_score(y_test, model.predict(X_test))

print(r2)

print(model.score(X_test, y_test))

print(model.feature_importances_)

# **Hyperparameter tuning**

from sklearn.model_selection import GridSearchCV

parameters = {'num_leaves': [15, 31, 62], 'n_estimators': [20, 30, 50, 70], 'learning_rate': [0.1, 0.2, 0.3, 0.4]}

model = LGBMRegressor() # build the model

grid_search = GridSearchCV(model, parameters,scoring='r2',cv=5)

grid_search.fit(X_train, y_train)

print(grid_search.best_params_)

model = LGBMRegressor(num_leaves=31, n_estimators=50,learning_rate=0.3)

model.fit(X_train, y_train)

print(model.score(X_test, y_test))