[Baidu AI Studio] Reinforcement Learning Bootcamp (six lessons in total): PaddlePaddle (self-study notes, with code)


Reinforcement Learning Bootcamp entry link

GYM official website

PARL code link

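Contents

- I. Lesson 1: First Impressions of Reinforcement Learning (RL)

- 1. What is reinforcement learning

- 2. Applications of reinforcement learning

- 3. How reinforcement learning relates to other machine learning

- 4. Two approaches to agent learning

- 5. Taxonomy of RL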

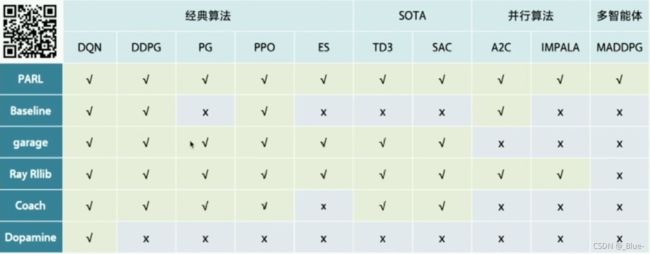

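- 6. Algorithm and framework libraries

- (1) RL programming practice: GYM

- (2) RL agent → environment interaction interface

- (3) Introduction to PARL: quickly building parallel frameworks

- 7. Summary

- 8. pip installation issues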

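- II. Lesson 2: Solving RL with tabular methods

- 1. The RL MDP four-tuple (S, A, P, R)

- 2. Practice environment

- 3. Reward discount factor γ

- 4. Sarsa: code

- (1) ε-greedy

- (2) Sarsa interaction with the environment (learn() is called at every step)

- (4) Sarsa Agent

- (5) Sarsa - train

- 5. Q-learning: code

- (1) Q-learning interaction with the environment

- (2) Q-learning Agent

- (3) train

- 6. On-policy vs Off-policy

- 7. Summary

- 8. gridworld.py usage guide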

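- Lesson 3: Solving RL with neural-network methods

- 1. Background

- (1) Value function approximation

- (2) Relationship between DQN and Q-learning

- 2. The DQN algorithm explained

- (1) DQN's two key innovations

- (2) DQN flowchart

- 3. DQN: code

- (1) model.py

- (2) algorithm.py

- (3) agent.py

- (4) replay_memory.py

- (5) train.py

- 4. Common methods

- (1) Common PARL APIs

- (2) Saving and loading models

- (3) Logging

- 5. Summary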

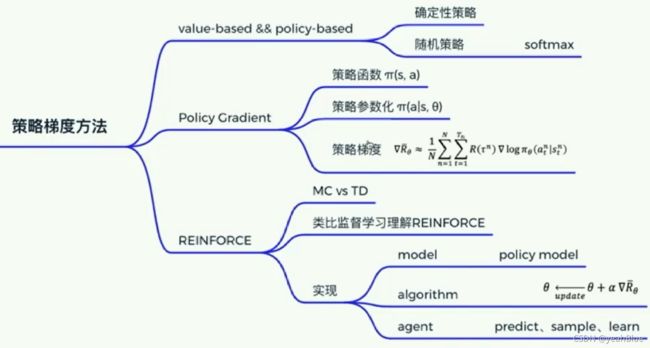

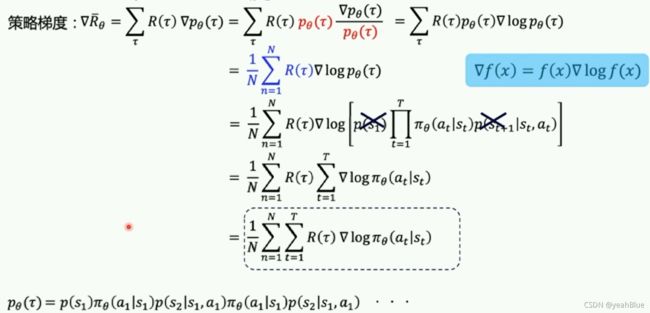

- Lesson 4: Solving RL with policy gradients

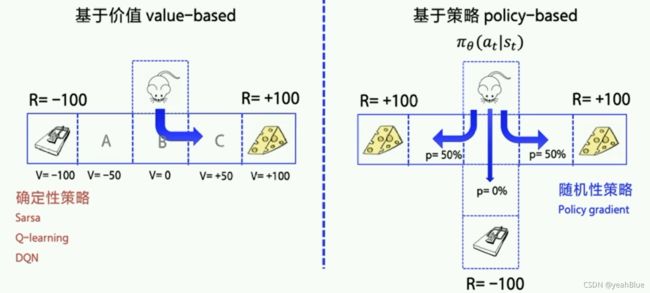

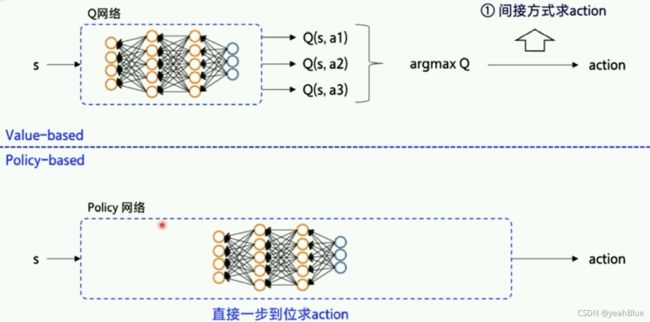

- 1. Value-based vs policy-based

- (1) Policy-based methods represent the policy directly

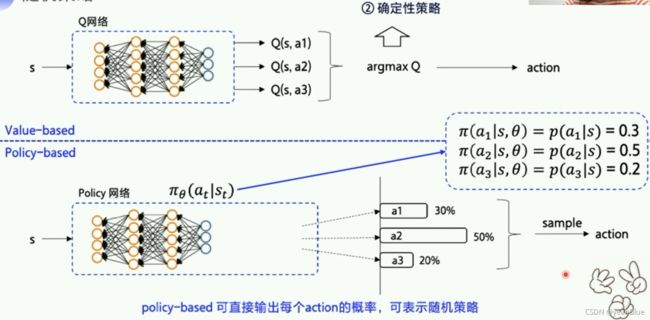

- (2) Stochastic policies

- (3) Stochastic policies: the softmax function

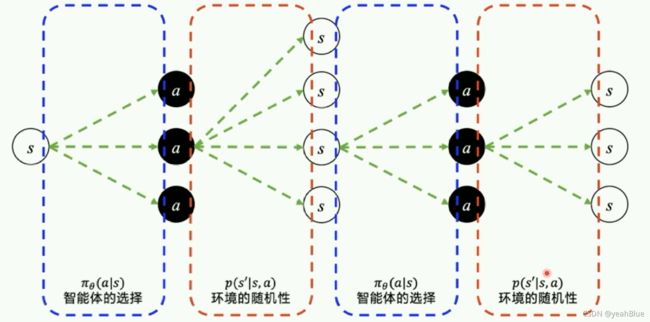

- (4) Trajectories

- (5) Expected return

- (6) Optimizing the policy function

- (7) Monte Carlo (MC) vs temporal difference (TD)

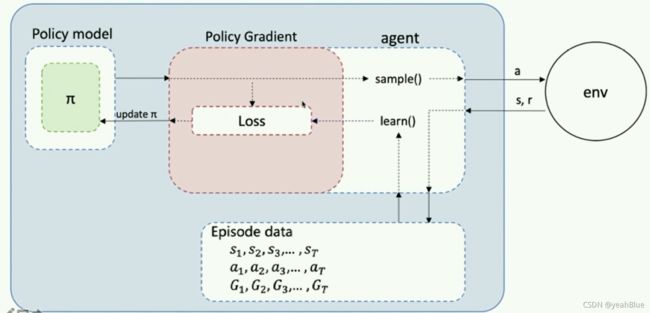

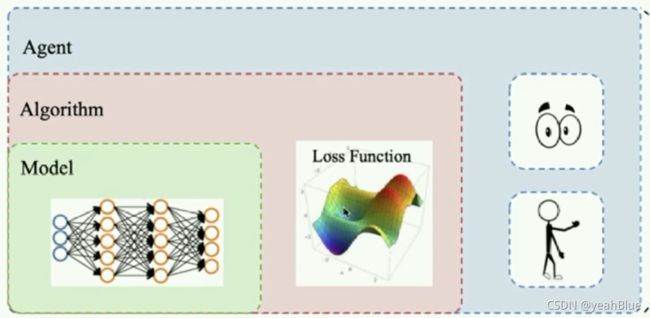

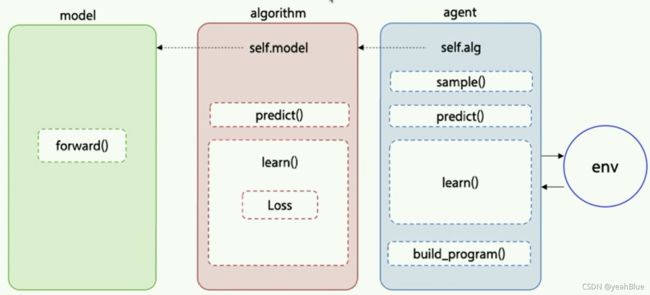

- 2. Flowchart

- 3. PG: code

- (1) model.py

- (2) algorithm.py

- (3) agent.py

- (4) train.py

- 4. Summary

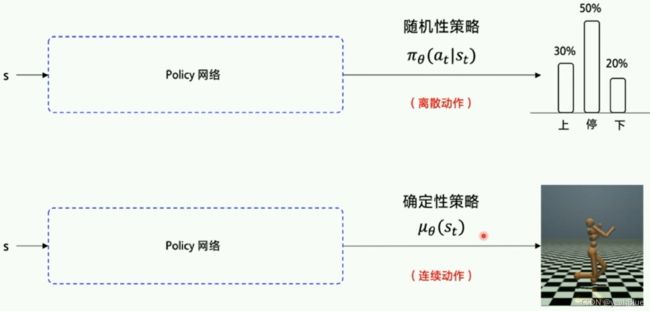

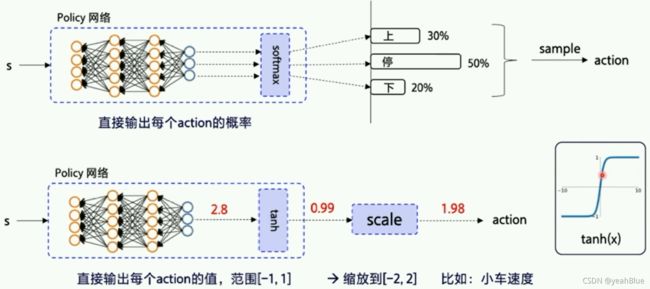

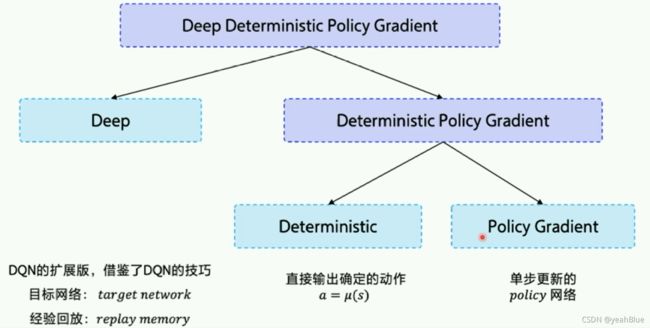

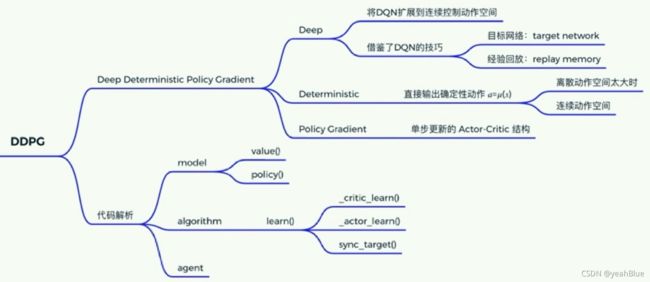

- Lesson 5: Solving RL in continuous action spaces

- 1. Discrete vs continuous actions

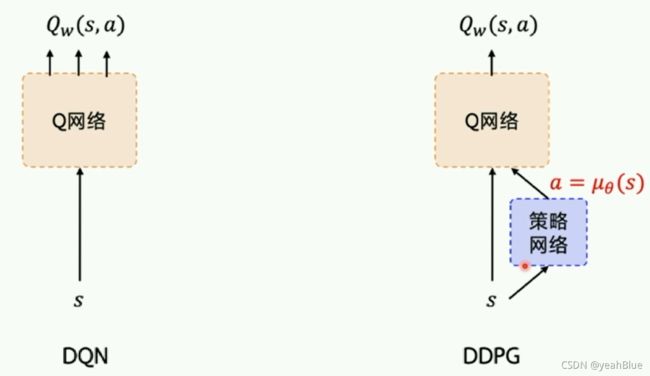

- 2. DDPG (Deep Deterministic Policy Gradient)

- (1) DDPG architecture

- (2) From DQN to DDPG

- (3) Actor-Critic architecture (actor and critic)

- (4) Target network + experience replay (ReplayMemory)

- 3. PARL DDPG

- 4. DDPG: code

- (1) model.py

- (2) algorithm.py

- (3) train.py

- 5. Summary

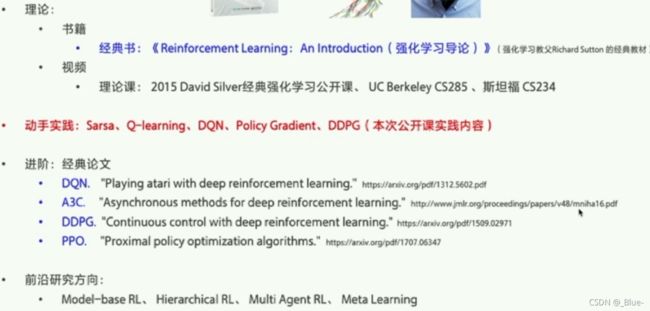

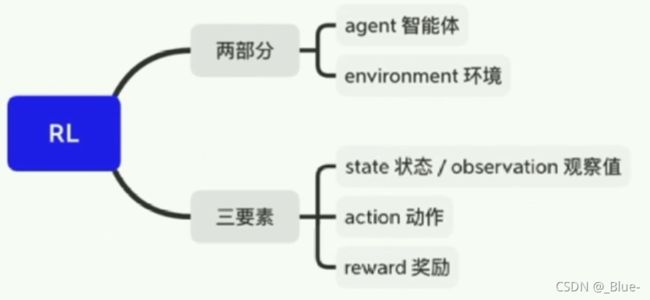

I. Lesson 1: First Impressions of Reinforcement Learning (RL)

- RL overview and learning roadmap

- Practice: setting up the environment

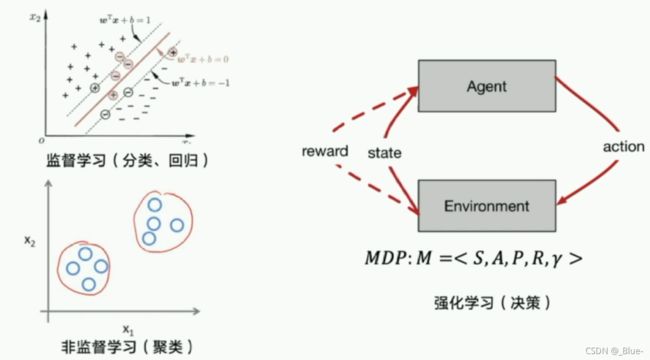

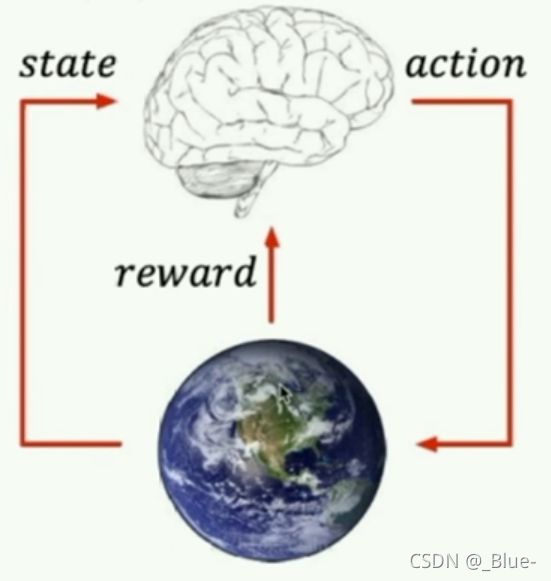

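1. What is reinforcement learning

Core idea: an agent learns inside an environment. Based on the environment's state, it takes an action, and the environment's feedback, the reward, guides it toward better actions.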
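To make this loop concrete, here is a minimal, self-contained sketch in plain Python. The `CorridorEnv` environment and its reward values are hypothetical, invented only for illustration: the agent starts at cell 0 of a short corridor, every step costs -1, and reaching the right end finishes the episode. The "agent" here just acts at random; learning is added in later lessons.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: states 0..length-1, the goal is the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action 0 = move left, action 1 = move right (the walls keep the agent inside)
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = -1.0  # every step costs -1, so shorter paths are better
        return self.state, reward, done


def run_random_episode(env, seed=0):
    rng = random.Random(seed)
    state = env.reset()
    total_reward, steps = 0.0, 0
    while True:
        action = rng.choice([0, 1])             # the agent: a random policy
        state, reward, done = env.step(action)  # environment feedback
        total_reward += reward
        steps += 1
        if done:
            return total_reward, steps
```

For example, `run_random_episode(CorridorEnv())` returns the total reward and the number of steps; since every step costs -1, the reward is always minus the step count, and a learning agent would try to shrink it.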

2. Applications of reinforcement learning

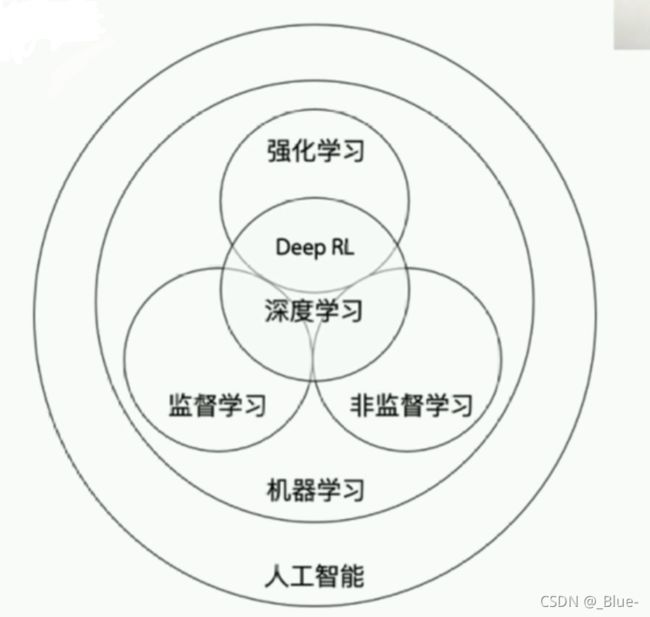

3. How reinforcement learning relates to other machine learning

4. Two approaches to agent learning

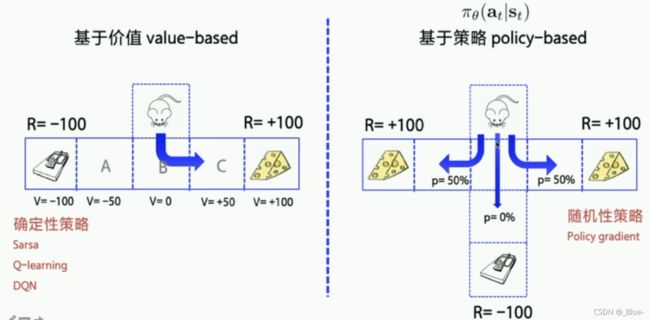

5. Taxonomy of RL

6. Algorithm and framework libraries

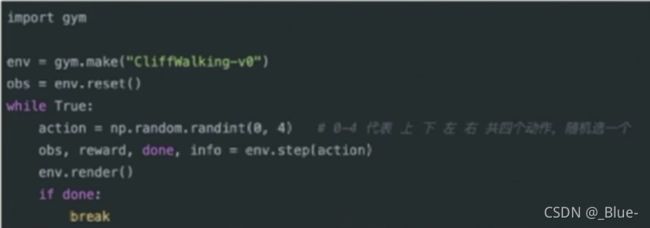

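(1) RL programming practice: GYM

- Gym: a simulation platform, an open-source Python library, and a test bed for RL

- Official site: GYM official website

- Discrete control: usually evaluated with Atari environments

- Continuous control: usually evaluated with MuJoCo environments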

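(2) RL agent → environment interaction interface

The core interface of gym is the environment (env), which provides the following core methods:

- reset(): resets the environment to its initial state so that the next episode can start.

- step(action): advances the environment by one time step and returns four values:

① observation (object): an observation of the environment;

② reward (float): the reward;

③ done (boolean): whether the episode has ended and the environment needs to be reset;

④ info (dict): diagnostic information for debugging.

- render(): renders one frame of the environment.

Code path: https://github.com/paddlepaddle/Parl/tree/develop/examples/tutorials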

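```python
import gym
import numpy as np

# Classic gym API (gym < 0.26): reset() returns obs, step() returns 4 values
env = gym.make("CliffWalking-v0")
obs = env.reset()
while True:
    action = np.random.randint(0, 4)  # pick one of the 4 actions at random
    obs, reward, done, info = env.step(action)
    if done:
        break
```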

(3) Introduction to PARL: quickly building parallel frameworks

7. Summary

8. pip installation issues

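Tip 1: a common problem when installing libraries with pip: network timeouts

- Fix 1: use the Tsinghua mirror: -i https://pypi.tuna.tsinghua.edu.cn/simple

- Fix 2: download the whl package or source package from https://pypi.org/ and install it locally

Tip 2: pip installation interrupted: if a dependency fails to install, just run the installation again

Tip 3: use an isolated Python environment: Conda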

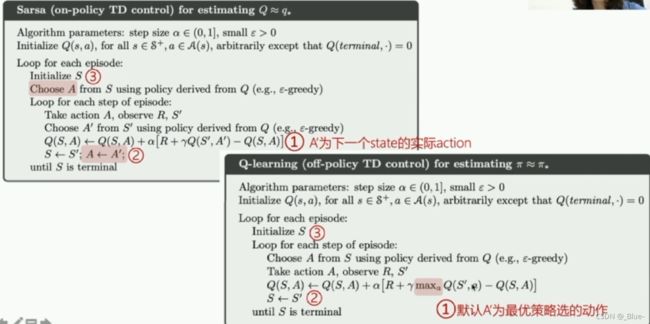

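II. Lesson 2: Solving RL with tabular methods

- MDP, state value, Q-table

- Practice: Sarsa, Q-learning

1. The RL MDP four-tuple (S, A, P, R)

s: state

a: action

r: reward

p: probability (state-transition probability)

How to describe the environment:

P function: the probability (state-transition) function

R function: the reward function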
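As a sketch of how P and R describe an environment, they can be stored as plain dictionaries. The two-state MDP below (states `s0`/`s1`, actions `stay`/`go`) is hypothetical: `P[s][a]` maps each next state s' to its probability, and `R[s][a]` is the immediate reward. The helper `one_step_value` (also a hypothetical name) combines them into the one-step lookahead R(s, a) + γ·Σ P(s'|s, a)·V(s').

```python
# Hypothetical two-state MDP: P[s][a] = {s_next: probability}, R[s][a] = reward
P = {
    "s0": {"stay": {"s0": 1.0}, "go": {"s1": 0.8, "s0": 0.2}},
    "s1": {"stay": {"s1": 1.0}, "go": {"s0": 1.0}},
}
R = {
    "s0": {"stay": 0.0, "go": -1.0},
    "s1": {"stay": 1.0, "go": 0.0},
}


def check_mdp(P):
    """Each P[s][a] must be a probability distribution over next states."""
    for s in P:
        for a in P[s]:
            total = sum(P[s][a].values())
            assert abs(total - 1.0) < 1e-9, (s, a, total)


def one_step_value(s, a, V, gamma=0.9):
    """R(s, a) + gamma * sum over s' of P(s'|s, a) * V(s')."""
    return R[s][a] + gamma * sum(p * V[s2] for s2, p in P[s][a].items())
```

With `V = {"s0": 0.0, "s1": 10.0}`, taking `go` in `s0` is worth -1 + 0.9 × (0.8 × 10) = 6.2: the transition probabilities decide how much of the future value is reachable.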

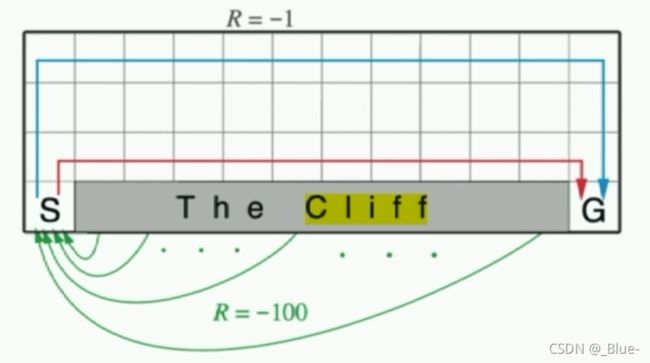

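2. Practice environment

The cliff-walking problem (reach the goal as quickly as possible):

- Every step costs a penalty of -1, and falling off the cliff costs -100 (the agent is also dragged back to the start). The game ends when the agent reaches the goal.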

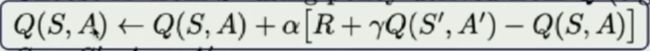

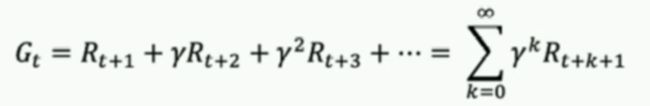
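The reward rule above can be sketched as a step function. This is a simplified re-implementation for illustration, not gym's source: it assumes the 4×12 grid of `CliffWalking-v0` (start at the bottom-left cell, goal at the bottom-right, cliff cells between them on the bottom row) and the action encoding used later in these notes (0 up, 1 right, 2 down, 3 left). The names `cliff_step` and `is_cliff` are hypothetical.

```python
ROWS, COLS = 4, 12
START, GOAL = (3, 0), (3, 11)
MOVES = {0: (-1, 0), 1: (0, 1), 2: (1, 0), 3: (0, -1)}  # up, right, down, left


def is_cliff(pos):
    row, col = pos
    return row == 3 and 1 <= col <= 10


def cliff_step(pos, action):
    """Return (next_pos, reward, done) for one move on the cliff grid."""
    dr, dc = MOVES[action]
    row = min(max(pos[0] + dr, 0), ROWS - 1)  # walls keep the agent on the grid
    col = min(max(pos[1] + dc, 0), COLS - 1)
    nxt = (row, col)
    if is_cliff(nxt):
        return START, -100, False  # fell off: big penalty, dragged back to start
    return nxt, -1, nxt == GOAL    # ordinary step: -1, done only at the goal
```

The safe path (one step up, eleven steps right, one step down) takes 13 steps for a return of -13, while a single slip into the cliff costs -100 and restarts the walk.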

3. Reward discount factor γ

Temporal Difference (TD): single-step update
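The discount factor γ weights a reward k steps in the future by γ^k, so the return from step t is G_t = r_t + γ·r_{t+1} + γ²·r_{t+2} + …, the same recursion G_i = r_i + γ·G_{i+1} used in the policy-gradient code of Lesson 4. TD learning does not wait for the whole return: it updates after a single step, toward the bootstrapped target r + γ·V(s'). A small sketch (the function names and numbers are illustrative):

```python
def discounted_return(rewards, gamma):
    """G_0 = r_0 + gamma*r_1 + gamma^2*r_2 + ... for one episode."""
    G = 0.0
    for r in reversed(rewards):
        G = r + gamma * G
    return G


def td_update(V, s, r, s_next, alpha, gamma, done=False):
    """One TD(0) step: move V[s] toward the one-step target r + gamma*V[s_next]."""
    target = r if done else r + gamma * V[s_next]
    V[s] += alpha * (target - V[s])
    return V[s]
```

With γ = 0.9, three rewards of -1 give -1 - 0.9 - 0.81 = -2.71 instead of -3: later penalties count less. A smaller γ makes the agent more short-sighted.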

4. Sarsa: code

(1) ε-greedy

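```python
# Sample an action for the given observation, with exploration
def sample(self, obs):
    if np.random.uniform(0, 1) < (1.0 - self.epsilon):  # choose the action by the Q values in the table
        action = self.predict(obs)
    else:
        action = np.random.choice(self.act_n)  # with probability epsilon, explore with a random action
    return action

# Predict the (greedy) action for the given observation
def predict(self, obs):
    Q_list = self.Q[obs, :]
    maxQ = np.max(Q_list)
    action_list = np.where(Q_list == maxQ)[0]  # maxQ may correspond to several actions
    action = np.random.choice(action_list)  # break ties at random
    return action
```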
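A quick way to sanity-check ε-greedy is to count how often each action is picked. The sketch below is a standalone re-implementation of the same logic (the function name `epsilon_greedy` and the Q values are illustrative). The greedy action should be chosen with probability about 1 - ε + ε/n and every other action about ε/n, because the random branch can also land on the greedy action.

```python
import numpy as np


def epsilon_greedy(q_values, epsilon, rng):
    """argmax Q with prob. 1-epsilon (ties broken at random), else a uniform random action."""
    if rng.uniform(0, 1) < 1.0 - epsilon:
        best = np.flatnonzero(q_values == q_values.max())
        return int(rng.choice(best))
    return int(rng.integers(len(q_values)))


rng = np.random.default_rng(0)
q = np.array([0.0, 1.0, 0.5, 0.2])
counts = np.bincount([epsilon_greedy(q, 0.1, rng) for _ in range(20000)], minlength=4)
freq = counts / counts.sum()
# Expected: about 0.925 for action 1 and about 0.025 for each of the other actions
```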

(2) Sarsa interaction with the environment: learn() is called once at every step

```python
def run_episode(env, agent, render=False):
    total_steps = 0  # number of steps taken in this episode
    total_reward = 0

    obs = env.reset()  # reset the environment and start a new episode
    action = agent.sample(obs)  # choose an action according to the algorithm

    while True:
        next_obs, reward, done, _ = env.step(action)  # one interaction with the environment
        next_action = agent.sample(next_obs)  # choose the next action according to the algorithm
        # train the Sarsa algorithm
        agent.learn(obs, action, reward, next_obs, next_action, done)

        action = next_action
        obs = next_obs  # keep the previous observation
        total_reward += reward
        total_steps += 1  # count steps
        if render:
            env.render()  # render a new frame
        if done:
            break
    return total_reward, total_steps
```

(4)Sarsa Agent

```python
import numpy as np


# ===================== Choose actions from the Q-table =====================
class SarsaAgent(object):
    def __init__(self,
                 obs_n,
                 act_n,
                 learning_rate=0.01,
                 gamma=0.9,
                 e_greed=0.1):
        self.act_n = act_n  # action dimension: number of available actions
        self.lr = learning_rate  # learning rate
        self.gamma = gamma  # reward discount factor
        self.epsilon = e_greed  # probability of picking a random action
        self.Q = np.zeros((obs_n, act_n))

    # Sample an action for the given observation, with exploration
    def sample(self, obs):
        if np.random.uniform(0, 1) < (1.0 - self.epsilon):  # choose the action by the Q values in the table
            action = self.predict(obs)
        else:
            action = np.random.choice(self.act_n)  # with probability epsilon, explore with a random action
        return action

    # Predict the (greedy) action for the given observation
    def predict(self, obs):
        Q_list = self.Q[obs, :]
        maxQ = np.max(Q_list)
        action_list = np.where(Q_list == maxQ)[0]  # maxQ may correspond to several actions
        action = np.random.choice(action_list)
        return action

    # ========================= Update the Q-table =========================
    # Learning method, i.e. how the Q-table is updated
    def learn(self, obs, action, reward, next_obs, next_action, done):
        """ on-policy
            obs: observation before the interaction, s_t
            action: action chosen in this interaction, a_t
            reward: reward r obtained for this action
            next_obs: observation after the interaction, s_t+1
            next_action: action the current Q-table would choose for next_obs, a_t+1
            done: whether the episode has ended
        """
        predict_Q = self.Q[obs, action]
        if done:
            target_Q = reward  # there is no next state
        else:
            target_Q = reward + self.gamma * self.Q[next_obs,
                                                    next_action]  # Sarsa
        self.Q[obs, action] += self.lr * (target_Q - predict_Q)  # correct Q
```

(5)Sarsa - train

```python
import gym
from gridworld import CliffWalkingWapper, FrozenLakeWapper
from agent import SarsaAgent
import time


def run_episode(env, agent, render=False):
    total_steps = 0  # number of steps taken in this episode
    total_reward = 0

    obs = env.reset()  # reset the environment and start a new episode
    action = agent.sample(obs)  # choose an action according to the algorithm

    while True:
        next_obs, reward, done, _ = env.step(action)  # one interaction with the environment
        next_action = agent.sample(next_obs)  # choose the next action according to the algorithm
        # train the Sarsa algorithm
        agent.learn(obs, action, reward, next_obs, next_action, done)

        action = next_action
        obs = next_obs  # keep the previous observation
        total_reward += reward
        total_steps += 1  # count steps
        if render:
            env.render()  # render a new frame
        if done:
            break
    return total_reward, total_steps


def test_episode(env, agent):
    total_reward = 0
    obs = env.reset()
    while True:
        action = agent.predict(obs)  # greedy
        next_obs, reward, done, _ = env.step(action)
        total_reward += reward
        obs = next_obs
        time.sleep(0.5)
        env.render()
        if done:
            print('test reward = %.1f' % (total_reward))
            break


def main():
    # env = gym.make("FrozenLake-v0", is_slippery=False)  # 0 left, 1 down, 2 right, 3 up
    # env = FrozenLakeWapper(env)

    env = gym.make("CliffWalking-v0")  # 0 up, 1 right, 2 down, 3 left
    env = CliffWalkingWapper(env)

    agent = SarsaAgent(
        obs_n=env.observation_space.n,
        act_n=env.action_space.n,
        learning_rate=0.1,
        gamma=0.9,
        e_greed=0.1)

    is_render = False
    for episode in range(500):
        ep_reward, ep_steps = run_episode(env, agent, is_render)
        print('Episode %s: steps = %s , reward = %.1f' % (episode, ep_steps,
                                                          ep_reward))

        # render every 20 episodes to see how the agent is doing
        if episode % 20 == 0:
            is_render = True
        else:
            is_render = False
    # training is over; check how well the algorithm does
    test_episode(env, agent)


if __name__ == "__main__":
    main()
```

5. Q-learning: code

(1) Q-learning interaction with the environment

```python
def run_episode(env, agent, render=False):
    total_steps = 0  # number of steps taken in this episode
    total_reward = 0

    obs = env.reset()  # reset the environment and start a new episode

    while True:
        action = agent.sample(obs)  # choose an action according to the algorithm
        next_obs, reward, done, _ = env.step(action)  # one interaction with the environment
        # train the Q-learning algorithm
        agent.learn(obs, action, reward, next_obs, done)

        obs = next_obs  # keep the previous observation
        total_reward += reward
        total_steps += 1  # count steps
        if render:
            env.render()  # render a new frame
        if done:
            break
    return total_reward, total_steps
```

(2)Q-learning Agent

```python
import numpy as np


# ===================== ① Choose actions from the Q-table =====================
class QLearningAgent(object):
    def __init__(self,
                 obs_n,
                 act_n,
                 learning_rate=0.01,
                 gamma=0.9,
                 e_greed=0.1):
        self.act_n = act_n  # action dimension: number of available actions
        self.lr = learning_rate  # learning rate
        self.gamma = gamma  # reward discount factor
        self.epsilon = e_greed  # probability of picking a random action
        self.Q = np.zeros((obs_n, act_n))

    # Sample an action for the given observation, with exploration
    def sample(self, obs):
        if np.random.uniform(0, 1) < (1.0 - self.epsilon):  # choose the action by the Q values in the table
            action = self.predict(obs)
        else:
            action = np.random.choice(self.act_n)  # with probability epsilon, explore with a random action
        return action

    # Predict the (greedy) action for the given observation
    def predict(self, obs):
        Q_list = self.Q[obs, :]
        maxQ = np.max(Q_list)
        action_list = np.where(Q_list == maxQ)[0]  # maxQ may correspond to several actions
        action = np.random.choice(action_list)
        return action

    # ========================= Update the Q-table =========================
    # Learning method, i.e. how the Q-table is updated
    def learn(self, obs, action, reward, next_obs, done):
        """ off-policy
            obs: observation before the interaction, s_t
            action: action chosen in this interaction, a_t
            reward: reward r obtained for this action
            next_obs: observation after the interaction, s_t+1
            done: whether the episode has ended
        """
        predict_Q = self.Q[obs, action]
        if done:
            target_Q = reward  # there is no next state
        else:
            target_Q = reward + self.gamma * np.max(
                self.Q[next_obs, :])  # Q-learning
        self.Q[obs, action] += self.lr * (target_Q - predict_Q)  # correct Q
```

(3)train

```python
import gym
from gridworld import CliffWalkingWapper, FrozenLakeWapper
from agent import QLearningAgent
import time


def run_episode(env, agent, render=False):
    total_steps = 0  # number of steps taken in this episode
    total_reward = 0

    obs = env.reset()  # reset the environment and start a new episode

    while True:
        action = agent.sample(obs)  # choose an action according to the algorithm
        next_obs, reward, done, _ = env.step(action)  # one interaction with the environment
        # train the Q-learning algorithm
        agent.learn(obs, action, reward, next_obs, done)

        obs = next_obs  # keep the previous observation
        total_reward += reward
        total_steps += 1  # count steps
        if render:
            env.render()  # render a new frame
        if done:
            break
    return total_reward, total_steps


def test_episode(env, agent):
    total_reward = 0
    obs = env.reset()
    while True:
        action = agent.predict(obs)  # greedy
        next_obs, reward, done, _ = env.step(action)
        total_reward += reward
        obs = next_obs
        time.sleep(0.5)
        env.render()
        if done:
            print('test reward = %.1f' % (total_reward))
            break


def main():
    # env = gym.make("FrozenLake-v0", is_slippery=False)  # 0 left, 1 down, 2 right, 3 up
    # env = FrozenLakeWapper(env)

    env = gym.make("CliffWalking-v0")  # 0 up, 1 right, 2 down, 3 left
    env = CliffWalkingWapper(env)

    agent = QLearningAgent(
        obs_n=env.observation_space.n,
        act_n=env.action_space.n,
        learning_rate=0.1,
        gamma=0.9,
        e_greed=0.1)

    is_render = False
    for episode in range(500):
        ep_reward, ep_steps = run_episode(env, agent, is_render)
        print('Episode %s: steps = %s , reward = %.1f' % (episode, ep_steps,
                                                          ep_reward))

        # render every 20 episodes to see how the agent is doing
        if episode % 20 == 0:
            is_render = True
        else:
            is_render = False
    # training is over; check how well the algorithm does
    test_episode(env, agent)


if __name__ == "__main__":
    main()
```

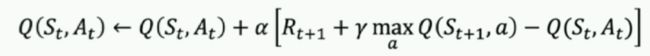

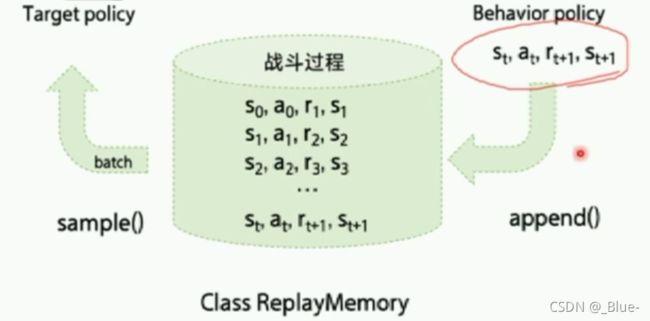

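6. On-policy vs Off-policy

☆ On-policy: the same policy π is learned and is also used to interact with the environment and generate experience.

- Because π also has to take care of exploration, it is not stable.

☆ Off-policy (two policies):

- Target policy: π

- Behavior policy: μ

☆ Benefits of off-policy learning:

- The target policy π is used to learn the optimal policy.

- The behavior policy μ is more exploratory and interacts with the environment to generate experience trajectories.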
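The difference is visible in the update targets of the two agents above. Sarsa (on-policy) bootstraps from Q(s', a'), where a' is the action its own ε-greedy policy actually takes next; Q-learning (off-policy) bootstraps from max_a Q(s', a), i.e. from the greedy target policy, whatever the behavior policy does. A sketch with a made-up Q-table (the function names are illustrative):

```python
import numpy as np


def sarsa_target(Q, r, s_next, a_next, gamma, done):
    # on-policy: uses the action a' actually chosen by the behavior policy
    return r if done else r + gamma * Q[s_next, a_next]


def q_learning_target(Q, r, s_next, gamma, done):
    # off-policy: uses the greedy (target-policy) action in s'
    return r if done else r + gamma * np.max(Q[s_next, :])


Q = np.zeros((2, 3))
Q[1] = [1.0, 5.0, -2.0]  # made-up action values for state s' = 1
# Suppose exploration picked a' = 2 in s' = 1:
sarsa_y = sarsa_target(Q, -1.0, 1, 2, 0.9, False)     # -1 + 0.9 * (-2.0), about -2.8
qlearn_y = q_learning_target(Q, -1.0, 1, 0.9, False)  # -1 + 0.9 * 5.0, about 3.5
```

Because Sarsa's target includes the cost of its own exploratory moves, it learns the value of the policy it actually follows; Q-learning learns the value of the greedy policy.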

7. Summary

8. gridworld.py usage guide

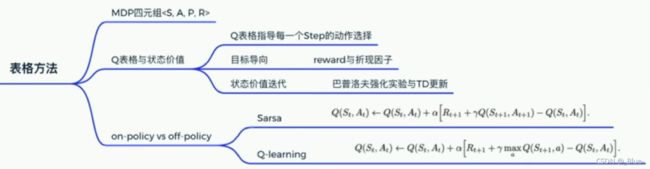

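Lesson 3: Solving RL with neural-network methods

- Function approximation

- Practice: DQN

1. Background

(1) Value function approximation

Advantages of value function approximation:

① Only a finite set of parameters needs to be stored

② State generalization: similar states can produce similar outputs

Drawbacks of tabular methods:

① The table can take up an enormous amount of memory

② When the table is very large, lookups become inefficient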
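A minimal sketch of the idea, assuming a linear approximator with one weight row per action, Q(s, a) ≈ W[a]·s, where s is a feature vector (the names `q_values` and `semi_gradient_q_step` are illustrative). Only W is stored, and one semi-gradient Q-learning step moves W[a] along s by the TD error. DQN replaces the linear model with a neural network but keeps the same kind of target.

```python
import numpy as np


def q_values(W, s):
    """Linear approximation: Q(s, a) = W[a] . s for every action a."""
    return W @ s


def semi_gradient_q_step(W, s, a, r, s_next, done, alpha=0.1, gamma=0.9):
    target = r if done else r + gamma * np.max(q_values(W, s_next))
    td_error = target - q_values(W, s)[a]
    W[a] += alpha * td_error * s  # the gradient of W[a] . s with respect to W[a] is s
    return td_error


W = np.zeros((2, 3))  # 2 actions x 3 state features: 6 parameters in total
s = np.array([1.0, 0.0, 0.5])
s_next = np.array([0.0, 1.0, 0.5])
err = semi_gradient_q_step(W, s, a=1, r=1.0, s_next=s_next, done=False)
```

After this single update, a similar state such as `[1.0, 0.0, 0.4]`, which was never visited, already gets a positive value for action 1 (0.12): that is the generalization a table cannot provide.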

(2) Relationship between DQN and Q-learning

2. The DQN algorithm explained

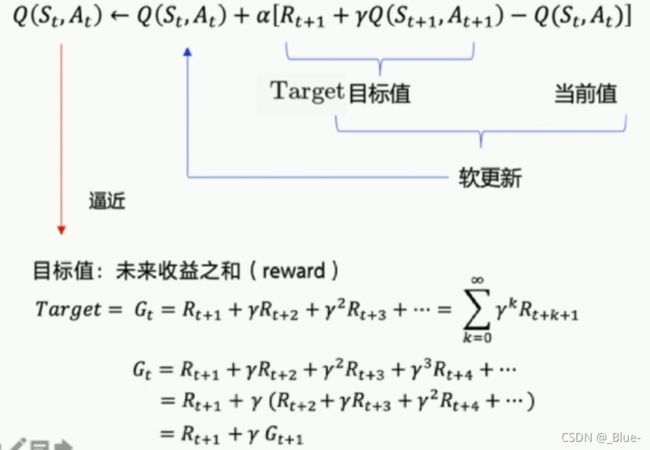

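(1) DQN's two key innovations

Use append() to store transitions in the replay memory, then sample() to draw data from it for training.

Experience replay: tackles sample correlation

- Breaks the correlation between consecutive samples

- Improves sample efficiency, since each transition can be reused

Fixed Q-target: the target value is computed with a separate target_model whose parameters are copied from the model only periodically, so the regression target does not move at every update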
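The two ideas meet in the target computation. In the NumPy sketch below, the made-up array `q_target_next` stands in for target_model's output on a sampled batch of next states, and the `(1 - done)` mask drops the bootstrap term for terminal transitions, mirroring `target = reward + (1.0 - terminal) * self.gamma * best_v` in algorithm.py below. The function name `dqn_targets` is illustrative.

```python
import numpy as np


def dqn_targets(rewards, q_target_next, dones, gamma=0.99):
    """y = r + (1 - done) * gamma * max_a' Q_target(s', a') for a batch."""
    best_next = q_target_next.max(axis=1)
    return rewards + (1.0 - dones) * gamma * best_next


rewards = np.array([1.0, 1.0, 0.0])
dones = np.array([0.0, 1.0, 0.0])
q_target_next = np.array([[0.5, 2.0],
                          [3.0, 1.0],
                          [-1.0, -0.5]])
y = dqn_targets(rewards, q_target_next, dones, gamma=0.9)
# y is about [1 + 0.9 * 2.0, 1.0 (terminal: no bootstrap), 0 + 0.9 * (-0.5)] = [2.8, 1.0, -0.45]
```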

(2) DQN flowchart

3. DQN: code

(1)model.py

```python
import parl
from parl import layers  # wraps the paddle.fluid.layers API


class Model(parl.Model):
    def __init__(self, act_dim):
        hid1_size = 128
        hid2_size = 128
        # 3-layer fully connected network
        self.fc1 = layers.fc(size=hid1_size, act='relu')
        self.fc2 = layers.fc(size=hid2_size, act='relu')
        self.fc3 = layers.fc(size=act_dim, act=None)

    def value(self, obs):
        h1 = self.fc1(obs)
        h2 = self.fc2(h1)
        Q = self.fc3(h2)
        return Q
```

(2)algorithm.py

```python
import copy
import paddle.fluid as fluid
import parl
from parl import layers


class DQN(parl.Algorithm):
    def __init__(self, model, act_dim=None, gamma=None, lr=None):
        """ DQN algorithm

        Args:
            model (parl.Model): forward network that defines the Q function
            act_dim (int): dimension of the action space, i.e. the number of actions
            gamma (float): reward discount factor
            lr (float): learning_rate
        """
        self.model = model
        self.target_model = copy.deepcopy(model)

        assert isinstance(act_dim, int)
        assert isinstance(gamma, float)
        assert isinstance(lr, float)
        self.act_dim = act_dim
        self.gamma = gamma
        self.lr = lr

    def predict(self, obs):
        """ Use the value network of self.model to get [Q(s,a1), Q(s,a2), ...]
        """
        return self.model.value(obs)

    def learn(self, obs, action, reward, next_obs, terminal):
        """ Update the value network of self.model with the DQN algorithm
        """
        # get max Q' from target_model, used to compute target_Q
        next_pred_value = self.target_model.value(next_obs)
        best_v = layers.reduce_max(next_pred_value, dim=1)
        best_v.stop_gradient = True  # stop gradients from flowing back
        terminal = layers.cast(terminal, dtype='float32')
        target = reward + (1.0 - terminal) * self.gamma * best_v

        pred_value = self.model.value(obs)  # predicted Q values
        # convert action to a one-hot vector, e.g. 3 => [0,0,0,1,0]
        action_onehot = layers.one_hot(action, self.act_dim)
        action_onehot = layers.cast(action_onehot, dtype='float32')
        # elementwise multiplication picks out Q(s,a) of the chosen action
        # e.g. pred_value = [[2.3, 5.7, 1.2, 3.9, 1.4]], action_onehot = [[0,0,0,1,0]]
        # ==> pred_action_value = [3.9]
        pred_action_value = layers.reduce_sum(
            layers.elementwise_mul(action_onehot, pred_value), dim=1)

        # loss = mean squared error between Q(s,a) and target_Q
        cost = layers.square_error_cost(pred_action_value, target)
        cost = layers.reduce_mean(cost)
        optimizer = fluid.optimizer.Adam(learning_rate=self.lr)  # Adam optimizer
        optimizer.minimize(cost)
        return cost

    def sync_target(self):
        """ Copy the parameters of self.model to self.target_model
        """
        self.model.sync_weights_to(self.target_model)
```

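Note:

```python
best_v.stop_gradient = True  # stop gradients from flowing back
```

The target uses target_model's output, but target_model's parameters must stay fixed during this update; this line cuts the gradient path into target_model.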
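The one-hot trick in `learn()` simply picks Q(s, a) for the action actually taken. In NumPy (used here only to illustrate; the PARL code has to stay inside the fluid graph), elementwise multiplication with a one-hot mask followed by a row sum gives the same result as fancy indexing:

```python
import numpy as np

pred_value = np.array([[2.3, 5.7, 1.2, 3.9, 1.4],
                       [0.1, 0.2, 0.3, 0.4, 0.5]])
action = np.array([3, 0])

action_onehot = np.eye(pred_value.shape[1])[action]  # 3 -> [0, 0, 0, 1, 0]
via_onehot = (action_onehot * pred_value).sum(axis=1)
via_index = pred_value[np.arange(len(action)), action]
print(via_onehot)  # [3.9 0.1]
```

The masked-sum form is used in the graph because it only needs elementwise and reduction ops, and the gradient then flows only into the Q value of the chosen action.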

(3)agent.py

```python
import numpy as np
import paddle.fluid as fluid
import parl
from parl import layers


class Agent(parl.Agent):
    def __init__(self,
                 algorithm,
                 obs_dim,
                 act_dim,
                 e_greed=0.1,
                 e_greed_decrement=0):
        assert isinstance(obs_dim, int)
        assert isinstance(act_dim, int)
        self.obs_dim = obs_dim
        self.act_dim = act_dim
        super(Agent, self).__init__(algorithm)

        self.global_step = 0
        self.update_target_steps = 200  # copy model's parameters to target_model every 200 training steps

        self.e_greed = e_greed  # probability of picking a random action (exploration)
        self.e_greed_decrement = e_greed_decrement  # exploration decays as training converges

    def build_program(self):
        self.pred_program = fluid.Program()
        self.learn_program = fluid.Program()

        with fluid.program_guard(self.pred_program):  # graph for predicting actions; define inputs and outputs
            obs = layers.data(
                name='obs', shape=[self.obs_dim], dtype='float32')
            self.value = self.alg.predict(obs)

        with fluid.program_guard(self.learn_program):  # graph for updating the Q network; define inputs and outputs
            obs = layers.data(
                name='obs', shape=[self.obs_dim], dtype='float32')
            action = layers.data(name='act', shape=[1], dtype='int32')
            reward = layers.data(name='reward', shape=[], dtype='float32')
            next_obs = layers.data(
                name='next_obs', shape=[self.obs_dim], dtype='float32')
            terminal = layers.data(name='terminal', shape=[], dtype='bool')
            self.cost = self.alg.learn(obs, action, reward, next_obs, terminal)

    def sample(self, obs):
        sample = np.random.rand()  # a random float in [0, 1)
        if sample < self.e_greed:
            act = np.random.randint(self.act_dim)  # explore: every action may be chosen
        else:
            act = self.predict(obs)  # choose the best action
        self.e_greed = max(
            0.01, self.e_greed - self.e_greed_decrement)  # exploration decays as training converges
        return act

    def predict(self, obs):  # choose the best action
        obs = np.expand_dims(obs, axis=0)
        pred_Q = self.fluid_executor.run(
            self.pred_program,
            feed={'obs': obs.astype('float32')},
            fetch_list=[self.value])[0]
        pred_Q = np.squeeze(pred_Q, axis=0)
        act = np.argmax(pred_Q)  # index of the largest Q, i.e. the corresponding action
        return act

    def learn(self, obs, act, reward, next_obs, terminal):
        # sync the parameters of model and target_model every 200 training steps
        if self.global_step % self.update_target_steps == 0:
            self.alg.sync_target()
        self.global_step += 1

        act = np.expand_dims(act, -1)
        feed = {
            'obs': obs.astype('float32'),
            'act': act.astype('int32'),
            'reward': reward,
            'next_obs': next_obs.astype('float32'),
            'terminal': terminal
        }
        cost = self.fluid_executor.run(
            self.learn_program, feed=feed, fetch_list=[self.cost])[0]  # train the network once
        return cost
```

(4) replay_memory.py

```python
import random
import collections
import numpy as np


class ReplayMemory(object):
    def __init__(self, max_size):
        self.buffer = collections.deque(maxlen=max_size)

    def append(self, exp):
        self.buffer.append(exp)

    def sample(self, batch_size):
        mini_batch = random.sample(self.buffer, batch_size)
        obs_batch, action_batch, reward_batch, next_obs_batch, done_batch = [], [], [], [], []

        for experience in mini_batch:
            s, a, r, s_p, done = experience
            obs_batch.append(s)
            action_batch.append(a)
            reward_batch.append(r)
            next_obs_batch.append(s_p)
            done_batch.append(done)

        return np.array(obs_batch).astype('float32'), \
            np.array(action_batch).astype('float32'), np.array(reward_batch).astype('float32'), \
            np.array(next_obs_batch).astype('float32'), np.array(done_batch).astype('float32')

    def __len__(self):
        return len(self.buffer)
```

(5)train.py

```python
import os
import gym
import numpy as np
import parl
from parl.utils import logger  # logging utility

from model import Model
from algorithm import DQN  # from parl.algorithms import DQN  # parl >= 1.3.1
from agent import Agent

from replay_memory import ReplayMemory

LEARN_FREQ = 5  # training frequency: no need to learn at every step; accumulate some new experience first, for efficiency
MEMORY_SIZE = 20000  # size of the replay memory; larger uses more memory
MEMORY_WARMUP_SIZE = 200  # experience to pre-store in replay_memory before sampling batches for the agent to learn from
BATCH_SIZE = 32  # number of samples per learn() call, drawn at random from the replay memory
LEARNING_RATE = 0.001  # learning rate
GAMMA = 0.99  # reward discount factor, typically between 0.9 and 0.999


# train one episode
def run_episode(env, agent, rpm):
    total_reward = 0
    obs = env.reset()
    step = 0
    while True:
        step += 1
        action = agent.sample(obs)  # sample an action; every action has some chance of being tried
        next_obs, reward, done, _ = env.step(action)
        rpm.append((obs, action, reward, next_obs, done))

        # train model
        if (len(rpm) > MEMORY_WARMUP_SIZE) and (step % LEARN_FREQ == 0):
            (batch_obs, batch_action, batch_reward, batch_next_obs,
             batch_done) = rpm.sample(BATCH_SIZE)
            train_loss = agent.learn(batch_obs, batch_action, batch_reward,
                                     batch_next_obs,
                                     batch_done)  # s,a,r,s',done

        total_reward += reward
        obs = next_obs
        if done:
            break
    return total_reward


# evaluate the agent: run 5 episodes and average the total reward
def evaluate(env, agent, render=False):
    eval_reward = []
    for i in range(5):
        obs = env.reset()
        episode_reward = 0
        while True:
            action = agent.predict(obs)  # predict the action: only the best action is chosen
            obs, reward, done, _ = env.step(action)
            episode_reward += reward
            if render:
                env.render()
            if done:
                break
        eval_reward.append(episode_reward)
    return np.mean(eval_reward)


def main():
    env = gym.make(
        'CartPole-v0'
    )  # CartPole-v0: expected reward > 180   MountainCar-v0 : expected reward > -120
    action_dim = env.action_space.n  # CartPole-v0: 2
    obs_shape = env.observation_space.shape  # CartPole-v0: (4,)

    rpm = ReplayMemory(MEMORY_SIZE)  # DQN replay memory

    # build the agent with the PARL framework
    model = Model(act_dim=action_dim)
    algorithm = DQN(model, act_dim=action_dim, gamma=GAMMA, lr=LEARNING_RATE)
    agent = Agent(
        algorithm,
        obs_dim=obs_shape[0],
        act_dim=action_dim,
        e_greed=0.1,  # probability of picking a random action (exploration)
        e_greed_decrement=1e-6)  # exploration decays as training converges

    # load the model
    # save_path = './dqn_model.ckpt'
    # agent.restore(save_path)

    # pre-fill the replay memory so that early training has enough sample variety
    while len(rpm) < MEMORY_WARMUP_SIZE:
        run_episode(env, agent, rpm)

    max_episode = 2000

    # start train
    episode = 0
    while episode < max_episode:  # train for max_episode episodes; test episodes are not counted
        # train part
        for i in range(0, 50):
            total_reward = run_episode(env, agent, rpm)
            episode += 1

        # test part
        eval_reward = evaluate(env, agent, render=True)  # render=True to watch the agent
        logger.info('episode:{} e_greed:{} Test reward:{}'.format(
            episode, agent.e_greed, eval_reward))

    # training is over; save the model
    save_path = './dqn_model.ckpt'
    agent.save(save_path)


if __name__ == '__main__':
    main()
```

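4. Common methods

(1) Common PARL APIs

- PARL provides a ready-made RL training framework, so algorithms can be reproduced quickly and are easy to modify and debug

- It implements commonly used APIs, so there is no need to reinvent the wheel:

- agent.save(): save the model

- agent.restore(): load the model

- model.sync_weights_to(): sync the current model's parameters to another model

- model.parameters(): returns a list with the names of all model parameters

- model.get_weights(): returns a list with all model parameters

- model.set_weights(): set the model parameters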

(2) Saving and loading models

```python
# load the model
save_path = './dqn_model.ckpt'
agent.restore(save_path)

# after training, save the model
save_path = './dqn_model.ckpt'
agent.save(save_path)
```

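(3) Logging

- parl.utils.logger: prints log lines that include the time, the source file and the line number, which makes it easy to track training time

```python
from parl.utils import logger  # logging utility

logger.info('episode:{} e_greed:{} Test reward:{}'.format(
    episode, agent.e_greed, eval_reward))
```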

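5. Summary

Lesson 4: Solving RL with policy gradients

- Policy approximation, policy gradient

- Practice: Policy Gradient

1. Value-based vs policy-based

(1) Policy-based methods represent the policy directly

(2) Stochastic policies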

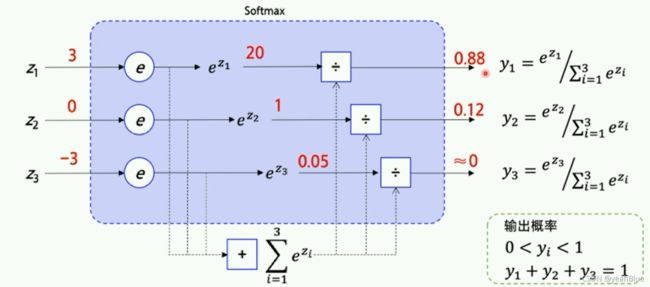

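(3) Stochastic policies: the softmax function

Softmax maps the outputs of several neurons into the interval (0, 1) so that they sum to 1. The outputs can therefore be read as probabilities, one per action.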
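A NumPy sketch of a softmax policy head (the logits are made-up numbers). Subtracting the maximum logit first avoids overflow without changing the result, the outputs sum to 1, and an action is then sampled from that distribution, which is what `np.random.choice(range(self.act_dim), p=act_prob)` does in the PG agent.py below.

```python
import numpy as np


def softmax(logits):
    z = logits - np.max(logits)  # numerical stability: softmax is shift-invariant
    e = np.exp(z)
    return e / e.sum()


logits = np.array([2.0, 1.0, 0.1])
probs = softmax(logits)                   # roughly [0.66, 0.24, 0.10]
rng = np.random.default_rng(0)
action = rng.choice(len(probs), p=probs)  # stochastic policy: sample an action
greedy_action = int(np.argmax(probs))     # what predict() uses at test time
```

Sampling keeps exploration built into the policy itself, so the PG agent needs no separate ε-greedy step during training.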

(4) Trajectories

(5) Expected return

(6) Optimizing the policy function

(7) Monte Carlo (MC) vs temporal difference (TD)

2. Flowchart

3. PG: code

(1)model.py

```python
import parl
from parl import layers


class Model(parl.Model):
    def __init__(self, act_dim):
        hid1_size = act_dim * 10

        self.fc1 = layers.fc(size=hid1_size, act='tanh')
        self.fc2 = layers.fc(size=act_dim, act='softmax')

    def forward(self, obs):  # can be called directly: model = Model(5); model(obs)
        out = self.fc1(obs)
        out = self.fc2(out)
        return out
```

(2)algorithm.py

```python
import paddle.fluid as fluid
import parl
from parl import layers


class PolicyGradient(parl.Algorithm):
    def __init__(self, model, lr=None):
        """ Policy Gradient algorithm

        Args:
            model (parl.Model): forward network of the policy.
            lr (float): learning rate.
        """
        self.model = model
        assert isinstance(lr, float)
        self.lr = lr

    def predict(self, obs):
        """ Use the policy model to predict action probabilities
        """
        return self.model(obs)

    def learn(self, obs, action, reward):
        """ Update the policy model with the policy gradient algorithm
        """
        act_prob = self.model(obs)  # action probabilities
        # log_prob = layers.cross_entropy(act_prob, action)  # cross-entropy
        # -log pi(a|s) of the chosen action
        log_prob = layers.reduce_sum(
            -1.0 * layers.log(act_prob) * layers.one_hot(
                action, act_prob.shape[1]),
            dim=1)
        cost = log_prob * reward
        cost = layers.reduce_mean(cost)

        optimizer = fluid.optimizer.Adam(self.lr)
        optimizer.minimize(cost)
        return cost
```

(3)agent.py

```python
import numpy as np
import paddle.fluid as fluid
import parl
from parl import layers


class Agent(parl.Agent):
    def __init__(self, algorithm, obs_dim, act_dim):
        self.obs_dim = obs_dim
        self.act_dim = act_dim
        super(Agent, self).__init__(algorithm)

    def build_program(self):
        self.pred_program = fluid.Program()
        self.learn_program = fluid.Program()

        with fluid.program_guard(self.pred_program):  # graph for predicting actions; define inputs and outputs
            obs = layers.data(
                name='obs', shape=[self.obs_dim], dtype='float32')
            self.act_prob = self.alg.predict(obs)

        with fluid.program_guard(
                self.learn_program):  # graph for updating the policy network; define inputs and outputs
            obs = layers.data(
                name='obs', shape=[self.obs_dim], dtype='float32')
            act = layers.data(name='act', shape=[1], dtype='int64')
            reward = layers.data(name='reward', shape=[], dtype='float32')
            self.cost = self.alg.learn(obs, act, reward)

    def sample(self, obs):
        obs = np.expand_dims(obs, axis=0)  # add a batch dimension
        act_prob = self.fluid_executor.run(
            self.pred_program,
            feed={'obs': obs.astype('float32')},
            fetch_list=[self.act_prob])[0]
        act_prob = np.squeeze(act_prob, axis=0)  # remove the batch dimension
        act = np.random.choice(range(self.act_dim), p=act_prob)  # sample an action according to its probability
        return act

    def predict(self, obs):
        obs = np.expand_dims(obs, axis=0)
        act_prob = self.fluid_executor.run(
            self.pred_program,
            feed={'obs': obs.astype('float32')},
            fetch_list=[self.act_prob])[0]
        act_prob = np.squeeze(act_prob, axis=0)
        act = np.argmax(act_prob)  # choose the action with the highest probability
        return act

    def learn(self, obs, act, reward):
        act = np.expand_dims(act, axis=-1)
        feed = {
            'obs': obs.astype('float32'),
            'act': act.astype('int64'),
            'reward': reward.astype('float32')
        }
        cost = self.fluid_executor.run(
            self.learn_program, feed=feed, fetch_list=[self.cost])[0]
        return cost
```

(4)train.py

```python
import os
import gym
import numpy as np
import parl

from agent import Agent
from model import Model
from algorithm import PolicyGradient  # from parl.algorithms import PolicyGradient

from parl.utils import logger

LEARNING_RATE = 1e-3


# train one episode
def run_episode(env, agent):
    obs_list, action_list, reward_list = [], [], []
    obs = env.reset()
    while True:
        obs_list.append(obs)
        action = agent.sample(obs)
        action_list.append(action)

        obs, reward, done, info = env.step(action)
        reward_list.append(reward)

        if done:
            break
    return obs_list, action_list, reward_list


# evaluate the agent: run 5 episodes and average the total reward
def evaluate(env, agent, render=False):
    eval_reward = []
    for i in range(5):
        obs = env.reset()
        episode_reward = 0
        while True:
            action = agent.predict(obs)
            obs, reward, isOver, _ = env.step(action)
            episode_reward += reward
            if render:
                env.render()
            if isOver:
                break
        eval_reward.append(episode_reward)
    return np.mean(eval_reward)


def calc_reward_to_go(reward_list, gamma=1.0):
    for i in range(len(reward_list) - 2, -1, -1):
        # G_i = r_i + γ·G_i+1
        reward_list[i] += gamma * reward_list[i + 1]  # Gt
    return np.array(reward_list)


def main():
    env = gym.make('CartPole-v0')
    # env = env.unwrapped  # removes the TimeLimit wrapper (no 200-step episode cap)
    obs_dim = env.observation_space.shape[0]
    act_dim = env.action_space.n
    logger.info('obs_dim {}, act_dim {}'.format(obs_dim, act_dim))

    # build the agent with the PARL framework
    model = Model(act_dim=act_dim)
    alg = PolicyGradient(model, lr=LEARNING_RATE)
    agent = Agent(alg, obs_dim=obs_dim, act_dim=act_dim)

    # load the model
    # if os.path.exists('./model.ckpt'):
    #     agent.restore('./model.ckpt')
    #     evaluate(env, agent, render=True)
    #     exit()

    for i in range(1000):
        obs_list, action_list, reward_list = run_episode(env, agent)
        if i % 10 == 0:
            logger.info("Episode {}, Reward Sum {}.".format(
                i, sum(reward_list)))

        batch_obs = np.array(obs_list)
        batch_action = np.array(action_list)
        batch_reward = calc_reward_to_go(reward_list)

        agent.learn(batch_obs, batch_action, batch_reward)
        if (i + 1) % 100 == 0:
            total_reward = evaluate(env, agent, render=True)
            logger.info('Test reward: {}'.format(total_reward))

    # save the parameters to ./model.ckpt
    agent.save('./model.ckpt')


if __name__ == '__main__':
    main()
```
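`calc_reward_to_go` walks the reward list backwards and applies G_i = r_i + γ·G_{i+1}. With the default gamma=1.0, each step's weight is simply the sum of all rewards from that step to the end of the episode. A standalone copy of the function from train.py above, with a quick check:

```python
import numpy as np


def calc_reward_to_go(reward_list, gamma=1.0):
    # same logic as in train.py: G_i = r_i + gamma * G_{i+1}, computed back to front
    for i in range(len(reward_list) - 2, -1, -1):
        reward_list[i] += gamma * reward_list[i + 1]
    return np.array(reward_list)


print(calc_reward_to_go([1.0, 1.0, 1.0]))             # [3. 2. 1.]
print(calc_reward_to_go([1.0, 1.0, 1.0], gamma=0.9))  # G_2 = 1, G_1 = 1.9, G_0 = 2.71
```

The function modifies `reward_list` in place, so the episode's reward sum has to be logged before calling it, which is the order used in `main()`.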

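4. Summary

Lesson 5: Solving RL in continuous action spaces

- Practice: DDPG

1. Discrete vs continuous actions

2. DDPG (Deep Deterministic Policy Gradient)

(1) DDPG architecture

(2) From DQN to DDPG

- DDPG is an extension of DQN that works in continuous action spaces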
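The practical difference shows up at action-selection time. A DQN takes the index of the largest Q value over a finite set of actions, which is not possible over an infinite set of real-valued actions. A DDPG actor outputs the action itself (here a tanh output in [-1, 1]); exploration then adds Gaussian noise and clips, as `run_episode` in the DDPG train.py below does with `np.clip(np.random.normal(action, NOISE), -1.0, 1.0)`. All numbers are made up:

```python
import numpy as np

rng = np.random.default_rng(0)

# DQN (discrete): one Q value per action, pick the argmax index
q_values = np.array([0.2, 1.3, -0.4])
dqn_action = int(np.argmax(q_values))  # action 1

# DDPG (continuous): the actor outputs the action directly, e.g. tanh(x) in [-1, 1]
actor_output = np.tanh(np.array([0.8]))
NOISE = 0.05  # standard deviation of the exploration noise (same name as in train.py)
explore_action = np.clip(rng.normal(actor_output, NOISE), -1.0, 1.0)
```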

(3) Actor-Critic architecture (actor and critic)

(4) Target network + experience replay (ReplayMemory)


3. PARL DDPG

4. DDPG: code

(1)model.py

```python
import paddle.fluid as fluid
import parl
from parl import layers


class Model(parl.Model):
    def __init__(self, act_dim):
        self.actor_model = ActorModel(act_dim)
        self.critic_model = CriticModel()

    def policy(self, obs):
        return self.actor_model.policy(obs)

    def value(self, obs, act):
        return self.critic_model.value(obs, act)

    def get_actor_params(self):
        return self.actor_model.parameters()  # returns a list with the names of all actor parameters


class ActorModel(parl.Model):
    def __init__(self, act_dim):
        hid_size = 100

        self.fc1 = layers.fc(size=hid_size, act='relu')
        self.fc2 = layers.fc(size=act_dim, act='tanh')

    def policy(self, obs):
        hid = self.fc1(obs)
        means = self.fc2(hid)
        return means


class CriticModel(parl.Model):
    def __init__(self):
        hid_size = 100

        self.fc1 = layers.fc(size=hid_size, act='relu')
        self.fc2 = layers.fc(size=1, act=None)

    def value(self, obs, act):
        concat = layers.concat([obs, act], axis=1)
        hid = self.fc1(concat)
        Q = self.fc2(hid)
        Q = layers.squeeze(Q, axes=[1])
        return Q
```

(2)algorithm.py

```python
import parl
from parl import layers
from copy import deepcopy
from paddle import fluid


class DDPG(parl.Algorithm):
    def __init__(self,
                 model,
                 gamma=None,
                 tau=None,
                 actor_lr=None,
                 critic_lr=None):
        """ DDPG algorithm

        Args:
            model (parl.Model): forward network of the actor and the critic.
                                model must implement get_actor_params().
            gamma (float): reward discount factor.
            tau (float): soft-update coefficient used when syncing self.target_model with self.model
            actor_lr (float): learning rate of the actor
            critic_lr (float): learning rate of the critic
        """
        assert isinstance(gamma, float)
        assert isinstance(tau, float)
        assert isinstance(actor_lr, float)
        assert isinstance(critic_lr, float)
        self.gamma = gamma
        self.tau = tau
        self.actor_lr = actor_lr
        self.critic_lr = critic_lr

        self.model = model
        self.target_model = deepcopy(model)

    def predict(self, obs):
        """ Use the actor model of self.model to predict an action
        """
        return self.model.policy(obs)

    def learn(self, obs, action, reward, next_obs, terminal):
        """ Update the actor and the critic with the DDPG algorithm
        """
        actor_cost = self._actor_learn(obs)
        critic_cost = self._critic_learn(obs, action, reward, next_obs,
                                         terminal)
        return actor_cost, critic_cost

    def _actor_learn(self, obs):
        action = self.model.policy(obs)
        Q = self.model.value(obs, action)
        cost = layers.reduce_mean(-1.0 * Q)  # the actor's cost is computed with the critic, but only the actor's own parameters may be updated
        optimizer = fluid.optimizer.AdamOptimizer(self.actor_lr)
        optimizer.minimize(cost, parameter_list=self.model.get_actor_params())  # update only the actor network's parameters
        return cost

    def _critic_learn(self, obs, action, reward, next_obs, terminal):
        next_action = self.target_model.policy(next_obs)
        next_Q = self.target_model.value(next_obs, next_action)

        terminal = layers.cast(terminal, dtype='float32')
        target_Q = reward + (1.0 - terminal) * self.gamma * next_Q
        target_Q.stop_gradient = True  # keep the optimizer from updating the target network when minimizing cost

        Q = self.model.value(obs, action)
        cost = layers.square_error_cost(Q, target_Q)
        cost = layers.reduce_mean(cost)
        optimizer = fluid.optimizer.AdamOptimizer(self.critic_lr)
        optimizer.minimize(cost)
        return cost

    def sync_target(self, decay=None, share_vars_parallel_executor=None):
        """ Copy parameters from self.model into self.target_model;
            when decay is None, decay = 1 - tau is used, i.e. a soft update
        """
        if decay is None:
            decay = 1.0 - self.tau
        self.model.sync_weights_to(
            self.target_model,
            decay=decay,
            share_vars_parallel_executor=share_vars_parallel_executor)
```
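With `decay = 1 - tau`, each call blends the target parameters toward the online ones. A NumPy sketch of that soft update, assuming `sync_weights_to` computes target ← decay·target + (1 - decay)·model (this is how the PARL 1.x docstring describes the `decay` argument; treat it as an assumption). The helper name `soft_update` and the weight arrays are made up:

```python
import numpy as np


def soft_update(target_params, params, tau):
    """target <- (1 - tau) * target + tau * params, i.e. decay = 1 - tau."""
    decay = 1.0 - tau
    return [decay * t + (1.0 - decay) * p for t, p in zip(target_params, params)]


params = [np.array([1.0, 2.0])]
target = [np.array([0.0, 0.0])]
for _ in range(3):
    target = soft_update(target, params, tau=0.5)
# after 3 updates with tau=0.5: target = params * (1 - 0.5**3) = [0.875, 1.75]
```

With tau = 1 the update becomes a hard copy, like DQN's periodic `sync_target()`; with the small TAU = 0.001 used in train.py below, the target network trails the online network slowly, which keeps the critic's target stable.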

(3)train.py

```python
import os
import gym
import numpy as np
import parl
from parl.utils import logger

from agent import Agent
from model import Model
from algorithm import DDPG  # from parl.algorithms import DDPG
from env import ContinuousCartPoleEnv
from replay_memory import ReplayMemory

ACTOR_LR = 1e-3  # learning rate of the Actor network
CRITIC_LR = 1e-3  # learning rate of the Critic network
GAMMA = 0.99  # reward discount factor
TAU = 0.001  # soft-update coefficient
MEMORY_SIZE = int(1e4)  # replay memory size
MEMORY_WARMUP_SIZE = MEMORY_SIZE // 20  # pre-store some experience before training starts
BATCH_SIZE = 128
REWARD_SCALE = 0.1  # reward scaling factor
NOISE = 0.05  # standard deviation of the Gaussian action noise
TRAIN_EPISODE = 6e3  # total number of training episodes


# train one episode
def run_episode(agent, env, rpm):
    obs = env.reset()
    total_reward = 0
    steps = 0
    allCost = []  # critic_cost of every training step in this episode
    while True:
        steps += 1
        batch_obs = np.expand_dims(obs, axis=0)
        action = agent.predict(batch_obs.astype('float32'))

        # add exploration noise (Gaussian) and clip the output to [-1.0, 1.0]
        action = np.clip(np.random.normal(action, NOISE), -1.0, 1.0)

        next_obs, reward, done, info = env.step(action)

        action = [action]  # makes it easier to store in the replay memory
        rpm.append((obs, action, REWARD_SCALE * reward, next_obs, done))

        if len(rpm) > MEMORY_WARMUP_SIZE and (steps % 5) == 0:
            (batch_obs, batch_action, batch_reward, batch_next_obs,
             batch_done) = rpm.sample(BATCH_SIZE)
            critic_cost = agent.learn(batch_obs, batch_action, batch_reward,
                                      batch_next_obs, batch_done)
            allCost.append(critic_cost)

        obs = next_obs
        total_reward += reward

        if done or steps >= 200:
            break
    return total_reward, allCost


# evaluate the agent: run 5 episodes and average the total reward
def evaluate(env, agent, render=False):
    eval_reward = []
    for i in range(5):
        obs = env.reset()
        total_reward = 0
        steps = 0
        while True:
            batch_obs = np.expand_dims(obs, axis=0)
            action = agent.predict(batch_obs.astype('float32'))
            action = np.clip(action, -1.0, 1.0)

            steps += 1
            next_obs, reward, done, info = env.step(action)

            obs = next_obs
            total_reward += reward

            if render:
                env.render()
            if done or steps >= 200:
                break
        eval_reward.append(total_reward)
    return np.mean(eval_reward)


def main():
    env = ContinuousCartPoleEnv()

    obs_dim = env.observation_space.shape[0]
    act_dim = env.action_space.shape[0]
    record = {'cost': []}
    print("obs_dim:", obs_dim, " act_dim:", act_dim)

    # create the agent with the PARL framework
    model = Model(act_dim)
    algorithm = DDPG(
        model, gamma=GAMMA, tau=TAU, actor_lr=ACTOR_LR, critic_lr=CRITIC_LR)
    agent = Agent(algorithm, obs_dim, act_dim)

    # create the replay memory
    rpm = ReplayMemory(MEMORY_SIZE)
    # pre-fill the replay memory
    while len(rpm) < MEMORY_WARMUP_SIZE:
        run_episode(agent, env, rpm)

    episode = 0
    while episode < TRAIN_EPISODE:
        for i in range(50):
            total_reward, episodeCost = run_episode(agent, env, rpm)
            # flatten: episodes run different numbers of training steps
            record['cost'].extend(np.ravel(episodeCost))
            episode += 1

        eval_reward = evaluate(env, agent, render=False)
        logger.info('episode:{} Test reward:{}'.format(
            episode, eval_reward))

    SaveDictCoverList(record)
    print("Success!")


def SaveDictCoverList(record_dict):
    # save each list in the dict to logs/<key>.txt
    os.makedirs("logs", exist_ok=True)
    for key in record_dict:
        invertArray = np.asarray(record_dict[key])
        np.savetxt(os.path.join("logs", "%s.txt" % key), invertArray)


if __name__ == '__main__':
    main()
```