1. Imports

# Imports
import collections
import numpy as np
import math
import os
import shutil
import pandas as pd
import torch
import torchvision
import matplotlib.pyplot as plt
from torch import nn
import torch.nn.functional as F

2. Organize the dataset

# Organize the dataset
def read_csv_labels(fname):
    """Read fname and return a dict that maps each file name to its label."""
    with open(fname, 'r') as f:
        # Skip the header line
        lines = f.readlines()[1:]
    tokens = [l.rstrip().split(',') for l in lines]
    return dict(((name, label) for name, label in tokens))

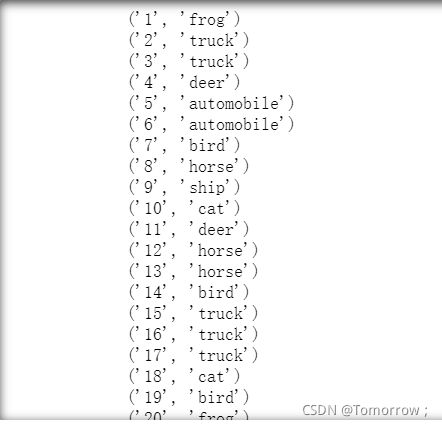

labels = read_csv_labels('../CIFAR10 Image-Classification/trainLabels.csv')

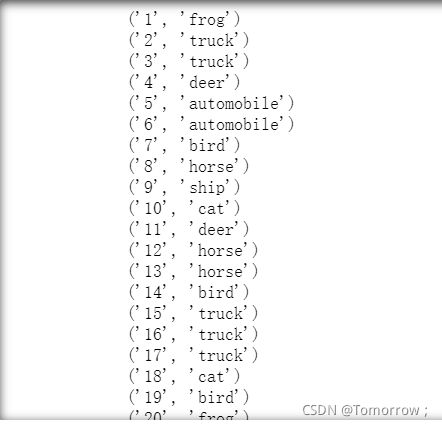

for item in labels.items():
    print(item)
print('Number of classes:', len(set(labels.values())))

What the organized labels look like.
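To check the label parsing without downloading the Kaggle files, here is a minimal sketch. It assumes a tiny in-memory CSV with the same two-column `id,label` layout as `trainLabels.csv`; the rows and the helper `read_labels_from_text` are made up for illustration. It uses the standard `csv` module instead of splitting on commas by hand, and `collections.Counter` to count images per class. `most_common()[-1]` is the smallest class, which is the value `reorg_train_valid` uses to size the validation set.

```python
import collections
import csv
import io

# Hypothetical sample in the same two-column layout as trainLabels.csv
SAMPLE_CSV = """id,label
1,frog
2,truck
3,truck
4,deer
5,frog
"""

def read_labels_from_text(text):
    """Return {file_name: label}, skipping the header row (hypothetical helper)."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header line
    return {name: label for name, label in reader}

labels_demo = read_labels_from_text(SAMPLE_CSV)
print(labels_demo)
print('Number of classes:', len(set(labels_demo.values())))
# Classes ordered from most to least frequent; the last entry is the smallest class
print(collections.Counter(labels_demo.values()).most_common())
```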

3. Split a validation set off from the original training set

# Split a validation set off from the original training set
data_dir = '../CIFAR10 Image-Classification/'

def copyfile(filename, target_dir):
    """Copy a file into the target directory, creating the directory if needed."""
    os.makedirs(target_dir, exist_ok=True)
    shutil.copy(filename, target_dir)

def reorg_train_valid(data_dir, labels, valid_ratio):
    # Number of images in the smallest class
    n = collections.Counter(labels.values()).most_common()[-1][1]
    # Number of validation images to take from each class
    n_valid_per_label = max(1, math.floor(n * valid_ratio))
    label_count = {}
    for train_file in os.listdir(os.path.join(data_dir, 'train')):
        label = labels[train_file.split('.')[0]]
        fname = os.path.join(data_dir, 'train', train_file)
        # Every image goes into train_valid (train + valid combined)
        copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                     'train_valid', label))
        if label not in label_count or label_count[label] < n_valid_per_label:
            # The first n_valid_per_label images of each class go into valid
            copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                         'valid', label))
            label_count[label] = label_count.get(label, 0) + 1
        else:
            # All remaining images go into train
            copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                         'train', label))
    return n_valid_per_label
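The split rule in `reorg_train_valid` can be checked without copying any files. The sketch below uses a hypothetical helper `plan_split` and made-up file names; it applies the same rule but only records which split each file would go to. One difference: it walks the files in sorted order so the demo is deterministic, whereas the original follows whatever order `os.listdir` returns. With CIFAR-10's 5,000 training images per class and `valid_ratio = 0.1`, the same rule gives 500 validation images per class, i.e. 5,000 images in `valid` and 45,000 in `train`.

```python
import collections
import math

def plan_split(labels, valid_ratio):
    """Assign each file to 'valid' or 'train' with the same rule as
    reorg_train_valid, but return a dict instead of copying files
    (hypothetical helper for illustration)."""
    # Size of the smallest class
    n = collections.Counter(labels.values()).most_common()[-1][1]
    n_valid_per_label = max(1, math.floor(n * valid_ratio))
    label_count = {}
    split = {}
    for name in sorted(labels):  # sorted only to make the demo deterministic
        label = labels[name]
        if label_count.get(label, 0) < n_valid_per_label:
            split[name] = 'valid'
            label_count[label] = label_count.get(label, 0) + 1
        else:
            split[name] = 'train'
    return n_valid_per_label, split

# 20 made-up files: 10 'cat' and 10 'dog'
demo = {str(i): ('cat' if i % 2 else 'dog') for i in range(1, 21)}
n_valid, split = plan_split(demo, valid_ratio=0.1)
print(n_valid)
print(collections.Counter(split.values()))
```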

4. Organize the test set so it is easy to read during prediction

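def reorg_test(data_dir):
    # ImageFolder expects one subfolder per class, so all unlabeled test
    # images go into a single placeholder class folder named 'unknown'
    for test_file in os.listdir(os.path.join(data_dir, 'test')):
        copyfile(os.path.join(data_dir, 'test', test_file),
                 os.path.join(data_dir, 'train_valid_test', 'test',
                              'unknown'))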

5. Call the functions defined above

def reorg_cifar10_data(data_dir, valid_ratio):
    labels = read_csv_labels(os.path.join(data_dir, 'trainLabels.csv'))
    reorg_train_valid(data_dir, labels, valid_ratio)
    reorg_test(data_dir)

valid_ratio = 0.1
reorg_cifar10_data(data_dir, valid_ratio)

6. Image augmentation

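transform_train = torchvision.transforms.Compose([
    # Upscale the 32x32 image to 40x40
    torchvision.transforms.Resize(40),
    # Randomly crop a square covering 64% to 100% of the area, then resize it to 32x32
    torchvision.transforms.RandomResizedCrop(32, scale=(0.64, 1.0),
                                             ratio=(1.0, 1.0)),
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    # Normalize each RGB channel with its mean and standard deviation
    torchvision.transforms.Normalize([0.4914, 0.4822, 0.4465],
                                     [0.2023, 0.1994, 0.2010])])

# No random augmentation at test time: only convert to a tensor and normalize
transform_test = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor(),
    torchvision.transforms.Normalize([0.4914, 0.4822, 0.4465],
                                     [0.2023, 0.1994, 0.2010])])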
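The numeric parameters in `transform_train` are easy to misread, so here is a small worked example in plain Python, independent of torchvision. `Resize(40)` turns each 32x32 image into 40x40. In `RandomResizedCrop`, `scale=(0.64, 1.0)` is the fraction of the area kept and `ratio=(1.0, 1.0)` forces a square crop, so the crop side ranges from 40 * sqrt(0.64) = 32 to 40 pixels before it is resized back to 32x32. `Normalize` then computes `(x - mean) / std` separately for each channel; the helper `normalize_pixel` is hypothetical and only mirrors that formula.

```python
import math

# Resize(40) turns a 32x32 CIFAR-10 image into 40x40
RESIZED = 40
# With a square crop (ratio = 1), side = RESIZED * sqrt(area fraction)
min_side = RESIZED * math.sqrt(0.64)
max_side = RESIZED * math.sqrt(1.0)
print(min_side, max_side)

# Per-channel statistics used by Normalize in the transforms above
MEAN = [0.4914, 0.4822, 0.4465]
STD = [0.2023, 0.1994, 0.2010]

def normalize_pixel(rgb):
    """Apply (x - mean) / std to one RGB pixel whose values are in [0, 1]."""
    return [(x - m) / s for x, m, s in zip(rgb, MEAN, STD)]

print(normalize_pixel(MEAN))             # the mean color maps to 0 in every channel
print(normalize_pixel([1.0, 1.0, 1.0]))  # a white pixel
```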

6. Read the datasets made up of the original image files

# Read the datasets made up of the original image files
train_ds, train_valid_ds = [torchvision.datasets.ImageFolder(
    os.path.join(data_dir, 'train_valid_test', folder),
    transform=transform_train) for folder in ['train', 'train_valid']]

valid_ds, test_ds = [torchvision.datasets.ImageFolder(
    os.path.join(data_dir, 'train_valid_test', folder),
    transform=transform_test) for folder in ['valid', 'test']]
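`ImageFolder` treats each subfolder of its root as one class and assigns class indices in sorted (alphabetical) order of the folder names. This is why the reorganization step creates one folder per label, and why the test images sit in a single `unknown` folder: every test image simply gets class index 0. The sketch below is an illustrative re-implementation of that folder-to-index rule with a hypothetical helper `find_classes` and a made-up directory layout in a temporary folder; it is not torchvision's own code.

```python
import os
import tempfile

def find_classes(root):
    """Mimic ImageFolder's class discovery: one class per subfolder,
    indices assigned in sorted order (hypothetical helper)."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return classes, {name: i for i, name in enumerate(classes)}

with tempfile.TemporaryDirectory() as root:
    # Made-up mini layout mirroring train_valid_test/train/<label>/
    for label in ['truck', 'airplane', 'frog']:
        os.makedirs(os.path.join(root, 'train', label))
    # The test split holds a single placeholder class, as reorg_test creates
    os.makedirs(os.path.join(root, 'test', 'unknown'))

    train_classes, train_map = find_classes(os.path.join(root, 'train'))
    test_classes, test_map = find_classes(os.path.join(root, 'test'))

print(train_map)
print(test_map)
```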

7. Build the DataLoaders

train_loader, train_valid_loader = [torch.utils.data.DataLoader(
    dataset, batch_size=100, shuffle=True, drop_last=True, num_workers=2)
    for dataset in (train_ds, train_valid_ds)]

# drop_last=False so that no validation image is skipped
valid_loader = torch.utils.data.DataLoader(valid_ds, batch_size=10, shuffle=False,
                                           drop_last=False, num_workers=2)

test_loader = torch.utils.data.DataLoader(test_ds, batch_size=100, shuffle=False,
                                          drop_last=False, num_workers=2)
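The number of batches per epoch, which is also `len(loader)` and what the training loop divides the summed loss by, follows directly from `batch_size` and `drop_last`: with `drop_last=True` the incomplete last batch is discarded, otherwise it is kept. A minimal sketch with a hypothetical helper `num_batches`, assuming the split sizes implied by `valid_ratio = 0.1` on 50,000 training images (45,000 train, 5,000 valid):

```python
import math

def num_batches(num_samples, batch_size, drop_last):
    """Batches per epoch for a DataLoader with the default sampler (hypothetical helper)."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)

print(num_batches(45000, 100, drop_last=True))  # train_loader
print(num_batches(50000, 100, drop_last=True))  # train_valid_loader
print(num_batches(5000, 10, drop_last=False))   # valid_loader
# drop_last only matters when the dataset size is not a multiple of batch_size
print(num_batches(5003, 10, drop_last=True), num_batches(5003, 10, drop_last=False))
```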

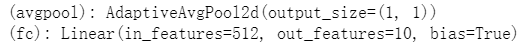

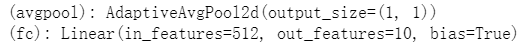

8. Define the model

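from torchvision import models

# ResNet-18 with ImageNet-pretrained weights
# (torchvision >= 0.13 prefers weights=models.ResNet18_Weights.DEFAULT over pretrained=True)
net = models.resnet18(pretrained=True)
# Replace the 1000-class ImageNet head with a 10-class head for CIFAR-10
net.fc = torch.nn.Linear(512, 10)
print(net)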

The modified fully connected layer.
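Why `Linear(512, 10)`: ResNet-18's last stage outputs 512 channels, and the global average pool collapses the spatial dimensions, so the classifier always sees 512 features. The sketch below traces only the spatial size through ResNet-18's downsampling steps (the 7x7 stride-2 `conv1`, the 3x3 stride-2 max pool, and the first stride-2 3x3 conv of `layer2`, `layer3` and `layer4`; `layer1` keeps the size) using the standard output-size formula. The helper names are hypothetical. It shows that a 224x224 ImageNet image reaches `layer4` as 7x7, while a 32x32 CIFAR-10 image is already down to 1x1.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def resnet18_feature_size(size):
    """Trace the spatial size through ResNet-18's downsampling steps (hypothetical helper)."""
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    for _ in range(3):                                     # layer2, layer3, layer4
        size = conv_out(size, kernel=3, stride=2, padding=1)
    return size

print(resnet18_feature_size(224))  # ImageNet-sized input
print(resnet18_feature_size(32))   # CIFAR-10 input
```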

9. Weight initialization

import torch
import torch.nn.init as init

# Re-initialize only the new fc layer's weights with Kaiming uniform initialization
for name, module in net.named_children():
    if name == 'fc':
        init.kaiming_uniform_(module.weight, a=0, mode='fan_in')
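For reference, `kaiming_uniform_` with `mode='fan_in'` and its default `nonlinearity='leaky_relu'` samples from U(-b, b) with gain = sqrt(2 / (1 + a^2)) and b = gain * sqrt(3 / fan_in). For `net.fc.weight`, whose shape is (10, 512), fan_in is 512, so with `a=0` the bound is sqrt(6 / 512), about 0.108. Only the weights are re-initialized here; the bias keeps the default initialization from `nn.Linear`. A plain-Python check of the formula (the helper name is hypothetical):

```python
import math

def kaiming_uniform_bound(fan_in, a=0.0):
    """Bound b of U(-b, b) for kaiming_uniform_ with mode='fan_in' and
    nonlinearity='leaky_relu' (hypothetical helper)."""
    gain = math.sqrt(2.0 / (1 + a ** 2))
    return gain * math.sqrt(3.0 / fan_in)

# net.fc.weight has shape (10, 512), so fan_in = 512
bound = kaiming_uniform_bound(512)
print(round(bound, 4))
```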

10. Use the GPU

devices = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(devices)

11. Define the optimizer

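optimizer = torch.optim.SGD(net.parameters(), lr=1e-1, momentum=0.9, weight_decay=5e-4)
# Multiply the learning rate by 0.65 every 5 epochs; this only takes effect
# if StepLR.step() is called once per epoch in the training loop
StepLR = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.65)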
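StepLR's schedule has a simple closed form: lr = base_lr * gamma ** (epoch // step_size), where "epoch" is the number of times `StepLR.step()` has been called. With `lr=0.1`, `step_size=5` and `gamma=0.65`, the 10 epochs in this post would run at 0.1 for epochs 0 to 4 and 0.065 for epochs 5 to 9, provided `step()` is called once per epoch. A plain-Python sketch of that formula (the helper `step_lr` is hypothetical):

```python
def step_lr(base_lr, epoch, step_size=5, gamma=0.65):
    """Closed form of StepLR: base_lr * gamma ** (epoch // step_size)."""
    return base_lr * gamma ** (epoch // step_size)

for epoch in range(10):
    print(epoch, step_lr(0.1, epoch))
```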

12. Define the accuracy function

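import torch

def accuracy(pred, target):
    """Return (number of correct predictions, number of predictions) for one batch."""
    pred_label = torch.argmax(pred, 1)
    # .item() turns the count into a plain Python number, so the accumulated
    # accuracies can be plotted with matplotlib even when training on the GPU
    correct = (pred_label == target).sum().item()
    return correct, len(pred)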
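The same counting logic in plain Python, to make the argmax step concrete: each row of logits is one image, the predicted class is the index of the largest logit, and the function returns the number of matches together with the batch size. Summing both numbers over all batches and dividing at the end gives the epoch accuracy, which is what the training loop does. The helper `batch_accuracy` and the sample logits are made up for illustration.

```python
def batch_accuracy(logits, targets):
    """Pure-Python counterpart of accuracy(): argmax each row, count matches."""
    preds = [max(range(len(row)), key=row.__getitem__) for row in logits]
    correct = sum(int(p == t) for p, t in zip(preds, targets))
    return correct, len(logits)

logits = [[2.0, 0.1, -1.0],   # predicted class 0
          [0.3, 0.2, 0.9],    # predicted class 2
          [0.0, 1.5, 0.2]]    # predicted class 1
targets = [0, 1, 1]
correct, total = batch_accuracy(logits, targets)
print(correct, total, correct / total)
```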

13. Define the loss function

celoss = nn.CrossEntropyLoss()

best_acc = 0
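`nn.CrossEntropyLoss` takes raw logits and computes -log(softmax(logits)[target]) for each image, averaged over the batch by default (`reduction='mean'`). A plain-Python sketch of that formula, using the log-sum-exp trick for numerical stability; the helper names are hypothetical. One useful reference point when reading the loss curve: a model that outputs equal logits for all 10 classes has loss ln(10), about 2.30.

```python
import math

def cross_entropy(logits, target):
    """Loss for one sample: log(sum(exp(z))) - z[target], computed stably."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def mean_cross_entropy(batch_logits, targets):
    """Average over the batch, like CrossEntropyLoss's default reduction='mean'."""
    losses = [cross_entropy(z, t) for z, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)

print(cross_entropy([0.0] * 10, 3))  # uniform logits over 10 classes give ln(10)
print(mean_cross_entropy([[2.0, 0.0], [0.0, 2.0]], [0, 0]))
```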

14. Define dicts to store the loss and accuracy

acc = {'train': [], 'val': []}
loss_all = {'train': [], 'val': []}

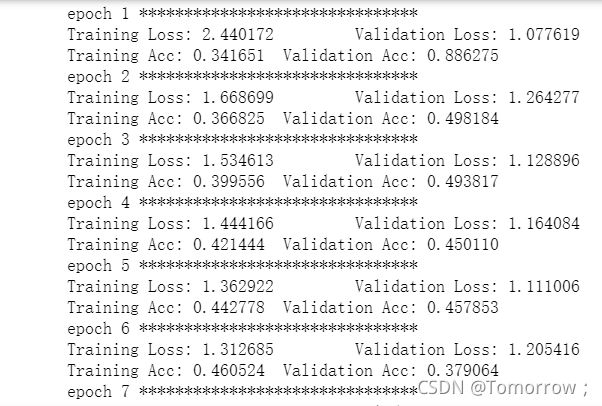

15. Training and validation

"""训练和验证"""

net.to(devices)

for epoch in range(10):

print('epoch',epoch+1,'*******************************')

net.train()

train_total_loss,train_correctnum, train_prednum=0.,0.,0.

for images,labels in train_loader:

images,labels=images.to(devices),labels.to(devices)

outputs=net(images)

loss=celoss(outputs,labels)

optimizer.zero_grad()

loss.backward()

optimizer.step()

train_total_loss+=loss.item()

correctnum,prednum= accuracy(outputs,labels)

train_correctnum +=correctnum

train_prednum +=prednum

net.eval()

valid_total_loss,valid_correctnum, valid_prednum=0.,0.,0.

for images,labels in valid_loader:

images,labels=images.to(devices),labels.to(devices)

outputs=net(images)

loss=celoss(outputs,labels)

valid_total_loss += loss.item()

correctnum,prednum= accuracy(outputs,labels)

valid_correctnum +=correctnum

valid_prednum +=prednum

"""计算平均损失"""

train_loss = train_total_loss/len(train_loader)

valid_loss = valid_total_loss/len(valid_loader)

"""将损失值存入字典"""

loss_all['train'].append(train_loss )

loss_all['val'].append(valid_loss)

"""将准确率存入字典"""

acc['train'].append(train_correctnum/train_prednum)

acc['val'].append(valid_correctnum/valid_prednum)

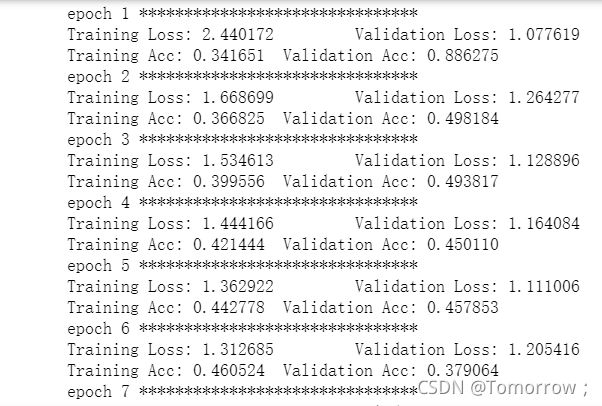

print('Training Loss: {:.6f} \tValidation Loss: {:.6f}'.format(train_loss, valid_loss))

print('Training Acc: {:.6f} \tValidation Acc: {:.6f}'.format(train_correctnum/train_prednum,valid_correctnum/valid_prednum))

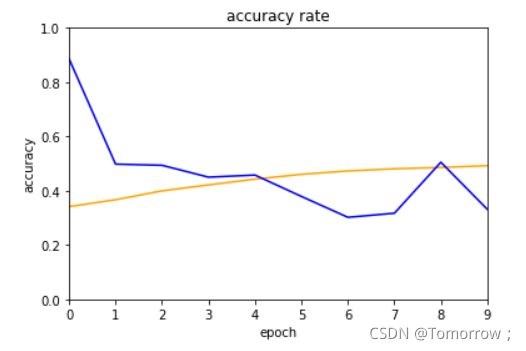

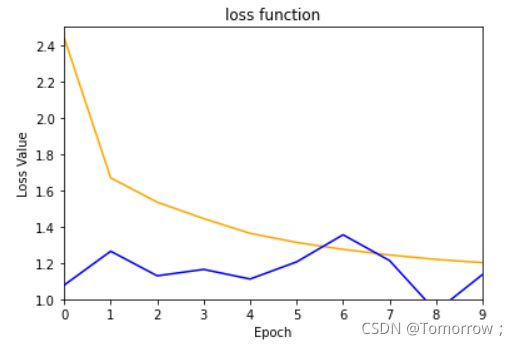

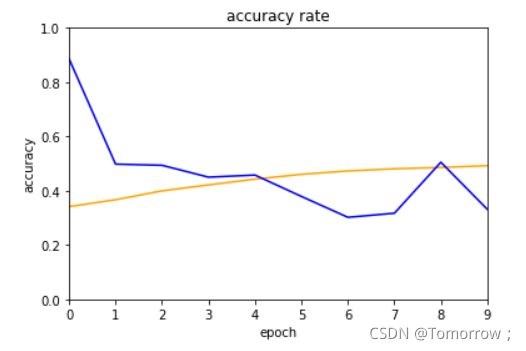

16. Training results

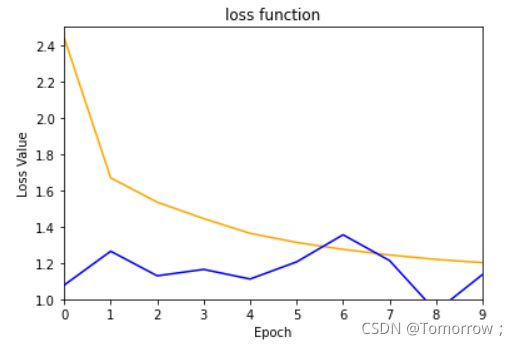
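`best_acc` is initialized to 0 but never updated in the loop. One possible way to use the stored `acc['val']` values is to pick the epoch with the highest validation accuracy, for example to decide which epoch's weights are worth keeping. A plain-Python sketch with a hypothetical helper `best_epoch` and made-up accuracies standing in for `acc['val']` (these numbers are not results from this post):

```python
def best_epoch(val_acc):
    """Return (epoch_number, accuracy) of the best epoch, numbering epochs from 1."""
    best_idx = max(range(len(val_acc)), key=val_acc.__getitem__)
    return best_idx + 1, val_acc[best_idx]

# Made-up values standing in for acc['val']
print(best_epoch([0.41, 0.55, 0.62, 0.60, 0.66]))
```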

17. Plot the loss and accuracy curves

# Plotting
plt.xlabel('Epoch')
plt.xlim((0, 9))
plt.ylabel('Loss Value')
plt.ylim((1, 2.5))
plt.title('loss function')
plt.plot(loss_all['train'], color='orange', label='train')
plt.plot(loss_all['val'], color='blue', label='val')
plt.legend()
plt.show()

plt.ylim((0, 1))
plt.xlim((0, 9))
plt.plot(acc['train'], color='orange', label='train')
plt.plot(acc['val'], color='blue', label='val')
plt.title('accuracy rate')
plt.xlabel('epoch')
plt.ylabel('accuracy')
plt.legend()
plt.show()

There are still some problems with the validation-set results; I will keep revising this post.

18. Complete code

# Imports
import collections
import numpy as np
import math
import os
import shutil
import pandas as pd
import torch
import torchvision
import matplotlib.pyplot as plt
from torch import nn
import torch.nn.functional as F

# Organize the dataset
def read_csv_labels(fname):
    """Read fname and return a dict that maps each file name to its label."""
    with open(fname, 'r') as f:
        # Skip the header line
        lines = f.readlines()[1:]
    tokens = [l.rstrip().split(',') for l in lines]
    return dict(((name, label) for name, label in tokens))

labels = read_csv_labels('../CIFAR10 Image-Classification/trainLabels.csv')
for item in labels.items():
    print(item)
print('Number of classes:', len(set(labels.values())))

# Split a validation set off from the original training set
data_dir = '../CIFAR10 Image-Classification/'

def copyfile(filename, target_dir):
    """Copy a file into the target directory, creating the directory if needed."""
    os.makedirs(target_dir, exist_ok=True)
    shutil.copy(filename, target_dir)

def reorg_train_valid(data_dir, labels, valid_ratio):
    # Number of images in the smallest class
    n = collections.Counter(labels.values()).most_common()[-1][1]
    # Number of validation images to take from each class
    n_valid_per_label = max(1, math.floor(n * valid_ratio))
    label_count = {}
    for train_file in os.listdir(os.path.join(data_dir, 'train')):
        label = labels[train_file.split('.')[0]]
        fname = os.path.join(data_dir, 'train', train_file)
        copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                     'train_valid', label))
        if label not in label_count or label_count[label] < n_valid_per_label:
            copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                         'valid', label))
            label_count[label] = label_count.get(label, 0) + 1
        else:
            copyfile(fname, os.path.join(data_dir, 'train_valid_test',
                                         'train', label))
    return n_valid_per_label

# Organize the test set so it is easy to read during prediction
def reorg_test(data_dir):
    # ImageFolder needs one subfolder per class, so use a placeholder 'unknown' class
    for test_file in os.listdir(os.path.join(data_dir, 'test')):
        copyfile(os.path.join(data_dir, 'test', test_file),
                 os.path.join(data_dir, 'train_valid_test', 'test',
                              'unknown'))

# Call the functions defined above
def reorg_cifar10_data(data_dir, valid_ratio):
    labels = read_csv_labels(os.path.join(data_dir, 'trainLabels.csv'))
    reorg_train_valid(data_dir, labels, valid_ratio)
    reorg_test(data_dir)

valid_ratio = 0.1
reorg_cifar10_data(data_dir, valid_ratio)

# Image augmentation
transform_train = torchvision.transforms.Compose([
    torchvision.transforms.Resize(40),
    torchvision.transforms.RandomResizedCrop(32, scale=(0.64, 1.0),
                                             ratio=(1.0, 1.0)),
    torchvision.transforms.RandomHorizontalFlip(),
    torchvision.transforms.ToTensor(),
    torchvision.transforms.Normalize([0.4914, 0.4822, 0.4465],
                                     [0.2023, 0.1994, 0.2010])])

transform_test = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor(),
    torchvision.transforms.Normalize([0.4914, 0.4822, 0.4465],
                                     [0.2023, 0.1994, 0.2010])])

# Read the datasets made up of the original image files
train_ds, train_valid_ds = [torchvision.datasets.ImageFolder(
    os.path.join(data_dir, 'train_valid_test', folder),
    transform=transform_train) for folder in ['train', 'train_valid']]

valid_ds, test_ds = [torchvision.datasets.ImageFolder(
    os.path.join(data_dir, 'train_valid_test', folder),
    transform=transform_test) for folder in ['valid', 'test']]

# Build the DataLoaders
train_loader, train_valid_loader = [torch.utils.data.DataLoader(
    dataset, batch_size=100, shuffle=True, drop_last=True, num_workers=2)
    for dataset in (train_ds, train_valid_ds)]

# drop_last=False so that no validation image is skipped
valid_loader = torch.utils.data.DataLoader(valid_ds, batch_size=10, shuffle=False,
                                           drop_last=False, num_workers=2)

test_loader = torch.utils.data.DataLoader(test_ds, batch_size=100, shuffle=False,
                                          drop_last=False, num_workers=2)

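# Define the model
from torchvision import models

# torchvision >= 0.13 prefers weights=models.ResNet18_Weights.DEFAULT over pretrained=True
net = models.resnet18(pretrained=True)
# Replace the 1000-class ImageNet head with a 10-class head
net.fc = torch.nn.Linear(512, 10)
print(net)

# Weight initialization: re-initialize only the new fc layer's weights
import torch
import torch.nn.init as init

for name, module in net.named_children():
    if name == 'fc':
        init.kaiming_uniform_(module.weight, a=0, mode='fan_in')

# Use the GPU if one is available
devices = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
print(devices)

# Define the optimizer; StepLR only takes effect if StepLR.step() is called once per epoch
optimizer = torch.optim.SGD(net.parameters(), lr=1e-1, momentum=0.9, weight_decay=5e-4)
StepLR = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.65)

# Define the accuracy function
import torch

def accuracy(pred, target):
    """Return (number of correct predictions, number of predictions) for one batch."""
    pred_label = torch.argmax(pred, 1)
    # .item() returns a plain Python number, so the results can be plotted later
    correct = (pred_label == target).sum().item()
    return correct, len(pred)

acc = {'train': [], 'val': []}
loss_all = {'train': [], 'val': []}

celoss = nn.CrossEntropyLoss()
best_acc = 0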

# Training and validation
net.to(devices)
for epoch in range(10):
    print('epoch', epoch + 1, '*******************************')

    # Training
    net.train()
    train_total_loss, train_correctnum, train_prednum = 0., 0., 0.
    for images, labels in train_loader:
        images, labels = images.to(devices), labels.to(devices)
        outputs = net(images)
        loss = celoss(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        train_total_loss += loss.item()
        correctnum, prednum = accuracy(outputs, labels)
        train_correctnum += correctnum
        train_prednum += prednum
    # Advance the learning-rate schedule once per epoch
    StepLR.step()

    # Validation: no gradients needed
    net.eval()
    valid_total_loss, valid_correctnum, valid_prednum = 0., 0., 0.
    with torch.no_grad():
        for images, labels in valid_loader:
            images, labels = images.to(devices), labels.to(devices)
            outputs = net(images)
            loss = celoss(outputs, labels)
            valid_total_loss += loss.item()
            correctnum, prednum = accuracy(outputs, labels)
            valid_correctnum += correctnum
            valid_prednum += prednum

    # Compute the average loss per batch
    train_loss = train_total_loss / len(train_loader)
    valid_loss = valid_total_loss / len(valid_loader)

    # Store the losses in the dict
    loss_all['train'].append(train_loss)
    loss_all['val'].append(valid_loss)

    # Store the accuracies in the dict as plain Python floats
    train_acc = float(train_correctnum / train_prednum)
    valid_acc = float(valid_correctnum / valid_prednum)
    acc['train'].append(train_acc)
    acc['val'].append(valid_acc)

    print('Training Loss: {:.6f} \tValidation Loss: {:.6f}'.format(train_loss, valid_loss))
    print('Training Acc: {:.6f} \tValidation Acc: {:.6f}'.format(train_acc, valid_acc))

# Plotting
plt.xlabel('Epoch')
plt.xlim((0, 9))
plt.ylabel('Loss Value')
plt.ylim((1, 2.5))
plt.title('loss function')
plt.plot(loss_all['train'], color='orange', label='train')
plt.plot(loss_all['val'], color='blue', label='val')
plt.legend()
plt.show()

plt.ylim((0, 1))
plt.xlim((0, 9))
plt.plot(acc['train'], color='orange', label='train')
plt.plot(acc['val'], color='blue', label='val')
plt.title('accuracy rate')
plt.xlabel('epoch')
plt.ylabel('accuracy')
plt.legend()
plt.show()