Custom Convolution Weights

import torch
import torch.nn as nn
import torch.nn.functional as F

class CNN(nn.Module):
    """A single conv layer whose weight and bias can be supplied by the caller."""

    def __init__(self,
                 in_channels=1,
                 out_channels=2,
                 kernel=3,
                 stride=1,
                 padding=0,
                 weights=None,
                 bias=None):
        super(CNN, self).__init__()
        self.stride = stride
        self.padding = padding
        if weights is not None:
            # as_tensor accepts both nested lists and existing tensors,
            # avoiding the copy-construct warning of torch.tensor(tensor).
            weights = torch.as_tensor(weights, dtype=torch.float)
            # Expected layout: (out_channels, in_channels, kernel, kernel).
            assert (weights.shape[0] == out_channels
                    and weights.shape[1] == in_channels
                    and weights.shape[2] == kernel
                    and weights.shape[3] == kernel), \
                'please check the weight shape'
            self.weight = nn.Parameter(weights)
        else:
            self.weight = nn.Parameter(
                torch.randn(out_channels, in_channels, kernel, kernel))
        if bias is not None:
            self.bias = nn.Parameter(
                torch.as_tensor(bias, dtype=torch.float))
        else:
            self.bias = nn.Parameter(torch.randn([out_channels]))

    def forward(self, x):
        out = F.conv2d(input=x,
                       weight=self.weight,
                       bias=self.bias,
                       stride=self.stride,
                       padding=self.padding)
        return out

def run_conv(in_channel=1,
             out_channel=2,
             input=None,
             weight=None,
             bias=None,
             kernel=3,
             stride=1,
             padding=0):
    assert input is not None, 'check input first'
    # input is a nested list shaped (batch, channels, height, width).
    input = torch.tensor(input, dtype=torch.float)
    if weight is not None:
        # (out_channels, k, k) -> (out_channels, 1, k, k): adds the
        # in_channels axis, so this only suits single-channel input.
        weight = torch.unsqueeze(torch.tensor(weight, dtype=torch.float), 1)
    if bias is not None:
        bias = torch.tensor(bias, dtype=torch.float)
    conv = CNN(in_channels=in_channel,
               out_channels=out_channel,
               kernel=kernel,
               stride=stride,
               padding=padding,
               weights=weight,
               bias=bias)
    return conv(input)

if __name__ == '__main__':
    kernel = 3
    stride = 1
    # Padding for the three convolution modes: valid, same, full.
    pad = [0, kernel // 2, max(kernel - 1, 0)]

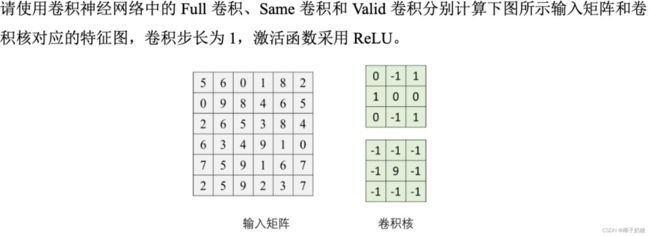

    input = [[[[5, 6, 0, 1, 8, 2], [0, 9, 8, 4, 6, 5], [2, 6, 5, 3, 8, 4],
               [6, 3, 4, 9, 1, 0], [7, 5, 9, 1, 6, 7], [2, 5, 9, 2, 3, 7]]]]
    bias = [0]

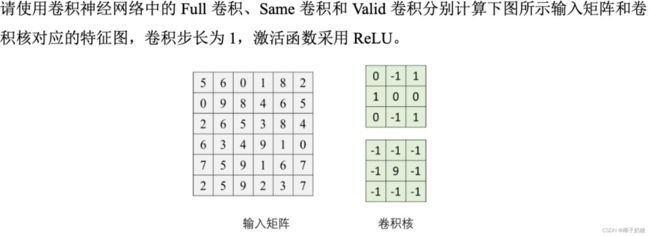

    weight = [[[-1, -1, -1], [-1, 9, -1], [-1, -1, -1]],
              [[0, -1, 1], [1, 0, 0], [0, -1, 1]]]
    for p in pad:
        for w in weight:
            out = run_conv(out_channel=1,
                           in_channel=1,
                           input=input,
                           weight=[w],
                           bias=bias,
                           stride=stride,
                           kernel=kernel,
                           padding=p)
            print(out)
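
As a cross-check on the output sizes, the sketch below reimplements the same operation in plain Python. It assumes a square single-channel image, a square kernel, and zero bias; `conv2d_naive` is a hypothetical helper name used only for illustration, not part of PyTorch. Like `F.conv2d`, it computes cross-correlation, meaning the kernel is not flipped.

```python
def conv2d_naive(image, kernel, padding=0, stride=1):
    """Cross-correlate a square 2D list with a square 2D kernel (zero padding)."""
    n, k = len(image), len(kernel)
    size = n + 2 * padding
    # Zero-pad the image on all four sides.
    padded = [[0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            padded[i + padding][j + padding] = image[i][j]
    # Standard output-size formula: (n + 2p - k) // s + 1.
    out_size = (size - k) // stride + 1
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            acc = 0
            for a in range(k):
                for b in range(k):
                    acc += padded[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(acc)
        out.append(row)
    return out


image = [[5, 6, 0, 1, 8, 2], [0, 9, 8, 4, 6, 5], [2, 6, 5, 3, 8, 4],
         [6, 3, 4, 9, 1, 0], [7, 5, 9, 1, 6, 7], [2, 5, 9, 2, 3, 7]]
sharpen = [[-1, -1, -1], [-1, 9, -1], [-1, -1, -1]]

for name, p in [("valid", 0), ("same", 1), ("full", 2)]:
    out = conv2d_naive(image, sharpen, padding=p)
    print(name, len(out), "x", len(out[0]))
# valid 4 x 4
# same 6 x 6
# full 8 x 8
```

With a 6×6 input and a 3×3 kernel, padding 0 shrinks the output to 4×4 (valid), padding 1 keeps it at 6×6 (same), and padding 2 grows it to 8×8 (full). The top-left value of the valid output for the first kernel is 49, which should match the corresponding entry printed by `run_conv`.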

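References:

- 果壳 Deep Learning 2020 exam paper

- The three convolution modes: full, same, valid

- How to customize convolution kernel weight parameters in PyTorch (absurdly, the pirated copy ranks higher than the original in Baidu search; then again, this one does not look much like a personal blog either, since it posts rather frequently.)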