单特征与多特征 求梯度下降对比

#变量初始化。

#数据集

X_train = np.array([[2104, 5, 1, 45], [1416, 3, 2, 40], [852, 2, 1, 35]])

y_train = np.array([460, 232, 178])

#w,b初值

b_init = 785.1811367994083

w_init = np.array([ 0.39133535, 18.75376741, -53.36032453, -26.42131618])1、求偏导

单变量求偏导:

def compute_gradient(x, y, w, b):

"""

Computes the gradient for linear regression

Args:

x (ndarray (m,)): Data, m examples

y (ndarray (m,)): target values

w,b (scalar) : model parameters

Returns

dj_dw (scalar): The gradient of the cost w.r.t. the parameters w

dj_db (scalar): The gradient of the cost w.r.t. the parameter b

"""

# Number of training examples

m = x.shape[0]

dj_dw = 0

dj_db = 0

for i in range(m):

f_wb = w * x[i] + b

dj_dw_i = (f_wb - y[i]) * x[i] #对于x[0]、x[1].... w求偏导

dj_db_i = f_wb - y[i] #对于x[0]、x[1].... 对b求偏导

dj_db += dj_db_i # 对b求偏导,1~m个数据的各个偏导的和及

dj_dw += dj_dw_i

dj_dw = dj_dw / m

dj_db = dj_db / m

return dj_dw, dj_db多变量求偏导:

多变量指有多个特征值,举房价预测例子来说就是,影响价格的因素还包括,卧室数量、房子年龄、楼层数、面积。这四个特征值共同决定房子价格。

这里的w、X都为向量形式。w=[w1,w2,w3,w4]

假设有4个特征值。

有下标j就代表每个样例都有多个特征。每经历一次外循环,将本次样例的各个特征的dj_dw[j]求出来(j范围有特征值数量决定)。于是最终dj_dw也包括四个值,如下:

def compute_gradient(X, y, w, b):

"""

Computes the gradient for linear regression

Args:

X (ndarray (m,n)): Data, m examples with n features

y (ndarray (m,)) : target values

w (ndarray (n,)) : model parameters

b (scalar) : model parameter

Returns:

dj_dw (ndarray (n,)): The gradient of the cost w.r.t. the parameters w.

dj_db (scalar): The gradient of the cost w.r.t. the parameter b.

"""

m,n = X.shape #(number of examples, number of features) #(3,4)即m=3 n=4

# print(f"n={n}")

dj_dw = np.zeros((n,))

dj_db = 0.

#i表示行,j表示列 。

#一共有m=3个样例,

for i in range(m):

err = (np.dot(X[i], w) + b) - y[i] #对于wi,i是几就需要将X[i]看作变量。然后求出f_wb=np.dot(X[i], w) + b。 预测值;w是一个数组,四个值

for j in range(n):

#循环第一次j=0.内层循环进行,err分别*的是样例的特征数组X[x1,x2,x3,x4]中的四个列值。

#每个样例的(f_wb-y[i])都是在外层循环中固定了的。但是求w的偏导还需额外*x[i]值。

#对于一个特征值,求w偏导,一个样例只用*一次x特征值。对于4个不同特征值xi,一个样例要*4次x特征值。所以进行了n=4次内循环。一共有m=3个样例,所以外循环3次。

dj_dw[j] = dj_dw[j] + err * X[i, j]

dj_db = dj_db + err #每更新完一次w(即内循环循环一次),b也相应更新一次。下一次循环则就在这次b值的基础上+err.

dj_dw = dj_dw / m

dj_db = dj_db / m

return dj_db, dj_dw

#调用

#Compute and display gradient

tmp_dj_db, tmp_dj_dw = compute_gradient(X_train, y_train, w_init, b_init)

print(f'dj_db at initial w,b: {tmp_dj_db}')

print(f'dj_dw at initial w,b: \n {tmp_dj_dw}')最终结果为:

2、多变量进行梯度下降

则通过不断对w和b进行更新迭代,代价J也不断减小,偏导数逐渐趋于0时,最终找到 适合的参数w(多特征下,w是一个系列值)和b。差不多的模型就找到了,最终便可以使用此模型进行房价预测。

def gradient_descent(X, y, w_in, b_in, cost_function, gradient_function, alpha, num_iters):

"""

Performs batch gradient descent to learn theta. Updates theta by taking

num_iters gradient steps with learning rate alpha

Args:

X (ndarray (m,n)) : Data, m examples with n features

y (ndarray (m,)) : target values

w_in (ndarray (n,)) : initial model parameters

b_in (scalar) : initial model parameter

cost_function : function to compute cost

gradient_function : function to compute the gradient

alpha (float) : Learning rate

num_iters (int) : number of iterations to run gradient descent

Returns:

w (ndarray (n,)) : Updated values of parameters

b (scalar) : Updated value of parameter

"""

# An array to store cost J and w's at each iteration primarily for graphing later

#一个数组,用于存储每次迭代的成本J,主要用于以后的绘图

J_history = []

# copy.deepcopy()函数是一个深复制函数。所谓深复制,就是从输入变量完全复刻一个相同的变量,无论怎么改变新变量,原有变量的值都不会受到影响。

w = copy.deepcopy(w_in) #avoid modifying global w within function 避免在函数内修改全局w

b = b_in

for i in range(num_iters):

# Calculate the gradient and update the parameters

# 计算梯度并使用gradient_function更新参数

dj_db,dj_dw = gradient_function(X, y, w, b)

# Update Parameters using w, b, alpha and gradient

w = w - alpha * dj_dw ##None

b = b - alpha * dj_db ##None

# Save cost J at each iteration

if i<100000: # prevent resource exhaustion

J_history.append( cost_function(X, y, w, b))

# Print cost every at intervals 10 times or as many iterations if < 10

# 每隔10次打印一次成本,如果<10次,则重复次数相同

# math.ceil:向上取整,四舍五入。

if i% math.ceil(num_iters / 10) == 0:

print(f"Iteration {i:4d}: Cost {J_history[-1]:8.2f} ")

return w, b, J_history #return final w,b and J history for graphing接下来给定初值,进行预测:

# initialize parameters

initial_w = np.zeros_like(w_init)

initial_b = 0.

# some gradient descent settings

iterations = 1000

alpha = 5.0e-7

# run gradient descent

w_final, b_final, J_hist = gradient_descent(X_train, y_train, initial_w, initial_b,

compute_cost, compute_gradient,

alpha, iterations)

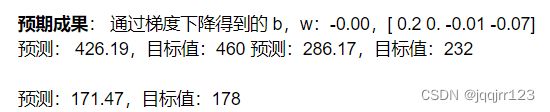

print(f"b,w found by gradient descent: {b_final:0.2f},{w_final} ")

m,_ = X_train.shape

for i in range(m):

print(f"prediction: {np.dot(X_train[i], w_final) + b_final:0.2f}, target value: {y_train[i]}")利用w_final和b_final值结合得到我们最终的预测函数计算式f_wb={np.dot(X_train[i], w_final) + b_final

得到的成果:

此时得到的模型还需优化,因为最终得到的代价函数还显示在下降,说明并不是一个最好的点。它还有下降空间!