[Machine Learning in Practice] 5. Implementing a Non-Linear Regression Model

A non-linear regression example

1. Import the libraries, then load and display the data

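```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# LinearRegression comes from a local linear_regression module (not scikit-learn);
# it accepts the feature-transformation settings defined in step 2
from linear_regression import LinearRegression
```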

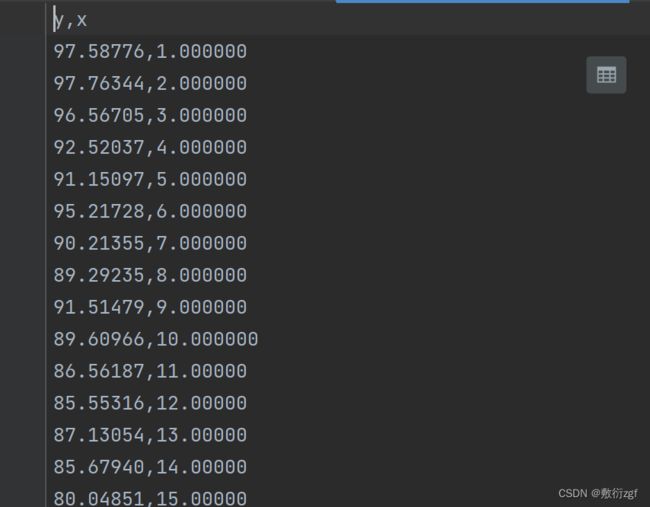

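```python
# Load the dataset: one input column 'x' and one target column 'y'
data = pd.read_csv('./data/non-linear-regression-x-y.csv')

# Reshape each column into an (m, 1) column vector
x = data['x'].values.reshape((data.shape[0], 1))  # input feature x
y = data['y'].values.reshape((data.shape[0], 1))  # target y

data.head(10)  # show the first 10 rows
```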

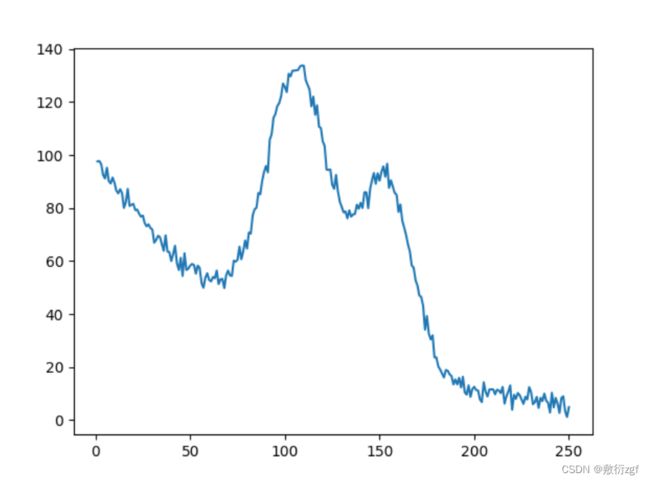

```python
# Plot the raw data
plt.plot(x, y)
plt.show()
```
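`Series.values` returns a 1-D NumPy array of shape `(m,)`. The `reshape((m, 1))` calls above turn it into an `(m, 1)` column vector. That is the 2-D layout the feature functions in steps 3 and 4 rely on, since they unpack `dataset.shape` into rows and columns and split along `axis=1`. The minimal check below uses a small in-memory DataFrame. Its values are made up for illustration and are not the article's dataset:

```python
import pandas as pd

# Hypothetical stand-in for the CSV: a few made-up (x, y) pairs
data = pd.DataFrame({'x': [0.0, 0.5, 1.0, 1.5], 'y': [1.0, 0.8, 0.1, -0.6]})

raw = data['x'].values                 # 1-D array, shape (4,)
x = raw.reshape((data.shape[0], 1))    # 2-D column vector, shape (4, 1)

print(raw.shape, x.shape)
```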

2. Set the parameters

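- `num_iterations`: number of training (gradient-descent) iterations;
- `learning_rate`: learning rate, i.e. the gradient-descent step size;
- `polynomial_degree`: highest degree of the polynomial feature transformation;
- `sinusoid_degree`: number of sin(k·x) terms added as a non-linear transformation (k = 1 .. `sinusoid_degree`);
- `normalize_data`: whether to standardize the data.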

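```python
num_iterations = 5000    # number of training iterations
learning_rate = 0.02     # learning rate (gradient-descent step size)
polynomial_degree = 15   # highest degree of the polynomial features
sinusoid_degree = 15     # add sin(k*x) for k = 1..15
normalize_data = True    # standardize the features
```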
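With a single input column, the two functions in steps 3 and 4 fix how many features these settings generate. `generate_polynomials` emits `i + 1` columns for each degree `i = 1..polynomial_degree`. `generate_sinusoids` emits one column per `k = 1..sinusoid_degree`. The quick count below ignores any bias column or copy of the raw feature that `LinearRegression` may add, because that class is not shown in this article:

```python
polynomial_degree = 15
sinusoid_degree = 15
num_input_features = 1  # the dataset has a single column x

# generate_polynomials: for degree i it emits i + 1 products (j = 0..i)
num_polynomial = sum(i + 1 for i in range(1, polynomial_degree + 1))

# generate_sinusoids: one sin(k*x) block per k, each as wide as the input
num_sinusoid = sinusoid_degree * num_input_features

print(num_polynomial, num_sinusoid)
```

The result is 135 polynomial columns plus 15 sinusoid columns from one raw feature. The polynomial terms go up to x^15, so their scales differ enormously. That is one plausible reason to turn on `normalize_data` before running gradient descent.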

3. Polynomial feature transformation

```python
"""Add polynomial features to the feature set."""

import numpy as np

# normalize() lives in a sibling module of the same package (relative import)
from .normalize import normalize


def generate_polynomials(dataset, polynomial_degree, normalize_data=False):
    """Transformation:

    x1, x2, x1^2, x2^2, x1*x2, x1*x2^2, etc.
    """
    # Split the columns into two halves; products of the halves form the terms
    features_split = np.array_split(dataset, 2, axis=1)
    dataset_1 = features_split[0]
    dataset_2 = features_split[1]

    (num_examples_1, num_features_1) = dataset_1.shape
    (num_examples_2, num_features_2) = dataset_2.shape

    if num_examples_1 != num_examples_2:
        raise ValueError('Can not generate polynomials for two sets with different number of rows')

    if num_features_1 == 0 and num_features_2 == 0:
        raise ValueError('Can not generate polynomials for two sets with no columns')

    # With a single input feature one half is empty: reuse the other half
    if num_features_1 == 0:
        dataset_1 = dataset_2
    elif num_features_2 == 0:
        dataset_2 = dataset_1

    # Truncate both halves to the smaller column count (measured after the
    # substitution above) so they can be multiplied elementwise
    num_features = min(dataset_1.shape[1], dataset_2.shape[1])
    dataset_1 = dataset_1[:, :num_features]
    dataset_2 = dataset_2[:, :num_features]

    polynomials = np.empty((num_examples_1, 0))

    # For each degree i, append dataset_1^(i-j) * dataset_2^j for j = 0..i
    for i in range(1, polynomial_degree + 1):
        for j in range(i + 1):
            polynomial_feature = (dataset_1 ** (i - j)) * (dataset_2 ** j)
            polynomials = np.concatenate((polynomials, polynomial_feature), axis=1)

    if normalize_data:
        polynomials = normalize(polynomials)[0]

    return polynomials
```
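The next block shows the order of the generated columns. It uses a condensed, standalone copy of the same loop. Normalization is left out, so no `normalize` module is needed, and the helper name `polynomial_terms` is only for illustration. It is applied with `polynomial_degree = 2`, first to one row with two features (`x1 = 2`, `x2 = 3`) and then to one row with a single feature:

```python
import numpy as np


def polynomial_terms(dataset, polynomial_degree):
    """Illustrative condensed copy of generate_polynomials, without normalization."""
    dataset_1, dataset_2 = np.array_split(dataset, 2, axis=1)
    if dataset_1.shape[1] == 0:
        dataset_1 = dataset_2
    elif dataset_2.shape[1] == 0:
        dataset_2 = dataset_1
    num_features = min(dataset_1.shape[1], dataset_2.shape[1])
    dataset_1 = dataset_1[:, :num_features]
    dataset_2 = dataset_2[:, :num_features]

    polynomials = np.empty((dataset.shape[0], 0))
    for i in range(1, polynomial_degree + 1):
        for j in range(i + 1):
            term = (dataset_1 ** (i - j)) * (dataset_2 ** j)
            polynomials = np.concatenate((polynomials, term), axis=1)
    return polynomials


two_features = np.array([[2.0, 3.0]])
print(polynomial_terms(two_features, 2))  # [[2. 3. 4. 6. 9.]] = x1, x2, x1^2, x1*x2, x2^2

one_feature = np.array([[2.0]])
print(polynomial_terms(one_feature, 2))   # [[2. 2. 4. 4. 4.]]
```

The second call reveals a side effect of the single-feature case used in this article. Both halves are the same column, so each degree `i` contributes `i + 1` identical copies of `x^i`. The 135 polynomial columns therefore contain only 15 distinct powers.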

4. sin(x) non-linear transformation

```python
import numpy as np


def generate_sinusoids(dataset, sinusoid_degree):
    """Return the features sin(k * x) for k = 1 .. sinusoid_degree.

    Each k contributes np.sin(k * dataset), one column per input column.
    """
    num_examples = dataset.shape[0]
    sinusoids = np.empty((num_examples, 0))

    for degree in range(1, sinusoid_degree + 1):
        sinusoid_features = np.sin(degree * dataset)
        sinusoids = np.concatenate((sinusoids, sinusoid_features), axis=1)

    return sinusoids
```
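As a quick check, the same function can be applied to x = 0 and x = π/2 with `sinusoid_degree = 3`. It should return the columns sin(x), sin(2x) and sin(3x). The function is repeated here so the block runs on its own:

```python
import numpy as np


def generate_sinusoids(dataset, sinusoid_degree):
    """Same function as above: columns sin(k * x) for k = 1 .. sinusoid_degree."""
    num_examples = dataset.shape[0]
    sinusoids = np.empty((num_examples, 0))
    for degree in range(1, sinusoid_degree + 1):
        sinusoids = np.concatenate((sinusoids, np.sin(degree * dataset)), axis=1)
    return sinusoids


x = np.array([[0.0], [np.pi / 2]])
features = generate_sinusoids(x, 3)
print(np.round(features, 6))
# Row for x = 0:    sin(0),    sin(0),  sin(0)      ->  0, 0, 0
# Row for x = pi/2: sin(pi/2), sin(pi), sin(3*pi/2) ->  1, 0, -1
```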

5. Train the model

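```python
# Build the model with the feature-transformation settings from step 2
linear_regression = LinearRegression(x, y, polynomial_degree, sinusoid_degree, normalize_data)

# Train; returns the learned parameters and the cost recorded at each iteration
(theta, cost_history) = linear_regression.train(
    learning_rate, num_iterations
)
```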

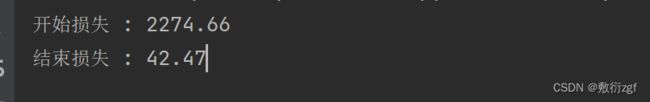

```python
print('Initial cost: {:.2f}'.format(cost_history[0]))  # cost at the first iteration
print('Final cost: {:.2f}'.format(cost_history[-1]))   # cost at the last iteration
```
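The `LinearRegression` class itself is not listed in this article. The "Gradient Descent Progress" plot in step 6 suggests that `train(learning_rate, num_iterations)` runs gradient descent and returns one cost value per iteration. The block below is a minimal sketch of batch gradient descent with a mean-squared-error cost, assuming that is roughly what the class does. The function name `gradient_descent`, the zero initialization and the cost formula are all assumptions, not the author's code:

```python
import numpy as np


def gradient_descent(X, y, learning_rate, num_iterations):
    """Hypothetical stand-in for LinearRegression.train: batch gradient descent on MSE.

    X: (m, n) feature matrix that already includes a bias column of ones.
    y: (m, 1) targets.
    """
    m, n = X.shape
    theta = np.zeros((n, 1))  # assumed zero initialization
    cost_history = []
    for _ in range(num_iterations):
        error = X @ theta - y                                # residuals, shape (m, 1)
        theta = theta - learning_rate * (X.T @ error) / m    # gradient step on all m rows
        residual = X @ theta - y
        # Cost after the update: (1 / 2m) * sum of squared residuals
        cost_history.append((residual.T @ residual).item() / (2 * m))
    return theta, cost_history


# Toy check on noise-free data y = 1 + 2x
x = np.linspace(0, 1, 50).reshape(-1, 1)
X = np.hstack((np.ones_like(x), x))
y = 1 + 2 * x
theta, cost_history = gradient_descent(X, y, learning_rate=0.5, num_iterations=2000)
print(theta.ravel())  # close to [1, 2]
```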

6. Plot the curves

Plot how the cost changes during training

```python
# Collect the learned parameters in a table for inspection
theta_table = pd.DataFrame({'Model Parameters': theta.flatten()})

plt.plot(range(num_iterations), cost_history)
plt.xlabel('Iterations')
plt.ylabel('Cost')
plt.title('Gradient Descent Progress')
plt.show()
```

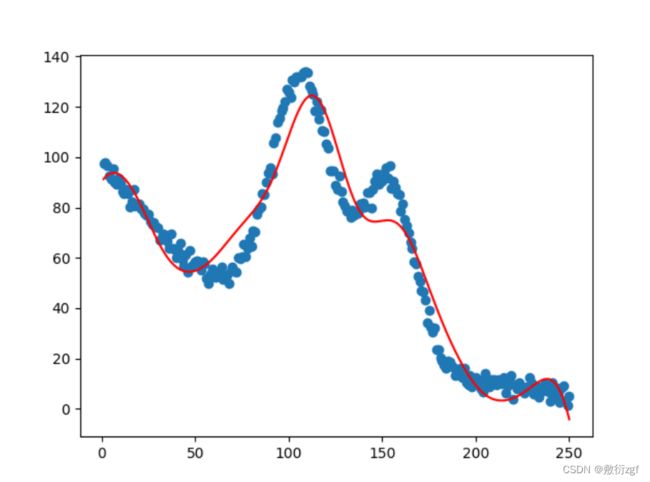

Plot the non-linear regression model's predictions as a line chart

```python
# Evaluate the model at 1000 evenly spaced points across the range of x
predictions_num = 1000
x_predictions = np.linspace(x.min(), x.max(), predictions_num).reshape(predictions_num, 1)
y_predictions = linear_regression.predict(x_predictions)

plt.scatter(x, y, label='Training Dataset')
plt.plot(x_predictions, y_predictions, 'r', label='Prediction')
plt.legend()  # display the labels set above
plt.show()
```
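To produce `y_predictions`, `predict` has to rebuild the features of the new points the same way as during training. Presumably that includes standardizing them with the mean and standard deviation of the training features rather than of the new points. The self-contained sketch below illustrates that pipeline under several assumptions:

- The data is synthetic.
- The helper `build_features` is hypothetical and uses only distinct powers plus sines.
- `np.linalg.lstsq` replaces gradient descent for brevity.

```python
import numpy as np


def build_features(x, polynomial_degree, sinusoid_degree):
    """Hypothetical helper: x^1..x^d followed by sin(k*x) for k = 1..s."""
    powers = [x ** i for i in range(1, polynomial_degree + 1)]
    sines = [np.sin(k * x) for k in range(1, sinusoid_degree + 1)]
    return np.hstack(powers + sines)


rng = np.random.default_rng(0)
x = np.sort(rng.uniform(-3, 3, size=(100, 1)), axis=0)  # made-up training inputs
y = np.sin(2 * x) + 0.3 * x ** 2                         # made-up non-linear target

# Fit: normalization statistics come from the TRAINING features only
features = build_features(x, polynomial_degree=5, sinusoid_degree=3)
mean, std = features.mean(axis=0), features.std(axis=0)
X = np.hstack((np.ones((len(x), 1)), (features - mean) / std))
theta, *_ = np.linalg.lstsq(X, y, rcond=None)  # least squares in place of gradient descent

# Predict: rebuild features for new points and reuse the training mean/std
x_new = np.linspace(x.min(), x.max(), 1000).reshape(-1, 1)
X_new = np.hstack((np.ones((len(x_new), 1)),
                   (build_features(x_new, 5, 3) - mean) / std))
y_new = X_new @ theta
```

If `mean` and `std` were recomputed on `x_new`, the prediction features would be on a different scale from the ones `theta` was fitted to, and the curve would be shifted or distorted.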