使用pytorch搭建MLP多层感知器分类网络判断LOL比赛胜负

使用pytorch搭建MLP多层感知器分类网络判断LOL比赛胜负

1. 数据集

百度网盘链接,提取码:q79p

数据集文件格式为CSV。数据集包含了大约5万场英雄联盟钻石排位赛前15分钟的数据集合,总共48651条数据,该数据集的每条数据共有18个属性,除去比赛ID属性,每条数据总共有17项有效属性,其中blue_win是数据集的标签为蓝方的输赢,其余16项属性可作为模型训练的输入属性。![]()

2. 分析

预测英雄联盟比赛的输赢可以理解为根据比赛信息对比赛进行分类的问题,机器学习中有很多的分类算法都可以应用到此问题之中,例如支持向量机算法、朴素贝叶斯算法、决策树算法、神经网络模型(多层感知机)等。

3. 使用pytorch进行编码实现

以下代码中首行为该脚本文件的文件名。四个文件设置好文件名并放置好数据集即可运行。

数据集加载

编写自定义的数据加载类,该类继承Dataset。

在加载数据集时对数据集进行标准化处理,之后将数据集随机按照60%训练集,10%验证集,20%测试集的比例进行划分。

#MyDataSet.py

import torch

import random, csv

import numpy as np

from torch.utils.data import Dataset

from sklearn import preprocessing

class MyDataSet(Dataset):

"""

MyDataSet类继承自torch中的DataSet实现对数据集的读取

"""

def __init__(self, root, mode):

"""

:param root: 数据集的路径

:param mode: train,val,test

"""

super(MyDataSet, self).__init__()

self.mode = mode # 设置读取读取数据集的模式

self.root = root # 数据集存放的路径

label = []

with open(root, 'r') as f: # 从csv中读取数据

reader = csv.reader(f)

result = list(reader)

del result[0] # 删除表头

random.shuffle(result)

for i in range(len(result)):

del result[i][0]

del result[i][0]

label.append(int(result[i][0]))

del result[i][0]

result = np.array(result, dtype=np.float)

# result = preprocessing.scale(result).tolist()

result = preprocessing.StandardScaler().fit_transform(result).tolist() # 对数据进行预处理

# result = preprocessing.MinMaxScaler().fit_transform(result).tolist()

assert len(result) == len(label)

self.labels = label

self.datas = result

if mode == 'train': # 划分训练集为60%

self.datas = self.datas[:int(0.6 * len(self.datas))]

self.labels = self.labels[:int(0.6 * len(self.labels))]

elif mode == 'val': # 划分验证集为20%

self.datas = self.datas[int(0.6 * len(self.datas)):int(0.8 * len(self.datas))]

self.labels = self.labels[int(0.6 * len(self.labels)):int(0.8 * len(self.labels))]

else: # 划分测试集为20%

self.datas = self.datas[int(0.8 * len(self.datas)):]

self.labels = self.labels[int(0.8 * len(self.labels)):]

def __len__(self):

return len(self.datas)

def __getitem__(self, idx):

# idx~[0~len(data)]

data, label = self.datas[idx], self.labels[idx]

data = torch.tensor(data)

label = torch.tensor(label)

return data, label

def main():

file_path = "dataset/League of Legends.csv"

db = MyDataSet(file_path, 'train')

x,y = next(iter(db))

print(x.shape)

print(y.shape)

if __name__ == '__main__':

main()

多层感知器网络构建(基本上是组件化构建,很简单)

多层感知机总共包括四层,层数参数为(16,214,214,2),其中16为输入的每条比赛的属性个数,2为输出的类别,另外为了防止过拟合在感知机中加入了dropout层。

#MLP.py

import torch.nn as nn

import torch.nn.functional as F

import torch

class MLP(nn.Module):

def __init__(self, input_num, output_num):

"""

:param input_num: 输出的节点数

:param output_num: 输出的节点数

"""

super(MLP, self).__init__()

hidden1 = 214 # 第二层节点数

hidden2 = 214 # 第三层节点数

self.fc1 = nn.Linear(input_num, hidden1)

self.fc2 = nn.Linear(hidden1, hidden2)

self.fc3 = nn.Linear(hidden2, output_num)

# 使用dropout防止过拟合

self.dropout = nn.Dropout(0.2)

def forward(self, x):

out = torch.relu(self.fc1(x))

out = self.dropout(out)

out = torch.relu(self.fc2(out))

out = self.dropout(out)

out = self.fc3(out)

return out

训练

训练时的epoch数为560,优化器使用Adam,初始学习率设置为0.001。

训练隔5个epoch输出训练模型在验证集上的准确率、混淆矩阵、F1-score。

并利用visdom对训练loss、以及验证集loss进行了可视化。

可以使用命令 python -m visdom.server启动visdom,并在浏览器中输入url

http://localhost:8097/查看。

# train_mlp.py

import torch

from torch import optim, nn

from torch.utils.data import DataLoader

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

from MyDataSet import MyDataSet

from MLP import MLP

import visdom

from tools import plot_confusion_matrix

import sklearn.metrics as m

batchsz = 32

lr = 1e-3 # 学习率

epoches = 560

torch.manual_seed(1234)

file_path = "dataset/League of Legends.csv"

# 读取数据

train_db = MyDataSet(file_path, mode='train')

val_db = MyDataSet(file_path, mode='val')

test_db = MyDataSet(file_path, mode='test')

train_loader = DataLoader(train_db, batch_size=batchsz, shuffle=True)

val_loader = DataLoader(val_db, batch_size=batchsz)

test_loader = DataLoader(test_db, batch_size=batchsz)

viz = visdom.Visdom()

# 计算正确率

def evaluate(model, loader):

"""

:param model: 网络模型

:param loader: 数据集

:return: 正确率

"""

correct = 0

total = len(loader.dataset)

for x, y in loader:

with torch.no_grad():

logits = model(x)

pred = logits.argmax(dim=1)

correct += torch.eq(pred, y).sum().float().item()

return correct / total

# 计算分类的各种指标

def test_evaluate(model, loader):

"""

:param model: 网络模型

:param loader: 数据集

:return: 正确率

"""

y_true = []

predict = []

for x, y in loader:

with torch.no_grad():

logits = model(x)

result = logits.argmax(dim=1)

for i in y.numpy():

y_true.append(i)

for j in result.numpy():

predict.append(j)

print(classification_report(y_true, predict))

plot_confusion_matrix(confusion_matrix(y_true, predict), classes=range(2), title='confusion matrix')

print("混淆矩阵")

print(confusion_matrix(y_true, predict))

print("f1-score:{}.".format(m.f1_score(y_true, predict)))

return accuracy_score(y_true, predict)

def main():

model = MLP(16, 2) # 初始化模型

optimizer = optim.Adam(model.parameters(), lr=lr) # 设置Adam优化器

criterion = nn.CrossEntropyLoss() # 设置损失函数

best_epoch, best_acc = 0, 0

viz.line([0], [-1], win='loss', opts=dict(title='loss')) # visdom画loss图

viz.line([0], [-1], win='val_acc', opts=dict(title='val_acc')) # visdom画val_acc图

for epoch in range(epoches):

for step, (x, y) in enumerate(train_loader):

# x:[b,16] ,y[b]

logits = model(x)

loss = criterion(logits, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

viz.line([loss.item()], [epoch], win='loss', update='append')

if epoch % 5 == 0:

val_acc = evaluate(model, val_loader)

# train_acc = evaluate(model,train_loader)

print("epoch:[{}/{}]. val_acc:{}.".format(epoch, epoches, val_acc))

# print("train_acc", train_acc)

viz.line([val_acc], [epoch], win='val_acc', update='append')

if val_acc > best_acc:

best_epoch = epoch

best_acc = val_acc

torch.save(model.state_dict(), 'best.mdl')

print('best acc:{}. best epoch:{}.'.format(best_acc, best_epoch))

model.load_state_dict(torch.load('best.mdl'))

print("loaded from ckpt!")

test_acc = test_evaluate(model, test_loader)

print("test_acc:{}".format(test_acc))

if __name__ == '__main__':

main()

工具类:实现绘制混淆矩阵的功能

# tools.py

import matplotlib.pyplot as plt

import numpy as np

def plot_confusion_matrix(cm, classes,

normalize=False,

title=None,

cmap=plt.cm.Blues):

"""

This function prints and plots the confusion matrix.

Normalization can be applied by setting `normalize=True`.

"""

if not title:

if normalize:

title = 'Normalized confusion matrix'

else:

title = 'Confusion matrix, without normalization'

# Compute confusion matrix

# Only use the labels that appear in the data

# classes = classes[unique_labels(y_true, y_pred)]

if normalize:

cm = cm.astype('float') / cm.sum(axis=1)[:, np.newaxis]

print("Normalized confusion matrix")

else:

pass

# print('Confusion matrix, without normalization')

# print(cm)

fig, ax = plt.subplots()

im = ax.imshow(cm, interpolation='nearest', cmap=cmap)

ax.figure.colorbar(im, ax=ax)

# We want to show all ticks...

ax.set(xticks=np.arange(cm.shape[1]),

yticks=np.arange(cm.shape[0]),

# ... and label them with the respective list entries

xticklabels=classes, yticklabels=classes,

title=title,

ylabel='True label',

xlabel='Predicted label')

# Rotate the tick labels and set their alignment.

plt.setp(ax.get_xticklabels(), rotation=45, ha="right",

rotation_mode="anchor")

# Loop over data dimensions and create text annotations.

fmt = '.2f' if normalize else 'd'

thresh = cm.max() / 2.

for i in range(cm.shape[0]):

for j in range(cm.shape[1]):

ax.text(j, i, format(cm[i, j], fmt),

ha="center", va="center",

color="white" if cm[i, j] > thresh else "black")

fig.tight_layout()

plt.show()

return ax

4.实验结果

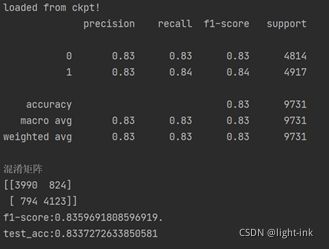

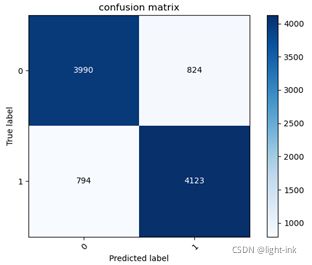

训练过程中保存在验证集上表现最好的模型,利用最终训练好的模型对测试数据集进行分类,得到测试指标,如图所示,可以看到最终训练得到的模型在测试集上的准确率为83.37%,f1-score为0.8370,各个类别的精确率在图中也有展示。另外还绘制出了实验的混淆矩阵图。

对于多分类任务可在此代码上做简单修改完成多分类任务。

完整的项目地址:github链接 https://github.com/Wind-feng/classification.git

(该项目中利用sklearn包实现了简单的SVM、高斯贝叶斯、多项式贝叶斯算法与MLP进行对比,详细介绍可以参考项目中的readme.md文件。)