Deep Residual Networks (ResNet): Implementing ResNet34, with Some Personal Notes


1. Introduction to Residual Networks

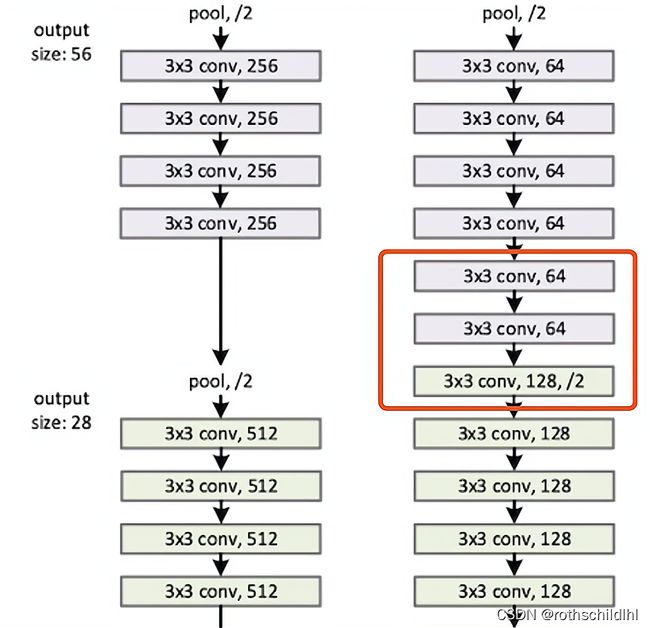

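The residual network (ResNet) is a convolutional neural network proposed by four researchers at Microsoft Research. It took first place in image classification and object detection at the 2015 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). Residual networks are easy to optimize, and they can gain accuracy from a considerable increase in depth. Their residual blocks use skip connections (shortcuts), which ease the training difficulties that come with added depth: vanishing gradients, and the degradation problem, in which a deeper plain network ends up with higher training error. An example of a ResNet architecture is shown below: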

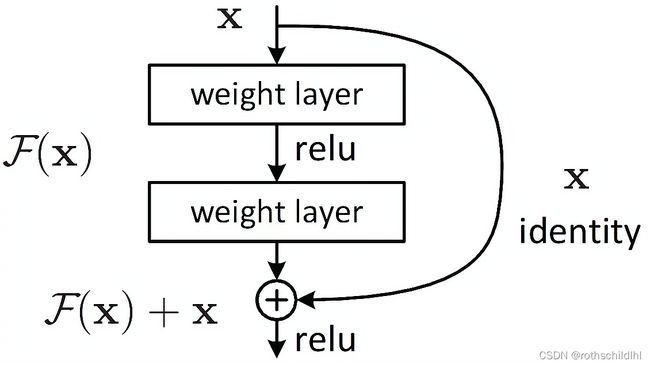
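A short derivation makes the gradient claim above concrete. It uses the notation of the ResNet paper: $\mathcal{F}$ is the stack of weight layers inside a block, $\mathcal{L}$ is the loss, and $W_s$ is a projection. These symbols appear nowhere else in this post. Ignoring the ReLU applied after the addition, a block with an identity shortcut computes

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x},
\qquad
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
\left( \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I \right)
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}} \frac{\partial \mathcal{F}}{\partial \mathbf{x}}
+ \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
$$

Even when $\partial \mathcal{F} / \partial \mathbf{x}$ is small, the gradient reaching $\mathbf{x}$ still contains $\partial \mathcal{L} / \partial \mathbf{y}$ unchanged. When $\mathbf{x}$ and $\mathcal{F}(\mathbf{x})$ differ in shape, the shortcut becomes a projection, $\mathbf{y} = \mathcal{F}(\mathbf{x}) + W_s \mathbf{x}$. The 1×1 convolution shortcut in the code below plays this role.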

2. Shortcuts and Residual Blocks

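Apart from the convolution and pooling at the very beginning and the pooling and fully connected layer at the end, a deep residual network (ResNet) consists of many structurally similar units. What these repeated units have in common is a shortcut that skips over the unit's layers and connects its input directly to its output. Such a unit is called a Residual Block. The structure of a Residual Block is shown in the figure below (the curve labeled "x identity" is the shortcut):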
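The following is a minimal sketch of a single residual block, assuming the same two-3×3-convolution body that this post's code uses. The class name `BasicResidualBlock` and the `nn.Identity` shortcut are illustrative choices and do not come from the original code. The block computes `ReLU(F(x) + shortcut(x))`. The shortcut is the identity when the shapes match and a 1×1 convolution projection otherwise. The usage lines also show why identity mappings are easy to learn: if the last BatchNorm's scale is zero, `F(x)` is zero and the block reduces to `ReLU(x)`.

```python
import torch
from torch import nn


class BasicResidualBlock(nn.Module):
    """Hypothetical minimal block: out = ReLU(F(x) + shortcut(x))."""

    def __init__(self, in_ch: int, out_ch: int, stride: int = 1):
        super().__init__()
        # F(x): conv3x3 -> BN -> ReLU -> conv3x3 -> BN
        self.body = nn.Sequential(
            nn.Conv2d(in_ch, out_ch, 3, stride, 1, bias=False),
            nn.BatchNorm2d(out_ch),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_ch, out_ch, 3, 1, 1, bias=False),
            nn.BatchNorm2d(out_ch),
        )
        # Identity shortcut when shapes match, otherwise a 1x1 conv projection
        if stride == 1 and in_ch == out_ch:
            self.shortcut = nn.Identity()
        else:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_ch, out_ch, 1, stride, bias=False),
                nn.BatchNorm2d(out_ch),
            )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.body(x) + self.shortcut(x))


torch.manual_seed(0)
x = torch.randn(2, 64, 56, 56)
same = BasicResidualBlock(64, 64).eval()               # identity shortcut
down = BasicResidualBlock(64, 128, stride=2).eval()    # projection shortcut
print(same(x).shape)  # torch.Size([2, 64, 56, 56])
print(down(x).shape)  # torch.Size([2, 128, 28, 28])

# Zero the scale of the last BN: in eval mode F(x) becomes 0, so the block is ReLU(x)
with torch.no_grad():
    same.body[4].weight.zero_()
print(torch.equal(same(x), torch.relu(x)))  # True
```

The projection is used only when the stride or channel count changes. Otherwise `F(x)` and `x` could not be added element-wise.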

3. Implementation Logic

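To follow the logic, read the code below in the order given by the numbered labels (1), (2), … in its comments. Pay particular attention to how the number of channels changes from layer to layer. Also note that an nn.Sequential is called in exactly the same way as forward is.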
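The point about Sequential can be checked directly. Calling an `nn.Sequential` runs its `forward`, which passes the input through the sub-modules in the order they were added. The sketch below copies this post's stem (`self.pre`) into a standalone variable `pre` (an illustrative name). It runs the stem once as a whole and once layer by layer, prints the shape after each step, and checks that both give the same result.

```python
import torch
from torch import nn

torch.manual_seed(0)
# Same layers as the stem (self.pre) in the code below: 3 -> 64 channels
pre = nn.Sequential(
    nn.Conv2d(3, 64, 7, 2, 3, bias=False),
    nn.BatchNorm2d(64),
    nn.ReLU(inplace=True),
    nn.MaxPool2d(3, 2, 1),
).eval()

x = torch.randn(1, 3, 224, 224)

# Calling the Sequential as a whole ...
y_seq = pre(x)

# ... matches feeding x through each sub-module in order
y_manual = x
for layer in pre:
    y_manual = layer(y_manual)
    print(type(layer).__name__, tuple(y_manual.shape))

print(torch.allclose(y_seq, y_manual))  # True
```

The stem turns a 3×224×224 input into 64×56×56, which is what the first residual layer receives. In practice, call the module (`pre(x)`) rather than `pre.forward(x)`, because `nn.Module.__call__` also runs any registered hooks.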

4. Code and Results

```python
from torch import nn
import torch as t
from torch.nn import functional as F


class ResidualBlock(nn.Module):  # define the ResidualBlock class (11)
    """Sub-module: a residual block"""

    def __init__(self, inchannel, outchannel, stride=1, shortcut=None):  # runs automatically on construction (12)
        super(ResidualBlock, self).__init__()  # initialize the parent nn.Module (13)
        # Left branch F(x). An nn.Sequential is itself a module: calling it runs its
        # sub-modules in order, the same way calling forward does (14)(31)
        self.left = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, 3, stride, 1, bias=False),
            nn.BatchNorm2d(outchannel),
            nn.ReLU(inplace=True),
            nn.Conv2d(outchannel, outchannel, 3, 1, 1, bias=False),
            nn.BatchNorm2d(outchannel),
        )
        # Right branch: the shortcut. None means an identity shortcut; otherwise it is
        # the 1x1 conv + BN Sequential built in step (8) (15)
        self.right = shortcut

    def forward(self, x):  # forward pass of ResidualBlock (29)
        out = self.left(x)  # call the left Sequential just like calling forward (30)
        if self.right is None:  # identity shortcut (32)
            residual = x  # (33)
        else:  # projection shortcut: still a residual block, but x is resized to match F(x) (34)
            residual = self.right(x)  # (35)
        out += residual  # add the shortcut to F(x) (36)
        print(out.size())  # print each block's output shape to check the channel count (37)
        return F.relu(out)  # apply ReLU; the result is the input of the next block (38)


class ResNet(nn.Module):  # define the ResNet class, i.e. build the residual network (2)
    """Main module: ResNet34 (see the note on the layer configuration below)"""

    def __init__(self, numclasses=1000):  # initialized directly when an instance is created (3)
        super(ResNet, self).__init__()  # initialize the parent nn.Module (4)
        # Stem: 7x7 conv (3 -> 64 channels, stride 2) + BN + ReLU + 3x3 max-pool (stride 2).
        # The sub-modules run in order when the Sequential is called (5)(26)
        self.pre = nn.Sequential(
            nn.Conv2d(3, 64, 7, 2, 3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2, 1),
        )
        # Each layer = 1 block with a projection shortcut + (block_num - 1) blocks with
        # identity shortcuts (6).
        # NOTE: this configuration (64->128 x4, 128->256 x4, 256->256 x6, 256->512 x3)
        # has 36 weighted layers, not 34. The paper's ResNet34 uses 3, 4, 6, 3 blocks
        # with 64, 128, 256, 512 output channels.
        self.layer1 = self.make_layer(64, 128, 4)  # 64 -> 128 channels (6)
        self.layer2 = self.make_layer(128, 256, 4, stride=2)  # 128 -> 256 (18; steps 7-17 repeat)
        self.layer3 = self.make_layer(256, 256, 6, stride=2)  # 256 -> 256 (19; steps 7-17 repeat)
        self.layer4 = self.make_layer(256, 512, 3, stride=2)  # 256 -> 512 (20; steps 7-17 repeat)
        self.fc = nn.Linear(512, numclasses)  # final fully connected layer: 512 -> numclasses (21)

    def make_layer(self, inchannel, outchannel, block_num, stride=1):  # build one layer of block_num blocks (7)
        """Build a layer containing several residual blocks"""
        # Projection shortcut for the first block: a 1x1 conv + BN matching the
        # channel count and stride of the left branch (8)
        shortcut = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, 1, stride, bias=False),
            nn.BatchNorm2d(outchannel),
        )
        layers = []  # list collecting the blocks of this layer (9)
        # first block: projection shortcut (10)
        layers.append(ResidualBlock(inchannel, outchannel, stride, shortcut))
        for _ in range(1, block_num):
            # remaining (block_num - 1) blocks: identity shortcut (16)
            layers.append(ResidualBlock(outchannel, outchannel))
        # Unpack the list as positional arguments: the blocks become sub-modules of a new
        # Sequential that runs them in order when called, just like forward (17)(28)
        return nn.Sequential(*layers)

    def forward(self, x):  # forward pass of ResNet (24)
        x = self.pre(x)  # call the pre Sequential just like calling forward (25)
        x = self.layer1(x)  # call layer1 the same way (27)
        x = self.layer2(x)  # (39; steps 28-38 repeat)
        x = self.layer3(x)  # (40; steps 28-38 repeat)
        x = self.layer4(x)  # (41; steps 28-38 repeat)
        x = F.avg_pool2d(x, 7)  # 7x7 average pooling: (N, 512, 7, 7) -> (N, 512, 1, 1) (42)
        x = x.view(x.size(0), -1)  # flatten to (N, 512) (43)
        return self.fc(x)  # class scores (logits), shape (N, numclasses) (44)


model = ResNet()  # create an instance of the ResNet model (1)
# Input: one 3-channel 224x224 image. Its channel count must match the first conv (3).
# Wrapping it in Variable is no longer needed since PyTorch 0.4 (22)
x = t.randn(1, 3, 224, 224)
output = model(x)  # feeding data to the model calls ResNet.forward (23)
print(output)  # print the result (45)
```

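The complete output of the run is shown below. The first 17 lines are the per-block output shapes printed in ResidualBlock.forward (4 + 4 + 6 + 3 blocks). The final tensor holds the 1000 class scores (logits) of the randomly initialized model, so its values differ from run to run: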

D:\Anaconda\python.exe E:/pythonProjecttest/ResNet34.py

torch.Size([1, 128, 56, 56])

torch.Size([1, 128, 56, 56])

torch.Size([1, 128, 56, 56])

torch.Size([1, 128, 56, 56])

torch.Size([1, 256, 28, 28])

torch.Size([1, 256, 28, 28])

torch.Size([1, 256, 28, 28])

torch.Size([1, 256, 28, 28])

torch.Size([1, 256, 14, 14])

torch.Size([1, 256, 14, 14])

torch.Size([1, 256, 14, 14])

torch.Size([1, 256, 14, 14])

torch.Size([1, 256, 14, 14])

torch.Size([1, 256, 14, 14])

torch.Size([1, 512, 7, 7])

torch.Size([1, 512, 7, 7])

torch.Size([1, 512, 7, 7])

tensor([[-6.9146e-01, 4.2167e-02, 6.7924e-01, 3.3181e-02, -8.8691e-01,

5.7865e-01, -2.2614e-01, 1.1027e-01, -8.9046e-01, -5.5591e-01,

-1.9302e-01, -8.6779e-01, 5.6450e-01, 2.5317e-01, 1.8407e-01,

3.1509e-01, 3.0071e-01, 1.8821e-01, 1.9301e-01, 2.2245e-01,

-7.0432e-02, -5.5133e-01, 1.3677e-01, -5.1272e-01, 1.4352e+00,

1.0956e-01, -5.4226e-02, 2.3303e-01, -1.8693e-01, 8.5983e-02,

-1.6748e-02, 3.5629e-01, 6.9455e-01, -2.1752e-01, -9.7052e-01,

-6.2566e-02, 1.7783e-02, -5.3616e-01, -1.6616e-01, 2.3980e-01,

-6.5388e-01, 4.6447e-01, -1.1445e-01, 3.9800e-01, -6.1762e-01,

2.1847e-01, 3.0629e-01, 9.6355e-01, 4.8554e-01, -1.3560e-01,

-1.1258e+00, 4.5508e-01, -6.5425e-02, 2.2604e-01, 8.4579e-01,

3.5011e-01, -4.8174e-02, 8.6373e-02, 8.3569e-01, 5.2538e-01,

-3.7520e-01, -1.0723e+00, 1.7073e-01, -3.4342e-01, -1.0935e+00,

2.5121e-01, 7.0005e-01, 3.2427e-01, 5.2415e-01, -1.0085e+00,

6.7876e-01, -1.2413e-01, -2.9308e-01, 1.4041e-01, 8.0507e-01,

-1.5743e-01, 7.9150e-01, 8.3295e-01, -3.7907e-01, 1.3265e+00,

3.8708e-02, -2.8288e-01, 3.4668e-01, -2.4022e-01, 8.8009e-01,

4.9280e-01, -5.6786e-01, 5.9342e-01, 1.7836e-02, 6.1642e-01,

-3.7113e-01, 5.5412e-03, -7.4085e-01, 9.4929e-01, -1.7198e-01,

-3.5788e-02, 6.8394e-01, -1.0366e+00, 1.5008e-01, -3.0477e-01,

-2.1622e-01, -6.1331e-01, -5.3795e-01, -2.8243e-01, -3.9197e-02,

3.1893e-01, 3.7748e-01, 3.6212e-01, -6.8486e-02, -4.4467e-01,

-2.1987e-01, 7.9024e-02, -7.7868e-02, 3.7589e-01, -1.2438e+00,

-5.0136e-01, 5.3716e-01, 2.3972e-01, 3.3805e-01, -1.4474e-01,

-4.2362e-01, -6.8376e-03, 1.3206e-01, -1.6898e+00, -1.5755e-01,

-2.9422e-01, 7.4048e-01, -1.0545e+00, -1.0378e-01, 1.7759e-01,

-6.3554e-01, -8.9613e-01, 5.3591e-01, 5.7314e-01, 1.9101e-02,

9.6170e-01, 3.9993e-01, 2.7950e-01, -8.5327e-02, -6.6949e-01,

1.4542e-01, 1.6011e-01, 9.8337e-02, -7.9863e-04, 5.9889e-01,

-9.5556e-01, -4.1383e-01, 2.0492e-01, -3.1978e-01, 7.3895e-01,

2.5687e-01, 7.5821e-01, 9.2650e-01, -1.9801e-01, -6.8704e-01,

4.2957e-01, -1.8863e-01, 4.7952e-01, 9.6284e-01, -4.0856e-01,

-1.9873e-01, -1.0955e-01, 3.9147e-01, 6.4608e-01, 1.1610e-01,

9.1627e-01, 3.2636e-01, -3.3887e-01, -7.0482e-01, 1.0206e+00,

-9.4433e-01, 1.7178e-01, 1.2179e+00, -8.5136e-02, 1.0335e+00,

1.8280e+00, 8.7046e-01, -6.8005e-01, 1.0859e+00, 3.7428e-01,

-1.3247e+00, -1.2270e+00, 9.5850e-01, -8.3043e-01, -1.2327e+00,

-6.2339e-01, 3.4727e-01, 4.3749e-01, 2.7013e-01, -1.0430e-01,

-2.0612e-01, 1.8494e-01, -4.5996e-01, -2.3210e-01, -3.7490e-01,

-8.7007e-01, -6.7116e-01, 2.3342e-01, 2.5999e-01, -3.3351e-01,

2.3589e-01, 9.8910e-02, -8.1655e-01, 2.5841e-01, -8.8194e-01,

6.8509e-01, -2.4890e-02, 9.0165e-01, -5.3347e-01, 2.2197e-01,

1.3163e+00, 8.4227e-02, 6.3555e-01, 7.9277e-01, 1.2936e-01,

1.0270e+00, 8.6476e-01, 7.1398e-02, 1.4991e-01, 4.8816e-01,

4.8867e-01, 1.6423e-01, 1.4731e-01, 6.8859e-01, 1.5584e-01,

2.9952e-01, -7.8371e-01, 1.8490e-01, -2.1610e-02, -5.3605e-01,

-2.5407e-01, 1.7297e-01, 2.2950e-01, -6.2010e-01, 4.6020e-01,

6.3796e-02, 3.1704e-01, -8.6192e-02, 1.5022e-01, -4.5151e-04,

2.5592e-01, -1.0011e+00, 1.0367e+00, -4.9935e-01, 2.3648e-01,

-8.8412e-01, 4.8926e-01, -4.6301e-01, -2.2750e-01, -2.3098e-01,

6.1564e-01, -6.5003e-02, -8.3130e-01, -4.0774e-01, 1.0351e+00,

-2.2826e-01, 1.8550e-01, -2.2818e-01, -9.0132e-01, -1.2123e-02,

-8.1003e-01, -7.1771e-02, -3.9507e-01, -1.7745e+00, 3.3608e-01,

1.4656e+00, 7.2080e-01, 1.8197e-02, 6.9331e-01, 4.4406e-01,

-6.4882e-01, -7.4701e-01, -2.8917e-01, -4.7285e-01, -5.2247e-01,

4.8074e-01, -1.1043e+00, -9.0061e-01, -1.3376e+00, -4.4920e-02,

2.6194e-02, -6.9110e-01, 1.8998e-01, 6.8125e-01, 2.6472e-01,

-1.9500e-01, 1.1790e-01, 6.9329e-01, 8.9654e-02, 1.0810e-01,

-1.2606e-01, -2.9633e-01, 9.3100e-01, -9.3621e-02, -2.2722e-01,

-6.8945e-01, 5.3516e-01, 3.4176e-01, 2.8460e-01, -9.2238e-02,

-5.1156e-01, -3.6413e-02, 1.6606e-01, -7.6782e-02, 1.1313e+00,

4.2051e-01, 5.6609e-01, 3.9709e-01, -1.2401e-01, -8.0785e-01,

-2.2174e-01, 1.7601e-01, 1.4581e-01, 4.0443e-01, -4.0146e-01,

6.8066e-01, -7.1709e-01, -1.6881e-01, -1.3064e-01, -6.2189e-01,

-5.9187e-01, 5.7100e-02, -2.2695e-01, -7.2139e-01, 1.2794e-01,

-4.4380e-01, -1.2599e+00, 9.6533e-01, 6.2063e-01, -5.3975e-01,

-1.1087e+00, 4.9467e-02, 1.1644e+00, -7.1682e-02, -2.0245e-02,

1.1163e+00, 5.0045e-01, -3.5761e-01, -6.9026e-01, -3.6600e-01,

7.0356e-01, 3.2557e-01, -2.7923e-01, 1.1310e+00, 9.7589e-02,

3.4966e-01, 8.2451e-01, 5.8476e-01, 3.8739e-01, -2.3314e-02,

-1.6036e+00, -1.7503e-01, -8.7333e-01, -1.0493e+00, 1.0798e+00,

3.7905e-01, 5.0393e-01, 5.0268e-02, -1.4805e-01, 5.7094e-01,

-2.6040e-01, 5.3364e-01, 5.2263e-01, -1.6035e+00, -3.1318e-01,

4.4510e-01, 2.8889e-01, -8.4225e-01, 1.5495e-01, 8.1316e-01,

4.4937e-01, -6.0873e-01, -2.9327e-01, 7.9566e-02, -8.0235e-01,

-3.9711e-02, -6.8741e-01, 4.1276e-01, 1.9693e-02, 2.6155e-01,

-4.5858e-02, 2.4107e-01, -4.7513e-01, 4.7742e-01, 1.6110e+00,

5.1279e-01, 1.0366e+00, -2.3587e-01, -1.2311e+00, 9.5383e-02,

-1.9257e-01, 5.6524e-01, 1.6578e-01, 5.4815e-01, -1.1047e+00,

1.2389e+00, 5.0674e-01, -5.8751e-01, -5.9493e-01, 3.1465e-01,

2.7059e-02, 1.3419e+00, -8.1904e-02, -1.9739e-01, 5.5819e-01,

-4.7174e-01, -2.3256e-02, -1.2037e+00, -1.1361e+00, -3.1907e-02,

4.5880e-01, -1.4822e+00, -3.3344e-01, 9.3805e-01, 8.1625e-01,

9.1937e-01, -4.8903e-01, -1.1362e+00, -6.4240e-02, 1.5777e+00,

2.9890e-01, -1.0674e-02, 7.2148e-02, 3.1490e-02, 3.1332e-01,

6.6058e-01, -1.0778e+00, 5.9494e-01, -7.8678e-02, -4.3562e-01,

-1.3410e-01, -1.6717e+00, -9.5148e-01, 1.5534e-01, 5.6049e-01,

-1.0570e+00, 1.0599e+00, -3.1479e-01, -4.0848e-01, 9.0255e-01,

-3.4225e-02, -3.1815e-01, 6.3247e-01, 3.4287e-01, -4.6938e-01,

4.0070e-01, 6.5028e-01, 5.2902e-01, 1.8778e-01, -2.3887e-01,

8.0638e-02, 4.6696e-01, -4.9926e-01, -4.4118e-01, -1.8332e-01,

7.9211e-01, -6.7340e-01, -8.6322e-01, 4.1429e-01, -6.0763e-01,

-1.5356e-02, -2.3949e-01, -9.3849e-03, 2.0337e-01, 5.5797e-01,

-7.9195e-01, -4.7047e-01, -1.0102e+00, 3.2557e-01, 3.6674e-01,

-1.1477e+00, -6.8755e-01, -2.2235e-01, -6.2278e-01, -1.5749e+00,

8.6700e-01, 1.9642e-01, 2.5546e-02, -1.5557e+00, -3.0034e-01,

-6.4555e-01, -2.7915e-01, 1.4744e-01, -2.5721e-01, -1.2529e-01,

-3.8708e-01, 2.5351e-01, 4.5655e-01, 1.0690e+00, -2.0470e-01,

-4.3875e-01, -3.5612e-01, 5.4389e-01, -8.2081e-01, 9.2760e-02,

9.5563e-01, 3.3499e-01, 5.8023e-01, 2.7864e-01, -2.9261e-01,

4.4507e-01, -1.3242e-01, -1.7397e-01, 1.0680e+00, -5.7670e-02,

6.2292e-01, 3.0558e-01, -6.8610e-01, -4.7339e-01, 1.7880e-01,

-8.8752e-01, 1.2576e-01, -2.8937e-01, 3.6523e-01, 6.1390e-01,

7.8106e-02, 1.2329e-01, 2.4615e-01, -1.3942e-01, -7.4529e-01,

-5.6265e-01, 6.4526e-01, 1.2768e-01, 6.3676e-01, -5.6866e-02,

-9.1949e-01, 7.2047e-01, 7.1974e-01, -8.6021e-01, -5.0419e-01,

-6.3278e-01, -4.2891e-01, 6.0974e-01, -8.3915e-01, 1.0890e-01,

4.3381e-01, -2.7232e-02, -4.6082e-01, -1.4681e-01, -7.1417e-01,

4.0820e-01, -8.4892e-01, -4.9780e-01, -8.0484e-01, 6.1057e-01,

3.5519e-01, 3.2500e-01, -2.8149e-02, -6.7602e-01, -2.2134e-01,

4.1522e-01, 8.3226e-02, -5.4950e-01, -2.6734e-02, -3.3536e-01,

3.0666e-01, -1.2788e+00, 4.8756e-01, 1.8283e-01, -6.3399e-01,

1.0271e-01, 5.0841e-01, 1.0546e-02, -6.8459e-01, 9.6788e-01,

8.7046e-01, -2.5414e-01, -1.0958e+00, -2.5689e-01, 1.7565e-01,

6.5372e-01, -8.7773e-01, 3.5046e-01, -3.9997e-01, 3.4477e-01,

-1.3991e-01, -2.1905e-01, -3.9819e-01, -1.4343e-01, 6.8163e-02,

-7.6764e-01, 1.6220e-01, 8.5901e-02, -5.1995e-01, 1.8644e-01,

3.6650e-01, 3.5908e-01, -5.7888e-01, -1.3542e+00, 2.6814e-01,

-1.0036e+00, 3.9858e-01, 2.6495e-01, -7.6400e-01, -5.4448e-01,

-5.9419e-01, 4.0788e-01, 1.2597e-01, -2.9616e-01, -1.4144e-01,

1.0728e-01, 4.9507e-01, 1.3832e-01, -2.7218e-01, 1.2166e-01,

8.7592e-01, -3.7296e-01, -1.1912e+00, -7.3491e-01, -7.8698e-01,

1.0110e-01, 1.1248e+00, 2.2468e-01, -7.6400e-01, -8.0860e-02,

5.6361e-01, 8.4763e-01, -1.2029e-01, -3.0640e-01, 6.5850e-01,

-1.1623e-01, -1.4514e-01, -4.6952e-01, 4.6256e-01, -1.3589e-01,

-9.2259e-01, -2.0492e-01, 2.5564e-01, 3.2460e-02, -4.7368e-01,

5.0535e-01, -5.2564e-01, 7.5076e-01, 7.1643e-02, 5.2787e-01,

-4.3318e-01, 9.3648e-02, -7.1120e-01, 2.4847e-01, 9.4715e-01,

-1.4319e-01, -4.8840e-01, 4.1323e-01, 1.6466e-02, 9.0499e-01,

-1.6844e-01, -9.5059e-01, -3.2361e-03, 3.2867e-01, -1.1569e-01,

-4.5970e-01, -1.8464e-01, 2.4641e-01, 2.5110e-01, -9.8225e-01,

-1.0733e-01, -3.3652e-01, -6.6524e-01, 1.4894e-03, -6.4410e-02,

-3.5465e-01, -3.9935e-01, 8.0049e-01, -1.3986e-01, -3.1070e-01,

-6.1491e-01, -3.1478e-01, 6.0563e-01, -6.8818e-01, -6.6083e-01,

-4.0017e-02, 2.7928e-01, 3.8338e-01, -4.5361e-01, -4.3925e-01,

2.5260e-01, 7.6989e-01, 5.1986e-01, -1.9983e-01, 1.3787e+00,

1.0702e-01, 9.0152e-01, 3.2067e-01, -5.8014e-01, -3.3622e-01,

3.6457e-01, 7.0897e-01, 7.3571e-01, -1.2167e-01, -7.7574e-01,

2.0244e-01, -5.7603e-01, -2.2578e-01, 3.9322e-02, -2.7605e-01,

9.4713e-01, -5.2219e-02, 3.5428e-01, -6.4227e-02, -1.7601e-01,

-3.8277e-01, -6.3595e-01, 8.5544e-01, 2.2130e-01, 6.0075e-01,

-3.3424e-01, -9.1250e-02, 3.3984e-01, -6.9116e-01, -8.9798e-01,

-3.6494e-01, 5.9792e-02, 1.3505e+00, 1.2452e+00, 8.0825e-02,

4.9315e-01, -3.3050e-01, -6.0244e-01, -6.4396e-01, -1.0282e-01,

1.3876e-01, -5.7287e-01, 1.3552e+00, 9.9222e-01, 8.3570e-01,

9.3523e-01, -5.6584e-02, -3.1673e-01, -1.2097e+00, 2.4559e-01,

-3.2425e-01, -1.6121e-01, 1.5395e-01, 8.7392e-02, 2.9914e-01,

2.6780e-01, 7.2765e-01, 3.6384e-01, 3.4335e-01, -5.2233e-02,

8.4107e-01, -8.8247e-01, 4.9839e-01, 7.6217e-01, -6.7514e-01,

3.7350e-01, 5.3938e-01, -6.8437e-01, -6.4952e-01, 4.4572e-02,

-2.1992e-01, 6.9987e-01, -2.0559e-01, -3.9114e-01, -2.7535e-01,

1.9988e-01, -3.2468e-01, 3.6333e-01, -4.7070e-01, 5.2315e-01,

-1.6918e-01, -1.7833e-01, 8.5354e-02, 6.9523e-01, 2.7907e-01,

-7.7410e-01, 3.4230e-01, -3.2303e-01, 3.0155e-01, -4.3414e-01,

6.1294e-02, 1.0578e+00, 1.4585e+00, 3.3167e-01, -6.2328e-01,

2.9279e-01, -1.5948e-01, 3.8757e-01, 8.2591e-01, 2.1672e-01,

5.7766e-01, -2.9127e-01, 2.0358e-01, 1.3536e-01, 6.4433e-01,

-1.7001e-01, 1.4311e-01, 6.3427e-01, -2.3018e-01, 1.6083e-01,

7.2771e-01, 5.2825e-01, -7.0237e-01, 4.3208e-01, 9.3117e-01,

-6.8031e-01, 7.0680e-01, 4.3550e-02, -3.3826e-01, -5.0752e-01,

-1.6684e-01, -1.4690e+00, -3.1424e-01, 3.7802e-01, -5.5311e-01,

-2.4757e-01, 5.6382e-01, -4.5089e-01, -2.6551e-01, -1.0993e-01,

-9.6827e-02, 3.3746e-01, -2.6545e-01, 5.2481e-01, 1.6289e-01,

-1.5862e+00, -5.2655e-01, -8.5029e-02, -3.4376e-01, -3.9005e-01,

8.9304e-01, -1.0885e+00, 3.3496e-01, -2.2014e-01, -1.1807e+00,

-5.3162e-01, -8.6276e-01, 2.7761e-01, -3.3421e-01, 6.1454e-01,

9.7243e-02, 1.9139e-01, 1.9339e+00, 2.8953e-01, 8.7675e-02,

-7.9156e-02, -3.8548e-01, 5.9511e-01, -3.0891e-02, 6.5158e-01,

1.5401e-01, -7.9967e-01, -4.8655e-01, 3.4292e-01, 8.8471e-02,

-5.0044e-01, 5.0710e-01, -4.9008e-01, 6.4452e-01, 4.9921e-01,

-1.0480e+00, -4.0598e-01, -4.3537e-01, -4.6960e-01, 1.3774e-01,

2.1498e-01, -9.6566e-01, 5.3262e-02, -4.9292e-01, 5.7287e-02,

3.9360e-01, 1.4621e-01, 4.3472e-01, 9.7618e-02, 3.2723e-01,

-7.5500e-01, 1.8167e-01, -6.0623e-01, 5.3683e-02, -1.3577e-01,

-2.9641e-01, 5.2730e-01, 2.2035e-01, 2.2513e-01, 3.3321e-01,

2.7845e-01, -4.5338e-01, 2.2105e-01, 2.2002e-01, 4.7957e-02,

2.9550e-01, 5.6597e-01, -4.9719e-02, 1.2617e+00, 1.6641e-02,

7.4306e-01, -9.8551e-02, 2.6660e-01, -1.1283e+00, -5.2514e-01,

3.3837e-01, -1.6981e-01, 2.2495e-01, 1.3348e-01, 9.4413e-01,

1.0574e+00, 5.1845e-01, 7.2063e-01, -3.4801e-01, 5.4844e-02,

-4.6694e-01, -8.0858e-01, 1.2096e+00, 9.2510e-01, -4.9450e-01,

3.5264e-01, 2.4308e-01, -3.4107e-02, 1.0145e+00, -4.1692e-01,

3.0685e-02, 5.7708e-01, 7.7829e-01, -1.1859e-01, 6.1153e-01,

-3.2466e-01, -3.0122e-01, 1.0990e+00, 1.6825e-02, 3.6798e-02,

1.6682e-02, -6.6916e-01, 1.0122e+00, 5.2224e-01, 1.7511e-01,

-2.0930e-01, -1.3777e-01, -1.0232e+00, -8.5871e-01, 3.5513e-01,

-2.6112e-01, -1.3086e-01, -3.0986e-01, -3.0738e-01, -1.5250e-01,

-1.2116e+00, -2.1632e-01, 9.3498e-01, -3.7403e-01, 2.5177e-01,

-1.2901e+00, 8.2175e-01, -2.4575e-01, -4.4658e-01, -4.3875e-01,

6.1427e-01, 1.6391e-01, 4.0375e-01, -4.4465e-01, 4.0423e-01,

5.4346e-01, -9.5232e-01, -6.8879e-01, 7.4688e-01, -2.4348e-01,

1.3636e+00, 3.9611e-01, -7.5341e-01, 1.5457e-01, -1.2955e+00,

2.9039e-01, -1.8801e-02, 6.1748e-01, 4.4085e-01, 3.0806e-01,

-1.2679e-01, -7.2623e-01, 3.0089e-01, -3.5200e-01, 1.4377e-01,

-7.3124e-01, -1.9353e-01, 2.7860e-01, -9.7880e-02, 5.4347e-01,

4.5906e-01, -2.5582e-01, 5.1523e-01, -8.1713e-02, 3.3685e-01,

-1.0462e+00, 7.3278e-01, -2.7332e-01, 1.8338e-01, -3.4347e-01,

-5.2576e-01, -6.6501e-01, -1.0592e-01, 3.9719e-01, -2.3533e-01,

3.6105e-01, 1.3998e+00, 4.3156e-01, 1.5737e+00, 2.1686e-01,

-3.5330e-01, -1.0931e+00, 3.6434e-01, -7.3331e-01, -3.8396e-01]],

grad_fn=)

Process finished with exit code 0
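The layer configuration used above (64→128 with 4 blocks, 128→256 with 4, 256→256 with 6, 256→512 with 3) is not the one in the ResNet paper. The paper's 34-layer network uses 3, 4, 6, 3 blocks with 64, 128, 256, 512 channels, and every block of the first stage has an identity shortcut. The following is a sketch of that configuration under these assumptions. The names `BasicBlock`, `ResNet34` and `CONFIG` are hypothetical. I also swapped `F.avg_pool2d(x, 7)` for `nn.AdaptiveAvgPool2d(1)` so that inputs other than 224×224 work. For a reference implementation, `torchvision.models.resnet34` is the usual choice.

```python
import torch
from torch import nn
from torch.nn import functional as F


class BasicBlock(nn.Module):
    """Two 3x3 convs plus a shortcut (identity, or 1x1 projection when the shape changes)."""

    def __init__(self, in_ch, out_ch, stride=1):
        super().__init__()
        self.left = nn.Sequential(
            nn.Conv2d(in_ch, out_ch, 3, stride, 1, bias=False),
            nn.BatchNorm2d(out_ch),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_ch, out_ch, 3, 1, 1, bias=False),
            nn.BatchNorm2d(out_ch),
        )
        self.right = None  # identity shortcut by default
        if stride != 1 or in_ch != out_ch:
            self.right = nn.Sequential(
                nn.Conv2d(in_ch, out_ch, 1, stride, bias=False),
                nn.BatchNorm2d(out_ch),
            )

    def forward(self, x):
        residual = x if self.right is None else self.right(x)
        return F.relu(self.left(x) + residual)


class ResNet34(nn.Module):
    # (out_channels, num_blocks, stride of the first block) for each stage
    CONFIG = [(64, 3, 1), (128, 4, 2), (256, 6, 2), (512, 3, 2)]

    def __init__(self, num_classes=1000):
        super().__init__()
        self.pre = nn.Sequential(
            nn.Conv2d(3, 64, 7, 2, 3, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(3, 2, 1),
        )
        stages, in_ch = [], 64
        for out_ch, num_blocks, stride in self.CONFIG:
            blocks = [BasicBlock(in_ch, out_ch, stride)]
            blocks += [BasicBlock(out_ch, out_ch) for _ in range(num_blocks - 1)]
            stages.append(nn.Sequential(*blocks))
            in_ch = out_ch
        self.layers = nn.Sequential(*stages)
        self.pool = nn.AdaptiveAvgPool2d(1)  # any input size -> (N, 512, 1, 1)
        self.fc = nn.Linear(512, num_classes)

    def forward(self, x):
        x = self.layers(self.pre(x))
        return self.fc(torch.flatten(self.pool(x), 1))


model = ResNet34().eval()

# Weighted layers on the main path: every non-1x1 conv (1 + 2 * 16 = 33) plus the fc layer
main_convs = [m for m in model.modules()
              if isinstance(m, nn.Conv2d) and m.kernel_size != (1, 1)]
print(len(main_convs) + 1)  # 34

with torch.no_grad():
    x = model.pre(torch.randn(1, 3, 224, 224))
    for stage in model.layers:
        x = stage(x)
        print(tuple(x.shape))  # (1, 64, 56, 56), (1, 128, 28, 28), (1, 256, 14, 14), (1, 512, 7, 7)
```

The 1×1 projection convolutions are not counted toward the 34 layers. Only layer2 to layer4 need a projection, because layer1 keeps both the channel count and the spatial size.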