PyTorch image preprocessing: subtracting the mean and dividing by the standard deviation

When preprocessing data with torchvision.transforms, one of the most common operations is:

```python
transforms.Normalize((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
```

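The first tuple, (0.485, 0.456, 0.406), is the mean of the R, G and B channels respectively; the second, (0.229, 0.224, 0.225), is the standard deviation of each channel. `Normalize` computes `(x - mean) / std` per channel. These statistics were computed on the ImageNet dataset with pixel values scaled to [0, 1], so the input must already be in that range (which is what `transforms.ToTensor()` produces). Because they come from ImageNet, many people reuse them.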

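GPU timings depend too heavily on the environment, so I only report run times measured on a laptop CPU. Method 3 has to move the tensor onto the GPU, and that step was very slow (about ten seconds), so I dropped it.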

| Image shape (H, W, C) | numpy (s) | torch.tensor (s) | torchvision.transforms (s) |
|---|---|---|---|
| (168, 300, 3) | 0.0110 | 0.0070 | 0.0050 |
| (1079, 1349, 3) | 0.1899 | 0.1469 | 0.1109 |

In both runs the consistency checks printed `tensor1=tensor2` and `tensor2=tensor3`.

For both image sizes, numpy was the slowest, converting to torch.tensor first was faster, and using torchvision.transforms directly was the fastest.
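A single `time.time()` measurement of a 10 ms operation is noisy. A minimal sketch of a more repeatable comparison uses `timeit.repeat` and keeps the best of several runs. It assumes a synthetic uint8 image in place of the file on disk (so disk I/O is excluded) and float32 constants; the function names `norm_numpy` and `norm_torch` are hypothetical.

```python
import timeit

import numpy as np
import torch

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)
MEAN_T = torch.from_numpy(MEAN)
STD_T = torch.from_numpy(STD)

# Synthetic BGR uint8 image standing in for cv2.imread output (assumption: no disk I/O).
rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(1079, 1349, 3), dtype=np.uint8)

def norm_numpy(bgr):
    # Normalize in numpy, then wrap the result as a (C, H, W) tensor.
    rgb = bgr[:, :, ::-1].astype(np.float32) / 255.0
    out = (rgb - MEAN) / STD
    return torch.from_numpy(np.ascontiguousarray(out)).permute(2, 0, 1)

def norm_torch(bgr):
    # Convert to a tensor first, then normalize in torch.
    t = torch.from_numpy(np.ascontiguousarray(bgr[:, :, ::-1])).float() / 255.0
    return ((t - MEAN_T) / STD_T).permute(2, 0, 1)

for name, fn in [("numpy", norm_numpy), ("torch", norm_torch)]:
    # Best of 3 repeats, each timing 5 calls, reported per image.
    best = min(timeit.repeat(lambda: fn(img), number=5, repeat=3)) / 5
    print(f"{name}: {best * 1000:.2f} ms per image")
```

Taking the minimum rather than the mean filters out interference from other processes; absolute numbers will differ from machine to machine.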

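The program I am currently running on the GPU has very low GPU utilization: around 2% most of the time, and only rarely above 80%. Adding timing printouts showed that `__getitem__()` takes on the order of 0.1 s to return one image. That is very slow from the GPU's point of view: the GPU computes quickly, and when the CPU cannot load and preprocess images as fast as the GPU consumes them, the card sits idle much of the time. Narrowing down the image I/O code showed that subtracting the mean and dividing by the standard deviation in numpy was wasting most of the time, and the higher the input resolution, the longer it takes. As a rule of thumb, each stage of image preprocessing should stay on the order of 0.01 s.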
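A minimal sketch of a `Dataset` whose `__getitem__` keeps normalization on the torch path and builds the mean/std tensors once in `__init__` instead of on every call. The class name `NormalizedImageDataset` and the in-memory list of synthetic images are hypothetical stand-ins; a real dataset would read each file with `cv2.imread`.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader, Dataset

class NormalizedImageDataset(Dataset):  # hypothetical example class
    def __init__(self, images, mean, std):
        self.images = images  # list of (H, W, 3) uint8 BGR arrays (stand-in for file paths)
        # Build the constants once; shape (3,) broadcasts over an (H, W, 3) tensor.
        self.mean = torch.tensor(mean, dtype=torch.float32)
        self.std = torch.tensor(std, dtype=torch.float32)

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        bgr = self.images[idx]
        rgb = np.ascontiguousarray(bgr[:, :, ::-1])  # BGR -> RGB with positive strides
        t = torch.from_numpy(rgb).float().div_(255.0)
        t = (t - self.mean) / self.std
        return t.permute(2, 0, 1)  # (H, W, C) -> (C, H, W)

rng = np.random.default_rng(0)
images = [rng.integers(0, 256, size=(168, 300, 3), dtype=np.uint8) for _ in range(8)]
ds = NormalizedImageDataset(images, (0.485, 0.456, 0.406), (0.229, 0.224, 0.225))

# num_workers > 0 would run __getitem__ in parallel worker processes; 0 keeps the sketch simple.
loader = DataLoader(ds, batch_size=4, num_workers=0)
batch = next(iter(loader))
print(batch.shape)  # torch.Size([4, 3, 168, 300])
```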

```python
import time

import cv2
import numpy as np
import torch
import torchvision.transforms as transforms

img_path = 'F:\\2\\00004.jpg'

PIXEL_MEANS = (0.485, 0.456, 0.406)  # per-channel RGB means (ImageNet)
PIXEL_STDS = (0.229, 0.224, 0.225)   # per-channel RGB standard deviations (ImageNet)
```

Method 1: subtract the mean and divide by the standard deviation in numpy, then convert to torch.tensor

```python
one_start = time.time()
img = cv2.imread(img_path)                # (H, W, C), BGR, uint8
img = img[:, :, ::-1]                     # BGR -> RGB (a view with a negative stride)
img = img.astype(np.float32, copy=False)  # pixel values to float (the dtype changes, so this copies)
img /= 255.0
img -= np.array(PIXEL_MEANS)
img /= np.array(PIXEL_STDS)
tensor1 = torch.from_numpy(img.copy())    # wrap as a torch.tensor (the copy guarantees positive strides)
tensor1 = tensor1.permute(2, 0, 1)        # (H, W, C) -> (C, H, W)
one_end = time.time()
print('sub div in numpy,time {:.4f}'.format(one_end - one_start))
del img
```
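The numpy version above (method 1) makes several full passes over the image: the cast, `/255`, `-mean` and `/std`. One possible refinement, sketched here as an assumption rather than something measured in this post, folds the 1/255 scaling into the constants, since (x/255 - m)/s = x * (1/(255 s)) - m/s, leaving one multiply and one subtract. The constants are kept in float32 so numpy does not upcast the image to float64. The helper names are hypothetical.

```python
import numpy as np

MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

# Folded constants: (x / 255 - mean) / std == x * SCALE - SHIFT
SCALE = (1.0 / (255.0 * STD)).astype(np.float32)
SHIFT = (MEAN / STD).astype(np.float32)

def normalize_stepwise(rgb_uint8):
    x = rgb_uint8.astype(np.float32)
    x /= 255.0
    x -= MEAN
    x /= STD
    return x

def normalize_fused(rgb_uint8):
    x = rgb_uint8.astype(np.float32)
    x *= SCALE  # in place on the float32 copy
    x -= SHIFT
    return x

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(168, 300, 3), dtype=np.uint8)
print(np.allclose(normalize_stepwise(img), normalize_fused(img), atol=1e-5))  # True
```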

Method 2: convert to torch.tensor first, then subtract the mean and divide by the standard deviation

```python
two_start = time.time()
img = cv2.imread(img_path)
img = img[:, :, ::-1]                                # BGR -> RGB
print('img', img.shape, np.min(img), np.max(img))    # debug print (inside the timed region)
tensor2 = torch.from_numpy(img.copy()).float()       # (H, W, C) float32
tensor2 /= 255.0
tensor2 -= torch.tensor(PIXEL_MEANS)                 # shape (3,) broadcasts over the last axis
tensor2 /= torch.tensor(PIXEL_STDS)
tensor2 = tensor2.permute(2, 0, 1)                   # (H, W, C) -> (C, H, W)
two_end = time.time()
print('sub div in torch.tensor,time {:.4f}'.format(two_end - two_start))
del img
```
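Methods 1 and 2 both call `.copy()` before `torch.from_numpy`. This is needed because `img[:, :, ::-1]` is a view with a negative stride, which `torch.from_numpy` refuses to wrap. The small check below uses a tiny hand-made array; since the exact exception text differs between PyTorch versions, it only checks that conversion fails. `np.ascontiguousarray` is shown as an alternative to `.copy()`.

```python
import numpy as np
import torch

bgr = np.arange(2 * 2 * 3, dtype=np.uint8).reshape(2, 2, 3)
rgb_view = bgr[:, :, ::-1]   # a view; the channel stride is negative
print(rgb_view.strides)      # (6, 3, -1)

try:
    torch.from_numpy(rgb_view)
    rejected = False
except (ValueError, RuntimeError):
    rejected = True
print(rejected)  # True

# A contiguous copy has positive strides, so torch accepts it.
t = torch.from_numpy(np.ascontiguousarray(rgb_view))
print(t[0, 0].tolist())  # [2, 1, 0]
```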

Method 3: convert to torch.tensor, move it to the GPU, then subtract the mean and divide by the standard deviation

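```python
# three_start = time.time()
# img = cv2.imread(img_path)
# img = img[:, :, ::-1]
# tensor3 = torch.from_numpy(img.copy()).cuda().float()
# tensor3 /= 255.0                      # needed to match the other methods
# tensor3 -= torch.tensor(PIXEL_MEANS).cuda()
# tensor3 /= torch.tensor(PIXEL_STDS).cuda()
# tensor3 = tensor3.permute(2, 0, 1)    # (H, W, C) -> (C, H, W)
# torch.cuda.synchronize()              # wait for the GPU before stopping the timer
# three_end = time.time()
# print('sub div in torch.tensor on cuda,time {:.4f}'.format(three_end - three_start))
# del img
```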

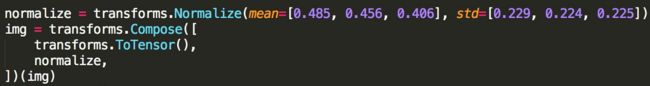
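For method 3, part of the roughly ten seconds is probably one-off CUDA initialization on the first GPU call, and because CUDA kernels run asynchronously, `time.time()` around GPU code may not measure the actual work. A minimal sketch, assuming the same normalization as method 2 (including the division by 255), warms the device up first and calls `torch.cuda.synchronize()` before reading the clock. It falls back to the CPU when no GPU is available, and the name `gpu_normalize` is hypothetical.

```python
import time

import numpy as np
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

def sync():
    # Wait for queued GPU work so the timer includes it; no-op on CPU.
    if device.type == "cuda":
        torch.cuda.synchronize()

mean = torch.tensor([0.485, 0.456, 0.406], device=device)
std = torch.tensor([0.229, 0.224, 0.225], device=device)

rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(1079, 1349, 3), dtype=np.uint8)

def gpu_normalize(rgb_uint8):
    t = torch.from_numpy(rgb_uint8).to(device)  # transfer uint8 (4x smaller than float32)
    t = t.float().div_(255.0)
    t = (t - mean) / std
    return t.permute(2, 0, 1)  # (H, W, C) -> (C, H, W)

gpu_normalize(rgb)  # warm-up: pays one-off initialization costs outside the timed region
sync()

start = time.perf_counter()
out = gpu_normalize(rgb)
sync()
print(f"{device.type}: {time.perf_counter() - start:.4f} s, shape {tuple(out.shape)}")
```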

Method 4: convert to torch.tensor and subtract the mean / divide by the standard deviation with torchvision.transforms

```python
four_start = time.time()
img = cv2.imread(img_path)
img = img[:, :, ::-1]  # BGR -> RGB
transform = transforms.Compose(
    [transforms.ToTensor(), transforms.Normalize(PIXEL_MEANS, PIXEL_STDS)]
)
tensor4 = transform(img.copy())  # ToTensor: (H, W, C) uint8 -> (C, H, W) float in [0, 1]
four_end = time.time()
print('sub div in torch.tensor with torchvision.transforms,time {:.4f}'.format(four_end - four_start))
del img
```

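```python
# Compare with a tolerance; a plain sum of differences can cancel out.
if torch.allclose(tensor1, tensor2, atol=1e-3):
    print('tensor1=tensor2')

# This compares tensor2 with tensor4 (method 4); the printed label is kept as in the output above.
if torch.allclose(tensor2, tensor4, atol=1e-6):
    print('tensor2=tensor3')

# if torch.allclose(tensor3.cpu(), tensor4, atol=1e-6):
#     print('tensor3=tensor4')
```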
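A plain `torch.sum(a - b)` is not a reliable equality test: positive and negative differences cancel, and an exact `== 0` on floats is fragile once the operations run in a different order. Checking the maximum absolute difference, or using `torch.allclose`, avoids both problems. A toy illustration with hand-picked values:

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([2.0, 2.0, 2.0])  # clearly different from a

print(torch.sum(a - b).item())          # 0.0, so a sum test would wrongly report "equal"
print((a - b).abs().max().item())       # 1.0
print(torch.allclose(a, b, atol=1e-5))  # False

c = a + 1e-7                            # tiny floating-point noise
print(torch.allclose(a, c, atol=1e-5))  # True
```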
