摄像头标定(carlibration)、Homography以及它的matlab实现

- Homogeneous Coordinates

- Pin-hole camera Model

- Standard Perspective Camera Model

- affine camera model

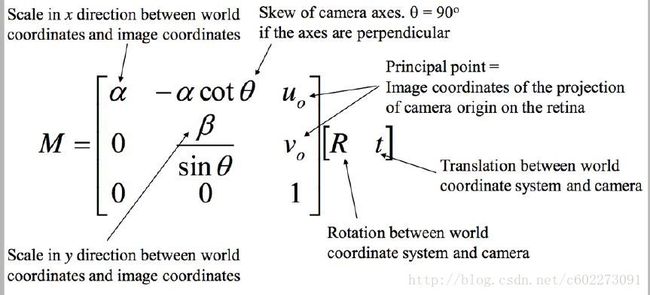

- 计算标定矩阵

- 总结

- homography

- Matlab实现图片拼接

- 计算H

- 把你的照片嵌入海报

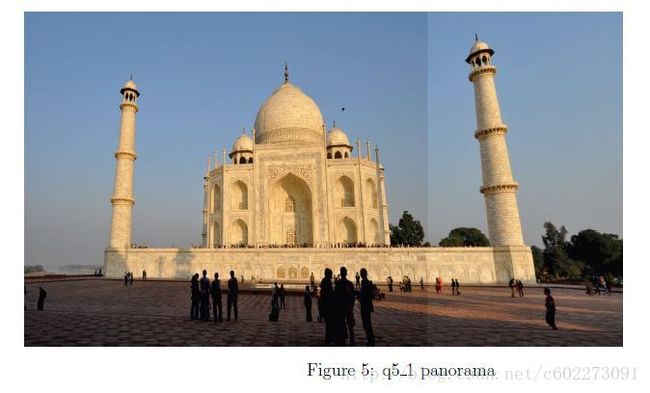

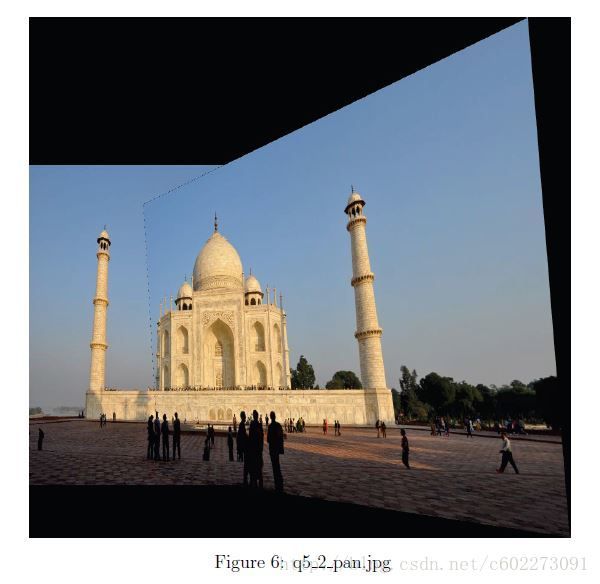

- 图片拼接

转载请注明:http://blog.csdn.net/c602273091/article/details/54572428

Homogeneous Coordinates

点的二维坐标可以用三维表示,因为用二维怎么表示无穷,用无穷符号么?显然不够理想。当w=0的时候,就在无穷远点。

Pin-hole camera Model

在小孔模型的基础上,我们进一步得到摄像头的透视模型。

Standard Perspective Camera Model

当分子为0的时候,那么这时候这个公式表示方向为 a1 的平面,感觉这个东西和ORB-SLAM描述的点云的方向一样。

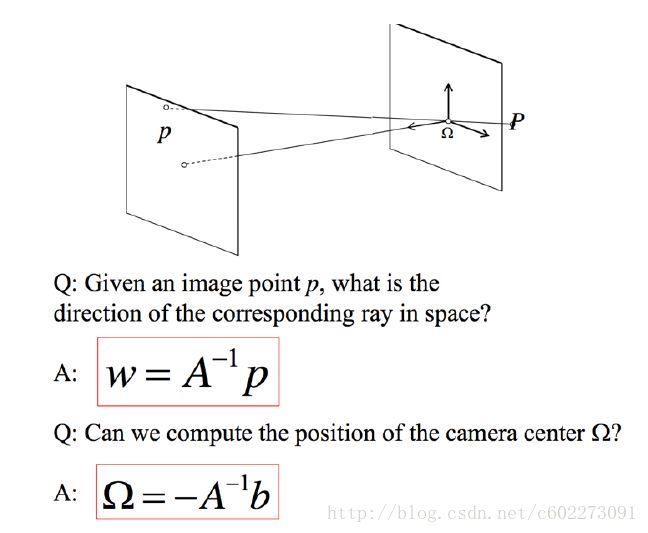

给定一个图片上的平面的点,怎么计算它真实的3D点的拍摄方向以及怎么计算摄像头的中心呢?

现在我才知道Gary讲的东西真是很有用。

affine camera model

如果M矩阵最后一行是[0 0 0 1],那么这就是一个仿射相机,并且真实世界与图片只是线性变化。比如weak Perspective。

计算标定矩阵

总结

对于优化部分不理解的话可以看我代码的实现,里面讲得更加清楚些。

homography

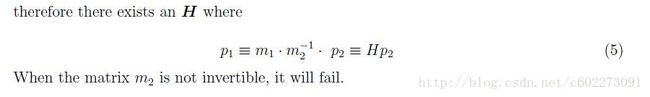

如果你对上面的标定已经了解了的话,对于H矩阵应该更容易理解。H这个指的在低视角(两幅图片之间拍摄角度)、近似平面或平面(这样就是线性变换)情况下,拍摄的图片之间可以进行转换。

比如p1和p2分别是两幅图片上的点,对应同一个3D坐标。那么在满足低视角、近乎平面的时候就有:(看我的第一次CV作业的理论部分)

Matlab实现图片拼接

计算H

把上面的式子写出来计算它的H,代码如下:

这里要记住matlab是列存储的。

% YU CHEN

% Q 4.1

% 2016

% CMU ECE

function H2to1 = computeH(p1, p2)

N = size(p1, 2); % the number of points

A = zeros(2*N, 9); % set A to the required size

p1 = p1';

p2 = p2';

for i = 1 : N

j = bitshift(i, 1);

A(j - 1,:) = [p2(i,:), 1, zeros(1,3), -p1(i,1)*[p2(i,:), 1]];

A(j, :) = [zeros(1,3), p2(i,:), 1, -p1(i,2)*[p2(i,:), 1]];

end

ATA = A'* A;

[EigVector, EigValue] = eig(ATA);

EigV = diag(EigValue); % get eign value from symmetry line.

[~,pos] = min(EigV); % get the minimal value position.

H = EigVector(:, pos); % get the vectoe with smallest eign value.

H2to1 = reshape(H,3,3)';把你的照片嵌入海报

实现代码如下:如果你看我的代码会发现我的代码用了很多matlab技巧做加速,而且基本看不到for循环。

function q42checker

img_PNCpark = imread('pnc.jpg');

img_yourname = imread('pnc_tomap.jpg');

A = load('Q4.2.p1p2.mat');

[img_yourname_warped, img_PNCpark_yourname]=warp2PNCpark(img_PNCpark, img_yourname, A.p1, A.p2);

A.p1 = A.p1';

A.p2 = A.p2';

colors = ['r+'; 'b+'; 'g+'; 'm+'; 'c+'; 'k+'; 'w+'; 'y+'];

subaxis(2,2,1, 'Spacing', 0.03, 'Padding', 0, 'Margin', 0);

imshow(img_PNCpark);

hold on;

for u=1:size(A.p1, 1)

plot(A.p1(u, 1), A.p1(u, 2), colors(mod(u-1,size(colors,1))+1, :), 'MarkerSize', 24, 'LineWidth', 6);

end

subaxis(2,2,2, 'Spacing', 0.03, 'Padding', 0, 'Margin', 0);

imshow(img_yourname);

hold on;

for u=1:size(A.p2, 1)

plot(A.p2(u, 1), A.p2(u, 2), colors(mod(u-1,size(colors,1))+1, :), 'MarkerSize', 24, 'LineWidth', 6);

end

subaxis(2,2,3, 'Spacing', 0.03, 'Padding', 0, 'Margin', 0);

imshow(img_yourname_warped);

subaxis(2,2,4, 'Spacing', 0.03, 'Padding', 0, 'Margin', 0);

imshow(img_PNCpark_yourname);

end% YU CHEN

% Q 4.3

% 2016

function [img_YuChen_warped, img_PNCpark_yourname] = warp2PNCpark(img_PNCpark, img_YuChen, p1, p2)

H = computeH(p1, p2);

% get warped name

img_YuChen_warped = warpH(img_YuChen, H, size(img_PNCpark), 0);

% combine warped image with mine

R0 = ~img_YuChen_warped(:,:,1)>0;

G0 = ~img_YuChen_warped(:,:,2)>0;

B0 = ~img_YuChen_warped(:,:,3)>0;

R0 = uint8(R0);

G0 = uint8(G0);

B0 = uint8(B0);

img_PNCpark_yourname = img_PNCpark;

img_PNCpark_yourname(:,:,1) = img_PNCpark(:,:,1).*R0;

img_PNCpark_yourname(:,:,2) = img_PNCpark(:,:,2).*G0;

img_PNCpark_yourname(:,:,3) = img_PNCpark(:,:,3).*B0;

img_PNCpark_yourname = img_PNCpark_yourname + img_YuChen_warped;% Draw lines between corresponding points in two image

% img1, img2, two images of equal size

% pts - a 4xN matrix of points, where pts(1:2,:) are the points in image

% 1 and pts(3:4,:) are points in image 2.

function [] = plotMatches(img1,img2, pts)

if (size(img1) ~= size(img2))

fprintf('Images must be the same size.\n');

return

end

img = [img1 img2];

imshow(img);

axis equal;

lx = [pts(1,:); pts(3,:)+size(img1,2)];

ly = [pts(2,:); pts(4,:)];

perm = randperm(size(pts,2)) ;

sel = perm(1:100) ;

line(lx(:,sel),ly(:,sel),'Color','g');

end% YU CHEN

% Q 4.2

% 2016

%clc;

%clear all;

img1 = imread('pnc.jpg');

img2 = imread('pnc_tomap.jpg');

[points1,points2] = cpselect(img1,img2);

% use cpselect to save 2 sets of point pairs

% ... move to p1 and p2 as required

p1 = p1';

p2 = p2';

save('Q4.2.p1p2.mat', 'p1', 'p2'); % save it图片拼接

function [H2to1_q51,warped_q51,panoImg_q51]= q5_1(im1,im2,pts)

H2to1_q51 = computeH(pts(1:2,:), pts(3:4,:));

% save H2to1

save('q5_1.mat', 'H2to1_q51'); % save it

w = size(pts, 2);

thirdCol = ones(w, 1);

pt1 = [(pts(1:2,:))', thirdCol];

pt2 = [(pts(3:4,:))', thirdCol];

% save warped feature points

taj2totaj1warpedFeature = H2to1_q51*pt2';

% normalization, very important

for i = 1:w

taj2totaj1warpedFeature(:,i) = taj2totaj1warpedFeature(:,i)./taj2totaj1warpedFeature(3,i);

end

save('q5_1_warpedFeatures.mat', 'taj2totaj1warpedFeature');

% calculate RMSE

diff = pts(1:2,:)-taj2totaj1warpedFeature(1:2,:);

numP = size(diff, 2);

RMSE = sqrt(sum(diff(1,:).^2 + diff(2,:).^2)/numP);

disp('The ROOT MEAN SQUARE ERROR BETWEEN CORRESPONDING POINTS is');

format short g;

RMSE

outSize = [size(im1,1), 3000];

img2Size = size(im2);

Img2Map = zeros(img2Size(1:2));

%round((pts(3:4,:))')

Img2Map(round((pts(4,:))'), round((pts(3,:))')) = 1;

Img2Map = uint8(Img2Map);

%size(im2(:,:,1))

%im2(:,:,1) = im2(:,:,1).*Img2Map;

%im2(:,:,2) = im2(:,:,2).*Img2Map;

%im2(:,:,3) = im2(:,:,3).*Img2Map;

% get warped image

warped_q51 = warpH(im2, H2to1_q51, outSize, 0);

imwrite(warped_q51,'q5_1.jpg','jpg');

imshow( warped_q51);

% produce panorama

panoImg_q51 = warped_q51;

row1 = 1;

col1 = 2;

%panoImg_q51(round((pts(row1,:))'), round((pts(col1,:))'), 1) = im1(round((pts(row1,:))'), round((pts(col1,:))'), 1);

%panoImg_q51(round((pts(row1,:))'), round((pts(col1,:))'), 2) = im1(round((pts(row1,:))'), round((pts(col1,:))'), 2);

%panoImg_q51(round((pts(row1,:))'), round((pts(col1,:))'), 3) = im1(round((pts(row1,:))'), round((pts(col1,:))'), 3);

img1Size = size(im1);

%[X, Y] = meshgrid(1:img1Size(2), 1:img1Size(1));

%lengthIm = img1Size(1)*img1Size(2);

%X = reshape(X, lengthIm, 1);

%Y = reshape(Y, lengthIm, 1);

%m1Index = [Y, X];

%m1IndexRow = m1Index(:,row1);

%m1IndexCol = m1Index(:,col1);

%panoImg_q51(m1IndexRow, m1IndexCol, 1) = im1(m1IndexRow, m1IndexCol, 1);

%panoImg_q51(m1IndexRow, m1IndexCol, 2) = im1(m1IndexRow, m1IndexCol, 2);

%panoImg_q51(m1IndexRow, m1IndexCol, 3) = im1(m1IndexRow, m1IndexCol, 3);

panoImg_q51(1:img1Size(1), 1:img1Size(2), 1) = im1(1:img1Size(1), 1:img1Size(2), 1);

panoImg_q51(1:img1Size(1), 1:img1Size(2), 2) = im1(1:img1Size(1), 1:img1Size(2), 2);

panoImg_q51(1:img1Size(1), 1:img1Size(2), 3) = im1(1:img1Size(1), 1:img1Size(2), 3);

imshow(panoImg_q51);

imwrite(panoImg_q51,'q5_1_pan.jpg','jpg');

end

function [H2to1, panoImg_q51] = q5_2(img1, img2, pts)

% calculate H

H2to1 = computeH(pts(1:2,:), pts(3:4,:));

% four corners

c1 = [0,size(img1, 2), size(img1, 2),0; 0,0,size(img1, 1),size(img1, 1); 1,1,1,1];

c2 = [0,size(img2, 2), size(img2, 2),0; 0,0,size(img2, 1),size(img2, 1); 1,1,1,1];

% warp corners

c1_warped = c1;

c2_warped = H2to1*c2;

% normalize to 1

for i = 1:4

c2_warped(:,i) = c2_warped(:,i)./c2_warped(3,i);

end

c1_warped = round(c1_warped);

c2_warped = round(c2_warped);

maxY = max([c1_warped(2,:),c2_warped(2,:)]);

minY = min([c1_warped(2,:),c2_warped(2,:)]);

maxX = max([c1_warped(1,:),c2_warped(1,:)]);

minX = min([c1_warped(1,:),c2_warped(1,:)]);

% calculate the four corners after transformation

out_size = [maxY - minY, maxX - minX];

% calculate scalar

s = 1280/out_size(2);

M = [s, 0, -s*minX; 0, s, -s*minY; 0, 0, 1];

out_size = round(s*out_size);

% warp img1 and img2

warp_im1=warpH(img1, M, out_size, 0);

warp_im2=warpH(img2, M*H2to1, out_size, 0);

% calculate overlapped area and set to 0

R2 = uint8(~warp_im2(:,:,1)>0);

G2 = uint8(~warp_im2(:,:,2)>0);

B2 = uint8(~warp_im2(:,:,3)>0);

panoImg_q51 = warp_im1;

panoImg_q51(:,:,1) = warp_im1(:,:,1).*R2;

panoImg_q51(:,:,2) = warp_im1(:,:,2).*G2;

panoImg_q51(:,:,3) = warp_im1(:,:,3).*B2;

% combine two warped images

panoImg_q51 = panoImg_q51 + warp_im2;

imwrite(panoImg_q51,'q5_2_pan.jpg','jpg');

end我做的这个完整的代码不就会放在Github上

点击这里

希望可以给作者一颗心,鼓励作者继续前进~

PS:以上ppt截图来自于CMU ECE的Gary老师。