PaddleOCR Text Detection Source Code Study (2): Loss Functions (1)

2021SC@SDUSC

Code location: ppocr -> losses -> det_basic_loss.py

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import numpy as np

import paddle
from paddle import nn
import paddle.nn.functional as F

class BalanceLoss(nn.Layer):
    def __init__(self,
                 balance_loss=True,
                 main_loss_type='DiceLoss',
                 negative_ratio=3,
                 return_origin=False,
                 eps=1e-6,
                 **kwargs):
        """
        Analysis of this loss function:
        the balance loss for Differentiable Binarization (DB) text detection.

        Args:
            balance_loss (bool): whether to balance the loss; default True.
            main_loss_type (str): one of 'CrossEntropy', 'DiceLoss',
                'Euclidean', 'BCELoss', 'MaskL1Loss'; default 'DiceLoss'.
            negative_ratio (int|float): maximum number of negative samples
                kept per positive sample; default 3.
            return_origin (bool): whether to also return the unbalanced
                loss; default False.
            eps (float): small constant that keeps the denominators
                non-zero; default 1e-6.
        """
        super(BalanceLoss, self).__init__()
        self.balance_loss = balance_loss
        self.main_loss_type = main_loss_type
        self.negative_ratio = negative_ratio
        self.return_origin = return_origin
        self.eps = eps

        if self.main_loss_type == "CrossEntropy":
            self.loss = nn.CrossEntropyLoss()
        elif self.main_loss_type == "Euclidean":
            self.loss = nn.MSELoss()
        elif self.main_loss_type == "DiceLoss":
            self.loss = DiceLoss(self.eps)
        elif self.main_loss_type == "BCELoss":
            self.loss = BCELoss(reduction='none')
        elif self.main_loss_type == "MaskL1Loss":
            self.loss = MaskL1Loss(self.eps)
        else:
            loss_type = [
                'CrossEntropy', 'DiceLoss', 'Euclidean', 'BCELoss', 'MaskL1Loss'
            ]
            raise Exception(
                "main_loss_type in BalanceLoss() can only be one of {}".format(
                    loss_type))

    def forward(self, pred, gt, mask=None):
        """
        The balance loss for Differentiable Binarization text detection.

        Args:
            pred: predicted feature map.
            gt: ground-truth (i.e. correctly annotated) feature map.
            mask: mask map.
        Returns:
            The balanced loss.
        """
        # Not executed: if the main loss is DiceLoss, i.e. a loss that
        # returns a scalar, perform OHEM on the mask instead:
        #     mask = ohem_batch(pred, gt, mask, self.negative_ratio)
        #     loss = self.loss(pred, gt, mask)

        # Note: mask defaults to None in the signature, but the arithmetic
        # below requires an actual tensor.
        positive = gt * mask
        negative = (1 - gt) * mask

        positive_count = int(positive.sum())
        # Keep at most negative_ratio negatives per positive.
        negative_count = int(
            min(negative.sum(), positive_count * self.negative_ratio))
        loss = self.loss(pred, gt, mask=mask)

        if not self.balance_loss:
            return loss

        positive_loss = positive * loss
        negative_loss = negative * loss
        negative_loss = paddle.reshape(negative_loss, shape=[-1])
        if negative_count > 0:
            # Sort descending and keep only the hardest negatives.
            sort_loss = negative_loss.sort(descending=True)
            negative_loss = sort_loss[:negative_count]
            # negative_loss, _ = paddle.topk(negative_loss, k=negative_count_int)
            balance_loss = (positive_loss.sum() + negative_loss.sum()) / (
                positive_count + negative_count + self.eps)
        else:
            balance_loss = positive_loss.sum() / (positive_count + self.eps)
        if self.return_origin:
            return balance_loss, loss

        return balance_loss

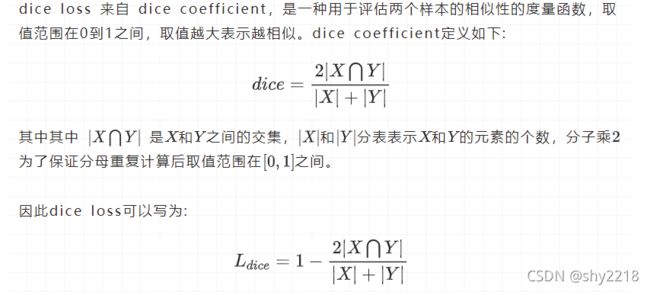
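
To make the hard-negative selection in `BalanceLoss.forward` concrete, here is a minimal NumPy sketch of the same arithmetic. It is not PaddleOCR code: the function name `balance_loss_numpy` and the toy arrays are illustrative assumptions, and the per-pixel loss is passed in directly instead of being computed by `self.loss`.

```python
import numpy as np


def balance_loss_numpy(loss, gt, mask, negative_ratio=3, eps=1e-6):
    """Hypothetical NumPy restatement of the balancing step (not a PaddleOCR API).

    loss, gt and mask are arrays of the same shape; loss holds per-pixel values.
    """
    positive = gt * mask
    negative = (1 - gt) * mask
    positive_count = int(positive.sum())
    # Keep at most negative_ratio negatives per positive.
    negative_count = int(min(negative.sum(), positive_count * negative_ratio))

    positive_loss = positive * loss
    negative_loss = (negative * loss).reshape(-1)
    if negative_count > 0:
        # Sort descending and keep only the hardest negatives.
        hardest = np.sort(negative_loss)[::-1][:negative_count]
        return (positive_loss.sum() + hardest.sum()) / (
            positive_count + negative_count + eps)
    return positive_loss.sum() / (positive_count + eps)


# Toy data (assumed): 1 positive pixel and 5 negative pixels, all inside the mask.
gt = np.array([1.0, 0.0, 0.0, 0.0, 0.0, 0.0])
mask = np.ones_like(gt)
loss = np.array([0.5, 0.9, 0.1, 0.8, 0.2, 0.7])
result = balance_loss_numpy(loss, gt, mask)
```

With `negative_ratio=3`, only the three hardest negatives (0.9, 0.8, 0.7) are kept and the two easy ones are dropped, so `result` is about 2.9 / 4.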

class DiceLoss(nn.Layer):
    def __init__(self, eps=1e-6):
        super(DiceLoss, self).__init__()
        self.eps = eps

    def forward(self, pred, gt, mask, weights=None):
        """
        DiceLoss function.
        """

        assert pred.shape == gt.shape
        assert pred.shape == mask.shape
        if weights is not None:
            assert weights.shape == mask.shape
            mask = weights * mask
        intersection = paddle.sum(pred * gt * mask)

        union = paddle.sum(pred * mask) + paddle.sum(gt * mask) + self.eps
        loss = 1 - 2.0 * intersection / union
        assert loss <= 1
        return loss

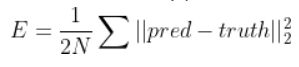
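
As a quick sanity check of the Dice formula used in `DiceLoss.forward`, the following NumPy sketch evaluates it at the two extremes. The helper `dice_loss_numpy` and the 2x2 arrays are assumptions made for illustration, not part of PaddleOCR.

```python
import numpy as np


def dice_loss_numpy(pred, gt, mask, eps=1e-6):
    """Hypothetical NumPy version of: 1 - 2 * intersection / union."""
    intersection = np.sum(pred * gt * mask)
    union = np.sum(pred * mask) + np.sum(gt * mask) + eps
    return 1 - 2.0 * intersection / union


# Toy label (assumed): the top row is text, the bottom row is background.
gt = np.array([[1.0, 1.0], [0.0, 0.0]])
mask = np.ones_like(gt)

perfect = dice_loss_numpy(gt, gt, mask)         # prediction equals the label
disjoint = dice_loss_numpy(1.0 - gt, gt, mask)  # prediction misses every text pixel
```

A perfect prediction gives a loss close to 0 (not exactly 0, because of `eps`), while a prediction with no overlap gives a loss of 1, which is consistent with the `assert loss <= 1` in the class.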

class MaskL1Loss(nn.Layer):
    def __init__(self, eps=1e-6):
        super(MaskL1Loss, self).__init__()
        self.eps = eps

    def forward(self, pred, gt, mask):
        """
        Mask L1 Loss
        """
        loss = (paddle.abs(pred - gt) * mask).sum() / (mask.sum() + self.eps)
        loss = paddle.mean(loss)
        return loss

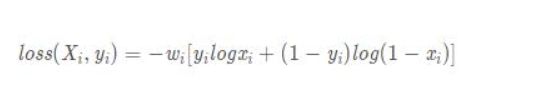
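
The masked L1 loss is the mean absolute error over the pixels where the mask is 1. A small NumPy sketch, with an assumed helper name and assumed toy values, shows the effect of the mask. Since the division already yields a single value, the trailing `paddle.mean` in the class does not change the result.

```python
import numpy as np


def mask_l1_loss_numpy(pred, gt, mask, eps=1e-6):
    """Hypothetical NumPy version of the masked L1 loss."""
    return (np.abs(pred - gt) * mask).sum() / (mask.sum() + eps)


pred = np.array([0.2, 0.9, 0.4, 0.0])
gt = np.array([0.0, 1.0, 1.0, 1.0])
mask = np.array([1.0, 1.0, 1.0, 0.0])  # the last pixel is ignored

value = mask_l1_loss_numpy(pred, gt, mask)
```

The absolute errors are 0.2, 0.1, 0.6 and 1.0, but the last one is masked out, so `value` is about 0.9 / 3 = 0.3 rather than 1.9 / 4.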

class BCELoss(nn.Layer):
    def __init__(self, reduction='mean'):
        super(BCELoss, self).__init__()
        self.reduction = reduction

    def forward(self, input, label, mask=None, weight=None, name=None):
        loss = F.binary_cross_entropy(input, label, reduction=self.reduction)
        return loss
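
Note that `BalanceLoss` constructs `BCELoss(reduction='none')`, and that `BCELoss.forward` accepts `mask` and `weight` but does not use them. The unreduced form matters because the balancing step multiplies the loss by the positive and negative maps and sorts the negatives, which needs one loss value per pixel. The sketch below illustrates the element-wise formula in NumPy; the helper name and the clipping constant are assumptions added for numerical safety, not Paddle's implementation.

```python
import numpy as np


def bce_elementwise(pred, label, clip=1e-7):
    """Hypothetical per-element binary cross-entropy (reduction='none')."""
    p = np.clip(pred, clip, 1 - clip)  # assumed guard against log(0)
    return -(label * np.log(p) + (1 - label) * np.log(1 - p))


pred = np.array([0.9, 0.1, 0.8])
label = np.array([1.0, 0.0, 0.0])

per_pixel = bce_elementwise(pred, label)  # one value per pixel, same shape as pred
```

The third pixel is a confident false positive, so its loss is the largest of the three; this is the kind of hard negative that the sorting step in `BalanceLoss` keeps.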

def ohem_single(score, gt_text, training_mask, ohem_ratio):
    # Number of positive pixels that are not excluded by the training mask.
    pos_num = int(np.sum(gt_text > 0.5)) - int(
        np.sum((gt_text > 0.5) & (training_mask <= 0.5)))

    if pos_num == 0:
        # selected_mask = gt_text.copy() * 0 # may be not good
        selected_mask = training_mask
        selected_mask = selected_mask.reshape(
            1, selected_mask.shape[0], selected_mask.shape[1]).astype('float32')
        return selected_mask

    neg_num = int(np.sum(gt_text <= 0.5))
    neg_num = int(min(pos_num * ohem_ratio, neg_num))

    if neg_num == 0:
        selected_mask = training_mask
        selected_mask = selected_mask.reshape(
            1, selected_mask.shape[0], selected_mask.shape[1]).astype('float32')
        return selected_mask

    neg_score = score[gt_text <= 0.5]
    # Sort the negative-sample scores from high to low.
    neg_score_sorted = np.sort(-neg_score)
    threshold = -neg_score_sorted[neg_num - 1]
    # Select the mask of the high-scoring negative samples and the positive samples.
    selected_mask = ((score >= threshold) |
                     (gt_text > 0.5)) & (training_mask > 0.5)
    selected_mask = selected_mask.reshape(
        1, selected_mask.shape[0], selected_mask.shape[1]).astype('float32')
    return selected_mask

def ohem_batch(scores, gt_texts, training_masks, ohem_ratio):
    scores = scores.numpy()
    gt_texts = gt_texts.numpy()
    training_masks = training_masks.numpy()

    selected_masks = []
    for i in range(scores.shape[0]):
        selected_masks.append(
            ohem_single(scores[i, :, :], gt_texts[i, :, :], training_masks[
                i, :, :], ohem_ratio))

    selected_masks = np.concatenate(selected_masks, 0)
    selected_masks = paddle.to_tensor(selected_masks)

    return selected_masks
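
The thresholding in `ohem_single` can be traced on a tiny example. The function below is a condensed restatement written for illustration only: the name `select_ohem_mask` and the 2x2 inputs are assumptions, and unlike `ohem_single` it does not add the leading axis of size 1.

```python
import numpy as np


def select_ohem_mask(score, gt_text, training_mask, ohem_ratio):
    """Condensed, hypothetical restatement of the selection in ohem_single."""
    pos_num = int(np.sum((gt_text > 0.5) & (training_mask > 0.5)))
    neg_num = int(min(pos_num * ohem_ratio, np.sum(gt_text <= 0.5)))
    if pos_num == 0 or neg_num == 0:
        return training_mask.astype('float32')
    # The neg_num-th highest negative score becomes the threshold.
    neg_score_sorted = np.sort(-score[gt_text <= 0.5])
    threshold = -neg_score_sorted[neg_num - 1]
    selected = ((score >= threshold) | (gt_text > 0.5)) & (training_mask > 0.5)
    return selected.astype('float32')


# Toy inputs (assumed): one text pixel, three background pixels, nothing masked out.
score = np.array([[0.9, 0.8], [0.3, 0.1]])
gt_text = np.array([[1.0, 0.0], [0.0, 0.0]])
training_mask = np.ones_like(score)

selected = select_ohem_mask(score, gt_text, training_mask, ohem_ratio=1)
```

With `ohem_ratio=1` there is one positive, so one negative is kept: the threshold becomes 0.8, and the selected mask contains the text pixel plus the background pixel scored 0.8, while the two easy background pixels are dropped.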

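- The loss functions mentioned above

- What does "mask" mean?

Basic definition:

A selected image, shape, or object is used to cover the image being processed (all or part of it) in order to control which region is processed or how it is processed. The image or object used for covering is called a mask or template. In optical image processing, a mask can be a film, a filter, and so on. In digital image processing, a mask is a two-dimensional matrix, and sometimes a multi-valued image is used.

In digital image processing, image masks are mainly used for:

① Extracting a region of interest. Multiplying the image by a prepared region-of-interest mask yields the region-of-interest image: values inside the region stay unchanged, and values outside it all become 0.

② Shielding. A mask shields certain regions of the image so that they are excluded from processing or from the computation of processing parameters, or so that only the shielded region is processed or counted.

③ Structural feature extraction. Similarity measures or image-matching methods are used to detect and extract structural features in the image that resemble the mask.

④ Producing images with special shapes.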
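
The first two uses are the ones that appear in the loss functions above, where every loss multiplies by `mask` before summing. A minimal NumPy sketch with an assumed 3x3 image and mask illustrates both:

```python
import numpy as np

# Toy image and binary mask (assumed values for illustration).
image = np.array([[10, 20, 30],
                  [40, 50, 60],
                  [70, 80, 90]], dtype=np.float32)
mask = np.array([[0, 0, 0],
                 [0, 1, 1],
                 [0, 1, 1]], dtype=np.float32)

# (1) Region-of-interest extraction: values inside the mask are kept,
#     values outside become 0.
roi = image * mask

# (2) Shielding: compute a statistic over the unmasked pixels only.
masked_mean = (image * mask).sum() / mask.sum()
```

Here `roi` keeps only the lower-right 2x2 block, and `masked_mean` averages just those four pixels (50, 60, 80, 90), in the same way that `MaskL1Loss` divides by `mask.sum()` rather than by the total number of pixels.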