吴恩达机器学习python作业之K-means

参考链接:吴恩达|机器学习作业7.0.k-means聚类_学吧学吧终成学霸的博客-CSDN博客

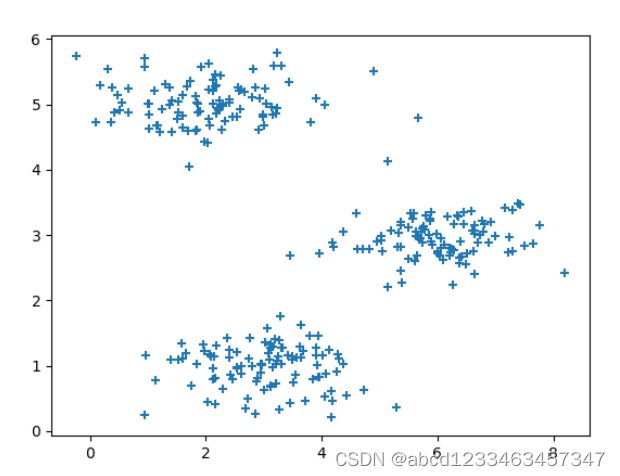

任务一:实现K-means算法

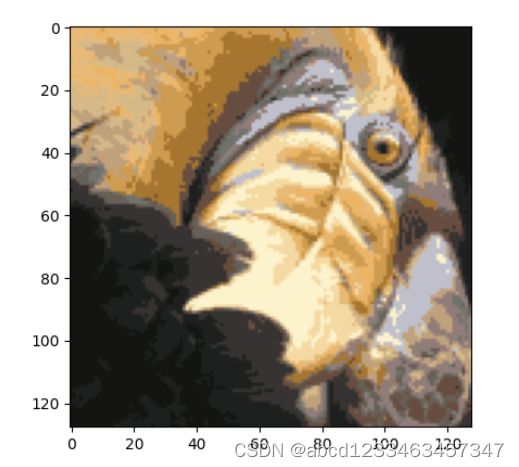

任务二:使用K-means算法进行图像压缩

任务一:实现K-means算法

numpy中的norm()函数求范数_若水cjj的博客-CSDN博客_np.norm

import numpy as np

from matplotlib import pyplot as plt

from scipy import io

import random

#1.读取数据

dt = io.loadmat("E:\机器学习\吴恩达\data_sets\ex7data2.mat")

x = dt["X"] #(300,2)

#2.可视化数据集

"""plt.scatter(x[:,0],x[:,1],marker="+")

plt.show()"""

#3.实现K-means算法

def findClosestCentroids(x,center):

samples = x.shape[0] #样本数量:300

centroids = np.zeros(samples)

for i in range(samples):

temp = x[i,:] - center

c_temp = np.linalg.norm(x=temp, ord=2,axis=1,keepdims=False)

#axis=1表示对矩阵的每一行求范数

#keepdims=False表示结果不保留二维特性

centroids[i] = np.argmin(c_temp)

#找出最大值的索引

return centroids

def computeCentroids(x,classes):

result = np.zeros((3,2))

for i in range(3):

index = np.where(classes == i)

temp_x = x[index]

result[i,:] = np.sum(temp_x,axis=0)/temp_x.shape[0]

return result

def K_means(x,centroids):

#不放回地取样k个样本作为簇中心

for i in range(10):

classes = findClosestCentroids(x,centroids)

centroids = computeCentroids(x, classes)

return centroids

# [[2.42830111 3.15792418]

# [5.81350331 2.63365645]

# [7.11938687

# 3.6166844]]

k=3

centroids = np.array([[3, 3], [6, 2], [8, 5]])

centroids = K_means(x,centroids)

classes = findClosestCentroids(x,centroids)

colors = ['r', 'g', 'b']

markers = ['o','+','x']

for i in range(3):

index_x = np.where(classes==i)

xi = x[index_x]

plt.scatter(xi[:,0],xi[:,1],c=colors[i],marker=markers[i])

plt.show()

任务二:使用K-means算法进行图像压缩

方法一:使用自己定义的函数

10次随机初始化,10次迭代

100次随机初始化,10次迭代

10次随机初始化,100次迭代

import numpy as np

from matplotlib import pyplot as plt

import matplotlib.image as mpimg

#1.读取数据

dt = mpimg.imread(r"E:\机器学习\吴恩达\data_sets\bird_small.png") #(128, 128, 3)

#2.可视化

"""plt.imshow(dt) # 显示图片

plt.axis('off') # 不显示坐标轴

plt.show()"""

#3.进行K-means

#随机初始化簇中心

def kMeansInitCentroids(x, k):

randidx = np.random.permutation(x) #随机排列

centroids = randidx[:k, :] #选前K个

return centroids

#进行簇分配cluster assignment

def findClosestCentroids(x,center):

samples = x.shape[0] #样本数量:300

centroids = np.zeros(samples)

sum = 0

for i in range(samples):

temp = x[i,:] - center

c_temp = np.linalg.norm(x=temp, ord=2,axis=1,keepdims=False)

#axis=1表示对矩阵的每一行求范数

#keepdims=False表示结果不保留二维特性

centroids[i] = np.argmin(c_temp)

#找出最大值的索引

sum += np.sum(x[i,:]-center[int(centroids[i])])

return centroids,sum

#计算簇中心

def computeCentroids(x,classes,k):

result = np.zeros((k,x.shape[1]))

for i in range(k):

index = np.where(classes == i)

temp_x = x[index]

result[i,:] = np.sum(temp_x,axis=0)/temp_x.shape[0]

return result

def k_means(x,k,max_init,max_iters):

cost = INF

for j in range(max_init):

center = kMeansInitCentroids(x, k)

for i in range(max_iters):

classes,temp_sum = findClosestCentroids(x, center)

center = computeCentroids(x,classes,k)

if temp_sum < cost:

cost = temp_sum

result = center

return result

INF = 1e10

x = dt.reshape(-1,3)

K = 16

MAXIMUM_iterations = 10

MAXIMUM_init = 100

centroids = k_means(x,K,MAXIMUM_init,MAXIMUM_iterations)

classes,p = findClosestCentroids(x, centroids)

presssed = np.zeros(x.shape)

for i in range(len(centroids)):

presssed[classes==i] = centroids[i]

presssed = presssed.reshape(dt.shape)

plt.imshow(presssed)

plt.show()

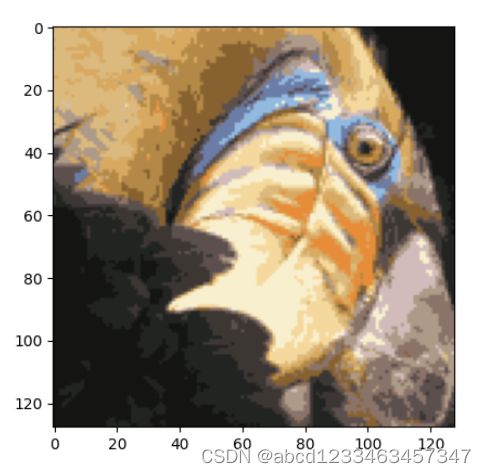

方法二:调包:使用MiniBatchKMeans

import numpy as np

from matplotlib import pyplot as plt

import matplotlib.image as mpimg

from sklearn.cluster import MiniBatchKMeans

#1.读取数据

dt = mpimg.imread(r"E:\机器学习\吴恩达\data_sets\bird_small.png") #(128, 128, 3)

#2.可视化

"""plt.imshow(dt) # 显示图片

plt.axis('off') # 不显示坐标轴

plt.show()"""

#3.使用MiniBatchKMeans聚类

K = 16

temp = dt.reshape(-1,3)

kmeans = MiniBatchKMeans(K)

kmeans.fit(temp) #先fit

pressed = kmeans.cluster_centers_[kmeans.predict(temp)] #再predict,然后再分配标记的聚类中心的RGB编码

pressed = pressed.reshape(dt.shape)

plt.imshow(pressed)

plt.show()