Hive day02 (table)


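Contents

1. Creating tables (create table)

Common data types

Creating a Hive table

2. Importing data

3. DML

1. load: loading data

2. insert

3. Truncating a table

4. select

1. where: filter conditions

2. order by: sorting

3. like: pattern matching

4. Combining tables (union)

5. Handling null

6. Grouping and aggregate functions

7. join

8. Window functions

5. Command line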

1. Creating tables (create table)

- Basic form: create table test (...);

create table test ( id int comment 'user id', name string, age bigint ) comment 'test table' row format delimited fields terminated by ',' stored as textfile;

Common data types

- Numeric

  - Integer: int, bigint

  - Fractional: float, double, decimal

- String

  - string

  - char

- Date and time

  - Date: DATE, format YYYY-MM-DD

  - Timestamp: TIMESTAMP, format YYYY-MM-DD HH:MM:SS

Creating a Hive table

- create table test (...);

2. Importing data

- With local: load data from the local disk into a Hive table

- Without local: load data from HDFS into a Hive table

- load data local inpath '/home/hadoop/tmp/test.txt' into table test;

- 案例

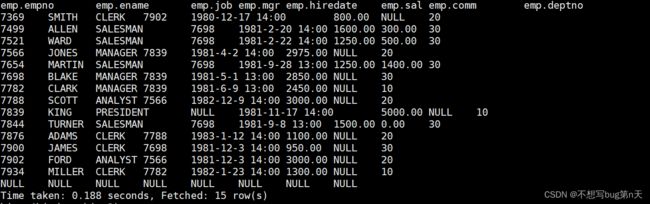

- 将emp表数据导入本地文件

路径:/home/hadoop/tmp

[hadoop@bigdata13 tmp]$ vim emp.txt//emp表数据 7369,SMITH,CLERK,7902,1980-12-17 14:00,800,,20 7499,ALLEN,SALESMAN,7698,1981-2-20 14:00,1600,300,30 7521,WARD,SALESMAN,7698,1981-2-22 14:00,1250,500,30 7566,JONES,MANAGER,7839,1981-4-2 14:00,2975,,20 7654,MARTIN,SALESMAN,7698,1981-9-28 13:00,1250,1400,30 7698,BLAKE,MANAGER,7839,1981-5-1 13:00,2850,,30 7782,CLARK,MANAGER,7839,1981-6-9 13:00,2450,,10 7788,SCOTT,ANALYST,7566,1982-12-9 14:00,3000,,20 7839,KING,PRESIDENT,,1981-11-17 14:00,5000,,10 7844,TURNER,SALESMAN,7698,1981-9-8 13:00,1500,0,30 7876,ADAMS,CLERK,7788,1983-1-12 14:00,1100,,20 7900,JAMES,CLERK,7698,1981-12-3 14:00,950,,30 7902,FORD,ANALYST,7566,1981-12-3 14:00,3000,,20 7934,MILLER,CLERK,7782,1982-1-23 14:00,1300,,10 -

Create the table (in Hive)

CREATE TABLE emp ( empno decimal(4,0), ename string, job string, mgr decimal(4,0), hiredate string, sal decimal(7,2), comm decimal(7,2), deptno decimal(2,0) ) row format delimited fields terminated by ',' stored as textfile;

Load the emp data

hive (bigdata_hive2)> load data local inpath '/home/hadoop/tmp/emp.txt' into table emp;
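As a rough illustration of what `row format delimited fields terminated by ','` means for the emp table, here is a plain-Python sketch (not Hive itself): each line is split on commas and each field is converted to its column type. It assumes, as Hive's default text format is understood to behave, that an empty field in a numeric column (such as comm or mgr) is read as NULL, shown here as None, while string columns keep the raw text. The helper `parse_emp_line` is hypothetical.

```python
from decimal import Decimal

# Column names and converters in the same order as the emp DDL above.
# decimal(p,s) columns are modeled with Python's Decimal; strings stay str.
EMP_COLUMNS = [
    ("empno", Decimal), ("ename", str), ("job", str), ("mgr", Decimal),
    ("hiredate", str), ("sal", Decimal), ("comm", Decimal), ("deptno", Decimal),
]


def parse_emp_line(line: str) -> dict:
    """Split one text line on ',' and convert each field to its column type.

    Assumption: an empty field in a numeric column is read as NULL (None),
    while string columns keep the raw text unchanged.
    """
    fields = line.rstrip("\n").split(",")
    row = {}
    for (name, convert), raw in zip(EMP_COLUMNS, fields):
        if convert is str:
            row[name] = raw
        else:
            row[name] = convert(raw) if raw != "" else None
    return row


print(parse_emp_line("7369,SMITH,CLERK,7902,1980-12-17 14:00,800,,20"))
```

This is why comm shows up as NULL for most rows once the file is loaded, which matters later in the null-handling section.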


3. DML

1. load: loading data

1. Load local data

load data local inpath '/home/hadoop/tmp/emp.txt' INTO TABLE emp;

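2. Load data from HDFS

[hadoop@bigdata13 tmp]$ hadoop fs -ls /data

[hadoop@bigdata13 tmp]$ hadoop fs -put ./emp.txt /data

[hadoop@bigdata13 tmp]$ hadoop fs -ls /data

Found 1 items

-rw-r--r-- 3 hadoop supergroup 799 2022-11-30 21:53 /data/emp.txt

load data inpath '/data/emp.txt' INTO TABLE emp;

Without local, Hive moves the HDFS file into the table's directory, so /data/emp.txt is gone afterwards (with local, the file is copied instead).

Note: a Hive table = data files on HDFS + metadata in the metastore. You could also move files into the table directory directly with hadoop fs -mv xxx /table/, but it is better not to do this for now.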

3. Overwrite the data in a table

load data local inpath '/home/hadoop/tmp/emp.txt' OVERWRITE INTO TABLE emp;

Avoid update and delete in Hive: they are inefficient, and they only work on transactional (ACID) tables.

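2. insert

- 1. Inserting data into Hive tables from queries

insert into|overwrite table tablename select_statement;

into: appends data

overwrite: replaces the existing data

- 2. Inserting values into tables from SQL [not recommended]

INSERT INTO TABLE tablename

VALUES values_row [, values_row ...]

Each INSERT ... VALUES statement launches its own MapReduce job, so loading data row by row this way is far too slow.

- Example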

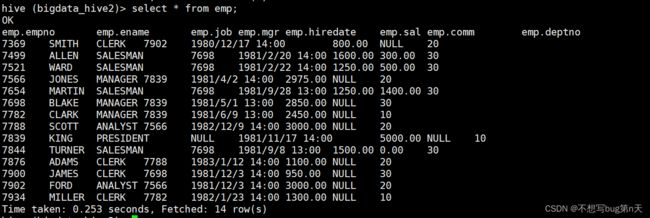

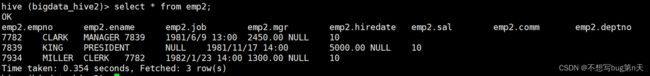

emp2:

-- Append all rows of emp to emp2

insert into table emp2 select * from emp;

-- Overwrite the existing data, keeping only the emp rows with deptno=10

insert overwrite table emp2 select * from emp where deptno=10;
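The difference between the two insert modes can be modeled with a toy "table" that is just a Python list of rows. This is a sketch of the semantics only (append vs. replace), not how Hive stores data; `insert_into` and `insert_overwrite` are hypothetical helpers, and only the (empno, deptno) columns of the sample data are kept.

```python
# A toy model of the two insert modes: a "table" is just a list of rows.

def insert_into(table: list, rows) -> None:
    """insert into: append the query result to the existing rows."""
    table.extend(rows)


def insert_overwrite(table: list, rows) -> None:
    """insert overwrite: drop the existing rows, then write the query result."""
    table[:] = list(rows)


# (empno, deptno) pairs from the emp sample data above.
emp = [(7369, 20), (7499, 30), (7521, 30), (7566, 20), (7654, 30),
       (7698, 30), (7782, 10), (7788, 20), (7839, 10), (7844, 30),
       (7876, 20), (7900, 30), (7902, 20), (7934, 10)]

emp2: list = []
insert_into(emp2, emp)                                  # insert into table emp2 select * from emp;
insert_overwrite(emp2, (r for r in emp if r[1] == 10))  # insert overwrite ... where deptno=10;
print(len(emp2), emp2)
```

After both statements emp2 holds only the three deptno=10 rows, because the overwrite discarded the 14 appended rows.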


3. Truncate a table (remove all rows, keep the table)

TRUNCATE [TABLE] table_name;

Example: truncate table emp2;

4. select

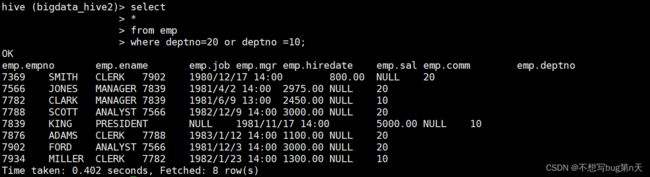

1. where: filter conditions

2. order by: sorting

- 1. asc (ascending) is the default

- 2. desc sorts in descending order

select sal from emp order by sal desc;
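A plain-Python sketch of what where followed by order by ... desc does to the emp rows. The filter `sal > 2000` is a hypothetical example, not from the notes. Python's sort is stable, so tied salaries keep their input order here; Hive's order by makes no such promise for ties.

```python
# (ename, sal) pairs from the emp sample data above.
emp = [("SMITH", 800), ("ALLEN", 1600), ("WARD", 1250), ("JONES", 2975),
       ("MARTIN", 1250), ("BLAKE", 2850), ("CLARK", 2450), ("SCOTT", 3000),
       ("KING", 5000), ("TURNER", 1500), ("ADAMS", 1100), ("JAMES", 950),
       ("FORD", 3000), ("MILLER", 1300)]

# where sal > 2000: keep only the rows that satisfy the filter condition.
filtered = [row for row in emp if row[1] > 2000]

# order by sal desc: sort by sal, largest first (asc would be reverse=False).
by_sal_desc = sorted(filtered, key=lambda row: row[1], reverse=True)

for ename, sal in by_sal_desc:
    print(ename, sal)
```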

3. like: pattern matching

- 1. _ matches exactly one character

- 2. % matches any sequence of characters, including an empty one
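The two wildcards can be made concrete by translating a LIKE pattern into a Python regular expression: `_` becomes `.` and `%` becomes `.*`, and everything else is matched literally. This is a sketch for intuition only; the helper names are hypothetical, and escape sequences such as `\%` are deliberately not handled.

```python
import re


def like_to_regex(pattern: str) -> str:
    """Translate a SQL LIKE pattern into a Python regex (unanchored).

    '_' -> exactly one character, '%' -> any run of characters (possibly
    empty); every other character is matched literally.
    """
    parts = []
    for ch in pattern:
        if ch == "_":
            parts.append(".")
        elif ch == "%":
            parts.append(".*")
        else:
            parts.append(re.escape(ch))
    return "".join(parts)


def like(value: str, pattern: str) -> bool:
    # fullmatch: the whole value must match, like SQL LIKE.
    # re.DOTALL lets '_' and '%' match newline characters as well.
    return re.fullmatch(like_to_regex(pattern), value, re.DOTALL) is not None


names = ["SMITH", "SCOTT", "JAMES", "JONES", "KING"]
print([n for n in names if like(n, "S%")])     # ename like 'S%'
print([n for n in names if like(n, "_O%")])    # ename like '_O%'
print([n for n in names if like(n, "J____")])  # ename like 'J____'
```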

4. Combining tables (union)

- 1. union removes duplicate rows

- 2. union all keeps duplicate rows

- Example

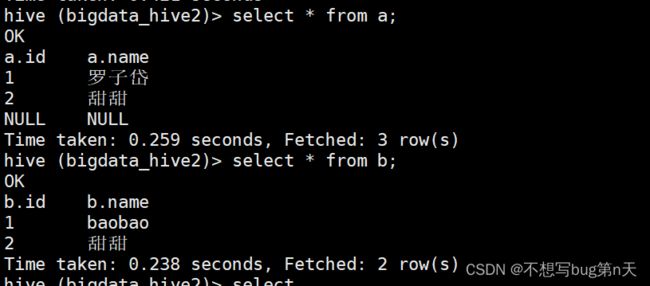

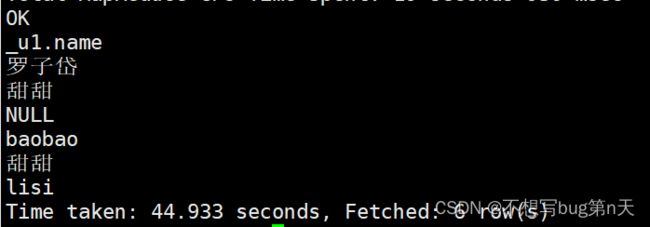

create table a(id int, name string) row format delimited fields terminated by ',';

create table b(id int, name string) row format delimited fields terminated by ',';

load data local inpath "/home/hadoop/tmp/a.txt" into table a;

load data local inpath "/home/hadoop/tmp/b.txt" into table b;

select name from a union all select name from b;

select name from a union all select name from b union all select "lisi" as name;

select name, "1" as pk from a union all select name, "2" as pk from b union all select "lisi" as name, "3" as pk;
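The contents of a.txt and b.txt are not shown in the notes, so this plain-Python sketch uses hypothetical names (apart from "lisi", which the queries above add). It shows the only difference between the two operators: union all concatenates, union also removes duplicate rows. SQL does not guarantee row order; the order below is just for display.

```python
# Hypothetical contents of a.name and b.name; the real a.txt/b.txt are not shown.
a_names = ["zhangsan", "lisi", "wangwu"]
b_names = ["lisi", "zhaoliu"]


def union_all(*columns):
    """union all: concatenate the results, keeping duplicates."""
    out = []
    for col in columns:
        out.extend(col)
    return out


def union(*columns):
    """union: concatenate, then drop duplicate rows (first occurrence kept)."""
    return list(dict.fromkeys(union_all(*columns)))


print(union_all(a_names, b_names, ["lisi"]))  # "lisi" appears three times
print(union(a_names, b_names, ["lisi"]))      # "lisi" appears once
```

Because union has to deduplicate, it does extra work; union all is the cheaper choice whenever duplicates are acceptable.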


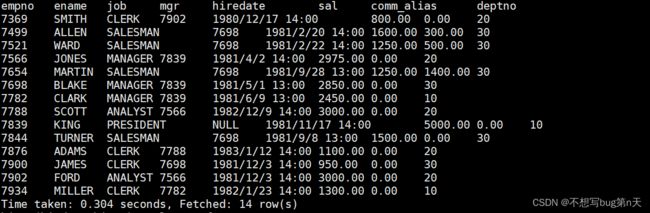

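5. Handling null

- 1. Filtering

where xxx is not null

where xxx is null works the same way; xxx <=> null (the null-safe equality operator) gives the same result as xxx is null.

- 2. ETL conversion

ifnull: not available in Hive

coalesce(a, b, ...): returns the first non-null argument

nvl(value, default_value): returns default_value when value is null, otherwise value

- Supplement:

List the functions Hive supports:

y=f(x)

SHOW FUNCTIONS [LIKE ""];

show functions like nvl; => checks whether Hive has the function

desc function nvl; => shows how to use a specific function

- Example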

select empno, ename, job, mgr, hiredate, sal, nvl(comm,0) as comm_alias, deptno from emp;
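A plain-Python sketch of the null-handling tools above, with SQL NULL modeled as None. The function names mirror the Hive functions, but these are illustrative re-implementations, not Hive code. The comm values are the first five emp rows, assuming the empty fields were loaded as NULL.

```python
from typing import Any


def nvl(value: Any, default_value: Any) -> Any:
    """nvl(value, default_value): default_value if value is NULL, else value."""
    return default_value if value is None else value


def coalesce(*args: Any) -> Any:
    """coalesce(a, b, ...): the first non-NULL argument, or NULL if all are NULL."""
    for arg in args:
        if arg is not None:
            return arg
    return None


def null_safe_eq(a: Any, b: Any) -> bool:
    """a <=> b: true when both are NULL, false when only one is, else a = b.

    Plain a = b with a NULL operand yields NULL, which where treats as false;
    that is why filters use is null / is not null or <=> instead.
    """
    if a is None or b is None:
        return a is None and b is None
    return a == b


# comm of SMITH, ALLEN, WARD, JONES, MARTIN (empty fields loaded as NULL).
comm = [None, 300, 500, None, 1400]
print([nvl(c, 0) for c in comm])           # like nvl(comm, 0)
print(coalesce(None, None, 7))
print([c for c in comm if c is not None])  # like where comm is not null
print(null_safe_eq(None, None), null_safe_eq(None, 0))
```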


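6. Grouping and aggregate functions

- 1. group by

- 1. Used together with aggregate functions

- 2. Groups rows by one or more columns

- 3. Every non-aggregated column in select must also appear in group by

- having: filters groups

Can only be used after group by

- 2. Aggregate functions

sum, max, min, avg, count

- Example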

select sum(sal) as sal_sum, max(sal) as sal_max, min(sal) as sal_min, avg(sal) as sal_avg, count(1) as cnt from emp;

select job, sum(sal) as sal_sum, max(sal) as sal_max, min(sal) as sal_min, avg(sal) as sal_avg, count(1) as cnt from emp group by job having sal_sum > 6000;

-- Subquery: the same result, filtering in an outer where instead of having

select job, sal_sum, sal_max, sal_min, sal_avg, cnt from ( select job, sum(sal) as sal_sum, max(sal) as sal_max, min(sal) as sal_min, avg(sal) as sal_avg, count(1) as cnt from emp group by job ) as a where sal_sum > 6000;
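To see the order of operations (group, then aggregate, then having), here is a plain-Python sketch of the grouped query above on the emp sample data. It illustrates the semantics only; Hive runs this as a distributed job.

```python
from collections import defaultdict

# (job, sal) pairs from the emp sample data above.
emp = [("CLERK", 800), ("SALESMAN", 1600), ("SALESMAN", 1250), ("MANAGER", 2975),
       ("SALESMAN", 1250), ("MANAGER", 2850), ("MANAGER", 2450), ("ANALYST", 3000),
       ("PRESIDENT", 5000), ("SALESMAN", 1500), ("CLERK", 1100), ("CLERK", 950),
       ("ANALYST", 3000), ("CLERK", 1300)]

# group by job: collect each group's sal values.
groups = defaultdict(list)
for job, sal in emp:
    groups[job].append(sal)

# One output row per group, with the same aggregates as the query above.
stats = {
    job: {"sal_sum": sum(s), "sal_max": max(s), "sal_min": min(s),
          "sal_avg": sum(s) / len(s), "cnt": len(s)}
    for job, s in groups.items()
}

# having sal_sum > 6000: filter the groups after aggregation.
result = {job: row for job, row in stats.items() if row["sal_sum"] > 6000}
print(result)
```

Only MANAGER survives: ANALYST sums to exactly 6000, which does not pass a strict `> 6000`.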


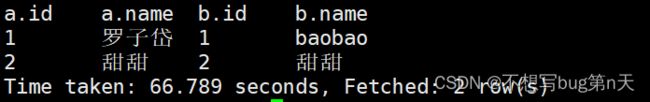

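7. join

- 1. inner join (the keyword inner is optional): keeps only rows whose keys match on both sides

select a.*, b.* from ( select * from a ) as a join ( select * from b ) as b on a.id = b.id;

- 2. left join: keeps every row of the left table; unmatched right columns are null

select a.*, b.* from ( select * from a ) as a left join ( select * from b ) as b on a.id = b.id;

- 3. right join: keeps every row of the right table; unmatched left columns are null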

select a.*, b.* from ( select * from a ) as a right join ( select * from b ) as b on a.id = b.id;

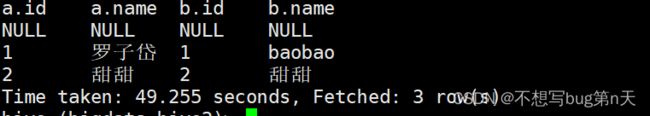

- 4. full join: keeps every row of both tables, filling the unmatched side with null

select a.*, b.* from ( select * from a ) as a full join ( select * from b ) as b on a.id = b.id;
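The four join types differ only in which unmatched rows they keep. The sketch below is a simple nested-loop join in plain Python (not how Hive executes joins), on hypothetical (id, name) rows for tables a and b since their data is not shown in the notes.

```python
# Hypothetical rows for tables a and b as (id, name); the real a.txt/b.txt are not shown.
a = [(1, "zs"), (2, "ls"), (3, "ww")]
b = [(1, "zs"), (2, "ls"), (4, "zl")]


def join(left, right, how="inner"):
    """Nested-loop join on the id column (position 0).

    how: "inner", "left", "right" or "full". A side with no match is NULL (None).
    NULL keys never match, just as NULL = NULL is not true in SQL.
    """
    out = []
    matched = set()  # indexes of right rows that found at least one partner
    for l_row in left:
        found = False
        for j, r_row in enumerate(right):
            if l_row[0] is not None and l_row[0] == r_row[0]:
                out.append((l_row, r_row))
                matched.add(j)
                found = True
        if not found and how in ("left", "full"):
            out.append((l_row, None))
    if how in ("right", "full"):
        out.extend((None, r_row) for j, r_row in enumerate(right) if j not in matched)
    return out


for how in ("inner", "left", "right", "full"):
    print(how, join(a, b, how))
```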


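- Exercises on the emp table

- 1. Number of hires per department per year

- Approach

table: emp

Dimensions: department, year

Metric: number of hires

where: none (no filter)

select department, year, number of hires from emp group by department, year;

The year has to be derived from hiredate with an ETL step.

1980-12-17 00:00:00 => 1980: use a date function such as date_format

select deptno, date_format(hiredate,'yyyy') as year, count(1) as cnt from emp group by deptno, date_format(hiredate,'yyyy');

Use lowercase yyyy (calendar year). In Java-style date patterns, uppercase YYYY is the week-based year and can be off for dates in the last days of December.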

- 2. Number of hires per year and month across the whole company

- Approach:

hiredate: 1980-12-17 00:00:00 => 1980-12

select date_format(hiredate,'yyyy-MM') as ymonth, count(1) as cnt from emp group by date_format(hiredate,'yyyy-MM');
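A plain-Python sketch of the two counting queries above, useful for checking the expected results against the emp sample data. Python's `%Y` and `%m` play the role of Hive's `yyyy` and `MM` here; the parsing format assumes the hiredate strings look exactly like those in emp.txt.

```python
from collections import Counter
from datetime import datetime

# (deptno, hiredate) pairs from the emp sample data above.
emp = [(20, "1980-12-17 14:00"), (30, "1981-2-20 14:00"), (30, "1981-2-22 14:00"),
       (20, "1981-4-2 14:00"), (30, "1981-9-28 13:00"), (30, "1981-5-1 13:00"),
       (10, "1981-6-9 13:00"), (20, "1982-12-9 14:00"), (10, "1981-11-17 14:00"),
       (30, "1981-9-8 13:00"), (20, "1983-1-12 14:00"), (30, "1981-12-3 14:00"),
       (20, "1981-12-3 14:00"), (10, "1982-1-23 14:00")]


def parse(hiredate: str) -> datetime:
    # strptime accepts the non-zero-padded months and days used in emp.txt.
    return datetime.strptime(hiredate, "%Y-%m-%d %H:%M")


# Like: group by deptno, date_format(hiredate,'yyyy')
by_dept_year = Counter((dept, parse(h).strftime("%Y")) for dept, h in emp)

# Like: group by date_format(hiredate,'yyyy-MM')
by_month = Counter(parse(h).strftime("%Y-%m") for _, h in emp)

for key in sorted(by_dept_year):
    print(key, by_dept_year[key])
for key in sorted(by_month):
    print(key, by_month[key])
```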

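- 3. Among employees in the sales and manager roles (job SALESMAN or MANAGER) with salaries between 1500 and 2500: number of hires per year and month

- 4. Among employees who receive a commission (performance pay): number of hires per year and month

- 5. Among employees in the sales and manager roles with salaries between 1500 and 2500: number of hires per year and month, together with the employee details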

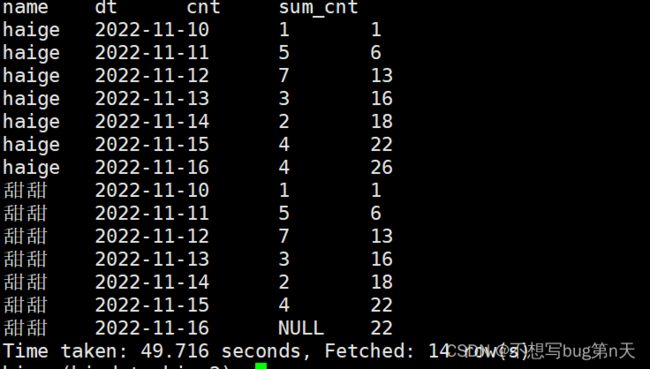

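8. Window functions

- Aggregate functions: combine multiple rows into a single row according to some rule

So the number of rows after aggregation is at most the number of rows before aggregation.

Window functions (also called analytic functions): window + function

Window: the range of rows the function computes over when it runs

Function: the function that is evaluated over that window

Syntax:

function over([partition by xxx,...] [order by xxx,....])

over: opens a window over the table (the query's rows)

partition by: the table columns used to split rows into groups

order by: the table columns used to sort rows within each group

Function: a window function (such as rank) or an aggregate function (such as sum)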

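Example: how can we show both the rows before aggregation and the aggregated result?

eg:

id name sal

1 zs 30000

2 ls 25000

3 ww 20000

Requirement: sort by salary in descending order and also show each row's rank

id name sal rank

1 zs 30000 1

2 ls 25000 2

3 ww 20000 3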
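Assuming the rank column is produced with Hive's rank() window function (the notes do not name the function), here is a plain-Python sketch of what `rank() over(order by sal desc)` computes: every row is kept, and ties share a rank while the next rank skips ahead. row_number and dense_rank handle ties differently. The helper `rank_desc` is hypothetical.

```python
def rank_desc(rows, key):
    """rank() over(order by key desc): ties share a rank, and the next rank
    skips ahead by the number of tied rows (1, 1, 3, ...)."""
    ordered = sorted(rows, key=key, reverse=True)
    ranked = []
    for i, row in enumerate(ordered):
        if i > 0 and key(row) == key(ordered[i - 1]):
            r = ranked[-1][1]  # same value as the previous row: same rank
        else:
            r = i + 1          # otherwise the rank is the 1-based position
        ranked.append((row, r))
    return ranked


emp = [(1, "zs", 30000), (2, "ls", 25000), (3, "ww", 20000)]
for (id_, name, sal), r in rank_desc(emp, key=lambda row: row[2]):
    print(id_, name, sal, r)
```

Unlike group by, the output still has one line per input row, which is exactly the "show both" requirement.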

Data:

// mt.txt

haige,2022-11-10,1

haige,2022-11-11,5

haige,2022-11-12,7

haige,2022-11-13,3

haige,2022-11-14,2

haige,2022-11-15,4

haige,2022-11-16,4

甜甜,2022-11-10,1

甜甜,2022-11-11,5

甜甜,2022-11-12,7

甜甜,2022-11-13,3

甜甜,2022-11-14,2

甜甜,2022-11-15,4

甜甜,2022-11-16,4

Create the table

create table user_mt ( name string, dt string, cnt int ) row format delimited fields terminated by ',';

- Load the data

load data local inpath '/home/hadoop/tmp/mt.txt' overwrite into table user_mt;

- Query

select name , dt , cnt , sum(cnt) over(partition by name order by dt ) as sum_cnt from user_mt;
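A plain-Python sketch of `sum(cnt) over(partition by name order by dt)` on the mt.txt data: rows are split by name, sorted by dt, and each row gets the running total up to and including itself. With an order by and no explicit frame, the default frame also includes rows tied on dt; mt.txt has no ties, so this sketch does not model that case. `running_sum` is a hypothetical helper.

```python
from collections import defaultdict

# mt.txt rows: (name, dt, cnt); both names have the same seven daily values.
daily = [1, 5, 7, 3, 2, 4, 4]
rows = [("haige", f"2022-11-{d}", c) for d, c in zip(range(10, 17), daily)]
rows += [("甜甜", f"2022-11-{d}", c) for d, c in zip(range(10, 17), daily)]


def running_sum(rows):
    """sum(cnt) over(partition by name order by dt), assuming no duplicate dt."""
    partitions = defaultdict(list)
    for name, dt, cnt in rows:
        partitions[name].append((dt, cnt))
    out = []
    for name, part in partitions.items():
        total = 0
        for dt, cnt in sorted(part):  # order by dt within the partition
            total += cnt
            out.append((name, dt, cnt, total))
    return out


for row in running_sum(rows):
    print(*row)
```

Each partition restarts its total, so haige and 甜甜 both end at 26.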


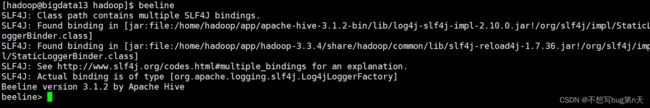

5. Command line

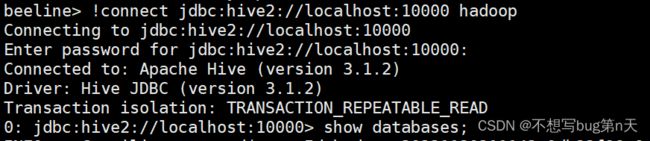

beeline => connects to Hive (HiveServer2) => which accesses HDFS

Configure HDFS proxy-user settings. HiveServer2 runs queries on behalf of the connecting user, so the hadoop user must be allowed to act as a proxy. In /home/hadoop/app/hadoop/etc/hadoop/core-site.xml set:

hadoop.proxyuser.hadoop.hosts = *

hadoop.proxyuser.hadoop.groups = *

Distribute the file to all nodes: [hadoop@bigdata13 hadoop]$ xsync core-site.xml

Restart HDFS:

[hadoop@bigdata13 hadoop]$ hadoop-cluster stop

[hadoop@bigdata13 hadoop]$ hadoop-cluster start

Start HiveServer2

Path: /home/hadoop/app/hive/bin/

[hadoop@bigdata13 bin]$ ./hiveserver2

Connect with beeline:

beeline> !connect jdbc:hive2://localhost:10000 hadoop