深度学习-【图像分类】学习笔记 6ResNet

文章目录

- 6.1 ResNet网络结构,BN以及迁移学习详解

-

- residual结构

- Batch Normalizetion详解

- 迁移学习简介

- 6.1.2 ResNeXt网络结构

- 6.2 使用pytorch搭建ResNet并基于迁移学习训练

- 6.2.2 使用pytorch搭建ResNeXt

-

- model.py

- train.py

- predict.py

6.1 ResNet网络结构,BN以及迁移学习详解

论文链接:https://arxiv.org/abs/1512.03385

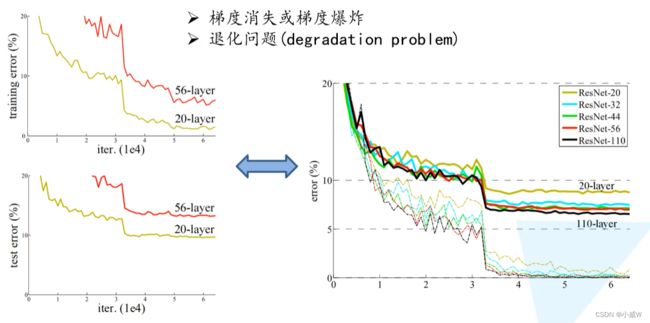

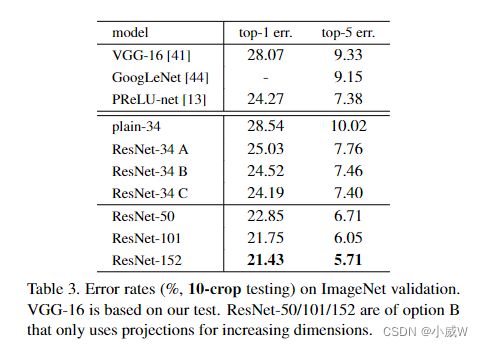

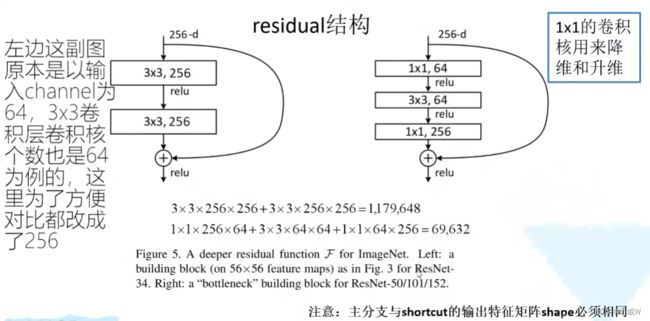

residual结构

右图,使用1×1卷积核先降维,再升维。

从图中算式可以看出减少了参数。

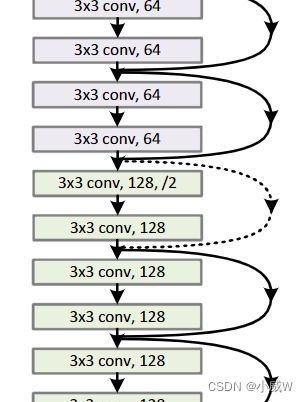

论文中分别有实线和虚线的残差结构。

下图讲解:

实线:输入和输出的特征矩阵的shape是一样的。

虚线:输入和输出的特征矩阵的shape是不一样的。

可以看出修改了主分支的stride,以及shortcut部分加了一个1×1卷积。

※ 对于层数较少的ResNet(18,34),通过最大池化层后的输出shape是[56, 56, 256],而层数较多的ResNet(50, 101, 152)通过最大池化层后的输出shape是[56, 56, 64],因此它们的conv2_x对应的第一个虚线残差层仅调整特征矩阵的深度,高和宽不变;后续的conv3\4\5_x还会改变高和宽。

(18,34层的ResNet的conv2_x没有虚线残差层)。

Batch Normalizetion详解

参考博文:https://blog.csdn.net/qq_37541097/article/details/104434557(主要看这篇文章)

Batch Normalization的目的就是使feature map满足均值为0,方差为1的分布规律。

“对于一个拥有d维的输入x,我们将对它的每一个维度进行标准化处理。”

迁移学习简介

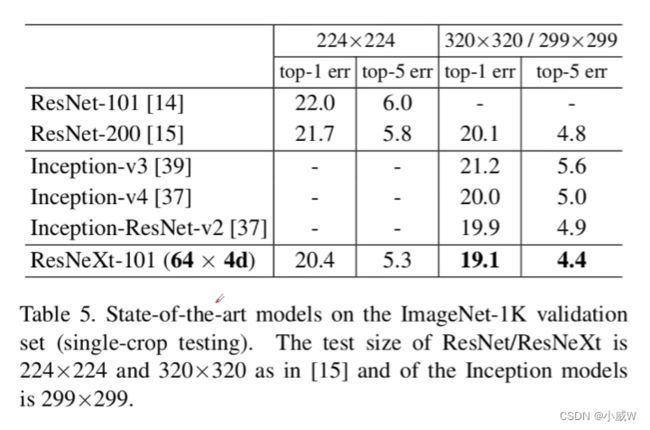

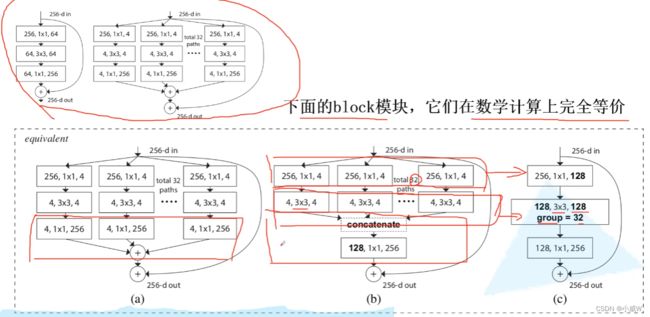

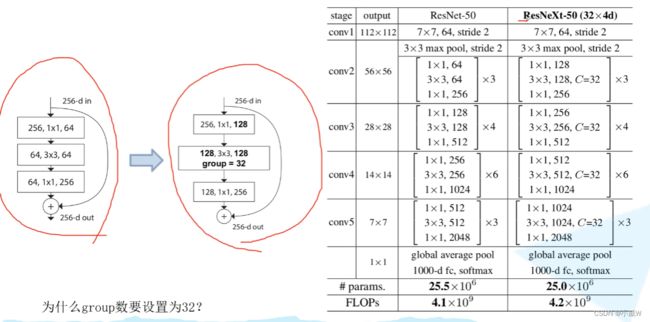

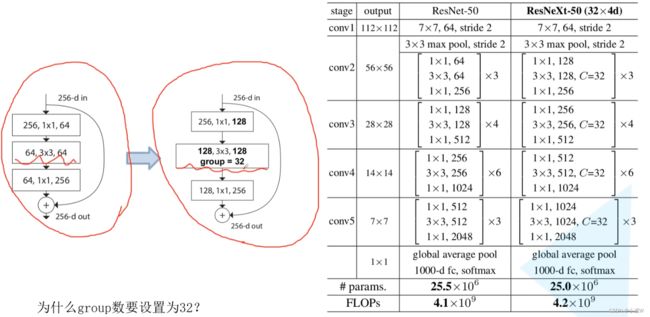

6.1.2 ResNeXt网络结构

论文链接:https://readpaper.com/paper/2953328958

对于深层结构的ResNet,使用右边的结构代替左边的对应结构。

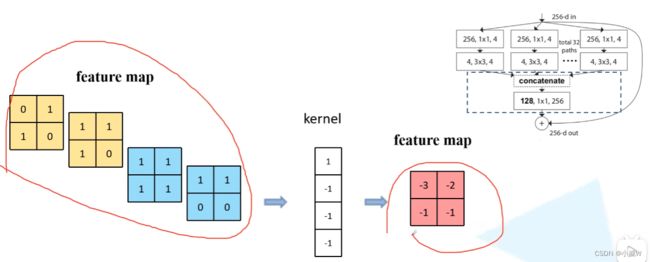

Group Convolution 组卷积

卷积核的channel要和输入的channel相同。

组卷积的参数个数是普通卷积的 1/g。

当g = Cin,n = Cin,此时就是DW Conv(Depthwise conv)。相当于对输入特征矩阵的每一个channel分配了一个channel为1的卷积核进行卷积。

相关链接:https://zhuanlan.zhihu.com/p/226448051

作者尝试了不同 C 和 d 的搭配的效果(错误率):

结果是 32 比较好。

6.2 使用pytorch搭建ResNet并基于迁移学习训练

50、101、152-layer的残差块里的第三层的卷积核个数是第一层、第二层的四倍。(e.g. 256 = 64 * 4)。

18、34层:

对于padding和stride = 1,output = (input - 3 + 2 * 1) / 1 + 1 = input。

当stride = 2,output = (input - 3 + 2 * 1) / 2 + 1 = input / 2 + 0.5 = input / 2(pytorch会 向下取整)

50、101、152层:

注意:原论文中,在虚线残差结构的主分支上,第一个1x1卷积层的步距是2,第二个3x3卷积层步距是1。但在pytorch官方实现过程中是第一个1x1卷积层的步距是1,第二个3x3卷积层步距是2,这么做的好处是能够在top1上提升大概0.5%的准确率。可参考Resnet v1.5 https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch

6.2.2 使用pytorch搭建ResNeXt

model.py

import torch.nn as nn

import torch

class BasicBlock(nn.Module):

"""

18和34层的残差结构

"""

expansion = 1

def __init__(self, in_channel, out_channel, stride=1, downsample=None, **kwargs):

super(BasicBlock, self).__init__()

self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,

kernel_size=3, stride=stride, padding=1, bias=False) # 因为使用BN,所以不使用bias

self.bn1 = nn.BatchNorm2d(out_channel)

self.relu = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,

kernel_size=3, stride=1, padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(out_channel)

self.downsample = downsample # 决定是否是虚线的残差结构

def forward(self, x):

identity = x

if self.downsample is not None: # 如果是虚线,进行下采样

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out += identity

out = self.relu(out)

return out

class Bottleneck(nn.Module):

"""

注意:原论文中,在虚线残差结构的主分支上,第一个1x1卷积层的步距是2,第二个3x3卷积层步距是1。

但在pytorch官方实现过程中是第一个1x1卷积层的步距是1,第二个3x3卷积层步距是2,

这么做的好处是能够在top1上提升大概0.5%的准确率。

可参考Resnet v1.5 https://ngc.nvidia.com/catalog/model-scripts/nvidia:resnet_50_v1_5_for_pytorch

"""

expansion = 4 # 因为50、101、152-layer的残差块里的第三层的卷积核个数是第一层、第二层的4倍。

def __init__(self, in_channel, out_channel, stride=1, downsample=None,

groups=1, width_per_group=64): # groups和width_per_group用于ResNeXt

super(Bottleneck, self).__init__()

width = int(out_channel * (width_per_group / 64.)) * groups # 当groups=1, width_per_group=64,width=out_channels,就是ResNet

self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=width,

kernel_size=1, stride=1, bias=False) # squeeze channels

self.bn1 = nn.BatchNorm2d(width)

# -----------------------------------------

self.conv2 = nn.Conv2d(in_channels=width, out_channels=width, groups=groups,

kernel_size=3, stride=stride, bias=False, padding=1)

self.bn2 = nn.BatchNorm2d(width)

# -----------------------------------------

self.conv3 = nn.Conv2d(in_channels=width, out_channels=out_channel*self.expansion,

kernel_size=1, stride=1, bias=False) # unsqueeze channels

self.bn3 = nn.BatchNorm2d(out_channel*self.expansion)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

def forward(self, x):

identity = x

if self.downsample is not None:

identity = self.downsample(x)

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

out += identity

out = self.relu(out)

return out

class ResNet(nn.Module):

def __init__(self,

block, # 选择block

blocks_num, # 使用残差结构的数目

num_classes=1000,

include_top=True, #

groups=1,

width_per_group=64):

super(ResNet, self).__init__()

self.include_top = include_top

self.in_channel = 64

self.groups = groups

self.width_per_group = width_per_group

self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,

padding=3, bias=False) # conv1

self.bn1 = nn.BatchNorm2d(self.in_channel)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(block, 64, blocks_num[0]) # conv2_x

self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2) # conv3_x

self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2) # conv4_x

self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2) # conv5_x

if self.include_top:

self.avgpool = nn.AdaptiveAvgPool2d((1, 1)) # output size = (1, 1)

self.fc = nn.Linear(512 * block.expansion, num_classes)

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu') # 为卷积层初始化

def _make_layer(self, block, channel, block_num, stride=1):

downsample = None

if stride != 1 or self.in_channel != channel * block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(channel * block.expansion))

layers = []

layers.append(block(self.in_channel,

channel,

downsample=downsample,

stride=stride,

groups=self.groups,

width_per_group=self.width_per_group)) # 第一个虚线残差块

self.in_channel = channel * block.expansion

for _ in range(1, block_num):

layers.append(block(self.in_channel,

channel,

groups=self.groups,

width_per_group=self.width_per_group)) # 后续的残差块

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

if self.include_top:

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

def resnet34(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnet34-333f7ec4.pth

return ResNet(BasicBlock, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet50(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnet50-19c8e357.pth

return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)

def resnet101(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnet101-5d3b4d8f.pth

return ResNet(Bottleneck, [3, 4, 23, 3], num_classes=num_classes, include_top=include_top)

def resnext50_32x4d(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnext50_32x4d-7cdf4587.pth

groups = 32

width_per_group = 4

return ResNet(Bottleneck, [3, 4, 6, 3],

num_classes=num_classes,

include_top=include_top,

groups=groups,

width_per_group=width_per_group)

def resnext101_32x8d(num_classes=1000, include_top=True):

# https://download.pytorch.org/models/resnext101_32x8d-8ba56ff5.pth

groups = 32

width_per_group = 8

return ResNet(Bottleneck, [3, 4, 23, 3],

num_classes=num_classes,

include_top=include_top,

groups=groups,

width_per_group=width_per_group)

train.py

import os

import sys

import json

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import transforms, datasets

from tqdm import tqdm

from model import resnet34

import torchvision.models.resnet

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print("using {} device.".format(device))

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

data_root = os.path.abspath(os.path.join(os.getcwd(), "../..")) # get data root path

image_path = os.path.join(data_root, "data_set", "flower_data") # flower data set path

assert os.path.exists(image_path), "{} path does not exist.".format(image_path)

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),

transform=data_transform["train"])

train_num = len(train_dataset)

# {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

flower_list = train_dataset.class_to_idx

cla_dict = dict((val, key) for key, val in flower_list.items())

# write dict into json file

json_str = json.dumps(cla_dict, indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

batch_size = 16

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size, shuffle=True,

num_workers=nw)

validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),

transform=data_transform["val"])

val_num = len(validate_dataset)

validate_loader = torch.utils.data.DataLoader(validate_dataset,

batch_size=batch_size, shuffle=False,

num_workers=nw)

print("using {} images for training, {} images for validation.".format(train_num,

val_num))

net = resnet34()

# load pretrain weights

# download url: https://download.pytorch.org/models/resnet34-333f7ec4.pth

model_weight_path = "./resnet34-pre.pth"

assert os.path.exists(model_weight_path), "file {} does not exist.".format(model_weight_path)

net.load_state_dict(torch.load(model_weight_path, map_location='cpu')) # 先载入权重

# for param in net.parameters():

# param.requires_grad = False # 冻结除最后一个全连接层之外的权重(因为最后一个全连接层是后面替换的)

# change fc layer structure

in_channel = net.fc.in_features

net.fc = nn.Linear(in_channel, 5) # 修改了最后一个fc层,因为花分类集是5类而不是1000类。(进行在载入weight之后)

net.to(device)

# define loss function

loss_function = nn.CrossEntropyLoss()

# construct an optimizer

params = [p for p in net.parameters() if p.requires_grad]

optimizer = optim.Adam(params, lr=0.0001)

epochs = 3

best_acc = 0.0

save_path = './resNet34.pth'

train_steps = len(train_loader)

for epoch in range(epochs):

# train

net.train()

running_loss = 0.0

train_bar = tqdm(train_loader, file=sys.stdout)

for step, data in enumerate(train_bar):

images, labels = data

optimizer.zero_grad()

logits = net(images.to(device))

loss = loss_function(logits, labels.to(device))

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.item()

train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,

epochs,

loss)

# validate

net.eval()

acc = 0.0 # accumulate accurate number / epoch

with torch.no_grad():

val_bar = tqdm(validate_loader, file=sys.stdout)

for val_data in val_bar:

val_images, val_labels = val_data

outputs = net(val_images.to(device))

# loss = loss_function(outputs, test_labels)

predict_y = torch.max(outputs, dim=1)[1]

acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

val_bar.desc = "valid epoch[{}/{}]".format(epoch + 1,

epochs)

val_accurate = acc / val_num

print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %

(epoch + 1, running_loss / train_steps, val_accurate))

if val_accurate > best_acc:

best_acc = val_accurate

torch.save(net.state_dict(), save_path)

print('Finished Training')

if __name__ == '__main__':

main()

predict.py

import os

import json

import torch

from PIL import Image

from torchvision import transforms

import matplotlib.pyplot as plt

from model import resnet34

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

data_transform = transforms.Compose(

[transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]) # 和训练方法一样的标准化处理

# load image

img_path = "../tulip.jpg"

assert os.path.exists(img_path), "file: '{}' dose not exist.".format(img_path)

img = Image.open(img_path)

plt.imshow(img)

# [N, C, H, W]

img = data_transform(img)

# expand batch dimension

img = torch.unsqueeze(img, dim=0)

# read class_indict

json_path = './class_indices.json'

assert os.path.exists(json_path), "file: '{}' dose not exist.".format(json_path)

with open(json_path, "r") as f:

class_indict = json.load(f)

# create model

model = resnet34(num_classes=5).to(device)

# load model weights

weights_path = "./resNet34.pth"

assert os.path.exists(weights_path), "file: '{}' dose not exist.".format(weights_path)

model.load_state_dict(torch.load(weights_path, map_location=device))

# prediction

model.eval()

with torch.no_grad():

# predict class

output = torch.squeeze(model(img.to(device))).cpu()

predict = torch.softmax(output, dim=0)

predict_cla = torch.argmax(predict).numpy()

print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],

predict[predict_cla].numpy())

plt.title(print_res)

for i in range(len(predict)):

print("class: {:10} prob: {:.3}".format(class_indict[str(i)],

predict[i].numpy()))

plt.show()

if __name__ == '__main__':

main()