关于WiderPerson数据说明(使用后笔记)

0.数据下载

0.1 官方下载

数据主页(包含google云盘、百度云盘下载方式)

Google Drive or Baidu Drive (jx5p)

0.2 我自己的csdn链接

WiderPerson.zip官网下载

数据解压之后:

1.不同目录下说明

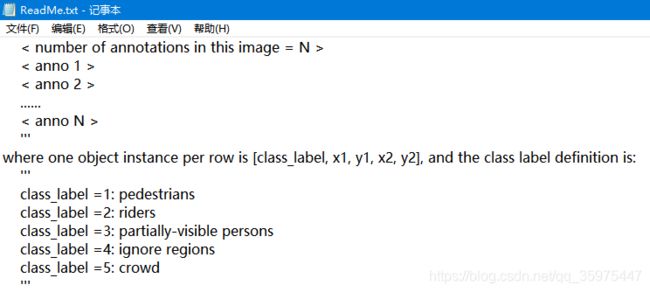

1.0 ReadMe.txt

关于该数据集的解释,我主要关注的类别对应的标签:

1.1 Annotations

以.jpg.txt结尾~,好吧。其中000040.jpg.txt去掉了,因为坏掉了,特意下载了官方数据做了进一步确认,所以包括jpg也一起删掉了。还有一件比较重要的事,test的标注是没有的,所以Annotations中是不包含test.txt中文件名对应的.jpg.txt标注的。

下面是txt文件,第一行是目标数,第二行开始,第一列是类别,2-5列是坐标。

(

objection_numlabel xmin ymin xmax ymax)

1.2 Evaluation

主要是一些matlab的脚本,由于本人电脑比较烂,就不装这些了,对于Widerperson转VOC数据的,WiderPerson行人检测数据集,这篇写得是极好的了。

1.3 Images

这个文件夹下,就是包含所有的训练、验证、测试的图像了。

图有各种各样的从网上download下来的那种,水印还在呢,所以够一般化。

尺寸有几百到几千不等,所以有些也可以自己去掉,对于只像用其中几个类别的,可以在写csv文件的时候,去掉。

下面写了个小脚本,提取我想要类别的数据,然后写到csv文件中(name,filename,height,width,xmax,xmin,ymax,ymin)。

__author__ = 'gmt'

#将文件中的核心数据提取出来

import json

import random

import copy

import os

import cv2

if __name__ == '__main__':

infos = ['train_info', 'valid_info', 'test_info']

# infos=read_top(r"./csvdata/TownCentre-groundtruth.top")

# anno_path = './WiderPerson/Annotations/'

imgs_path = './WiderPerson/Images/'

train_info = './WiderPerson/train.txt'

valid_info = './WiderPerson/val.txt'

test_info = './WiderPerson/test.txt'

train_fp=open('./csvdata/pedestrain_train.csv','w')

valid_fp=open('./csvdata/pedestrain_valid.csv','w')

test_fp=open('./csvdata/pedestrain_test.csv','w')

# train_fp.writelines("class,filename,height,width,xmax,xmin,ymax,ymin\n")

# valid_fp.writelines("class,filename,height,width,xmax,xmin,ymax,ymin\n")

# test_fp.writelines("class,filename,height,width,xmax,xmin,ymax,ymin\n")

name = "pedestrain"

count = 0

for info_i in infos:

if info_i == 'train_info':

info = train_info

fp=train_fp

elif info_i == 'valid_info':

info = valid_info

fp=valid_fp

else:

info = test_info

fp=test_fp

fp.writelines("class,filename,height,width,xmax,xmin,ymax,ymin\n")

with open(info, 'r') as f:

img_ids = [x for x in f.read().splitlines()]

for img_id in img_ids: # '000040'

count += 1

print("count: " + str(count))

filename = img_id + '.jpg'

img_path = imgs_path + filename

img = cv2.imread(img_path)

height = img.shape[0]

width = img.shape[1]

label_path = img_path.replace('Images', 'Annotations') + '.txt'

if not os.path.exists(label_path):

continue

with open(label_path) as file:

line = file.readline()

count_p = int(line.split('\n')[0]) # 里面行人个数

print("count: " + str(count) + " , count_p: " + str(count_p))

line = file.readline()

while line:

cls = int(line.split(' ')[0])

# < class_label =1: pedestrians > 行人

# < class_label =2: riders > 骑车的

# < class_label =3: partially-visible persons > 遮挡的部分行人

# < class_label =4: ignore regions > 一些假人,比如图画上的人

# < class_label =5: crowd > 拥挤人群,直接大框覆盖了

# if cls == 1 or cls == 2 or cls == 3:

if cls == 2:

xmin = float(line.split(' ')[1])

ymin = float(line.split(' ')[2])

xmax = float(line.split(' ')[3])

ymax = float(line.split(' ')[4].split('\n')[0])

fp.writelines("%s,%s,%d,%d,%s,%s,%s,%s\n"%(name,filename,height,width,xmax,xmin,ymax,ymin))

line = file.readline()

cv2.imshow('result', img)

cv2.waitKey(10)

train_fp.close()

valid_fp.close()

test_fp.close()

检测框在图像上可视化:

import os

import cv2

if __name__ == '__main__':

path = './WiderPerson/train.txt'

with open(path, 'r') as f:

img_ids = [x for x in f.read().splitlines()]

for img_id in img_ids: # '000040'

img_path = './WiderPerson/Images/' + img_id + '.jpg'

img = cv2.imread(img_path)

im_h = img.shape[0]

im_w = img.shape[1]

label_path = img_path.replace('Images', 'Annotations') + '.txt'

if not os.path.exists(label_path):

continue

with open(label_path) as file:

line = file.readline()

count = int(line.split('\n')[0]) # 里面行人个数

line = file.readline()

while line:

cls = int(line.split(' ')[0])

# < class_label =1: pedestrians > 行人

# < class_label =2: riders > 骑车的

# < class_label =3: partially-visible persons > 遮挡的部分行人

# < class_label =4: ignore regions > 一些假人,比如图画上的人

# < class_label =5: crowd > 拥挤人群,直接大框覆盖了

if cls == 1 or cls == 2 or cls == 3:

xmin = float(line.split(' ')[1])

ymin = float(line.split(' ')[2])

xmax = float(line.split(' ')[3])

ymax = float(line.split(' ')[4].split('\n')[0])

img = cv2.rectangle(img, (int(xmin), int(ymin)), (int(xmax), int(ymax)), (0, 255, 0), 2)

line = file.readline()

cv2.imshow('result', img)

cv2.waitKey(500)

1.4 关于几个txt文件

以train.txt为例吧,因为每个文件里都是文件名,所以不赘述。

2.个人评价

优点:数据量大,各种场景下密集人群都包含。

缺点:都是单帧图像,对于想使用其中的测试集,做跟踪,基本不用考虑了。还是自己乖乖地去录段小视频吧~~~

参考

1.WiderPerson行人检测数据集