《Getting Started with NLP》chap11:Named-entity recognition

《Getting Started with NLP》chap11:Named-entity recognition

最近需要做一些NER相关的任务,来学习一下这本书的第十一章

文章目录

- 《Getting Started with NLP》chap11:Named-entity recognition

- 11.1 Named entity recognition: Definitions and challenges

-

- 11.1.1 Named entity types

-

- Exercise 11.1

- 11.1.2 Challenges in named entity recognition

-

- 引入思考:Exercise 11.2

- Let’s look into these challenges together

-

- full entity span identification

- ambiguity

- 11.2 Named-entity recognition as a sequence labeling task

-

- 11.2.1 The basics: BIO scheme

-

- 引例

- further extensions:IO scheme 、 BIOES scheme

- Exercise 11.3

- Exercise 11.4

- 11.2.2 What does it mean for a task to be sequential?

- 11.2.3 Sequential solution for NER

- 11.3 Practical applications of NER

-

- 11.3.1 Data loading and exploration

-

- extract the data from the input file using pandas

- extract the information on the news sources only

- extract the content of articles from a specific source

- 11.3.2 Named entity types exploration with spaCy

-

- Code to populate a dictionary with NEs extracted from news articles

-

- codespace炸了

- colab也不行(呃,其实是因为一开始尝试的时候csv还没上传完整)

- 换成kaggle跑通了呜呜

- Code to print out the named entities dictionary

- Code to aggregate the counts on all named entity types

- 11.3.3 Information extraction revisited

-

- Code to extract the indexes of the words covered by the NE

- Code to extract information about the main participants of the action

- Code to extract information on the specific entity

- 11.3.4 Named entities visualization 很炫酷

-

- Code to visualize named entities of various types in their contexts of use

-

- 拿个句子先试试

- 试试中文的!

- 处理这个数据集

- Code to visualize named entities of a specific type only

- Summary

- 参考资料

-

This chapter covers

-

Introducing named-entity recognition (NER)

-

Overviewing sequence labeling approaches in NLP

-

Integrating NER into downstream tasks

什么是downstream task:

Downstream tasks refer to tasks that depend on the output of a previous task. For example, if task A is to extract data from a website, and task B is to analyze the data that was extracted, then task B is a downstream task of task A. Downstream tasks are often used in natural language processing (NLP) to refer to tasks that use the output of a natural language model as input. For example, once a natural language model has generated a set of language inputs, downstream tasks might include tasks like text classification, question answering, or machine translation. -

Introducing further data preprocessing tools and techniques

-

-

In this chapter, you will be working with the task of named-entity recognition (NER), concerned with detection and type identification of named entities (NEs). Named entities are real-world objects (people, locations, organizations) that can be referred to with a proper name. The most widely used entity types include person, location (abbreviated as LOC), organization (abbreviated as ORG), and geopolitical entity (abbreviated as GPE).

In practice, the set of name extended with further expressions such as dates, time references, numerical expressions (e.g., referring to money and currency(货币) indicators), and so on. Moreover, the types listed so far are quite general, but NER can also be adapted to other domains and application areas. For example, in biomedical(生物医学) applications, “entities” can denote different types of proteins and genes, in the financial domain they can cover specific types of products, and so on.

-

Named entities play an important role in natural language understanding (you have already seen examples from question answering and information extraction) and can be combined with the tasks that you addressed earlier in this book. Such tasks, which rely on the output of NLP tools (e.g., NER models) are technically called downstream tasks, since they aim to solve a problem different from, say, NER itself, but at the same time they benefit from knowing about named entities in text. For instance, identifying entities related to people, locations, organizations, and products in reviews can help better understand users’ or customers’ sentiments toward particular aspects of the product or service.

-

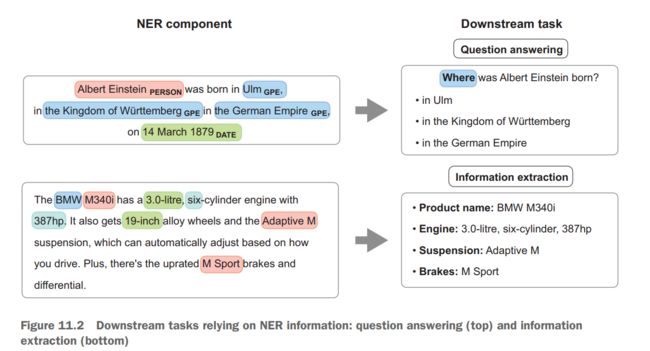

examples: the use of NER for two downstream tasks.

-

In the context of question answering, NER helps to identify the chunks of text that can answer a specific type of a question.

- For example, named entities denoting locations (LOC), or geopolitical entities (GPE) are appropriate as answers for a Where?question.

-

In the context of information extraction, NER can help identify useful characteristics of a product that may be informative on their own or as features in a sentiment analysis or another related task.

-

Another example of a downstream task in which NER plays a central role is stock market movement prediction. It is widely known that certain types of events influence the trends in stock price movements (for more examples and justification, see Ding et al. [2014], Using Structured Events to Predict Stock Price Movement: An Empirical Investigation, which you can access at https://aclanthology.org/D14-1148.pdf). For instance, the news about Steve Jobs’s death negatively impacted Apple’s stock price immediately after the event, while the news about Microsoft buying a new mobile phone business positively impacted its stock price. Suppose your goal is to build an application that can extract relevant facts from the news (e.g., “Apple’s CEO died”; “Microsoft buys mobile phone business”; “Oracle sues Google”) and then use these facts to predict stock prices for these companies. Figure 11.3 visualizes this idea

-

-

11.1 Named entity recognition: Definitions and challenges

11.1.1 Named entity types

- We start by defining the major named entity types and their usefulness for downstream tasks. Figure 11.4 shows entities of five different types (GPE for geopolitical entity, ORG for organization, CARDINAL for cardinal numbers, DATE, and PERSON) highlighted in a typical sentence that you could see on the news

![]()

cardinal (also cardinal number)

a number that represents amount, such as 1, 2, 3, rather than order, such as 1st, 2nd, 3rd

基数

In natural language processing, “cardinal” is a type of entity that refers to a numerical value. In named entity recognition (NER), cardinal entities are numbers that represent a specific quantity, such as “five” or “twenty-three.” Cardinal entities are often distinguished from other types of numerical entities, such as ordinal entities (which indicate a position in a sequence, such as “first” or “third”) and percentage entities (which represent a ratio or proportion, such as “50%”). NER systems may use machine learning algorithms to identify and classify cardinal entities in text data.

-

The notation(符号) used in this sentence is standard for the task of named entity recognition: some labels like DATE and PERSON are self-explanatory(无需解释的); others are abbreviations(缩写)or short forms of the full labels (e.g., ORG for organization). The set of labels comes from the widely adopted annotation(注释) scheme(方案)in OntoNotes (see full documentation at http://mng.bz/Qv5R). What is important from a practitioner’s(从业者) point of view is that this is the scheme that is used in NLP tools, including spaCy.

-

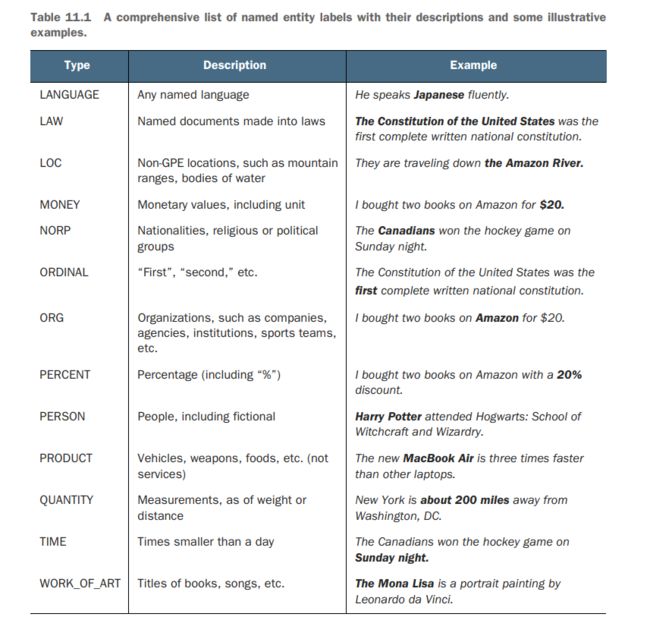

Table 11.1 lists all named entity types typically used in practice and identified in text by spaCy’s NER component and provides a description and some illustrative examples for each of them.

-

A couple of observations are due at this point. (需要注意的是)

- First, note that named entities of any type can consist of a single word (e.g., “two” or “tomorrow”) and longer expressions (e.g., “MacBook Air” or “about 200 miles”).

- Second, the same word or expression may represent an entity of a different type, depending on the context. For example, “Amazon” may refer to a river (LOC) or to a company (ORG).

Exercise 11.1

The NE labeling presented in table 11.1 is used in spaCy. Familiarize yourself with the types and annotation by running spaCy’s NER on a selected set of examples. You can use the sentences from table 11.1 or experiment with your own set of sentences. Do you disagree with any of the results?

-

code

import spacy nlp = spacy.load("en_core_web_sm") doc = nlp("I bought two books on Amazon") for ent in doc.ents: print(ent.text, ent.label_)输出结果为

two CARDINAL Amazon ORG- 前置除了需要install spacy ,还需要

python -m spacy download en_core_web_sm

- 前置除了需要install spacy ,还需要

NOTE Check out the different language models available for use with spaCy: https://spacy.io/models/en.

- Small model (en_core_web_sm) is suitable for most purposes and is more efficient to upload and use.

- However, larger models like en_core_web_md (medium) and en_core_web_lg (large) are more powerful, and some NLP tasks will require the use of such larger models. The models should be installed prior to running the code examples with spaCy. You can also install the models from within the Jupyter Notebook using the command

!python -m spacy download en_core_web_md.spaCy的官方文档感觉很不错

11.1.2 Challenges in named entity recognition

NER is a task concerned with identification of a word or phrase that constitutes an entity and with detection of the type of the identified entity. As examples from the previous section suggest, each of these steps has its own challenges.

引入思考:Exercise 11.2

- What challenges can you identify in NER, based on the examples from table 11.1?(前面那张表格)

Let’s look into these challenges together

full entity span identification

-

The first task that an NER algorithm solves is full entity span identification(span,跨度). As you can see in the examples from figure 11.2 and table 11.1, some entities consist of a single word, while others may span whole expressions, and it is not always trivial(容易解决的) to identify where an expression starts and where it finishes. For instance, does the full entity consist of Amazon or Amazon River? It would seem reasonable to select the longest sequence of words that are likely to constitute a single named entity. However, compare the following two sentences:

- Check out our [Amazon River]LOC maps selection.

- On [Amazon]ORG [River maps]PRODUCT from ABC Publishers are sold for

$5(If this sentence baffles(迷惑) you, try adding a comma(逗号), as in “On Amazon, River maps from ABC Publishers are sold for $5.”)

The first sentence contains a named entity of the type location (Amazon River). Even though the second sentence contains the same sequence of words, each of these two words actually belongs to a different named entity—Amazon is an organization, while River is part of a product name River maps.

ambiguity

-

The following examples illustrate one of the core reasons why natural language processing is challenging—ambiguity. You have seen some examples of sentences with ambiguous analysis before (e.g., when we discussed parsing and part-of-speech tagging in chapter 4).

-

For NER, ambiguity poses a number of challenges: one is related to span identification, as just demonstrated. Another one is related to the fact that the same words and phrases may or may not be named entities.

-

For some examples, consider the following pairs, where the first sentence in each pair contains a word used as a common, general noun, and the second sentence contains the same word being used as (part of) a named entity:

- “An apple a day keeps a doctor away” versus “Apple announces a new iPad Pro.”

- “Turkey is the main dish served at Thanksgiving” versus “Turkey is a country with amazing landscapes.”

- “The tiger is the largest living cat species” versus “Tiger Woods is an American professional golfer.”

Can you spot any characteristics distinguishing the two types of word usage (as a common noun versus as a named entity) that may help the algorithm distinguish between the two? Think about this question, and we will discuss the answer to it in the next section. (后面回头来看这个问题)

-

Ambiguity in NER poses a challenge, and not only when the algorithm needs to define whether a word or a phrase is a named entity or not. Even if a word or a phrase is identified to be a named entity, the same entity may belong to different NE types.

- For example, Amazon may refer to a location or a company, April may be a name of a person or a month, JFK may refer to a person or a facility, and so on.

So,an algorithm has to identify the span of a potential named entity and make sure the identified expression or word is indeed a named entity, since the same phrase or word may or may not be an NE, depending on the context of use. But even when it is established that an expression or a word is a named entity, this named entity may still belong to different types. How does the algorithm deal with these various levels of complexity?

-

First of all, a typical NER algorithm combines the span identification and the named entity type identification steps into a single, joint task.

-

Second, it approaches this task as a sequence labeling problem: specifically, it goes through the running text word by word and tries to decide whether a word is part of a specific type of a named entity. Figure 11.5 provides the mental model for this process.

![]()

这里的mental model我感觉翻译为概念模型比较好? (或者思维导图也可以算是mental model)

A mental model is a simplified representation of a complex system or concept that we use to understand and reason about that system or concept. Mental models help us process and make sense of new information by allowing us to relate it to concepts and information that we already know. They also help us anticipate(预料) how a system or concept is likely to behave in the future based on our understanding of it.↳Mental models are often used in problem-solving and decision-making, as they allow us to evaluate different options and predict the likely outcomes of different courses of action. They are also an important component of learning and knowledge acquisition, as they help us organize and integrate new information into our existing understanding of the world.

- In fact, many tasks in NLP are framed as sequence labeling tasks, since language has a clear sequential nature. We have not looked into sequential tasks and sequence labeling in this book before, so let’s discuss this topic now.

11.2 Named-entity recognition as a sequence labeling task

Not surprisingly, named-entity recognition is addressed using machine-learning algorithms, which are capable of learning useful characteristics of the context. NER is typically addressed with supervised machine-learning algorithms, which means that such algorithms are trained on annotated data. To that end, let’s start with the questions of how the data should be labeled for sequential tasks, such as NER, in a way that the algorithm can benefit from the most.

11.2.1 The basics: BIO scheme

引例

看这个例子

“Inc.” is an abbreviation for “Incorporated.” It is a legal designation that is used by businesses to indicate that they are a corporation.

![]()

We said before that the way the NER algorithm identifies named entities and their types is by considering every word in sequence and deciding whether this word belongs to a named entity of a particular type.

For instance, in Apple Inc., the word Apple is at the beginning of a named entity of type ORG and Inc. is at its end. Explicitly annotating the beginning and the end of a named-entity expression and training the algorithm on such annotation helps it capture the information that if a word Apple is classified as beginning a named entity ORG, it is very likely that it will be followed by a word that finishes this named-entity expression.

The labeling scheme that is widely used for NER and similar sequence labeling tasks is called BIO scheme, since it comprises three types of tags: beginning, inside, and outside. We said that the goal of an NER algorithm is to jointly assign to every word its position in a named entity and its type, so in fact this scheme is expanded to accommodate for the type tags too.

For instance, there are tags B-PER and I-PER for the words beginning and inside of a named entity of the PER type; similarly, there are B-ORG, I-ORG, B-LOC, I-LOC tags, and so on.

O-tag is reserved for all words that are outside of any named entity, and for that reason, it does not have a type extension. Figure 11.6 shows the application of this scheme to the short example “tech giant Apple Inc.”

In total, there are 2n+1 tags for n named entity types plus a single O-tag: for the 18 NE types from the OntoNotes presented in table 11.1, this amounts to 37 tags in total.

further extensions:IO scheme 、 BIOES scheme

BIO scheme has two further extensions that you might encounter in practice: a less fine-grained IO scheme, which distinguishes between the inside and outside tags only, and a more fine-grained BIOES scheme, which also adds an end-of-entity tag for each type and a single-word entity for each type that consists of a single word. Table 11.2 illustrates the application of these annotation schemes to the beginning of our example.

Exercise 11.3

- Table 11.2 doesn’t contain the annotation for the rest of the sentence. Provide the annotation for “the company’s CEO Tim Cook said” using the IO, BIO, and BIOES schemes.

The IO, BIO, and BIOES schemes are all annotation schemes that are used to label the words in a text as part of natural language processing tasks, such as named entity recognition (NER). These schemes are used to create training data for machine learning models that are used to identify named entities in text.

The IO (Inside, Outside) scheme is a simple labeling scheme that consists of only two tags: I (Inside) and O (Outside). It is used to indicate whether a word is part of a named entity or not.

The BIO (Beginning, Inside, Outside) scheme is similar to the IO scheme, but it includes an additional tag for the beginning of a named entity. This scheme is often used to identify the boundaries of named entities in text.

The BIOES (Beginning, Inside, Outside, End, Single) scheme is an extension of the BIO scheme that includes additional tags for the end of a named entity and for named entities that consist of a single word. This scheme is used to more accurately identify the boundaries and types of named entities in text.

Exercise 11.4

- The complexity of a supervised machine-learning task depends on the number of classes to distinguish between. The BIO scheme consists of 37 tags for 18 entity types. How many tags are there in the IO and BIOES schemes?

- The IO scheme has one I-tag for each entity type plus a single O-tag for words outside any entity type. This results in n+1, or 19 tags for 18 entity types.

- The BIOES scheme has 4 tags for each entity type (B, I, E, S) plus one O-tag for words outside any entity type. This results in 4n+1, or 73 tags.

- The more detailed schemes provide for finer(更精细的) granularity(粒度) but also come at an expense of having more classes for the algorithm to distinguish between.

- While the BIO scheme allows the algorithm to train on 37 classes, the BIOES scheme has almost twice as many classes, which means the algorithm has to deal with higher complexity and may make more mistakes.

11.2.2 What does it mean for a task to be sequential?

-

Many real-word tasks show sequential nature.

-

作者举了俩例子

-

水的温度变化

-

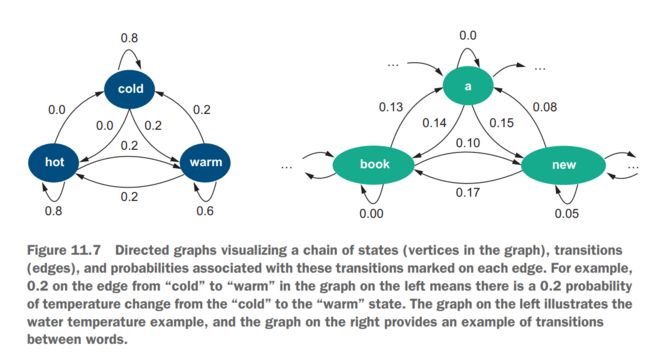

As an illustrative example, let’s consider how the temperature of water changes with respect to various possible actions applied to it. Suppose water can stay in one of three states—cold, warm, or hot, as figure 11.7 (left) illustrates. You can apply different actions to it. For example, heat it up or let it cool down. Let’s call a change from one state to another a state transition. Suppose you start heating cold water up and measure water temperature at regular intervals, say, every minute. Most likely you would observe the following sequence of states: cold → . . . → cold → warm → . . . → warm → hot. In other words, to get to the “hot” state, you would first stay in the “cold” state for some time; then you would need to transition through the “warm” state, and finally you would reach the “hot” state. At the same time, it is physically impossible to transition from the “cold” to the “hot” state immediately, bypassing the “warm” state. The reverse is true as well: if you let water cool down, the most likely sequence will be hot → . . . → hot → warm → . . . → warm → cold, but not hot → cold

In fact, these types of observations can be formalized and expressed as probabilities. For example, to estimate how probable it is to transition from the “cold” state to the “warm” state, you use your timed measurements and calculate the proportion of times that the temperature transitioned cold → warm among all the observations made for the “cold” state:

Such probabilities estimated from the data and observations simply reflect how often certain events occur compared to other events and all possible outcomes. Figure 11.7(left) shows the probabilities on the directed edges. The edges between hot → cold and cold → hot are marked with 0.0, reflecting that it is impossible for the temperature to change between “hot” and “cold” directly bypassing(绕过) the “warm” state. At the same time, you can see that the edges from the state back to itself are assigned with quite high probabilities: P(hot → hot) = 0.8 means that 80% of the time if water temperature is hot at this particular point in time it will still be hot at the next time step (e.g., in a minute). Similarly, 60% of the time water will be warm at the next time step if it is currently warm, and in 80% of the cases water will still be cold in a minute from now if it is currently cold.

Also note that this scheme describes the set of possibilities fully: suppose water is currently hot. What temperature will it be in a minute? Follow the arrows in figure 11.7 (left) and you will see that with a probability of 0.8 (or in 80% of the cases), it will still be hot and with a probability of 0.2 (i.e., in the other 20%), it will be warm.

What if it is currently warm? Then, with a probability of 0.6, it will still be warm in a minute, but there is a 20% chance that it will change to hot and a 20% chance that it will change to cold.

-

-

语句

-

Where do language tasks fit into this? As a matter of fact, language is a highly structured, sequential system. For instance, you can say “Albert Einstein was born in Ulm” or “In Ulm, Albert Einstein was born,” but “Was Ulm Einstein born Albert in” is definitely weird(怪异的) if not nonsensical(荒谬的,无稽之谈的) and can be understood only because we know what each word means and, thus, can still try to make sense of such word salad(词语混杂). At the same time, if you shuffle the words in other expressions like “Ann gave Bob a book,” you might end up not understanding what exactly is being said. In “A Bob book Ann gave,” who did what to whom? This shows that language has a specific structure to it and if this structure is violated, it is hard to make sense of the result. Figure 11.7 (right) shows a transition system for language, which follows a very similar strategy to the water temperature example from figure 11.7 (left).

It shows that if you see a word “a,” the next word may be “book” (“a book”) with a probability of 0.14, “new” (“a new house”) with a 15% chance, or some other word. If you see a word “new,” with a probability of 0.05, it may be followed by another “new” (“a new, new house”), with an 8% chance it may be followed by “a” (“no matter how new a car is, . . .”), in 17% of the cases it will be followed by “book” (“a new book”), and so on. Finally, if the word that you currently see is “book,” it will be followed by “a” (“book a flight”) 13% of the time, by “new” (“book new flights”) 10% of the time, or by some other word (note that in the language example, not all possible transitions are visualized in figure 11.7). Such predictions on the likely sequences of words are behind many NLP applications. For instance, word prediction is used in predictive keyboards, query completion, and so on. Note that in the examples presented in figure 11.7, the sequential models take into account a single previous state to predict the current state.

Technically, such models are called first-order Markov models or Markov chains .

马尔科夫模型或马尔科夫链

In a Markov chain, the probabilistic transitions between states are described by a transition matrix, which specifies the probability of transitioning from one state to another. The state of the system at any given time is called a Markov state, and the set of all possible states is called the state space. The behavior of the system over time is described by a sequence of random variables, called a Markov process.

It is also possible to take into account longer history of events.

For example, second-order Markov models look into two previous states to predict the current state and so on.

NLP models that do not observe word order and shuffle words freely (as in “A Bob book Ann gave”) are called bag-of-words models. The analogy(类比) is that when you put words in a “bag,” their relative order is lost, and they get mixed among themselves like individual items in a bag. A number of NLP tasks use bag-of-words models. The tasks that you worked on before made little if any use of the sequential nature of language. Sometimes the presence of individual words is informative enough for the algorithm to identify a class (e.g., lottery strongly suggests spam, amazing is a strong signal of a positive sentiment, and rugby has a strong association with the sports topic). Yet, as we have noted earlier in this chapter, for NER it might not be enough to just observe a word (is “Apple” a fruit or a company?) or even a combination of words (as in “Amazon River Maps”). More information needs to be extracted from the context and the way the previous words are labeled with NER tags. In the next section, you will look closely into how NER uses sequential information and how sequential information is encoded as features for the algorithm to make its decisions.

-

11.2.3 Sequential solution for NER

Just like water temperature cannot change from “cold” immediately to “hot” or vice versa without going through the state of being “warm,” and just like there are certain sequential rules to how words are put together in a sentence (with “a new book” being much more likely in English than “a book new”), there are certain sequential rules to be observed in NER.

For instance, if a certain word is labeled as beginning a particular type of an entity (e.g., B-GPE for “New” in “New York”), it cannot be directly followed by an NE tag denoting inside of an entity of another type (e.g., I-EVENT cannot be assigned to “York” in “New York” when “New” is already labeled as B-GPE, as I-GPE is the correct tag).

In contrast, I-EVENT is applicable to “Year” in “New Year” after “New” being tagged as B-EVENT. To make such decisions, an NER algorithm takes into account the context, the labels assigned to the previous words, and the current word and its properties.

Let’s consider two examples with somewhat similar contexts:

![]()

Your goal in the NER task is to assign the most likely sequence of tags to each sentence. Ideally, you would like to end up with the following labeling for the sentences: O – O – B-EVENT – I-EVENT for “They celebrated New Year” and O – O – O – B-GPE – I-GPE for “They live in New York.”

Figure 11.8 visualizes such “ideal” labeling for “They celebrated New Year” (using an abbreviation EVT for EVENT for space reasons

As figure 11.8 shows, it is possible to start a sentence with a word labeled as a beginning of some named entity, such as B-EVENT or B-EVT (as in “Christmas B-EVT is celebrated on December 25”).

However, it is not possible to start a sentence with I-EVT (the tag for inside the EVENT entity), which is why it is grayed out in figure 11.8 and there is no arrow connecting the beginning of the sentence (the START state) to I-EVT. Since the second word, “celebrated,” is a verb, it is unlikely that it belongs to any named entity type; therefore, the most likely tag for it is O.

“New” can be at the beginning of event (B-EVT as in “New Year”) or another entity type (e.g., B-GPE as in “New York”), or it can be a word used outside any entity (O).

Finally, the only two possible transitions after tag B-EVT are O (if an event is named with a single word, like “Christmas”) or I-EVT. All possible transitions are marked with arrows in figure 11.8; all impossible states are grayed out with the impossible transitions dropped (i.e., no connecting arrows); and the states and transitions highlighted in bold are the preferred ones.

As you can see, there are multiple sources of information that are taken into account here: word position in the sentence matters (tags of the types O and BENTITY—outside an entity and beginning an entity, respectively—can apply to the first word in a sentence, but I-ENTITY cannot); word characteristics matter (a verb like “celebrate” is unlikely to be part of any entity); the previous word and tag matter (if the previous tag is B-EVENT, the current tag is either I-EVENT or O); the word shape matters (capital N in “New” makes it a better candidate for being part of an entity, while the most likely tag for “new” is O); and so on.

This is, essentially(本质上), how the algorithm tries to assign the correct tag to each word in the sequence.

For instance, suppose you have assigned tags O – O – B-EVENT to the sequence “They celebrated New” and your current goal is to assign an NE tag to the word “Year”. The algorithm may consider a whole set of characteristic rules—let’s call them features by analogy(类比) with the features used by supervised machine-learning algorithms in other tasks. The features in NER can use any information related to the current NE tag and previous NE tags, current word and the preceding context, and the position of the word in the sentence.

Let’s define some feature templates for the features helping the algorithm predict that word 4 \text{word}_4 word4 in “They celebrated New Year” (i.e., word 4 \text{word}_4 word4=“Year”) should be assigned with the tag I-EVENT after the previous word “New” is assigned with B-EVENT. It is common to use the notation y i y_i yi for the current tag, y i − 1 y_{i-1} yi−1 for the previous one, X X X for the input, and i i i for the position, so let’s use this notation in the feature templates

![]()

A gazetteer(地名录) (e.g., www.geonames.org) is a list of place names with millions of entries for locations, including detailed geographical and political

information. It is a very useful resource for identification of LOC, GPE, and

some other types of named entities.Word shape is determined as follows: capital letters are replaced with X, lowercase letters are replaced with x, numbers are replaced with d, and punctuation marks are preserved; for example, “U.S.A.” can be represented as “X.X.X.” and “11–12p.m.” as “d–dx.x.” This helps capture useful generalizable information.

Feature indexes used in this list are made up, and as you can see, the list of features grows quickly with the examples from the data. When applied to our example, the features will yield the following values:

![]()

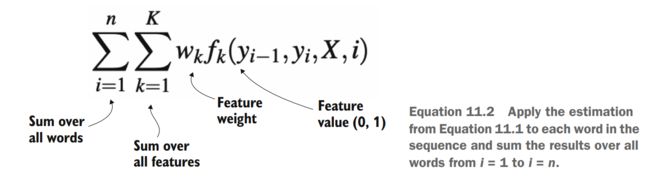

It should be noted that no single feature is capable of correctly identifying an NE tag in all cases; moreover, some features may be more informative than others. What the algorithm does in practice is it weighs the contribution from each feature according to its informativeness and then it combines the values from all features, ranging from feature k = 1 k = 1 k=1 to feature k = K k = K k=K (where k k k is just an index), by summing the individual contributions as follows

![]()

The appropriate weights in this equation are learned from labeled data as is normally done for supervised machine-learning algorithms. As was pointed out earlier, the ultimate goal of the algorithm is to assign the correct tags to all words in the sequence, so the expression is actually applied to each word in sequence, from i = 1 i = 1 i=1 (i.e., the first word in the sentence) to i = n i = n i=n (the last word); that is

Specifically, this means that the algorithm is not only concerned with the correct assignment of the tag I-EVENT to “Year” in “They celebrated New Year”, but also with the correct assignment of the whole sequence of tags O – O – B-EVENT – I-EVENT to “They celebrated New Year”.

However, originally, the algorithm knows nothing about the correct tag for “They” and the correct tag for “celebrated” following “They”, and so on. Since originally the algorithm doesn’t know about the correct tags for the previous words, it actually considers all possible tags for the first word, then all possible tags for the second word, and so on. In other words, for the first word, it considers whether “They” can be tagged as B-EVENT, I-EVENT, B-GPE, I-GPE, . . . , O, as figure 11.8 demonstrated earlier; then for each tag applied to “They”, the algorithm moves on and considers whether “celebrated” can be tagged as B-EVENT, I-EVENT, B-GPE, I-GPE, . . . , O; and so on.

In the end, the result you are interested in is the sequence of all NE tags for all words that is most probable; that is

The formula in Equation 11.3 is exactly the same as the one in Equation 11.2, with just one modification: argmax means that you are looking for the sequence that results in the highest probability estimated by the rest of the formula; Y Y Y stands for the whole sequence of tags for all words in the input sentence; and the fancy font(花体字) Y Y Y denotes the full set of possible combinations of tags.

Recall the three BIO-style schemes introduced earlier in this chapter: the most coarse-grained(粗粒度) IO scheme has 19 tags, which means that the total number of possible tag combinations for the sentence “They celebrated New Year”, consisting of 4 words, is 1 9 4 = 130 , 321 19^4 =130,321 194=130,321; the middle-range BIO scheme contains 37 distinct tags and results in 3 7 4 = 1 , 874 , 161 37^4 =1,874,161 374=1,874,161 possible combinations; and finally, the most fine-grained BIOES scheme results in 7 3 4 = 28 , 398 , 241 73^4 =28,398,241 734=28,398,241 possible tag combinations for a sentence consisting of 4 words.

Note that a sentence consisting of 4 words is a relatively short sentence, yet the brute-force(蛮力法) algorithm (i.e., the one that simply iterates through each possible combination at each step) rapidly becomes highly inefficient. After all, some tag combinations (like O → I-EVENT) are impossible, so there is no point in wasting effort on even considering them. In practice, instead of a brute-force algorithm, more efficient algorithms based on dynamic programming(动态规划) are used (the algorithm that is widely used for language-related sequence labeling tasks is the Viterbi algorithm;

Instead of exhaustively(彻底地) considering all possible combinations, at each step a dynamic programming algorithm calculates the probability of all possible solutions given only the best, most optimal (最优的)solution for the previous step. The algorithm then calculates the best move at the current point and stores it as the current best solution. When it moves to the next step, it again considers only this best solution rather than all possible solutions, thus considerably(大幅度地) reducing the number of overall possibilities to only the most promising ones. Figure 11.9 demonstrates the intuition behind dynamic estimation of the best NE tag that should be selected for “Year” given that the optimal solution O – O – B-EVENT is found for “They celebrated New”.

This, in a nutshell, is how a sequence labeling algorithm solves the task of tag assignment. As was highlighted before, NER is not the only task that demonstrates sequential effects, and a number of other tasks in NLP are solved this way.

The approach to sequence labeling outlined in this section is used by machine-learning algorithms, most notably, conditional random fields, although you don’t need to implement your own NER to be able to benefit from the results of this step in the NLP pipeline. For instance, spaCy has an NER implementation that you are going to rely on to solve the task set out in the scenario for this chapter. The next section delves(探索;深入寻找,搜寻) into implementation (实现)details.

Conditional random fields (CRFs) are a type of probabilistic graphical model used for modeling and predicting structured data, such as sequences or sets of interconnected items. Like other graphical models, CRFs use a graph structure to represent the relationships between different variables and their dependencies. However, unlike many other graphical models, CRFs are specifically designed to handle structured data and make use of the relationships between variables to improve prediction accuracy.

条件随机场 (CRF) 是一种概率图模型,用于建模和预测结构化数据,例如序列或相互关联的项目集。 与其他图形模型一样,CRF 使用图形结构来表示不同变量之间的关系及其依赖关系。 然而,与许多其他图形模型不同,CRF 专门设计用于处理结构化数据并利用变量之间的关系来提高预测准确性。

CRFs are often used in natural language processing tasks, such as part-of-speech tagging and named entity recognition, where the input data consists of a sequence of words or other tokens and the output is a sequence of tags or labels. CRFs can also be applied to other types of structured data, such as biological sequences, and have been used in a variety of other applications, including image segmentation and handwritten character recognition.

CRFs are related to other probabilistic models, such as hidden Markov models and Markov random fields, and can be trained using a variety of algorithms, including gradient descent and the Expectation-Maximization (EM) algorithm.

11.3 Practical applications of NER

作者还是带我们回到了开头说的那个股票的例子

Let’s remind ourselves of the scenario for this chapter. It is widely known that certain events influence the trends of stock price movements. Specifically, you can extract relevant facts from the news and then use these facts to predict company stock prices.

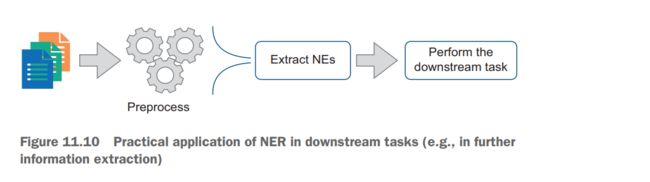

Suppose you have access to a large collection of news; now your task is to extract the relevant events and facts that can be linked to the stock market in the downstream (stock market price prediction) application. How will you do that? This means that you have access to a collection of news texts, and among other preprocessing steps, you apply NER. Then you can focus only on the texts and sentences that are relevant for your task.

For instance, if you are interested in the recent events, in which a particular company (e.g., “Apple”) participated, you can easily identify such texts, sentences, and contexts. Figure 11.10 shows a flow diagram for this process.

11.3.1 Data loading and exploration

-

数据集是用的Kaggle的这个数据集:All the news | Kaggle

-

The dataset consists of 143,000 articles scraped from 15 news websites, including the New York Times, CNN, Business Insider, Washington Post, and so on.

-

The dataset is quite big and is split into three comma-separated values (CSV) files. In the examples in this chapter, you are going to be working with the file called articles1.csv, but you are free to use other files in your own experiments.

csv

Comma-separated values (CSV) is a simple file format used to store tabular(表格式的) data, such as a spreadsheet or database. A CSV file stores data in plain text, with each line representing a row of the table and each field (column) within that row separated by a comma.

Many datasets available via Kaggle and similar platforms are stored in the .csv format. This basically means that the data is stored as a big spreadsheet(电子表格) file, where information is split between different rows and columns. For instance, in articles1.csv,

each row represents a single news article, described with a set of columns containing

information on its title, author, the source website, the date of publication, its full content, and so on. The separator(分隔符) used to define the boundary between the information

belonging to different data fields in .csv files is a comma. It’s time now to familiarize

yourselves with pandas, a useful data-preprocessing toolkit that helps you work with

files in such formats as .csv and easily extract information from them.

-

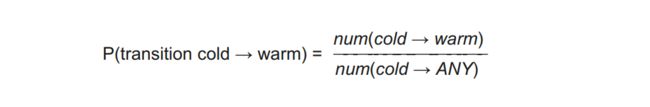

extract the data from the input file using pandas

import pandas as pd

path = "all-the-news/"

df = pd.read_csv(path + "articles1.csv")

读取得到的df是DataFrame, a labeled data structure with columns of potentially different types

df.shape

The function

df.shapeprints out the dimensionality of the data structure

(50000,10)

df.head()

![]()

extract the information on the news sources only

sources = df["publication"].unique()

print(sources)

['New York Times' 'Breitbart' 'CNN' 'Business Insider' 'Atlantic']

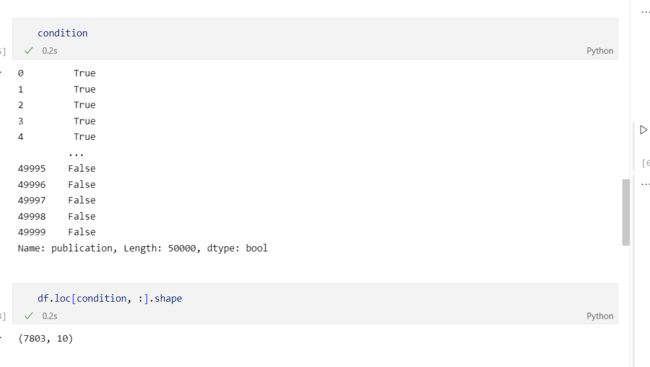

extract the content of articles from a specific source

Since the DataFrame contains as many as 50,000 articles, for the sake of this applica-

tion, let’s focus on some articles only. We will extract the text (content) of the first

1,000 articles published in the New York Times.

condition = df["publication"].isin(["New York Times"])

content_df = df.loc[condition, :]["content"][:1000]

content_df.shape

content_df.head()

In this code,

df.loc[condition, :]is used to select rows from the DataFramedfbased on the condition provided. The:after the comma indicates that all columns should be selected for the rows that match the condition.

type(content_df):pandas.core.series.Series

(1000,)

0 WASHINGTON — Congressional Republicans have...

1 After the bullet shells get counted, the blood...

2 When Walt Disney’s “Bambi” opened in 1942, cri...

3 Death may be the great equalizer, but it isn’t...

4 SEOUL, South Korea — North Korea’s leader, ...

Name: content, dtype: object

-

看看里面的中间变量是什么

11.3.2 Named entity types exploration with spaCy

Now that the data is loaded, let’s explore what entity types it contains.

Let’s start by iterating through the news articles, collecting all named entities identified in texts and storing the number of occurrences in a Python dictionary.

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-VNnsdGDe-1673432362017)(null)]

Code to populate a dictionary with NEs extracted from news articles

import spacy

nlp = spacy.load("en_core_web_md")

def collect_entites(data_frame):

named_entities = {}

processed_docs = []

for item in data_frame:

doc = nlp(item)

processed_docs.append(doc)

for ent in doc.ents:

# For each entity, extract the text with ent.text (e.g., Apple) and store it as entity_text

entity_text = ent.text # e.g., Apple

# Identify the type of the entity with ent.label_ (e.g.,ORG) and store it as entity_type.

entity_type = str(ent.label_) # e.g., ORG

current_ents = {} # e.g., [Apple: 1, Facebook: 2, ...]

# For each entity type,extract the list of currently stored entities with their counts

if entity_type in named_entities.keys():

current_ents = named_entities.get(entity_type)

# Update the counts in current_ents, incrementing the count for the entity stored as entity_text

current_ents[entity_text] = current_ents.get(entity_text, 0) + 1

named_entities[entity_type] = current_ents

return named_entities, processed_docs

named_entities, processed_docs = collect_entites(content_df)

这段代码做了啥

This code uses the spaCy library to extract named entities from a data frame of text content. The

collect_entitesfunction takes a data frame as an input and returns two outputs: a dictionary of named entities, and a list of processed documents.First, it initializes two empty variables, named_entities and processed_docs. Then, it iterates through each item in the input data frame and applies spaCy’s natural language processing (NLP) model to each item, creating a spaCy “doc” object for each one. These doc objects are then appended to the processed_docs list.

Next, for each named entity in the doc object, the code extracts the entity’s text and label, and uses them to update the named_entities dictionary. The dictionary is structured with entity labels as keys (e.g., “ORG” for organizations) and values that are another dictionary containing the entities as keys and their counts as values (e.g., {“Apple”: 2, “Facebook”: 3} for the “ORG” label). If a new entity label is encountered, it is added as a new key in the named_entities dictionary with an empty dictionary as its value.

Finally, the function returns both the named_entities dictionary and processed_docs list. It is important to note that this code will extract the entities and count them, but it doesn’t handle lower case named entities or handle common english words such as “The” and “And” which might be extracted as entities in some scenarios.

codespace炸了

![]()

这一步查了好多解决方案比如安装ipywidgets这些…都解决不了

我担心是因为md模型大了些,codespace吃不消之类的,换成sm还是不行

codespace export到repo里面也fail了…

colab也不行(呃,其实是因为一开始尝试的时候csv还没上传完整)

换colab试试吧…

我先是把codespace fork到我的的一个repo里面,然后git到我的colab里面

啊这…转战kaggle试试??

[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-txsygll0-1673432360874)(null)]

换成kaggle跑通了呜呜

kaggle读文件的时候记得前面加上../input

![]()

对了,kaggle和colab都可以link to github

Code to print out the named entities dictionary

def print_out(named_entities):

for key in named_entities.keys():

print(key)

entities = named_entities.get(key)

sorted_keys = sorted(entities, key=entities.get, reverse=True)

for item in sorted_keys[:10]:

if (entities.get(item) > 1):

print(" " + item + ": " + str(entities.get(item)))

print_out(named_entities)

This code defines a function called

print_out, which takes a named_entities dictionary as an input and prints out the top 10 entities for each entity label, sorted by count in descending order.First, the function uses a for loop to iterate through the keys of the named_entities dictionary. For each key, it gets the corresponding dictionary of entities and counts (e.g., {“Apple”: 2, “Facebook”: 3} for the “ORG” label) and assigns it to the variable

entities.Next, it uses the

sortedfunction with thekeyparameter set toentities.getto sort the entities by count in descending order. Thereverse=Trueargument is used to sort in descending order.Then, it iterates through the top 10 entities in the sorted list and checks if their count is greater than 1, and if so, it prints out the entity and its count, indented by 2 spaces for readability. This will help you to identify the most common entities in the text.

-

结果如下

ORG Senate: 373 Congress: 352 the White House: 258 White House: 249 The New York Times: 221 House: 203 Times: 192 WASHINGTON: 125 Volkswagen: 118 the European Union: 111 NORP American: 984 Republicans: 525 Republican: 473 Democrats: 400 Russian: 337 Chinese: 288 Americans: 270 British: 185 Muslim: 170 Democrat: 165 MONEY 1: 66 2: 23 millions of dollars: 20 10: 19 3: 19 billions of dollars: 18 100: 17 5: 17 4: 15 $1 billion: 14 CARDINAL one: 1006 two: 908 three: 353 One: 333 seven: 170 four: 168 1: 141 •: 137 five: 132 thousands: 122 DATE Friday: 436 Wednesday: 374 Tuesday: 338 Monday: 295 Sunday: 279 Thursday: 279 last year: 254 Saturday: 243 years: 205 2014: 205 PERSON Trump: 3401 Obama: 372 Donald J. Trump: 199 Clinton: 185 Spicer: 136 Sessions: 123 Gorsuch: 118 Hillary Clinton: 114 Kushner: 103 Putin: 99 GPE the United States: 1173 Russia: 526 China: 515 Washington: 499 New York: 364 America: 358 Iran: 294 Mexico: 264 Britain: 236 California: 206 LAW the Affordable Care Act: 114 Constitution: 93 Roe v. Wade: 13 the Clean Air Act: 12 the Geneva Conventions: 9 the First Amendment: 6 The Affordable Care Act: 5 the Affordable Care Act’s: 4 the First Amendment’s: 3 the Johnson Amendment: 3 LOC Europe: 150 Africa: 54 Asia: 52 Silicon Valley: 47 Earth: 40 the Middle East: 39 North: 37 South: 30 Pacific: 25 West: 23 ORDINAL first: 1222 second: 224 third: 89 First: 38 fourth: 38 fifth: 21 45th: 12 Second: 10 sixth: 10 40th: 7 TIME morning: 150 night: 133 evening: 75 hours: 73 afternoon: 64 that night: 23 the morning: 22 last night: 21 tonight: 20 overnight: 17 FAC Broadway: 76 the White House: 48 Trump Tower: 45 Capitol: 31 Vatican: 31 Fifth Avenue: 13 the C. I. A.: 13 Kennedy Airport: 13 the Oval Office: 11 Times Square: 11 PRODUCT Twitter: 76 Obamas: 14 Cowboys: 13 Facebook: 12 Adumim: 11 Manchester: 8 Boko Haram: 6 Cellectis: 6 Air Force One: 5 Grammy: 5 PERCENT 5 percent: 23 20 percent: 21 3 percent: 15 4 percent: 15 6 percent: 13 2 percent: 13 1 percent: 13 40 percent: 12 100 percent: 10 9 percent: 10 EVENT World War II: 49 the Super Bowl: 36 Super Bowl: 28 New Year’s Eve: 18 Inauguration Day: 17 Olympic: 14 the Vietnam War: 12 Wimbledon: 12 New Year’s Day: 11 the Cold War: 11 WORK_OF_ART Saturday Night Live: 25 Moonlight: 20 La La Land: 19 Bible: 17 The Daily: 16 Your Morning Briefing: 15 Hidden Figures: 15 The Mary Tyler Moore Show: 14 La La Land”: 14 Meet the Press: 11 QUANTITY about 30 miles: 3 40 miles: 3 hundreds of miles: 3 less than a mile: 3 150 miles: 3 about 600 miles: 3 more than 100 pounds: 2 20 pounds: 2 hundreds of feet: 2 Three miles: 2 LANGUAGE English: 46 Arabic: 9 Spanish: 6 Hebrew: 5 Mandarin: 3 Filipino: 3 French: 2 Excedrin: 2

Perhaps not surprisingly, the most frequent geopolitical entity mentioned in the NewYork Times articles is the United States. It is mentioned 1,148 times in total; in fact, thiscan be combined with the counts for other expressions used for the same entity (e.g.America and the like). It is followed by Russia (526 times) and China (515).As you can see, there is a lot of information contained in this dictionary.

Another way in which you can explore the statistics on various NE types is to aggregate the counts on the types and print out the number of unique entries (e.g., Apple

and Facebook would be counted as two separate named entities under the ORG type),

as well as the total number of occurrences of each type (e.g., 175 counts for Facebook

and 63 for Apple would result in the total number of 238 occurrences of the type

ORG). Listing 11.7 shows how to do that. It suggests that you print out information on

the entity type (e.g., ORG), the number of unique entries belonging to a particular type

(e.g., Apple and Facebook would contribute as two different entries for ORG), and the

total number of occurrences of the entities of that particular type. To do that, you

extract and aggregate the statistics for each NE type, and in the end, you print out the

results in a tabulated format, with each row storing the statistics on a separate NE type.

Code to aggregate the counts on all named entity types

rows = []

rows.append(["Type:", "Entries:", "Total:"])

for ent_type in named_entities.keys():

# Extract and aggregate the statistics for each NE type.

rows.append([ent_type, str(len(named_entities.get(ent_type))),

str(sum(named_entities.get(ent_type).values()))])

columns = zip(*rows)

column_widths = [max(len(item) for item in col) for col in columns]

for row in rows:

print(''.join(' {:{width}} '.format(row[i], width=column_widths[i])

for i in range(0, len(row))))

This code defines a list called

rowsand appends a header row to it. Then, it uses a for loop to iterate through the keys of the named_entities dictionary (the entity types), for each type it calculates the number of unique entities and the total count of that type and append those values to rows list as a new row.Then, it uses the zip function to transpose the rows so that the columns of data can be processed. Next, it uses a list comprehension to determine the maximum length of each column of data and saves it to the variable

column_widths. Finally, it uses a nested for loop to iterate through the rows and columns, and print out the data, formatted to the widths specified in column_widths.This will create a cleanly formatted table with 3 columns: “Type”, “Entries”, and “Total” that shows the number of unique entities and the total count for each entity label. Additionally, this way, you can easily spot which label type have more unique entities or the total count of entities for each label.

输出结果

As this table shows, the most frequently used named entities in the news articles are entities of the following types: PERSON, GPE, ORG, and DATE. This is, perhaps, not very surprising. After all, most often news reports on the events that are related to people (PERSON), companies (ORG), countries (GPE), and usually news articles include references to specific dates. At the same time, the least frequently used entities are the ones of the type LANGUAGE: there are only 12 unique languages mentioned in this news articles dataset, and in total they are mentioned 85 times. Among the most frequently mentioned are English (48 times), Arabic (8), and Spanish (7). You may also note that the ORDINAL type has only 68 unique entries: it is, naturally, a very compact list of items including entries like first, second, third, and so on

11.3.3 Information extraction revisited

Consider the scenario again: your task is to build an information extraction application focused on companies and the news that reports on these companies. The dataset at hand, according to table 11.4, contains information on as many as 4,892 companies. Of course, not all of them will be of interest to you, so it would make sense to select a few and extract information on them.

Chapter 4 looked into the information extraction task, which was concerned with the extraction of relevant facts (e.g., actions in which certain personalities of interest are involved). Let’s revisit this task here, making the necessary modifications. Specifically, the following ones:

- Let’s extract actions together with their participants but focus on participants of a particular type, such as companies (ORG) or a specific company (Apple). For that, you will work with a subset of sentences that contain the entity of interest.

- Let’s extract the contexts in which an entity of interest (e.g., Apple) is one of the main participants (e.g., “Apple sued Qualcomm” or “Russia required Apple to . . .”). For that, you will use the linguistic information from spaCy’s NLP pipeline, focusing on the cases where the entity is the subject (the main participant of the main action as Apple is in “Apple sued Qualcomm”) or the object (the second participant of the main action as Apple is in “Russia required Apple to . . .”). This information can be extracted from the spaCy’s parser output using nsubj and dobj relations, respectively

- Oftentimes the second participant of the action is linked to the main verb via a preposition. For instance, compare “Russia required Apple” to “The New York Times wrote about Apple”. In the first case, Apple is the direct object of the main verb required, and in the second case, it is an indirect object of the main verb wrote. Let’s make sure that both cases are covered by our information extraction algorithm.

- Finally, as observed in the earlier examples, named entities may consist of a single word (Apple) or of several words (Apple Inc.). To that end, let’s make sure the code applies to both cases.

Code to extract the indexes of the words covered by the NE

- Each word in a sentence has a unique index that is linked to its position in the sentence

- The goal is to extract not only the word marked as nsubj or dobj but also the whole named entity that plays that role

- The best way to do this is to match the named entities to their roles in the sentence via the indexes assigned to the named entities in the sentence

- An example is given of a named entity “The New York Times” in the sentence "The New York Times wrote about Apple"The goal is to identify whether “The New York Times” is a participant of the main action (wrote) in the sentenceThe indexes of the words covered by “The New York Times” in the sentence are [0,1,2,3].Check if a word with any of these indexes plays a role of the subject or an object in the sentence.The word that is the subject in the sentence has the index of 3.Therefore, the whole named entity “The New York Times” can be returned as the subject of the main action in the sentence.

entity = "The New York Times"

sentences = ["The New York Times wrote about Apple"]

def extract_span(sent, entity):

indexes = []

for ent in sent.ents:

if ent.text==entity:

for i in range(int(ent.start), int(ent.end)):

indexes.append(i)

return indexes

def extract_information(sent, entity, indexes):

# Initialize the list of actions and an action with two participants.

actions = []

action = ""

participant1 = ""

participant2 = ""

for token in sent:

if token.pos_=="VERB" and token.dep_=="ROOT":

# Initialize the indexes for the subject and the object related to the main verb.

subj_ind = -1

obj_ind = -1

# Store the main verb itself in the action variable.

action = token.text

children = [child for child in token.children]

for child1 in children:

# Find the subject via the nsubj relation and store it as participant1 and its index as subj_ind.

if child1.dep_=="nsubj":

participant1 = child1.text

subj_ind = int(child1.i)

if child1.dep_=="prep":

participant2 = ""

child1_children = [child for child in child1.children]

for child2 in child1_children:

if child2.pos_ == "NOUN" or child2.pos_ == "PROPN":

participant2 = child2.text

obj_ind = int(child2.i)

if not participant2=="":

if subj_ind in indexes:

actions.append(entity + " " + action + " " + child1.text + " " + participant2)

elif obj_ind in indexes:

actions.append(participant1 + " " + action + " " + child1.text + " " + entity)

# If both participants of the action are identified,add the action with two participants to the list of actions.

if child1.dep_=="dobj" and (child1.pos_ == "NOUN"

or child1.pos_ == "PROPN"):

participant2 = child1.text

obj_ind = int(child1.i)

if subj_ind in indexes:

actions.append(entity + " " + action + " " + participant2)

elif obj_ind in indexes:

# If there is no preposition attached to the verb, find a direct object of the main verb via the dobj relation.

actions.append(participant1 + " " + action + " " + entity)

# If the final list of actions is not empty, print out the sentence and all actions together with the participants.

if not len(actions)==0:

print (f"\nSentence = {sent}")

for item in actions:

print(item)

for sent in sentences:

doc = nlp(sent)

indexes = extract_span(doc, entity)

print(indexes)

extract_information(doc, entity, indexes)

Now let’s apply this code to your texts extracted from the news articles. Note, however, that the code in listing 11.9 applies to the sentence level, since it relies on the information extracted from the parser (which applies to each sentence rather than the whole text). In addition, if you are only interested in a particular entity, it doesn’t make sense to waste the algorithm’s efforts on the texts and sentences that don’t mention this entity at all. To this end, let’s first extract all sentences that mention the entity in question from processed_docs and then apply the extract_information method to extract all tuples (participant1 + action + participant2) from the sentences, where either participant1 or participant2 is the entity you are interested in.

Code to extract information about the main participants of the action

def entity_detector(processed_docs, entity, ent_type):

output_sentences = []

for doc in processed_docs:

for sent in doc.sents:

if entity in [ent.text for ent in sent.ents if ent.label_==ent_type]:

output_sentences.append(sent)

return output_sentences

entity = "Apple"

ent_sentences = entity_detector(processed_docs, entity, "ORG")

print(len(ent_sentences))

for sent in ent_sentences:

indexes = extract_span(sent, entity)

extract_information(sent, entity, indexes)

Code to extract information on the specific entity

def entity_detector(processed_docs, entity, ent_type):

output_sentences = []

for doc in processed_docs:

for sent in doc.sents:

if entity in [ent.text for ent in sent.ents if ent.label_==ent_type]:

output_sentences.append(sent)

return output_sentences

entity = "Apple"

ent_sentences = entity_detector(processed_docs, entity, "ORG")

print(len(ent_sentences))

for sent in ent_sentences:

indexes = extract_span(sent, entity)

extract_information(sent, entity, indexes)

This code uses “Apple” as the entity of interest and specifically looks for sentences, in which the company (ORG) Apple is mentioned. As the printout message shows, there are 59 such sentences. Not all sentences among these 59 sentences mention Apple as a subject or object of the main action, but the last line of code returns a number of such sentences with the tuples summarizing the main content:

47

Sentence = Apple, complying with what it said was a request from Chinese authorities, removed news apps created by The New York Times from its app store in China late last month.

Apple removed apps

Sentence = Apple removed both the and apps from the app store in China on Dec. 23.

Apple removed apps

Apple removed from store

Apple removed on Dec.

Sentence = Apple has previously removed other, less prominent media apps from its China store.

Apple removed apps

Apple removed from store

Sentence = On Friday, Apple, its longtime partner, sued Qualcomm over what it said was $1 billion in withheld rebates.

Apple sued Qualcomm

看到这里,才明白前面那个extract_information到底是要干啥

11.3.4 Named entities visualization 很炫酷

One of the most useful ways to explore named entities contained in text and to extract relevant information is to visualize the results of NER.

接下来使用spaCy的可视化工具displaCy

“entity of interest"在书里面大概是这个意思? refers to a specific named entity that the user wants to extract information about from a text.

Code to visualize named entities of various types in their contexts of use

拿个句子先试试

from spacy import displacy

text = "When Sebastian Thrun started working on self-driving cars at Google in 2007, \

few people outside of the company took him seriously."

doc = nlp(text)

displacy.render(doc, style="ent")

![]()

试试中文的!

需要

!python -m spacy download zh_core_web_sm(sm、md啥的自己选),我先用小的来玩玩

from spacy import displacy

text2 = "2010年4月,小米成立于中华人民共和国北京市。[5],并于2011年8月发布小米手机进军手机市场[6]。据全球市场调研机构Canalys的统计,在2021年第二季度,小米智能手机市场占有率位居全球第二,占比17%[7]。小米还是继苹果、三星、华为之后第四家拥有手机芯片自研能力的手机厂商[8]。 小米旗下拥有多个子品牌,面向不同产品品类、地区市场及消费人群。通过与其生态链企业的研发与合作,其旗下产品涵盖了智能手机、小米手环、小米电视、小米空气净化器等多种智能化的消费电子产品[9]。小米拥有其直接控股或间接控制的生态链企业多达近400家,产业覆盖智能硬件、生活消费用品、\

教育、游戏、社交网络、文化娱乐、医疗健康、汽车交通、金融等多个领域[10]。"

nlp2 = spacy.load("zh_core_web_sm")

doc = nlp2(text2)

displacy.render(doc, style="ent")

![]()

处理这个数据集

def visualize(processed_docs, entity, ent_type):

for doc in processed_docs:

for sent in doc.sents:

if entity in [ent.text for ent in sent.ents if ent.label_==ent_type]:

displacy.render(sent, style="ent")

visualize(processed_docs, "Apple", "ORG")

This code displays all sentences, in which the company (ORG) Apple is mentioned. Other entities are highlighted with distinct colors.

Code to visualize named entities of a specific type only

Finally, you might be interested specifically in the contexts in which the company Apple is mentioned alongside other companies. Let’s filter out all other information and highlight only named entities of the same type as the entity in question (i.e., all ORG NEs in this case).

def count_ents(sent, ent_type):

return len([ent.text for ent in sent.ents if ent.label_==ent_type])

def entity_detector_custom(processed_docs, entity, ent_type):

output_sentences = []

for doc in processed_docs:

for sent in doc.sents:

if entity in [ent.text for ent in sent.ents if ent.label_==ent_type and

count_ents(sent, ent_type)>1]:

output_sentences.append(sent)

return output_sentences

output_sentences = entity_detector_custom(processed_docs, "Apple", "ORG")

print(len(output_sentences))

With an updated entity_detector_custom function, you extract only the sentences that mention the input entity of a specified type as well as at least one other entity of the same type. You can print out the number of sentences identified this way as a sanity check. Then you define the visualize_type function that applies visualization to the entities of a predefined type only. spaCy allows you to customize the colors for visualization and to apply gradient (you can choose other colors from https://htmlcolorcodes.com/color-chart/), and using this customized color scheme, you can finally visualize the results.

def visualize_type(sents, entity, ent_type):

colors = {"ORG": "linear-gradient(90deg, #64B5F6, #E0F7FA)"}

options = {"ents": ["ORG"], "colors": colors}

for sent in sents:

displacy.render(sent, style="ent", options=options)

visualize_type(output_sentences, "Apple", "ORG")

Summary

这章学会了什么?

可以从新闻文章中提取相关事件和感兴趣的参与者(例如特定公司)所采取action的summarization。这些事件可以在下游任务中进一步使用。例如,如果还收集了有关股票价格转移的数据,则可以将从新闻提取的事件链接到此类事件后立即发生的股票价格的变化,这将帮助预测股票价格可能如何变化,未来的类似事件。

- Named-entity recognition (NER) is one of the core NLP tasks; however, when the main goal of the application you are developing is not concerned with improving the core NLP task itself but rather relies on the output from the core NLP technology, this is called a downstream task. The tasks that benefit from NER include information extraction, question answering, and the like.

- Named entities are real-world objects (people, locations, organizations, etc.) that can be referred to with a proper name, and named-entity recognition is concerned with identification of the full span of such entities (as entities may consist of a single word like Apple or of multiple words like Albert Einstein) and the type of the expression.

- The four most widely used types include person, location, organization, and geopolitical entity, although other types like time references and monetary units are also typically added. Moreover, it is also possible to train a customized NER algorithm for a specific domain. For instance, in biomedical texts, gene and protein names represent named entities.

- NER is a challenging task. The major challenges are concerned with the identification of the full span of the expression (e.g., Amazon versus Amazon River) and the type (e.g., Washington may be an entity of up to 4 different types depending on the context). The span and type identification are the tasks that in NER are typically solved jointly.

- The set of named entities often used in practice is derived from the OntoNotes, and it contains 18 distinct NE types. The annotation scheme used to label NEs in data is called a BIO scheme (with a more coarse-grained variant being the IO, and the more fine-grained one being the BIOES scheme).

- This scheme explicitly annotates each word as beginning an NE, being inside of an NE, or being outside of an NE.

- The NER task is typically framed as a sequence labeling task, and it is commonly addressed using a feature-based approach. NER is not the only NLP task that is solved using sequence labeling, since language shows strong sequential effects. Part-of-speech tagging overviewed in chapter 4 is another example of a sequential task.

- You can apply spaCy in practice to extract named entities of interest and facts related to these entities from a collection of news articles.

- A very popular format in data science is CSV, which uses a comma as a delimiter. An easy-to-use open source data analysis and manipulation tool for Python practitioners that helps you work with such files is pandas.

- Finally, you can explore the results of NER visually, using the displaCy tool and color-coding entities of different types with its help.

感觉本书还有一大亮点就是推荐的材料,很多地方都附上了论文链接

![]()

参考资料

-

看到一个spacy官方的很好的教程:https://course.spacy.io/zh/

-

这个也不错:

- Introduction to Cultural Analytics & Python

-

displaCy:https://demos.explosion.ai/displacy-ent