Infrared Image Detection of Insulators (TF2)

Contents

1. Project Background

2. Image Dataset

3. Model

4. Model Performance Testing

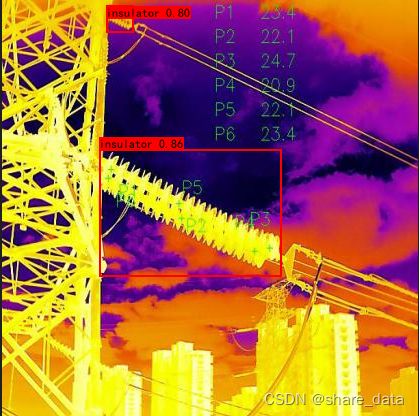

1. Project Background

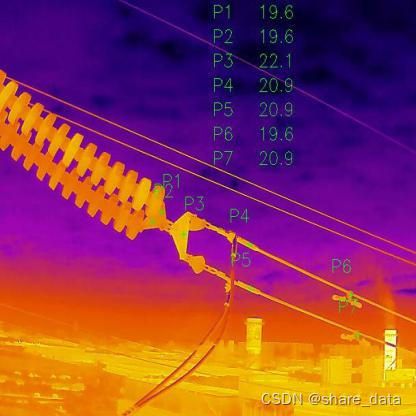

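Under long-term electromechanical loading and harsh weather, porcelain insulators tend to degrade and develop zero-value faults. A zero-value unit shortens the effective creepage distance of the insulator string, which makes flashover and breakdown more likely under overvoltage and seriously threatens the safe operation of transmission and distribution lines. Because infrared thermography is non-contact, safe, and efficient, it is one of the more practical existing methods for live-line detection of zero-value insulators.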

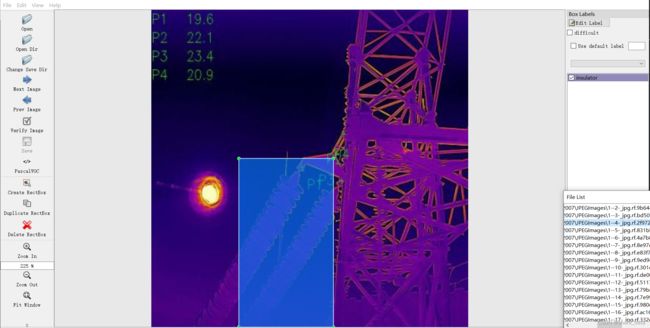

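2. Image Dataset

A total of 440 infrared thermal images of insulators were collected, and the insulators in them were annotated with labelimg.

The VOC label of an insulator image has the following format: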

    filename: 1.jpg
    path: 1.jpg
    source/database: roboflow.ai
    size/width: 416
    size/height: 416
    size/depth: 3
    segmented: 0
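A label in this format can be read back with Python's standard xml.etree.ElementTree module. The sketch below assumes the usual Pascal VOC XML layout. The header values mirror the label above, while the object entry (the class name insulator and the box coordinates) is a made-up example and is not taken from the dataset.

```python
import xml.etree.ElementTree as ET

# Illustrative annotation. The <object> entry is hypothetical.
SAMPLE_XML = """
<annotation>
    <filename>1.jpg</filename>
    <path>1.jpg</path>
    <source><database>roboflow.ai</database></source>
    <size><width>416</width><height>416</height><depth>3</depth></size>
    <segmented>0</segmented>
    <object>
        <name>insulator</name>
        <bndbox><xmin>50</xmin><ymin>60</ymin><xmax>200</xmax><ymax>300</ymax></bndbox>
    </object>
</annotation>
"""


def parse_voc(xml_text):
    """Return (filename, (width, height), [(name, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        coords = tuple(int(bndbox.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.findtext("name"),) + coords)
    return root.findtext("filename"), (width, height), boxes


filename, image_size, boxes = parse_voc(SAMPLE_XML)
print(filename, image_size, boxes)
```

Each box is returned as a class name followed by pixel coordinates, which is the form a training pipeline would typically convert into its own label tensors.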

labelimg installation steps:

1. Open Anaconda Prompt (Anaconda3).

2. Create a new environment for labelimg:

    conda create -n labelimg python=3.7

3. Activate the new environment:

    conda activate labelimg

4. Install labelimg:

    pip install labelimg

5. Enter labelimg to launch the tool.

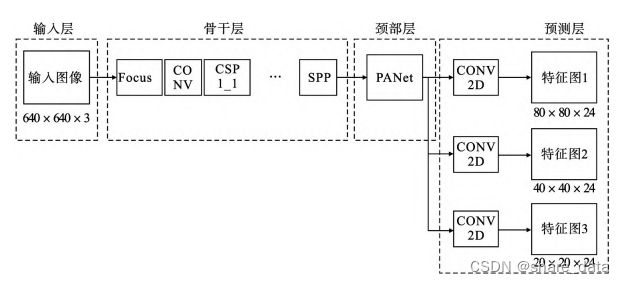

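3. Model

Training uses a cosine annealing learning-rate schedule together with the Adam optimizer to update the model weights. The data are split into training and test sets at a ratio of 9:1. The first 234 layers of the pretrained YOLOv5 model are frozen for 800 epochs with a batch size of 8. The layers are then unfrozen and training continues for another 200 epochs with a batch size of 4. Training was run on a 3060 Ti GPU.

Backbone feature extraction network: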
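The cosine annealing schedule mentioned above can be sketched in plain Python. This is only an illustration: the learning-rate bounds (lr_max, lr_min) and the choice to restart the schedule at the start of the unfrozen phase are assumptions, since the text gives only the epoch counts and batch sizes.

```python
import math


def cosine_annealing_lr(epoch, total_epochs, lr_max=1e-3, lr_min=1e-5):
    """Learning rate for a 0-based epoch, decaying from lr_max to lr_min."""
    progress = epoch / max(total_epochs - 1, 1)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


FREEZE_EPOCHS = 800    # frozen phase, batch size 8
UNFREEZE_EPOCHS = 200  # unfrozen phase, batch size 4

# Hypothetical learning-rate bounds for each phase.
freeze_lrs = [cosine_annealing_lr(e, FREEZE_EPOCHS) for e in range(FREEZE_EPOCHS)]
unfreeze_lrs = [
    cosine_annealing_lr(e, UNFREEZE_EPOCHS, lr_max=1e-4, lr_min=1e-6)
    for e in range(UNFREEZE_EPOCHS)
]

print(freeze_lrs[0], freeze_lrs[FREEZE_EPOCHS // 2], freeze_lrs[-1])
```

The rate starts at lr_max, falls slowly at first, fastest around the midpoint, and flattens out again as it approaches lr_min at the last epoch of each phase.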

    from functools import wraps

    import tensorflow as tf
    from tensorflow.keras import backend as K
    from tensorflow.keras.initializers import RandomNormal
    from tensorflow.keras.layers import (Add, BatchNormalization, Concatenate,
                                         Conv2D, Layer, MaxPooling2D,
                                         ZeroPadding2D)
    from tensorflow.keras.regularizers import l2
    from utils.utils import compose

    class SiLU(Layer):
        # SiLU activation: x * sigmoid(x)
        def __init__(self, **kwargs):
            super(SiLU, self).__init__(**kwargs)
            self.supports_masking = True

        def call(self, inputs):
            return inputs * K.sigmoid(inputs)

        def get_config(self):
            config = super(SiLU, self).get_config()
            return config

        def compute_output_shape(self, input_shape):
            return input_shape

    class Focus(Layer):
        # Takes every second pixel in four phases and stacks them on the
        # channel axis: height and width are halved, channels are multiplied by 4.
        def __init__(self):
            super(Focus, self).__init__()

        def compute_output_shape(self, input_shape):
            return (input_shape[0],
                    input_shape[1] // 2 if input_shape[1] is not None else input_shape[1],
                    input_shape[2] // 2 if input_shape[2] is not None else input_shape[2],
                    input_shape[3] * 4)

        def call(self, x):
            return tf.concat(
                [x[..., ::2, ::2, :],
                 x[..., 1::2, ::2, :],
                 x[..., ::2, 1::2, :],
                 x[..., 1::2, 1::2, :]],
                axis=-1
            )

    @wraps(Conv2D)
    def DarknetConv2D(*args, **kwargs):
        # weight_decay is consumed here and must not be forwarded to Conv2D.
        weight_decay = kwargs.pop('weight_decay', 5e-4)
        darknet_conv_kwargs = {
            'kernel_initializer': RandomNormal(stddev=0.02),
            'kernel_regularizer': l2(weight_decay),
        }
        # Stride-2 convolutions are preceded by an explicit ZeroPadding2D,
        # so they use 'valid' padding; all others use 'same'.
        darknet_conv_kwargs['padding'] = 'valid' if kwargs.get('strides') == (2, 2) else 'same'
        darknet_conv_kwargs.update(kwargs)
        return Conv2D(*args, **darknet_conv_kwargs)

    #---------------------------------------------------#
    #   Convolution block: convolution + normalization + activation
    #   DarknetConv2D + BatchNormalization + SiLU
    #---------------------------------------------------#
    def DarknetConv2D_BN_SiLU(*args, **kwargs):
        no_bias_kwargs = {'use_bias': False}
        no_bias_kwargs.update(kwargs)
        if "name" in kwargs:
            no_bias_kwargs['name'] = kwargs['name'] + '.conv'
        # Note: a name must be passed, because the BatchNormalization layer
        # name is derived from kwargs['name'].
        return compose(
            DarknetConv2D(*args, **no_bias_kwargs),
            BatchNormalization(momentum=0.97, epsilon=0.001, name=kwargs['name'] + '.bn'),
            SiLU())

    def Bottleneck(x, out_channels, shortcut=True, weight_decay=5e-4, name=""):
        # 1x1 conv followed by 3x3 conv, with an optional residual connection
        y = compose(
            DarknetConv2D_BN_SiLU(out_channels, (1, 1), weight_decay=weight_decay, name=name + '.cv1'),
            DarknetConv2D_BN_SiLU(out_channels, (3, 3), weight_decay=weight_decay, name=name + '.cv2'))(x)
        if shortcut:
            y = Add()([x, y])
        return y

    def C3(x, num_filters, num_blocks, shortcut=True, expansion=0.5, weight_decay=5e-4, name=""):
        hidden_channels = int(num_filters * expansion)
        # Main branch (x_1) goes through the bottleneck stack; x_2 is the side branch.
        x_1 = DarknetConv2D_BN_SiLU(hidden_channels, (1, 1), weight_decay=weight_decay, name=name + '.cv1')(x)
        x_2 = DarknetConv2D_BN_SiLU(hidden_channels, (1, 1), weight_decay=weight_decay, name=name + '.cv2')(x)
        for i in range(num_blocks):
            x_1 = Bottleneck(x_1, hidden_channels, shortcut=shortcut, weight_decay=weight_decay, name=name + '.m.' + str(i))
        # Concatenate both branches, then fuse them with a 1x1 conv.
        route = Concatenate()([x_1, x_2])
        return DarknetConv2D_BN_SiLU(num_filters, (1, 1), weight_decay=weight_decay, name=name + '.cv3')(route)

    def SPPBottleneck(x, out_channels, weight_decay=5e-4, name=""):
        x = DarknetConv2D_BN_SiLU(out_channels // 2, (1, 1), weight_decay=weight_decay, name=name + '.cv1')(x)
        # Max pooling with three kernel sizes at stride 1 keeps the spatial size.
        maxpool1 = MaxPooling2D(pool_size=(5, 5), strides=(1, 1), padding='same')(x)
        maxpool2 = MaxPooling2D(pool_size=(9, 9), strides=(1, 1), padding='same')(x)
        maxpool3 = MaxPooling2D(pool_size=(13, 13), strides=(1, 1), padding='same')(x)
        x = Concatenate()([x, maxpool1, maxpool2, maxpool3])
        x = DarknetConv2D_BN_SiLU(out_channels, (1, 1), weight_decay=weight_decay, name=name + '.cv2')(x)
        return x

    def resblock_body(x, num_filters, num_blocks, expansion=0.5, shortcut=True, last=False, weight_decay=5e-4, name=""):
        # 320, 320, 64 => 160, 160, 128
        x = ZeroPadding2D(((1, 0), (1, 0)))(x)
        x = DarknetConv2D_BN_SiLU(num_filters, (3, 3), strides=(2, 2), weight_decay=weight_decay, name=name + '.0')(x)
        if last:
            x = SPPBottleneck(x, num_filters, weight_decay=weight_decay, name=name + '.1')
        return C3(x, num_filters, num_blocks, shortcut=shortcut, expansion=expansion, weight_decay=weight_decay, name=name + '.1' if not last else name + '.2')

    def darknet_body(x, base_channels, base_depth, weight_decay=5e-4):
        # 640, 640, 3 => 320, 320, 12
        x = Focus()(x)
        # 320, 320, 12 => 320, 320, 64
        x = DarknetConv2D_BN_SiLU(base_channels, (3, 3), weight_decay=weight_decay, name='backbone.stem.conv')(x)
        # 320, 320, 64 => 160, 160, 128
        x = resblock_body(x, base_channels * 2, base_depth, weight_decay=weight_decay, name='backbone.dark2')
        # 160, 160, 128 => 80, 80, 256
        x = resblock_body(x, base_channels * 4, base_depth * 3, weight_decay=weight_decay, name='backbone.dark3')
        feat1 = x
        # 80, 80, 256 => 40, 40, 512
        x = resblock_body(x, base_channels * 8, base_depth * 3, weight_decay=weight_decay, name='backbone.dark4')
        feat2 = x
        # 40, 40, 512 => 20, 20, 1024
        x = resblock_body(x, base_channels * 16, base_depth, shortcut=False, last=True, weight_decay=weight_decay, name='backbone.dark5')
        feat3 = x
        return feat1, feat2, feat3

YOLOv5 model:

    from tensorflow.keras.layers import (Concatenate, Input, Lambda, UpSampling2D,
                                         ZeroPadding2D)
    from tensorflow.keras.models import Model

    from nets.CSPdarknet import (C3, DarknetConv2D, DarknetConv2D_BN_SiLU,
                                 darknet_body)
    from nets.yolo_training import yolo_loss

    #---------------------------------------------------#
    #   Build the YOLOv5 body: backbone + feature pyramid + detection heads
    #---------------------------------------------------#
    def yolo_body(input_shape, anchors_mask, num_classes, phi, weight_decay=5e-4):
        depth_dict = {'s': 0.33, 'm': 0.67, 'l': 1.00, 'x': 1.33}
        width_dict = {'s': 0.50, 'm': 0.75, 'l': 1.00, 'x': 1.25}
        dep_mul, wid_mul = depth_dict[phi], width_dict[phi]

        base_channels = int(wid_mul * 64)           # 64 when phi == 'l'
        base_depth = max(round(dep_mul * 3), 1)     # 3 when phi == 'l'

        inputs = Input(input_shape)
        feat1, feat2, feat3 = darknet_body(inputs, base_channels, base_depth, weight_decay)

        # Top-down path: upsample the deep features and merge with shallower ones.
        P5 = DarknetConv2D_BN_SiLU(int(base_channels * 8), (1, 1), weight_decay=weight_decay, name='conv_for_feat3')(feat3)
        P5_upsample = UpSampling2D()(P5)
        P5_upsample = Concatenate(axis=-1)([P5_upsample, feat2])
        P5_upsample = C3(P5_upsample, int(base_channels * 8), base_depth, shortcut=False, weight_decay=weight_decay, name='conv3_for_upsample1')

        P4 = DarknetConv2D_BN_SiLU(int(base_channels * 4), (1, 1), weight_decay=weight_decay, name='conv_for_feat2')(P5_upsample)
        P4_upsample = UpSampling2D()(P4)
        P4_upsample = Concatenate(axis=-1)([P4_upsample, feat1])
        P3_out = C3(P4_upsample, int(base_channels * 4), base_depth, shortcut=False, weight_decay=weight_decay, name='conv3_for_upsample2')

        # Bottom-up path: downsample and merge with the top-down features.
        P3_downsample = ZeroPadding2D(((1, 0), (1, 0)))(P3_out)
        P3_downsample = DarknetConv2D_BN_SiLU(int(base_channels * 4), (3, 3), strides=(2, 2), weight_decay=weight_decay, name='down_sample1')(P3_downsample)
        P3_downsample = Concatenate(axis=-1)([P3_downsample, P4])
        P4_out = C3(P3_downsample, int(base_channels * 8), base_depth, shortcut=False, weight_decay=weight_decay, name='conv3_for_downsample1')

        P4_downsample = ZeroPadding2D(((1, 0), (1, 0)))(P4_out)
        P4_downsample = DarknetConv2D_BN_SiLU(int(base_channels * 8), (3, 3), strides=(2, 2), weight_decay=weight_decay, name='down_sample2')(P4_downsample)
        P4_downsample = Concatenate(axis=-1)([P4_downsample, P5])
        P5_out = C3(P4_downsample, int(base_channels * 16), base_depth, shortcut=False, weight_decay=weight_decay, name='conv3_for_downsample2')

        # Detection heads: anchors per scale * (4 box values + 1 objectness + classes).
        out2 = DarknetConv2D(len(anchors_mask[2]) * (5 + num_classes), (1, 1), strides=(1, 1), weight_decay=weight_decay, name='yolo_head_P3')(P3_out)
        out1 = DarknetConv2D(len(anchors_mask[1]) * (5 + num_classes), (1, 1), strides=(1, 1), weight_decay=weight_decay, name='yolo_head_P4')(P4_out)
        out0 = DarknetConv2D(len(anchors_mask[0]) * (5 + num_classes), (1, 1), strides=(1, 1), weight_decay=weight_decay, name='yolo_head_P5')(P5_out)
        return Model(inputs, [out0, out1, out2])

    def get_train_model(model_body, input_shape, num_classes, anchors, anchors_mask, label_smoothing):
        # One ground-truth input per output scale (strides 32, 16 and 8).
        y_true = [Input(shape=(input_shape[0] // {0: 32, 1: 16, 2: 8}[l],
                               input_shape[1] // {0: 32, 1: 16, 2: 8}[l],
                               len(anchors_mask[l]), num_classes + 5))
                  for l in range(len(anchors_mask))]
        # The loss is computed inside the graph by a Lambda layer.
        model_loss = Lambda(
            yolo_loss,
            output_shape=(1, ),
            name='yolo_loss',
            arguments={
                'input_shape': input_shape,
                'anchors': anchors,
                'anchors_mask': anchors_mask,
                'num_classes': num_classes,
                'label_smoothing': label_smoothing,
                'balance': [0.4, 1.0, 4],
                'box_ratio': 0.05,
                'obj_ratio': 1 * (input_shape[0] * input_shape[1]) / (640 ** 2),
                'cls_ratio': 0.5 * (num_classes / 80)
            }
        )([*model_body.output, *y_true])
        model = Model([model_body.input, *y_true], model_loss)
        return model
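To make the shape comments in the listings concrete, the following pure-Python sketch traces the feature-map sizes through the backbone and the three detection heads without building the Keras model. It is an illustration only: the helper names are hypothetical, and the defaults (640 input, the s variant, one class, three anchors per scale) are assumed values rather than the project's confirmed configuration.

```python
def downsample(size):
    # ZeroPadding2D(((1, 0), (1, 0))) adds one pixel, then a 3x3 'valid'
    # convolution with stride 2 is applied.
    return (size + 1 - 3) // 2 + 1


def trace_shapes(input_size=640, phi="s", num_classes=1, anchors_per_scale=3):
    width_dict = {"s": 0.50, "m": 0.75, "l": 1.00, "x": 1.25}
    base_channels = int(width_dict[phi] * 64)

    # Focus halves height and width (and turns 3 channels into 12);
    # the stem convolution keeps the spatial size.
    size = input_size // 2

    feats = {}
    for name, mult in (("dark2", 2), ("dark3", 4), ("dark4", 8), ("dark5", 16)):
        size = downsample(size)
        feats[name] = (size, size, base_channels * mult)

    # Each head predicts, per anchor, 4 box values + 1 objectness + class scores.
    head_channels = anchors_per_scale * (5 + num_classes)
    heads = {
        "yolo_head_P3": feats["dark3"][:2] + (head_channels,),
        "yolo_head_P4": feats["dark4"][:2] + (head_channels,),
        "yolo_head_P5": feats["dark5"][:2] + (head_channels,),
    }
    return feats, heads


feats, heads = trace_shapes()
for name, shape in {**feats, **heads}.items():
    print(name, shape)
```

With phi set to l the backbone channels match the comments in darknet_body (256, 512 and 1024 for the three returned feature maps), while the s variant halves them.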

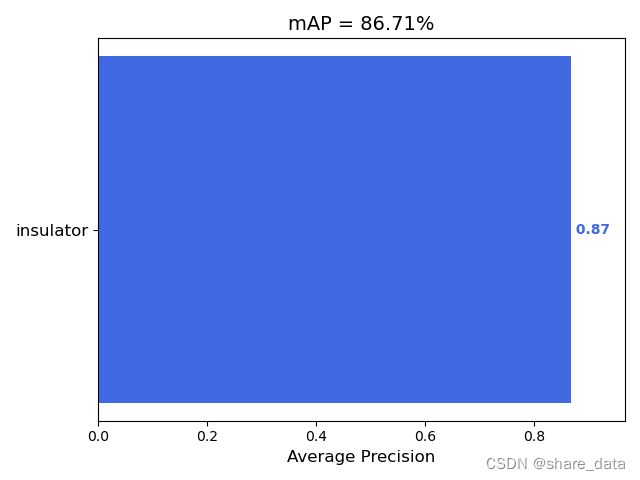

4. Model Performance Testing

mAP of the model on the test set:

Script used to compute the mAP:

    import os
    import xml.etree.ElementTree as ET

    import tensorflow as tf
    from PIL import Image
    from tqdm import tqdm

    from utils.utils import get_classes
    from utils.utils_map import get_coco_map, get_map
    from yolo import YOLO

    # Allocate GPU memory on demand instead of reserving it all at start-up.
    gpus = tf.config.experimental.list_physical_devices(device_type='GPU')
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)

    if __name__ == "__main__":
        # map_mode, map_vis and map_out_path are used below but are not defined
        # in the listing; the values here are assumed defaults.
        map_mode = 0                # 0: run prediction and write the results
        map_vis = False             # True: also copy the test images to the output folder
        map_out_path = 'map_out'    # output folder

        classes_path = 'data/defeat_name.txt'
        MINOVERLAP = 0.5
        VOCdevkit_path = 'VOCdevkit'

        image_ids = open(os.path.join(VOCdevkit_path, "VOC2007/ImageSets/Main/test.txt")).read().strip().split()

        if not os.path.exists(map_out_path):
            os.makedirs(map_out_path)
        if not os.path.exists(os.path.join(map_out_path, 'ground-truth')):
            os.makedirs(os.path.join(map_out_path, 'ground-truth'))
        if not os.path.exists(os.path.join(map_out_path, 'detection-results')):
            os.makedirs(os.path.join(map_out_path, 'detection-results'))
        if not os.path.exists(os.path.join(map_out_path, 'images-optional')):
            os.makedirs(os.path.join(map_out_path, 'images-optional'))

        class_names, _ = get_classes(classes_path)

        if map_mode == 0:
            print("Load model.")
            # A very low confidence threshold keeps nearly all detections,
            # which is needed to build the full precision-recall curve.
            yolo = YOLO(confidence=0.001, nms_iou=0.5)
            print("Load model done.")

            print("Get predict result.")
            for image_id in tqdm(image_ids):
                image_path = os.path.join(VOCdevkit_path, "VOC2007/JPEGImages/" + image_id + ".jpg")
                image = Image.open(image_path)
                if map_vis:
                    image.save(os.path.join(map_out_path, "images-optional/" + image_id + ".jpg"))
                yolo.get_map_txt(image_id, image, class_names, map_out_path)
            print("Get predict result done.")
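MINOVERLAP in the script is the IoU threshold at which a detection counts as a match to a ground-truth box, so a value of 0.5 corresponds to mAP at IoU 0.5. The following sketch shows that matching criterion in isolation. The function names are hypothetical and the boxes are made-up values. The project's own get_map helper may use a slightly different pixel convention.

```python
def box_iou(a, b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0) * max(iy2 - iy1, 0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


MINOVERLAP = 0.5


def is_true_positive(pred_box, gt_box, min_overlap=MINOVERLAP):
    # A prediction matches a ground-truth box when their IoU reaches the threshold.
    return box_iou(pred_box, gt_box) >= min_overlap


gt = (0, 0, 10, 10)
close_pred = (1, 1, 10, 10)    # mostly overlaps the ground truth
shifted_pred = (5, 0, 15, 10)  # overlaps only half of it

print(box_iou(gt, close_pred), is_true_positive(close_pred, gt))
print(box_iou(gt, shifted_pred), is_true_positive(shifted_pred, gt))
```

Raising the threshold makes the metric stricter, since detections need tighter localization to count as correct.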

Example predictions: