A PyTorch reproduction of YOLOv1 that I have personally tested and fully reproduced.

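While learning object detection, I noticed that most posts online only explain the principles and the pipeline, and few of them come with a reproduction that actually runs. Below is a PyTorch reproduction of YOLOv1 that I finally got running after searching for quite a while.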

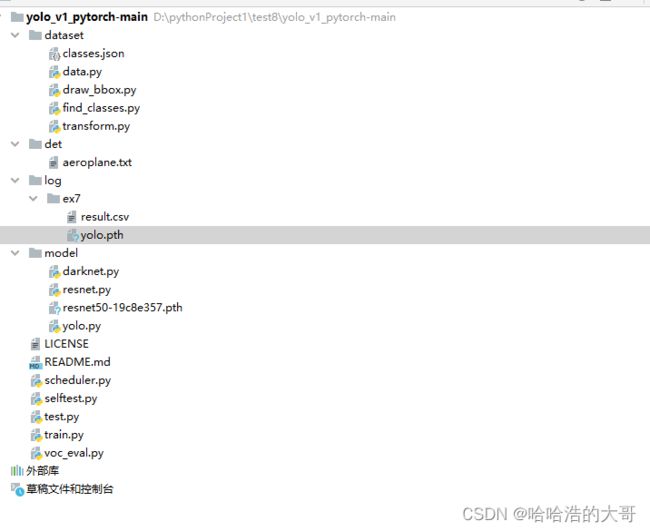

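I trained the model myself. Its convolutional front end (the backbone) is ResNet-50, and training starts from the official pretrained ResNet-50 weights, so the model is fairly large.

The trained weights alone take up about 900 MB. All of the materials are available here:

Link: https://pan.baidu.com/s/1O31dOYinjjD5wawIhSxtIQ

Extraction code: 2022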

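The size of the whole project is shown below; put simply, almost all of the space is taken up by the model weights.

All files of the deployed project:

Results:

I have commented all of the code. The main modules are as follows: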

find_classes.py

```python
from dataset.data import xml2dict
import xml.etree.ElementTree as ET
from tqdm import tqdm
import json
import os

# This module collects every class name that appears in the VOC annotation
# files and stores a {class_name: index} mapping as JSON.
json_path = './classes.json'  # output file: class name -> index
root = r'G:\数据集\voc\VOCtest_06-Nov-2007\VOCdevkit\VOC2007'

annotation_root = os.path.join(root, 'Annotations')  # annotation folder
annotation_list = os.listdir(annotation_root)  # annotation file names
annotation_list = [os.path.join(annotation_root, a) for a in annotation_list]  # full paths

s = set()  # a set keeps each class name only once
for annotation in tqdm(annotation_list):
    xml = ET.parse(annotation).getroot()
    data = xml2dict(xml)['object']  # all objects in this annotation file
    if isinstance(data, list):  # several <object> entries -> list of dicts
        for d in data:
            s.add(d['name'])
    else:  # a single <object> entry -> one dict
        s.add(data['name'])

s = sorted(s)  # set -> sorted list, so the indices are reproducible
data = {value: i for i, value in enumerate(s)}  # class name -> index

with open(json_path, 'w') as f:
    json.dump(data, f)  # write the mapping to the JSON file
```
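A minimal, self-contained sketch of the same idea using only the standard library. It swaps the post's `xml2dict` for `ElementTree.findall('object')`, which always returns a list, so the single-object special case disappears; the two annotation strings are made up for illustration.

```python
import json
import xml.etree.ElementTree as ET

# Two hypothetical VOC-style annotations (made up for illustration).
ANNOTATIONS = [
    "<annotation><object><name>dog</name></object>"
    "<object><name>person</name></object></annotation>",
    "<annotation><object><name>cat</name></object></annotation>",
]

def build_class_map(xml_strings):
    """Collect every <object><name> and map the sorted names to 0..N-1."""
    names = set()
    for text in xml_strings:
        root = ET.fromstring(text)
        # findall returns a list whether the file has one object or many
        for obj in root.findall('object'):
            names.add(obj.find('name').text)
    return {name: i for i, name in enumerate(sorted(names))}

class_map = build_class_map(ANNOTATIONS)
print(json.dumps(class_map))  # {"cat": 0, "dog": 1, "person": 2}
```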

transform.py

```python
import torch
import torchvision
import random

# Image/label transforms. Every transform takes (image, label) and returns both,
# so that geometric changes to the image are applied to the boxes as well.


class Compose:
    def __init__(self, transforms):
        self.transforms = transforms  # list of transforms

    def __call__(self, image, label):
        for t in self.transforms:  # apply the transforms in order
            image, label = t(image, label)
        return image, label


class ToTensor:  # type conversion
    def __init__(self):
        self.totensor = torchvision.transforms.ToTensor()

    def __call__(self, image, label):
        image = self.totensor(image)  # PIL image -> float tensor [3, H, W] in [0, 1]
        label = torch.tensor(label)
        return image, label


class RandomHorizontalFlip:
    def __init__(self, p=0.5):
        self.p = p

    def __call__(self, image, label):
        """
        :param label: xmin, ymin, xmax, ymax, class
        After a horizontal flip (x -> width - x) the xmin and xmax columns trade places,
        giving xmax, ymin, xmin, ymax. YOLO works with (center_x, center_y, w, h), so the
        swapped order does not affect the loss or training, as long as w and h are
        computed with abs().
        """
        if random.random() < self.p:
            height, width = image.shape[-2:]  # e.g. image [3, 375, 500] -> 375, 500
            image = image.flip(-1)  # flip along the width axis
            bbox = label[:, :4]  # e.g. tensor([[300., 167., 397., 268.]])
            bbox[:, [0, 2]] = width - bbox[:, [0, 2]]
            label[:, :4] = bbox
            # e.g. label is now tensor([[200., 167., 103., 268., 10.]])
        return image, label
```
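To see why the swapped x columns are harmless, here is a small pure-Python check using the example box from the comments above. The helper names `hflip_box` and `to_cxcywh` are illustrative, not part of the original code.

```python
def hflip_box(box, width):
    """Flip one (xmin, ymin, xmax, ymax) box horizontally, as RandomHorizontalFlip does."""
    xmin, ymin, xmax, ymax = box
    # Same arithmetic as the post: x -> width - x for both x columns,
    # which leaves the two x values in swapped order.
    return (width - xmin, ymin, width - xmax, ymax)

def to_cxcywh(box):
    """Center/size form; abs() makes it independent of the column order."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2, (y0 + y1) / 2, abs(x1 - x0), abs(y1 - y0))

box = (300.0, 167.0, 397.0, 268.0)  # image width 500
flipped = hflip_box(box, 500)
print(flipped)             # (200.0, 167.0, 103.0, 268.0)
print(to_cxcywh(flipped))  # (151.5, 217.5, 97.0, 101.0)
```

The width and height are unchanged and the center moves to `500 - 348.5 = 151.5`, which is exactly what a flip should do.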

```python
class Resize:
    def __init__(self, image_size, keep_ratio=True):
        """
        :param image_size: int, side length of the square output
        keep_ratio = True:  scale by a single factor (aspect ratio kept), then zero-pad
                            the right and bottom up to image_size x image_size
        keep_ratio = False: stretch the image directly to image_size x image_size
        """
        self.image_size = image_size
        self.keep_ratio = keep_ratio

    def __call__(self, image, label):
        """
        :param image: tensor [3, h, w]
        :param label: xmin, ymin, xmax, ymax, class
        :return: image [3, image_size, image_size], label in the new pixel coordinates
        """
        # Every image is aligned to the top-left corner of an image_size x image_size (448 x 448) tensor
        h, w = tuple(image.size()[1:])
        label[:, [0, 2]] = label[:, [0, 2]] / w  # x as a fraction of the width
        label[:, [1, 3]] = label[:, [1, 3]] / h  # y as a fraction of the height
        if self.keep_ratio:
            r_h = min(self.image_size / h, self.image_size / w)
            r_w = r_h
        else:
            r_h = self.image_size / h
            r_w = self.image_size / w
        h, w = int(r_h * h), int(r_w * w)
        h, w = min(h, self.image_size), min(w, self.image_size)
        label[:, [0, 2]] = label[:, [0, 2]] * w  # back to pixels of the resized image
        label[:, [1, 3]] = label[:, [1, 3]] * h
        T = torchvision.transforms.Resize([h, w])
        # ZeroPad2d takes (left, right, top, bottom)
        Padding = torch.nn.ZeroPad2d((0, self.image_size - w, 0, self.image_size - h))
        image = Padding(T(image))
        assert list(image.size()) == [3, self.image_size, self.image_size]
        # e.g. 500 x 375 image, keep_ratio=True:
        # label -> tensor([[268.8000, 149.6320, 355.7120, 240.1280, 10.0000]])
        return image, label
```
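The label arithmetic in `Resize`, and the inverse scaling that test.py applies to map predictions back to the original image, can be checked without torch. This is a sketch with illustrative helper names (`resize_box`, `restore_box`); the keep_ratio=True call reproduces the numbers in the comment above for a 500×375 image.

```python
def resize_box(box, w, h, image_size=448, keep_ratio=False):
    """Where an (xmin, ymin, xmax, ymax) box lands after the Resize transform above."""
    if keep_ratio:
        r_h = r_w = min(image_size / h, image_size / w)  # one scale, then zero-pad
    else:
        r_h, r_w = image_size / h, image_size / w        # stretch to a square
    new_h = min(int(r_h * h), image_size)
    new_w = min(int(r_w * w), image_size)
    x0, y0, x1, y1 = box
    # normalize by the old size, then multiply by the new (unpadded) size
    return (x0 / w * new_w, y0 / h * new_h, x1 / w * new_w, y1 / h * new_h)

def restore_box(box, w, h, image_size=448):
    """Inverse used in test.py for keep_ratio=False: scale by original / image_size."""
    x0, y0, x1, y1 = box
    return (x0 * w / image_size, y0 * h / image_size,
            x1 * w / image_size, y1 * h / image_size)

box = (300.0, 167.0, 397.0, 268.0)
print(resize_box(box, 500, 375, keep_ratio=True))  # about (268.8, 149.632, 355.712, 240.128)
stretched = resize_box(box, 500, 375)
print(restore_box(stretched, 500, 375))             # back to about (300, 167, 397, 268)
```

Because the padding is added only on the right and bottom, it never moves a box; it only adds empty pixels. That is also why test.py's simple inverse is correct only for keep_ratio=False, the setting its CFG uses.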

data.py

```python
from dataset.transform import *
from torch.utils.data import Dataset
import xml.etree.ElementTree as ET
from PIL import Image
import numpy as np
import json
import os


def get_file_name(root, layout_txt):
    # Read root/layout_txt (one image id per line) and return the ids as a list,
    # skipping empty lines such as the trailing newline
    with open(os.path.join(root, layout_txt)) as f:
        return [line.strip() for line in f if line.strip()]


def xml2dict(xml):
    # Start from a skeleton such as
    # {'folder': None, 'filename': None, 'source': None, 'owner': None,
    #  'size': None, 'segmented': None, 'object': None}
    # and fill it recursively with the contents of the XML element.
    # A tag that occurs several times (e.g. <object>) becomes a list.
    def add(data, tag, value):
        if data[tag] is None:
            data[tag] = value
        elif isinstance(data[tag], list):
            data[tag].append(value)
        else:
            data[tag] = [data[tag], value]
        return data

    data = {c.tag: None for c in xml}
    for c in xml:
        if len(c) == 0:  # leaf element: store its text
            data = add(data, c.tag, c.text)
        else:  # element with children: recurse
            data = add(data, c.tag, xml2dict(c))
    return data


class VOC0712Dataset(Dataset):
    def __init__(self, root, class_path, transforms, mode, data_range=None, get_info=False):
        # label: xmin, ymin, xmax, ymax, class
        # root: dataset root(s); class_path: JSON file with the class -> index map;
        # transforms: image/label transforms; mode: 'train' or 'test'
        with open(class_path, 'r') as f:
            self.classes = json.load(f)  # load the class map

        main = os.path.join('ImageSets', 'Main')
        layout_txt = None
        if mode == 'train':
            # combine VOC2007 and VOC2012: train.txt and val.txt of each
            root = [root[0], root[0], root[1], root[1]]
            layout_txt = [os.path.join(main, 'train.txt'), os.path.join(main, 'val.txt'),
                          os.path.join(main, 'train.txt'), os.path.join(main, 'val.txt')]
        elif mode == 'test':
            if not isinstance(root, list):  # accept a single root as well
                root = [root]
            layout_txt = [os.path.join(main, 'test.txt')]
        assert layout_txt is not None, 'Unknown mode'  # mode must be 'train' or 'test'

        self.transforms = transforms
        self.get_info = get_info
        self.image_list = []
        self.annotation_list = []
        for r, txt in zip(root, layout_txt):
            names = get_file_name(r, txt)
            # path of every image
            self.image_list += [os.path.join(r, 'JPEGImages', t + '.jpg') for t in names]
            # path of the matching annotation (xml) file
            self.annotation_list += [os.path.join(r, 'Annotations', t + '.xml') for t in names]

        # data_range = [start, end], e.g. [200, 1000] keeps samples 200 .. 999
        if data_range is not None:
            self.image_list = self.image_list[data_range[0]: data_range[1]]
            self.annotation_list = self.annotation_list[data_range[0]: data_range[1]]

    def __len__(self):
        return len(self.annotation_list)  # number of samples

    def __getitem__(self, idx):
        image = Image.open(self.image_list[idx])  # load the image
        image_size = image.size  # original (width, height)
        # label: xmin, ymin, xmax, ymax, class, e.g. [[156. 97. 351. 270. 6.]]
        label = self.label_process(self.annotation_list[idx])
        if self.transforms is not None:  # apply the transforms
            image, label = self.transforms(image, label)
        if self.get_info:  # also return the image name and the original size
            return image, label, os.path.basename(self.image_list[idx]).split('.')[0], image_size
        return image, label

    def label_process(self, annotation):
        xml = ET.parse(annotation).getroot()
        data = xml2dict(xml)['object']
        # NOTE: the rest of this method (including a sample of the <object> format)
        # was cut off in the original post. The lines below are a reconstruction that
        # returns the documented format [[xmin, ymin, xmax, ymax, class], ...].
        if not isinstance(data, list):
            data = [data]
        label = []
        for obj in data:
            box = obj['bndbox']
            label.append([float(box['xmin']), float(box['ymin']),
                          float(box['xmax']), float(box['ymax']),
                          self.classes[obj['name']]])
        return np.array(label, dtype=np.float32)
```
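Since the end of `label_process` is missing from the post, here is an independent, standard-library-only sketch of the conversion it has to perform: from the `<object>` entries of a VOC annotation to `[xmin, ymin, xmax, ymax, class]` rows. The annotation string and the two-class map are made up, and `voc_rows` is a hypothetical helper, not part of the original code.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<annotation>
  <size><width>500</width><height>375</height></size>
  <object><name>dog</name>
    <bndbox><xmin>156</xmin><ymin>97</ymin><xmax>351</xmax><ymax>270</ymax></bndbox>
  </object>
</annotation>"""

CLASSES = {"cat": 0, "dog": 1}  # hypothetical class map

def voc_rows(xml_text, classes):
    """Return [[xmin, ymin, xmax, ymax, class_index], ...] for every <object>."""
    rows = []
    for obj in ET.fromstring(xml_text).findall("object"):
        bb = obj.find("bndbox")
        coords = [float(bb.find(k).text) for k in ("xmin", "ymin", "xmax", "ymax")]
        rows.append(coords + [float(classes[obj.find("name").text])])
    return rows

print(voc_rows(SAMPLE, CLASSES))  # [[156.0, 97.0, 351.0, 270.0, 1.0]]
```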

darknet.py

```python
import torch.nn as nn


def conv(in_ch, out_ch, k_size=3, stride=1, padding=1):
    # input channels, output channels, kernel size, stride, padding
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, k_size, stride, padding, bias=False),  # convolution
        nn.LeakyReLU(0.1)  # LeakyReLU activation with negative slope 0.1
    )


def make_layer(param):
    layers = []
    if not isinstance(param[0], list):  # accept a single layer spec as well as a list
        param = [param]
    for p in param:
        layers.append(conv(*p))
    return nn.Sequential(*layers)


class Block(nn.Module):
    def __init__(self, param, use_pool=True):
        super(Block, self).__init__()
        self.conv = make_layer(param)
        self.pool = nn.MaxPool2d(2)
        self.use_pool = use_pool  # whether to apply 2x2 max pooling after the convs

    def forward(self, x):
        x = self.conv(x)
        if self.use_pool:
            x = self.pool(x)
        return x


class DarkNet(nn.Module):
    def __init__(self):
        super(DarkNet, self).__init__()
        # each layer: [in_ch, out_ch, kernel, stride, padding]
        self.conv1 = Block([[3, 64, 7, 2, 3]])
        self.conv2 = Block([[64, 192, 3, 1, 1]])
        self.conv3 = Block([[192, 128, 1, 1, 0],
                            [128, 256, 3, 1, 1],
                            [256, 256, 1, 1, 0],
                            [256, 512, 3, 1, 1]])
        self.conv4 = Block([[512, 256, 1, 1, 0],
                            [256, 512, 3, 1, 1],
                            [512, 256, 1, 1, 0],
                            [256, 512, 3, 1, 1],
                            [512, 256, 1, 1, 0],
                            [256, 512, 3, 1, 1],
                            [512, 256, 1, 1, 0],
                            [256, 512, 3, 1, 1],
                            [512, 512, 1, 1, 0],
                            [512, 1024, 3, 1, 1]])
        self.conv5 = Block([[1024, 512, 1, 1, 0],
                            [512, 1024, 3, 1, 1],
                            [1024, 512, 1, 1, 0],
                            [512, 1024, 3, 1, 1],
                            [1024, 1024, 3, 1, 1],
                            [1024, 1024, 3, 2, 1]], False)  # no pooling; the last conv has stride 2
        self.conv6 = Block([[1024, 1024, 3, 1, 1],
                            [1024, 1024, 3, 1, 1]], False)
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # Kaiming (He) normal initialization; mode='fan_out' preserves the
                # magnitude of the variance in the backward pass
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='leaky_relu')

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.conv6(x)
        return x


if __name__ == "__main__":
    import torch
    x = torch.randn([1, 3, 448, 448])
    net = DarkNet()
    print(net)
    out = net(x)
    print(out.size())  # torch.Size([1, 1024, 7, 7])
```
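The `[1, 1024, 7, 7]` output size can be checked by hand with the standard output-size formula for convolution and pooling layers, `floor((n + 2p - k) / s) + 1`. A small sketch that traces a 448-pixel input through the blocks above; the stride-1 convs (3×3 with padding 1, 1×1 with padding 0) keep the size, so only the strided layers are traced:

```python
def conv_out(n, k, s, p):
    """Output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1

def darknet_sizes(n=448):
    """Spatial size after each DarkNet block defined above."""
    sizes = []
    n = conv_out(n, 7, 2, 3)   # conv1: 7x7 conv, stride 2
    n = conv_out(n, 2, 2, 0)   # 2x2 max pool
    sizes.append(n)
    for _ in range(3):         # conv2..conv4: stride-1 convs, then 2x2 max pool
        n = conv_out(n, 2, 2, 0)
        sizes.append(n)
    n = conv_out(n, 3, 2, 1)   # conv5: last 3x3 conv has stride 2, no pooling
    sizes.append(n)
    sizes.append(n)            # conv6: stride-1 convs only
    return sizes

print(darknet_sizes())  # [112, 56, 28, 14, 7, 7]
```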

yolo.py

```python
import numpy as np
from model.darknet import DarkNet
from model.resnet import resnet_1024ch
import torch
import torch.nn as nn
import torchvision


class yolo(nn.Module):
    def __init__(self, s, cell_out_ch, backbone_name, pretrain=None):
        """
        e.g. net = yolo(7, 2 * 5 + 20, 'resnet', pretrain=None)
        s: the image is divided into s x s grid cells (7 x 7)
        cell_out_ch: output channels per cell (B * 5 + num_classes = 30)
        backbone_name: 'darknet' or 'resnet'
        pretrain: path of the pretrained backbone weights, or None
        return: [batch, s * s, cell_out_ch]
        """
        super(yolo, self).__init__()
        self.s = s
        self.backbone = None
        self.conv = None
        if backbone_name == 'darknet':  # choose the backbone
            self.backbone = DarkNet()
        elif backbone_name == 'resnet':
            self.backbone = resnet_1024ch(pretrained=pretrain)
        self.backbone_name = backbone_name
        assert self.backbone is not None, 'Wrong backbone name'  # unknown backbone name
        self.fc = nn.Sequential(
            nn.Linear(1024 * s * s, 4096),  # fully connected layer
            nn.LeakyReLU(0.1),  # LeakyReLU activation
            nn.Dropout(0.5),  # reduce overfitting
            nn.Linear(4096, s * s * cell_out_ch)  # 7 * 7 * 30 outputs
        )

    def forward(self, x):
        batch_size = x.size(0)
        x = self.backbone(x)  # [1, 3, 448, 448] -> [1, 1024, 7, 7]
        x = torch.flatten(x, 1)  # flatten every dim after the batch dim -> [1, 50176]
        x = self.fc(x)  # -> [1, 1470]
        x = x.view(batch_size, self.s ** 2, -1)  # -> [1, 49, 30]
        return x
```
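The head flattens the 1024×7×7 backbone map (50,176 values) and maps it to 7×7×30 = 1,470 numbers, viewed as `[batch, 49, 30]`. Going by how the loss code below slices each cell (`input[:, :b * 5].reshape(-1, b, 5)`), the 30 channels of a cell are B×(c_x, c_y, w, h, conf) followed by the 20 class scores, and cells are numbered row-major. A small sketch of that layout; the channel names are illustrative.

```python
S, B, NUM_CLASSES = 7, 2, 20  # the values used for VOC in train.py

def cell_layout(b=B, num_classes=NUM_CLASSES):
    """Channel names of one cell, in the order the loss code slices them."""
    names = []
    for j in range(b):
        names += [f"box{j}_{k}" for k in ("cx", "cy", "w", "h", "conf")]
    names += [f"class{c}" for c in range(num_classes)]
    return names

def cell_index(row, col, s=S):
    """Grid cells are numbered row-major: index = row * s + col."""
    return row * s + col

layout = cell_layout()
print(1024 * S * S)                      # 50176
print(len(layout), S * S * len(layout))  # 30 1470
print(layout[:6])  # ['box0_cx', 'box0_cy', 'box0_w', 'box0_h', 'box0_conf', 'box1_cx']
print(cell_index(3, 4))                  # 25
```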

```python
class yolo_loss:
    def __init__(self, device, s, b, image_size, num_classes):
        # e.g. yolo_loss('cpu', 2, 2, image_size, 2)
        # device: 'cpu' or 'cuda:0'; s: the image is split into s x s cells;
        # b: boxes predicted per cell; image_size: input image side length;
        # num_classes: number of classes
        self.device = device
        self.s = s
        self.b = b
        self.image_size = image_size
        self.num_classes = num_classes
        self.batch_size = 0

    def __call__(self, input, target):
        """
        :param input: yolo net output, tensor [batch, s*s, b*5 + n_class];
                      per cell: b * (c_x, c_y, w, h, obj_conf), class1_p, class2_p, ...
        :param target: dataset labels, one tensor [n_bbox, 5] per image:
                       xmin, ymin, xmax, ymax, class
        :return: total, xy, wh, conf and class losses, each averaged over the batch
        The targets are first converted to "grid type": a list of s*s entries
        (cell index 0 .. s*s-1, e.g. 0 .. 48 for s = 7), each either None or a tensor
        of c_x(%), c_y(%), w(%), h(%), class(int) for the boxes centered in that cell.
        """
        self.batch_size = input.size(0)
        # label preprocessing: xmin, ymin, xmax, ymax, class -> grid-type c_x, c_y, w, h, class
        target = [self.label_direct2grid(target[i]) for i in range(self.batch_size)]
        # Match predictors to targets by IoU. Taking the predictors as the reference,
        # each predictor is responsible for exactly one target, provided that the
        # center of at least one target lies in the predictor's grid cell.
        match = []
        conf = []  # IoU of each matched pair, used as the confidence target
        for i in range(self.batch_size):
            # input[i]: [s*s, (4 + 1) * b + num_classes]
            m, c = self.match_pred_target(input[i], target[i])
            match.append(m)
            conf.append(c)
        loss = torch.zeros([self.batch_size], dtype=torch.float, device=self.device)
        xy_loss = torch.zeros_like(loss)
        wh_loss = torch.zeros_like(loss)
        conf_loss = torch.zeros_like(loss)
        class_loss = torch.zeros_like(loss)
        for i in range(self.batch_size):
            loss[i], xy_loss[i], wh_loss[i], conf_loss[i], class_loss[i] = \
                self.compute_loss(input[i], target[i], match[i], conf[i])
        return torch.mean(loss), torch.mean(xy_loss), torch.mean(wh_loss), torch.mean(conf_loss), torch.mean(class_loss)

    def label_direct2grid(self, label):
        """
        :param label: dataset type, tensor [n_bbox, 5]: xmin, ymin, xmax, ymax, class
        :return: grid type, a list of s*s entries; None if no box center falls in the cell
        Each box is converted to c_x, c_y, w, h, class and assigned to the cell that
        contains its center; c_x, c_y become fractions of the cell and w, h fractions
        of the image.
        """
        output = [None for _ in range(self.s ** 2)]
        size = self.image_size // self.s  # side length of one grid cell in pixels
        n_bbox = label.size(0)  # number of boxes
        label_c = torch.zeros_like(label)
        label_c[:, 0] = (label[:, 0] + label[:, 2]) / 2  # center x
        label_c[:, 1] = (label[:, 1] + label[:, 3]) / 2  # center y
        label_c[:, 2] = torch.abs(label[:, 0] - label[:, 2])  # width (abs: flipped boxes)
        label_c[:, 3] = torch.abs(label[:, 1] - label[:, 3])  # height
        label_c[:, 4] = label[:, 4]  # class
        idx_x = [int(label_c[i][0]) // size for i in range(n_bbox)]  # grid column
        idx_y = [int(label_c[i][1]) // size for i in range(n_bbox)]  # grid row
        label_c[:, 0] = torch.div(torch.fmod(label_c[:, 0], size), size)
        label_c[:, 1] = torch.div(torch.fmod(label_c[:, 1], size), size)
        label_c[:, 2] = torch.div(label_c[:, 2], self.image_size)
        label_c[:, 3] = torch.div(label_c[:, 3], self.image_size)
        for i in range(n_bbox):  # place each box in its cell
            idx = idx_y[i] * self.s + idx_x[i]  # row-major cell index
            if output[idx] is None:
                output[idx] = torch.unsqueeze(label_c[i], dim=0)
            else:
                output[idx] = torch.cat([output[idx], torch.unsqueeze(label_c[i], dim=0)], dim=0)
        # e.g. s = 2, image_size = 448: [200., 200., 353., 300., 1.]
        #   -> cell 3: [0.2344, 0.1161, 0.3415, 0.2232, 1.0000]
        return output

    def match_pred_target(self, input, target):
        # input: [s*s, (4 + 1) * b + num_classes]; target: grid type (see label_direct2grid)
        match = []
        conf = []  # IoU of each matched pair
        with torch.no_grad():
            # [s*s, b*5] -> [s*s, b, 5]. Sigmoid so that the boxes are read the same way
            # as in compute_loss and output_process (the original post used raw outputs here).
            input_bbox = torch.sigmoid(input[:, :self.b * 5].reshape(-1, self.b, 5))
            # IoU matrix [b, n_targets] for every cell that has targets, else None
            ious = [match_get_iou(input_bbox[i], target[i], self.s, i)
                    for i in range(self.s ** 2)]
        for iou in ious:
            if iou is None:
                match.append(None)
                conf.append(None)
                continue
            keep = np.ones([iou.shape[1]], dtype=bool)  # targets not yet assigned
            m = []
            c = []
            for i in range(self.b):
                if not np.any(keep):  # every target is already assigned
                    break
                # best still-unassigned target for predictor i (index into the full list)
                j = int(np.argmax(np.where(keep, iou[i], -1.0)))
                m.append(j)
                c.append(float(iou[i][j]))
                keep[j] = False
            match.append(m)
            conf.append(c)
        # format: one entry per cell, None or a list of target indices,
        # e.g. match: [[1, 0], None, [0], [0, 1]], conf holds the matching IoUs
        return match, conf

    def compute_loss(self, input, target, match, conf):
        # loss of one image
        ce_loss = nn.CrossEntropyLoss()
        input_bbox = input[:, :self.b * 5].reshape(-1, self.b, 5)
        input_class = input[:, self.b * 5:].reshape(-1, self.num_classes)
        input_bbox = torch.sigmoid(input_bbox)
        loss = torch.zeros([self.s ** 2], dtype=torch.float, device=self.device)
        xy_loss = torch.zeros_like(loss)
        wh_loss = torch.zeros_like(loss)
        conf_loss = torch.zeros_like(loss)
        class_loss = torch.zeros_like(loss)
        for i in range(self.s ** 2):
            # l[0] xy_loss, l[1] wh_loss, l[2] conf_loss, l[3] class_loss
            l = torch.zeros([4], dtype=torch.float, device=self.device)
            if target[i] is None:
                # negative cell: push every confidence towards 0, λ_noobj = 0.5
                obj_conf_target = torch.zeros([self.b], dtype=torch.float, device=self.device)
                l[2] = torch.sum(torch.mul(0.5, torch.pow(input_bbox[i, :, 4] - obj_conf_target, 2)))
            else:
                # λ_coord = 5. Each predictor is compared with its matched target; with b = 2
                # and a single target, the length-1 index list broadcasts over both predictors.
                l[0] = torch.mul(5, torch.sum(torch.pow(input_bbox[i, :, 0] - target[i][match[i], 0], 2) +
                                              torch.pow(input_bbox[i, :, 1] - target[i][match[i], 1], 2)))
                l[1] = torch.mul(5, torch.sum(torch.pow(torch.sqrt(input_bbox[i, :, 2]) -
                                                        torch.sqrt(target[i][match[i], 2]), 2) +
                                              torch.pow(torch.sqrt(input_bbox[i, :, 3]) -
                                                        torch.sqrt(target[i][match[i], 3]), 2)))
                obj_conf_target = torch.tensor(conf[i], dtype=torch.float, device=self.device)
                l[2] = torch.sum(torch.pow(input_bbox[i, :, 4] - obj_conf_target, 2))
                # the cell's class logits against the class of every target in the cell
                l[3] = ce_loss(input_class[i].unsqueeze(dim=0).repeat(target[i].size(0), 1),
                               target[i][:, 4].long())
            loss[i] = torch.sum(l)
            xy_loss[i] = torch.sum(l[0])
            wh_loss[i] = torch.sum(l[1])
            conf_loss[i] = torch.sum(l[2])
            class_loss[i] = torch.sum(l[3])
        return torch.sum(loss), torch.sum(xy_loss), torch.sum(wh_loss), torch.sum(conf_loss), torch.sum(class_loss)
```
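Written out as formulas, `compute_loss` computes the following for one image. This is my reading of the code above, not the exact loss of the YOLOv1 paper (the paper uses squared error for the class term and applies the coordinate terms only to the one responsible predictor). Hats denote sigmoid outputs of predictor $j$ in cell $i$, $m(j)$ is the target matched to predictor $j$, and $\mathrm{IoU}_j$ is the IoU stored for that match:

$$
\mathcal{L}_i^{\text{noobj}} = 0.5\sum_{j=1}^{B}\hat C_{ij}^{2}
$$

$$
\mathcal{L}_i^{\text{obj}} = 5\sum_{j=1}^{B}\Big[(\hat x_{ij}-x_{m(j)})^2+(\hat y_{ij}-y_{m(j)})^2\Big]
+ 5\sum_{j=1}^{B}\Big[\big(\sqrt{\hat w_{ij}}-\sqrt{w_{m(j)}}\big)^2+\big(\sqrt{\hat h_{ij}}-\sqrt{h_{m(j)}}\big)^2\Big]
+ \sum_{j=1}^{B}\big(\hat C_{ij}-\mathrm{IoU}_{j}\big)^2
+ \frac{1}{n_i}\sum_{k=1}^{n_i}\mathrm{CE}\big(p_i,\,c_k\big)
$$

$$
\mathcal{L}_{\text{image}} = \sum_{i=1}^{S^2}\mathcal{L}_i,\qquad
\mathcal{L} = \frac{1}{N}\sum_{n=1}^{N}\mathcal{L}_{\text{image},n}
$$

Here $p_i$ are the class logits of cell $i$ (shared by its $B$ predictors, with no sigmoid applied in the loss), $c_k$ are the classes of the $n_i$ targets centred in cell $i$, and $N$ is the batch size. With $B = 2$ and a single target in a cell, the length-1 index and IoU lists broadcast, so both predictors are compared with that target and both confidence targets equal predictor 0's IoU.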

```python
def cxcywh2xyxy(bbox):
    """
    :param bbox: array [n, 5]: c_x, c_y, w, h, c (fractions of the image)
    Converts columns 0-3 to xmin, ymin, xmax, ymax in place and returns bbox.
    """
    bbox[:, 0] = bbox[:, 0] - bbox[:, 2] / 2  # xmin = c_x - w / 2
    bbox[:, 1] = bbox[:, 1] - bbox[:, 3] / 2  # ymin = c_y - h / 2
    bbox[:, 2] = bbox[:, 0] + bbox[:, 2]  # xmax = xmin + w
    bbox[:, 3] = bbox[:, 1] + bbox[:, 3]  # ymax = ymin + h
    # e.g. [0.225, 0.12, 0.22, 0.3, 0.35] -> [0.115, -0.03, 0.335, 0.27, 0.35]
    return bbox


def match_get_iou(bbox1, bbox2, s, idx):
    """
    IoU between the predictions and the targets of grid cell idx.
    :param bbox1: predictions, tensor [b, 5]: c_x, c_y (fractions of the cell),
                  w, h (fractions of the image), conf
    :param bbox2: targets, tensor [n, 5], same layout with the class in the last column
    :return: IoU matrix [b, n], or None if the cell has no target
    """
    if bbox1 is None or bbox2 is None:  # no target in this cell
        return None
    bbox1 = np.array(bbox1.cpu())
    bbox2 = np.array(bbox2.cpu())
    # c_x, c_y: fraction of the cell -> fraction of the whole image
    bbox1[:, 0] = bbox1[:, 0] / s
    bbox1[:, 1] = bbox1[:, 1] / s
    bbox2[:, 0] = bbox2[:, 0] / s
    bbox2[:, 1] = bbox2[:, 1] / s
    # add the top-left corner of the cell (row-major index idx) to get full-image coordinates
    grid_pos = [(j / s, i / s) for i in range(s) for j in range(s)]
    bbox1[:, 0] = bbox1[:, 0] + grid_pos[idx][0]
    bbox1[:, 1] = bbox1[:, 1] + grid_pos[idx][1]
    bbox2[:, 0] = bbox2[:, 0] + grid_pos[idx][0]
    bbox2[:, 1] = bbox2[:, 1] + grid_pos[idx][1]
    bbox1 = cxcywh2xyxy(bbox1)
    bbox2 = cxcywh2xyxy(bbox2)
    return get_iou(bbox1, bbox2)
```
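The matching step in `match_pred_target` is a greedy assignment: predictors take turns in order, each picks the still-unassigned target with the highest IoU, and that IoU becomes its confidence target. A pure-Python sketch of that rule, on a made-up IoU matrix (`greedy_match` is an illustrative name):

```python
def greedy_match(iou):
    """iou[p][t]: IoU of predictor p with target t in one cell.
    Predictors take turns, in order, picking their best still-free target."""
    free = set(range(len(iou[0])))
    match, conf = [], []
    for row in iou:
        if not free:  # every target is already assigned
            break
        t = max(free, key=lambda k: row[k])
        match.append(t)
        conf.append(row[t])
        free.remove(t)
    return match, conf

print(greedy_match([[0.1, 0.5], [0.4, 0.3]]))  # ([1, 0], [0.5, 0.4])
print(greedy_match([[0.2], [0.6]]))            # ([0], [0.2])
```

The order matters: in the second call predictor 1 overlaps the only target better, but predictor 0 claims it first, so only predictor 0's IoU is recorded.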

```python
def get_iou(bbox1, bbox2):
    """
    Pairwise IoU (intersection over union) of two sets of boxes.
    :param bbox1: array [n1, >=4]: xmin, ymin, xmax, ymax, ...
    :param bbox2: array [n2, >=4]: xmin, ymin, xmax, ymax, ...
    :return: array [n1, n2] of IoU values
    """
    s1 = abs(bbox1[:, 2] - bbox1[:, 0]) * abs(bbox1[:, 3] - bbox1[:, 1])  # areas of bbox1
    s2 = abs(bbox2[:, 2] - bbox2[:, 0]) * abs(bbox2[:, 3] - bbox2[:, 1])  # areas of bbox2
    ious = []
    for i in range(bbox1.shape[0]):
        # intersection rectangle of box i with every box in bbox2
        xmin = np.maximum(bbox1[i, 0], bbox2[:, 0])
        ymin = np.maximum(bbox1[i, 1], bbox2[:, 1])
        xmax = np.minimum(bbox1[i, 2], bbox2[:, 2])
        ymax = np.minimum(bbox1[i, 3], bbox2[:, 3])
        in_w = np.maximum(xmax - xmin, 0)
        in_h = np.maximum(ymax - ymin, 0)
        in_s = in_w * in_h
        iou = in_s / (s1[i] + s2 - in_s)  # intersection / union, e.g. [0.13336225 0.2081377]
        ious.append(iou)
    return np.array(ious)
```
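For a single pair of boxes, the computation in `get_iou` reduces to a few lines of plain Python; a sketch (`iou_xyxy` is an illustrative name) with two made-up examples:

```python
def iou_xyxy(a, b):
    """IoU of two (xmin, ymin, xmax, ymax) boxes."""
    in_w = max(min(a[2], b[2]) - max(a[0], b[0]), 0)  # overlap width, 0 if disjoint
    in_h = max(min(a[3], b[3]) - max(a[1], b[1]), 0)  # overlap height, 0 if disjoint
    inter = in_w * in_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

print(iou_xyxy((0, 0, 2, 2), (1, 1, 3, 3)))  # 0.14285714285714285 (= 1 / 7)
print(iou_xyxy((0, 0, 1, 1), (2, 2, 3, 3)))  # 0.0
```

In the first case the overlap is a 1×1 square and the union is 4 + 4 - 1 = 7.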

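```python
def nms(bbox, conf_th, iou_th):
    """
    :param bbox: tensor [n, 7]: xmin, ymin, xmax, ymax, obj_conf, class_conf, class
    :return: kept boxes; column 4 is replaced by the score obj_conf * class_conf
    """
    bbox = np.array(bbox.cpu())
    bbox[:, 4] = bbox[:, 4] * bbox[:, 5]  # class-specific score
    bbox = bbox[bbox[:, 4] > conf_th]  # drop low-score boxes
    order = np.argsort(-bbox[:, 4])  # highest score first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)
        # suppress the remaining boxes that overlap box i by more than iou_th
        iou = get_iou(np.array([bbox[i]]), bbox[order[1:]])[0]
        inds = np.where(iou <= iou_th)[0]
        order = order[inds + 1]  # +1 because iou was computed against order[1:]
    return bbox[keep]
```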
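`nms` above is greedy and class-agnostic: it never looks at the class column, so boxes of different classes can suppress each other. The same loop in pure Python, on three made-up boxes (the function names are illustrative):

```python
def iou(a, b):
    """IoU of two (xmin, ymin, xmax, ymax) boxes."""
    in_w = max(min(a[2], b[2]) - max(a[0], b[0]), 0)
    in_h = max(min(a[3], b[3]) - max(a[1], b[1]), 0)
    inter = in_w * in_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def greedy_nms(boxes, scores, conf_th, iou_th):
    """Indices of the kept boxes, highest score first (class-agnostic)."""
    order = [k for k in range(len(boxes)) if scores[k] > conf_th]  # score threshold
    order.sort(key=lambda k: -scores[k])                         # best first
    keep = []
    while order:
        i = order.pop(0)  # best remaining box
        keep.append(i)
        # drop the remaining boxes that overlap it by more than iou_th
        order = [k for k in order if iou(boxes[i], boxes[k]) <= iou_th]
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores, 0.2, 0.5))  # [0, 2]
```

Boxes 0 and 1 overlap with IoU 81/119 ≈ 0.68, so box 1 is suppressed at `iou_th = 0.5` but would survive at 0.7.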

```python
# YOLO post-processing
def output_process(output, image_size, s, b, conf_th, iou_th):
    """
    :param output: raw network output for a batch, e.g. torch.Size([1, 49, 30])
    :param image_size: input image size (448)
    :param s: the image is divided into s x s cells
    :param b: boxes predicted per grid cell (2)
    :param conf_th: score threshold (obj_conf * class_conf) used in nms
    :param iou_th: IoU threshold used in nms
    :return: tensor [batch, n_kept, 7], one row per box:
             xmin, ymin, xmax, ymax, obj_conf * class_conf, class_conf, class
    """
    batch_size = output.size(0)
    size = image_size // s  # side length of one cell in pixels
    output = torch.sigmoid(output)
    # Get class: best class and its probability per cell, repeated for each of the b boxes
    classes_conf, classes = torch.max(output[:, :, b * 5:], dim=2)
    classes = classes.unsqueeze(dim=2).repeat(1, 1, b).unsqueeze(dim=3)
    classes_conf = classes_conf.unsqueeze(dim=2).repeat(1, 1, b).unsqueeze(dim=3)
    bbox = output[:, :, :b * 5].reshape(batch_size, -1, b, 5)
    bbox = torch.cat([bbox, classes_conf, classes], dim=3)  # [batch, s*s, b, 7]
    # To pixels: c_x, c_y are fractions of a cell, w, h fractions of the image
    bbox[:, :, :, [0, 1]] = bbox[:, :, :, [0, 1]] * size
    bbox[:, :, :, [2, 3]] = bbox[:, :, :, [2, 3]] * image_size
    # top-left corner (x, y) of every cell, row-major
    grid_pos = [(j * image_size // s, i * image_size // s) for i in range(s) for j in range(s)]

    def to_direct(cells):  # cells: [s*s, b, 7] for one image
        for i in range(s ** 2):
            cells[i, :, 0] = cells[i, :, 0] + grid_pos[i][0]
            cells[i, :, 1] = cells[i, :, 1] + grid_pos[i][1]
        return cells

    bbox_direct = torch.stack([to_direct(cells) for cells in bbox])
    bbox_direct = bbox_direct.reshape(batch_size, -1, 7)
    # cxcywh -> xyxy
    bbox_direct[:, :, 0] = bbox_direct[:, :, 0] - bbox_direct[:, :, 2] / 2
    bbox_direct[:, :, 1] = bbox_direct[:, :, 1] - bbox_direct[:, :, 3] / 2
    bbox_direct[:, :, 2] = bbox_direct[:, :, 0] + bbox_direct[:, :, 2]
    bbox_direct[:, :, 3] = bbox_direct[:, :, 1] + bbox_direct[:, :, 3]
    # clip to the image
    bbox_direct[:, :, 0:2] = bbox_direct[:, :, 0:2].clamp(min=0)
    bbox_direct[:, :, 2:4] = bbox_direct[:, :, 2:4].clamp(max=image_size)
    bbox = [torch.tensor(nms(bb, conf_th, iou_th)) for bb in bbox_direct]
    # stacking needs the same number of kept boxes per image (always true for batch size 1)
    bbox = torch.stack(bbox)
    return bbox
```
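For one predicted box, the decoding in `output_process` amounts to: cell corner plus the in-cell offset for the center, image-relative width and height, then clipping to the image. A pure-Python sketch (`decode_box` is an illustrative name) for a made-up prediction in cell 24 of a 7×7 grid:

```python
def decode_box(cell_idx, cx, cy, w, h, s=7, image_size=448):
    """Turn one sigmoid-ed prediction of cell `cell_idx` into pixel xmin, ymin, xmax, ymax."""
    size = image_size // s                   # cell side in pixels (64 for 448 / 7)
    row, col = divmod(cell_idx, s)           # cells are numbered row-major
    center_x = col * size + cx * size        # cell corner + offset inside the cell
    center_y = row * size + cy * size
    bw, bh = w * image_size, h * image_size  # w, h are fractions of the whole image
    x0, y0 = max(center_x - bw / 2, 0), max(center_y - bh / 2, 0)
    x1, y1 = min(center_x + bw / 2, image_size), min(center_y + bh / 2, image_size)
    return (x0, y0, x1, y1)

print(decode_box(24, 0.5, 0.5, 0.25, 0.5))  # (168.0, 112.0, 280.0, 336.0)
```

Cell 24 is row 3, column 3, so its center sits at (224, 224), the middle of the image.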

```python
if __name__ == "__main__":
    import torch

    # Test yolo
    x = torch.randn([1, 3, 448, 448])
    # cell_out_ch = B * 5 + n_classes
    net = yolo(7, 2 * 5 + 20, 'resnet', pretrain=None)
    # net = yolo(7, 2 * 5 + 20, 'darknet', pretrain=None)
    out = net(x)
    print(out.size())  # torch.Size([1, 49, 30])

    # Test yolo_loss with a toy setting: s = 2, b = 2, 2 classes
    s = 2
    b = 2
    image_size = 448  # h = w
    input = torch.tensor([[[0.45, 0.24, 0.22, 0.3, 0.35, 0.54, 0.66, 0.7, 0.8, 0.8, 0.17, 0.9],
                           [0.37, 0.25, 0.5, 0.3, 0.36, 0.14, 0.27, 0.26, 0.33, 0.36, 0.13, 0.9],
                           [0.12, 0.8, 0.26, 0.74, 0.8, 0.13, 0.83, 0.6, 0.75, 0.87, 0.75, 0.24],
                           [0.1, 0.27, 0.24, 0.37, 0.34, 0.15, 0.26, 0.27, 0.37, 0.34, 0.16, 0.93]]])
    target = [torch.tensor([[200, 200, 353, 300, 1],
                            [220, 230, 353, 300, 1],
                            [15, 330, 200, 400, 0],
                            [100, 50, 198, 223, 1],
                            [30, 60, 150, 240, 1]], dtype=torch.float)]
    criterion = yolo_loss('cpu', s, b, image_size, 2)
    loss = criterion(input, target)
    print(loss)
```
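To see what `label_direct2grid` produces for the toy test above (s = 2, 448×448 image, so each cell is 224 pixels), here is the same encoding in pure Python; `encode_box` is an illustrative name, and the five boxes are copied from the test:

```python
TARGETS = [(200, 200, 353, 300, 1), (220, 230, 353, 300, 1), (15, 330, 200, 400, 0),
           (100, 50, 198, 223, 1), (30, 60, 150, 240, 1)]  # boxes from the test above

def encode_box(box, s, image_size):
    """(xmin, ymin, xmax, ymax, cls) -> (cell_index, [c_x, c_y, w, h, cls])."""
    x0, y0, x1, y1, cls = box
    size = image_size // s                 # cell side in pixels
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2  # box center in pixels
    col, row = int(cx) // size, int(cy) // size
    return row * s + col, [
        (cx % size) / size,         # center x as a fraction of the cell
        (cy % size) / size,         # center y as a fraction of the cell
        abs(x1 - x0) / image_size,  # width as a fraction of the image
        abs(y1 - y0) / image_size,  # height as a fraction of the image
        cls,
    ]

idx, enc = encode_box(TARGETS[0], s=2, image_size=448)
print(idx, [round(v, 4) for v in enc])              # 3 [0.2344, 0.1161, 0.3415, 0.2232, 1]
print([encode_box(t, 2, 448)[0] for t in TARGETS])  # [3, 3, 2, 0, 0]
```

So cell 0 holds two targets, cell 1 none, cell 2 one and cell 3 two, which is the shape of the `match` example in `match_pred_target` (`[[1, 0], None, [0], [0, 1]]`).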

train.py

```python
from dataset.data import VOC0712Dataset
from dataset.transform import *
from model.yolo import yolo, yolo_loss
from scheduler import Scheduler
import torch
from torch.utils.data import DataLoader
from torch import optim
from torch.cuda.amp import autocast, GradScaler
from tqdm import tqdm
import pandas as pd
import json
import os
import warnings


class CFG:  # training settings
    device = 'cuda:0' if torch.cuda.is_available() else 'cpu'  # GPU if available, else CPU
    # dataset roots: VOC2007 trainval and VOC2012 trainval
    root0712 = [r'G:\数据集\voc\VOCtrainval_06-Nov-2007\VOCdevkit\VOC2007', r'G:\数据集\voc\VOCtrainval_11-May-2012\VOCdevkit\VOC2012']
    class_path = r'./dataset/classes.json'  # class -> index map
    model_root = r'D:\pythonProject1\test8\yolo_v1_pytorch-main\log\ex7'  # output folder for weights and logs (create it manually)
    model_path = None  # saved weights to resume from, or None
    backbone = 'resnet'  # backbone with pretrained weights
    pretrain = 'model/resnet50-19c8e357.pth'  # official pretrained ResNet-50 weights
    with_amp = True  # True: automatic mixed precision, False: full precision
    S = 7  # 7 x 7 grid cells
    B = 2  # boxes predicted per grid cell
    image_size = 448  # input image size
    transforms = Compose([  # image/label preprocessing
        ToTensor(),
        RandomHorizontalFlip(0.5),
        Resize(448, keep_ratio=False)
    ])
    start_epoch = 0
    epoch = 135
    batch_size = 16
    num_workers = 2
    # the backbone stays frozen for the first freeze_backbone_till epochs; -1 disables freezing
    freeze_backbone_till = 30
    scheduler_params = {
        'lr_start': 1e-3 / 4,
        'step_warm_ep': 10,
        'step_1_lr': 1e-2 / 4,
        'step_1_ep': 75,
        'step_2_lr': 1e-3 / 4,
        'step_2_ep': 40,
        'step_3_lr': 1e-4 / 4,
        'step_3_ep': 10
    }
    momentum = 0.9
    weight_decay = 0.0005
```
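The `Scheduler` class imported from `scheduler` is not shown in this post, so its exact behavior is unknown. Purely as an illustration of what `scheduler_params` appears to describe, here is a hypothetical per-epoch schedule that assumes a linear warm-up from `lr_start` to `step_1_lr` over `step_warm_ep` epochs, followed by three constant steps. What is certain from the parameters is that the epoch counts add up to 10 + 75 + 40 + 10 = 135, the value of `CFG.epoch`.

```python
PARAMS = {
    'lr_start': 1e-3 / 4, 'step_warm_ep': 10,
    'step_1_lr': 1e-2 / 4, 'step_1_ep': 75,
    'step_2_lr': 1e-3 / 4, 'step_2_ep': 40,
    'step_3_lr': 1e-4 / 4, 'step_3_ep': 10,
}

def lr_schedule(p):
    """Hypothetical per-epoch learning rates: linear warm-up, then three constant steps."""
    lrs = []
    for e in range(p['step_warm_ep']):  # assumed warm-up: lr_start -> step_1_lr
        t = e / p['step_warm_ep']
        lrs.append(p['lr_start'] + t * (p['step_1_lr'] - p['lr_start']))
    for k in (1, 2, 3):  # constant learning rate within each step
        lrs += [p[f'step_{k}_lr']] * p[f'step_{k}_ep']
    return lrs

lrs = lr_schedule(PARAMS)
print(len(lrs))  # 135
print(lrs[0], lrs[10], lrs[85], lrs[125])  # start of warm-up, step 1, step 2, step 3
```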

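```python
def collate_fn(batch):
    # [(image, label), ...] -> ((image, ...), (label, ...)); the labels stay a tuple
    # because each image has a different number of boxes
    return tuple(zip(*batch))


class AverageMeter:
    # running, sample-weighted average (e.g. of the loss over one epoch)
    def __init__(self):
        self.reset()

    def reset(self):
        self.val = 0
        self.avg = 0
        self.sum = 0
        self.count = 0

    def update(self, val, n=1):
        self.val = val
        self.sum += val * n
        self.count += n
        self.avg = self.sum / self.count
```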
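`collate_fn` is needed because PyTorch's default collate function tries to stack the label tensors of a batch, which fails when images contain different numbers of boxes. The custom version simply transposes the batch. A pure-Python illustration with placeholder strings in place of image tensors:

```python
def collate_fn(batch):
    # [(image, label), ...] -> ((image, ...), (label, ...))
    return tuple(zip(*batch))

# Two hypothetical samples: the first image has 2 boxes, the second has 1.
batch = [("img0", [[10, 20, 50, 60, 3], [5, 5, 30, 30, 7]]),
         ("img1", [[0, 0, 100, 100, 1]])]
images, labels = collate_fn(batch)
print(images)                    # ('img0', 'img1')
print([len(l) for l in labels])  # [2, 1]
```

The training loop then calls `torch.stack(images)` itself, since all images have the same size after `Resize`, and keeps the labels as a list.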

```python
def train():
    device = torch.device(CFG.device)
    print('Train:\nDevice:{}'.format(device))
    with open(CFG.class_path, 'r') as f:
        classes = json.load(f)  # class -> index map
    CFG.num_classes = len(classes)  # number of classes

    # datasets
    train_ds = VOC0712Dataset(CFG.root0712, CFG.class_path, CFG.transforms, 'train', data_range=[100, 2000])
    test_ds = VOC0712Dataset(CFG.root0712, CFG.class_path, CFG.transforms, 'test', data_range=[100, 1000])
    # data loaders
    train_dl = DataLoader(train_ds, batch_size=CFG.batch_size, shuffle=True,
                          num_workers=CFG.num_workers, collate_fn=collate_fn)
    test_dl = DataLoader(test_ds, batch_size=CFG.batch_size, shuffle=False,
                         num_workers=CFG.num_workers, collate_fn=collate_fn)

    # s: 7, cell_out_ch: 2 * (4 + 1) + 20, backbone_name: 'resnet', pretrain: pretrained weight file
    yolo_net = yolo(s=CFG.S, cell_out_ch=CFG.B * 5 + CFG.num_classes, backbone_name=CFG.backbone, pretrain=CFG.pretrain)
    yolo_net.to(device)
    if CFG.model_path is not None:  # resume from saved weights
        yolo_net.load_state_dict(torch.load(CFG.model_path, map_location=device))
    if CFG.freeze_backbone_till != -1:  # keep the pretrained backbone fixed at first
        print('Freeze Backbone')
        for param in yolo_net.backbone.parameters():
            param.requires_grad_(False)

    # SGD over all parameters (learning rate, momentum, weight decay). Frozen parameters
    # get no gradient, so SGD skips them until the backbone is unfrozen. Building the
    # optimizer only from the parameters that require grad at this point, as the original
    # post did, would leave the backbone out of the optimizer even after unfreezing.
    optimizer = optim.SGD(yolo_net.parameters(), lr=CFG.scheduler_params['lr_start'],
                          momentum=CFG.momentum, weight_decay=CFG.weight_decay)
    criterion = yolo_loss(CFG.device, CFG.S, CFG.B, CFG.image_size, len(train_ds.classes))  # loss
    scheduler = Scheduler(optimizer, **CFG.scheduler_params)
    scaler = GradScaler(enabled=CFG.with_amp)  # loss scaling for mixed precision
    for _ in range(CFG.start_epoch):
        scheduler.step()  # fast-forward the learning-rate schedule when resuming

    best_train_loss = 1e+9
    train_losses = []
    test_losses = []
    lrs = []
    for epoch in range(CFG.start_epoch, CFG.epoch):
        # from epoch freeze_backbone_till (30) on, train the whole model
        if CFG.freeze_backbone_till != -1 and epoch >= CFG.freeze_backbone_till:
            print('Unfreeze Backbone')
            for param in yolo_net.backbone.parameters():
                param.requires_grad_(True)
            CFG.freeze_backbone_till = -1

        # Train
        yolo_net.train()
        loss_score = AverageMeter()  # running average of the loss
        dl = tqdm(train_dl, total=len(train_dl))  # progress bar
        for images, labels in dl:
            batch_size = len(labels)
            images = torch.stack(images)  # stack into one [batch, 3, 448, 448] tensor
            images = images.to(device)
            labels = [label.to(device) for label in labels]
            optimizer.zero_grad()  # clear the old gradients
            if CFG.with_amp:  # mixed precision
                with autocast():
                    outputs = yolo_net(images)
                    loss, xy_loss, wh_loss, conf_loss, class_loss = criterion(outputs, labels)
                scaler.scale(loss).backward()
                scaler.step(optimizer)
                scaler.update()
            else:
                outputs = yolo_net(images)
                loss, xy_loss, wh_loss, conf_loss, class_loss = criterion(outputs, labels)
                loss.backward()
                optimizer.step()
            loss_score.update(loss.detach().item(), batch_size)
            dl.set_postfix(Mode='Train', AvgLoss=loss_score.avg, Loss=loss.detach().item(),
                           Epoch=epoch, LR=optimizer.param_groups[0]['lr'])  # progress-bar info
        lrs.append(optimizer.param_groups[0]['lr'])  # record the learning rate
        scheduler.step()  # update the learning rate
        train_losses.append(loss_score.avg)  # record the loss
        print('Train Loss: {:.4f}'.format(loss_score.avg))
        if best_train_loss > loss_score.avg:  # save the weights when the training loss improves
            print('Save yolo_net to {}'.format(os.path.join(CFG.model_root, 'yolo.pth')))
            torch.save(yolo_net.state_dict(), os.path.join(CFG.model_root, 'yolo.pth'))
            best_train_loss = loss_score.avg
        loss_score.reset()  # reset the running average

        with torch.no_grad():
            # Test
            yolo_net.eval()
            dl = tqdm(test_dl, total=len(test_dl))
            for images, labels in dl:
                batch_size = len(labels)
                images = torch.stack(images)
                images = images.to(device)
                labels = [label.to(device) for label in labels]
                outputs = yolo_net(images)
                loss, xy_loss, wh_loss, conf_loss, class_loss = criterion(outputs, labels)
                loss_score.update(loss.detach().item(), batch_size)
                dl.set_postfix(Mode='Test', AvgLoss=loss_score.avg, Loss=loss.detach().item(), Epoch=epoch)
        test_losses.append(loss_score.avg)
        print('Test Loss: {:.4f}'.format(loss_score.avg))
        df = pd.DataFrame({'Train Loss': train_losses, 'Test Loss': test_losses, 'LR': lrs})
        df.to_csv(os.path.join(CFG.model_root, 'result.csv'), index=True)  # save the loss/LR history as CSV


if __name__ == '__main__':
    warnings.filterwarnings('ignore')  # silence warnings
    train()
```

test.py

```python
from dataset.data import VOC0712Dataset, Compose, ToTensor, Resize
from dataset.draw_bbox import draw
from model.yolo import yolo, output_process
from voc_eval import voc_eval
import torch
import pandas as pd
from tqdm import tqdm
import json


class CFG:
    device = 'cuda:0' if torch.cuda.is_available() else 'cpu'
    root = r'G:\数据集\voc\VOCtrainval_06-Nov-2007\VOCdevkit\VOC2007'
    class_path = r'dataset/classes.json'
    model_path = r'D:\pythonProject1\test8\yolo_v1_pytorch-main\log\ex7\yolo.pth'  # trained weights
    detpath = r'det\{}.txt'  # one detection file per class
    annopath = r'G:\数据集\voc\VOCtrainval_06-Nov-2007\VOCdevkit\VOC2007\Annotations\{}.xml'
    imagesetfile = r'G:\数据集\voc\VOCtrainval_06-Nov-2007\VOCdevkit\VOC2007\ImageSets\Main\test.txt'
    classname = None
    test_range = [100, 150]  # evaluate samples 100 .. 149
    show_image = True  # draw the predictions and the ground truth
    get_ap = False  # compute per-class AP and mAP with voc_eval
    backbone = 'resnet'
    S = 7
    B = 2
    image_size = 448
    get_info = True  # the dataset also returns the image name and original size
    transforms = Compose([
        ToTensor(),
        Resize(448, keep_ratio=False)
    ])
    num_classes = 0
    conf_th = 0.2  # score threshold
    iou_th = 0.5  # NMS IoU threshold


def test():
    device = torch.device(CFG.device)
    print('Test:\nDevice:{}'.format(device))
    dataset = VOC0712Dataset(CFG.root, CFG.class_path, CFG.transforms, 'test',
                             data_range=CFG.test_range, get_info=CFG.get_info)
    with open(CFG.class_path, 'r') as f:
        classes = json.load(f)
    CFG.classname = list(classes.keys())
    CFG.num_classes = len(CFG.classname)
    yolo_net = yolo(s=CFG.S, cell_out_ch=CFG.B * 5 + CFG.num_classes, backbone_name=CFG.backbone)
    yolo_net.to(device)
    yolo_net.load_state_dict(torch.load(CFG.model_path, map_location=device))
    yolo_net.eval()  # inference mode: disables dropout in the head
    bboxes = []
    with torch.no_grad():
        for image, label, image_name, input_size in tqdm(dataset):
            image = image.unsqueeze(dim=0).to(device)
            output = yolo_net(image)
            output = output_process(output.cpu(), CFG.image_size, CFG.S, CFG.B, CFG.conf_th, CFG.iou_th)
            if CFG.show_image:
                draw(image.squeeze(dim=0), output.squeeze(dim=0), classes, show_conf=True)  # prediction
                draw(image.squeeze(dim=0), label, classes, show_conf=True)  # ground truth
            # map the boxes back to the original image size; input_size is PIL's (width, height)
            output[:, :, [0, 2]] = output[:, :, [0, 2]] * input_size[0] / CFG.image_size
            output[:, :, [1, 3]] = output[:, :, [1, 3]] * input_size[1] / CFG.image_size
            output = output.squeeze(dim=0).numpy().tolist()
            if len(output) > 0:
                # one row per detection: image name, score, xmin, ymin, xmax, ymax, class.
                # Column 4 already holds obj_conf * class_conf (set in nms); the original
                # post multiplied it by class_conf a second time.
                pred = [[image_name, output[i][4]] + output[i][:4] + [int(output[i][-1])]
                        for i in range(len(output))]
                bboxes += pred

    det_list = [[] for _ in range(CFG.num_classes)]  # detections grouped by class
    for b in bboxes:
        det_list[b[-1]].append(b[:-1])
    if CFG.get_ap:
        mean_ap = 0
        for idx in range(CFG.num_classes):
            # write "image_name score xmin ymin xmax ymax" lines for this class
            file_path = CFG.detpath.format(CFG.classname[idx])
            txt = '\n'.join([' '.join([str(i) for i in item]) for item in det_list[idx]])
            with open(file_path, 'w') as f:
                f.write(txt)
            rec, prec, ap = voc_eval(CFG.detpath, CFG.annopath, CFG.imagesetfile, CFG.classname[idx])
            print(rec)
            print(prec)
            print(ap)
            mean_ap += ap
        mean_ap /= CFG.num_classes
        print('mAP', mean_ap)


if __name__ == '__main__':
    test()
```
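The `voc_eval` module used above is not included in the post, so which AP variant it computes is an assumption here. As a reference point, this sketch shows the all-point interpolated AP used since PASCAL VOC 2010, the same idea as `voc_ap(..., use_07_metric=False)` in the widely copied py-faster-rcnn `voc_eval.py`: make precision non-increasing from right to left, then sum the rectangles where recall changes. The recall/precision lists are made up.

```python
def voc_ap(rec, prec):
    """All-point interpolated AP from recall/precision lists (recall ascending)."""
    mrec = [0.0] + list(rec) + [1.0]
    mpre = [0.0] + list(prec) + [0.0]
    # precision envelope: each point takes the best precision at equal or higher recall
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # area under the envelope, summed where recall changes
    ap = 0.0
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap

# Hypothetical ranking of 4 detections (TP, FP, TP, FP) against 2 ground-truth objects
rec = [0.5, 0.5, 1.0, 1.0]
prec = [1.0, 0.5, 2 / 3, 0.5]
print(voc_ap(rec, prec))  # about 0.8333 (= 0.5 * 1 + 0.5 * 2/3)
```

mAP, as printed by `test()`, is then the plain mean of the per-class APs.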

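My suggestion: go straight to the version by the blogger whose work I reproduced, download the code they uploaded to GitHub, open it in PyCharm, and read it alongside the comments I added to the main modules above (the original source has very few comments; I have commented almost all of it). That way it is easy to reproduce.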

Link: http://t.csdn.cn/OEHNb ("复现YOLO v1 PyTorch" [Reproducing YOLO v1 in PyTorch], Eclipse_777's blog on CSDN)