Deploying a Model with MNN on Android

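The project needs to deploy a speech model with MNN on Android, for features such as real-time speech-to-text. As a first step, I set up the environment and got a line-detection model running end to end.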

This post records the process and the problems I ran into.

Project environment:

1. Build MNN to produce the shared/static libraries (.so/.a)

The libraries were already built in the previous post; see "Android编译MNN" (Building MNN for Android).

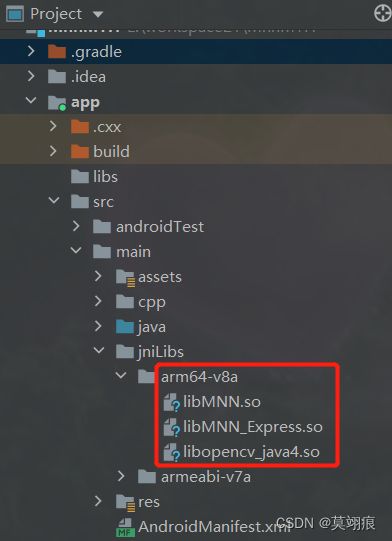

2. Set up the library files and header files

3. Configure CMake (CMakeLists.txt)

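For the meaning of each CMakeLists field, see "Android NDK项目CMakeLists.txt配置导入第三方库" (Importing third-party libraries via CMakeLists.txt in an Android NDK project).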

# For more information about using CMake with Android Studio, read the

# documentation: https://d.android.com/studio/projects/add-native-code.html

# Sets the minimum version of CMake required to build the native library.

cmake_minimum_required(VERSION 3.18.1)

# Declares and names the project.

project("mnnmyh")

aux_source_directory(. SRC_LIST) # all source files in the current directory, not including subdirectories

add_library( # Sets the name of the library.

mnnmyh

# Sets the library as a shared library.

SHARED

# Provides a relative path to your source file(s).

#native-lib.cpp)

${SRC_LIST})

#mnn

include_directories(${CMAKE_SOURCE_DIR}/MNN/)

include_directories(${CMAKE_SOURCE_DIR}/MNN/plugin)

add_library(libMNN SHARED IMPORTED)

set_target_properties(

libMNN

PROPERTIES IMPORTED_LOCATION

${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libMNN.so

)

include_directories(${CMAKE_SOURCE_DIR}/MNN/expr)

add_library(libMNN_Express SHARED IMPORTED)

set_target_properties(

libMNN_Express

PROPERTIES IMPORTED_LOCATION

${CMAKE_SOURCE_DIR}/../jniLibs/${ANDROID_ABI}/libMNN_Express.so

)

find_library( # Sets the name of the path variable.

log-lib

# Specifies the name of the NDK library that

# you want CMake to locate.

log)

target_link_libraries( # Specifies the target library.

mnnmyh

# Links the target library to the log library

# included in the NDK.

${log-lib}

libMNN_Express

libMNN

)

4. build.gradle configuration (app/build.gradle)

android {

compileSdk 31

defaultConfig {

... unrelated code omitted

externalNativeBuild {

cmake {

cppFlags '-std=c++11'

arguments "-DANDROID_STL=c++_shared"

}

}

ndk {

// CPU architectures (ABIs) to build the libraries for

abiFilters 'armeabi-v7a','arm64-v8a'

}

}

// Path to CMakeLists.txt; this is filled in automatically if the project was created with C++ support

externalNativeBuild {

cmake {

path file('src/main/cpp/CMakeLists.txt')

version '3.18.1'

}

}

sourceSets {

main {

jniLibs.srcDirs = ['libs']

}

}

// The NDK version can be pinned here (use a version already downloaded in Android Studio)

ndkVersion '22.0.7026061'

}

...

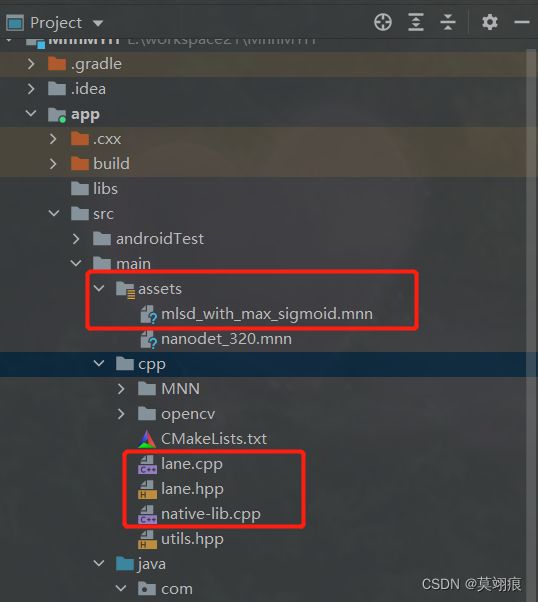

5. Test loading and running a model with MNN

This part mainly follows the line-detection model.

lane.hpp

#ifndef __LANE_H__

#define __LANE_H__

...

lane.cpp

#include "lane.hpp"

bool LaneDetect::hasGPU = false;

bool LaneDetect::toUseGPU = false;

LaneDetect *LaneDetect::detector = nullptr;

LaneDetect::LaneDetect(const std::string &mnn_path, bool useGPU)

{

toUseGPU = hasGPU && useGPU;

m_net = std::shared_ptr<MNN::Interpreter>(MNN::Interpreter::createFromFile(mnn_path.c_str()));

m_backend_config.precision = MNN::BackendConfig::PrecisionMode::Precision_Low; // precision

m_backend_config.power = MNN::BackendConfig::Power_Normal; // power consumption

m_backend_config.memory = MNN::BackendConfig::Memory_Normal; // memory usage

m_config.backendConfig = &m_backend_config;

m_config.numThread = 4;

if (useGPU) {

m_config.type = MNN_FORWARD_OPENCL;

}

m_config.backupType = MNN_FORWARD_CPU;

MNN::CV::ImageProcess::Config img_config; // image preprocessing

::memcpy(img_config.mean, m_mean_vals, sizeof(m_mean_vals)); // (img - mean)*norm

::memcpy(img_config.normal, m_norm_vals, sizeof(m_norm_vals));

img_config.sourceFormat = MNN::CV::BGR;

img_config.destFormat = MNN::CV::RGB;

pretreat = std::shared_ptr<MNN::CV::ImageProcess>(MNN::CV::ImageProcess::create(img_config));

MNN::CV::Matrix trans;

trans.setScale(1.0f, 1.0f); // scale

pretreat->setMatrix(trans);

m_session = m_net->createSession(m_config); // create the session

m_inTensor = m_net->getSessionInput(m_session, NULL);

m_net->resizeTensor(m_inTensor, {1, 3, m_input_size, m_input_size});

m_net->resizeSession(m_session);

std::cout << "session created" << std::endl;

}

LaneDetect::~LaneDetect()

{

m_net->releaseModel();

m_net->releaseSession(m_session);

}

inline int LaneDetect::clip(float value)

{

if (value > 0 && value < m_input_size)

return int(value);

else if (value <= 0) // value == 0 previously fell through to the upper-bound branch

return 1;

else

return m_input_size - 1;

}

std::vector<LaneDetect::Lanes> LaneDetect::decodeHeatmap(const float* hm,int w, int h, double threshold, double lens_threshold)

{

// line-segment center map (256*256), followed by the segment displacements (4*256*256)

const float* displacement = hm+m_hm_size*m_hm_size;

// exp(center,center);

std::vector<float> center;

for (int i = 0;i < m_hm_size*m_hm_size; i++)

{

center.push_back( hm[i] ); // the original mlsd.mnn needs a sigmoid here: 1/(exp(-hm[i]) + 1)

}

center.resize(m_hm_size*m_hm_size);

std::vector<int> index(center.size(), 0);

for (int i = 0 ; i != index.size() ; i++) {

index[i] = i;

}

sort(index.begin(), index.end(),

[&](const int& a, const int& b) {

return (center[a] > center[b]); // sort in descending order

}

);

std::vector<Lanes> lanes;

for (int i = 0; i < index.size(); i++)

{

int yy = index[i]/m_hm_size; // dividing by the width gives the row index

int xx = index[i]%m_hm_size; // the remainder gives the column index

Lanes Lane;

Lane.x1 = xx + displacement[index[i] + 0*m_hm_size*m_hm_size];

Lane.y1 = yy + displacement[index[i] + 1*m_hm_size*m_hm_size];

Lane.x2 = xx + displacement[index[i] + 2*m_hm_size*m_hm_size];

Lane.y2 = yy + displacement[index[i] + 3*m_hm_size*m_hm_size];

Lane.lens = sqrt(pow(Lane.x1 - Lane.x2,2) + pow(Lane.y1 - Lane.y2,2));

Lane.conf = center[index[i]];

if (center[index[i]] > threshold && lanes.size() < m_top_k)

{

if ( Lane.lens > lens_threshold)

{

Lane.x1 = clip(w * Lane.x1 / (m_input_size / 2));

Lane.x2 = clip(w * Lane.x2 / (m_input_size / 2));

Lane.y1 = clip(h * Lane.y1 / (m_input_size / 2));

Lane.y2 = clip(h * Lane.y2 / (m_input_size / 2));

lanes.push_back(Lane);

}

}

else

break;

}

return lanes;

}
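The index arithmetic in `decodeHeatmap` can be easier to follow in isolation: a flat index into the center map is split into a row and a column, and the four displacement planes stored after the center map give the endpoint offsets. Below is a minimal standalone sketch of that idea, assuming the output is laid out as five consecutive planes `[center, dx1, dy1, dx2, dy2]`. `Segment`, `decodeTopK` and `sigmoid` are hypothetical names, and the length filter and image rescaling are left out.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical stand-in for LaneDetect::Lanes.
struct Segment { float x1, y1, x2, y2, score; };

// Logistic sigmoid, for models whose center map is still raw logits.
inline float sigmoid(float v) { return 1.0f / (1.0f + std::exp(-v)); }

// map holds 5 planes of size*size floats: [center, dx1, dy1, dx2, dy2].
std::vector<Segment> decodeTopK(const std::vector<float>& map, int size,
                                std::size_t topK, float threshold) {
    const std::size_t plane = static_cast<std::size_t>(size) * size;
    const float* disp = map.data() + plane;  // displacement planes follow the center plane

    // Flat indices of the center plane, sorted by descending score.
    std::vector<std::size_t> order(plane);
    std::iota(order.begin(), order.end(), 0);
    std::sort(order.begin(), order.end(),
              [&](std::size_t a, std::size_t b) { return map[a] > map[b]; });

    std::vector<Segment> out;
    for (std::size_t idx : order) {
        if (map[idx] <= threshold || out.size() >= topK) break;
        const float col = static_cast<float>(idx % size);  // x in heatmap space
        const float row = static_cast<float>(idx / size);  // y in heatmap space
        out.push_back({col + disp[idx],             row + disp[idx + plane],
                       col + disp[idx + 2 * plane], row + disp[idx + 3 * plane],
                       map[idx]});
    }
    return out;
}
```

If the model does not already apply a sigmoid to the center map, `sigmoid` would be applied to the first plane before sorting. Because only the best `topK` entries are used, `std::partial_sort` could replace the full sort on large heatmaps.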

std::vector<LaneDetect::Lanes> LaneDetect::detect(const cv::Mat& img, unsigned char* image_bytes, int width, int height, double threshold, double lens_threshold)

{

// image preprocessing

cv::Mat preImage = img.clone();

cv::resize(preImage,preImage,cv::Size(m_input_size,m_input_size));

pretreat->convert(preImage.data, m_input_size, m_input_size, 0, m_inTensor);

// inference

m_net->runSession(m_session);

MNN::Tensor *output= m_net->getSessionOutput(m_session, NULL);

MNN::Tensor tensor_scores_host(output, output->getDimensionType());

output->copyToHostTensor(&tensor_scores_host);

auto score = tensor_scores_host.host<float>(); // read the result from the host copy, not from the (possibly device-side) output tensor

std::vector<LaneDetect::Lanes> lanes = decodeHeatmap(score, width, height, threshold,lens_threshold);

return lanes;

}
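The rescaling step in `decodeHeatmap`, `w * x / (m_input_size / 2)`, converts a coordinate from heatmap space (half the network input size) to pixels of the original image. The sketch below isolates that mapping. Note that it clamps against the image extent rather than against `m_input_size` as `clip()` does in the listing, so it is a variation on the posted code and not a copy of it; the helper name `heatmapToImage` is hypothetical.

```cpp
#include <algorithm>

// Hypothetical helper: maps a coordinate from heatmap space to image pixels
// and clamps the result to [0, imageExtent - 1].
inline int heatmapToImage(float coord, int heatmapSize, int imageExtent) {
    const float scaled = coord * static_cast<float>(imageExtent)
                         / static_cast<float>(heatmapSize);
    const int pixel = static_cast<int>(scaled);  // truncates toward zero
    return std::min(std::max(pixel, 0), imageExtent - 1);
}
```

For a 256-wide heatmap and a 1920-pixel-wide image, the heatmap midpoint 128 maps to pixel 960, and anything outside the heatmap is pinned to the nearest image border.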

native-lib.cpp

Line detection only uses init() and detect(); the other functions were for testing OpenCV and can be ignored.

...

jmethodID cid = env->GetMethodID(line_cls, "<init>", "(FFFFFF)V");

jobjectArray ret = env->NewObjectArray(result.size(), line_cls, nullptr);

int i = 0;

for (auto &line:result) {

env->PushLocalFrame(1);

jobject obj = env->NewObject(line_cls, cid, line.x1, line.y1, line.x2, line.y2, line.lens,

line.conf);

obj = env->PopLocalFrame(obj);

env->SetObjectArrayElement(ret, i++, obj);

}

return ret;

}

- Native method interface class

public class JniBitmapUtil {

private static final String TAG = "JniBitmapUtil";

// Used to load the 'mnnmyh' library on application startup.

static {

System.loadLibrary("mnnmyh");

}

public static native String stringFromJNI();

public static native void init(String name,String path,boolean useGPU);

public static native LaneInfo[] detect(Bitmap bitmap,byte[] imageBytes,int width,int height,double threshold, double lens_threshold);

}

- Calling from Android

// Initialization

// Make sure the model file is in the directory below

String path = getExternalFilesDir(null) + File.separator;

String name = "mlsd_with_max_sigmoid.mnn";

JniBitmapUtil.init(name,path,true);

// Line detection

...

mMnnTestBinding.bt.setOnClickListener(v -> {

if (mBitmap == null){

Toast.makeText(this, "No image selected", Toast.LENGTH_SHORT).show();

return;

}

byte[] bitmap = bitmapToByteArray(mBitmap);

LaneInfo[] laneInfos = JniBitmapUtil.detect(mBitmap, bitmap, mBitmap.getWidth(), mBitmap.getHeight(), 0.2, 20);

Bitmap bitmap2 = mBitmap.copy(Bitmap.Config.ARGB_8888, true);

Bitmap bitmap1 = drawBoxRects(bitmap2, laneInfos);

mMnnTestBinding.img.setImageBitmap(bitmap1);

});

...

private Bitmap drawBoxRects(Bitmap mutableBitmap, LaneInfo[] results) {

if (results == null || results.length <= 0) {

return mutableBitmap;

}

Log.i(TAG, "drawBoxRects: size = "+results.length);

Canvas canvas = new Canvas(mutableBitmap);

final Paint boxPaint = new Paint();

boxPaint.setAlpha(200);

boxPaint.setStrokeWidth(2);

for (LaneInfo line : results) {

//boxPaint.setColor(Color.argb(255, 255, 0, 0));

boxPaint.setColor(Color.YELLOW);

boxPaint.setStyle(Paint.Style.FILL); // draw the fill only

canvas.drawLine(line.x0,line.y0,line.x1,line.y1,boxPaint);

}

return mutableBitmap;

}

private byte[] bitmapToByteArray(Bitmap bitmap) {

int bytes = bitmap.getByteCount();

ByteBuffer buf = ByteBuffer.allocate(bytes);

bitmap.copyPixelsToBuffer(buf);

return buf.array();

}
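One detail worth keeping in mind on the native side: for an `ARGB_8888` bitmap, `copyPixelsToBuffer` yields bytes in R, G, B, A order, while the preprocessing config in `lane.cpp` declares `sourceFormat = MNN::CV::BGR`. The native conversion code is not shown in this post, so the following is only a sketch of one way to bridge the two, assuming tightly packed rows with no padding. `rgbaToBgr` is a hypothetical helper name.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: converts tightly packed RGBA bytes to BGR, dropping alpha.
std::vector<std::uint8_t> rgbaToBgr(const std::uint8_t* rgba, int width, int height) {
    const std::size_t pixels = static_cast<std::size_t>(width) * height;
    std::vector<std::uint8_t> bgr(pixels * 3);
    for (std::size_t i = 0; i < pixels; ++i) {
        bgr[3 * i + 0] = rgba[4 * i + 2];  // B
        bgr[3 * i + 1] = rgba[4 * i + 1];  // G
        bgr[3 * i + 2] = rgba[4 * i + 0];  // R
    }
    return bgr;
}
```

An alternative that avoids the extra copy would be to declare the source format as `MNN::CV::RGBA` in the `ImageProcess` config and let MNN do the conversion.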

Demo download: https://download.csdn.net/download/qq_35193677/86497475

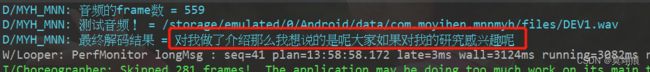

6. Other notes

I later tested the speech model as well. It runs correctly and works as a speech-to-text demo.

std::string wav = "/storage/emulated/0/Android/data/com.moyihen.mnnmyh/files/DEV1.wav";

auto feature_config = wenet::InitFeaturePipelineConfigFromFlags();

wenet::WavReader wav_reader(wav);

auto feature_pipeline = std::make_shared<wenet::FeaturePipeline>(*feature_config);

feature_pipeline->AcceptWaveform(std::vector<float>(wav_reader.data(), wav_reader.data() + wav_reader.num_sample()));

int num_frames = feature_pipeline->num_frames();

LOGD("number of audio frames = %d",num_frames);

LOGD("test audio = %s",wav.c_str());

// std::cout << "number of audio frames: " << ...

// for (int i = 0; i < 10; i++) { std::cout << ... }

wenet::MNNDecoder decoder(feature_pipeline); //, *decode_config);

decoder.Reset();

decoder.print_elements();

decoder.Decode();

std::string result = decoder.get_result();

LOGD("final decoding result = %s",result.c_str());
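As a sanity check on the logged `num_frames`, the expected frame count can be estimated from the sample count. The sketch below assumes Kaldi-style framing without padding, where each frame covers `frameLength` samples and advances by `frameShift` samples. These parameters and the helper name `countFrames` are assumptions for illustration; the actual values come from the feature pipeline config, which is not shown here.

```cpp
// Hypothetical helper: number of full frames that fit in numSamples,
// assuming no padding at the end of the signal.
inline int countFrames(int numSamples, int frameLength, int frameShift) {
    if (numSamples < frameLength) return 0;  // not enough audio for a single frame
    return 1 + (numSamples - frameLength) / frameShift;
}
```

For one second of 16 kHz audio with a 25 ms window (400 samples) and a 10 ms shift (160 samples), this gives 98 frames.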