「Analysis」PyTorch Transposed Convolution & Its Internal Computation

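Transposed convolution shows up in image segmentation and other fields. The official documentation is somewhat hard to follow, and blog posts online don't explain it clearly either, especially the internal computation of a transposed convolution. In my view, you only really master transposed convolution once you understand that internal computation; memorizing the formula is not enough. I also needed it recently while reproducing the TSGB algorithm, so I've organized my notes on transposed convolution here. I hope they help!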

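An ordinary convolution usually does not enlarge the spatial size of its input. It typically keeps the size the same or reduces it.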

A transposed convolution, by contrast, can be used to enlarge the spatial size of the input.

Original paper on transposed convolution: Deconvolutional Networks

Contents

- 1. Formula

- 2. Writing a transposed convolution by hand

- 3. padding

- 4. output_padding

- 5. Error

- Illustrated computation

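PyTorch divides convolution operations into two main categories: ordinary convolution and transposed convolution. Each category has three subclasses, for 1D, 2D and 3D convolution. Both categories share a common parent class, _ConvNd. This class is private (not part of the public API), and its code lives in the file torch/nn/modules/conv.py.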

1. Formula

Full version:

$$H_{out}=\text{stride}\cdot(H_{in}-1)-2\cdot\text{padding}+\text{dilation}\cdot(\text{kernel\_size}-1)+\text{output\_padding}+1$$

With dilation = 1 this simplifies to:

$$H_{out}=\text{stride}\cdot(H_{in}-1)+\text{kernel\_size}+\text{output\_padding}-2\cdot\text{padding}$$
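The full formula can be checked numerically. The sketch below uses a helper, `tconv_out_size`, which is a hypothetical name introduced only for illustration. It evaluates the formula and compares the result with the height that `nn.ConvTranspose2d` actually produces for a few arbitrary configurations.

```python
import torch
import torch.nn as nn

def tconv_out_size(h_in, kernel_size, stride=1, padding=0, output_padding=0, dilation=1):
    """Hypothetical helper: output height/width of a transposed convolution (full formula)."""
    return (stride * (h_in - 1) - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)

# a few arbitrary configurations to compare against the real layer
cases = [
    dict(kernel_size=3, stride=2, padding=1, output_padding=1),
    dict(kernel_size=4, stride=2, padding=1),
    dict(kernel_size=3, stride=3, padding=0, dilation=2),
]
x = torch.randn(1, 1, 7, 7)
for cfg in cases:
    layer = nn.ConvTranspose2d(1, 1, **cfg)
    # formula result vs. actual output height
    print(cfg, tconv_out_size(7, **cfg), layer(x).shape[-1])
```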

For reference, both of the following parameter configurations give 2× upsampling:

```python
nn.ConvTranspose2d(in_chan, out_chan, kernel_size=2, stride=2)
nn.ConvTranspose2d(in_chan, out_chan, kernel_size=3, stride=2, padding=1, output_padding=1)
```
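Plugging each configuration into the dilation = 1 formula shows why both give 2× upsampling:

- The first gives 2·(H−1) + 2 = 2H.
- The second gives 2·(H−1) + 3 + 1 − 2 = 2H.

A quick sketch that checks this follows. The channel counts and input size are arbitrary choices.

```python
import torch
import torch.nn as nn

in_chan, out_chan = 8, 4  # arbitrary example channel counts
up_a = nn.ConvTranspose2d(in_chan, out_chan, kernel_size=2, stride=2)
up_b = nn.ConvTranspose2d(in_chan, out_chan, kernel_size=3, stride=2, padding=1, output_padding=1)

x = torch.randn(1, in_chan, 13, 20)  # odd and even sizes both double
print(up_a(x).shape)  # torch.Size([1, 4, 26, 40])
print(up_b(x).shape)  # torch.Size([1, 4, 26, 40])
```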

```python
torch.nn.ConvTranspose2d(in_channels: int,
                         out_channels: int,
                         kernel_size: Union[T, Tuple[T, T]],
                         stride: Union[T, Tuple[T, T]] = 1,
                         padding: Union[T, Tuple[T, T]] = 0,
                         output_padding: Union[T, Tuple[T, T]] = 0,
                         groups: int = 1,
                         bias: bool = True,
                         dilation: int = 1,
                         padding_mode: str = 'zeros')
```

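- in_channels (int) – number of channels in the input;
- out_channels (int) – number of channels in the output;
- kernel_size (int or tuple) – size of the convolution kernel;
- stride (int or tuple, optional) – stride of the convolution. ⚠️ Here stride is not the step by which the kernel slides over the input. It is the spacing in the output between the positions where consecutive input elements are placed (equivalently, stride − 1 zeros are inserted between input elements);
- padding (int or tuple, optional) – ⚠️ acts on the output feature map: this many rows/columns are removed from all four sides. See section 3 (padding) for details;
- output_padding (int or tuple, optional) – extra size added to the output feature map. ⚠️ It is added only to the bottom row and the rightmost column, not to the top row or left column. See section 4 (output_padding) for details;
- groups (int, optional) – controls the connections between inputs and outputs. in_channels and out_channels must both be divisible by groups;
- dilation (int or tuple, optional) – spacing between kernel elements;
- padding_mode – padding mode. For transposed convolution only 'zeros' is supported;
- bias (bool, optional) – if bias=True, a learnable bias is added. Default: True;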
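The stride note can be made concrete with a commonly used equivalent view of transposed convolution. This is my own sketch, not from the original. It works in three steps:

1. Insert stride − 1 zeros between neighbouring input elements.
2. Zero-pad every side by kernel_size − 1 − padding.
3. Run an ordinary stride-1 convolution with the kernel rotated by 180°.

The sketch assumes a single input/output channel, dilation = 1, groups = 1 and padding ≤ kernel_size − 1.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 1, 4, 4)   # single-channel input
w = torch.randn(1, 1, 3, 3)   # single-channel kernel
s, k, p = 2, 3, 1             # stride, kernel_size, padding (assumes p <= k - 1)

ref = F.conv_transpose2d(x, w, stride=s, padding=p)

# 1) insert s - 1 zeros between neighbouring input elements
n = x.shape[-1]
z = torch.zeros(1, 1, (n - 1) * s + 1, (n - 1) * s + 1)
z[..., ::s, ::s] = x
# 2) zero-pad every side by k - 1 - p
z = F.pad(z, [k - 1 - p] * 4)
# 3) ordinary stride-1 convolution with the kernel rotated by 180 degrees
alt = F.conv2d(z, torch.flip(w, dims=[-2, -1]))

print(ref.shape, torch.allclose(ref, alt, atol=1e-5))  # torch.Size([1, 1, 7, 7]) True
```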

Examples:

```python
import torch
import torch.nn as nn

# With square kernels and equal stride
m = nn.ConvTranspose2d(16, 33, 3, stride=2)
# non-square kernels and unequal stride and with padding
m = nn.ConvTranspose2d(16, 33, (3, 5), stride=(2, 1), padding=(4, 2))
input = torch.randn(20, 16, 50, 100)
output = m(input)  # torch.Size([20, 33, 93, 100])
# exact output size can be also specified as an argument
input = torch.randn(1, 16, 12, 12)
downsample = nn.Conv2d(16, 16, 3, stride=2, padding=1)
upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)
h = downsample(input)
h.size()       # torch.Size([1, 16, 6, 6])
output = upsample(h, output_size=input.size())
output.size()  # torch.Size([1, 16, 12, 12])
```
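The output_size argument exists because the stride-2 convolution maps both 11×11 and 12×12 inputs to 6×6, so the transposed convolution cannot know which size to return. Without output_size it produces the minimum, 2·5 + 3 − 2 = 11. Requesting 12 effectively selects output_padding = 1. The sketch below checks this by copying the weights into a layer with output_padding=1 fixed. The variable names are my own.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(1, 16, 12, 12)
downsample = nn.Conv2d(16, 16, 3, stride=2, padding=1)
upsample = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1)
h = downsample(x)  # 6x6; an 11x11 input would also give 6x6

# same layer with output_padding fixed to 1 and identical weights/bias
upsample_op = nn.ConvTranspose2d(16, 16, 3, stride=2, padding=1, output_padding=1)
upsample_op.load_state_dict(upsample.state_dict())

a = upsample(h, output_size=x.size())
b = upsample_op(h)
print(upsample(h).shape, a.shape, torch.allclose(a, b))
```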

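Give a convolution and a transposed convolution the same parameters, with input and output channels swapped. Applying the convolution and then the transposed convolution restores the input's spatial size, provided (H_in + 2·padding − kernel_size) is divisible by stride. Otherwise the floor division in the convolution's output size loses information, and output_padding (or output_size) is needed to recover it. Here 16 + 2·2 − 5 = 15 is divisible by 3:

```python
import torch
import torch.nn as nn

X = torch.rand((1, 7, 16, 16))
conv = nn.Conv2d(7, 10, kernel_size=5, padding=2, stride=3)
tconv = nn.ConvTranspose2d(10, 7, kernel_size=5, padding=2, stride=3)
tconv(conv(X)).shape == X.shape  # True
```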

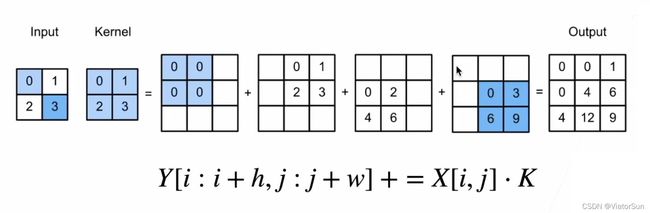
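When the divisibility condition fails, the round trip does not restore the size. Take kernel_size=3, padding=2, stride=3 on a 16×16 input:

1. 16 + 4 − 3 = 17 is not divisible by 3, so the convolution outputs ⌊17/3⌋ + 1 = 6.
2. The transposed convolution then gives 3·5 − 4 + 3 = 14.
3. Passing output_size lets PyTorch choose output_padding = 2. This is allowed because 2 < stride.

A sketch of this case:

```python
import torch
import torch.nn as nn

X = torch.rand((1, 7, 16, 16))
conv = nn.Conv2d(7, 10, kernel_size=3, padding=2, stride=3)
tconv = nn.ConvTranspose2d(10, 7, kernel_size=3, padding=2, stride=3)

h = conv(X)
print(h.shape)                                   # torch.Size([1, 10, 6, 6])
print(tconv(h).shape)                            # torch.Size([1, 7, 14, 14])
print(tconv(h, output_size=X.shape[-2:]).shape)  # torch.Size([1, 7, 16, 16])
```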

2. Writing a transposed convolution by hand

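```python
import torch

def trans_conv(X, K):
    """Basic 2D transposed convolution: single channel, stride 1, no padding."""
    h, w = K.shape
    # the output grows by (kernel size - 1) in each dimension
    Y = torch.zeros((X.shape[0] + h - 1,
                     X.shape[1] + w - 1))
    for i in range(X.shape[0]):
        for j in range(X.shape[1]):
            # scale the whole kernel by X[i, j] and accumulate it into Y at offset (i, j)
            Y[i:i + h, j:j + w] += X[i, j] * K
    return Y
```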
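The hand-written version above handles only stride 1 and no padding. Below is a sketch of an extension under the same scatter-and-accumulate idea. It uses a hypothetical function name, `trans_conv_strided`, and assumes a single channel, dilation = 1, and no output_padding.

- stride moves each input element's kernel copy by `stride` positions.
- padding crops that many rows/columns from every side of the full result.

The result is compared against `torch.nn.functional.conv_transpose2d`.

```python
import torch
import torch.nn.functional as F

def trans_conv_strided(X, K, stride=1, padding=0):
    """Hypothetical extension of trans_conv: single channel, dilation 1, no output_padding."""
    h, w = K.shape
    H = (X.shape[0] - 1) * stride + h  # full (uncropped) output height
    W = (X.shape[1] - 1) * stride + w  # full (uncropped) output width
    Y = torch.zeros((H, W), dtype=X.dtype)
    for i in range(X.shape[0]):
        for j in range(X.shape[1]):
            r, c = i * stride, j * stride  # kernel copies are placed `stride` apart
            Y[r:r + h, c:c + w] += X[i, j] * K
    # padding removes rows/columns from all four sides of the full output
    return Y[padding:H - padding, padding:W - padding]

torch.manual_seed(0)
X = torch.randn(3, 3)
K = torch.randn(3, 3)
ours = trans_conv_strided(X, K, stride=2, padding=1)
ref = F.conv_transpose2d(X[None, None], K[None, None], stride=2, padding=1)[0, 0]
print(ours.shape, torch.allclose(ours, ref, atol=1e-5))  # torch.Size([5, 5]) True
```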

3. padding

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

tconv = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=1, padding=1, output_padding=0, bias=False)
tconv2 = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=1, padding=0, output_padding=0, bias=False)
tconv2.weight.data = tconv.weight.data  # share the same kernel

a = torch.randn(1, 1, 3, 3)
out = tconv(a)
out2 = tconv2(a)
print(out)
print(out2)
```

Output:

```
tensor([[[[-0.6313,  0.7197, -0.0730],
          [-0.0682,  0.2572, -0.9262],
          [ 0.4393, -0.0197,  0.2226]]]],
       grad_fn=<SlowConvTranspose2DBackward>)
tensor([[[[-0.1825,  0.5451, -0.0489,  0.4533, -0.2810],
          [ 0.4646, -0.6313,  0.7197, -0.0730,  0.1872],
          [-0.2107, -0.0682,  0.2572, -0.9262, -0.0682],
          [-0.0663,  0.4393, -0.0197,  0.2226, -0.0704],
          [ 0.0144,  0.0723, -0.2561, -0.1143,  0.1171]]]],
       grad_fn=<SlowConvTranspose2DBackward>)
```

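The result `out` is exactly `out2` with its outermost ring removed: padding=1 crops one row/column from each of the four sides of the output.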
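Instead of comparing printed values by eye, the cropping relationship can be asserted directly. The sketch below repeats the same setup with a fixed seed.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
tconv = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=1, padding=1, bias=False)
tconv2 = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=1, padding=0, bias=False)
tconv2.weight.data = tconv.weight.data  # share the kernel

a = torch.randn(1, 1, 3, 3)
out, out2 = tconv(a), tconv2(a)
# padding=1 equals the padding=0 result with one row/column cropped from each side
print(torch.allclose(out, out2[..., 1:-1, 1:-1]))  # True
```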

4. output_padding

```python
import torch
import torch.nn as nn

tconv = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=2, padding=0, output_padding=0, bias=False)
tconv2 = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=2, padding=0, output_padding=1, bias=False)
tconv2.weight.data = tconv.weight.data  # share the same kernel

a = torch.randn(1, 1, 3, 3)
out = tconv(a)
out2 = tconv2(a)
print(out)
print(out2)
```

Output:

```
tensor([[[[ 4.5072e-01,  1.9174e-01,  2.4592e-01, -4.6482e-02, -1.8972e-01, -4.4077e-02, -8.1649e-02],
          [-2.1112e-01, -2.1895e-01,  5.7506e-01,  5.3078e-02, -7.8469e-02,  5.0331e-02, -1.2043e-01],
          [ 4.3586e-02,  1.8305e-01,  4.3104e-01, -3.6797e-02, -2.0301e-02, -8.7202e-03, -3.5626e-02],
          [-1.5602e-01, -1.6180e-01,  4.1664e-01,  3.0571e-02, -7.4014e-02, -8.9690e-04,  2.1461e-03],
          [-6.0989e-01, -1.3788e-01, -3.1028e-01, -7.2944e-02, -9.8111e-01, -3.5419e-01, -6.5575e-01],
          [ 1.8546e-01,  1.9234e-01, -3.8625e-01,  7.6701e-02,  2.0665e-01,  4.0463e-01, -9.6819e-01],
          [ 2.5432e-01, -3.6325e-02, -4.0285e-02, -1.4486e-02,  4.7852e-01, -7.6420e-02, -2.9811e-01]]]],
       grad_fn=<SlowConvTranspose2DBackward>)
tensor([[[[ 4.5072e-01,  1.9174e-01,  2.4592e-01, -4.6482e-02, -1.8972e-01, -4.4077e-02, -8.1649e-02,  0.0000e+00],
          [-2.1112e-01, -2.1895e-01,  5.7506e-01,  5.3078e-02, -7.8469e-02,  5.0331e-02, -1.2043e-01,  0.0000e+00],
          [ 4.3586e-02,  1.8305e-01,  4.3104e-01, -3.6797e-02, -2.0301e-02, -8.7202e-03, -3.5626e-02,  0.0000e+00],
          [-1.5602e-01, -1.6180e-01,  4.1664e-01,  3.0571e-02, -7.4014e-02, -8.9690e-04,  2.1461e-03,  0.0000e+00],
          [-6.0989e-01, -1.3788e-01, -3.1028e-01, -7.2944e-02, -9.8111e-01, -3.5419e-01, -6.5575e-01,  0.0000e+00],
          [ 1.8546e-01,  1.9234e-01, -3.8625e-01,  7.6701e-02,  2.0665e-01,  4.0463e-01, -9.6819e-01,  0.0000e+00],
          [ 2.5432e-01, -3.6325e-02, -4.0285e-02, -1.4486e-02,  4.7852e-01, -7.6420e-02, -2.9811e-01,  0.0000e+00],
          [ 0.0000e+00,  0.0000e+00,  0.0000e+00,  0.0000e+00,  0.0000e+00,  0.0000e+00,  0.0000e+00,  0.0000e+00]]]],
       grad_fn=<SlowConvTranspose2DBackward>)
```
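In the output above, the extra bottom row and right column are all zeros because padding=0, so no input element contributes to those positions. This is my own check, not from the original. When padding > 0, the extra row/column added by output_padding instead holds computed values that padding would otherwise have cropped. The top-left block is unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
a = torch.randn(1, 1, 3, 3)
tconv = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=2, padding=1, output_padding=0, bias=False)
tconv2 = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=2, padding=1, output_padding=1, bias=False)
tconv2.weight.data = tconv.weight.data  # share the kernel

out, out2 = tconv(a), tconv2(a)
print(out.shape, out2.shape)  # torch.Size([1, 1, 5, 5]) torch.Size([1, 1, 6, 6])
# the top-left 5x5 block is identical ...
print(torch.allclose(out, out2[..., :-1, :-1]))  # True
# ... but the extra bottom row now contains computed (non-zero) values
print(out2[0, 0, -1])
```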

5. Error

output_padding must be smaller than either stride or dilation; otherwise an error is raised. For example, output_padding=1 with stride=1 and dilation=1 fails:

```
RuntimeError: output padding must be smaller than either stride or dilation, but got
output_padding_height: 1
output_padding_width: 1
stride_height: 1
stride_width: 1
dilation_height: 1
dilation_width: 1
```
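A minimal sketch that reproduces this error next to a valid configuration follows. Only the first line of the message is printed, since the exact wording may differ between PyTorch versions.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 1, 3, 3)

# valid: output_padding=1 is smaller than stride=2
ok = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=2, output_padding=1, bias=False)
print(ok(x).shape)  # torch.Size([1, 1, 8, 8])

# invalid: output_padding=1 is not smaller than stride=1 or dilation=1
raised = False
try:
    bad = nn.ConvTranspose2d(1, 1, kernel_size=3, stride=1, output_padding=1, bias=False)
    bad(x)
except RuntimeError as err:
    raised = True
    print("RuntimeError:", str(err).splitlines()[0])
print("raised:", raised)
```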

Illustrated computation