detectron2 训练labelme标定的coco数据集

labelme是一个很好用的实例分割标定工具,detectron2可以很方便的进行mask rcnn等网络的训练,detectron2可以直接接受coco格式数据集进行输入。

流程:

(1)lableme进行数据的标注,获得json文件;

(2)通过labelme2coco.py文件把标注的数据集转换成coco格式;

(3)使用detectron2进行训练和测试。

需要注意的是labelme转coco给的例子里面是把_background_作为第0类的,而detectron2的输入的标准coco数据集格式类别编号是从1开始的。也就是说如果使用labelme官方给的labelme2coco工具,那么在设置类别个数的时候,实际标注为n类,则类别数应加上背景,写为n+1。

如果不想类别数加1可以通过修改代码实现:

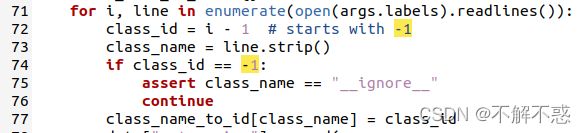

修改前:

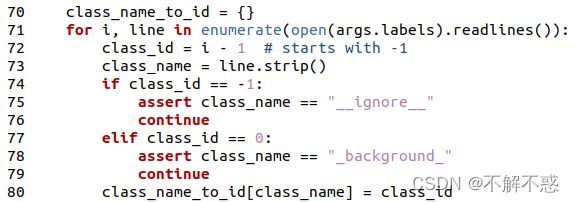

修改后:

训练:

# Detectron2 maskRCNN训练自己的数据集 https://www.jianshu.com/p/0e00400fdaa5

import torch, torchvision

print(torch.__version__, torch.cuda.is_available())

# Some basic setup:

# Setup detectron2 logger

import detectron2

from detectron2.utils.logger import setup_logger

setup_logger()

# import some common libraries

import numpy as np

import os, json, cv2, random

#from google.colab.patches import cv2_imshow

# import some common detectron2 utilities

from detectron2 import model_zoo

from detectron2.engine import DefaultPredictor

from detectron2.config import get_cfg

from detectron2.utils.visualizer import Visualizer

from detectron2.data import MetadataCatalog, DatasetCatalog

from detectron2.data.datasets import register_coco_instances

# register_coco_instances("bondOnlyDataset_train", {},

# r'/home/whq/git_rep/labelme/examples/instance_segmentation/data_dataset_coco/annotations.json',

# r'/home/whq/git_rep/labelme/examples/instance_segmentation/data_dataset_coco/')

# register_coco_instances("bondOnlyDataset_val", {},

# r'/home/whq/git_rep/labelme/examples/instance_segmentation/data_dataset_coco/annotations.json',

# r'/home/whq/git_rep/labelme/examples/instance_segmentation/data_dataset_coco/')

register_coco_instances("bondOnlyDataset_train", {},

r'~/datasets/data_dataset_coco/annotations.json',

r'~/datasets/data_dataset_coco/')

register_coco_instances("bondOnlyDataset_val", {},

r'~/datasets/data_dataset_coco/annotations.json',

r'~/datasets/data_dataset_coco/')

my_bond_metadata = MetadataCatalog.get("bondOnlyDataset_train")

print(my_bond_metadata)

# MetadataCatalog.get("bondOnlyDataset_train").thing_classes = ["face_main"]

# print(my_bond_metadata)

# train

from detectron2.engine import DefaultTrainer

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.DATASETS.TRAIN = ("bondOnlyDataset_train")

cfg.DATASETS.TEST = ()

cfg.DATALOADER.NUM_WORKERS = 2

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml") # Let training initialize from model zoo

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.SOLVER.BASE_LR = 0.00025 # pick a good LR

cfg.SOLVER.MAX_ITER = 300 # 300 iterations

cfg.SOLVER.STEPS = [] # do not decay learning rate

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128 # faster, and good enough for this toy dataset (default: 512)

cfg.MODEL.ROI_HEADS.NUM_CLASSES = 2 #the number of classes

os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

trainer = DefaultTrainer(cfg)

trainer.resume_or_load(resume=False)

trainer.train()

# Inference should use the config with parameters that are used in training

# cfg now already contains everything we've set previously. We changed it a little bit for inference:

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth") # path to the model we just trained

# cfg.MODEL.WEIGHTS = os.path.join(r'/home/whq/.torch/iopath_cache/detectron2/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl') # path to the model we just trained

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.3 # set a custom testing threshold

predictor = DefaultPredictor(cfg)

from detectron2.utils.visualizer import ColorMode

img_path = r'~/datasets/data_dataset_coco/JPEGImages/10_color_1.jpg'

# img_path = r'/home/whq/git_rep/labelme/examples/instance_segmentation/data_dataset_coco/JPEGImages/2011_000003.jpg'

im = cv2.imread(img_path)

outputs = predictor(im) # format is documented at https://detectron2.readthedocs.io/tutorials/models.html#model-output-format

print(outputs)

import matplotlib.pyplot as plt

def cv2_imshow(im):

im = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)

plt.figure(), plt.imshow(im), plt.axis('off');

v = Visualizer(im[:, :, ::-1],

metadata=my_bond_metadata,

scale=1,

instance_mode=2 # remove the colors of unsegmented pixels. This option is only available for segmentation models

)

out = v.draw_instance_predictions(outputs["instances"].to("cpu"))

cv2_imshow(out.get_image()[:, :, ::-1])

#

plt.show()

# cv2.namedWindow("image",0)

cv2.imshow("image",out.get_image()[:, :, ::-1])

cv2.waitKey(1000) # 需要加显示时间,否则可能会报错

# cv2.destroyWindow("image")测试:

# Detectron2 maskRCNN训练自己的数据集 https://www.jianshu.com/p/0e00400fdaa5

import torch, torchvision

print(torch.__version__, torch.cuda.is_available())

# Some basic setup:

# Setup detectron2 logger

import detectron2

from detectron2.utils.logger import setup_logger

setup_logger()

# import some common libraries

import numpy as np

import os, json, cv2, random

#from google.colab.patches import cv2_imshow

# import some common detectron2 utilities

from detectron2 import model_zoo

from detectron2.engine import DefaultPredictor

from detectron2.config import get_cfg

from detectron2.utils.visualizer import Visualizer

from detectron2.data import MetadataCatalog, DatasetCatalog

from detectron2.data.datasets import register_coco_instances

register_coco_instances("bondOnlyDataset_train", {},

r'~datasets/data_dataset_coco/annotations.json',

r'~/datasets/data_dataset_coco/')

register_coco_instances("bondOnlyDataset_val", {},

r'~/datasets/data_dataset_coco/annotations.json',

r'~/datasets/data_dataset_coco/')

my_bond_metadata = MetadataCatalog.get("bondOnlyDataset_train")

print(my_bond_metadata)

from detectron2.engine import DefaultTrainer

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.DATASETS.TRAIN = ("bondOnlyDataset_train")

cfg.DATASETS.TEST = ()

cfg.DATALOADER.NUM_WORKERS = 2

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml") # Let training initialize from model zoo

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.SOLVER.BASE_LR = 0.00025 # pick a good LR

cfg.SOLVER.MAX_ITER = 300 # 300 iterations

cfg.SOLVER.STEPS = [] # do not decay learning rate

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128 # faster, and good enough for this toy dataset (default: 512)

cfg.MODEL.ROI_HEADS.NUM_CLASSES = 2 #the number of classes

# os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

# trainer = DefaultTrainer(cfg)

# trainer.resume_or_load(resume=False)

# trainer.train()

# Inference should use the config with parameters that are used in training

# cfg now already contains everything we've set previously. We changed it a little bit for inference:

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_final.pth") # path to the model we just trained

# cfg.MODEL.WEIGHTS = os.path.join(r'/home/whq/.torch/iopath_cache/detectron2/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl') # path to the model we just trained

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.3 # set a custom testing threshold

predictor = DefaultPredictor(cfg)

from detectron2.utils.visualizer import ColorMode

img_path = r'~/datasets/data_dataset_coco/JPEGImages/10_color_1.jpg'

# img_path = r'/home/whq/git_rep/labelme/examples/instance_segmentation/data_dataset_coco/JPEGImages/2011_000003.jpg'

im = cv2.imread(img_path)

outputs = predictor(im) # format is documented at https://detectron2.readthedocs.io/tutorials/models.html#model-output-format

v = Visualizer(im[:, :, ::-1],

metadata=my_bond_metadata,

scale=1,

instance_mode=2 # remove the colors of unsegmented pixels. This option is only available for segmentation models

)

out = v.draw_instance_predictions(outputs["instances"].to("cpu"))

cv2.namedWindow("image",0)

cv2.imshow("image",out.get_image()[:, :, ::-1])

cv2.waitKey(3000)

cv2.destroyWindow("image")

def showMask(outputs):

mask = outputs["instances"].to("cpu").get("pred_masks").numpy()

#这里的mask的通道数与检测到的示例的个数一致,把所有通道的mask合为一个通道

img = np.zeros((mask.shape[1],mask.shape[2]))

for i in range(mask.shape[0]):

img += mask[i]

np.where(img>0,255,0)

cv2.namedWindow("mask",0)

cv2.imshow("mask",img)

cv2.waitKey(1)

from detectron2.data.detection_utils import read_image

img_path = r'~/datasets/data_dataset_coco/JPEGImages/10_color_1.jpg'

im = read_image(img_path, format="BGR")

# im = cv2.imread(img_path)

print(im)

outputs = predictor(im)

# print(outputs.shape)

print(outputs)

showMask(outputs)

cv2.namedWindow("image",0)

cv2.imshow("image",im)

cv2.waitKey(3000)

cv2.destroyAllWindows()