Lightweight Neural Networks: MobileNet V2

Contents

- Lightweight Neural Networks: MobileNet V2

- Hello, MobileNet V2

- Nice to Meet You, MobileNet V2

- How Have You Been, MobileNet V2

- Key Points: Inverted_res_block

- Full Code Walkthrough: MobileNetV2

I've had a lot of free time lately and suddenly felt like writing down what I've been learning. I'm new here, so please bear with me!

Hello, MobileNet V2

Paper: https://arxiv.org/abs/1801.04381

Code: https://github.com/shicai/MobileNet-Caffe

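The MobileNet family has produced many small networks, and MobileNet V2 continues Google's work on them; V2 is a strengthened, improved version of V1. So let's lift the veil on V2 together. Ready, everyone?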

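Nice to Meet You, MobileNet V2

Here are the innovations:

1. Inverted Residual Block: before the 3x3 depthwise convolution, a 1x1 convolution first raises the channel dimension, and afterwards a second 1x1 convolution lowers it again (1x1 Conv + 3x3 DWConv + 1x1 Conv). This is exactly the reverse of ResNet50, whose bottleneck reduces the dimension first and restores it afterwards.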

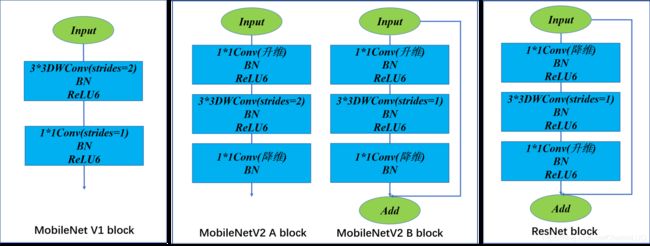

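To tell V1, V2, and ResNet50 apart more intuitively, we can compare them in the figure.

Explanation: the figure shows the differences. MobileNet V1 is built on depthwise separable convolutions and uses the ReLU6 activation. MobileNet V2 keeps the depthwise convolution (the middle layer of the block) and adds an expansion layer before it and a projection layer after it.

The projection layer is a 1x1 convolution that maps high-dimensional features into a low-dimensional space. A 1x1 convolution used this way is sometimes called a bottleneck layer. The expansion layer does the opposite: it is a 1x1 convolution that maps the low-dimensional input into a high-dimensional space, and its hyperparameter, the expansion factor, sets how many times the channel count is multiplied. The depthwise convolution then processes the expanded features.

The block is therefore wide in the middle and narrow at both ends, like a spindle. The bottleneck residual block from the ResNet paper is narrow in the middle and wide at both ends; MobileNetV2 reverses this, which is why the paper calls the structure inverted residuals. Note that the residual connection links the input and the output of the block.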

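2. Linear Bottleneck: mapping from a high-dimensional space to a low-dimensional one and then applying ReLU can lose or destroy information. The projection convolution therefore uses no non-linear activation: ReLU is dropped and the output is left linear.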
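
A tiny numeric illustration of why ReLU is risky on a low-dimensional output: two different vectors that differ only in a negative component become identical after ReLU, while a linear (identity) output keeps them apart. The vectors and helper names below are made up for this sketch.

```python
def relu(vector):
    """Element-wise ReLU on a plain Python list."""
    return [max(0.0, value) for value in vector]


def linear(vector):
    """Identity activation, as used after the projection convolution."""
    return list(vector)


# Two distinct 2-channel feature vectors (hypothetical values).
a = [-1.0, 2.0]
b = [-3.0, 2.0]

relu_collapses = relu(a) == relu(b)        # both become [0.0, 2.0]
linear_collapses = linear(a) == linear(b)  # the vectors stay different

if __name__ == "__main__":
    print("ReLU maps a and b to the same point:", relu_collapses)
    print("Linear maps a and b to the same point:", linear_collapses)
```

Once two inputs collapse to the same point, no later layer can tell them apart, which is the information loss the linear bottleneck avoids.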

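How Have You Been, MobileNet V2

Why use this structure, and what does it buy us? To answer that, start from one fact: the fewer channels a tensor has, the fewer multiplications a convolution layer needs. If every tensor in the network were low-dimensional, the whole network would run fast. But low-dimensional tensors alone do not work well: filters that only ever see low-dimensional tensors cannot extract enough information. Feature extraction should therefore happen on high-dimensional tensors wherever possible. V2's structure does both: it keeps the parameter count down while still extracting features in a high-dimensional space.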
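
The cost argument can be checked with a little arithmetic. The sketch below counts multiply-accumulate operations (MACs) for a standard k x k convolution and for the three layers of an inverted residual block at stride 1, using the usual h * w * C_in * C_out * k^2 count for a convolution. The function names and the 14x14, 64-channel example are assumptions made for illustration.

```python
def standard_conv_macs(h, w, c_in, c_out, k=3):
    """MACs of a standard k x k convolution on an h x w feature map (stride 1)."""
    return h * w * c_in * c_out * k * k


def inverted_residual_macs(h, w, c_in, c_out, t=6, k=3):
    """MACs of expand (1x1) + depthwise (k x k) + project (1x1) at stride 1."""
    hidden = c_in * t
    expand = h * w * c_in * hidden      # 1x1 conv: c_in -> t * c_in
    depthwise = h * w * hidden * k * k  # one k x k filter per channel
    project = h * w * hidden * c_out    # 1x1 conv: t * c_in -> c_out
    return expand + depthwise + project


if __name__ == "__main__":
    h = w = 14
    c = 64
    wide = c * 6
    # A standard 3x3 conv working directly on the wide (expanded) tensor ...
    print("standard conv at 384 channels:", standard_conv_macs(h, w, wide, wide))
    # ... versus a block that only widens the tensor internally.
    print("inverted residual 64 -> 64   :", inverted_residual_macs(h, w, c, c))
```

The block still filters a 384-channel tensor in its middle layer, but it does so with a depthwise convolution, so it needs far fewer MACs than a standard convolution at that width.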

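The expansion layer first increases the number of channels, the depthwise convolution then extracts features, and the projection layer finally compresses the data so that the tensor becomes small again. Because the expansion and projection layers both have learnable parameters, the network can learn how best to expand the data and how to compress it again.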
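
As a rough sketch of where the learnable weights sit, the following counts the convolution weights of the three layers in one block (no biases, batch-norm parameters ignored). The function name and the 24-channel example are hypothetical.

```python
def block_conv_weights(c_in, c_out, expansion=6, kernel_size=3):
    """Convolution weights per layer of one expand -> depthwise -> project block."""
    hidden = c_in * expansion
    return {
        "expand": c_in * hidden,                          # 1x1 conv
        "depthwise": kernel_size * kernel_size * hidden,  # one filter per channel
        "project": hidden * c_out,                        # 1x1 conv
    }


if __name__ == "__main__":
    weights = block_conv_weights(24, 24)
    for layer, count in weights.items():
        print(f"{layer:9s} {count}")
    print("total    ", sum(weights.values()))
```

In this example most of the weights belong to the two 1x1 convolutions, which are the layers that learn how to expand and how to compress.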

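Key Points: Inverted_res_block

Earlier we covered the two main improvements of MobileNetV2 over V1: the linear bottleneck and the inverted residual. The resulting structure is called the bottleneck residual block. Table 1 gives the internal structure of this block, where t is the expansion ratio.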
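
The block structure can be traced shape by shape. The sketch below follows an h x w x k input through the three layers with expansion ratio t, stride s, and k_out output channels. It assumes 'same' padding, so a stride-s layer produces ceil(h / s) rows; the helper name is made up for this example.

```python
import math


def bottleneck_shapes(h, w, k, t, s, k_out):
    """Return the (height, width, channels) after each layer of the block."""
    out_h, out_w = math.ceil(h / s), math.ceil(w / s)
    return [
        ("input", (h, w, k)),
        ("1x1 conv + ReLU6", (h, w, t * k)),
        ("3x3 depthwise, stride s + ReLU6", (out_h, out_w, t * k)),
        ("1x1 conv, linear", (out_h, out_w, k_out)),
    ]


if __name__ == "__main__":
    for name, shape in bottleneck_shapes(56, 56, 24, t=6, s=2, k_out=32):
        print(f"{name:32s} {shape}")
```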

Now for the code. Let's look at the Inverted_res_block module.

from keras import backend as K
from keras.activations import relu
from keras.layers import (Activation, Add, BatchNormalization, Conv2D,
                          DepthwiseConv2D)

#########################################
#          Round channel counts         #
#########################################
def make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    # Round to the nearest multiple of divisor, but never below min_value.
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure rounding down does not remove more than 10% of the channels.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v

#########################################
#        ReLU6 activation function      #
#########################################
def relu6(x):
    return relu(x, max_value=6)

#########################################
#        inverted_res_block module      #
#########################################
def inverted_res_block(inputs,
                       expansion,
                       stride,
                       alpha,
                       filters,
                       block_id,
                       skip_connection,
                       rate=1):
    # K.int_shape returns plain ints; inputs.shape[-1].value only works
    # in TensorFlow 1.x.
    in_channels = K.int_shape(inputs)[-1]
    # Output channels of the projection (pointwise) convolution, scaled by
    # alpha and rounded to a multiple of 8.
    pointwise_conv_filters = int(filters * alpha)
    pointwise_filters = make_divisible(pointwise_conv_filters, 8)
    x = inputs
    prefix = 'expanded_conv_{}_'.format(block_id)

    # Expansion: 1x1 conv that widens the tensor (skipped for block 0)
    if block_id:
        x = Conv2D(expansion * in_channels, kernel_size=1, padding='same',
                   use_bias=False, activation=None,
                   name=prefix + 'expand')(x)
        x = BatchNormalization(epsilon=1e-3, momentum=0.999,
                               name=prefix + 'expand_BN')(x)
        x = Activation(relu6, name=prefix + 'expand_relu')(x)
    else:
        prefix = 'expanded_conv_'

    # Depthwise: 3x3 conv applied to each channel separately
    x = DepthwiseConv2D(kernel_size=3, strides=stride, activation=None,
                        use_bias=False, padding='same',
                        dilation_rate=(rate, rate),
                        name=prefix + 'depthwise')(x)
    x = BatchNormalization(epsilon=1e-3, momentum=0.999,
                           name=prefix + 'depthwise_BN')(x)
    x = Activation(relu6, name=prefix + 'depthwise_relu')(x)

    # Projection: 1x1 conv back to a narrow tensor, no activation (linear)
    x = Conv2D(pointwise_filters,
               kernel_size=1, padding='same', use_bias=False, activation=None,
               name=prefix + 'project')(x)
    x = BatchNormalization(epsilon=1e-3, momentum=0.999,
                           name=prefix + 'project_BN')(x)

    if skip_connection:
        return Add(name=prefix + 'add')([inputs, x])
    # Alternative: infer the skip connection from the shapes instead.
    # if in_channels == pointwise_filters and stride == 1:
    #     return Add(name='res_connect_' + str(block_id))([inputs, x])
    return x
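
To see what the two small helpers do without installing Keras, here is a pure-Python sketch. make_divisible is copied from the code above; relu6_scalar is a hypothetical scalar stand-in for the Keras relu(x, max_value=6) call.

```python
def make_divisible(v, divisor, min_value=None):
    """Round v to a multiple of divisor, bumping up if rounding lost over 10%."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


def relu6_scalar(x):
    """Scalar ReLU6: clamp x to the range [0, 6]."""
    return min(max(x, 0.0), 6.0)


if __name__ == "__main__":
    # Channel counts for a few width multipliers (alpha) applied to 32 filters.
    for alpha in (0.35, 0.5, 1.0, 1.4):
        print(alpha, make_divisible(32 * alpha, 8))
    print([relu6_scalar(x) for x in (-2.0, 3.0, 8.0)])
```

Keeping every channel count a multiple of 8 means that a fractional alpha still yields valid integer layer widths.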

Full Code Walkthrough: MobileNetV2

# -------------------------------------------------------------#
#   MobileNetV2 network
# -------------------------------------------------------------#
from keras import backend as K
from keras.activations import relu
from keras.layers import (Activation, Add, BatchNormalization, Conv2D, Dense,
                          DepthwiseConv2D, GlobalAveragePooling2D, Input,
                          ZeroPadding2D)
from keras.models import Model
from keras.utils import get_file

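#########################################
#          Round channel counts         #
#########################################
def make_divisible(v, divisor, min_value=None):
    if min_value is None:
        min_value = divisor
    # Round to the nearest multiple of divisor, but never below min_value.
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure rounding down does not remove more than 10% of the channels.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v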

#########################################
#          Compute padding size         #
#########################################
def correct_pad(inputs, kernel_size):
    # Spatial dimensions are axes 1 and 2 (channels-last layout).
    img_dim = 1
    input_size = K.int_shape(inputs)[img_dim:(img_dim + 2)]

    if isinstance(kernel_size, int):
        kernel_size = (kernel_size, kernel_size)

    # For even input sizes, pad one pixel less on the top/left side.
    if input_size[0] is None:
        adjust = (1, 1)
    else:
        adjust = (1 - input_size[0] % 2, 1 - input_size[1] % 2)

    correct = (kernel_size[0] // 2, kernel_size[1] // 2)

    return ((correct[0] - adjust[0], correct[0]),
            (correct[1] - adjust[1], correct[1]))

#########################################
#        ReLU6 activation function      #
#########################################
def relu6(x):
    return relu(x, max_value=6)

#########################################
#        inverted_res_block module      #
#########################################
def inverted_res_block(inputs,
                       expansion,
                       stride,
                       alpha,
                       filters,
                       block_id,
                       skip_connection,
                       rate=1):
    # K.int_shape returns plain ints; inputs.shape[-1].value only works
    # in TensorFlow 1.x.
    in_channels = K.int_shape(inputs)[-1]
    # Output channels of the projection (pointwise) convolution, scaled by
    # alpha and rounded to a multiple of 8.
    pointwise_conv_filters = int(filters * alpha)
    pointwise_filters = make_divisible(pointwise_conv_filters, 8)
    x = inputs
    prefix = 'expanded_conv_{}_'.format(block_id)

    # Expansion: 1x1 conv that widens the tensor (skipped for block 0)
    if block_id:
        x = Conv2D(expansion * in_channels, kernel_size=1, padding='same',
                   use_bias=False, activation=None,
                   name=prefix + 'expand')(x)
        x = BatchNormalization(epsilon=1e-3, momentum=0.999,
                               name=prefix + 'expand_BN')(x)
        x = Activation(relu6, name=prefix + 'expand_relu')(x)
    else:
        prefix = 'expanded_conv_'

    # Depthwise: 3x3 conv applied to each channel separately
    x = DepthwiseConv2D(kernel_size=3, strides=stride, activation=None,
                        use_bias=False, padding='same',
                        dilation_rate=(rate, rate),
                        name=prefix + 'depthwise')(x)
    x = BatchNormalization(epsilon=1e-3, momentum=0.999,
                           name=prefix + 'depthwise_BN')(x)
    x = Activation(relu6, name=prefix + 'depthwise_relu')(x)

    # Projection: 1x1 conv back to a narrow tensor, no activation (linear)
    x = Conv2D(pointwise_filters,
               kernel_size=1, padding='same', use_bias=False, activation=None,
               name=prefix + 'project')(x)
    x = BatchNormalization(epsilon=1e-3, momentum=0.999,
                           name=prefix + 'project_BN')(x)

    if skip_connection:
        return Add(name=prefix + 'add')([inputs, x])
    # Alternative: infer the skip connection from the shapes instead.
    # if in_channels == pointwise_filters and stride == 1:
    #     return Add(name='res_connect_' + str(block_id))([inputs, x])
    return x

# Base URL of the pretrained weight files (release v1.1)
BASE_WEIGHT_PATH = ('https://github.com/JonathanCMitchell/mobilenet_v2_keras/'
                    'releases/download/v1.1/')

def MobileNetV2(input_shape=(224, 224, 3),
                alpha=1.0,
                include_top=True,
                weights='imagenet',
                classes=1000):
    rows = input_shape[0]

    img_input = Input(shape=input_shape)

    # Stem
    # 224,224,3 -> 112,112,32
    first_block_filters = make_divisible(32 * alpha, 8)
    x = ZeroPadding2D(padding=correct_pad(img_input, 3),
                      name='Conv1_pad')(img_input)
    x = Conv2D(first_block_filters,
               kernel_size=3,
               strides=(2, 2),
               padding='valid',
               use_bias=False,
               name='Conv1')(x)
    x = BatchNormalization(epsilon=1e-3,
                           momentum=0.999,
                           name='bn_Conv1')(x)
    x = Activation(relu6, name='Conv1_relu')(x)

    # inverted_res_block requires skip_connection; it is True only when the
    # block keeps both the spatial size and the channel count unchanged.

    # 112,112,32 -> 112,112,16
    x = inverted_res_block(x, filters=16, alpha=alpha, stride=1,
                           expansion=1, block_id=0, skip_connection=False)

    # 112,112,16 -> 56,56,24
    x = inverted_res_block(x, filters=24, alpha=alpha, stride=2,
                           expansion=6, block_id=1, skip_connection=False)
    x = inverted_res_block(x, filters=24, alpha=alpha, stride=1,
                           expansion=6, block_id=2, skip_connection=True)

    # 56,56,24 -> 28,28,32
    x = inverted_res_block(x, filters=32, alpha=alpha, stride=2,
                           expansion=6, block_id=3, skip_connection=False)
    x = inverted_res_block(x, filters=32, alpha=alpha, stride=1,
                           expansion=6, block_id=4, skip_connection=True)
    x = inverted_res_block(x, filters=32, alpha=alpha, stride=1,
                           expansion=6, block_id=5, skip_connection=True)

    # 28,28,32 -> 14,14,64
    x = inverted_res_block(x, filters=64, alpha=alpha, stride=2,
                           expansion=6, block_id=6, skip_connection=False)
    x = inverted_res_block(x, filters=64, alpha=alpha, stride=1,
                           expansion=6, block_id=7, skip_connection=True)
    x = inverted_res_block(x, filters=64, alpha=alpha, stride=1,
                           expansion=6, block_id=8, skip_connection=True)
    x = inverted_res_block(x, filters=64, alpha=alpha, stride=1,
                           expansion=6, block_id=9, skip_connection=True)

    # 14,14,64 -> 14,14,96
    x = inverted_res_block(x, filters=96, alpha=alpha, stride=1,
                           expansion=6, block_id=10, skip_connection=False)
    x = inverted_res_block(x, filters=96, alpha=alpha, stride=1,
                           expansion=6, block_id=11, skip_connection=True)
    x = inverted_res_block(x, filters=96, alpha=alpha, stride=1,
                           expansion=6, block_id=12, skip_connection=True)

    # 14,14,96 -> 7,7,160
    x = inverted_res_block(x, filters=160, alpha=alpha, stride=2,
                           expansion=6, block_id=13, skip_connection=False)
    x = inverted_res_block(x, filters=160, alpha=alpha, stride=1,
                           expansion=6, block_id=14, skip_connection=True)
    x = inverted_res_block(x, filters=160, alpha=alpha, stride=1,
                           expansion=6, block_id=15, skip_connection=True)

    # 7,7,160 -> 7,7,320
    x = inverted_res_block(x, filters=320, alpha=alpha, stride=1,
                           expansion=6, block_id=16, skip_connection=False)

    if alpha > 1.0:
        last_block_filters = make_divisible(1280 * alpha, 8)
    else:
        last_block_filters = 1280

    # 7,7,320 -> 7,7,1280
    x = Conv2D(last_block_filters,
               kernel_size=1,
               use_bias=False,
               name='Conv_1')(x)
    x = BatchNormalization(epsilon=1e-3,
                           momentum=0.999,
                           name='Conv_1_bn')(x)
    x = Activation(relu6, name='out_relu')(x)

    # Build the classification head only when include_top is set, so that
    # the layers match the '_no_top' weight file otherwise.
    if include_top:
        x = GlobalAveragePooling2D()(x)
        x = Dense(classes, activation='softmax',
                  use_bias=True, name='Logits')(x)

    inputs = img_input

    model = Model(inputs, x, name='mobilenetv2_%0.2f_%s' % (alpha, rows))

    # Load weights.
    if weights == 'imagenet':
        suffix = '.h5' if include_top else '_no_top.h5'
        model_name = ('mobilenet_v2_weights_tf_dim_ordering_tf_kernels_' +
                      str(alpha) + '_' + str(rows) + suffix)
        weights_path = get_file(model_name, BASE_WEIGHT_PATH + model_name,
                                cache_subdir='models')
        model.load_weights(weights_path)
    elif weights is not None:
        model.load_weights(weights)

    return model
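
As a sanity check on the shape comments in the listing, the sketch below replays the same sequence of blocks in plain Python and tracks only the spatial size and channel count. The STAGES table mirrors the filters and stride arguments of the 17 inverted_res_block calls above for alpha = 1.0. The names STAGES and trace_shapes are invented here, and even input sizes are assumed, so every stride-2 layer halves the size exactly.

```python
# (filters, stride, repeats) for the inverted residual stages at alpha = 1.0.
STAGES = [
    (16, 1, 1),
    (24, 2, 2),
    (32, 2, 3),
    (64, 2, 4),
    (96, 1, 3),
    (160, 2, 3),
    (320, 1, 1),
]


def trace_shapes(size=224):
    """Yield (layer name, spatial size, channels) through the network body."""
    size //= 2  # stem: 3x3 conv, stride 2
    channels = 32
    yield "Conv1", size, channels
    block_id = 0
    for filters, stride, repeats in STAGES:
        for i in range(repeats):
            if i == 0:
                size //= stride  # only the first block of a stage may downsample
            channels = filters
            yield f"block_{block_id}", size, channels
            block_id += 1
    yield "Conv_1", size, 1280


if __name__ == "__main__":
    for name, size, channels in trace_shapes():
        print(f"{name:9s} {size}x{size}x{channels}")
```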