循环神经网络的简洁实现

这里写目录标题

- 前言

- 具体实现

-

- 加载数据

- 相邻采样

- 梯度修剪

- one-hot编码

- 参数

- 定义模型

- 后记

前言

- 本文用于记录循环神经网络的简单实现及个人笔记,防止遗忘,内容来自https://tangshusen.me/Dive-into-DL-PyTorch/#/chapter06_RNN/6.5_rnn-pytorch

具体实现

加载数据

- 加载数据,同时将字符转化为索引

def load_data_jay_lyrics():

corpus_chars=open('jaychou_lyrics.txt',encoding='utf-8').read()

# corpus_chars[:40] '想要有直升机\n想要和你飞到宇宙去\n想要和你融化在一起\n融化在宇宙里\n我每天每天每'

# 这个数据集有6万多个字符。为了打印方便,我们把换行符替换成空格,然后仅使用前1万个字符来训练模型。

corpus_chars=corpus_chars.replace('\n',' ').replace('\r',' ')

corpus_chars=corpus_chars[:10000]

# corpus_chars[:40] '想要有直升机 想要和你飞到宇宙去 想要和你融化在一起 融化在宇宙里 我每天每天每'

# 建立字符索引

idx_to_char=list(set(corpus_chars))

# print(len(idx_to_char)) 1027

char_to_idx=dict([(char,i) for i,char in enumerate(idx_to_char)])

vocab_size=len(char_to_idx)

# 将训练数据集中每个字符转化为索引,并打印前20个字符及其对应的索引。

corpus_indices = [char_to_idx[char] for char in corpus_chars]

# sample = corpus_indices[:20]

# print('chars:', ''.join([idx_to_char[idx] for idx in sample]))

# print('indices:', sample)

# chars: 想要有直升机 想要和你飞到宇宙去 想要和

# indices: [465, 568, 415, 6, 329, 512, 337, 465, 568, 73, 904, 454, 372, 28, 613, 85, 337, 465, 568, 73]

return corpus_indices,char_to_idx,idx_to_char,vocab_size

- corpus_indices:[170, 539, 969, 48, 94, 576, 253, 170, 539, 49, 903, 157, 530, 334, 577, 332, 253, 170, 539, 49……]对应的就是[想 要 有 直 升 机 想 要 和 你 飞 到 宇 宙 去 想 要 和 你 融 化 在 一 起 ]的id

- char_to_idx:是乱序字典,给定char返回id

- idx_to_char:是乱序列表,给定id返回char

- vocab_size:字典大小

相邻采样

- 首先明白要采什么样,要采样[batch_size,num_steps]的数据,所谓相邻其实就是两次采样之间的数据是连续的,相邻采样其实就是先把整个的数据集corpus_indices分成[batch_size,-1]的形状,然后每次都在第二维上选num_steps列,即行全要,就是batch_size,然后列每次取num_steps列数据。

# 相邻采样

def data_iter_consecutive(corpus_indices, batch_size, num_steps, device=None):

if device is None:

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

corpus_indices = torch.tensor(corpus_indices, dtype=torch.float32, device=device)

data_len = len(corpus_indices)

batch_len = data_len // batch_size

indices = corpus_indices[0: batch_size*batch_len].view(batch_size, batch_len)

epoch_size = (batch_len - 1) // num_steps

for i in range(epoch_size):

i = i * num_steps

X = indices[:, i: i + num_steps]

Y = indices[:, i + 1: i + num_steps + 1]

yield X, Y

梯度修剪

- 循环神经网络中较容易出现梯度衰减或梯度爆炸。我们会在6.6节(通过时间反向传播)中解释原因。为了应对梯度爆炸,我们可以裁剪梯度(clip gradient)。假设我们把所有模型参数梯度的元素拼接成一个向量 g,并设裁剪的阈值是θ。裁剪后的梯度

的L2范数不超过θ。

# 梯度修剪

def grad_clipping(params, theta, device):

norm = torch.tensor([0.0], device=device)

for param in params:

norm += (param.grad.data ** 2).sum()

norm = norm.sqrt().item()

if norm > theta:

for param in params:

param.grad.data *= (theta / norm)

one-hot编码

- 该例用的独热编码,不多说

def one_hot(x, n_class, dtype=torch.float32):

# X shape: (batch), output shape: (batch, n_class)

x = x.long()

res = torch.zeros(x.shape[0], n_class, dtype=dtype, device=x.device)

res.scatter_(1, x.view(-1, 1), 1)

return res

# 本函数已保存在d2lzh_pytorch包中方便以后使用

def to_onehot(X, n_class):

# X shape: (batch, seq_len), output: seq_len elements of (batch, n_class)

return [one_hot(X[:, i], n_class) for i in range(X.shape[1])]

参数

- 由于采用的独热编码,所以nn.RNN的input_size=vocab_size,即词典的尺寸。

device=torch.device('cuda' if torch.cuda.is_available() else 'cpu')

(corpus_indices, char_to_idx, idx_to_char, vocab_size) = load_data_jay_lyrics()

num_hiddens = 256

# rnn_layer = nn.LSTM(input_size=vocab_size, hidden_size=num_hiddens) # 已测试

rnn_layer = nn.RNN(input_size=vocab_size, hidden_size=num_hiddens)

num_steps = 35

batch_size = 2

state = None

X = torch.rand(num_steps, batch_size, vocab_size)

Y, state_new = rnn_layer(X, state)

定义模型

# 本类已保存在d2lzh_pytorch包中方便以后使用

class RNNModel(nn.Module):

def __init__(self, rnn_layer, vocab_size):

super(RNNModel, self).__init__()

self.rnn = rnn_layer

self.hidden_size = rnn_layer.hidden_size * (2 if rnn_layer.bidirectional else 1)

self.vocab_size = vocab_size

self.dense = nn.Linear(self.hidden_size, vocab_size)

self.state = None

def forward(self, inputs, state): # inputs: (batch, seq_len)

# 获取one-hot向量表示

X = to_onehot(inputs, self.vocab_size) # X是个list

Y, self.state = self.rnn(torch.stack(X), state)

# 全连接层会首先将Y的形状变成(num_steps * batch_size, num_hiddens),它的输出

# 形状为(num_steps * batch_size, vocab_size)

output = self.dense(Y.view(-1, Y.shape[-1]))

return output, self.state

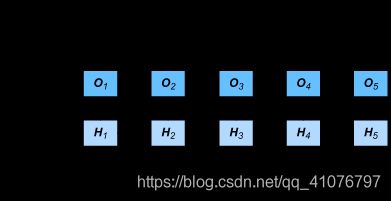

- 上面inputs的形状是[batch_size,num_steps],经过to_onehot之后,变为X:[num_steps,batch_size,vocab_size],但是X是个列表,形状类似

[tensor([[0.7861, 0.7531, 0.2099],

[0.8453, 0.1873, 0.4204]]), tensor([[0.7861, 0.7531, 0.2099],

[0.8453, 0.1873, 0.4204]])]

即只有最外层是列表,里面是tensor,然后送入rnn。其中的torch.stack其实就是把列表变成tensor,形状如下:

tensor([[[0.1195, 0.5092, 0.8881],

[0.6585, 0.2135, 0.9743]],

[[0.1195, 0.5092, 0.8881],

[0.6585, 0.2135, 0.9743]]]) - 然后我们看看rnn里发生了什么:

rnn里实现了两个过程,一是对于每个字的纵向预测过程,二是使用前面的经验(即隐藏状态)来生成后面字的预测。

下面是rnn过程的实现:

num_inputs, num_hiddens, num_outputs = vocab_size, 256, vocab_size

print('will use', device)

def get_params():

def _one(shape):

ts = torch.tensor(np.random.normal(0, 0.01, size=shape), device=device, dtype=torch.float32)

return torch.nn.Parameter(ts, requires_grad=True)

# 隐藏层参数

W_xh = _one((num_inputs, num_hiddens))

W_hh = _one((num_hiddens, num_hiddens))

b_h = torch.nn.Parameter(torch.zeros(num_hiddens, device=device, requires_grad=True))

# 输出层参数

W_hq = _one((num_hiddens, num_outputs))

b_q = torch.nn.Parameter(torch.zeros(num_outputs, device=device, requires_grad=True))

return nn.ParameterList([W_xh, W_hh, b_h, W_hq, b_q])

上面是生成参数的函数,下面是初始化隐藏状态

def init_rnn_state(batch_size, num_hiddens, device):

return (torch.zeros((batch_size, num_hiddens), device=device), )

下面是rnn的实现过程:

def rnn(inputs, state, params):

# inputs和outputs皆为num_steps个形状为(batch_size, vocab_size)的矩阵

W_xh, W_hh, b_h, W_hq, b_q = params

H, = state

outputs = []

for X in inputs:

H = torch.tanh(torch.matmul(X, W_xh) + torch.matmul(H, W_hh) + b_h)

Y = torch.matmul(H, W_hq) + b_q

outputs.append(Y)

return outputs, (H,)

我们可以看出,每个for循环是从inputs中拿出一个[batch_size,vocab_size],也就是说每一次循环就是进行一个字的纵向计算。

- 然后我们看到

output = self.dense(Y.view(-1, Y.shape[-1]))

首先把Y从[num_steps,batch_size,vocab_size]变为[num_steps*batch_size,vocab_size],然后放入预测层,是一个全连接层,最终输出是(num_steps * batch_size, vocab_size)

- 再有,

self.hidden_size = rnn_layer.hidden_size * (2 if rnn_layer.bidirectional else 1)

假如是双向的,即lstm,那么,隐藏层尺寸是二倍,因为有来自前后的隐藏层。

后记

- 文章写得比较乱,主要是在看了Dive-into-DL-PyTorch之后做的笔记,再复习的时候可以更快的领会。