Notes on 《Web安全之深度学习实战》: Chapter 3, Recurrent Neural Networks

This chapter covers the basic usage of RNNs.

1. Classifying movie reviews with an LSTM

This example classifies movie reviews from the imdb.pkl dataset using an LSTM.

The code is as follows:

    from __future__ import division, print_function, absolute_import

    import tflearn
    from tflearn.data_utils import to_categorical, pad_sequences
    from tflearn.datasets import imdb

    # IMDB dataset loading. load_data returns (train, valid, test); the 10%
    # validation split is bound to the name `test` here and the real test
    # split is discarded. A raw string keeps the Windows backslashes literal.
    train, test, _ = imdb.load_data(path=r'c:\data\imdb\imdb.pkl', n_words=10000,
                                    valid_portion=0.1)
    trainX, trainY = train
    testX, testY = test


    def lstm(trainX, trainY, testX, testY):
        # Data preprocessing
        # Sequence padding: pad or truncate every review to 100 word ids
        trainX = pad_sequences(trainX, maxlen=100, value=0.)
        testX = pad_sequences(testX, maxlen=100, value=0.)
        # Converting labels to binary (one-hot) vectors
        trainY = to_categorical(trainY, nb_classes=2)
        testY = to_categorical(testY, nb_classes=2)

        # Network building: embedding -> LSTM -> softmax classifier
        net = tflearn.input_data([None, 100])
        net = tflearn.embedding(net, input_dim=10000, output_dim=128)
        net = tflearn.lstm(net, 128, dropout=0.8)
        net = tflearn.fully_connected(net, 2, activation='softmax')
        net = tflearn.regression(net, optimizer='adam', learning_rate=0.001,
                                 loss='categorical_crossentropy')

        # Training
        model = tflearn.DNN(net, tensorboard_verbose=0)
        model.fit(trainX, trainY, validation_set=(testX, testY), show_metric=True,
                  batch_size=32, run_id="rnn-lstm")


    lstm(trainX, trainY, testX, testY)

The output is as follows:

| Adam | epoch: 001 | loss: 0.48533 - acc: 0.7824 | val_loss: 0.48509 - val_acc: 0.7832 -- iter: 22500/22500

| Adam | epoch: 002 | loss: 0.31607 - acc: 0.8826 | val_loss: 0.43608 - val_acc: 0.8088 -- iter: 22500/22500

| Adam | epoch: 003 | loss: 0.21343 - acc: 0.9283 | val_loss: 0.45056 - val_acc: 0.8192 -- iter: 22500/22500

| Adam | epoch: 004 | loss: 0.18641 - acc: 0.9394 | val_loss: 0.47450 - val_acc: 0.8212 -- iter: 22500/22500

| Adam | epoch: 005 | loss: 0.13402 - acc: 0.9530 | val_loss: 0.61099 - val_acc: 0.8116 -- iter: 22500/22500

| Adam | epoch: 006 | loss: 0.10798 - acc: 0.9615 | val_loss: 0.60526 - val_acc: 0.8052 -- iter: 22500/22500

| Adam | epoch: 007 | loss: 0.09115 - acc: 0.9816 | val_loss: 0.65701 - val_acc: 0.8048 -- iter: 22500/22500

| Adam | epoch: 008 | loss: 0.07989 - acc: 0.9794 | val_loss: 0.66359 - val_acc: 0.8032 -- iter: 22500/22500

| Adam | epoch: 009 | loss: 0.07500 - acc: 0.9831 | val_loss: 0.76490 - val_acc: 0.7984 -- iter: 22500/22500

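| Adam | epoch: 010 | loss: 0.08060 - acc: 0.9807 | val_loss: 0.75649 - val_acc: 0.7988 -- iter: 22500/22500

Over these 10 epochs the training accuracy is very high, but accuracy on the held-out set is noticeably lower, which points to weak generalization. Worse, the validation accuracy barely moves across epochs (it stays around 0.80), while the validation loss actually rises from 0.48509 to 0.75649.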
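To make the observation about the validation metrics concrete, the small sketch below parses the log lines shown above and reports the epoch with the lowest validation loss and the epoch with the highest validation accuracy. The helper name `parse_log` and the regular expression are illustrative assumptions, not part of TFLearn; only the numbers come from the log.

```python
import re

# Log lines copied from the LSTM run above.
LOG = """\
| Adam | epoch: 001 | loss: 0.48533 - acc: 0.7824 | val_loss: 0.48509 - val_acc: 0.7832 -- iter: 22500/22500
| Adam | epoch: 002 | loss: 0.31607 - acc: 0.8826 | val_loss: 0.43608 - val_acc: 0.8088 -- iter: 22500/22500
| Adam | epoch: 003 | loss: 0.21343 - acc: 0.9283 | val_loss: 0.45056 - val_acc: 0.8192 -- iter: 22500/22500
| Adam | epoch: 004 | loss: 0.18641 - acc: 0.9394 | val_loss: 0.47450 - val_acc: 0.8212 -- iter: 22500/22500
| Adam | epoch: 005 | loss: 0.13402 - acc: 0.9530 | val_loss: 0.61099 - val_acc: 0.8116 -- iter: 22500/22500
| Adam | epoch: 006 | loss: 0.10798 - acc: 0.9615 | val_loss: 0.60526 - val_acc: 0.8052 -- iter: 22500/22500
| Adam | epoch: 007 | loss: 0.09115 - acc: 0.9816 | val_loss: 0.65701 - val_acc: 0.8048 -- iter: 22500/22500
| Adam | epoch: 008 | loss: 0.07989 - acc: 0.9794 | val_loss: 0.66359 - val_acc: 0.8032 -- iter: 22500/22500
| Adam | epoch: 009 | loss: 0.07500 - acc: 0.9831 | val_loss: 0.76490 - val_acc: 0.7984 -- iter: 22500/22500
| Adam | epoch: 010 | loss: 0.08060 - acc: 0.9807 | val_loss: 0.75649 - val_acc: 0.7988 -- iter: 22500/22500
"""

# Hypothetical pattern for this log format: epoch, val_loss, val_acc.
PATTERN = re.compile(r"epoch: (\d+) .*val_loss: ([\d.]+) - val_acc: ([\d.]+)")


def parse_log(text):
    """Return a list of (epoch, val_loss, val_acc) tuples."""
    rows = []
    for line in text.splitlines():
        match = PATTERN.search(line)
        if match:
            rows.append((int(match.group(1)),
                         float(match.group(2)),
                         float(match.group(3))))
    return rows


rows = parse_log(LOG)
best_loss = min(rows, key=lambda r: r[1])  # epoch with the lowest val_loss
best_acc = max(rows, key=lambda r: r[2])   # epoch with the highest val_acc
print("lowest val_loss  (epoch, val_loss, val_acc):", best_loss)
print("highest val_acc  (epoch, val_loss, val_acc):", best_acc)
```

Both optima fall within the first four epochs, so stopping training early would probably lose little here; that is a reading of this single run, not a general rule.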

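2. Classifying movie reviews with a bidirectional LSTM

This example also classifies movie reviews from the imdb.pkl dataset, this time using a bidirectional LSTM (bi-lstm).

The code is as follows: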

    from __future__ import division, print_function, absolute_import

    import tflearn
    from tflearn.data_utils import to_categorical, pad_sequences
    from tflearn.datasets import imdb
    from tflearn.layers.recurrent import bidirectional_rnn, BasicLSTMCell

    # IMDB dataset loading (same split as in the LSTM example)
    train, test, _ = imdb.load_data(path=r'c:\data\imdb\imdb.pkl', n_words=10000,
                                    valid_portion=0.1)
    trainX, trainY = train
    testX, testY = test


    def bi_lstm(trainX, trainY, testX, testY):
        # Sequence padding: pad or truncate every review to 200 word ids
        trainX = pad_sequences(trainX, maxlen=200, value=0.)
        testX = pad_sequences(testX, maxlen=200, value=0.)
        # Converting labels to binary (one-hot) vectors
        trainY = to_categorical(trainY, nb_classes=2)
        testY = to_categorical(testY, nb_classes=2)

        # Network building: embedding -> forward/backward LSTM -> dropout -> softmax.
        # input_dim=20000 is larger than n_words=10000; the extra embedding
        # rows are simply never used.
        net = tflearn.input_data(shape=[None, 200])
        net = tflearn.embedding(net, input_dim=20000, output_dim=128)
        net = tflearn.bidirectional_rnn(net, BasicLSTMCell(128), BasicLSTMCell(128))
        net = tflearn.dropout(net, 0.5)
        net = tflearn.fully_connected(net, 2, activation='softmax')
        net = tflearn.regression(net, optimizer='adam', loss='categorical_crossentropy')

        # Training. validation_set=0.1 holds out 10% of trainX for validation,
        # so testX/testY are not used during fit.
        model = tflearn.DNN(net, clip_gradients=0., tensorboard_verbose=2)
        model.fit(trainX, trainY, validation_set=0.1, show_metric=True,
                  batch_size=64, run_id="rnn-bilstm")


    bi_lstm(trainX, trainY, testX, testY)

The output is as follows:

| Adam | epoch: 001 | loss: 0.66180 - acc: 0.6439 | val_loss: 0.70163 - val_acc: 0.5142 -- iter: 20250/20250

| Adam | epoch: 002 | loss: 0.62416 - acc: 0.7158 | val_loss: 0.85242 - val_acc: 0.5764 -- iter: 20250/20250

| Adam | epoch: 003 | loss: 0.59404 - acc: 0.6370 | val_loss: 0.65801 - val_acc: 0.5809 -- iter: 20250/20250

| Adam | epoch: 004 | loss: 0.47706 - acc: 0.8007 | val_loss: 0.57805 - val_acc: 0.7427 -- iter: 20250/20250

| Adam | epoch: 005 | loss: 0.53896 - acc: 0.7441 | val_loss: 0.61494 - val_acc: 0.6960 -- iter: 20250/20250

| Adam | epoch: 006 | loss: 0.42812 - acc: 0.8276 | val_loss: 0.57085 - val_acc: 0.7542 -- iter: 20250/20250

| Adam | epoch: 007 | loss: 0.38480 - acc: 0.8393 | val_loss: 0.66420 - val_acc: 0.7222 -- iter: 20250/20250

| Adam | epoch: 008 | loss: 0.38083 - acc: 0.8644 | val_loss: 0.63575 - val_acc: 0.7578 -- iter: 20250/20250

| Adam | epoch: 009 | loss: 0.36859 - acc: 0.8441 | val_loss: 0.55491 - val_acc: 0.7453 -- iter: 20250/20250

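| Adam | epoch: 010 | loss: 0.30381 - acc: 0.8878 | val_loss: 0.52895 - val_acc: 0.7751 -- iter: 20250/20250

As with the LSTM, training accuracy over these 10 epochs is relatively good while accuracy on the held-out data lags behind, so generalization is still weak. The improvement over the LSTM run is that the validation accuracy does rise over the epochs (from 0.5142 to 0.7751) and the validation loss trends downward (from 0.70163 to 0.52895).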
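One rough way to put a number on "generalization" for the two runs is the gap between training accuracy and validation accuracy at the final epoch. The sketch below uses only the epoch-10 values from the two logs; the helper `generalization_gap` is a hypothetical name introduced for illustration. The comparison is approximate, since the two runs use different validation splits and different sequence lengths.

```python
# Final-epoch (epoch 10) metrics copied from the two training logs.
final = {
    "lstm": {"acc": 0.9807, "val_acc": 0.7988},
    "bi_lstm": {"acc": 0.8878, "val_acc": 0.7751},
}


def generalization_gap(metrics):
    """Training accuracy minus validation accuracy."""
    return metrics["acc"] - metrics["val_acc"]


# Round to 4 decimals to hide floating-point noise in the subtraction.
gaps = {name: round(generalization_gap(m), 4) for name, m in final.items()}
for name, gap in gaps.items():
    print(name, "train/validation accuracy gap:", gap)
```

The bi-lstm run shows the smaller gap, although its validation accuracy is also slightly lower in absolute terms, so neither model is clearly better on this evidence alone.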

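3. Writing like Shakespeare

This section trains a model on Shakespeare's texts so that it can generate new text automatically. The underlying technique is char-rnn, implemented here with tflearn.SequenceGenerator from TFLearn, which runs on top of TensorFlow.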
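A char-rnn is trained to predict the next character from a fixed-length window of preceding characters. The sketch below illustrates, in plain Python, how overlapping ("semi-redundant") windows and their next-character targets can be cut from a text and one-hot encoded. It mirrors the idea behind the `seq_maxlen` and `redun_step` parameters used in this section, but it is not TFLearn's implementation; the function names and the sample sentence are assumptions made for the illustration.

```python
def semi_redundant_sequences(text, seq_maxlen=25, redun_step=3):
    """Cut `text` into overlapping windows plus the character after each one."""
    chars = sorted(set(text))
    char_idx = {c: i for i, c in enumerate(chars)}  # character -> integer id
    windows, next_chars = [], []
    # Slide a window of seq_maxlen characters, moving redun_step at a time.
    for start in range(0, len(text) - seq_maxlen, redun_step):
        windows.append(text[start:start + seq_maxlen])
        next_chars.append(text[start + seq_maxlen])
    return windows, next_chars, char_idx


def one_hot(index, size):
    """Return a list of `size` zeros with a single 1 at `index`."""
    vec = [0] * size
    vec[index] = 1
    return vec


# Tiny demo with a short window so the result is easy to inspect.
text = "to be or not to be"
windows, next_chars, char_idx = semi_redundant_sequences(
    text, seq_maxlen=5, redun_step=3)

# X has shape [n_windows][seq_maxlen][n_chars]; Y has shape [n_windows][n_chars].
X = [[one_hot(char_idx[c], len(char_idx)) for c in w] for w in windows]
Y = [one_hot(char_idx[c], len(char_idx)) for c in next_chars]

for window, target in zip(windows, next_chars):
    print(repr(window), "->", repr(target))
```

A smaller step produces more, and more heavily overlapping, training samples from the same text, at the cost of memory and training time.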

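In 《Web安全之机器学习入门》, sections 16.4 and 16.6 of Chapter 16 both use this generation feature: 16.4 generates common city names and 16.6 generates common passwords. The corresponding notes are:

Notes on 《Web安全之机器学习入门》: Chapter 16, 16.4 Generating city names

Notes on 《Web安全之机器学习入门》: Chapter 16, 16.6 Generating common passwords

The Shakespeare-writing code for this section is as follows: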

    from __future__ import division, print_function, absolute_import

    import os
    import pickle

    from six.moves import urllib
    import tflearn
    from tflearn.data_utils import (textfile_to_semi_redundant_sequences,
                                    random_sequence_from_textfile)


    def shakespeare():
        path = r"c:\data\shakespeare\shakespeare_input.txt"
        # path = "shakespeare_input-100.txt"
        char_idx_file = 'char_idx.pickle'

        # Download the corpus if it is not available locally
        if not os.path.isfile(path):
            urllib.request.urlretrieve(
                "https://raw.githubusercontent.com/tflearn/tflearn.github.io/master/resources/shakespeare_input.txt", path)

        maxlen = 25

        # Reuse a previously saved character dictionary if there is one
        char_idx = None
        if os.path.isfile(char_idx_file):
            print('Loading previous char_idx')
            with open(char_idx_file, 'rb') as f:
                char_idx = pickle.load(f)

        # Build overlapping 25-character windows (X) and next-character targets (Y)
        X, Y, char_idx = \
            textfile_to_semi_redundant_sequences(path, seq_maxlen=maxlen, redun_step=3,
                                                 pre_defined_char_idx=char_idx)

        with open(char_idx_file, 'wb') as f:
            pickle.dump(char_idx, f)

        # Network building: three stacked LSTM layers, each followed by dropout
        g = tflearn.input_data([None, maxlen, len(char_idx)])
        g = tflearn.lstm(g, 512, return_seq=True)
        g = tflearn.dropout(g, 0.5)
        g = tflearn.lstm(g, 512, return_seq=True)
        g = tflearn.dropout(g, 0.5)
        g = tflearn.lstm(g, 512)
        g = tflearn.dropout(g, 0.5)
        g = tflearn.fully_connected(g, len(char_idx), activation='softmax')
        g = tflearn.regression(g, optimizer='adam', loss='categorical_crossentropy',
                               learning_rate=0.001)

        m = tflearn.SequenceGenerator(g, dictionary=char_idx,
                                      seq_maxlen=maxlen,
                                      clip_gradients=5.0,
                                      checkpoint_path='model_shakespeare')

        # Train one epoch at a time and generate sample text after each epoch
        for i in range(50):
            seed = random_sequence_from_textfile(path, maxlen)
            m.fit(X, Y, validation_set=0.1, batch_size=128,
                  n_epoch=1, run_id='shakespeare')
            print("-- TESTING...")
            print("-- Test with temperature of 1.0 --")
            print(m.generate(600, temperature=1.0, seq_seed=seed))
            # print(m.generate(10, temperature=1.0, seq_seed=seed))
            print("-- Test with temperature of 0.5 --")
            print(m.generate(600, temperature=0.5, seq_seed=seed))


    shakespeare()

As the code shows, sample text is generated after each of the 50 epochs. Each epoch is very time-consuming, however, and my old laptop was too slow to finish the full run. For reference, here is the output after the first epoch, taken from someone else's run of this program:

THAISA:

Why, sir, say if becel; sunthy alot but of

coos rytermelt, buy -

bived with wond I saTt fas,'? You and grigper.

FIENDANS:

By my wordhand!

KING RECENTEN:

Wish sterest expeun The siops so his fuurs,

And emour so, ane stamn.

she wealiwe muke britgie; I dafs tpichicon, bist,

Turch ose be fast wirpest neerenler.

NONTo:

So befac, sels at, Blove and rackity;

The senent stran spard: and, this not you so the wount

hor hould batil's toor wate

What if a poostit's of bust contot;

Whit twetemes, Game ifon I am

Ures the fast to been'd matter:

To and lause. Tiess her jittarss,

Let concertaet ar: and not!

Not fearle her g

Output after the tenth epoch:

PEMBROKE:

There tell the elder pieres,

Would our pestilent shapeing sebaricity. So have partned in me, Project of Yorle

again, and then when you set man

make plash'd of her too sparent

upon this father be dangerous puny or house;

Born is now been left of himself,

This true compary nor no stretches, back that

Horses had hand or question!

POLIXENES:

I have unproach the strangest

padely carry neerful young Yir,

Or hope not fall-a a cause of banque.

JESSICA:

He that comes to find the just,

And eyes gold, substrovious;

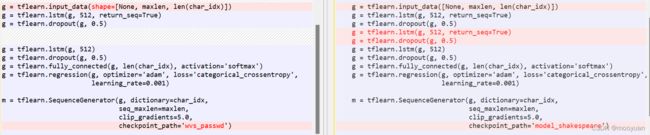

Yea pity a god on a foul rioness, these tebles and purish new head meet again?我在这里对比莎士比亚写作模型与生成密码模型的对比图,如下所示:

The figure below compares the Shakespeare-writing model with the city-name generation model:

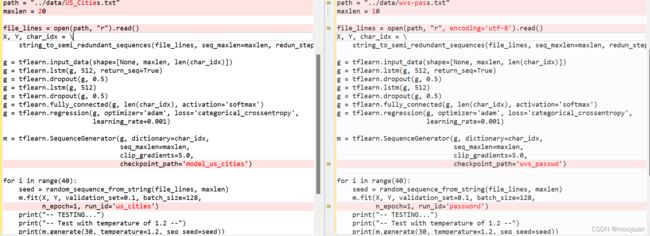

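Comparing the password-generation model with the city-name generation model shows that, apart from the dataset used for training, the two follow essentially the same logic, as shown below: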


As the code comparison below shows, the city-name and password generators use the following model:

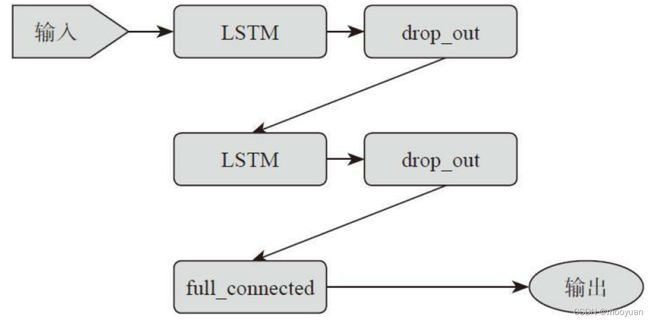

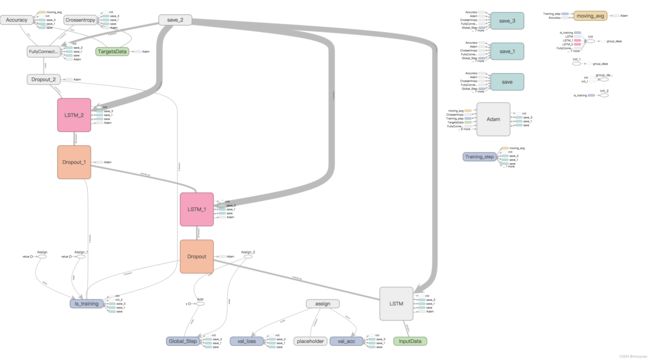

Compared with those two, the Shakespeare model has one more LSTM layer and one more dropout layer, as illustrated below:
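The difference in depth can be sketched with a small, framework-free helper that lists the layers of a stacked-LSTM generator. The function `build_stack` is hypothetical and only produces descriptive strings. The three-LSTM stack matches the Shakespeare listing in this section, while the two-LSTM stack for the city-name and password models is an assumption inferred from the statement that the Shakespeare model has one more LSTM and one more dropout layer.

```python
def build_stack(n_lstm, units=512, keep_prob=0.5):
    """Describe a stack of n_lstm LSTM layers, each followed by dropout."""
    layers = []
    for i in range(n_lstm):
        last = (i == n_lstm - 1)
        # Every LSTM except the last returns the full sequence so that the
        # next LSTM layer receives one input per time step.
        layers.append(f"lstm({units}, return_seq={not last})")
        layers.append(f"dropout({keep_prob})")
    layers.append("fully_connected(len(char_idx), activation='softmax')")
    return layers


# Assumed stack for the city-name and password generators (two LSTM layers).
two_layer_stack = build_stack(2)
# Stack used by the Shakespeare model in this section (three LSTM layers).
shakespeare_stack = build_stack(3)

print("city names / passwords:")
for layer in two_layer_stack:
    print("  ", layer)
print("shakespeare:")
for layer in shakespeare_stack:
    print("  ", layer)
print("extra layers:", len(shakespeare_stack) - len(two_layer_stack))
```

The deeper stack gives the model more capacity for the much larger Shakespeare corpus, which is also part of why each epoch takes so long to train.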

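The goal of this chapter is simply to get hands-on with RNN applications in TensorFlow: classifying movie reviews in two ways and generating Shakespeare-style text with a char-rnn. The book's explanations are not very detailed, and some background is needed to follow both the principles and the applications, so beginners are advised to learn the theory first and then study the applications.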
