aubo_i5机械臂无序抓取实践二:eye_in_hand 手在眼上-手眼标定

步骤

一:安装标定包(realsense,easy_handeye,visp,aruco)

- Eye-to-hand 眼在手外:标定的是相机坐标系相对于机器人基座坐标系的位姿

- Eye-in-hand眼在手上:标定的是相机坐标系相对于机器人工具坐标系的位姿

(1)安装realsense-SDK包

1.源码构建SDK,注册服务器的公钥:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key F6E65AC044F831AC80A06380C8B3A55A6F3EFCDE

如果仍然无法检索到公钥,请检查并指定代理设置:export http_proxy="http://

2.将服务器添加到存储库列表中:

sudo add-apt-repository "deb https://librealsense.intel.com/Debian/apt-repo $(lsb_release -cs) main" -u

3.安装库:

sudo apt-get install librealsense2-dkms

sudo apt-get install librealsense2-utils

4.可选安装开发和调试包:

sudo apt-get install librealsense2-dev

sudo apt-get install librealsense2-dbg

5.重新连接英特尔实感深度摄像头并运行:realsense-viewer以验证安装。

6.验证内核是否已更新:运行以下程序输出应包含realsense字符串

modinfo uvcvideo | grep "version:"

(2)安装realsense-ros

1.建立工作空间

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src/

2.克隆源文件到src文件夹下并编译

git clone https://github.com/IntelRealSense/realsense-ros.git

cd ..

catkin_make

3.设置环境变量

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

4.连接相机测试

roslaunch realsense2_camera rs_camera.launch

紧接着打开一个新终端,输入rviz订阅image,topic设置为depth,即可出图!!

(3)安装aruco包,并编译

cd ~/catkin_ws/src

git clone -b melodic-devel https://github.com/pal-robotics/aruco_ros.git

cd ..

catkin_make

(4)安装visp,并编译visp_hand2eye_calibration这个包,其他包暂时不用全编译

cd ~/catkin_ws/src

git clone -b melodic-devel https://github.com/lagadic/vision_visp.git

cd ..

catkin_make --pkg visp_hand2eye_calibration

(5)安装easy_handeye

cd ~/catkin_ws/src

git clone https://github.com/IFL-CAMP/easy_handeye

cd ..

catkin_make

(6)编译aubo包,这里把上节的aubo_ws/src的aubo_robot复制到catkin_ws/src,重新编译一下即可

(7)下载aruco标定码:https://chev.me/arucogen/

Dictionary:Original ArUco

Marker ID:自己定

Marker size, mm: 100

(8)修改launch文件,easy_handeye里有例程,基于此launch文件进行修改,位置:

easy_handeye/docs/example_launch/ur5e_realsense_calibration.launch

把这个文件复制到easy_handeye/easy_handeye/launch/文件下,并重新命名为eye_to_hand_calibration.launch

原launch文件内容:

<launch>

<arg name="namespace_prefix" default="ur5_kinect_handeyecalibration" />

<arg name="eye_on_hand" default="false" />

<arg name="camera_namespace" default="/camera/color" />

<arg name="robot_ip" doc="The IP address of the UR5 robot" default="101.101.101.12" />

<arg name="marker_size" doc="Size of the ArUco marker used, in meters" default="0.016" />

<arg name="square_size" doc="The ID of the ArUco marker used" default="0.024" />

<arg name="square_number_x" default="7" />

<arg name="square_number_y" default="9" />

<!-- start the realsense -->

<include file="$(find realsense2_camera)/launch/rs_rgbd.launch" >

<arg name="color_height" value="1080" />

<arg name="color_width" value="1920" />

<arg name="color_fps" value="30" />

</include>

<!-- start ArUco -->

<node name="easy_aruco_node" pkg="easy_aruco" type="easy_aruco_node">

<param name="object_type" value="charuco_board" />

<param name="camera_namespace" value="$(arg camera_namespace)" />

<param name="dictionary" value="DICT_6X6_250" />

<param name="camera_frame" value="camera_color_optical_frame" />

<param name="reference_frame" value="camera_color_optical_frame" />

<param name="marker_size" value="$(arg marker_size)" />

<param name="square_size" value="$(arg square_size)" />

<param name="square_number_x" value="$(arg square_number_x)" />

<param name="square_number_y" value="$(arg square_number_y)" />

</node>

<!-- start the robot (using https://github.com/UniversalRobots/Universal_Robots_ROS_Driver) -->

<include file="$(find ur_robot_driver)/launch/ur5e_bringup.launch">

<arg name="robot_ip" value="$(arg robot_ip)" />

<!-- <arg name="kinematics_config" value="path/to/ur5e_calibration.yaml" />-->

</include>

<include file="$(find ur5e_moveit_config)/launch/ur5e_moveit_planning_execution.launch" />

<include file="$(find ur5e_moveit_config)/launch/moveit_rviz.launch" >

<arg name="rviz_config" value="$(find ur5e_moveit_config)/launch/moveit.rviz" />

</include>

<!-- start easy_handeye -->

<include file="$(find easy_handeye)/launch/calibrate.launch" >

<arg name="namespace_prefix" value="$(arg namespace_prefix)" />

<arg name="eye_on_hand" value="$(arg eye_on_hand)" />

<arg name="tracking_base_frame" value="camera_color_optical_frame" />

<arg name="tracking_marker_frame" value="board" />

<arg name="robot_base_frame" value="base_link" />

<arg name="robot_effector_frame" value="tool0" />

<arg name="freehand_robot_movement" value="true" />

<arg name="robot_velocity_scaling" value="0.5" />

<arg name="robot_acceleration_scaling" value="0.2" />

<arg name="translation_delta_meters" default="0.05" />

<arg name="rotation_delta_degrees" default="25" />

</include>

</launch>

修改后的launch文件:

<launch>

<arg name="namespace_prefix" default="aubo_i5_realsense_handeye_calibration" />

<!-- ip地址根据自己机械臂的地址而定 -->

<arg name="robot_ip" doc="The IP address of the aubo_i5 robot" default=”192.168.99.254” />

<arg name="marker_size" doc="Size of the ArUco marker used, in meters" default="0.1" />

<arg name="marker_id" doc="The ID of the ArUco marker used" default="53"/>

<!-- 1. start the Realsense435 -->

<include file="$(find realsense2_camera)/launch/rs_camera.launch" />

<!-- 2. start ArUco -->

<node name="aruco_tracker" pkg="aruco_ros" type="single">

<remap from="/camera_info" to="/camera/color/camera_info" />

<remap from="/image" to="/camera/color/image_raw" />

<param name="image_is_rectified" value="true"/>

<!--marker_size和marker_id根据下载的参数修改 -->

<param name="marker_size" value="$(arg marker_size)"/>

<param name="marker_id" value="$(arg marker_id)"/>

<param name="reference_frame" value="camera_color_frame"/>

<param name="camera_frame" value="camera_color_frame"/>

<param name="marker_frame" value="camera_marker" />

</node>

<!-- 3. start the robot -->

<include file="$(find aubo_i5_moveit_config)/launch/moveit_planning_execution.launch">

<arg name="sim" value="false" />

<arg name="robot_ip" value="192.168.99.254" />

</include>

<!-- 4. start easy_handeye -->

<include file="$(find easy_handeye)/launch/calibrate.launch" >

<arg name="namespace_prefix" value="$(arg namespace_prefix)" />

<!-- 眼在手上:value=true;眼在手外:value=false -->

<arg name="eye_on_hand" value="true" />

<arg name="tracking_base_frame" value="camera_color_frame" />

<arg name="tracking_marker_frame" value="camera_marker" />

<arg name="robot_base_frame" value="base" />

<arg name="freehand_robot_movement" value="false" />

<arg name="robot_velocity_scaling" value="0.5" />

<arg name="robot_acceleration_scaling" value="0.2" />

</include>

</launch>

(9)开始标定

roslaunch easy_handeye eye_to_hand_calibration.launch

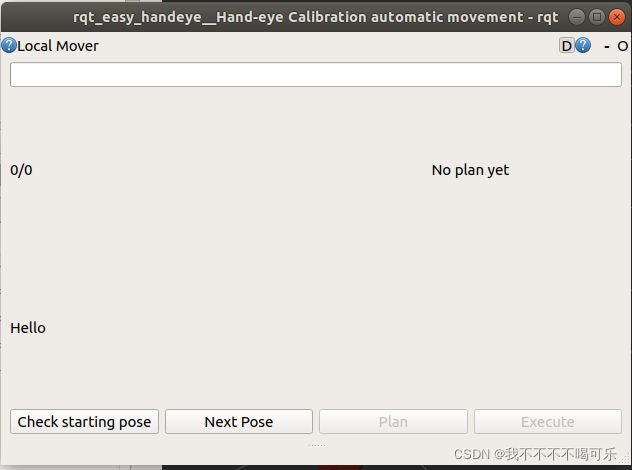

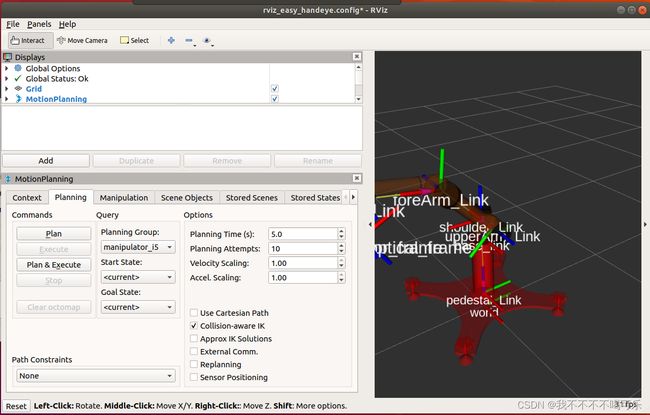

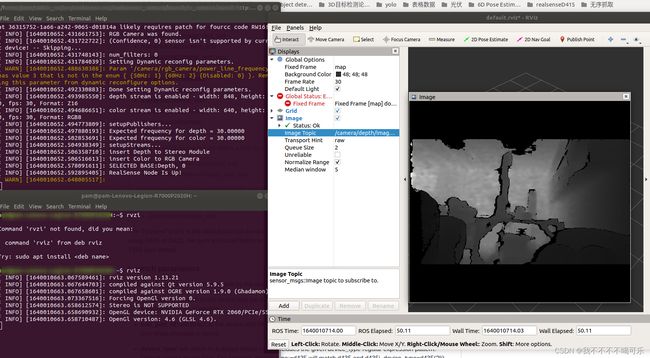

二:标定过程:运行launch文件,GUI界面没有菜单栏,这个问题还没有解决,在rviz界面订阅image话题代替即可

1.界面一:添加image,订阅aruco tracker/result,即可在rviz界面看到图像。

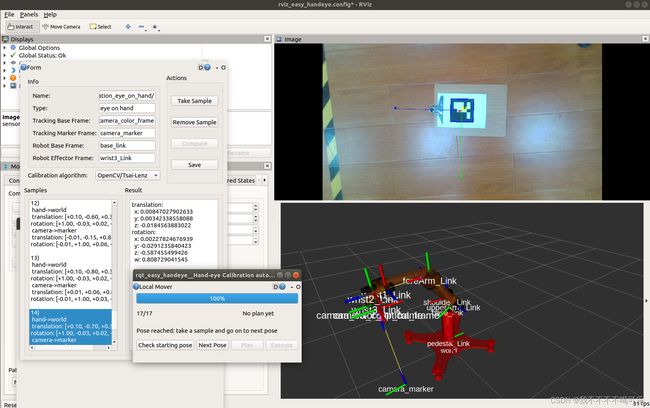

2.界面二:点击check starting pose,检查成功显示Ready to start:click to next pose,紧接着点击Next pose --> Plan --> Execute,若报:can not calibrate in current position,因为机械臂与二维码的距离太远。手动调节一下机械臂即可。

3.机械臂移动新的位置,移动完毕若二维码在视野范围,即可在界面3点击take sample,若二维码丢失,则这个点作废,回到步骤二。

4.重复步骤2和3,直到移动完17个点,点击compute, Result对话框即可出现标定好的结果。

标定结果:(采集了14个点,丢弃3个点)

根据计算出来的的translation,可用尺子实测一下,偏差不大,标定成功!!

三:发布TF

1.待更新!!!发布tf就运行一个publish.launch文件就可以,具体操作等周末我再更新一下博客,先把环境搭好!

遇到的错误及解决方法:

1.cv2.CALIB_HAND_EYE_TSAI

pip install opencv-python==4.2.0.32