Extracting a Dockerfile from a Docker Image

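Contents

1. The Problem

2. Extracting the Dockerfile from an Image

2.1 Download the extraction tool from GitHub

2.2 Extract the Dockerfile

2.3 Analyze the Dockerfile extracted from the official image

2.4 Build the image with the platform's image-creation feature (requires network access)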

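1. The Problem

When building images for new GPUs such as the A100, frameworks like PyTorch and TensorFlow may not yet support the card, so a self-built image may be unable to use the A100. When using the framework images that NVIDIA provides through NGC instead, the image already ships with Jupyter and other components configured, and that configuration differs from ours, so Jupyter cannot be used once the image is imported into the AI platform. Reconfiguring it afterward does not take effect either, because the configuration mechanisms differ. To save time and effort, this article offers one reference approach for this kind of problem.

Take the official NGC TensorFlow image as an example: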

docker pull nvcr.io/nvidia/tensorflow:20.09-tf2-py3

Official documentation for the image:

TensorFlow Release Notes :: NVIDIA Deep Learning Frameworks Documentation

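2. Extracting the Dockerfile from an Image

A Dockerfile can be recovered from an image that was itself built from a Dockerfile. The approach does not work for every image, only for those built that way, and the recovered Dockerfile is for reference only: it differs from the original file. Still, it shows which components the Dockerfile installed and which operations and configuration were applied to the image, which makes it possible to make targeted changes and resolve problems adapting the image to the AIStation platform.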
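The recovered file only approximates the original because tools of this kind typically rebuild it from the image's layer history rather than from the original build context. The sketch below illustrates that general idea with `docker history`; it is not the tool's actual implementation, and the function names `history_to_dockerfile` and `image_to_dockerfile` are hypothetical.

```shell
#!/bin/sh
# Turn `docker history` CreatedBy lines (newest layer first, read from stdin)
# into approximate Dockerfile instructions (oldest first, written to stdout).
#
# With the classic builder, metadata instructions are recorded as
#   /bin/sh -c #(nop)  CMD ["/bin/bash"]
# and shell commands as
#   /bin/sh -c <command>
# Layers built with BuildKit are recorded in a different form and are
# not handled by this sketch.
history_to_dockerfile() {
    # Reverse the line order, since history lists the newest layer first.
    awk '{ lines[NR] = $0 } END { for (i = NR; i >= 1; i--) print lines[i] }' |
    sed -E \
        -e 's/^\/bin\/sh -c #\(nop\) +//' \
        -e 's/^\/bin\/sh -c /RUN /'
}

# Hypothetical wrapper; it needs a running Docker daemon, so it is only
# defined here and not called.
# Usage: image_to_dockerfile nvcr.io/nvidia/tensorflow:20.09-tf2-py3
image_to_dockerfile() {
    docker history --no-trunc --format '{{.CreatedBy}}' "$1" | history_to_dockerfile
}
```

Files added with COPY or ADD are not part of the history text, so they show up only as references and cannot be recovered this way.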

2.1 Download the extraction tool from GitHub

$ cd /home/wjy

$ git clone https://github.com/lukapeschke/dockerfile-from-image.git

$ cd dockerfile-from-image

$ docker build --rm -t lukapeschke/dockerfile-from-image .

Confirm that the image was built, for example by listing it with docker images.


2.2 Extract the Dockerfile

Note: replace <IMAGE_ID> with the ID or name of the image to extract from.

docker run --rm -v '/var/run/docker.sock:/var/run/docker.sock' lukapeschke/dockerfile-from-image <IMAGE_ID>

Example:

docker run --rm -v '/var/run/docker.sock:/var/run/docker.sock' lukapeschke/dockerfile-from-image <IMAGE_ID> > /home/wjy/cuda11-dockerfile/Dockerfile

View the extracted Dockerfile:

cat /home/wjy/cuda11-dockerfile/Dockerfile

FROM nvcr.io/nvidia/tensorflow:20.09-tf2-py3

ADD file:5c125b7f411566e9daa738d8cb851098f36197810f06488c2609074296f294b2 in /

RUN /bin/sh -c [ -z "$(apt-get indextargets)" ]

RUN /bin/sh -c set -xe \

&& echo '#!/bin/sh' > /usr/sbin/policy-rc.d \

&& echo 'exit 101' >> /usr/sbin/policy-rc.d \

&& chmod +x /usr/sbin/policy-rc.d \

&& dpkg-divert --local --rename --add /sbin/initctl \

&& cp -a /usr/sbin/policy-rc.d /sbin/initctl \

&& sed -i 's/^exit.*/exit 0/' /sbin/initctl \

&& echo 'force-unsafe-io' > /etc/dpkg/dpkg.cfg.d/docker-apt-speedup \

&& echo 'DPkg::Post-Invoke { "rm -f /var/cache/apt/archives/*.deb /var/cache/apt/archives/partial/*.deb /var/cache/apt/*.bin || true"; };' > /etc/apt/apt.conf.d/docker-clean \

&& echo 'APT::Update::Post-Invoke { "rm -f /var/cache/apt/archives/*.deb /var/cache/apt/archives/partial/*.deb /var/cache/apt/*.bin || true"; };' >> /etc/apt/apt.conf.d/docker-clean \

&& echo 'Dir::Cache::pkgcache ""; Dir::Cache::srcpkgcache "";' >> /etc/apt/apt.conf.d/docker-clean \

&& echo 'Acquire::Languages "none";' > /etc/apt/apt.conf.d/docker-no-languages \

&& echo 'Acquire::GzipIndexes "true"; Acquire::CompressionTypes::Order:: "gz";' > /etc/apt/apt.conf.d/docker-gzip-indexes \

&& echo 'Apt::AutoRemove::SuggestsImportant "false";' > /etc/apt/apt.conf.d/docker-autoremove-suggests

RUN /bin/sh -c mkdir -p /run/systemd \

&& echo 'docker' > /run/systemd/container

CMD ["/bin/bash"]

RUN RUN /bin/sh -c export DEBIAN_FRONTEND=noninteractive \

&& apt-get update \

&& apt-get install -y --no-install-recommends apt-utils build-essential ca-certificates curl patch wget jq gnupg libtcmalloc-minimal4 \

&& curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/7fa2af80.pub | apt-key add - \

&& echo "deb https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 /" > /etc/apt/sources.list.d/cuda.list \

&& rm -rf /var/lib/apt/lists/* # buildkit

RUN ARG CUDA_VERSION

RUN ARG CUDA_DRIVER_VERSION

RUN ENV CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 CUDA_CACHE_DISABLE=1

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c /nvidia/build-scripts/installCUDA.sh # buildkit

RUN COPY cudaCheck /tmp/cudaCheck # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c cp /tmp/cudaCheck/$(uname -m)/sh-wrap /bin/sh-wrap # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c cp /tmp/cudaCheck/$(uname -m)/cudaCheck /usr/local/bin/ # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c rm -rf /tmp/cudaCheck # buildkit

RUN COPY cudaCheck/shinit_v2 /etc/shinit_v2 # buildkit

RUN COPY cudaCheck/startup_scripts.patch /tmp # buildkit

RUN COPY singularity /.singularity.d # buildkit

RUN RUN |2 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 /bin/sh -c patch -p0 < /tmp/startup_scripts.patch \

&& rm -f /tmp/startup_scripts.patch \

&& ln -sf /.singularity.d/libs /usr/local/lib/singularity \

&& ln -sf sh-wrap /bin/sh # buildkit

RUN ENV _CUDA_COMPAT_PATH=/usr/local/cuda/compat ENV=/etc/shinit_v2 BASH_ENV=/etc/bash.bashrc NVIDIA_REQUIRE_CUDA=cuda>=9.0

RUN LABEL com.nvidia.volumes.needed=nvidia_driver com.nvidia.cuda.version=9.0

RUN ARG NCCL_VERSION

RUN ARG CUBLAS_VERSION

RUN ARG CUFFT_VERSION

RUN ARG CURAND_VERSION

RUN ARG CUSPARSE_VERSION

RUN ARG CUSOLVER_VERSION

RUN ARG NPP_VERSION

RUN ARG NVJPEG_VERSION

RUN ARG CUDNN_VERSION

RUN ARG TRT_VERSION

RUN ARG TRTOSS_VERSION

RUN ARG NSIGHT_SYSTEMS_VERSION

RUN ARG NSIGHT_COMPUTE_VERSION

RUN ENV NCCL_VERSION=2.7.8 CUBLAS_VERSION=11.2.0.252 CUFFT_VERSION=10.2.1.245 CURAND_VERSION=10.2.1.245 CUSPARSE_VERSION=11.1.1.245 CUSOLVER_VERSION=10.6.0.245 NPP_VERSION=11.1.0.245 NVJPEG_VERSION=11.1.1.245 CUDNN_VERSION=8.0.4.12 TRT_VERSION=7.1.3.4 TRTOSS_VERSION=20.09 NSIGHT_SYSTEMS_VERSION=2020.3.2.6 NSIGHT_COMPUTE_VERSION=2020.1.2.4

RUN RUN |15 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 NCCL_VERSION=2.7.8 CUBLAS_VERSION=11.2.0.252 CUFFT_VERSION=10.2.1.245 CURAND_VERSION=10.2.1.245 CUSPARSE_VERSION=11.1.1.245 CUSOLVER_VERSION=10.6.0.245 NPP_VERSION=11.1.0.245 NVJPEG_VERSION=11.1.1.245 CUDNN_VERSION=8.0.4.12 TRT_VERSION=7.1.3.4 TRTOSS_VERSION=20.09 NSIGHT_SYSTEMS_VERSION=2020.3.2.6 NSIGHT_COMPUTE_VERSION=2020.1.2.4 /bin/sh -c /nvidia/build-scripts/installNCCL.sh \

&& /nvidia/build-scripts/installLIBS.sh \

&& /nvidia/build-scripts/installCUDNN.sh \

&& /nvidia/build-scripts/installTRT.sh \

&& /nvidia/build-scripts/installNSYS.sh \

&& /nvidia/build-scripts/installNCU.sh # buildkit

RUN LABEL com.nvidia.nccl.version=2.7.8 com.nvidia.cublas.version=11.2.0.252 com.nvidia.cufft.version=10.2.1.245 com.nvidia.curand.version=10.2.1.245 com.nvidia.cusparse.version=11.1.1.245 com.nvidia.cusolver.version=10.6.0.245 com.nvidia.npp.version=11.1.0.245 com.nvidia.nvjpeg.version=11.1.1.245 com.nvidia.cudnn.version=8.0.4.12 com.nvidia.tensorrt.version=7.1.3.4 com.nvidia.tensorrtoss.version=20.09 com.nvidia.nsightsystems.version=2020.3.2.6 com.nvidia.nsightcompute.version=2020.1.2.4

RUN ARG DALI_VERSION

RUN ARG DALI_BUILD

RUN ARG DLPROF_VERSION

RUN ENV DALI_VERSION=0.25.1 DALI_BUILD=1612461 DLPROF_VERSION=20.09

RUN ADD docs.tgz / # buildkit

RUN RUN |18 CUDA_VERSION=11.0.221 CUDA_DRIVER_VERSION=450.51.06 NCCL_VERSION=2.7.8 CUBLAS_VERSION=11.2.0.252 CUFFT_VERSION=10.2.1.245 CURAND_VERSION=10.2.1.245 CUSPARSE_VERSION=11.1.1.245 CUSOLVER_VERSION=10.6.0.245 NPP_VERSION=11.1.0.245 NVJPEG_VERSION=11.1.1.245 CUDNN_VERSION=8.0.4.12 TRT_VERSION=7.1.3.4 TRTOSS_VERSION=20.09 NSIGHT_SYSTEMS_VERSION=2020.3.2.6 NSIGHT_COMPUTE_VERSION=2020.1.2.4 DALI_VERSION=0.25.1 DALI_BUILD=1612461 DLPROF_VERSION=20.09 /bin/sh -c echo "/usr/local/nvidia/lib" >> /etc/ld.so.conf.d/nvidia.conf \

&& echo "/usr/local/nvidia/lib64" >> /etc/ld.so.conf.d/nvidia.conf # buildkit

RUN ENV PATH=/usr/local/nvidia/bin:/usr/local/cuda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin LD_LIBRARY_PATH=/usr/local/cuda/compat/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64 NVIDIA_VISIBLE_DEVICES=all NVIDIA_DRIVER_CAPABILITIES=compute,utility,video

RUN COPY deviceQuery/deviceQuery /usr/local/bin/ # buildkit

RUN COPY deviceQuery/checkSMVER.sh /usr/local/bin/ # buildkit

RUN RUN /bin/sh -c export DEBIAN_FRONTEND=noninteractive \

&& apt-get update \

&& apt-get install -y --no-install-recommends git libglib2.0-0 less libnl-route-3-200 libnuma-dev libnuma1 libpmi2-0-dev nano numactl openssh-client vim \

&& rm -rf /var/lib/apt/lists/* # buildkit

RUN COPY mellanox /opt/mellanox # buildkit

RUN ARG MOFED_VERSION=4.6-1.0.1

RUN ENV MOFED_VERSION=4.6-1.0.1 IBV_DRIVERS=/usr/lib/libibverbs/libmlx5

RUN RUN |1 MOFED_VERSION=4.6-1.0.1 /bin/sh -c dpkg -i /opt/mellanox/DEBS/${MOFED_VERSION}/*_$(dpkg --print-architecture).deb \

&& ln -sf /opt/mellanox/change_mofed_version.sh /usr/local/bin/ \

&& cd /usr/bin \

&& chmod a+w ibv_* \

&& cd /usr/lib \

&& touch libmlx5.so.1.0.0 \

&& chmod -R a+w libibverbs* libmlx5.so.1.0.0 \

&& ln -sf libmlx5.so.1.0.0 libmlx5.so.1 \

&& chmod a+w libmlx5.so.1 \

&& rm -f /etc/libibverbs.d/* # buildkit

RUN COPY openpmix-pmi1.patch /tmp # buildkit

RUN ARG OPENUCX_VERSION=1.6.1

RUN ARG OPENMPI_VERSION=3.1.6

RUN ENV OPENUCX_VERSION=1.6.1 OPENMPI_VERSION=3.1.6

RUN RUN |3 MOFED_VERSION=4.6-1.0.1 OPENUCX_VERSION=1.6.1 OPENMPI_VERSION=3.1.6 /bin/sh -c wget -q -O - https://github.com/openucx/ucx/releases/download/v${OPENUCX_VERSION}/ucx-${OPENUCX_VERSION}.tar.gz | tar -xzf - \

&& cd ucx-${OPENUCX_VERSION} \

&& ./configure --prefix=/usr/local/ucx --enable-mt \

&& make -j"$(nproc)" install \

&& cd .. \

&& rm -rf ucx-${OPENUCX_VERSION} \

&& echo "/usr/local/ucx/lib" >> /etc/ld.so.conf.d/openucx.conf \

&& wget -q -O - https://www.open-mpi.org/software/ompi/v$(echo "${OPENMPI_VERSION}" | cut -d . -f 1-2)/downloads/openmpi-${OPENMPI_VERSION}.tar.gz | tar -xzf - \

&& cd openmpi-${OPENMPI_VERSION} \

&& ln -sf /usr/include/slurm-wlm /usr/include/slurm \

&& ( cd opal/mca/pmix/pmix2x/pmix \

&& patch -p1 < /tmp/openpmix-pmi1.patch \

&& rm -f /tmp/openpmix-pmi1.patch ) \

&& ./configure --enable-orterun-prefix-by-default --with-verbs --with-pmi --with-pmix=internal --with-ucx=/usr/local/ucx --with-ucx-libdir=/usr/local/ucx/lib --enable-mca-no-build=btl-uct --prefix=/usr/local/mpi --disable-getpwuid \

&& make -j"$(nproc)" install \

&& cd .. \

&& rm -rf openmpi-${OPENMPI_VERSION} \

&& echo "/usr/local/mpi/lib" >> /etc/ld.so.conf.d/openmpi.conf \

&& rm -f /usr/lib/libibverbs.so /usr/lib/libibverbs.a \

&& ldconfig # buildkit

RUN ENV PATH=/usr/local/mpi/bin:/usr/local/nvidia/bin:/usr/local/cuda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/ucx/bin

RUN COPY singularity /.singularity.d # buildkit

RUN COPY cuda-*.patch /tmp # buildkit

RUN RUN |3 MOFED_VERSION=4.6-1.0.1 OPENUCX_VERSION=1.6.1 OPENMPI_VERSION=3.1.6 /bin/sh -c export DEVEL=1 BASE=0 \

&& /nvidia/build-scripts/installNCU.sh \

&& /nvidia/build-scripts/installCUDA.sh \

&& /nvidia/build-scripts/installNCCL.sh \

&& /nvidia/build-scripts/installLIBS.sh \

&& /nvidia/build-scripts/installCUDNN.sh \

&& /nvidia/build-scripts/installTRT.sh \

&& /nvidia/build-scripts/installNSYS.sh \

&& if [ -f "/tmp/cuda-${_CUDA_VERSION_MAJMIN}.patch" ]; then patch -p0 < /tmp/cuda-${_CUDA_VERSION_MAJMIN}.patch; fi \

&& if [ ! -f /usr/include/x86_64-linux-gnu/cudnn_backend_v8.h ]; then cp /nvidia/build-scripts/cudnn_backend.h /usr/include/x86_64-linux-gnu/cudnn_backend_v8.h; ln -s /usr/include/x86_64-linux-gnu/cudnn_backend_v8.h /usr/include/x86_64-linux-gnu/cudnn_backend.h; fi \

&& rm -f /tmp/cuda-*.patch # buildkit

RUN ENV LIBRARY_PATH=/usr/local/cuda/lib64/stubs:

RUN ENV LD_LIBRARY_PATH=/usr/local/cuda/extras/CUPTI/lib64:/usr/local/cuda/compat/lib:/usr/local/nvidia/lib:/usr/local/nvidia/lib64

RUN ARG TENSORFLOW_VERSION

RUN ENV TENSORFLOW_VERSION=2.3.0

RUN LABEL com.nvidia.tensorflow.version=2.3.0

RUN ARG NVIDIA_TENSORFLOW_VERSION

RUN ENV NVIDIA_TENSORFLOW_VERSION=20.09-tf2

RUN ARG PYVER=3.6

RUN ENV PYVER=3.6

RUN RUN |3 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 /bin/sh -c PYSFX=`[ "$PYVER" != "2.7" ] \

&& echo "$PYVER" | cut -c1-1 || echo ""` \

&& DISTUTILS=`[ "$PYVER" == "3.6" ] \

&& echo "python3-distutils" || echo""` \

&& apt-get update \

&& apt-get install -y --no-install-recommends cmake pkg-config python$PYVER python$PYVER-dev python$PYSFX-pip $DISTUTILS rsync swig unzip zip zlib1g-dev libgoogle-glog-dev libjsoncpp-dev \

&& rm -rf /var/lib/apt/lists/* # buildkit

RUN ENV PYTHONIOENCODING=utf-8 LC_ALL=C.UTF-8

RUN RUN |3 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 /bin/sh -c rm -f /usr/bin/python \

&& rm -f /usr/bin/python`echo $PYVER | cut -c1-1` \

&& ln -s /usr/bin/python$PYVER /usr/bin/python \

&& ln -s /usr/bin/python$PYVER /usr/bin/python`echo $PYVER | cut -c1-1` # buildkit

RUN RUN |3 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 /bin/sh -c mkdir -p /usr/lib/x86_64-linux-gnu/include/ \

&& ln -s /usr/include/cudnn.h /usr/lib/x86_64-linux-gnu/include/cudnn.h # buildkit

RUN RUN |3 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 /bin/sh -c curl -O https://bootstrap.pypa.io/get-pip.py \

&& python get-pip.py \

&& rm get-pip.py # buildkit

RUN RUN |3 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 /bin/sh -c pip install --no-cache-dir --upgrade setuptools==49.6.0 numpy==$(test ${PYVER%.*} -eq 2 \

&& echo 1.16.5 || echo 1.17.3) pexpect==4.7.0 psutil==5.7.0 nltk==3.4.5 future==0.18.2 jupyterlab==$(test ${PYVER%.*} -eq 2 \

&& echo 0.33.12 || echo 1.2.14) notebook==$(test ${PYVER%.*} -eq 2 \

&& echo 5.7.8 || echo 6.0.3) mock==3.0.5 portpicker==1.3.1 h5py==2.10.0 scipy==$(test ${PYVER%.*} -eq 2 \

&& echo 1.2.2 || echo 1.4.1) keras_preprocessing==1.1.1 keras_applications==1.0.8 # buildkit

RUN ENV BAZELRC=/root/.bazelrc

RUN RUN |3 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 /bin/sh -c echo "startup --batch" >> $BAZELRC \

&& echo "build --spawn_strategy=standalone --genrule_strategy=standalone" >> $BAZELRC \

&& echo "startup --max_idle_secs=0" >> $BAZELRC # buildkit

RUN ARG BAZEL_VERSION=2.0.0

RUN RUN |4 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 /bin/sh -c mkdir /bazel \

&& cd /bazel \

&& curl -fSsL -O https://github.com/bazelbuild/bazel/releases/download/$BAZEL_VERSION/bazel-$BAZEL_VERSION-installer-linux-x86_64.sh \

&& curl -fSsL -o /bazel/LICENSE.txt https://raw.githubusercontent.com/bazelbuild/bazel/master/LICENSE \

&& bash ./bazel-$BAZEL_VERSION-installer-linux-x86_64.sh \

&& rm -rf /bazel # buildkit

RUN WORKDIR /opt/tensorflow

RUN COPY mkl-dnn.patch . # buildkit

RUN RUN |4 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 /bin/sh -c MKL_DNN_COMMIT=733fc908874c71a5285043931a1cf80aa923165c \

&& curl -fSsL -O https://mirror.bazel.build/github.com/intel/mkl-dnn/archive/${MKL_DNN_COMMIT}.tar.gz \

&& tar -xf ${MKL_DNN_COMMIT}.tar.gz \

&& rm -f ${MKL_DNN_COMMIT}.tar.gz \

&& cd mkl-dnn-${MKL_DNN_COMMIT} \

&& patch -p0 < /opt/tensorflow/mkl-dnn.patch \

&& cd .. \

&& tar --mtime='1970-01-01' -cf mkl-dnn-${MKL_DNN_COMMIT}-patched.tar mkl-dnn-${MKL_DNN_COMMIT}/ \

&& rm -rf mkl-dnn-${MKL_DNN_COMMIT}/ \

&& gzip -n mkl-dnn-${MKL_DNN_COMMIT}-patched.tar # buildkit

RUN RUN |4 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 /bin/sh -c mkdir -p /opt/dlprof \

&& cp /nvidia/opt/dlprof/bin/dlprof /opt/dlprof/ \

&& cp /nvidia/workspace/LICENSE /opt/dlprof/ \

&& cp /nvidia/workspace/README.md /opt/dlprof/ \

&& ln -sf /opt/dlprof/dlprof /usr/local/bin/dlprof # buildkit

RUN COPY tensorboard.patch /tmp/ # buildkit

RUN RUN |4 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 /bin/sh -c pip install --no-cache-dir 'tensorboard>=2.2,<=2.3' \

&& TBFILE=$(find $(find /usr /opt -type d -name tensorboard) -type f -name core_plugin.py) \

&& patch $TBFILE < /tmp/tensorboard.patch \

&& rm -f /tmp/tensorboard.patch # buildkit

RUN ENV NVM_DIR=/usr/local/nvm

RUN RUN |4 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 /bin/sh -c pip install --no-cache-dir git+https://github.com/lspvic/jupyter_tensorboard.git@refs/pull/63/merge \

&& mkdir -p $NVM_DIR \

&& curl -Lo- https://raw.githubusercontent.com/nvm-sh/nvm/v0.35.3/install.sh | bash \

&& source "$NVM_DIR/nvm.sh" \

&& nvm install node \

&& jupyter labextension install jupyterlab_tensorboard \

&& jupyter serverextension enable jupyterlab \

&& pip install --no-cache-dir jupytext \

&& jupyter labextension install jupyterlab-jupytext \

&& ( cd $NVM_DIR/versions/node/$(node -v)/lib/node_modules/npm \

&& ( cd node_modules/libnpx \

&& npm install update-notifier@^4.1.0 --production \

&& npm install yargs@^15.3.1 --production --force \

&& cd ../.. \

&& npm install update-notifier@^4.1.0 --production ) \

&& npm prune --production ) \

&& ( cd /usr/local/share/jupyter/lab/staging \

&& npm prune --production ) \

&& npm cache clean --force \

&& rm -rf /usr/local/share/.cache \

&& echo "source $NVM_DIR/nvm.sh" >> /etc/bash.bashrc \

&& mv /root/.jupyter/jupyter_notebook_config.json /usr/local/etc/jupyter/ # buildkit

RUN COPY jupyter_notebook_config.py /usr/local/etc/jupyter/ # buildkit

RUN ENV JUPYTER_PORT=8888

RUN ENV TENSORBOARD_PORT=6006

RUN ENV TENSORBOARD_DEBUGGER_PORT=6064

RUN EXPOSE map[8888/tcp:{}]

RUN EXPOSE map[6006/tcp:{}]

RUN EXPOSE map[6064/tcp:{}]

RUN COPY tensorflow-source ./tensorflow-source # buildkit

RUN COPY nvbuild.sh nvbuildopts nvbazelcache bazel_build.sh test_grabber.sh ./ # buildkit

RUN COPY tensorflow-logo.jpg /opt/tensorflow/tensorflow-source/tensorflow/docs_src/tensorflow-logo.jpg # buildkit

RUN ARG TFAPI=1

RUN ARG BAZEL_CACHE=

RUN RUN |6 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache /bin/sh -c /opt/tensorflow/nvbuild.sh --triton --testlist --python$PYVER --v$TFAPI $BAZEL_CACHE # buildkit

RUN ENV TF_ADJUST_HUE_FUSED=1 TF_ADJUST_SATURATION_FUSED=1 TF_ENABLE_WINOGRAD_NONFUSED=1 TF_AUTOTUNE_THRESHOLD=2 TF_USE_CUDNN_BATCHNORM_SPATIAL_PERSISTENT=1

RUN COPY horovod-source/ ./horovod-source/ # buildkit

RUN RUN |6 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache /bin/sh -c export HOROVOD_GPU_OPERATIONS=NCCL \

&& export HOROVOD_NCCL_INCLUDE=/usr/include \

&& export HOROVOD_NCCL_LIB=/usr/lib/x86_64-linux-gnu \

&& export HOROVOD_NCCL_LINK=SHARED \

&& export HOROVOD_WITHOUT_PYTORCH=1 \

&& export HOROVOD_WITHOUT_MXNET=1 \

&& export HOROVOD_WITH_TENSORFLOW=1 \

&& export HOROVOD_WITH_MPI=1 \

&& export HOROVOD_BUILD_ARCH_FLAGS="-march=sandybridge -mtune=broadwell" \

&& cd /opt/tensorflow/horovod-source \

&& ln -s /usr/local/cuda/lib64/stubs/libcuda.so ./libcuda.so.1 \

&& export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$PWD \

&& rm -rf ./dist \

&& python setup.py sdist \

&& pip install --no-cache --no-cache-dir dist/horovod-*.tar.gz \

&& python setup.py clean \

&& rm ./libcuda.so.1 # buildkit

RUN RUN |6 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache /bin/sh -c if dpkg --compare-versions ${DALI_VERSION} lt 0.23; then DALI_URL_SUFFIX="/cuda/${CUDA_VERSION%%.*}.0"; else DALI_PKG_SUFFIX="-cuda${CUDA_VERSION%%.*}0"; fi \

&& pip install --no-cache-dir --upgrade --extra-index-url https://developer.download.nvidia.com/compute/redist --extra-index-url http://sqrl/dldata/pip-simple${DALI_URL_SUFFIX:-} --trusted-host sqrl nvidia-dali${DALI_PKG_SUFFIX:-}==${DALI_VERSION} \

&& pip install --no-cache-dir --upgrade --extra-index-url https://developer.download.nvidia.com/compute/redist --extra-index-url http://sqrl/dldata/pip-simple${DALI_URL_SUFFIX:-} --trusted-host sqrl nvidia-dali-tf-plugin${DALI_PKG_SUFFIX:-}==${DALI_VERSION} # buildkit

RUN RUN |6 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache /bin/sh -c pip install --no-cache-dir --upgrade tensorflow-datasets==3.1.0 # buildkit

RUN COPY addons_cuda11.1.patch . # buildkit

RUN COPY tf-addons/ ./tf-addons # buildkit

RUN RUN |6 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache /bin/sh -c cd tf-addons \

&& patch -p1 < ../addons_cuda11.1.patch \

&& TF_NEED_CUDA=1 TF_CUDA_VERSION=$(echo "${CUDA_VERSION}" | cut -d . -f 1-2) TF_CUDNN_VERSION=$(echo "${CUDNN_VERSION}" | cut -d . -f 1) TF_CUDA_COMPUTE_CAPABILITIES="5.2,6.0,6.1,7.0,7.5,8.0" CUDA_TOOLKIT_PATH=/usr/local/cuda CUDNN_INSTALL_PATH=/usr/lib/x86_64-linux-gnu python configure.py \

&& bazel build --enable_runfiles build_pip_pkg \

&& bazel-bin/build_pip_pkg artifacts \

&& pip install artifacts/tensorflow_addons-*.whl \

&& bazel clean --expunge \

&& rm -rf artifacts # buildkit

RUN ARG NVTX_PLUGINS_VERSION=0.1.8

RUN RUN |7 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache NVTX_PLUGINS_VERSION=0.1.8 /bin/sh -c pip install --no-cache-dir --upgrade nvtx-plugins==${NVTX_PLUGINS_VERSION} # buildkit

RUN COPY nvidia_entrypoint.sh /usr/local/bin/ # buildkit

RUN ENTRYPOINT ["/usr/local/bin/nvidia_entrypoint.sh"]

RUN WORKDIR /workspace

RUN RUN |7 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache NVTX_PLUGINS_VERSION=0.1.8 /bin/sh -c chmod -R a+w /workspace # buildkit

RUN COPY NVREADME.md README.md # buildkit

RUN COPY docker-examples docker-examples # buildkit

RUN COPY nvidia-examples /workspace/nvidia-examples # buildkit

RUN RUN |7 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache NVTX_PLUGINS_VERSION=0.1.8 /bin/sh -c BASE=0 DEVEL=0 SAMPLES=0 PYTHON=1 /nvidia/build-scripts/installTRT.sh \

&& mkdir -p /usr/src/tensorrt/python \

&& cd /usr/src/tensorrt/python \

&& BASE=0 DEVEL=0 SAMPLES=0 PYTHON=0 TENSORFLOW=1 FETCHONLY=1 /nvidia/build-scripts/installTRT.sh \

&& mv /usr/src/tensorrt /opt \

&& ln -s /opt/tensorrt /usr/src/tensorrt # buildkit

RUN COPY trt_python_setup.sh /opt/tensorrt/python/python_setup.sh # buildkit

RUN RUN |7 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache NVTX_PLUGINS_VERSION=0.1.8 /bin/sh -c cd /opt/tensorrt/python \

&& ./python_setup.sh # buildkit

RUN ENV PATH=/usr/local/mpi/bin:/usr/local/nvidia/bin:/usr/local/cuda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/ucx/bin:/opt/tensorrt/bin

RUN RUN |7 TENSORFLOW_VERSION=2.3.0 NVIDIA_TENSORFLOW_VERSION=20.09-tf2 PYVER=3.6 BAZEL_VERSION=3.1.0 TFAPI=2 BAZEL_CACHE=--bazel-cache NVTX_PLUGINS_VERSION=0.1.8 /bin/sh -c ln -sf ${_CUDA_COMPAT_PATH}/lib.real ${_CUDA_COMPAT_PATH}/lib \

&& echo ${_CUDA_COMPAT_PATH}/lib > /etc/ld.so.conf.d/00-cuda-compat.conf \

&& ldconfig \

&& rm -f ${_CUDA_COMPAT_PATH}/lib # buildkit

RUN ARG NVIDIA_BUILD_ID

RUN ENV NVIDIA_BUILD_ID=16003717

RUN LABEL com.nvidia.build.id=16003717

RUN ARG NVIDIA_BUILD_REF

RUN LABEL com.nvidia.build.ref=bbb7f8edd544619c81635e75dd330bff437db82e

2.3 Analyze the Dockerfile extracted from the official image

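The official image's Jupyter configuration differs from what the AIStation platform expects: the Jupyter port cannot be assigned randomly, and environment variables needed for external access, such as JUPYTER_HOST, are not set. As a result, the Jupyter page is unreachable after the platform's development environment starts the container.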
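One way to locate the relevant settings is to filter the extracted file for Jupyter-related instructions and exposed ports. This is a minimal sketch; the function name `find_jupyter_lines` is hypothetical, and the path in the usage comment is the one used in section 2.2.

```shell
#!/bin/sh
# Print, with line numbers, the lines of an extracted Dockerfile that mention
# Jupyter or declare exposed ports (case-insensitive match).
find_jupyter_lines() {
    grep -n -i -E 'jupyter|EXPOSE' "$1"
}

# Usage:
#   find_jupyter_lines /home/wjy/cuda11-dockerfile/Dockerfile
```

For the output in section 2.2, this surfaces the fixed JUPYTER_PORT=8888, the matching EXPOSE entry, and the jupyter_notebook_config.py that the official build copies into /usr/local/etc/jupyter/.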

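With the actual problems identified, we modify our own Dockerfile to address them, using the official NGC image as the base image to build the image that the AIStation platform needs.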
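When the extracted file is used as a reference while making these changes, the history-derived prefixes (such as `RUN RUN |3 ... /bin/sh -c` or `RUN ENV`) make it hard to read. The filter below is a rough sketch for tidying the text for reading only, not for rebuilding the image. The function name `tidy_extracted_dockerfile` is hypothetical, and the patterns assume build-argument values contain no spaces, as in the output in section 2.2.

```shell
#!/bin/sh
# Strip history-derived noise from an extracted Dockerfile (stdin -> stdout).
# The sed expressions, in order:
#   1. drop the extra leading "RUN " in front of another instruction keyword
#   2. drop the BuildKit "|N ARG=value ... /bin/sh -c " prefix of RUN lines
#   3. drop a plain "/bin/sh -c " prefix of RUN lines
#   4. rewrite "EXPOSE map[8888/tcp:{}]" as "EXPOSE 8888/tcp"
#   5. drop the trailing " # buildkit" marker
tidy_extracted_dockerfile() {
    sed -E \
        -e 's/^RUN (RUN|ENV|ARG|COPY|ADD|LABEL|WORKDIR|EXPOSE|ENTRYPOINT|CMD) /\1 /' \
        -e 's/^RUN \|[0-9]+ ([A-Za-z_][A-Za-z0-9_]*=[^ ]* )+\/bin\/sh -c /RUN /' \
        -e 's/^RUN \/bin\/sh -c /RUN /' \
        -e 's/^EXPOSE map\[([^:]+):.*$/EXPOSE \1/' \
        -e 's/ # buildkit$//'
}

# Usage:
#   tidy_extracted_dockerfile < /home/wjy/cuda11-dockerfile/Dockerfile | less
```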

Our modified Dockerfile, adapted to the AIStation platform:

FROM nvcr.io/nvidia/tensorflow:20.09-tf2-py3

# Install OpenSSH for MPI to communicate between containers

RUN apt-get -o Acquire::Check-Valid-Until=false -o Acquire::Check-Date=false update && apt-get install -y --no-install-recommends openssh-client openssh-server && \

mkdir -p /var/run/sshd

# Allow OpenSSH to talk to containers without asking for confirmation

RUN cat /etc/ssh/ssh_config | grep -v StrictHostKeyChecking > /etc/ssh/ssh_config.new && \

echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config.new && \

cat /etc/ssh/sshd_config | grep -v PermitRootLogin> /etc/ssh/sshd_config.new && \

echo "PermitRootLogin yes" >> /etc/ssh/sshd_config.new && \

mv /etc/ssh/ssh_config.new /etc/ssh/ssh_config && \

mv /etc/ssh/sshd_config.new /etc/ssh/sshd_config

##jupyter

RUN wget -O /usr/local/etc/jupyter/jupyter_notebook_config.py https://raw.githubusercontent.com/Winowang/jupyter_gpu/master/jupyter_notebook_config.py

RUN wget -O /usr/local/etc/jupyter/custom.js https://raw.githubusercontent.com/Winowang/jupyter_gpu/master/custom.js

## tini

ENV TINI_VERSION v0.18.0

ADD https://github.com/krallin/tini/releases/download/${TINI_VERSION}/tini /tini

RUN chmod +x /tini

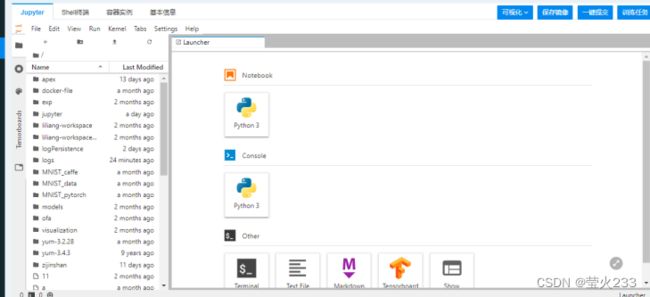

WORKDIR "/home"

2.4 Build the image with the platform's image-creation feature (requires network access)

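Submit our modified Dockerfile through the platform's image-creation feature to import the image into the platform.

Using this image, submit a task in the development environment; Jupyter then works normally on the platform.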