PyTorch Normalization (MinMaxScaler, Zero-Mean Normalization)

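Normalization: normalization means processing data (with some algorithm) so that it is restricted to a range you need. It serves two purposes: it makes subsequent data processing more convenient, and it speeds up convergence when the program runs (for example, during gradient-based training). Concretely, normalization brings the statistical distributions of the samples onto a common footing. Scaling to [0, 1] puts values on a probability-like scale, while scaling to some other interval places them as coordinates within that interval. The Chinese term, 归一化, carries the sense of making things uniform and unified.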

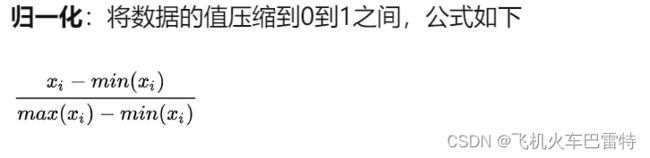

1. MinMaxScaler

MinMaxScaler rescales a set of data so that its values lie in the interval [0, 1]: each value x is mapped to (x - min) / (max - min).

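Why do this? To remove the effect that features with very different numeric ranges would otherwise have on the result. Note: a vector can be regarded as one feature.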
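Min-Max scaling maps each value x to (x - min) / (max - min). Below is a minimal sketch in PyTorch, assuming a hypothetical helper named `min_max_scale`. It generalizes the target range to [new_min, new_max] (scikit-learn's `MinMaxScaler` exposes a similar `feature_range` parameter) and, as one reasonable choice, maps a constant input to `new_min` instead of dividing by zero.

```python
import torch


def min_max_scale(x: torch.Tensor, new_min: float = 0.0, new_max: float = 1.0) -> torch.Tensor:
    """Hypothetical helper: linearly rescale all values of x into [new_min, new_max]."""
    x_min = x.min()
    x_max = x.max()
    span = x_max - x_min
    if span == 0:
        # Constant input: (x - min) / (max - min) would be 0 / 0 = NaN,
        # so map every value to the lower end of the target range instead.
        return torch.full_like(x, new_min)
    scaled = (x - x_min) / span  # values now lie in [0, 1]
    return scaled * (new_max - new_min) + new_min


x = torch.tensor([2.0, 4.0, 6.0, 10.0])
print(min_max_scale(x).tolist())             # [0.0, 0.25, 0.5, 1.0]
print(min_max_scale(x, -1.0, 1.0).tolist())  # [-1.0, -0.5, 0.0, 1.0]
```

Note that min and max are taken from the data itself, so a single outlier can squeeze all other values into a narrow band.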

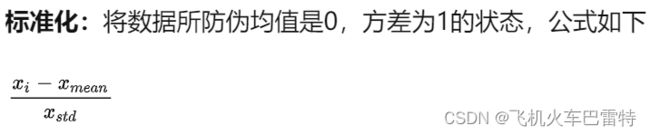

2. Zero-Mean Normalization (Z-score Standardization)

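Zero-mean normalization standardizes a set of data so that, afterwards, its mean is 0 and its variance is 1: each value x is mapped to (x - mean) / std.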

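Why do this? To put every feature on the same scale (mean 0, standard deviation 1), removing the effect that differently distributed features would otherwise have on the result. Keep in mind that this only shifts and rescales the data and does not change the shape of its distribution: a feature follows a standard normal distribution afterwards only if it was normally distributed to begin with. Note: a vector can be regarded as one feature.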
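A minimal sketch of Z-score standardization, (x - mean) / std, assuming a hypothetical helper named `z_score`. It also illustrates a detail that is easy to miss: `torch.std` divides by N - 1 by default (the unbiased estimator), whereas scikit-learn's `StandardScaler` uses the population standard deviation (dividing by N). Passing `unbiased=False` gives the latter; the two standardized results differ by the constant factor sqrt(N / (N - 1)).

```python
import torch


def z_score(x: torch.Tensor, unbiased: bool = True) -> torch.Tensor:
    """Hypothetical helper: zero-mean, unit-variance standardization of all values of x.

    unbiased=True divides by N - 1 (torch.std's default);
    unbiased=False divides by N (population standard deviation).
    """
    mean = x.mean()
    std = x.std(unbiased=unbiased)
    return (x - mean) / std


x = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0])
z1 = z_score(x)                  # standard deviation computed with N - 1
z0 = z_score(x, unbiased=False)  # standard deviation computed with N

print(z1.mean().item(), z1.std().item())                # approximately 0 and 1
print(z0.mean().item(), z0.std(unbiased=False).item())  # approximately 0 and 1
```

Which convention to use matters little for large N, but for short vectors like the ones in this post the difference is visible in the fourth decimal place or earlier.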

3. If the Data Is Just a Vector

Code:

```python
import torch

# Set print options
torch.set_printoptions(linewidth=2048, precision=6, sci_mode=False)

# Define a tensor
a = torch.tensor(
    [-1.8297, -1.9489, -1.9937, -1.9937, -2.0225, -2.0375, -2.0499,
     -2.6950, -2.6967, -2.8939, -2.9030, -2.9107, -2.9385, -2.9468,
     -2.9705, -2.9777])

# Sort in ascending order (only to make the printout easier to read)
a = torch.sort(input=a, descending=False).values
print(a, torch.mean(a), torch.std(a))

# Z-score standardization (torch.std uses the unbiased N - 1 estimator by default)
mean_a = torch.mean(a)
std_a = torch.std(a)
n1 = (a - mean_a) / std_a
print(n1, torch.mean(n1), torch.std(n1))

# Min-Max scaling to [0, 1]
min_a = torch.min(a)
max_a = torch.max(a)
n2 = (a - min_a) / (max_a - min_a)
print(n2, torch.mean(n2), torch.std(n2))
```

Output:

```
tensor([-2.977700, -2.970500, -2.946800, -2.938500, -2.910700, -2.903000, -2.893900, -2.696700, -2.695000, -2.049900, -2.037500, -2.022500, -1.993700, -1.993700, -1.948900, -1.829700]) tensor(-2.488044) tensor(0.469918)
tensor([-1.042003, -1.026682, -0.976247, -0.958584, -0.899426, -0.883040, -0.863674, -0.444027, -0.440409, 0.932383, 0.958771, 0.990691, 1.051979, 1.051979, 1.147315, 1.400976]) tensor( 0.000000) tensor(1.)
tensor([0.000000, 0.006272, 0.026916, 0.034146, 0.058362, 0.065070, 0.072997, 0.244773, 0.246254, 0.808188, 0.818990, 0.832056, 0.857143, 0.857143, 0.896167, 1.000000]) tensor(0.426530) tensor(0.409336)
```

4. If the Data Is a Tensor (2D)

Code (only zero-mean normalization is demonstrated here):

```python
import torch

# Set print options
torch.set_printoptions(linewidth=2048, precision=6, sci_mode=False)

# Define a 2D tensor (8 rows x 9 columns); each row is normalized independently
a = torch.tensor([[1.00, 1.05, 1.21, 1.24, 1.38, 1.39, 1.78, 1.81, 1.99],
                  [2.00, 2.04, 2.21, 2.22, 2.36, 2.37, 2.77, 2.83, 2.94],
                  [3.00, 3.06, 3.20, 3.26, 3.32, 3.33, 3.76, 3.84, 3.98],
                  [4.00, 4.08, 4.21, 4.28, 4.37, 4.38, 4.79, 4.88, 4.98],
                  [5.00, 5.01, 5.22, 5.22, 5.35, 5.35, 5.72, 5.84, 5.96],
                  [6.00, 6.02, 6.24, 6.25, 6.33, 6.33, 6.71, 6.86, 6.99],
                  [7.00, 7.01, 7.26, 7.24, 7.32, 7.33, 7.71, 7.89, 7.95],
                  [8.00, 8.01, 8.25, 8.26, 8.31, 8.34, 8.70, 8.89, 8.96]])

# Define a contrastive tensor: identical to the first row of a
b = torch.tensor([1.00, 1.05, 1.21, 1.24, 1.38, 1.39, 1.78, 1.81, 1.99])

print(a)
print(b)

# Calculate mean and standard deviation (per row for a, over the whole vector for b)
mean_a = torch.mean(a, dim=1)
mean_b = torch.mean(b, dim=0)
print(mean_a)
print(mean_b)

std_a = torch.std(a, dim=1)
std_b = torch.std(b, dim=0)
print(std_a)
print(std_b)

# Do Z-score standardization on the 2D tensor.
# [:, None] reshapes the per-row statistics from (8,) to (8, 1) so they broadcast
# across each row. This out-of-place form gives the same values as
# a.sub_(...).div_(...) but does not overwrite a.
n_a = (a - mean_a[:, None]) / std_a[:, None]
n_b = (b - mean_b) / std_b

print(n_a)
print(n_b)
```

Output:

```
tensor([[1.000000, 1.050000, 1.210000, 1.240000, 1.380000, 1.390000, 1.780000, 1.810000, 1.990000],
        [2.000000, 2.040000, 2.210000, 2.220000, 2.360000, 2.370000, 2.770000, 2.830000, 2.940000],
        [3.000000, 3.060000, 3.200000, 3.260000, 3.320000, 3.330000, 3.760000, 3.840000, 3.980000],
        [4.000000, 4.080000, 4.210000, 4.280000, 4.370000, 4.380000, 4.790000, 4.880000, 4.980000],
        [5.000000, 5.010000, 5.220000, 5.220000, 5.350000, 5.350000, 5.720000, 5.840000, 5.960000],
        [6.000000, 6.020000, 6.240000, 6.250000, 6.330000, 6.330000, 6.710000, 6.860000, 6.990000],
        [7.000000, 7.010000, 7.260000, 7.240000, 7.320000, 7.330000, 7.710000, 7.890000, 7.950000],
        [8.000000, 8.010000, 8.250000, 8.260000, 8.310000, 8.340000, 8.700000, 8.890000, 8.960000]])
tensor([1.000000, 1.050000, 1.210000, 1.240000, 1.380000, 1.390000, 1.780000, 1.810000, 1.990000])
tensor([1.427778, 2.415555, 3.416667, 4.441112, 5.407778, 6.414445, 7.412222, 8.413334])
tensor(1.427778)
tensor([0.353263, 0.348537, 0.354189, 0.356702, 0.351951, 0.356410, 0.354605, 0.354965])
tensor(0.353263)
tensor([[-1.210934, -1.069397, -0.616476, -0.531553, -0.135247, -0.106940, 0.997055, 1.081978, 1.591514],
        [-1.192286, -1.077521, -0.589767, -0.561076, -0.159397, -0.130705, 1.016951, 1.189099, 1.504704],
        [-1.176396, -1.006995, -0.611726, -0.442325, -0.272924, -0.244691, 0.969350, 1.195217, 1.590487],
        [-1.236640, -1.012363, -0.647913, -0.451670, -0.199359, -0.171324, 0.978096, 1.230408, 1.510754],
        [-1.158621, -1.130208, -0.533535, -0.533535, -0.164165, -0.164165, 0.887118, 1.228076, 1.569032],
        [-1.162833, -1.106718, -0.489451, -0.461393, -0.236932, -0.236932, 0.829257, 1.250121, 1.614869],
        [-1.162483, -1.134282, -0.429272, -0.485674, -0.260069, -0.231870, 0.839747, 1.347354, 1.516557],
        [-1.164436, -1.136264, -0.460141, -0.431969, -0.291109, -0.206594, 0.807590, 1.342856, 1.540058]])
tensor([-1.210934, -1.069397, -0.616476, -0.531553, -0.135247, -0.106940, 0.997055, 1.081978, 1.591514])
```

Explanation of the output: `b` serves as a check. It is the vector case of zero-mean normalization and is identical to the first row of `a`. If the first row of the normalized `a` equals the normalized `b`, the row-wise 2D zero-mean normalization is correct. The first row of the second-to-last tensor is exactly the same as the last tensor, so the code above works as intended.
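Rather than comparing the printed tensors by eye, the same check can be done numerically with `torch.allclose`. The sketch below uses only the first three rows of the data above (a shortening chosen for brevity) and also adds the row-wise Min-Max scaling that the 2D example skipped, using `torch.amin`/`torch.amax` with `keepdim=True` so the per-row statistics keep shape (rows, 1) and broadcast over each row.

```python
import torch

# First three rows of the 2D data above; b is again identical to the first row
a = torch.tensor([[1.00, 1.05, 1.21, 1.24, 1.38, 1.39, 1.78, 1.81, 1.99],
                  [2.00, 2.04, 2.21, 2.22, 2.36, 2.37, 2.77, 2.83, 2.94],
                  [3.00, 3.06, 3.20, 3.26, 3.32, 3.33, 3.76, 3.84, 3.98]])
b = a[0].clone()

# Row-wise Z-score; keepdim=True replaces the [:, None] indexing
n_a = (a - a.mean(dim=1, keepdim=True)) / a.std(dim=1, keepdim=True)
n_b = (b - b.mean()) / b.std()

# Row-wise Min-Max scaling
row_min = torch.amin(a, dim=1, keepdim=True)
row_max = torch.amax(a, dim=1, keepdim=True)
m_a = (a - row_min) / (row_max - row_min)
m_b = (b - b.min()) / (b.max() - b.min())

# Numerical checks: the first row must match the vector version
print(torch.allclose(n_a[0], n_b))  # True
print(torch.allclose(m_a[0], m_b))  # True
# After Min-Max scaling every row spans exactly [0, 1]
print(m_a.min(dim=1).values, m_a.max(dim=1).values)
```

`torch.allclose` tolerates tiny floating-point differences that can arise when a row is reduced inside a 2D tensor rather than as a standalone vector.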

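The same method is not limited to 2D data; it applies equally to 3D, 4D and higher-dimensional tensors. What matters is computing the statistics over the dimension(s) being normalized and giving them a shape that broadcasts back against the input, for example with `keepdim=True`, since the `[:, None]` indexing above is specific to normalizing along `dim=1` of a 2D tensor.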
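A sketch of the higher-dimensional case, assuming a hypothetical batch of images with shape (N, C, H, W) that should be normalized per channel (a common setup, but an assumption here, not something from the examples above). The statistics are reduced over dims (0, 2, 3) with `keepdim=True`, so they have shape (1, C, 1, 1) and broadcast back against the input.

```python
import torch

torch.manual_seed(0)

# Hypothetical data: 4 images, 3 channels, 8x8 pixels -> shape (N, C, H, W)
x = torch.randn(4, 3, 8, 8) * 5.0 + 2.0

# Reduce over N, H, W and keep those dims so the statistics have shape (1, 3, 1, 1)
dims = (0, 2, 3)

# Per-channel Z-score standardization
mean = x.mean(dim=dims, keepdim=True)
std = x.std(dim=dims, keepdim=True)
z = (x - mean) / std

# Per-channel Min-Max scaling works the same way with amin/amax
lo = x.amin(dim=dims, keepdim=True)
hi = x.amax(dim=dims, keepdim=True)
m = (x - lo) / (hi - lo)

print(mean.shape)                          # torch.Size([1, 3, 1, 1])
print(z.mean(dim=dims), z.std(dim=dims))   # approximately 0 and 1 per channel
print(m.amin(dim=dims), m.amax(dim=dims))  # 0 and 1 per channel
```

Changing `dims` changes what counts as "one feature": for example, reducing over (1, 2, 3) instead would normalize each image separately.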
