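Getting Started with GCN in PyTorch

Experimental environment

PyTorch 1.7.1 with CUDA 10.1 (cu101) and torch-geometric. torch-geometric (PyTorch Geometric) is a separate extension library built on top of PyTorch, not part of PyTorch itself; it makes it convenient to train and run inference with graph neural networks. Installation is omitted here. For details on torch-geometric, see the official documentation.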

Building a simple GCN

Representing graph data

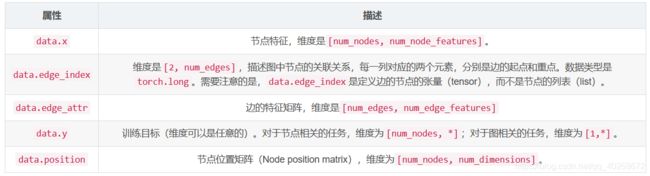

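A graph is a data model that describes entities (nodes) and the relationships between them (edges). In PyTorch Geometric, a graph is represented as an instance of torch_geometric.data.Data, which holds the graph's attributes. The ones used in this post are x (node features), edge_index (edge connectivity), y (node labels), and the boolean node masks train_mask and test_mask.

For more detail, see the referenced blog post.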

Loading the dataset

from torch_geometric.datasets import Planetoid

# Load the Cora dataset; files are stored under ./data/Cora
dataset = Planetoid(root='./data/Cora', name='Cora')
print(dataset)
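After loading, it can help to check how many nodes fall into each split before training. The helper below is a hypothetical convenience function (summarize_split is not part of torch-geometric); it only assumes the object exposes boolean train_mask and test_mask tensors, as the data used later in this post does. It is demonstrated on a tiny stand-in object so that it runs without downloading anything.

```python
from types import SimpleNamespace

import torch


def summarize_split(data):
    """Count the nodes selected by the train and test masks."""
    return {
        "train": int(data.train_mask.sum()),
        "test": int(data.test_mask.sum()),
    }


# Stand-in graph with 5 nodes (illustrative, not Cora).
toy = SimpleNamespace(
    train_mask=torch.tensor([True, True, False, False, False]),
    test_mask=torch.tensor([False, False, False, True, True]),
)
split_sizes = summarize_split(toy)
print(split_sizes)
```

With the dataset loaded above, the equivalent call would be summarize_split(dataset[0]).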

Building the network model

import torch
import torch.nn.functional as F
from torch_geometric.nn import GCNConv

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Two-layer GCN: input features -> 16 hidden units -> class scores
        self.conv1 = GCNConv(dataset.num_node_features, 16)
        self.conv2 = GCNConv(16, dataset.num_classes)

    def forward(self, data):
        x, edge_index = data.x, data.edge_index
        x = self.conv1(x, edge_index)
        x = F.relu(x)
        # Dropout is applied only while the model is in training mode
        x = F.dropout(x, training=self.training)
        x = self.conv2(x, edge_index)
        # Log-probabilities per class, to be used with F.nll_loss
        return F.log_softmax(x, dim=1)

Here, GCNConv is the graph convolution layer provided by torch_geometric.nn. It performs the standard GCN convolution operation.
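For readers who want to see what a GCN convolution computes, the layer rule from the GCN paper by Kipf and Welling is X' = D^(-1/2) (A + I) D^(-1/2) X W, where A is the adjacency matrix, I adds self-loops, D is the degree matrix of A + I, and W is the learnable weight matrix. The following is a minimal dense sketch of that rule in plain PyTorch. It is not how GCNConv is implemented internally; the function name gcn_layer_dense is hypothetical, and the sketch assumes an undirected graph without self-loops in the input and no bias term.

```python
import torch


def gcn_layer_dense(x, edge_index, weight):
    """Dense GCN propagation: D^-1/2 (A + I) D^-1/2 X W."""
    n = x.size(0)
    adj = torch.zeros(n, n)
    # adj[target, source] = 1 for every edge (symmetric for undirected graphs).
    adj[edge_index[1], edge_index[0]] = 1.0
    adj = adj + torch.eye(n)          # add self-loops
    deg = adj.sum(dim=1)              # degree including the self-loop
    d_inv_sqrt = deg.pow(-0.5)
    # Symmetric normalization: scale rows and columns by 1/sqrt(degree).
    norm_adj = d_inv_sqrt.unsqueeze(1) * adj * d_inv_sqrt.unsqueeze(0)
    return norm_adj @ x @ weight


# Two nodes joined by one undirected edge, one feature each (illustrative).
x = torch.tensor([[1.0], [3.0]])
edge_index = torch.tensor([[0, 1],
                           [1, 0]])
weight = torch.tensor([[1.0]])        # identity weight to expose the averaging
out = gcn_layer_dense(x, edge_index, weight)
print(out)  # each node ends up with the average of both features, 2.0
```

With an identity weight, the layer reduces to a degree-normalized average over each node and its neighbors, which is the smoothing effect that GCN layers rely on.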

Training the model

# Use the GPU when available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)

model = Net().to(device)
data = dataset[0].to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

model.train()
for epoch in range(20000):
    optimizer.zero_grad()
    out = model(data)
    # Compute the loss on the training nodes only
    loss = F.nll_loss(out[data.train_mask], data.y[data.train_mask])
    print("i={},loss={}".format(epoch, loss.item()))
    loss.backward()
    optimizer.step()

# Evaluate on the test nodes
model.eval()
_, pred = model(data).max(dim=1)
correct = int(pred[data.test_mask].eq(data.y[data.test_mask]).sum().item())
acc = correct / int(data.test_mask.sum())
print('Accuracy: {:.4f}'.format(acc))

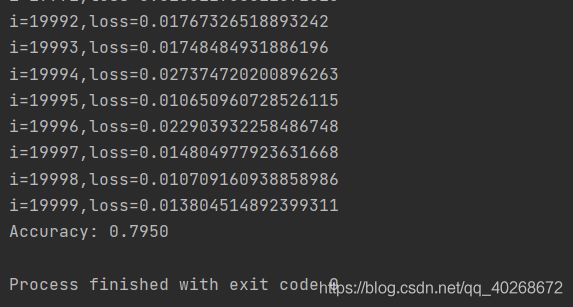
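The evaluation step above can be factored into a small reusable function, which makes it easy to report accuracy on any node mask. This is a sketch in plain PyTorch; masked_accuracy is a hypothetical helper name, and the inputs below are toy values rather than model outputs.

```python
import torch


def masked_accuracy(log_probs, labels, mask):
    """Fraction of masked nodes whose highest-scoring class matches the label."""
    pred = log_probs.argmax(dim=1)
    correct = (pred[mask] == labels[mask]).sum().item()
    return correct / int(mask.sum())


# Toy class scores for 3 nodes and 2 classes (illustrative values).
log_probs = torch.tensor([[0.9, 0.1],
                          [0.2, 0.8],
                          [0.6, 0.4]]).log()
labels = torch.tensor([0, 1, 1])
mask = torch.tensor([True, True, False])

acc_masked = masked_accuracy(log_probs, labels, mask)
print('Accuracy: {:.4f}'.format(acc_masked))
```

With the trained model, the call would be masked_accuracy(model(data), data.y, data.test_mask).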

Experimental results

Complete code

from torch_geometric.datasets import Planetoid

# Load the Cora dataset; files are stored under ./data/Cora
dataset = Planetoid(root='./data/Cora', name='Cora')
print(dataset)

import torch
import torch.nn.functional as F
from torch_geometric.nn import GCNConv

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Two-layer GCN: input features -> 16 hidden units -> class scores
        self.conv1 = GCNConv(dataset.num_node_features, 16)
        self.conv2 = GCNConv(16, dataset.num_classes)

    def forward(self, data):
        x, edge_index = data.x, data.edge_index
        x = self.conv1(x, edge_index)
        x = F.relu(x)
        # Dropout is applied only while the model is in training mode
        x = F.dropout(x, training=self.training)
        x = self.conv2(x, edge_index)
        # Log-probabilities per class, to be used with F.nll_loss
        return F.log_softmax(x, dim=1)

# Use the GPU when available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)

model = Net().to(device)
data = dataset[0].to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=5e-4)

model.train()
for epoch in range(20000):
    optimizer.zero_grad()
    out = model(data)
    # Compute the loss on the training nodes only
    loss = F.nll_loss(out[data.train_mask], data.y[data.train_mask])
    print("i={},loss={}".format(epoch, loss.item()))
    loss.backward()
    optimizer.step()

# Evaluate on the test nodes
model.eval()
_, pred = model(data).max(dim=1)
correct = int(pred[data.test_mask].eq(data.y[data.test_mask]).sum().item())
acc = correct / int(data.test_mask.sum())
print('Accuracy: {:.4f}'.format(acc))