Data Analysis - Deep Learning Pytorch Day9

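Background Knowledge

NIPS (NeurIPS), in full the Conference and Workshop on Neural Information Processing Systems, is an international conference on machine learning and computational neuroscience. It is held every December and is organized by the NIPS Foundation. NIPS is a top-tier conference in machine learning, and the China Computer Federation (CCF) ranks it as a Class A conference in artificial intelligence in its list of international academic conferences.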

# Preface


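In the previous theory article we studied the residual network (ResNet). Its core idea is to build residual structures with skip connections, which lets the network overcome the limits on depth so that much deeper networks can be built.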
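As a reminder of what a skip connection computes, here is a minimal sketch in plain Python, not the TensorFlow code used later. The names `residual_block`, `zero_branch`, and `scale_branch` are hypothetical and chosen only for illustration. The block returns F(x) + x, so when the learned branch F contributes nothing, the block simply passes its input through.

```python
def residual_block(x, transform):
    """Return transform(x) + x element-wise (identity shortcut)."""
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("identity shortcut needs matching shapes")
    return [f + xi for f, xi in zip(fx, x)]


def zero_branch(x):
    # A branch that contributes nothing: F(x) = 0 for every element.
    return [0.0 for _ in x]


def scale_branch(x):
    # A toy stand-in for a learned branch: F(x) = 0.5 * x.
    return [0.5 * xi for xi in x]


x = [1.0, -2.0, 3.0]
identity_out = residual_block(x, zero_branch)
scaled_out = residual_block(x, scale_branch)
print(identity_out)  # [1.0, -2.0, 3.0]
print(scaled_out)    # [1.5, -3.0, 4.5]
```

Because the shortcut carries the input forward unchanged, stacking many such blocks does not force every layer to relearn the identity mapping, which is the intuition behind training deeper networks.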

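ResNet in Practice with TensorFlow

In this hands-on installment we implement ResNet-18, one of the most commonly used ResNet variants, in TensorFlow. The code is quite concise, so we hope everyone can follow along the whole way.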

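1. Data preparation

This time we use the RAFDB facial expression dataset, which has seven classes: neutral, happy, sad, surprised, disgusted, angry, and fearful. As with the previous datasets, it is split into a training set and a test set, and each set contains seven folders, one per expression.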
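A folder-per-class layout like this can be turned into image paths and integer labels with a few lines of standard-library Python. The sketch below is an assumption about how one might do it, not the loader from the author's repository. The function name `list_labeled_images`, the folder names, and the file names are all hypothetical, and the demo runs on a throwaway temporary directory rather than the real dataset.

```python
import os
import tempfile

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def list_labeled_images(split_dir):
    """Return (paths, labels, class_names) for a folder-per-class directory."""
    class_names = sorted(
        d for d in os.listdir(split_dir)
        if os.path.isdir(os.path.join(split_dir, d))
    )
    paths, labels = [], []
    # The label of an image is the index of its folder in sorted order.
    for label, class_name in enumerate(class_names):
        class_dir = os.path.join(split_dir, class_name)
        for file_name in sorted(os.listdir(class_dir)):
            if file_name.lower().endswith(IMAGE_EXTENSIONS):
                paths.append(os.path.join(class_dir, file_name))
                labels.append(label)
    return paths, labels, class_names


# Demo on a temporary directory with hypothetical folder names.
demo_classes = ["angry", "disgust", "fear", "happy", "neutral", "sad", "surprise"]
with tempfile.TemporaryDirectory() as root:
    for name in demo_classes:
        os.makedirs(os.path.join(root, "train", name))
        open(os.path.join(root, "train", name, "sample_0.jpg"), "w").close()
    demo_paths, demo_labels, demo_names = list_labeled_images(
        os.path.join(root, "train")
    )

print(len(demo_paths), demo_labels, demo_names)
```

Sorting the folder names keeps the label assignment stable between the training and test splits, provided both splits use the same folder names.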

Some sample images:

2. Network structure

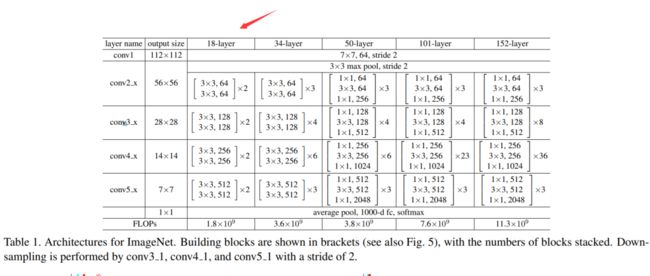

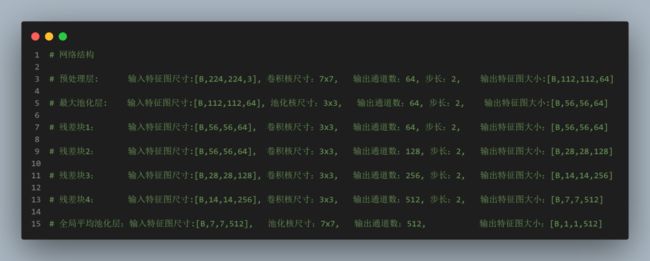

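The figure above lists the common versions of ResNet. This installment uses the 18-layer ResNet-18 as the example; its network structure is as follows: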
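The numbers in the names of the standard ResNet variants count the weighted layers: one stem convolution, the convolutions inside the residual blocks of the four stages, and one final fully connected layer. The sketch below checks this arithmetic using the per-stage block counts of the standard architectures. The dictionary and function names are hypothetical, and 1x1 projection convolutions on shortcuts are not counted.

```python
# Per variant: (residual blocks in each of the 4 stages, convolutions per block).
# Basic blocks have 2 convolutions; bottleneck blocks have 3.
RESNET_CONFIGS = {
    "ResNet-18": ([2, 2, 2, 2], 2),
    "ResNet-34": ([3, 4, 6, 3], 2),
    "ResNet-50": ([3, 4, 6, 3], 3),
    "ResNet-101": ([3, 4, 23, 3], 3),
    "ResNet-152": ([3, 8, 36, 3], 3),
}


def count_weighted_layers(blocks_per_stage, convs_per_block):
    """Stem convolution + block convolutions + final fully connected layer."""
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


layer_counts = {
    name: count_weighted_layers(blocks, convs)
    for name, (blocks, convs) in RESNET_CONFIGS.items()
}
for name, count in layer_counts.items():
    print(name, count)
```

For ResNet-18 this gives 1 + (2 + 2 + 2 + 2) * 2 + 1 = 18.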

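```python
# Build a residual block.
# Conv_layer is the author's convolution helper from the source repository;
# it is not defined in this section.
def ResBlock(name, num_blocks, input, inchannel, outchannel, stride):
    conv_input = input
    conv_inchannel = inchannel
    conv_stride = stride
    # Main branch: stack num_blocks 3x3 convolutions.
    # Only the first convolution applies the stride.
    for i in range(num_blocks):
        out = Conv_layer(names='{}{}'.format(name, i), input=conv_input,
                         w_shape=[3, 3, conv_inchannel, outchannel],
                         b_shape=[outchannel],
                         strid=[conv_stride, conv_stride])
        conv_input = out
        conv_inchannel = outchannel
        conv_stride = 1

    # Shortcut (residual) branch
    if stride > 1 or inchannel != outchannel:
        # Shapes differ, so project the input with a strided 1x1 convolution.
        shortcut = Conv_layer(names='{}_{}'.format(name, i), input=input,
                              w_shape=[1, 1, inchannel, outchannel],
                              b_shape=[outchannel],
                              strid=[stride, stride])
    else:
        # Shapes already match, so use the identity shortcut.
        shortcut = input
    out = out + shortcut
    return out
```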

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.variable_scope, tf.truncated_normal)


# Build the residual network.
# Conv_layer and Max_pool_lrn are the author's helpers from the source
# repository; they are not defined in this section.
# drop_rate is accepted but not used in this listing.
def inference(images, batch_size, n_classes, drop_rate):
    print("******** images {} ".format(images.shape))
    # First layer: preprocessing convolution
    pre_conv1 = Conv_layer(names='pre_conv', input=images,
                           w_shape=[7, 7, 3, 64], b_shape=[64], strid=[2, 2])
    print("******** pre_conv1 {} ".format(pre_conv1.shape))
    # Pooling layer
    pool_1 = Max_pool_lrn(names='pooling1', input=pre_conv1,
                          ksize=[1, 3, 3, 1], is_lrn=False)
    # print("******** pool_1 {} ".format(pool_1.shape))
    # First convolution block (layer1)
    layer1 = ResBlock('Resblock1', 2, pool_1, 64, 64, 1)
    print("******** layer1 {} ".format(layer1.shape))
    # Second convolution block (layer2)
    layer2 = ResBlock('Resblock2', 2, layer1, 64, 128, 2)
    print("******** layer2 {} ".format(layer2.shape))
    # Third convolution block (layer3)
    layer3 = ResBlock('Resblock3', 2, layer2, 128, 256, 2)
    print("******** layer3 {} ".format(layer3.shape))
    # Fourth convolution block (layer4)
    layer4 = ResBlock('Resblock4', 2, layer3, 256, 512, 2)
    print("******** layer4 {} ".format(layer4.shape))
    # Global average pooling
    global_avg = tf.nn.avg_pool(layer4, ksize=[1, 7, 7, 1],
                                strides=[1, 7, 7, 1], padding='SAME')
    print("******** global_avg {} ".format(global_avg.shape))
    # Flatten to [batch_size, dim] for the final linear layer.
    reshape = tf.reshape(global_avg, shape=[batch_size, -1])
    dim = reshape.get_shape()[1].value
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.Variable(
            tf.truncated_normal(shape=[dim, n_classes], stddev=0.005, dtype=tf.float32),
            name='softmax_linear', dtype=tf.float32)
        biases = tf.Variable(
            tf.constant(value=0.1, dtype=tf.float32, shape=[n_classes]),
            name='biases', dtype=tf.float32)
        # Unnormalized class scores (logits), one per class.
        resnet18_out = tf.add(tf.matmul(reshape, weights), biases, name='softmax_linear')
        print("---------resnet18_out:{}".format(resnet18_out))
    return resnet18_out
```
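To see why the global average pooling uses a 7x7 window, it helps to trace the spatial size of the feature map through the strides in the listing above. The sketch below rests on several assumptions that the article does not state: a 224x224 input, `SAME` padding inside `Conv_layer`, and a stride of 2 in `Max_pool_lrn`. The function names are hypothetical. With `SAME` padding, the output size is the input size divided by the stride, rounded up.

```python
import math


def same_padding_output(size, stride):
    """Spatial output size of a conv/pool layer with SAME padding."""
    return math.ceil(size / stride)


def trace_spatial_sizes(input_size=224, pool_stride=2):
    """Follow the strides used in inference() and record each output size.

    input_size and pool_stride are assumptions, not values from the article.
    """
    sizes = {}
    size = same_padding_output(input_size, 2)      # pre_conv, stride 2
    sizes["pre_conv"] = size
    size = same_padding_output(size, pool_stride)  # pooling1 (assumed stride)
    sizes["pooling1"] = size
    for name, stride in [("layer1", 1), ("layer2", 2), ("layer3", 2), ("layer4", 2)]:
        size = same_padding_output(size, stride)
        sizes[name] = size
    sizes["global_avg"] = same_padding_output(size, 7)  # 7x7 window, stride 7
    return sizes


sizes_224 = trace_spatial_sizes(224)
print(sizes_224)
```

Under these assumptions the sizes are 112, 56, 56, 28, 14, and 7, so the 7x7 average pool reduces `layer4` to a 1x1 map and the flattened feature has 512 values per image. The `print` statements in `inference` show the actual shapes, which is the easiest way to check these assumptions against a real run.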

3. Training process

Source code: https://gitee.com/fengyuxiexie/res-net-18