Building and installing NVIDIA, CUDA, TensorFlow, TensorRT, OpenVINO, FFmpeg, OpenCV, etc. on Linux (C++)

Frameworks and dependencies covered

- NVIDIA
  - Graphics driver
  - CUDA
  - cuDNN package
- Project dependencies
  - FFmpeg
  - OpenCV
  - Thrift
- TensorFlow
  - bazel
  - protobuf
- PyTorch
- TensorRT
- OpenVINO
- Caffe

I. Environment

System:

Ubuntu 16.04 LTS

Hardware:

Memory: 15.5 GiB

CPU: Intel® Core™ i7-8700 CPU @ 3.20GHz × 12

GPU: GeForce RTX 2070/PCIe/SSE2 (7952 MiB)

OS type: 64-bit

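Package mirrors (Tsinghua University and Aliyun):

For Ubuntu 16.04 (Tsinghua mirror):

# 1. Back up the source list first (copy sources.list to sources.list.bak)

$ cd /etc/apt/

$ sudo cp sources.list sources.list.bak

# 2. Replace the contents

$ sudo gedit sources.list

# Replace everything in the opened file with the following lines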

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ xenial-security main restricted universe multiverse

# 3. Refresh the package index

$ sudo apt-get update # refresh the package index

$ sudo apt-get upgrade # upgrade installed packages (takes a while)

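For Ubuntu 18.04 (Aliyun mirror):

On Ubuntu 18.04 I switched to the Aliyun mirror without any tedious file editing; just:

1. Open Software & Updates.

2. On the Ubuntu Software tab, click Source code.

3. In the Download from list, find China and choose the aliyun mirror.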

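II. Building and installing

1. NVIDIA

1.1 Installing the graphics driver

On Ubuntu 18.04:

Ubuntu 18.04 comes first because installing the driver there is very simple:

1. Open Software & Updates.

2. On the Additional Drivers tab (the list takes a few seconds to appear), select the NVIDIA driver you want, click Apply Changes, and wait for the installation to finish. You will be prompted to reboot; after rebooting, run nvidia-smi in a terminal to view the driver information.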

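On Ubuntu 16.04:

Reference: "Installing the NVIDIA driver on Ubuntu: two methods": https://blog.csdn.net/Tosonw/article/details/103159999

Method 1:

This method works on laptops, but on desktops be careful: after the driver is installed, the system can get stuck in a login loop (you enter your password, the screen flashes, and you are back at the login screen), and reinstalling the driver several times did not fix it. In that case, use Method 2 and install from the .run file.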

$ sudo apt-get purge 'nvidia*' # remove all NVIDIA packages

$ sudo apt-get autoremove # remove orphaned dependencies

$ sudo apt-get install -f # fix broken dependencies

$ sudo add-apt-repository ppa:graphics-drivers/ppa

$ sudo apt-get update

$ sudo apt-get install nvidia-418 nvidia-settings

# Reboot after the installation, then run nvidia-smi to see which driver is active.

$ nvidia-smi

Output with the driver installed:


+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.56       Driver Version: 418.56       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce RTX 2070    Off  | 00000000:01:00.0 Off |                  N/A |
| 40%   48C    P2    71W / 175W |   6018MiB /  7952MiB |     33%      Default |
+-------------------------------+----------------------+----------------------+

Method 2:

Download the driver package for your card: http://www.geforce.cn/drivers

Press Ctrl+Alt+F1 to switch to tty1 and log in with your account.

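$ sudo apt-get purge 'nvidia*' # remove all NVIDIA packages

$ sudo apt-get autoremove # remove orphaned dependencies

$ sudo apt-get install -f # fix broken dependencies

# Disable the open-source nouveau driver that ships with the system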

$ sudo chmod 666 /etc/modprobe.d/blacklist.conf

$ sudo gedit /etc/modprobe.d/blacklist.conf

# Append the following to the end of the file (this disables the third-party nouveau driver; it does not need to be reverted later):

blacklist nouveau

options nouveau modeset=0

# Regenerate the initramfs so the change takes effect

$ sudo update-initramfs -u

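# After rebooting, run lsmod | grep nouveau. No output means nouveau has been disabled. (As I recall, the installation could also continue without a reboot.)

# Stop the graphical session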

$ sudo service lightdm stop

# Switch to a root shell for the installation

$ sudo su

# Go to the directory containing the .run file and install the driver

$ bash NVIDIA-Linux-x86_64-430.40.run --no-opengl-files

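# --no-opengl-files installs only the driver files and skips the OpenGL files; without it, the GUI may restart endlessly.

About the --no-opengl-files option:

By default, the NVIDIA driver installs its own OpenGL libraries. Ubuntu already ships OpenGL libraries that the GUI depends on, so once the NVIDIA installer overwrites them, the GUI runs into problems when it dynamically links against OpenGL.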


The driver is now installed. Finally, re-enable the graphical session and press Ctrl+Alt+F7 to return to it:

# Start the graphical session

$ sudo service lightdm start

Run nvidia-smi to check that the driver works.
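The `lsmod | grep nouveau` check in Method 2 works because lsmod formats the contents of /proc/modules, where each line begins with a module name. A minimal C++ sketch of the same check, assuming the file contents have already been read into a string; the function name isModuleLoaded is hypothetical:

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Returns true if `name` is the first field (the module name) of any line
// in the given /proc/modules text.
bool isModuleLoaded(const std::string& procModules, const std::string& name) {
    std::istringstream in(procModules);
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string module;
        // Exact match on the module-name column only.
        if (fields >> module && module == name) {
            return true;
        }
    }
    return false;
}
```

Matching the first field exactly is a little stricter than `grep nouveau`, which also prints lines where nouveau only appears in another module's "used by" list. On a live system, read /proc/modules into a string with std::ifstream first.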

——————————————————————————————

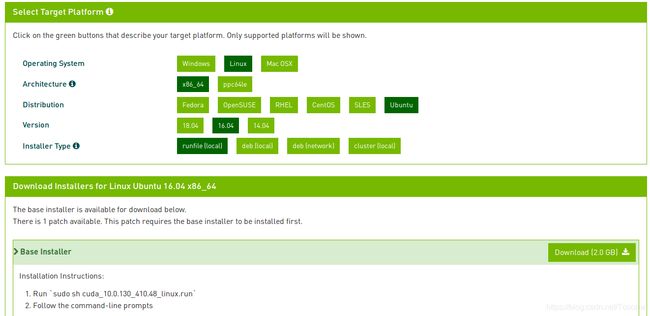

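1.2 Installing CUDA 10.0

Download the runfile:

Archived Releases: CUDA Toolkit 10.0

https://developer.nvidia.com/cuda-10.0-download-archive (login required)

Install:

1. Run sudo ./cuda_10.0.130_410.48_linux.run

2. Follow the command-line prompts (answer n to the bundled 410.48 driver, since a driver was already installed in 1.1):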

Do you accept the previously read EULA?

accept/decline/quit: accept

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 410.48?

(y)es/(n)o/(q)uit: n

Install the CUDA 10.0 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-10.0 ]:

Do you want to install a symbolic link at /usr/local/cuda?

(y)es/(n)o/(q)uit: y

Install the CUDA 10.0 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /home/toson ]:

Test:

Run the following commands:

$ cd /usr/local/cuda/samples/1_Utilities/deviceQuery

$ sudo make

$ ./deviceQuery

# Output like the following means everything works:

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 10.1, CUDA Runtime Version = 10.0, NumDevs = 1

Result = PASS

Note: to check the installed CUDA toolkit version, run $ nvcc -V
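The deviceQuery line above reports a driver version (10.1, also shown by nvidia-smi as "CUDA Version") and a runtime version (10.0, the installed toolkit). In code, cudaDriverGetVersion() and cudaRuntimeGetVersion() return these as integers encoded as 1000 × major + 10 × minor. The sketch below decodes that encoding and applies the basic check, assuming the CUDA 10.x rule that the driver's supported version must be at least the runtime version. The helper names are hypothetical, and the values are passed in directly so it compiles without the CUDA headers.

```cpp
#include <cassert>
#include <string>

// CUDA encodes versions as 1000 * major + 10 * minor
// (10000 for CUDA 10.0, 10010 for CUDA 10.1).
int encodeCudaVersion(int major, int minor) {
    return 1000 * major + 10 * minor;
}

// Turns an encoded version such as 10010 back into "10.1".
std::string decodeCudaVersion(int version) {
    int major = version / 1000;
    int minor = (version % 1000) / 10;
    return std::to_string(major) + "." + std::to_string(minor);
}

// Assumption (CUDA 10.x): a runtime works only if the driver supports
// at least that CUDA version.
bool driverSupportsRuntime(int driverVersion, int runtimeVersion) {
    return driverVersion >= runtimeVersion;
}
```

With the numbers from this machine, driverSupportsRuntime(10010, 10000) is true, which matches the PASS result.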

CUDA environment variables:

$ gedit ~/.bashrc

# Append the following lines to the end of the file:

export PATH=/usr/local/cuda/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
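In these lines, ${PATH:+:${PATH}} expands to a colon followed by the old value only when the variable is set and non-empty, so a variable that starts out empty does not end up with a trailing empty entry (in PATH, an empty entry means the current directory). A small C++ sketch of the same rule; the function name prependToPathList is hypothetical:

```cpp
#include <cassert>
#include <string>

// Mirrors NEW_DIRS${VAR:+:${VAR}}: add ":" plus the old value only when
// the old value is non-empty (an unset variable is treated as empty).
std::string prependToPathList(const std::string& dirs, const std::string& current) {
    if (current.empty()) {
        return dirs;
    }
    return dirs + ":" + current;
}
```

For example, with `const char* old = std::getenv("LD_LIBRARY_PATH");`, the new value would be `prependToPathList("/usr/local/cuda/lib64", old ? old : "")`.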

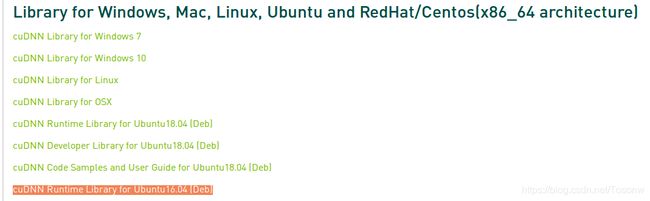

1.3 cuDNN 7.5

Download version 7.5: https://developer.nvidia.com/rdp/cudnn-archive (login required)

Extract it into the CUDA directory:

$ sudo tar -zxvf cudnn-10.0-linux-x64-v7.5.0.56.tgz -C /usr/local/

The website now offers .deb packages instead (I tried both and still recommend the method above):

The one I downloaded: https://developer.nvidia.com/compute/machine-learning/cudnn/secure/v7.5.0.56/prod/10.0_20190219/Ubuntu16_04-x64/libcudnn7_7.5.0.56-1%2Bcuda10.0_amd64.deb (login required)

$ sudo dpkg -i libcudnn7_7.5.0.56-1+cuda10.0_amd64.deb

However, the .deb installs into system directories under /usr rather than into /usr/local/cuda, so in the end I installed cuDNN by extracting cudnn-10.0-linux-x64-v7.5.0.56.tgz.
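After extracting the tgz, the cuDNN version can be confirmed from /usr/local/cuda/include/cudnn.h, which in cuDNN 7 defines CUDNN_MAJOR, CUDNN_MINOR and CUDNN_PATCHLEVEL and combines them as CUDNN_VERSION = MAJOR × 1000 + MINOR × 100 + PATCHLEVEL (the usual shell check is `grep CUDNN_MAJOR -A 2` on that file). A C++ sketch that parses those defines from the header text; the function names are hypothetical and the header contents are passed in as a string:

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Returns the integer value of "#define NAME value" in the header text,
// or -1 if no such define with an integer value is found.
int readDefine(const std::string& header, const std::string& name) {
    std::istringstream in(header);
    std::string line;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string directive, macro;
        int value = 0;
        // Lines whose value is not a plain integer fail to parse and are skipped.
        if (fields >> directive >> macro >> value &&
            directive == "#define" && macro == name) {
            return value;
        }
    }
    return -1;
}

// Same formula as cuDNN 7's CUDNN_VERSION macro.
int cudnnVersionFromHeader(const std::string& header) {
    int major = readDefine(header, "CUDNN_MAJOR");
    int minor = readDefine(header, "CUDNN_MINOR");
    int patch = readDefine(header, "CUDNN_PATCHLEVEL");
    if (major < 0 || minor < 0 || patch < 0) {
        return -1;
    }
    return major * 1000 + minor * 100 + patch;
}
```

For cuDNN 7.5.0 this gives 7500; TensorFlow's configure step only asks for the major version (7).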

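2. Project dependencies

2.1 Building and installing FFmpeg 4.1

Reference: https://blog.csdn.net/Tosonw/article/details/89883591

Download: https://github.com/FFmpeg/FFmpeg/archive/n4.1.tar.gz

Extract it and enter the directory. (Tip: extract it into a folder you create, such as /home/user/softs, because the source trees of software you build and install yourself are best kept rather than deleted.)

# Dependencies

$ sudo apt-get install libgtk2.0-dev libjpeg-dev libjasper-dev yasm

# Create a build folder inside the source directory for the build output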

$ mkdir build

$ cd build

# Configure

$ ../configure --enable-shared --disable-static

# To build FFmpeg with the QSV (Intel Quick Sync Video) hardware codec, configure with this instead:

#$ ../configure --enable-libmfx --enable-encoder=h264_qsv --enable-decoder=h264_qsv --enable-shared --disable-static

# Build

$ make -j12

# Install

$ sudo make install

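2.2 Building and installing OpenCV 4.1.0

For Python, installation is simple: $ pip install opencv-python

The following covers the C++ installation:

PS: You do not actually need to download and build OpenCV, because OpenVINO, installed later, already ships with OpenCV. Set the OpenCV path in CMakeLists.txt with set(OpenCV_DIR /opt/intel/openvino/opencv/cmake), and find_package(OpenCV REQUIRED) will then find it for linking.

Note: make sure FFmpeg is installed first.

For completeness, here are the steps for building from source:

Download: https://github.com/opencv/opencv/archive/4.1.0.tar.gz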

# Dependencies

$ sudo apt-get install build-essential

$ sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

$ sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

# Build

$ cd opencv-4.1.0

$ mkdir build

$ cd build

$ cmake -D CMAKE_BUILD_TYPE=Release ..

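# CMAKE_INSTALL_PREFIX sets the directory that make install installs into

#$ cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local ..

$ make -j12

# Install

#$ sudo make install # install into the shared /usr directories only if you need to; I did not

If you get undefined-reference errors such as: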

libemotion_sdk.so: undefined reference to `cv::imread(std::string const&, int)'

libemotion_sdk.so: undefined reference to `cv::VideoCapture::VideoCapture(std::string const&, int)'

https://github.com/opencv/opencv/issues/13000

Fix: before running cmake, add the following to CMakeLists.txt:

add_definitions(-D_GLIBCXX_USE_CXX11_ABI=0)

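Note: -D_GLIBCXX_USE_CXX11_ABI=0 makes libstdc++ use its pre-C++11 (GCC 4) ABI for std::string and related types. It is needed here because protobuf was built against the GCC 4 ABI, among other reasons.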
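The undefined references above are demangled with plain std::string, which suggests libemotion_sdk.so was compiled with the old ABI; libraries built with the C++11 ABI export std::__cxx11::basic_string in those signatures instead. All code linked together has to agree on this setting. libstdc++ exposes the current choice through the _GLIBCXX_USE_CXX11_ABI macro once any standard header is included, so a quick check could look like this (the function name is hypothetical):

```cpp
#include <cassert>
#include <string>  // any libstdc++ header defines _GLIBCXX_USE_CXX11_ABI

// Returns 1 for the C++11 ABI (std::__cxx11::basic_string), 0 for the old
// GCC 4 ABI, or -1 if the macro is absent (standard library is not libstdc++).
int cxx11AbiSetting() {
#if defined(_GLIBCXX_USE_CXX11_ABI)
    return _GLIBCXX_USE_CXX11_ABI;
#else
    return -1;
#endif
}
```

Compiling the same file with GCC 5 or later, once plainly and once with -D_GLIBCXX_USE_CXX11_ABI=0, typically yields 1 and 0 respectively.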

// After upgrading OpenCV to 4.1, FFmpeg prints the warning:
// [swscaler @ 0x7fe4e457a8c0] deprecated pixel format used, make sure you did set range correctly
// The cause: the pixel format in use (one of the full-range YUVJ* formats) is deprecated.
// This function maps each deprecated format to its non-deprecated equivalent.
extern "C" {
#include <libavutil/pixfmt.h>  // enum AVPixelFormat
}

AVPixelFormat ConvertDeprecatedFormat(enum AVPixelFormat format)
{
    switch (format)
    {
    case AV_PIX_FMT_YUVJ420P:
        return AV_PIX_FMT_YUV420P;
    case AV_PIX_FMT_YUVJ422P:
        return AV_PIX_FMT_YUV422P;
    case AV_PIX_FMT_YUVJ444P:
        return AV_PIX_FMT_YUV444P;
    case AV_PIX_FMT_YUVJ440P:
        return AV_PIX_FMT_YUV440P;
    default:
        return format;  // not deprecated, use as-is
    }
}
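The warning also asks to "set range correctly": the YUVJ* formats meant full-range (JPEG, 0-255) samples, and mapping YUVJ420P to YUV420P alone drops that information, so the converter would treat the pixels as limited range. A sketch of a variant that keeps the flag; to compile without FFmpeg it uses a hypothetical stand-in enum PixFmt whose names mirror AV_PIX_FMT_* but whose values are made up, and the names convertKeepingRange and FormatWithRange are likewise hypothetical:

```cpp
#include <cassert>

// Hypothetical stand-in for FFmpeg's enum AVPixelFormat (values are not FFmpeg's).
enum class PixFmt { YUV420P, YUV422P, YUV444P, YUV440P,
                    YUVJ420P, YUVJ422P, YUVJ444P, YUVJ440P, RGB24 };

struct FormatWithRange {
    PixFmt format;
    bool forceFullRange;  // true: source was YUVJ*, so treat it as full range
};

// Same mapping as ConvertDeprecatedFormat, plus the full-range flag.
FormatWithRange convertKeepingRange(PixFmt format) {
    switch (format) {
    case PixFmt::YUVJ420P: return {PixFmt::YUV420P, true};
    case PixFmt::YUVJ422P: return {PixFmt::YUV422P, true};
    case PixFmt::YUVJ444P: return {PixFmt::YUV444P, true};
    case PixFmt::YUVJ440P: return {PixFmt::YUV440P, true};
    default:               return {format, false};  // keep the decoder's own range
    }
}
```

In real FFmpeg code, the flag would typically be passed as the srcRange argument of sws_setColorspaceDetails() (1 means full/JPEG range) on the SwsContext created with the converted format.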

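2.3 Building and installing Thrift 0.12

Building Thrift often runs into OpenSSL dependency problems, so I recommend installing OpenSSL first, as described below.

If you hit the OpenSSL error /usr/local/ssl/lib/libssl.a: error adding symbols: Bad value, download OpenSSL and rebuild it yourself.

Cause: a shared library must be built with -fPIC, because it is loaded at an address in memory that is not fixed in advance, so its code has to be position-independent. Static libraries are usually built without -fPIC, but on 64-bit systems, linking such a static library into a shared object fails with a message asking you to recompile it with -fPIC. OpenSSL builds its static libraries without -fPIC, so they cannot be relocated, and on 64-bit machines they are almost unusable in this situation. The fix is to rebuild OpenSSL with -fPIC. Reference: https://www.cnblogs.com/yoyotl/p/7424967.html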

Download OpenSSL_1_1_1d: https://github.com/openssl/openssl/releases

# Extract, enter the directory, then build and install

./config -fPIC

make depend

sudo make install

Now build and install Thrift itself.

Download: http://thrift.apache.org/

Version 0.12.0 of Thrift is required: https://github.com/apache/thrift/archive/v0.12.0.tar.gz

# Dependencies:

$ sudo apt install automake libboost1.58-dev

$ sudo apt install byacc flex bison

$ sudo apt install libtool

# Build:

$ ./bootstrap.sh

# --prefix sets the install directory; I installed into a directory of my own

# --disable-*/--without-* options turn off some dependencies and features

$ ./configure --prefix=/opt/shtf/sas/thrift --disable-openssl --without-go

$ make -j12

$ sudo make install

2.4 Installing conda

Reference: https://www.jianshu.com/p/edaa744ea47d

Official conda site: https://conda.io/miniconda.html

I downloaded it from here: https://repo.continuum.io/miniconda/Miniconda3-4.5.4-Linux-x86_64.sh

$ bash Miniconda3-4.5.4-Linux-x86_64.sh

# Answer the prompts as follows

Do you accept the license terms? [yes|no]

[no] >>> yes

Miniconda3 will now be installed into this location:

/home/cyhc/miniconda3

- Press ENTER to confirm the location

- Press CTRL-C to abort the installation

- Or specify a different location below

[/home/cyhc/miniconda3] >>>

Do you wish the installer to prepend the Miniconda3 install location

to PATH in your /home/cyhc/.bashrc ? [yes|no]

[no] >>> no

The following commands are for entering the conda environment; you can ignore them for now.

$ cd ~/miniconda3/bin

$ sudo chmod +x activate

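$ . ./activate # the leading dot has the same effect as source

# When the prompt starts with (base), you are inside the conda environment.

Python environment setup:

Make the Python 3.6 inside conda the default Python:

# Open the shell startup file

$ gedit ~/.bashrc

# Append at the end: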

# added by Miniconda3 installer

export PATH="/home/user/miniconda3/bin:$PATH"

export LD_LIBRARY_PATH=/home/user/miniconda3/lib${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Note: replace user in these paths with your own user name.

3. TensorFlow

3.1 bazel-0.15.2

Building TensorFlow directly reports:

Cannot find bazel. Please install bazel.

Configuration finished

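TensorFlow is built with bazel, so install bazel first:

I downloaded version 0.15.2: https://github.com/bazelbuild/bazel/releases/download/0.15.2/bazel-0.15.2-installer-linux-x86_64.sh

Building bazel from source additionally requires Java, which is cumbersome, so I used the installer script: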

bazel-0.15.2-installer-linux-x86_64.sh

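# Install. Note: in the sudo line below, #--user is a shell comment and has no effect, so bazel is installed system-wide. To install into $HOME/bin instead, run ./bazel-0.15.2-installer-linux-x86_64.sh --user without sudo.

$ chmod +x bazel-0.15.2-installer-linux-x86_64.sh

$ sudo ./bazel-0.15.2-installer-linux-x86_64.sh #--user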

3.2 Building and installing TensorFlow 1.12.0

I downloaded version 1.12.0: https://github.com/tensorflow/tensorflow/archive/v1.12.0.tar.gz

After extracting, enter the directory in a terminal and run:

$ ./configure

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.15.2 installed.

Please specify the location of python. [Default is /home/toson/anaconda3/bin/python]:

Found possible Python library paths:

/home/toson/anaconda3/lib/python3.6/site-packages

Please input the desired Python library path to use. Default is [/home/toson/anaconda3/lib/python3.6/site-packages]

Do you wish to build TensorFlow with Apache Ignite support? [Y/n]:

n

No Apache Ignite support will be enabled for TensorFlow.

Do you wish to build TensorFlow with XLA JIT support? [Y/n]:

n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]:

n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]:

n

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]:

y

CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use. [Leave empty to default to CUDA 9.0]:

10.0

Please specify the location where CUDA 10.0 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7]:

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda]:

Do you wish to build TensorFlow with TensorRT support? [y/N]:

n

No TensorRT support will be enabled for TensorFlow.

Please specify the NCCL version you want to use. If NCCL 2.2 is not installed, then you can use version 1.3 that can be fetched automatically but it may have worse performance with multiple GPUs. [Default is 2.2]:

1.3

Please specify a list of comma-separated Cuda compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 6.1]:

Do you want to use clang as CUDA compiler? [y/N]:

n

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]:

n

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]:

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See tools/bazel.rc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

--config=gdr # Build with GDR support.

--config=verbs # Build with libverbs support.

--config=ngraph # Build with Intel nGraph support.

Configuration finished
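In the transcript above, the compute-capability prompt was left at its default of 6.1. The GeForce RTX 2070 in this machine is a Turing card with compute capability 7.5 (listed on the page the prompt links to), so answering 7.5, or 6.1,7.5 to cover both, targets it directly. nvcc names these targets by dropping the dot (7.5 becomes sm_75). A C++ sketch of that mapping for a comma-separated answer; the function name toSmArchs is hypothetical:

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Converts a list such as "6.1,7.5" into nvcc architecture names
// such as "sm_61" and "sm_75".
std::vector<std::string> toSmArchs(const std::string& capabilities) {
    std::vector<std::string> archs;
    std::istringstream in(capabilities);
    std::string item;
    while (std::getline(in, item, ',')) {
        std::string digits;
        for (char c : item) {
            if (c >= '0' && c <= '9') {
                digits += c;  // keep digits, drop '.' and spaces
            }
        }
        if (!digits.empty()) {
            archs.push_back("sm_" + digits);
        }
    }
    return archs;
}
```

Each extra capability adds build time and binary size, as the prompt warns, so listing only the GPUs you actually deploy on keeps the build manageable.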

Build (this takes a long time and keeps all CPU cores busy):

$ bazel build --config=opt --config=cuda //tensorflow:libtensorflow_cc.so

# After a fairly long wait, output like the following means the build succeeded:

Target //tensorflow:libtensorflow_cc.so up-to-date:

bazel-bin/tensorflow/libtensorflow_cc.so

INFO: Elapsed time: 1206.366s, Critical Path: 162.98s

INFO: 4956 processes: 4956 local.

INFO: Build completed successfully, 5069 total actions

Troubleshooting:

# If you get an undefined reference to libcublas.so,

# the CUDA environment variables are not set. Go back to "CUDA environment variables" above, set them, run $ source ~/.bashrc, and then rebuild TensorFlow.

bazel-out/host/bin/_solib_local/_U_S_Stensorflow_Scc_Cops_Saudio_Uops_Ugen_Ucc___Utensorflow/libtensorflow_framework.so: undefined reference to `[email protected]'

collect2: error: ld returned 1 exit status

Target //tensorflow:libtensorflow_cc.so failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 422.190s, Critical Path: 65.94s

INFO: 2532 processes: 2532 local.

FAILED: Build did NOT complete successfully

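After a successful build, libtensorflow_cc.so appears in bazel-bin/tensorflow.

C library: bazel build :libtensorflow.so

C++ library: bazel build :libtensorflow_cc.so

The required headers have to be copied out of the source tree:

bazel-genfiles/..., eigen/..., include/..., tf/...

Once you have copied out everything you need, you can run bazel clean to delete the intermediate build files, which take up a lot of space.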

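4. Installing PyTorch

For Python, installation is simple: $ pip install torch torchvision

The following covers the C++ setup:

The PyTorch C++ library (LibTorch) can be downloaded directly from the official site; just extract it, no installation is needed.

You need the build for CUDA 10: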


https://pytorch.org/get-started/locally/

https://download.pytorch.org/libtorch/cu100/libtorch-shared-with-deps-latest.zip

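The 1.3.0 version I downloaded at first had problems, so I looked for version 1.2.0.

I downloaded 1.2.0 from here:

https://download.pytorch.org/libtorch/cu100/libtorch-cxx11-abi-shared-with-deps-1.2.0.zip

This is the CPU-only build:

https://download.pytorch.org/libtorch/cpu/libtorch-cxx11-abi-shared-with-deps-1.2.0%2Bcpu.zip

Extract the download into a folder of your choice and it is ready to use.

The rest concerns code; skip it if you do not need it.

Reference it in CMakeLists.txt: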

set(Torch_DIR /home/toson/download_libs/libtorch/share/cmake/Torch)

find_package(Torch REQUIRED)

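The program then fails with an error that the tensorFromBlob() function cannot be found.

This may be because I was using PyTorch 1.1, but I could not find where to download LibTorch 1.0.

Looking into it, I found on GitHub that this function has been replaced:

https://github.com/pytorch/pytorch/pull/18780

https://github.com/pytorch/pytorch/issues/15426

Note: torch::CPU and torch::CUDA are deprecated as of PyTorch 1.0; write this instead: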

torch::from_blob(img_float.data, {1, 224, 224, 3}).to(torch::kCUDA)
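The shape {1, 224, 224, 3} describes the image as NHWC, which matches the interleaved layout of a continuous OpenCV cv::Mat. If the model expects NCHW (common for PyTorch vision models, but it depends on how yours was exported), the tensor is usually rearranged with `.permute({0, 3, 1, 2})`, and since permute only returns a view, `.contiguous()` is needed to make the memory actually CHW. The index arithmetic behind that rearrangement, as a plain C++ sketch without LibTorch; the function name hwcToChw is hypothetical:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Rearranges one interleaved HWC image into planar CHW order.
// Assumes hwc.size() == height * width * channels.
std::vector<float> hwcToChw(const std::vector<float>& hwc,
                            std::size_t height, std::size_t width,
                            std::size_t channels) {
    std::vector<float> chw(hwc.size());
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            for (std::size_t c = 0; c < channels; ++c) {
                // Source: pixel (y, x), channel c, stored interleaved.
                // Target: plane c, then row y, then column x.
                chw[c * height * width + y * width + x] =
                    hwc[(y * width + x) * channels + c];
            }
        }
    }
    return chw;
}
```

For a 1×2 RGB image stored as {R0, G0, B0, R1, G1, B1}, the result is {R0, R1, G0, G1, B0, B1}.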

5. Installing TensorRT

Download TensorRT for CUDA 10.0: https://developer.nvidia.com/tensorrt (login required)

Tar File Install Packages For Linux x86:

TensorRT 6.0.1.5 GA for Ubuntu 16.04 and CUDA 10.0 tar package

Then extract it into a directory of your choice and it is ready to use.

To use TensorRT from Python, install the following:

# Install TensorRT (choose the wheel that matches your Python version)

$ cd TensorRT-6.0.1.5/python

$ pip install tensorrt-6.0.1.5-cp36-none-linux_x86_64.whl

# Install UFF

$ cd ../uff

$ pip install uff-0.6.5-py2.py3-none-any.whl

# Install graphsurgeon

$ cd ../graphsurgeon

$ pip install graphsurgeon-0.4.1-py2.py3-none-any.whl

6. Installing OpenVINO

Reference blog post: https://blog.csdn.net/Tosonw/article/details/91980108

Download (Linux): https://software.intel.com/en-us/openvino-toolkit/choose-download/free-download-linux

You will be asked for some required information; choose version 2019 R2 and download the Full Package.

Downloaded file name: l_openvino_toolkit_p_2019.2.242.tgz

Extract it and enter the directory:

$ tar -xvzf l_openvino_toolkit_p_2019.2.242.tgz

$ cd l_openvino_toolkit_p_2019.2.242/

# If a previous version of the Intel Distribution of OpenVINO toolkit is installed, rename or delete these two directories:

# /home//inference_engine_samples

# /home//openvino_models

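# Install

$ sudo ./install_GUI.sh

The installer is straightforward; just keep clicking Next.

7. Building and installing Caffe

7.1 protobuf-3.6.1

TensorFlow actually bundles protobuf, so it would not normally need to be installed here, but TensorFlow's protobuf version is too old and my Caffe needs protobuf 3.6.1.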

Build and install steps:

Download protobuf 3.6.1: https://github.com/protocolbuffers/protobuf/releases/tag/v3.6.1

$ tar -zxvf protobuf-all-3.6.1.tar.gz

$ cd protobuf-3.6.1

$ ./configure

$ make

$ make check

$ sudo make install

$ sudo ldconfig # refresh the shared library cache so the new libprotobuf is found
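With two protobuf versions on the machine (TensorFlow's bundled copy and 3.6.1 in /usr/local), it helps to confirm which one a build actually picks up. protobuf's google/protobuf/stubs/common.h defines GOOGLE_PROTOBUF_VERSION as major × 1000000 + minor × 1000 + patch, so 3.6.1 is 3006001, and `protoc --version` prints the compiler's version. A sketch of that encoding with hypothetical helper names:

```cpp
#include <cassert>
#include <string>

// Same encoding as GOOGLE_PROTOBUF_VERSION: 3.6.1 -> 3006001.
int protobufVersionNumber(int major, int minor, int patch) {
    return major * 1000000 + minor * 1000 + patch;
}

// Formats an encoded version back into "major.minor.patch".
std::string protobufVersionString(int version) {
    return std::to_string(version / 1000000) + "." +
           std::to_string((version / 1000) % 1000) + "." +
           std::to_string(version % 1000);
}
```

In a program that includes the protobuf headers, comparing GOOGLE_PROTOBUF_VERSION with protobufVersionNumber(3, 6, 1) shows whether the intended headers were found, and GOOGLE_PROTOBUF_VERIFY_VERSION checks at run time that the headers and the linked library are compatible.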

7.2 boost-1.58

Caffe depends on boost 1.58 at build time.

Download: https://nchc.dl.sourceforge.net/project/boost/boost/1.58.0/boost_1_58_0.tar.gz

For the build procedure, search online.

7.3 Caffe

Download from GitHub: https://github.com/BVLC/caffe

First, the GPU build:

# Dependencies (none listed yet)

#$ sudo apt-get install

$ cd /path/to/your/folder

$ git clone https://github.com/BVLC/caffe.git

$ cd caffe

# Edit the build options in Makefile.config (this is where, for example, CPU_ONLY can be turned on).

$ cp Makefile.config.example Makefile.config

$ gedit Makefile.config

# For a GPU build, enable cuDNN here:

# I turned USE_CUDNN on and left CPU_ONLY off

USE_CUDNN := 1

# CPU_ONLY := 1

USE_OPENCV := 0

OPENCV_VERSION := 3

CUDA_DIR := /usr/local/cuda

BLAS := open

# Build

$ make clean # if the build fails in strange ways, just clean and rebuild

$ make all -j12

# make runtest -j16

# make pycaffe

Troubleshooting:

fatal error: gflags/gflags.h: No such file or directory

# Fix:

$ sudo apt-get install libgflags-dev

The CPU build is the same as above,

except that when editing Makefile.config ($ gedit Makefile.config):

# turn USE_CUDNN off and CPU_ONLY on

USE_CUDNN := 0

CPU_ONLY := 1

III. Miscellaneous

Ubuntu 18.04's built-in video player cannot play H.264:

Error: H.264(High Profile) decoder is required to play the file, but is not installed

$ sudo apt-get install ubuntu-restricted-extras

Answer yes at both prompts to install it.