OpenPose-tensorflow Code Walkthrough (3): Building the Network Structure, Net.py

Preface

This openpose-tensorflow project is my own implementation, so some parts are written fairly simply, but the code is easy to read and easy to use.

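Paper translation || openpose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields

Implementation || Human pose estimation with openpose using opencv

OpenPose-tensorflow Code Walkthrough (1): Project Overview && Preparation Before Training

OpenPose-tensorflow Code Walkthrough (2): Data Augmentation and Processing, dataset.py

OpenPose-tensorflow Code Walkthrough (3): Building the Network Structure, Net.py

OpenPose-tensorflow Code Walkthrough (4): Training Script, train.py

OpenPose-tensorflow Code Walkthrough (5): Prediction Code, predict.py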

1 The openpose network structure

- [Network structure]

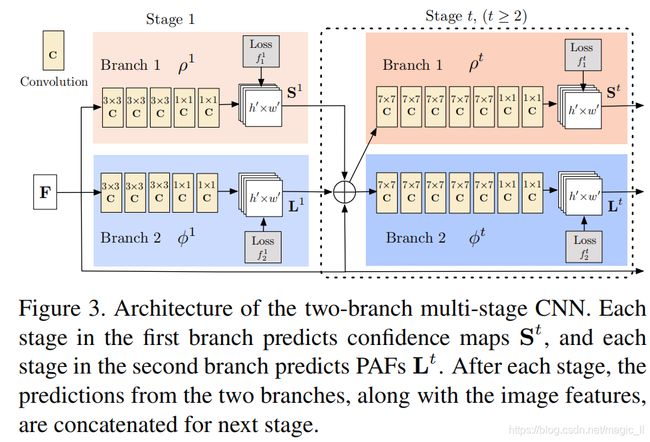

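The openpose backbone is taken from VGG: the code in Net.py uses the layers conv1_1 through conv4_2, which is the VGG-19 layout used in the paper, followed by two extra convolutions. The resulting feature map then passes through two branches, one predicting the keypoints and one predicting how the keypoints are connected.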

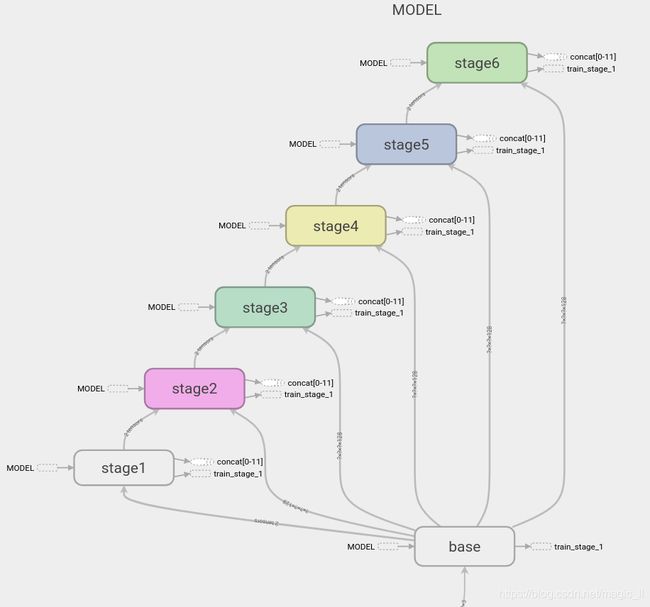

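The feature maps produced by each stage are concatenated with the output feature map of the backbone and fed into the next stage. This is repeated for several stages (the number can be changed; the code in Net.py builds six), and a loss is attached after every stage.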
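The concat described above determines how many input channels every later stage sees. The following is a minimal sketch of that bookkeeping in plain Python. The keypoint and limb counts (18 and 19) are assumed COCO-style values chosen for illustration; in the project they come from `cfg.OP.cpm_num` and `cfg.OP.paf_num`. The function name `stage_input_channels` is hypothetical.

```python
# Channel bookkeeping for the stage-to-stage concat (plain Python, no TensorFlow).
# ASSUMPTION: 18 keypoints and 19 limbs are illustrative values only; the real
# numbers are read from cfg.OP.cpm_num and cfg.OP.paf_num.
BASE_CHANNELS = 128  # output channels of the last backbone conv (conv4_4_CPM)


def stage_input_channels(paf_channels, cpm_channels, base_channels=BASE_CHANNELS):
    """Channels seen by stage t >= 2 after concatenating PAF, CPM and backbone maps."""
    return paf_channels + cpm_channels + base_channels


num_keypoints = 18                  # hypothetical value
num_limbs = 19                      # hypothetical value
cpm_channels = num_keypoints + 1    # +1 for the background map
paf_channels = num_limbs * 2        # one (x, y) vector field per limb

print(stage_input_channels(paf_channels, cpm_channels))  # 38 + 19 + 128 = 185
```

Because the concat is along the channel axis, all three inputs must share the same spatial size, which holds here because every stage convolution uses stride 1.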

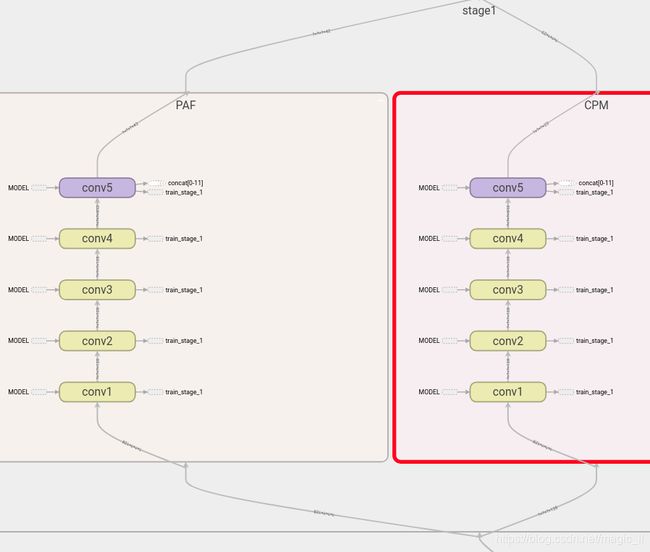

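The figures in this section show the structure diagram from the paper, the network graph as displayed in TensorBoard, and the internal structure of a stage.

- [Loss function]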

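The output feature maps of every stage are compared against the label to compute a loss, using the mean squared error. This can be understood as intermediate supervision: at every stage the network is pushed to converge toward the label, which speeds up training and improves prediction accuracy.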
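The per-stage supervision can be illustrated with a small plain-Python sketch. It mirrors the way `loss_layer` in Net.py averages the PAF and CPM errors within a stage and then averages across stages, but it works on flat lists of floats instead of tensors. The helper names `mse` and `aggregate_losses` and the toy numbers are assumptions made for this example.

```python
# Intermediate supervision on toy data (plain Python, flat lists instead of tensors).
# ASSUMPTION: helper names and numbers are illustrative, not taken from the project.

def mse(pred, label):
    """Mean squared error between two equally long lists."""
    return sum((p - l) ** 2 for p, l in zip(pred, label)) / len(pred)


def aggregate_losses(paf_preds, cpm_preds, paf_label, cpm_label):
    """Return (total, total_paf, total_cpm) averaged over all stages."""
    paf_losses = [mse(p, paf_label) for p in paf_preds]
    cpm_losses = [mse(c, cpm_label) for c in cpm_preds]
    per_stage = [(a + b) / 2 for a, b in zip(paf_losses, cpm_losses)]
    total = sum(per_stage) / len(per_stage)
    total_paf = sum(paf_losses) / len(paf_losses)
    total_cpm = sum(cpm_losses) / len(cpm_losses)
    return total, total_paf, total_cpm


paf_label, cpm_label = [0.0, 1.0], [1.0, 0.0]
paf_preds = [[0.0, 0.0], [0.0, 1.0]]   # stage 1 is off, stage 2 matches the label
cpm_preds = [[0.0, 0.0], [1.0, 0.0]]

total, total_paf, total_cpm = aggregate_losses(paf_preds, cpm_preds, paf_label, cpm_label)
print(total, total_paf, total_cpm)
```

With this averaging scheme the sum of the two branch losses is always twice the combined loss, so `total_loss_ll` in Net.py carries the same information as `total_loss` at a different scale.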

==========================================================================================================


    # Net.py: defines the network structure and the loss function
    from opt import *
    from base_net import *
    import tensorflow as tf


    class OpenPose(object):
        def __init__(self, input_data, trainable):
            self.cpm_num = cfg.OP.cpm_num + 1  # number of keypoints + background class
            self.paf_num = cfg.OP.paf_num      # number of keypoint connections x 2
            self.trainable = trainable
            self.PAF = []  # PAF output of every stage
            self.CPM = []  # confidence-map output of every stage
            self.__build_network(input_data)

        def __build_network(self, input_data):
            with tf.variable_scope("MODEL"):
                # Backbone: VGG-style feature extractor with three 2x2 poolings
                with tf.variable_scope("base"):
                    x = convolutional(input_data, 64, (3, 3), (1, 1), trainable=self.trainable, name="conv1_1")
                    x = convolutional(x, 64, (3, 3), (1, 1), trainable=self.trainable, name="conv1_2")
                    x = max_pool(x, (2, 2), (2, 2), name='pool1', padding='VALID')
                    x = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv2_1')
                    x = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv2_2')
                    x = max_pool(x, (2, 2), (2, 2), name='pool2', padding='VALID')
                    x = convolutional(x, 256, (3, 3), (1, 1), trainable=self.trainable, name='conv3_1')
                    x = convolutional(x, 256, (3, 3), (1, 1), trainable=self.trainable, name='conv3_2')
                    x = convolutional(x, 256, (3, 3), (1, 1), trainable=self.trainable, name='conv3_3')
                    x = convolutional(x, 256, (3, 3), (1, 1), trainable=self.trainable, name='conv3_4')
                    x = max_pool(x, (2, 2), (2, 2), name='pool3', padding='VALID')
                    x = convolutional(x, 512, (3, 3), (1, 1), trainable=self.trainable, name='conv4_1')
                    x = convolutional(x, 512, (3, 3), (1, 1), trainable=self.trainable, name='conv4_2')
                    x = convolutional(x, 256, (3, 3), (1, 1), trainable=self.trainable, name='conv4_3_CPM')
                    base = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv4_4_CPM', bn=False)

                # Stage 1: both branches read the backbone features directly
                with tf.variable_scope("stage1"):
                    with tf.variable_scope("PAF"):
                        x = convolutional(base, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv1')
                        x = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv2')
                        x = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv3')
                        x = convolutional(x, 512, (1, 1), (1, 1), trainable=self.trainable, name='conv4')
                        paf_stage = convolutional(x, self.paf_num, (1, 1), (1, 1), trainable=self.trainable,
                                                  name='conv5', activate=False, bn=False)
                        self.PAF.append(paf_stage)
                    with tf.variable_scope("CPM"):
                        x = convolutional(base, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv1')
                        x = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv2')
                        x = convolutional(x, 128, (3, 3), (1, 1), trainable=self.trainable, name='conv3')
                        x = convolutional(x, 512, (1, 1), (1, 1), trainable=self.trainable, name='conv4')
                        cpm_stage = convolutional(x, self.cpm_num, (1, 1), (1, 1), trainable=self.trainable,
                                                  name='conv5', activate=False, bn=False)
                        self.CPM.append(cpm_stage)

                # Stages 2 to 6: input is the previous PAF and CPM outputs concatenated with the backbone features
                for stage in range(2, 7):
                    with tf.variable_scope("stage{}".format(stage)):
                        concat = route([paf_stage, cpm_stage, base], name="concat")
                        with tf.variable_scope("PAF"):
                            x = convolutional(concat, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv1')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv2')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv3')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv4')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv5')
                            x = convolutional(x, 128, (1, 1), (1, 1), trainable=self.trainable, name='conv6')
                            paf_stage = convolutional(x, self.paf_num, (1, 1), (1, 1), trainable=self.trainable,
                                                      name='conv7', activate=False, bn=False)
                            self.PAF.append(paf_stage)
                        with tf.variable_scope("CPM"):
                            x = convolutional(concat, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv1')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv2')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv3')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv4')
                            x = convolutional(x, 128, (7, 7), (1, 1), trainable=self.trainable, name='conv5')
                            x = convolutional(x, 128, (1, 1), (1, 1), trainable=self.trainable, name='conv6')
                            cpm_stage = convolutional(x, self.cpm_num, (1, 1), (1, 1), trainable=self.trainable,
                                                      name='conv7', activate=False, bn=False)
                            self.CPM.append(cpm_stage)

        def loss_layer(self, heatmap_node, vectmap_node):
            with tf.device(tf.DeviceSpec(device_type="GPU")):
                losses = []
                last_losses_l1 = []  # per-stage PAF losses
                last_losses_l2 = []  # per-stage CPM (heatmap) losses
                for idx, (PAF, CPM) in enumerate(zip(self.PAF, self.CPM)):
                    # loss_l1 = tf.nn.l2_loss(tf.concat(PAF, axis=0) - vectmap_node, name='loss_l1_stage%d' % (idx))
                    # loss_l2 = tf.nn.l2_loss(tf.concat(CPM, axis=0) - heatmap_node, name='loss_l2_stage%d' % (idx))
                    # Mean squared error of each branch against its label
                    loss_l1 = tf.reduce_mean((tf.concat(PAF, axis=0) - vectmap_node) ** 2, name='loss_l1_stage%d' % (idx))
                    loss_l2 = tf.reduce_mean((tf.concat(CPM, axis=0) - heatmap_node) ** 2, name='loss_l2_stage%d' % (idx))
                    losses.append(tf.reduce_mean([loss_l1, loss_l2]))
                    last_losses_l1.append(loss_l1)
                    last_losses_l2.append(loss_l2)

                self.total_loss = tf.reduce_mean(losses)
                self.total_loss_paf = tf.reduce_mean(last_losses_l1)
                self.total_loss_heat = tf.reduce_mean(last_losses_l2)
                self.total_loss_ll = self.total_loss_paf + self.total_loss_heat
                return self.total_loss, self.total_loss_paf, self.total_loss_heat, self.total_loss_ll

        def get_lastlayer(self):
            # Outputs of the final stage, used for prediction
            return self.PAF[-1], self.CPM[-1]
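To see what spatial size the maps returned by `get_lastlayer` have, one can trace the three `VALID` 2x2 poolings in the backbone, since every convolution in the network uses stride 1 and keeps the size. The sketch below does this in plain Python. The input resolution of 368 is an assumed example value, and the function names are hypothetical.

```python
# Spatial size after the backbone (plain Python, one axis at a time).
# ASSUMPTION: 368 is only an example input resolution.

def pool_out(size, kernel=2, stride=2):
    """Output length of a VALID pooling window along one axis."""
    return (size - kernel) // stride + 1


def base_output_size(size, num_pools=3):
    """Apply the backbone's poolings; stride-1 SAME convs leave the size unchanged."""
    for _ in range(num_pools):
        size = pool_out(size)
    return size


height, width = 368, 368
print(base_output_size(height), base_output_size(width))  # 46 46
```

For inputs whose sides are multiples of 8 this is simply a downsampling by 8; for other sizes `VALID` pooling drops the trailing row or column, so the labels have to be generated at the same reduced size.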

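2 Definition of the basic network layers

- The layers provided here are the convolution, pooling, concatenation (route), residual, and upsampling layers. The last two are not used by this network. (They are fairly simple, so they are not described in detail.)

- Points worth noting: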

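(1) Different initializations lead to different training behavior. The openpose network does not use BN, so a bias has to be set. On the choice of bias initializer: with tf.constant_initializer(0.0), convergence during training was delayed; with tf.contrib.layers.xavier_initializer(), training speed and results improved.

(2) Using tf.nn.leaky_relu delayed the convergence of the network, so tf.nn.relu is used instead.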
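The two notes above can be made concrete with a small plain-Python sketch. It assumes that `tf.contrib.layers.xavier_initializer()` is used with its default uniform setting, in which case values are drawn from `[-limit, limit]` with `limit = sqrt(6 / (fan_in + fan_out))`; the fan computation below is my reading of that rule, not code from the project. The two activation functions are written for scalars, with `alpha=0.1` taken from the commented-out line in `convolutional`.

```python
import math

# ASSUMPTION: this mirrors the Xavier/Glorot uniform rule; function names are illustrative.

def fans(shape):
    """fan_in and fan_out for a weight shape whose last two dims are (in, out)."""
    if len(shape) == 1:
        # A bias vector: both fans equal its length
        return shape[0], shape[0]
    receptive = 1
    for dim in shape[:-2]:
        receptive *= dim
    return shape[-2] * receptive, shape[-1] * receptive


def xavier_uniform_limit(shape):
    """Half-width of the uniform range used by Xavier initialization."""
    fan_in, fan_out = fans(shape)
    return math.sqrt(6.0 / (fan_in + fan_out))


def relu(x):
    return max(0.0, x)


def leaky_relu(x, alpha=0.1):
    return max(alpha * x, x)


print(xavier_uniform_limit((3, 3, 64, 128)))  # limit for a 3x3 conv kernel, 64 -> 128 channels
print(xavier_uniform_limit((128,)))           # limit for a 128-element bias
print(relu(-2.0), leaky_relu(-2.0))
```

Under this rule a bias starts as a small non-zero value rather than exactly zero, which is the difference between the two initializers compared in note (1).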

    import tensorflow as tf  # TensorFlow 1.x (tf.contrib is required)

    # Candidate weight initializers; index 1 (Xavier) is the one in use
    init_weights = [tf.random_normal_initializer(stddev=0.01), tf.contrib.layers.xavier_initializer()]
    init_weight = init_weights[1]


    def convolutional(input_data, output_C, kernel_size, stride_size, trainable, name, activate=True, bn=False):
        with tf.variable_scope(name):
            if stride_size[0] != 1 or stride_size[1] != 1:
                # Strided conv: pad explicitly, then use VALID padding
                pad_h, pad_w = (kernel_size[0] - 2) // 2 + 1, (kernel_size[1] - 2) // 2 + 1
                paddings = tf.constant([[0, 0], [pad_h, pad_h], [pad_w, pad_w], [0, 0]])
                input_data = tf.pad(input_data, paddings, 'CONSTANT')
                strides = (1, stride_size[0], stride_size[1], 1)
                padding = 'VALID'
            else:
                strides = (1, 1, 1, 1)
                padding = "SAME"

            input_C = int(input_data.get_shape()[-1])
            filters_shape = (kernel_size[0], kernel_size[1], input_C, output_C)
            weight = tf.get_variable(name='weight', dtype=tf.float32, trainable=trainable,
                                     shape=filters_shape, initializer=init_weight)
            conv = tf.nn.conv2d(input=input_data, filter=weight, strides=strides, padding=padding)

            if bn:
                conv = tf.layers.batch_normalization(conv, beta_initializer=tf.zeros_initializer(),
                                                     gamma_initializer=tf.ones_initializer(),
                                                     moving_mean_initializer=tf.zeros_initializer(),
                                                     moving_variance_initializer=tf.ones_initializer(),
                                                     training=trainable, name="bn")
            else:
                # No BN, so add a bias; it is initialized with the same Xavier initializer
                bias = tf.get_variable(name='bias', shape=filters_shape[-1], trainable=trainable,
                                       dtype=tf.float32, initializer=init_weight)
                conv = tf.nn.bias_add(conv, bias)

            # if activate == True: conv = tf.nn.leaky_relu(conv, alpha=0.1)
            if activate:
                conv = tf.nn.relu(conv)
        return conv


    def residual_block(input_data, filter_num1, filter_num2, trainable, name):
        short_cut = input_data
        with tf.variable_scope(name):
            input_data = convolutional(input_data, filter_num1, (1, 1), (1, 1), trainable=trainable, name='conv1')
            input_data = convolutional(input_data, filter_num2, (3, 3), (1, 1), trainable=trainable, name='conv2')
            residual_output = input_data + short_cut
        return residual_output


    def route(input_layer, name):
        # Concatenate a list of feature maps along the channel axis
        with tf.variable_scope(name):
            output = tf.concat(input_layer, axis=-1)
        return output


    def upsample(input_data, name, method="deconv"):
        assert method in ["resize", "deconv"]
        if method == "resize":
            with tf.variable_scope(name):
                input_shape = tf.shape(input_data)
                output = tf.image.resize_nearest_neighbor(input_data, (input_shape[1] * 2, input_shape[2] * 2))
        if method == "deconv":
            # Replace resize_nearest_neighbor with conv2d_transpose to support TensorRT optimization
            numm_filter = input_data.shape.as_list()[-1]
            output = tf.layers.conv2d_transpose(input_data, numm_filter, kernel_size=2, padding='same',
                                                strides=(2, 2), kernel_initializer=init_weight)
        return output


    def max_pool(input_data, kernel_size, stride_size, name, padding=None):
        return tf.nn.max_pool(input_data, ksize=[1, kernel_size[0], kernel_size[1], 1],
                              strides=[1, stride_size[0], stride_size[1], 1], padding=padding, name=name)
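The padding logic in `convolutional` has two cases: explicit padding followed by `VALID` when the stride is not 1, and `SAME` otherwise. The sketch below reproduces the resulting output size along one axis in plain Python. It is simplified to a single axis, whereas the original checks both stride components together, and the function name `conv_output_size` is hypothetical.

```python
# Output size of `convolutional` along one axis (plain Python).
# ASSUMPTION: single-axis simplification of the two padding branches.

def conv_output_size(size, kernel, stride):
    if stride != 1:
        # Same explicit padding as in convolutional(), followed by a VALID conv
        pad = (kernel - 2) // 2 + 1
        return (size + 2 * pad - kernel) // stride + 1
    # Stride 1 with SAME padding keeps the spatial size
    return size


print(conv_output_size(368, 3, 2))  # 184
print(conv_output_size(46, 7, 1))   # 46
```

Every call in Net.py passes a stride of `(1, 1)`, so only the `SAME` branch is exercised by the openpose network and all downsampling comes from the pooling layers.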