PCL Point Cloud Library Hands-On Series: NARF Keypoints (with Complete Code)

PCL: Keypoints

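Keypoints, also called interest points, are a set of stable, distinctive points on a 2D image, a 3D point cloud, or a surface model that can be obtained by defining a detection criterion. Technically, the number of keypoints is far smaller than the amount of data in the original point cloud or image. Combined with local feature descriptors they form keypoint descriptors, which are commonly used as a compact representation of the original data that remains representative and descriptive. This speeds up downstream processing such as recognition and tracking. Keypoint extraction is an indispensable technique in both 2D and 3D information processing.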

1 NARF Keypoint Extraction

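NARF (Normal Aligned Radial Feature) keypoints were proposed for recognizing objects in ==range images==. NARF keypoint extraction has three requirements: the extraction must take edge and surface-change information into account; keypoint positions must be stable, so that they can be detected repeatedly from different viewpoints; and each keypoint must lie where there is a stable support region, so that a descriptor can be computed and a unique normal can be estimated.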

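Extraction steps:

- Traverse every range image point and detect edges by looking for positions where the depth changes abruptly within the local neighborhood.

- Traverse every range image point and, from the surface variation in its neighborhood, compute a coefficient that measures the surface change, together with the dominant direction of that change.

- Use the dominant directions found in the second step to compute an interest value that expresses how much that direction differs from the others and how the surface changes at that point, i.e. how stable the point is.

- Smooth the interest values.

- Apply non-maximum suppression to obtain the final keypoints, which are the NARF keypoints.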
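The first and last steps above can be sketched without PCL. The block below is a minimal illustration, not PCL's implementation: `Grid`, `detectDepthJumps`, and `nonMaximumSuppression` are hypothetical names, the edge test is a plain range-difference threshold on 4-connected neighbors, and the suppression uses a fixed 8-pixel neighborhood, whereas NARF works with a support size in meters.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical row-major grid standing in for a range image or an interest map.
struct Grid {
    int width;
    int height;
    std::vector<float> values;  // size == width * height
    float at(int x, int y) const {
        return values[static_cast<std::size_t>(y) * width + x];
    }
};

// Step 1 (simplified): mark a pixel as an edge candidate when the range to any
// 4-connected neighbor differs by more than jump_threshold (in meters).
std::vector<bool> detectDepthJumps(const Grid& range, float jump_threshold) {
    std::vector<bool> is_edge(range.values.size(), false);
    const int dx[4] = {1, -1, 0, 0};
    const int dy[4] = {0, 0, 1, -1};
    for (int y = 0; y < range.height; ++y) {
        for (int x = 0; x < range.width; ++x) {
            for (int k = 0; k < 4; ++k) {
                const int nx = x + dx[k];
                const int ny = y + dy[k];
                if (nx < 0 || ny < 0 || nx >= range.width || ny >= range.height) continue;
                if (std::fabs(range.at(x, y) - range.at(nx, ny)) > jump_threshold)
                    is_edge[static_cast<std::size_t>(y) * range.width + x] = true;
            }
        }
    }
    return is_edge;
}

// Step 5 (simplified): keep a pixel only if its interest value reaches
// min_interest and is strictly greater than all of its 8 neighbors.
std::vector<int> nonMaximumSuppression(const Grid& interest, float min_interest) {
    std::vector<int> keypoint_indices;
    for (int y = 0; y < interest.height; ++y) {
        for (int x = 0; x < interest.width; ++x) {
            const float v = interest.at(x, y);
            if (v < min_interest) continue;
            bool is_max = true;
            for (int oy = -1; oy <= 1 && is_max; ++oy) {
                for (int ox = -1; ox <= 1; ++ox) {
                    if (ox == 0 && oy == 0) continue;
                    const int nx = x + ox;
                    const int ny = y + oy;
                    if (nx < 0 || ny < 0 || nx >= interest.width || ny >= interest.height) continue;
                    if (interest.at(nx, ny) >= v) { is_max = false; break; }
                }
            }
            if (is_max) keypoint_indices.push_back(y * interest.width + x);
        }
    }
    return keypoint_indices;
}
```

The returned indices are linear, row-major indices into the grid, which is also how the keypoint indices are used later in this post when they index `range_image.points`.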

1.1 What the Program Does

Extract NARF keypoints from a range image.

1.2 Key Function

//1. Create a range image from a point cloud

void pcl::RangeImage::createFromPointCloud(

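const PointCloudType& point_cloud, // input point cloud

float angular_resolution = pcl::deg2rad (0.5f), // angular difference between neighboring pixels in the image (in radians)

float max_angle_width = pcl::deg2rad (360.0f), // angle defining the horizontal bounds of the sensor (in radians)

float max_angle_height = pcl::deg2rad (180.0f), // angle defining the vertical bounds of the sensor (in radians)

const Eigen::Affine3f& sensor_pose = Eigen::Affine3f::Identity (), // affine matrix defining the pose of the sensor

RangeImage::CoordinateFrame coordinate_frame = CAMERA_FRAME, // coordinate frame

float noise_level = 0.0f, // distance in meters within which the z-buffer uses the mean of the points instead of the minimum; with 0.0 it behaves like a normal z-buffer and always takes the minimum per cell

float min_range = 0.0f, // minimum visible range (default 0); if greater than 0, points closer than min_range are ignored

int border_size = 0 // border size; if greater than 0, a border of points not visible from the current viewpoint is left around the image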

)
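To make the parameters above more concrete, here is a rough sketch of what building a range image involves when `noise_level` is 0: each point is converted to two viewing angles, mapped to a pixel using the angular resolution, and the smallest range per pixel is kept. This is a simplified stand-in, not PCL's code. `Point3`, `SimpleRangeImage`, and `buildRangeImage` are hypothetical names, the sensor is assumed to sit at the origin in a camera-style frame (x right, y down, z forward), and `min_range`, `border_size`, and the sensor pose are ignored.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point3 { float x, y, z; };  // hypothetical stand-in for pcl::PointXYZ

struct SimpleRangeImage {          // hypothetical, far simpler than pcl::RangeImage
    int width = 0;
    int height = 0;
    std::vector<float> ranges;     // row-major; -infinity marks an unobserved cell
};

// All angles are in radians. The sensor is assumed to be at the origin.
SimpleRangeImage buildRangeImage(const std::vector<Point3>& cloud,
                                 float angular_resolution,
                                 float max_angle_width,
                                 float max_angle_height) {
    const float unseen = -std::numeric_limits<float>::infinity();
    SimpleRangeImage image;
    // Image size follows directly from the field of view and the resolution.
    image.width = static_cast<int>(std::lround(max_angle_width / angular_resolution));
    image.height = static_cast<int>(std::lround(max_angle_height / angular_resolution));
    image.ranges.assign(static_cast<std::size_t>(image.width) * image.height, unseen);

    for (const Point3& p : cloud) {
        const float range = std::sqrt(p.x * p.x + p.y * p.y + p.z * p.z);
        if (range <= 0.0f) continue;  // a point at the sensor origin has no direction
        const float angle_x = std::atan2(p.x, p.z);  // horizontal angle
        const float sin_y = std::fmax(-1.0f, std::fmin(1.0f, p.y / range));
        const float angle_y = std::asin(sin_y);      // vertical angle
        const int col = static_cast<int>(
            std::floor((angle_x + 0.5f * max_angle_width) / angular_resolution));
        const int row = static_cast<int>(
            std::floor((angle_y + 0.5f * max_angle_height) / angular_resolution));
        if (col < 0 || col >= image.width || row < 0 || row >= image.height) continue;
        float& cell = image.ranges[static_cast<std::size_t>(row) * image.width + col];
        // Plain z-buffer: keep the closest point seen through this pixel.
        if (cell == unseen || range < cell) cell = range;
    }
    return image;
}
```

With a resolution of 1 degree and a 360 by 180 degree field of view this yields a 360 by 180 image. Two points on the same viewing ray land in the same pixel, and only the nearer one survives.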

1.3 Complete Code

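/* \author Bastian Steder */

#include <iostream>

#include <pcl/range_image/range_image.h>

#include <pcl/io/pcd_io.h>

#include <pcl/visualization/range_image_visualizer.h>

#include <pcl/visualization/pcl_visualizer.h>

#include <pcl/features/range_image_border_extractor.h>

#include <pcl/keypoints/narf_keypoint.h>

#include <pcl/console/parse.h>

#include <pcl/common/file_io.h> // for getFilenameWithoutExtension

typedef pcl::PointXYZ PointType;

// --------------------

// -----Parameters-----

// --------------------

// Default values follow the upstream PCL tutorial this listing is based on.

float angular_resolution = 0.5f;

float support_size = 0.2f;

pcl::RangeImage::CoordinateFrame coordinate_frame = pcl::RangeImage::CAMERA_FRAME;

bool setUnseenToMaxRange = false;

// --------------

// -----Help-----

// --------------

void

printUsage(const char* progName)

{

std::cout << "\n\nUsage: " << progName << " [options] <scene.pcd>\n\n"

<< "Options:\n"

<< "-------------------------------------------\n"

<< "-r <float> angular resolution in degrees (default " << angular_resolution << ")\n"

<< "-c <int> coordinate frame (default " << (int)coordinate_frame << ")\n"

<< "-m Treat all unseen points as maximum range readings\n"

<< "-s <float> support size for the interest points (diameter of the used sphere - "

<< "default " << support_size << ")\n"

<< "-h this help\n"

<< "\n\n";

}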

//void

//setViewerPose (pcl::visualization::PCLVisualizer& viewer, const Eigen::Affine3f& viewer_pose)

//{

//Eigen::Vector3f pos_vector = viewer_pose * Eigen::Vector3f (0, 0, 0);

//Eigen::Vector3f look_at_vector = viewer_pose.rotation () * Eigen::Vector3f (0, 0, 1) + pos_vector;

//Eigen::Vector3f up_vector = viewer_pose.rotation () * Eigen::Vector3f (0, -1, 0);

//viewer.setCameraPosition (pos_vector[0], pos_vector[1], pos_vector[2],

//look_at_vector[0], look_at_vector[1], look_at_vector[2],

//up_vector[0], up_vector[1], up_vector[2]);

//}

// --------------

// -----Main-----

// --------------

int

main(int argc, char** argv)

{

// --------------------------------------

// -----Parse Command Line Arguments-----

// --------------------------------------

if (pcl::console::find_argument(argc, argv, "-h") >= 0)

{

printUsage(argv[0]);

return 0;

}

if (pcl::console::find_argument(argc, argv, "-m") >= 0)

{

setUnseenToMaxRange = true;

std::cout << "Setting unseen values in range image to maximum range readings.\n";

}

int tmp_coordinate_frame;

if (pcl::console::parse(argc, argv, "-c", tmp_coordinate_frame) >= 0)

{

coordinate_frame = pcl::RangeImage::CoordinateFrame(tmp_coordinate_frame);

std::cout << "Using coordinate frame " << (int)coordinate_frame << ".\n";

}

if (pcl::console::parse(argc, argv, "-s", support_size) >= 0)

std::cout << "Setting support size to " << support_size << ".\n";

if (pcl::console::parse(argc, argv, "-r", angular_resolution) >= 0)

std::cout << "Setting angular resolution to " << angular_resolution << "deg.\n";

angular_resolution = pcl::deg2rad(angular_resolution);

// ------------------------------------------------------------------

// -----Read pcd file or create example point cloud if not given-----

// ------------------------------------------------------------------

pcl::PointCloud<PointType>::Ptr point_cloud_ptr(new pcl::PointCloud<PointType>);

pcl::PointCloud<PointType>& point_cloud = *point_cloud_ptr;

pcl::PointCloud<pcl::PointWithViewpoint> far_ranges;

Eigen::Affine3f scene_sensor_pose(Eigen::Affine3f::Identity());

// Look for a .pcd file name among the command line arguments

std::vector<int> pcd_filename_indices = pcl::console::parse_file_extension_argument(argc, argv, "pcd");

// If a pcd file was given, load it

if (!pcd_filename_indices.empty())

{

std::string filename = argv[pcd_filename_indices[0]];

if (pcl::io::loadPCDFile(filename, point_cloud) == -1)

{

std::cerr << "Was not able to open file \"" << filename << "\".\n";

printUsage(argv[0]);

return 0;

}

scene_sensor_pose = Eigen::Affine3f(Eigen::Translation3f(point_cloud.sensor_origin_[0],

point_cloud.sensor_origin_[1],

point_cloud.sensor_origin_[2])) *

Eigen::Affine3f(point_cloud.sensor_orientation_);

std::string far_ranges_filename = pcl::getFilenameWithoutExtension(filename) + "_far_ranges.pcd";

if (pcl::io::loadPCDFile(far_ranges_filename.c_str(), far_ranges) == -1)

std::cout << "Far ranges file \"" << far_ranges_filename << "\" does not exist.\n";

}

// Otherwise generate an example point cloud

else

{

setUnseenToMaxRange = true;

std::cout << "\nNo *.pcd file given => Generating example point cloud.\n\n";

for (float x = -0.5f; x <= 0.5f; x += 0.01f)

{

for (float y = -0.5f; y <= 0.5f; y += 0.01f)

{

PointType point; point.x = x; point.y = y; point.z = 2.0f - y;

point_cloud.points.push_back(point);

}

}

point_cloud.width = (int)point_cloud.points.size(); point_cloud.height = 1;

}

// -----------------------------------------------

// -----Create RangeImage from the PointCloud-----

// -----------------------------------------------

// ------------Range image parameters and creation-----------------

float noise_level = 0.0;

float min_range = 0.0f;

int border_size = 1;

pcl::RangeImage::Ptr range_image_ptr(new pcl::RangeImage);

pcl::RangeImage& range_image = *range_image_ptr;

// Generate the range image

range_image.createFromPointCloud(point_cloud, angular_resolution, pcl::deg2rad(360.0f), pcl::deg2rad(180.0f),

scene_sensor_pose, coordinate_frame, noise_level, min_range, border_size);

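range_image.integrateFarRanges(far_ranges); // integrate the given far range measurements into the range image

if (setUnseenToMaxRange)

// Sets all -INFINITY values to INFINITY,

range_image.setUnseenToMaxRange(); // so that every point that could not be observed is treated as a far range reading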

// --------------------------------------------

// -----Open 3D viewer and add point cloud-----

// --------------------------------------------

// Visualize the point cloud

pcl::visualization::PCLVisualizer viewer("3D Viewer");

viewer.setBackgroundColor(1, 1, 1);

pcl::visualization::PointCloudColorHandlerCustom<pcl::PointWithRange> range_image_color_handler(range_image_ptr, 0, 0, 0);

viewer.addPointCloud(range_image_ptr, range_image_color_handler, "range image");

viewer.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE, 1, "range image");

//viewer.addCoordinateSystem (1.0f, "global");

//PointCloudColorHandlerCustom point_cloud_color_handler (point_cloud_ptr, 150, 150, 150);

//viewer.addPointCloud (point_cloud_ptr, point_cloud_color_handler, "original point cloud");

viewer.initCameraParameters();

//setViewerPose (viewer, range_image.getTransformationToWorldSystem ());

// --------------------------

// -----Show range image-----

// --------------------------

// Display the range image

pcl::visualization::RangeImageVisualizer range_image_widget("Range image");

range_image_widget.showRangeImage(range_image);

// --------------------------------

// -----Extract NARF keypoints-----

// --------------------------------

// -------NARF keypoint detector setup----------

// Create the range image border extractor

pcl::RangeImageBorderExtractor range_image_border_extractor;

// Create the NARF keypoint detector; it takes the border extractor as input

pcl::NarfKeypoint narf_keypoint_detector(&range_image_border_extractor);

// Set the input range image for keypoint extraction

narf_keypoint_detector.setRangeImage(&range_image);

// Keypoint parameter: size of the region an interest point covers, in meters (diameter of the sphere used for the search)

narf_keypoint_detector.getParameters().support_size = support_size;

// Whether to also add keypoints on straight edges

//narf_keypoint_detector.getParameters ().add_points_on_straight_edges = true;

//narf_keypoint_detector.getParameters ().distance_for_additional_points = 0.5;

pcl::PointCloud<int> keypoint_indices; // keypoint indices

narf_keypoint_detector.compute(keypoint_indices); // compute the keypoints; the result is stored in keypoint_indices

std::cout << "Found " << keypoint_indices.points.size() << " key points.\n";

// ----------------------------------------------

// -----Show keypoints in range image widget-----

// ----------------------------------------------

//for (std::size_t i=0; i<keypoint_indices.points.size (); ++i)

//range_image_widget.markPoint (keypoint_indices.points[i]%range_image.width,

//keypoint_indices.points[i]/range_image.width);

// -------------------------------------

// -----Show keypoints in 3D viewer-----

// -------------------------------------

// Display the keypoints

pcl::PointCloud<pcl::PointXYZ>::Ptr keypoints_ptr(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ>& keypoints = *keypoints_ptr;

keypoints.points.resize(keypoint_indices.points.size());

for (std::size_t i = 0; i < keypoint_indices.points.size(); ++i)

keypoints.points[i].getVector3fMap() = range_image.points[keypoint_indices.points[i]].getVector3fMap(); // copy the x, y, z members

pcl::visualization::PointCloudColorHandlerCustom<pcl::PointXYZ> keypoints_color_handler(keypoints_ptr, 0, 255, 0);

viewer.addPointCloud<pcl::PointXYZ>(keypoints_ptr, keypoints_color_handler, "keypoints");

viewer.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE, 7, "keypoints");

//--------------------

// -----Main loop-----

//--------------------

while (!viewer.wasStopped())

{

range_image_widget.spinOnce(); // process GUI events

viewer.spinOnce();

pcl_sleep(0.01);

}

}
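The `setUnseenToMaxRange()` call in the listing above matters because border extraction needs to tell "nothing was observed here" apart from "the surface here is beyond sensor range". As described in the code comment, the call turns every -INFINITY range into +INFINITY. The sketch below mimics that on a plain vector of ranges; `markUnseenAsMaxRange` is a hypothetical helper written for illustration, not a PCL function.

```cpp
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical helper: replace every "unseen" marker (-infinity) with a
// "beyond sensor range" marker (+infinity); all other values stay unchanged.
void markUnseenAsMaxRange(std::vector<float>& ranges) {
    for (float& r : ranges) {
        if (std::isinf(r) && r < 0.0f)
            r = std::numeric_limits<float>::infinity();
    }
}
```

In the example program this is enabled either with the `-m` option or automatically when no pcd file is given and the synthetic point cloud is generated.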

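1.4 Summary

- Workflow for creating a range image

//1. Create the object

pcl::RangeImage range_image;

//2. Generate the image (arguments as in the complete code above)

range_image.createFromPointCloud(point_cloud, angular_resolution, pcl::deg2rad(360.0f), pcl::deg2rad(180.0f), scene_sensor_pose, coordinate_frame, noise_level, min_range, border_size);

//3. Other settings

range_image.integrateFarRanges(far_ranges);

range_image.setUnseenToMaxRange();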

- Displaying the range image

//1. Create the range image visualizer

pcl::visualization::RangeImageVisualizer range_image_widget("Range image");

//2. Show the image

range_image_widget.showRangeImage(range_image);

- Workflow for extracting NARF keypoints

//1. Create the range image border extractor

pcl::RangeImageBorderExtractor range_image_border_extractor;

//2. Create the NARF keypoint detector; it takes the border extractor as input

pcl::NarfKeypoint narf_keypoint_detector(&range_image_border_extractor);

//3. Set the input range image for keypoint extraction

narf_keypoint_detector.setRangeImage(&range_image);

//4. Set the relevant parameters

// Size of the region an interest point covers, in meters (diameter of the sphere used for the search)

narf_keypoint_detector.getParameters().support_size = support_size;

// Whether to also add keypoints on straight edges

narf_keypoint_detector.getParameters ().add_points_on_straight_edges = true;

//narf_keypoint_detector.getParameters ().distance_for_additional_points = 0.5;

//5. Compute the keypoints

pcl::PointCloud<int> keypoint_indices; // keypoint indices

narf_keypoint_detector.compute(keypoint_indices); // compute the keypoints; the result is stored in keypoint_indices

//6. Collect the keypoint coordinates for display

pcl::PointCloud<pcl::PointXYZ> keypoints;

keypoints.points.resize(keypoint_indices.points.size());

for (std::size_t i = 0; i < keypoint_indices.points.size(); ++i)

// copy the x, y, z members

keypoints.points[i].getVector3fMap() = range_image.points[keypoint_indices.points[i]].getVector3fMap();
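The keypoint indices are linear indices into the range image, which is why they can index `range_image.points` directly. To mark them in the 2D range image widget instead, each index has to be split into a column and a row, as the commented-out `markPoint` call in the complete code does with `% range_image.width` and `/ range_image.width`. A minimal sketch of that conversion, assuming row-major storage and using the hypothetical names `Pixel` and `indicesToPixels`:

```cpp
#include <cstddef>
#include <vector>

struct Pixel { int x; int y; };  // hypothetical (column, row) pair

// Split row-major linear indices into (column, row) pixel coordinates.
std::vector<Pixel> indicesToPixels(const std::vector<int>& keypoint_indices,
                                   int image_width) {
    std::vector<Pixel> pixels;
    pixels.reserve(keypoint_indices.size());
    for (int index : keypoint_indices)
        pixels.push_back({index % image_width, index / image_width});
    return pixels;
}
```

For an image that is 4 pixels wide, index 5 maps to column 1, row 1, and index 7 maps to column 3, row 1.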

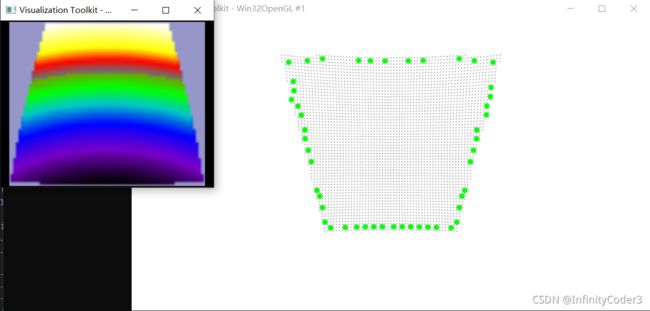

1.5 Results

Result with narf_keypoint_detector.getParameters ().add_points_on_straight_edges = true; enabled