Study notes on torch.Tensor.copy_(), torch.Tensor.detach(), and torch.Tensor.clone()

Reference: copy_(src, non_blocking=False) → Tensor

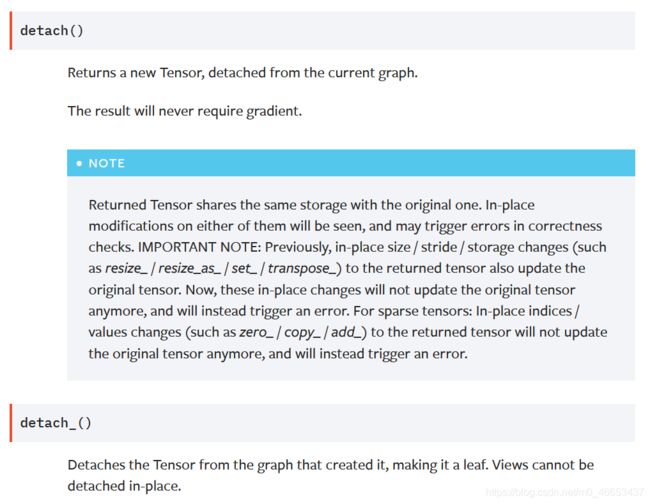

Reference: detach()

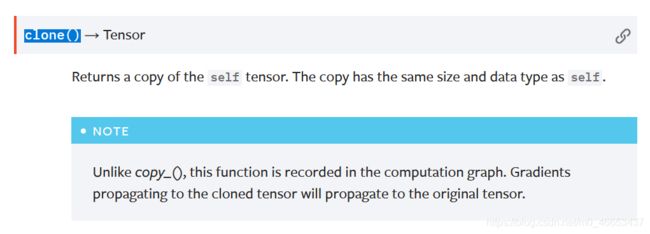

Reference: clone() → Tensor

Microsoft Windows [Version 10.0.18363.1256]

(c) 2019 Microsoft Corporation. All rights reserved.

C:\Users\chenxuqi>conda activate ssd4pytorch1_2_0

(ssd4pytorch1_2_0) C:\Users\chenxuqi>python

Python 3.7.7 (default, May 6 2020, 11:45:54) [MSC v.1916 64 bit (AMD64)] :: Anaconda, Inc. on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x000002421C30D330>

>>>

>>> x = torch.randn(3, requires_grad=True)

>>> y = torch.randn(3, requires_grad=True)

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> y

tensor([-1.7601, -0.1806, 2.0937], requires_grad=True)

>>> y.copy_(x)

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

RuntimeError: a leaf Variable that requires grad has been used in an in-place operation.

>>>

>>> y = torch.randn(3, requires_grad=False)

>>> y

tensor([ 1.0406, -1.7651, 1.1216])

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> y

tensor([ 1.0406, -1.7651, 1.1216])

>>> y.copy_(x)

tensor([ 0.2824, -0.3715, 0.9088], grad_fn=<CopyBackwards>)

>>> y

tensor([ 0.2824, -0.3715, 0.9088], grad_fn=<CopyBackwards>)

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>>

>>>
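The session above shows that `copy_()` into a leaf tensor with `requires_grad=True` is rejected. A minimal sketch of one common way around this follows, assuming the goal is only to overwrite the values without recording the copy in the graph. Wrapping the call in `torch.no_grad()` is my addition, not part of the original experiment, and the tensor names are illustrative.

```python
import torch

src = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
leaf = torch.zeros(3, requires_grad=True)

# An in-place copy into a leaf that requires grad is rejected by autograd.
try:
    leaf.copy_(src)
    raised = False
except RuntimeError:
    raised = True
print(raised)  # True

# Inside no_grad the copy is not recorded, so it is allowed.
with torch.no_grad():
    leaf.copy_(src)

# leaf now holds the values of src but is still an ordinary leaf tensor.
print(leaf.is_leaf, leaf.requires_grad, leaf.grad_fn)
```

Because the copy is not recorded, no gradient flows from `leaf` back to `src` in this variant.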


>>> import torch

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x000001DE002BD330>

>>>

>>> xt = torch.randn(3, requires_grad=True)

>>> xf = torch.randn(3, requires_grad=False)

>>> yt = torch.randn(3, requires_grad=True)

>>> yf = torch.randn(3, requires_grad=False)

>>> xt

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> xf

tensor([-1.7601, -0.1806, 2.0937])

>>> yt

tensor([ 1.0406, -1.7651, 1.1216], requires_grad=True)

>>> yf

tensor([0.8440, 0.1783, 0.6859])

>>>

>>> yt.copy_(xt)

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

RuntimeError: a leaf Variable that requires grad has been used in an in-place operation.

>>>

>>> yf.copy_(xf)

tensor([-1.7601, -0.1806, 2.0937])

>>>

>>> yf

tensor([-1.7601, -0.1806, 2.0937])

>>>

>>>

>>>

>>>

>>>

>>>

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x000001DE002BD330>

>>> xt = torch.randn(3, requires_grad=True)

>>> xf = torch.randn(3, requires_grad=False)

>>> yt = torch.randn(3, requires_grad=True)

>>> yf = torch.randn(3, requires_grad=False)

>>> xt

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> xf

tensor([-1.7601, -0.1806, 2.0937])

>>> yt

tensor([ 1.0406, -1.7651, 1.1216], requires_grad=True)

>>> yf

tensor([0.8440, 0.1783, 0.6859])

>>>

>>> yf.copy_(xt)

tensor([ 0.2824, -0.3715, 0.9088], grad_fn=<CopyBackwards>)

>>>

>>> yt.copy_(xf)

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

RuntimeError: a leaf Variable that requires grad has been used in an in-place operation.

>>>

>>>

>>>
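The four combinations tried above can be collected into one small script. This is only a sketch: the helper name `try_copy` is hypothetical, and the exact error message may differ between PyTorch versions, so the code checks only whether a `RuntimeError` is raised.

```python
import torch

def try_copy(dst_requires_grad, src_requires_grad):
    """Run dst.copy_(src) on fresh tensors and describe what happened."""
    dst = torch.zeros(3, requires_grad=dst_requires_grad)
    src = torch.ones(3, requires_grad=src_requires_grad)
    try:
        dst.copy_(src)
    except RuntimeError:
        return "RuntimeError"
    has_grad_fn = dst.grad_fn is not None
    return f"requires_grad={dst.requires_grad}, has_grad_fn={has_grad_fn}"

# Enumerate every (destination, source) requires_grad combination.
for dst_flag in (True, False):
    for src_flag in (True, False):
        print(f"dst={dst_flag}, src={src_flag}:", try_copy(dst_flag, src_flag))
```

The destination decides whether the call is allowed, and the source decides whether the result is tracked by autograd.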


>>> import torch

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x0000022963CED330>

>>>

>>> xt = torch.randn(3, requires_grad=True)

>>> xf = torch.randn(3, requires_grad=False)

>>> yt = torch.randn(3, requires_grad=True)

>>> yf = torch.randn(3, requires_grad=False)

>>> xt

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> xf

tensor([-1.7601, -0.1806, 2.0937])

>>> yt

tensor([ 1.0406, -1.7651, 1.1216], requires_grad=True)

>>> yf

tensor([0.8440, 0.1783, 0.6859])

>>>

>>>

>>>

>>>

>>> xt

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> xt.requires_grad

True

>>> xt.grad_fn

>>> print(xt.grad_fn)

None

>>>

>>> xf

tensor([-1.7601, -0.1806, 2.0937])

>>> xf.requires_grad

False

>>> print(xf.grad_fn)

None

>>>

>>> xf.copy_(xt)

tensor([ 0.2824, -0.3715, 0.9088], grad_fn=<CopyBackwards>)

>>> xt

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> xt.requires_grad

True

>>> print(xt.grad_fn)

None

>>> xf

tensor([ 0.2824, -0.3715, 0.9088], grad_fn=<CopyBackwards>)

>>> xf.requires_grad

True

>>> print(xf.grad_fn)

<CopyBackwards object at 0x0000022954D3DF88>

>>>

>>>

>>> #############################################

>>> yt

tensor([ 1.0406, -1.7651, 1.1216], requires_grad=True)

>>> yt.requires_grad

True

>>> yt.grad_fn

>>> print(yt.grad_fn)

None

>>> yf

tensor([0.8440, 0.1783, 0.6859])

>>> yf.requires_grad

False

>>> print(yf.grad_fn)

None

>>> yf = 20200910.0 * yt

>>> yf

tensor([ 21021540., -35657388., 22658140.], grad_fn=<MulBackward0>)

>>> yt

tensor([ 1.0406, -1.7651, 1.1216], requires_grad=True)

>>> yt.requires_grad

True

>>> yf.requires_grad

True

>>> print(yt.grad_fn)

None

>>> print(yf.grad_fn)

<MulBackward0 object at 0x0000022954D3DFC8>

>>>

>>>

>>>

>>> xf = torch.randn(3, requires_grad=False)

>>> yf = torch.randn(3, requires_grad=False)

>>> xf

tensor([-1.5942, -0.2006, -0.4050])

>>> yf

tensor([-0.5556, 0.9571, 0.7435])

>>> xf.requires_grad

False

>>> yf.requires_grad

False

>>> print(xf.grad_fn)

None

>>> print(yf.grad_fn)

None

>>>

>>> xf.copy_(yf)

tensor([-0.5556, 0.9571, 0.7435])

>>>

>>> xf

tensor([-0.5556, 0.9571, 0.7435])

>>> yf

tensor([-0.5556, 0.9571, 0.7435])

>>> xf.requires_grad

False

>>> yf.requires_grad

False

>>> print(xf.grad_fn)

None

>>> print(yf.grad_fn)

None

>>>

>>>

>>>

>>> xf = torch.randn(3, requires_grad=False)

>>> xf

tensor([-0.2974, -2.2825, -0.6627])

>>> xf.requires_grad

False

>>> print(xf.grad_fn)

None

>>> xxx = 20200910.0 * xf

>>> xf

tensor([-0.2974, -2.2825, -0.6627])

>>> xxx

tensor([ -6007563., -46108144., -13387551.])

>>> xf.requires_grad

False

>>> xxx.requires_grad

False

>>> print(xf.grad_fn)

None

>>> print(xxx.grad_fn)

None

>>> xf.grad_fn

>>> xxx.grad_fn


>>>
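The session above shows that after `xf.copy_(xt)` the destination has `requires_grad=True` and a `CopyBackwards` node as its `grad_fn`. The sketch below checks what that implies for backpropagation: the gradient should flow through the copy back to the source. The tensor names and the factor `2.0` are illustrative choices.

```python
import torch

src = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
dst = torch.zeros(3)  # requires_grad=False, like xf in the session

dst.copy_(src)

# The destination is now part of the graph and is no longer a leaf.
print(dst.requires_grad, dst.is_leaf)  # True False

# Backpropagate through the copy: d(sum(2 * dst)) / d(src) is 2 everywhere.
(dst * 2.0).sum().backward()
print(src.grad)
```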


>>> import torch

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x0000018DA812D330>

>>>

>>> x = torch.randn(3, requires_grad=True)

>>> y = torch.randn(3, requires_grad=True)

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> y

tensor([-1.7601, -0.1806, 2.0937], requires_grad=True)

>>> y.detach()

tensor([-1.7601, -0.1806, 2.0937])

>>> y

tensor([-1.7601, -0.1806, 2.0937], requires_grad=True)

>>>

>>> y+1

tensor([-0.7601, 0.8194, 3.0937], grad_fn=<AddBackward0>)

>>>

>>> (y+1).detach()

tensor([-0.7601, 0.8194, 3.0937])

>>>

>>>

>>> y = torch.randn(3, requires_grad=False)

>>> y

tensor([ 1.0406, -1.7651, 1.1216])

>>> y+1

tensor([ 2.0406, -0.7651, 2.1216])

>>> y.detach()

tensor([ 1.0406, -1.7651, 1.1216])

>>> (y+1).detach()

tensor([ 2.0406, -0.7651, 2.1216])

>>>

>>>

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> y

tensor([ 1.0406, -1.7651, 1.1216])

>>> x.detach_()

tensor([ 0.2824, -0.3715, 0.9088])

>>> y.detach_()

tensor([ 1.0406, -1.7651, 1.1216])

>>>

>>>

>>>
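The outputs above show that `detach()` returns a tensor without `requires_grad` or `grad_fn`. A small sketch of the practical effect: a detached tensor is treated as a constant during backpropagation. The example values are my own and are not from the session.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

a = x * 2.0      # tracked by autograd
b = a.detach()   # same values, but cut off from the graph
print(b.requires_grad, b.grad_fn)  # False None

# b acts as a constant, so d(sum(a * b)) / dx = b * 2 = 4 * x.
# Without detach() the result would be 8 * x.
loss = (a * b).sum()
loss.backward()
print(x.grad)  # values 4, 8, 12
```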

The new tensor returned by detach() shares memory with the original tensor:


>>> import torch

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x0000023E000AD330>

>>>

>>> x = torch.randn(3, requires_grad=True)

>>> y = x.detach()

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> y

tensor([ 0.2824, -0.3715, 0.9088])

>>> y.zero_()

tensor([0., 0., 0.])

>>> y

tensor([0., 0., 0.])

>>> x

tensor([0., 0., 0.], requires_grad=True)

>>>

>>>

>>>

>>>
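The shared storage can also be checked directly with `data_ptr()`. The sketch below additionally contrasts it with `detach().clone()`, which I am assuming is the usual way to get an independent, untracked copy. That contrast is my addition rather than part of the original session.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

view = x.detach()          # shares storage with x
copy = x.detach().clone()  # independent storage

print(view.data_ptr() == x.data_ptr())  # True
print(copy.data_ptr() == x.data_ptr())  # False

# Zeroing the detached tensor also zeroes x; the cloned copy is unaffected.
view.zero_()
print(x)     # tensor([0., 0., 0.], requires_grad=True)
print(copy)  # tensor([1., 2., 3.])
```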

Code experiment 2:


>>> import torch

>>> torch.manual_seed(seed=20200910)

<torch._C.Generator object at 0x0000016F955DD330>

>>>

>>>

>>> x = torch.randn(3, requires_grad=True)

>>> y = torch.randn(3, requires_grad=False)

>>>

>>> x

tensor([ 0.2824, -0.3715, 0.9088], requires_grad=True)

>>> y

tensor([-1.7601, -0.1806, 2.0937])

>>>

>>> x.clone()

tensor([ 0.2824, -0.3715, 0.9088], grad_fn=<CloneBackward>)

>>> y.clone()

tensor([-1.7601, -0.1806, 2.0937])

>>>

>>> (x+1).clone()

tensor([1.2824, 0.6285, 1.9088], grad_fn=<CloneBackward>)

>>>

>>> (y+1).clone()

tensor([-0.7601, 0.8194, 3.0937])

>>>

>>>

>>>
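In the outputs above, `clone()` of a tensor that requires grad carries a `CloneBackward` node. The sketch below, using illustrative values, checks the two consequences I would expect: the clone has its own memory, and gradients still flow through it back to the original. Note that newer PyTorch versions may print the node name slightly differently.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
c = x.clone()

# The clone is tracked by autograd but is not a leaf.
print(c.requires_grad, c.is_leaf)  # True False

# Unlike detach(), clone() allocates new storage.
print(c.data_ptr() == x.data_ptr())  # False

# Gradients pass through the clone: d(sum(3 * c)) / dx is 3 everywhere.
(c * 3.0).sum().backward()
print(x.grad)
```

To summarize the contrast with the earlier experiment: `detach()` shares memory and cuts the graph, while `clone()` copies memory and keeps the graph.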