StrongSORT:Make DeepSORT Great Again

0. 摘要

Abstract. Existing Multi-Object Tracking (MOT) methods can be roughly classified as tracking-by-detection and joint-detection-association paradigms. Although the latter has elicited more attention and demonstrates comparable performance relative to the former, we claim that the tracking-by-detection paradigm is still the optimal solution in terms of tracking accuracy. In this paper, we revisit the classic tracker DeepSORT and upgrade it from various aspects, i.e., detection, embedding and association.The resulting tracker, called StrongSORT, sets new HOTA and IDF1records on MOT17 and MOT20. We also present two lightweight andplug-and-play algorithms to further refine the tracking results. Firstly,an appearance-free link model (AFLink) is proposed to associate shorttracklets into complete trajectories. To the best of our knowledge, thisis the first global link model without appearance information. Secondly,we propose Gaussian-smoothed interpolation (GSI) to compensate formissing detections. Instead of ignoring motion information like linear interpolation, GSI is based on the Gaussian process regression algorithmand can achieve more accurate localizations. Moreover, AFLink and GSIcan be plugged into various trackers with a negligible extra compu-tational cost (591.9 and 140.9 Hz, respectively, on MOT17). By integrating StrongSORT with the two algorithms, the final tracker Strong-SORT++ ranks first on MOT17 and MOT20 in terms of HOTA andIDF1 metrics and surpasses the second-place one by 1.3 - 2.2. Code willbe released soon

现有的多目标跟踪(MOT)方法大致可分为基于检测的跟踪(tracking-by-detection)和联合检测关联(joint-detection-association)两种,虽然后者已经引起了更多的关注,并且表现出与前者相当的性能,但我们认为从跟踪精度来看,检测跟踪(tracking-by-detection)仍然是目前最优的解决方案。本文对经典的跟踪器DeepSORT进行了回顾,并从检测、嵌入和关联等方面对其进行了升级,提出了一种称之为StrongSORT的跟踪器,在MOT17和MOT20上设置了新的HOTA和IDF1记录。

我们还提出了两种轻量级、即插即用的算法来进一步完善跟踪结果。首先,提出了一种无外观链接模型appearence-free link(AFLink)模型,将短轨迹关联成完整的轨迹。据我们所知,这是第一个没有外观信息的全局链接模型。其次,我们提出了高斯平滑插值Gaussian-smoothed interpolation(GSI)方法来补偿漏检。GSI不像线性插值那样忽略运动信息,而是基于高斯过程回归算法,可以实现更精确的定位。此外,AFLink和GSI可以插入到各种跟踪器中,相应产生的额外计算成本可以忽略不计(在MOT17上分别为591.9 Hz和140.9 Hz)。通过将StrongSORT与这两种算法相结合,最终的跟踪器Strong Sort++在HOTA和IDF1度量方面在MOT17和MOT20上排名第一,并以1.3-2.2的优势超过第二名。

1. 引言

Multi-Object Tracking (MOT) plays an essential role in video understanding. Itaims to detect and track all specific classes of objects frame by frame. In thepast few years, the tracking-by-detection paradigm [3, 4, 36, 62, 69] dominatedthe MOT task. It performs detection per frame and formulates the MOT prob-lem as a data association task. Benefiting from high-performing object detectionmodels, tracking-by-detection methods have gained favor due to their excellent performance.

多目标跟踪(MOT)在视频理解中起着至关重要的作用。它旨在用于逐帧检测和跟踪所有特定类别的对象。在过去的几年里,tracking-by-detection[3,4,36,62,69]在MOT任务中占据主导地位。它按帧执行检测,并将MOT问题形式化为数据关联任务。得益于高性能的目标检测模型,基于检测的跟踪方法以其优良的性能而广受青睐。

Fig. 1. IDF1-MOTA-HOTA comparisons of state-of-the-art trackers with our proposed StrongSORT and StrongSORT++ on MOT17 and MOT20 test sets. The horizontal axis is MOTA, the vertival axis is IDF1, and the radius of the circle is HOTA. ”*”represents our reproduced version. Our StrongSORT++ achieves the best IDF1 and HOTA and comparable MOTA performance.

图1.IDF1-MOTA-HOTA在MOT17和MOT20测试集上与我们提出的StrongSORT和StrongSORT++进行的最先进跟踪器的比较。水平轴是MOTA,垂直轴是IDF1,圆的半径是HOTA。“*”代表我们的复制版本。我们的StrongSORT++实现了最好的IDF1和HOTA以及可与之媲美的MOTA性能。

However, these methods generally require multiple computationally expensive components, such as a detector and an embedding model. To solve this problem, several recent methods [1,60,74] integrate the detector and embedding model into a unified framework. Moreover, joint detection and embedding training appears to produce better results compared with the seperate one [47]. Thus, these methods (joint trackers) achieve comparable or even better tracking accuracy compared with tracking-by-detection ones (seperate trackers).

然而,这些方法通常需要多个计算代价很大的组件,例如检测器和嵌入模型。为了解决这一问题,最近的几种方法[1,60,74]将探测器和embedding模型集成到一个统一的框架中。此外,联合检测和嵌入训练似乎比单独的检测和嵌入训练产生了更好的效果。因此,联合检测器与单独检测器相比,实现了相当的跟踪精度,甚至更高的跟踪精度。

The success of joint trackers has motivated researchers to design unified track-ing frameworks for various components, e.g., detection, motion, embedding, and association models [30, 32, 38, 57, 59, 65, 68]. However, we argue that two problems exist in these joint frameworks: (1) the competition between different components and (2) limited data for training these components jointly. Although several strategies have been proposed to solve them, these problems still lower the upper bound of tracking accuracy. On the contrary, the potential of seperate trackers seems to be underestimated.

联合跟踪器的成功促使研究人员为各种组件设计统一的跟踪框架,例如检测、运动、嵌入和关联模型[30,32,38,57,59,65,68]。然而,我们认为这些联合框架中存在两个问题:(1)不同组件之间的竞争和(2)用于联合训练这些组件的数据有限。虽然已经提出了几种策略来解决这些问题,但这些问题仍然降低了跟踪精度的上限。相反,seperate trackers的潜力似乎被低估了。

In this paper, we revisit the classic seperate tracker DeepSORT [62], which is among the earliest methods that apply the deep learning model to the MOT task. It’s claimed that DeepSORT underperforms compared with state-of-the-artmethods because of its outdated techniques, rather than its tracking paradigm. We show that by simply equipping DeepSORT with advanced components invarious aspects, resulting in the proposed StrongSORT, it can achieve new SOTA on popular benchmarks MOT17 [35] and MOT20 [11].

在本文中,我们回顾了经典的独立跟踪器DeepSORT[62],它是最早将深度学习模型应用于MOT任务的方法之一。DeepSORT的性能不如最先进的方法是因为它的技术落后,而不是它的跟踪模式本身,通过简单地为DeepSORT的组件各个方面配置先进的,我们证明了借此产生了所提出的StrongSORT,它可以在流行的基准MOT17[35]和MOT20[11]上实现新的SOTA。

Two lightweight, plug-and-play, model-independent, appearance-free algorithms are also proposed to refine the tracking results. Firstly, to better exploit the global information, several methods propose to associate short tracklets into trajectories by using a global link model [12,39,55,56,67]. They usually generate accurate but incomplete tracklets and associate them with global information in an offline manner. Although these methods improve tracking performance sig-nificantly, they all rely on computation-intensive models, especially appearance embeddings. By contrast, we propose an appearance-free link model (AFLink)that only utilizes spatio-temporal information to predict whether the two inputtracklets belong to the same ID.

本文提出了两种轻量级、即插即用、模型无关、外观无关的算法来改进跟踪结果。首先,为了更好地利用全局信息,几种方法提出通过使用全局链接模型将短轨迹关联到轨迹[12,39,55,56,67]。它们通常生成准确但不完整的轨迹,并用离线的方式将它们与全局信息关联。虽然这些方法显著提高了跟踪性能,但它们都依赖于计算密集型模型,尤其是appearance embeddings。相反,我们提出了一种只利用时空信息来预测两个输入轨迹是否属于同一ID的AFLink模型。

Secondly, linear interpolation is widely used to compensate for missing detections [12, 21, 37, 40, 41, 73]. However, it ignores motion information, which limits the accuracy of the interpolated positions. To solve this problem, we propose the Gaussian-smoothed interpolation algorithm (GSI), which enhances the interpolation by using the Gaussian process regression algorithm [61].

其次,线性插值被广泛用于补偿缺失检测[12,21,37,40,41,73]。但是,该算法忽略了运动信息,限制了插值位置的精度。为了解决这个问题,我们提出了高斯平滑插值算法(GSI),它通过使用高斯过程回归算法来增强插值[61]。

Extensive experiments prove that the two proposed algorithms achieve notable improvements on StrongSORT and other state-of-the-art trackers, e.g.,CenterTrack [77], TransTrack [50] and FairMOT [74]. Particularly, by applyingAFLink and GSI to StrongSORT, we obtain a stronger tracker called Strong-SORT++. It achieves 64.4 HOTA, 79.5 IDF1 and 79.6 MOTA (7.1 Hz) on theMOT17 test set and 62.6 HOTA, 77.0 IDF1 and 73.8 MOTA (1.4 Hz) on theMOT20 test set. Figure 1 compares our StrongSORT and StrongSORT++ withstate-of-the-art trackers on MOT17 and MOT20 test sets. Our method achievesthe best IDF1 and HOTA and a comparable MOTA performance. Furthermore,AFLink and GSI respectively run at 591.9 and 140.9 Hz on MOT17, 224.0 and17.6 Hz on MOT20, resulting in a negligible computational cost.

大量的实验结果表明,这两种算法在StrongSORT和其他最先进的跟踪器(如CenterTrack[77]、Transtrack[50]和FairMOT[74])上取得了显著改进。特别地,通过将AFLink和GSI应用于StrongSORT,我们得到了一个更强的跟踪器,称为Strong-Sort++。在MOT17测试集上达到64.4HOTA、79.5IDF1和79.6MOTA(7.1 Hz),在MOT20测试集上达到62.6HOTA、77.0IDF1和73.8MOTA(1.4 Hz)。图1将我们的StrongSORT和StrongSORT++与MOT17和MOT20测试集上最先进的跟踪器进行了比较。我们的方法获得了最好的IDF1和HOTA,并且获得了与MOTA相当的性能。此外,AFLink和GSI分别在MOT17上运行591.9 Hz和140.9 Hz,在MOT20上运行224.0 Hz和17.6 Hz,导致计算量可以忽略不计。

The contributions of our work are summarized as follows:

We revisit the classic seperate tracker DeepSORT and improve it from various aspects, resulting in StrongSORT, which sets new HOTA and IDF1 records on MOT17 and MOT20 datasets.

We propose two lightweight and appearance-free algorithms, AFLink andGSI, which can be plugged into various trackers to improve their performanceby a large margin.

By integrating StrongSORT with AFLink and GSI, our StrongSORT++ ranks first on MOT17 and MOT20 in terms of widely used HOTA and IDF1metrics and surpasses the second-place one [73] by 1.3 - 2.2.

本文的主要工作或贡献如下:

对经典的独立跟踪器DeepSORT进行了改进,提出了在MOT17和MOT20数据集上达到新的HOTA和IDF1记录的StrongSORT算法;

提出了AFLink和GSI两种轻量级、appearance-free的跟踪算法,可以嵌入到各种跟踪器中,大大提高了跟踪性能;

通过将StrongSORT与AFLink和GSI集成,在广泛使用的HOTA和IDF1指标方面,我们的StrongSORT++在MOT17和MOT20中排名第一,并以1.3-2.2的优势超过第二名[73]。

2.相关工作

2.1 独立联合检测器

MOT methods can be classified as seperate and joint trackers. Seperate trackers[3,4,7,8,15,36,62,69] follow the tracking-by-detection paradigm and localize targets first and then associate them with information on appearance, motion, etc. Benefiting from the rapid development of object detection [17, 42, 43, 52, 53, 78],seperate trackers have dominated the MOT task for years. Recently, severaljoint trackers [30, 32, 38, 57, 59, 65, 68] have been proposed to train detection andsome other components jointly, e.g., motion, embedding and association models.The main benefit of these trackers is their low computational cost and comparable performance. However, we claim that joint trackers face two major problems: competition between different components and limited data for training the components jointly. The two problems limit the upper bound of tracking accuracy.Therefore, we argue that the tracking-by-detection paradigm is still the optimal solution for tracking performance.

MOT方法可分为单独跟踪器和联合跟踪器。独立跟踪器[3,4,7,8,15,36,62,69]遵循先检测后跟踪的范式,首先定位目标,然后将它们与外观、运动等信息相关联,得益于目标检测的快速发展[17,42,43,52,53,78],独立跟踪器多年来一直主导着MOT任务。最近,人们提出了几种联合跟踪器[30,32,38,57,59,65,68]来联合训练检测和其他一些组件,如运动、嵌入和关联模型,这些跟踪器的主要优点是计算成本低,性能相当。然而,我们声明联合跟踪器面临两大问题:不同组件之间的竞争,有限的数据用于训练联合组件。这两个问题限制了跟踪精度的上限,因此,我们认为tracking-by-detection仍然是跟踪性能的最佳解决方案。

Meanwhile, several recent studies [48, 49, 73] have abandoned appearance information and relied only on high-performance detectors and motion information, which achieve high running speed and state-of-the-art performance on MOTChallenge benchmarks [11,35]. However, we argue that it’s partly due to the general simplicity of motion patterns in these datasets. Abandoning appearance features would lead to poor robustness in more complex scenes. In this paper, we adopt the DeepSORT-like [62] paradigm and equip it with advanced techniques from various aspects to confirm the effectiveness of this classic framework.

与此同时,最近的一些研究[48,49,73]已经放弃了外观信息,而只依赖于高性能的检测器和运动信息,它们在MOT挑战基准上获得了高运行速度和最先进的性能[11,35]。然而,我们认为这在一定程度上是由于这些数据集中运动模式的普遍简单性。在更复杂的场景中,放弃外观特征会导致较差的鲁棒性。本文采用类DeepSORT[62]范式,并从各个方面为其配备了先进的技术,以证实这一经典框架的有效性。

2.2 MOT 中的全局link

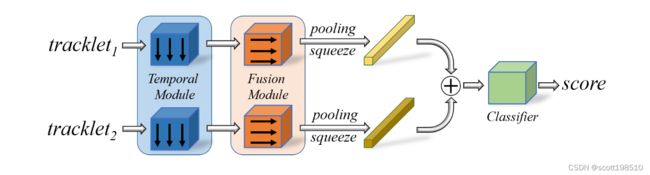

To exploit rich global information, several methods refine the tracking results with a global link model [12, 39, 55, 56, 67]. They tend to generate accurate but incomplete tracklets by using spatio-temporal and/or appearance information first. Then, these tracklets are linked by exploring global information in an offline manner. TNT [56] designs a multi-scale TrackletNet to measure the connectivity between two tracklets. It encodes motion and appearance informationin a unified network by using multi-scale convolution kernels. TPM [39] presents a tracklet-plane matching process to push easily confusable tracklets into different tracklet-planes, which helps reduce the confusion in the tracklet matching step. ReMOT [67] is improved from ReMOTS [66]. Given any tracking results, ReMOT splits imperfect trajectories into tracklets and then merges them with appearance features. GIAOTracker [12] proposes a complex global link algorithm that encodes tracklet appearance features by using an improved ResNet50-TP model [16] and associates tracklets together with spatial and temporal costs. Although these methods yield notable improvements, they all rely on appearance features, which bring high computational cost. Differently, we propose the AFLink model that only exploits motion information to predict the link confidence between two tracklets. By designing an appropriate model framework and training process, AFLink benefits various state-of-the-art trackers with a negligible extra cost. To the best of our knowledge, this is the first appearance-freeand lightweight global link model for the MOT task.

为了利用丰富的全局信息,几种方法使用全局链接模型[12,39,55,56,67]来改进跟踪结果。他们倾向于通过首先使用时空和/或外观信息来生成准确但不完整的轨迹。然后,通过以一种灵活的方式探索全局信息,将这些轨迹链接起来。TNT[56]设计了一个多尺度TrackletNet来测量两个轨道之间的连通性。它利用多尺度卷积核在统一的网络中对运动和外观信息进行编码。TPM[39]提出了一种轨迹-平面匹配过程,将容易混淆的轨迹推送到不同的轨迹-平面中,这有助于减少轨迹匹配步骤中的混淆。ReMOT[67]是从ReMOTS[66]改进的。给定任何跟踪结果,ReMOT都会将不完美的轨迹拆分成tracklet,然后将它们与外观特征合并。GIAOTracker[12]提出了一种复杂的全局链接算法,该算法使用改进的ResNet50-TP模型[16]对轨迹块外观特征进行编码,并将轨迹块与空间和时间代价相关联,虽然这些方法都取得了显著的改进,但都依赖于外观特征,带来了较高的计算代价。不同的是,我们提出了AFLink模型,该模型只利用运动信息来预测两个轨迹之间的链接概率。通过设计合适的模型框架和训练过程,AFLink 以微不足道的额外成本使各种最先进的跟踪器受益。据我们所知,这是MOT任务的第一个外观自由和轻量级的全局链接模型。

2.3 MOT中的插值

Linear interpolation is widely used to fill the gaps of recovered trajectories for missing detections [12, 21, 37, 40, 41, 73]. Despite its simplicity and effectiveness,linear interpolation ignores motion information, which limits the accuracy of the restored bounding boxes. To solve this problem, several strategies have been proposed to utilize spatio-temporal information effectively. V-IOUTracker [5]extends IOUTracker [4] by falling back to single-object tracking [20, 25] while missing detection occurs. MAT [19] smooths the linearly interpolated trajectories nonlinearly by adopting a cyclic pseudo-observation trajectory filling strategy. An extra camera motion compensation (CMC) model [14] and Kalman filter [26]are needed to predict missing positions. MAATrack [49] simplifies it by applying only the CMC model. All these methods apply extra models, i.e., single-objecttracker, CMC, Kalman filter, in exchange for performance gains. Instead, we propose to model nonlinear motion on the basis of the Gaussian process regression(GPR) algorithm [61]. Without additional time-consuming components, our pro-posed GSI algorithm achieves a good trade-off between accuracy and efficiency.

线性插值被广泛用于填补形成检测的恢复轨迹的空白[12,21,37,40,41,73]。尽管线性插值简单有效,但它忽略了运动信息,这限制了存储的边界框的精度。为了解决这一问题,人们提出了几种有效利用时空信息的策略。V-IOUTracker 扩展了 IOUTracker,在发生漏检时回退到单目标跟踪。MAT [19]采用循环伪观测轨迹填充策略对线性插值轨迹进行非线性平滑。需要额外的相机运动补偿模型[14]和卡尔曼过滤[26]来预测丢失位置。MAATrack[49]通过只应用CMC模型简化了它。所有这些方法都使用额外的模型,即单对象跟踪器、CMC法、卡尔曼过滤法,以换取性能的提高。相反,我们建议在高斯过程回归(GPR)算法的基础上对非线性运动进行建模[61]。在不增加额外耗时组件的情况下,我们提出的GSI算法在精度和效率之间取得了很好的折衷。

Fig. 2. Framework and performance comparison between DeepSORT and Strong-SORT. Performance is evaluated on the MOT17 validation set based on detectionspredicted by YOLOX [17].

图2.DeepSORT和Strong-Sort的结构和性能比较。基于YOLOX[17]预测的检测,在MOT17验证集上评估性能。

The most similar work with our GSI is [79], which uses the GPR algorithm to smooth the uninterpolated tracklets for accurate velocity predictions. How-ever, it works for the event detection task in surveillance videos. Differently, westudy on the MOT task and adopt GPR to refine the interpolated localizations.Moreover, we present an adaptive smoothness factor, instead of presetting ahyperparameter like [79].

与我们的 GSI 最相似的工作是 [79],它使用 GPR 算法来平滑未插值的轨迹,以进行准确的速度预测。但是,它适用于监控视频中的事件检测任务。不同的是,我们对 MOT 任务进行了研究,并采用 GPR 来改进插值定位。此外,我们提出了一个自适应平滑因子,而不是像 [79] 那样预设超参数。

3 StrongSORT

In this section, we present various approaches to improve the classic tracker DeepSORT [62]. Specifically, we review DeepSORT in Section 3.1 and introduce StrongSORT in Section 3.2. Notably, we do not claim any algorithmic novelty in this section. Instead, our contributions here lie in giving a clear understanding of DeepSORT and equipping it with various advanced techniques to prove the effectiveness of its paradigm.

在本节中,我们将介绍改进经典trackerDeepSORT[62]的各种方法。具体地说,我们在3.1节中回顾了DeepSORT,并在3.2节中介绍了StrongSORT。值得注意的是,我们在这一节中没有声称有任何算法新颖性。相反,我们在这里的贡献在于对DeepSORT有了一个清晰的理解,并为其配备了各种先进的技术来证明其范式的有效性。

3.1 DeepSORT回顾

我们简要地将DeepSORT概括为一个由两个分支组成的框架,即外观分支和运动分支,如图2的上半部分所示

在外观分支中,给定每一帧中的检测,应用在行人重识别数据集MARS[75]上预训练的深度外观描述符(一种简单的CNN)来提取其外观特征,并利用feature bank机制来存储每条轨迹的最后100帧的特征。随着新检测的到来,第i个轨道小程序的feature bank Ri和第j个检测的特征fj之间的最小余弦距离被计算为

在关联过程中,将距离作为匹配代价。

在运动分支中,卡尔曼过滤算法[26]负责预测当前帧中轨迹的位置。然后,利用马氏距离来度量轨迹和目标之间的时空差异性。DeepSORT以此运动距离为阀来过滤排除不可能的关联。

然后提出匹配级联算法将关联任务作为一系列子问题来求解,而不是全局分配问题。其核心思想是赋予更频繁出现的对象更高的匹配优先级,每个关联子问题都使用匈牙利算法[29]来求解