Face alignment

Face alignment: implementation and analysis of results

1. Introduction to Face Alignment

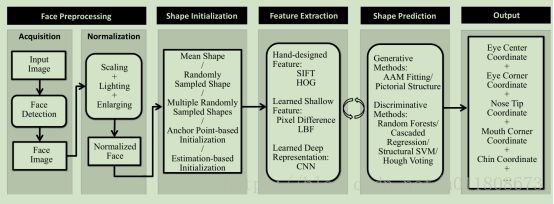

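Traditional methods achieve decent results in face alignment, but they do not work well for large poses or extreme expressions. Face alignment can be viewed as searching a face image for a set of predefined points (also called the face shape). The search usually starts from a rough initial shape and refines the estimate iteratively. The general framework is as follows: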

Figure 1.1

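Facial landmark detection has two parts: the method for extracting local features at each landmark, and the regression algorithm. The local features at the landmarks can also be seen as a feature representation of the face. Current deep-learning methods can be understood as using a neural network to obtain this representation and then applying linear regression to obtain the point coordinates.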
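The iterative framework described above starts from an initial shape. At each stage it extracts features at the current estimate and maps them to a shape update with a regressor. It can be sketched in C++ as follows.

This is a minimal illustration under stated assumptions. `FeatureFn` and `LinearStage` are hypothetical names, and the feature extractor is a placeholder. Each stage uses a plain linear regressor (W·φ + b), matching the "features, then linear regression" view above. It is not the implementation of any specific paper.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// A shape is a flat vector [x0, y0, x1, y1, ...].
using Shape = std::vector<float>;

// Hypothetical feature extractor: maps the current shape estimate
// (and, in a real system, the image) to a feature vector phi.
using FeatureFn = std::function<std::vector<float>(const Shape&)>;

// One cascade stage: delta = W * phi + b, with W stored row-major
// (shape.size() rows, phi.size() columns).
struct LinearStage {
    std::vector<float> W;
    std::vector<float> b;
};

// Runs the cascade: S_t = S_{t-1} + W_t * phi(S_{t-1}) + b_t.
Shape runCascade(Shape shape, const std::vector<LinearStage>& stages,
                 const FeatureFn& features) {
    for (const LinearStage& stage : stages) {
        const std::vector<float> phi = features(shape);
        Shape delta(stage.b);  // start from the bias term
        for (std::size_t r = 0; r < delta.size(); ++r) {
            for (std::size_t c = 0; c < phi.size(); ++c) {
                delta[r] += stage.W[r * phi.size() + c] * phi[c];
            }
        }
        for (std::size_t k = 0; k < shape.size(); ++k) {
            shape[k] += delta[k];  // refine the estimate
        }
    }
    return shape;
}
```

Each stage only sees features computed at the previous estimate, which is what makes the estimate move from coarse to fine.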

2. Related Deep Learning Papers

2.1 Deep Convolutional Network Cascade for Facial Point Detection

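At CVPR 2013, Prof. Xiaoou Tang's group at the Chinese University of Hong Kong proposed DCNN, a three-level cascade of convolutional neural networks for face alignment. The method also fits the general cascaded shape regression framework. Unlike CPR, RCPR, SDM and LBF, however, DCNN implements the cascade with a deep model, a convolutional neural network.

The first level, f1, takes three regions of the face image as input: the whole face, the eye-and-nose region, and the nose-and-mouth region. It trains one CNN per region to predict the landmark positions. Each network has 4 convolutional layers, 3 pooling layers and 2 fully connected layers. The three predictions are fused to obtain a more stable localization.

The later levels, f2 and f3, extract features around each landmark and train a separate CNN per landmark (2 convolutional layers, 2 pooling layers and 1 fully connected layer) to refine its position. The method achieved the best localization results of its time on the LFPW dataset.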

Figure 2.1

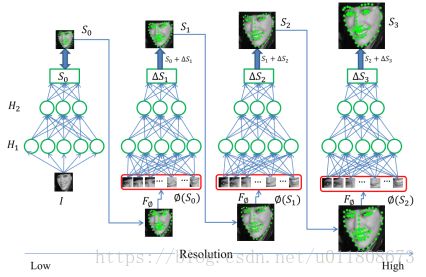

2.2 Coarse-to-Fine Auto-Encoder Networks (CFAN) for Real-Time Face Alignment

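CFAN uses coarse-to-fine auto-encoder networks to model the complex nonlinear mapping from face appearance to face shape. It cascades several stacked auto-encoder networks, each of which models part of this nonlinear mapping.

Given a low-resolution face image I, the first network f1 quickly estimates a rough face shape. Because it works on global features, it is called the global stacked auto-encoder. f1 has three hidden layers with 1600, 900 and 400 units.

The image resolution is then increased. Joint local features are extracted around the initial shape θ1 produced by f1 and fed to the next network, f2, which refines the positions of all landmarks at once. This is called a local stacked auto-encoder. The method cascades three local stacked auto-encoders {f2, f3, f4} until convergence on the training set. Each has three hidden layers with 1296, 784 and 400 units.

Thanks to the strong nonlinear modeling capacity of deep models, the method outperforms DRMF and SDM on XM2VTS, LFPW and HELEN. CFAN also runs in real time, at 23 ms per image on an Intel Core i7 desktop, which is faster than DCNN (120 ms per image).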

Figure 2.2

Addendum:

To my surprise, I just found out that Face++ had the same idea as me, only a few years earlier.

DCNN (Face++)

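In 2013, Face++ improved on the DCNN model and proposed a coarse-to-fine facial landmark detection algorithm [6] that localizes 68 facial landmarks with high precision. The algorithm divides the landmarks into 51 inner points, covering the eyebrows, eyes, nose and mouth, and 17 contour points.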

The algorithm runs two cascaded CNNs in parallel, one for the inner points and one for the contour points. The network structure is shown in the figure.

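The 51 inner points are detected with a four-level cascade:

- Level-1 obtains the bounding boxes of the facial organs.
- Level-2 predicts the 51 landmark positions as a coarse localization that initializes Level-3.
- Level-3 localizes each organ separately, from coarse to fine.
- Level-4 takes the Level-3 output after a rotation correction and outputs the final positions of the 51 points.

The 17 contour points use only a two-level cascade. Level-1 plays the same role as for the inner points and obtains the bounding box of the contour. Level-2 predicts the 17 points directly, without coarse-to-fine refinement, because the contour region is large and adding Level-3 and Level-4 would cost too much time. The final 68 landmarks are obtained by combining the outputs of the two cascaded CNNs.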
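The final combination step can be illustrated with the common 68-point (300-W / iBUG) ordering. In that ordering, indices 0 to 16 are the face contour and 17 to 67 are the brows, nose, eyes and mouth.

The sketch below simply concatenates the two branches' outputs in that order. `Landmark2D` and `mergeInnerAndContour` are hypothetical names. It also assumes that each branch already emits its points in the standard order, which the paper does not state here.

```cpp
#include <stdexcept>
#include <vector>

struct Landmark2D {
    float x, y;
};

// Concatenate the contour branch (17 points) and the inner branch
// (51 points) into one 68-point shape: contour first (indices 0-16),
// then brows, nose, eyes and mouth (indices 17-67).
std::vector<Landmark2D> mergeInnerAndContour(const std::vector<Landmark2D>& contour17,
                                             const std::vector<Landmark2D>& inner51) {
    if (contour17.size() != 17 || inner51.size() != 51) {
        throw std::invalid_argument("expected 17 contour and 51 inner points");
    }
    std::vector<Landmark2D> all;
    all.reserve(68);
    all.insert(all.end(), contour17.begin(), contour17.end());
    all.insert(all.end(), inner51.begin(), inner51.end());
    return all;
}
```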

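The algorithm has three main contributions:

1. It predicts inner points and contour points separately, which effectively avoids loss imbalance.
2. In the inner-point branch, it uses one CNN per facial organ instead of two CNNs per landmark as in DCNN, which reduces computation.
3. Unlike DCNN, it does not feed the face detector's box directly to the network. It adds a bounding-box detection level (Level-1), which greatly improves the accuracy of the coarse localization network.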

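The Face++ version of DCNN was the first to use convolutional neural networks for 68-point facial landmark detection. Earlier landmark detectors depended heavily on the face detector. To address this, the authors designed the Level-1 CNN to refine the face bounding box, giving landmark detection more accurate face position information. The method achieved leading results in that year's 300-W challenge.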

TCNN

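In 2016, Wu et al. studied what kind of features a CNN actually learns for facial landmark localization. They clustered the features of different layers with a GMM (Gaussian mixture model) and found that the network localizes hierarchically, from coarse to fine. The deeper the layer, the better its features reflect the landmark positions. Based on this finding, they proposed TCNN (Tweaked Convolutional Neural Networks) [8]. Its network structure is shown in the figure.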

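The upper part of the figure is the Vanilla CNN. The FC5 features are clustered into K classes, and the training images are partitioned by cluster; each partition trains its own head FC6_k.

At test time, an image first passes through the Vanilla CNN to obtain its FC5 features. These features are compared with the K cluster centers and assigned to the corresponding cluster. The matching FC6 head then produces the final output.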
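The test-time branch selection can be sketched as a nearest-center lookup. This assumes the FC5 features are compared with the K cluster centers by squared Euclidean distance. `nearestCluster` is a hypothetical helper, and the FC6_k heads themselves are not shown.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Returns the index k of the cluster center closest (squared Euclidean
// distance) to the FC5 feature vector; head FC6_k would then be applied.
std::size_t nearestCluster(const std::vector<float>& fc5,
                           const std::vector<std::vector<float>>& centers) {
    std::size_t best = 0;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t k = 0; k < centers.size(); ++k) {
        float d = 0.f;
        for (std::size_t i = 0; i < fc5.size(); ++i) {
            const float diff = fc5[i] - centers[k][i];
            d += diff * diff;
        }
        if (d < bestDist) {
            bestDist = d;
            best = k;
        }
    }
    return best;
}
```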

What did the authors conclude from clustering the intermediate features of the Vanilla CNN, and how did that analysis inspire the design of TCNN?

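The authors clustered the features of each intermediate layer of the Vanilla CNN and measured how much the landmark positions vary at each layer, as shown in the figure.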

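The figure shows that deeper layers produce tighter clusters, so deeper features better reflect the landmark positions. With K = 64, the authors averaged the samples in each cluster and plotted the result.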

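The plot shows that the samples in each cluster share a particular head pose, and some clusters even differ in expression and gender. This suggests that landmark positions are often correlated with facial attributes.

To localize the landmarks more accurately, the authors therefore train a separate regressor on images with similar features. TCNN achieved the best results of its time on the AFLW, AFW and 300-W landmark datasets.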

DAN (Deep Alignment Network)

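In 2017, Kowalski et al. proposed DAN (Deep Alignment Network) [10], a new cascaded deep neural network. Earlier cascaded networks took only part of the image as input; every stage of DAN takes the whole image.

Using the whole image at every stage lets DAN overcome problems caused by head pose and initialization, which leads to better detection. DAN can use the whole image because it adds landmark heatmaps, the main contribution of the paper. The basic framework of DAN is shown in the figure.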

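DAN consists of several stages, each with three inputs and one output. The inputs are the normalized (corrected) image, the landmark heatmap, and a feature image generated by a fully connected layer. The output is the face shape. The CONNECTION LAYER transforms the output of the current stage into the three inputs required by the next stage, as shown in the figure.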

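The first stage has only two inputs: the original image and $S_0$. $S_0$ is the landmark initialization, the mean shape obtained by averaging the landmark positions. The first stage outputs $S_1$.

For the second stage, $S_1$ is first passed through the first stage's CONNECTION LAYERS, which produce three things:

- the transformed image $T_2(I)$,
- the heatmap $H_2$ computed from the transformed shape $T_2(S_1)$,
- the output of the first stage's $fc1$ layer.

These three are exactly the inputs of the second stage. The process repeats until the last stage outputs $S_N$. The paper shows $T_2(I)$, $T_2(S_1)$ and the feature image after the first stage on the IBUG dataset, as in the figure.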

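The figure shows that the "transform" DAN performs straightens the image; this is especially clear in the first row. DAN's robustness to pose changes probably comes from this transform. Which transform DAN uses exactly needs to be checked in the code.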

Next, consider how $S_t$ is obtained from $S_{t-1}$ and the CNN of the current stage:

$$S_t = T_t^{-1}\left(T_t(S_{t-1}) + \Delta S_t\right)$$

where $\Delta S_t$ is the output of the CNN. The CNN structure used in each stage is shown in the figure.

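The input of each stage's CNN has gone through the transform $T_t$, so the predicted offset $\Delta S_t$ is expressed in the new, transformed space. After the offset is added, the inverse transform $T_t^{-1}$ maps the shape back to the original image space. This new space is probably the "straightened" image, which makes it easier for the network to process.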
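The stage update can be sketched as below, assuming that $T_t$ is a similarity transform (rotation, uniform scale and translation). Estimating $T_t$ from $S_{t-1}$ and the mean shape is omitted. `Similarity` and `danUpdate` are hypothetical names.

```cpp
#include <cstddef>
#include <vector>

struct Pt {
    float x, y;
};

// Similarity transform p' = A p + t with A = [[a, -b], [b, a]]
// (rotation plus uniform scale) and translation t = (tx, ty).
struct Similarity {
    float a, b, tx, ty;

    Pt apply(Pt p) const {
        return {a * p.x - b * p.y + tx, b * p.x + a * p.y + ty};
    }
    Pt applyInverse(Pt q) const {
        const float dx = q.x - tx, dy = q.y - ty;
        const float det = a * a + b * b;  // determinant of A
        // A^{-1} = (1 / det) * [[a, b], [-b, a]]
        return {(a * dx + b * dy) / det, (-b * dx + a * dy) / det};
    }
};

// One stage update: S_t = T^{-1}(T(S_{t-1}) + dS), where dS is the
// CNN output expressed in the transformed (normalized) frame.
std::vector<Pt> danUpdate(const std::vector<Pt>& prev, const Similarity& T,
                          const std::vector<Pt>& dS) {
    std::vector<Pt> out(prev.size());
    for (std::size_t i = 0; i < prev.size(); ++i) {
        Pt q = T.apply(prev[i]);
        q.x += dS[i].x;
        q.y += dS[i].y;
        out[i] = T.applyInverse(q);
    }
    return out;
}
```

Because the offset is added in the normalized frame, a CNN output of (2, 4) under a transform that doubles the scale moves the point by only (1, 2) in the original image.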

The landmark heatmap decays from the center. Its value is largest at a landmark and decreases with distance from it:

$$H(x,y) = \frac{1}{1 + \min_{s_i \in T_t(S_{t-1})} \lVert (x,y) - s_i \rVert}$$
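The sketch below is a direct, unoptimized implementation of the heatmap formula above. `landmarkHeatmap` is a hypothetical name. The landmarks are assumed to be already transformed by $T_t$, and the output is a row-major width × height buffer. This brute-force version costs O(width × height × number of landmarks).

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Pt {
    float x, y;
};

// H(x, y) = 1 / (1 + min_i ||(x, y) - s_i||), evaluated at every pixel.
std::vector<float> landmarkHeatmap(const std::vector<Pt>& landmarks, int width, int height) {
    std::vector<float> H(static_cast<std::size_t>(width) * height, 0.f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // distance from pixel (x, y) to the nearest landmark
            float minDist = std::numeric_limits<float>::max();
            for (const Pt& s : landmarks) {
                minDist = std::min(minDist, std::hypot(x - s.x, y - s.y));
            }
            H[static_cast<std::size_t>(y) * width + x] = 1.f / (1.f + minDist);
        }
    }
    return H;
}
```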

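Why generate a feature image from the $fc1$ layer? The paper says: "Such a connection allows any information learned by the preceding stage to be transferred to the consecutive stage." In other words, the previous stage's information is fed explicitly into the next CNN.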

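In summary, DAN is a cascaded landmark detector. By adding landmark heatmaps as an extra input, it can extract features from the whole image and achieve more accurate localization.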

Code:

Theano: https://github.com/MarekKowalski/DeepAlignmentNetwork

TensorFlow: https://github.com/kpzhang93/MTCNN_face_detection_alignment

3. Current Research Pipeline

3.1 Implementation Pipeline

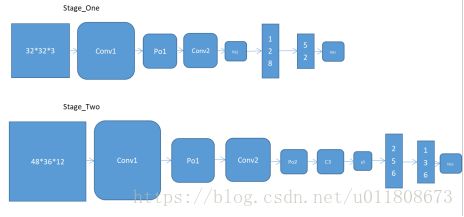

Figure 3.1

3.2 Pipeline Walkthrough

3.2.1 Stage One

a) Resize: resize the face image to 32x32 and scale the landmark shape coordinates by the same ratio.

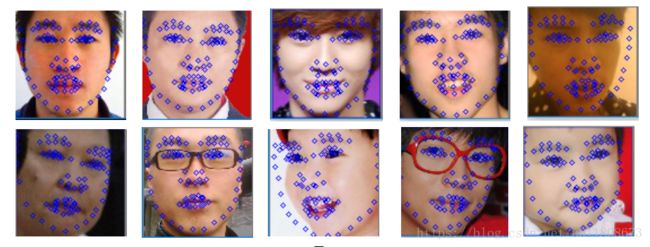

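b) Landmark selection: select 26 facial landmarks: 21 on the face contour, plus 2 for the eyes, 1 for the nose and 2 for the mouth. Flattening the 26 positions gives a 26*2 = 52-dimensional vector. The result is shown in the figure:

Figure 3.2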

c) Landmark shape normalization: before training, treat the top-left corner of the image as (-1,-1) and the bottom-right corner as (1,1), and recompute the landmark coordinates in this frame.

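Note: some landmarks may not appear in the image; their positions are then set to (-1,-1). At test time, predictions that fall in a small region near the top-left corner should therefore be discarded.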
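The sketch below shows the normalization in step c) and its inverse for a square size x size input (32 for Stage One). It also shows a test-time check for the "missing landmark" case. The threshold `eps` in `isMissing` is a hypothetical value, not the article's rule, and the function names are illustrative.

```cpp
struct Pt {
    float x, y;
};

// Map pixel coordinates in a size x size image to [-1, 1]:
// top-left corner -> (-1, -1), bottom-right corner -> (1, 1).
Pt normalizePoint(Pt p, float size) {
    return {2.f * p.x / size - 1.f, 2.f * p.y / size - 1.f};
}

// Inverse mapping back to pixel coordinates.
Pt denormalizePoint(Pt p, float size) {
    return {(p.x + 1.f) * size / 2.f, (p.y + 1.f) * size / 2.f};
}

// Treat a prediction as "landmark not visible" when it lies in a small
// square next to (-1, -1). eps is a hypothetical threshold.
bool isMissing(Pt p, float eps = 0.05f) {
    return p.x < -1.f + eps && p.y < -1.f + eps;
}
```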


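d) Activation function: to fit the landmark positions better, tanh is used as the output activation. The positions lie in [-1,1], which matches the output range of tanh, so training converges more easily. (A sigmoid activation would also work; the normalization would then map the top-left corner to (0,0) and the bottom-right corner to (1,1), with the discard rule adjusted accordingly. Alternatively, ReLU can be used directly, in which case the landmark coordinates do not need to be normalized.)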


e) The tanh function is plotted below:

Figure 3.3

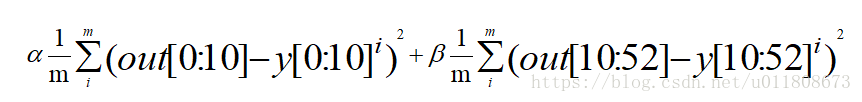

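f) The loss is Caffe's EuclideanLoss, $E = \frac{1}{2N}\sum_{n=1}^{N} \lVert \hat{y}_n - y_n \rVert_2^2$, where $N$ is the batch size, $\hat{y}_n$ the predicted 52-dimensional vector and $y_n$ the label.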

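Tip: to improve accuracy on the contour, give the contour points a larger error weight in the loss. In the weighted loss, indices [0:10] of the 52-dimensional output are the 5 eye, nose and mouth landmarks, and [10:52] are the 21 contour landmarks.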
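A sketch of the weighted loss for one 52-dimensional Stage One sample, following the index split described above. `contourWeight` is a hypothetical value. The 1/2 factor follows Caffe's EuclideanLoss with N = 1. This illustrates the idea and is not the exact weighting used here.

```cpp
#include <cstddef>
#include <vector>

// E = 1/2 * sum_k w_k * (pred_k - label_k)^2, where w_k = 1 for the
// five inner points [0, 10) and w_k = contourWeight for the contour [10, 52).
float weightedEuclideanLoss(const std::vector<float>& pred,
                            const std::vector<float>& label,
                            float contourWeight = 2.f) {
    float sum = 0.f;
    for (std::size_t k = 0; k < pred.size(); ++k) {
        const float w = (k >= 10) ? contourWeight : 1.f;
        const float diff = pred[k] - label[k];
        sum += w * diff * diff;
    }
    return 0.5f * sum;
}
```

In Caffe the same effect could be obtained with a custom loss layer or by scaling the contour coordinates before a standard EuclideanLoss. Either way, the gradient on contour coordinates is multiplied by the weight.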

3.2.2 Stage two

a) Resize: resize the face image to 96x96 and scale the landmark shape coordinates by the same ratio.

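b) Training data preparation: the training data has four parts: left eye, right eye, nose and mouth. The stage-two training data can be prepared in either of the following ways: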

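- Crop regions centered on the 5 stage-one outputs, with crop width and height [48, 36]. After cropping, transform the landmark coordinates as in step c) of Stage One.

- Crop regions from the annotated data according to the labels, and transform the landmark coordinates accordingly. The crop center can be jittered slightly to simulate real stage-one results.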
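The cropping in step b) and the coordinate transform into and out of a patch can be sketched as below. The offsets and clamping mirror the 48x36 crops in a 96x96 image, for example a 24-pixel offset for the eyes. `Box`, `cropAround`, `toPatch` and `toImage` are hypothetical names. After `toPatch`, the coordinates would still be normalized as in Stage One step c).

```cpp
#include <algorithm>

struct Pt {
    float x, y;
};

struct Box {
    int x, y, w, h;
};

// Place a w x h crop whose top-left corner is (offX, offY) pixels up and
// to the left of the stage-one point c, then clamp it inside the image.
Box cropAround(Pt c, int w, int h, int offX, int offY, int imgW, int imgH) {
    int x = static_cast<int>(c.x) - offX;
    int y = static_cast<int>(c.y) - offY;
    x = std::max(0, std::min(x, imgW - w));
    y = std::max(0, std::min(y, imgH - h));
    return {x, y, w, h};
}

// Image -> patch coordinates: subtract the crop origin.
Pt toPatch(Pt p, const Box& b) { return {p.x - b.x, p.y - b.y}; }

// Patch -> image coordinates: add the crop origin back.
Pt toImage(Pt p, const Box& b) { return {p.x + b.x, p.y + b.y}; }
```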

c) Landmark normalization, the activation function and the loss function are the same as in Stage One.

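d) For training, the four patches are first concatenated along the channel axis, giving an input of [48, 36, 3*4]. Regressing each patch with its own CNN instead of concatenating channels should in theory be more accurate, but training four networks is more cumbersome. Concatenation is used instead, so only one CNN has to be trained.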

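3.3 Detection Results

Combined detection results of Stage One and Stage Two.

Figure 3.4

The figure shows that on profile faces the contour points are less accurate than the other landmarks. The main reason is that, to speed up regression, the contour points go through only one regression step based on global image features.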

4. Training

4.1 Training Configuration

base_lr: 0.00001

lr_policy: "inv"

gamma: 0.0001

power: 0.75

regularization_type: "L2"

weight_decay: 0.0005

momentum: 0.9

max_iter: 20000
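With `lr_policy: "inv"`, Caffe decays the learning rate as base_lr × (1 + gamma × iter)^(−power). The small helper below evaluates that schedule; `invLearningRate` is a hypothetical name.

```cpp
#include <cmath>

// Caffe "inv" learning-rate policy:
//   lr(iter) = base_lr * (1 + gamma * iter)^(-power)
double invLearningRate(double baseLr, double gamma, double power, int iter) {
    return baseLr * std::pow(1.0 + gamma * iter, -power);
}
```

With base_lr = 0.00001, gamma = 0.0001 and power = 0.75, the rate falls from 1e-5 at the start to about 4.39e-6 at max_iter = 20000, since (1 + 2)^(-0.75) ≈ 0.439.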

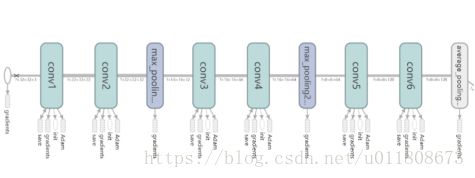

4.2 Model Diagram

Figure 4.1

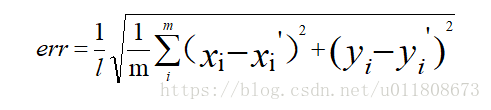

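5. Error Analysis

5.1 Error Computation

The test error is computed with the following formula:

where l is the side length of the face box and m is the number of landmarks.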

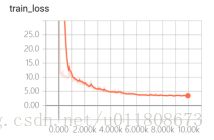

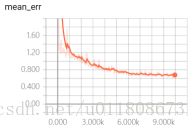
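The sketch below assumes the formula above is the usual normalized mean error: the mean point-to-point Euclidean distance over the m landmarks, divided by the face-box length l. `normalizedMeanError` is a hypothetical name.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt {
    float x, y;
};

// e = (1 / (m * l)) * sum_i ||pred_i - gt_i||_2 for one face,
// where m = gt.size() and l is the face-box side length.
double normalizedMeanError(const std::vector<Pt>& pred, const std::vector<Pt>& gt, double l) {
    double sum = 0.0;
    for (std::size_t i = 0; i < gt.size(); ++i) {
        sum += std::hypot(pred[i].x - gt[i].x, pred[i].y - gt[i].y);
    }
    return sum / (gt.size() * l);
}
```

The `showError` function in the code below computes a different quantity. It normalizes by the distance between the inner eye corners (points 39 and 42) and takes the square root of the summed squared errors, so its numbers are not directly comparable with this metric.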

5.2 Loss Convergence

Figure 5.1

Here train_loss is multiplied by a weight of 5, so its final value ends up larger than test_loss.

Partial code:

```cpp
#include <algorithm>
#include <iostream>
#include <string>
#include <unordered_map>
#include <vector>
#include <opencv2/opencv.hpp>
#include "MCLC.h"
#include "util/Util.h"

using namespace caffe;
using namespace std;
using namespace cv;
using namespace glasssix;

const int TRAIN_COUNT = 1000;
const int TEST_COUNT = 429;

string base = "C:\\WorkSpace\\Visual_studio\\face_align\\ConsoleApplication2\\ConsoleApplication2\\res\\face_ldmk_devset\\";
string image_path = "C:\\WorkSpace\\Visual_studio\\face_align\\ConsoleApplication2\\ConsoleApplication2\\res\\face_ldmk_devset\\result_img_resize_32\\";
string prototext = "C:\\WorkSpace\\Visual_studio\\caffe\\face_alignment\\regression_26\\test_26_flip.prototxt";
string mode_path = "C:\\WorkSpace\\Visual_studio\\caffe\\face_alignment\\regression_26\\save_path\\_iter_40000.caffemodel";
string prototest_two = "C:\\WorkSpace\\Visual_studio\\caffe\\face_alignment\\regression_stage_two\\test.prototxt";
string mode_path_two = "C:\\WorkSpace\\Visual_studio\\caffe\\face_alignment\\regression_stage_two\\save_path\\_iter_50000.caffemodel";
string image_96 = "C:\\WorkSpace\\Visual_studio\\face_align\\ConsoleApplication2\\ConsoleApplication2\\res\\face_ldmk_devset\\result_img_resize_96\\";
string mark_4_img_dir[] = { "left_eye\\", "right_eye\\", "nose\\", "mouth\\" };
string mark_4_img_txt_test[] = { "test_left_eye.txt", "test_right_eye.txt", "nose_test.txt", "mouth_test.txt" };

// Points regressed by stage two in each patch (18 + 18 + 10 + 22 = 68).
const int LEFT_EYE_MARK = 18;
const int RIGHT_EYE_MARK = 18;
const int NOSE_MARK = 10;
const int MOUTH_MARK = 22;
const int IMAGE_SIZE = 32;  // stage-one input size
int mark_num[] = { LEFT_EYE_MARK, RIGHT_EYE_MARK, NOSE_MARK, MOUTH_MARK };

// Places src1 and src2 side by side with a 5-pixel gap.
void mergeImg(Mat& dst, Mat& src1, Mat& src2)
{
    CV_Assert(src1.type() == src2.type());
    int rows = max(src1.rows, src2.rows);  // side by side: height of the taller image
    int cols = src1.cols + 5 + src2.cols;
    dst = Mat::zeros(rows, cols, src1.type());
    src1.copyTo(dst(Rect(0, 0, src1.cols, src1.rows)));
    src2.copyTo(dst(Rect(src1.cols + 5, 0, src2.cols, src2.rows)));
}

// Clamps the top-left corner of a 48x36 crop so that the crop stays inside
// the 96x96 image: x in [0, 96 - 48], y in [0, 96 - 36].
void checkxy(float& x, float& y)
{
    x = min(max(x, 0.0f), 48.0f);
    y = min(max(y, 0.0f), 60.0f);
}
```

```cpp
int main() {
    DataPrepareUtil utd;
    // Stage-one labels (26 points at 32x32) and full 68-point labels at 96x96.
    auto result_data = utd.readStageOneData(base + "result_img_resize_32\\" + "scale_test_point_32_26_all.txt" /*"result_point_resize_96_68_test.txt"*/, 26, TEST_COUNT);
    auto src_point = utd.readStageOneData(image_96 + "scale_test_point_96.txt", 68, TEST_COUNT);
    int length = result_data.size();

    MCLC mclc;
    int net_id = mclc.AddNet(prototext, mode_path, 0);
    int net_id2 = mclc.AddNet(prototest_two, mode_path_two, 0);

    for (int i = 0; i < length; i++)
    {
        // Stage one: 32x32 image -> 5 inner points + 21 contour points.
        vector<Mat> data;
        Mat srcImage = imread(image_path + result_data[i].fileName);
        data.push_back(srcImage);
        double t0 = (double)getTickCount();
        auto result = mclc.Forward(data, net_id);
        double t1 = (double)getTickCount();

        for (auto iter = result.begin(); iter != result.end(); iter++) {
            cout << "key value is " << iter->first << endl;
            DataBlob result_one = iter->second;
            if (result_one.name != "ip2")
                continue;

            double t2 = (double)getTickCount();
            float scale = 96.0f / IMAGE_SIZE;  // 32x32 -> 96x96 coordinates
            LandMark som;
            som.fileName = result_data[i].fileName;
            Mat img = imread(image_96 + result_data[i].fileName);
            Mat vis = img.clone();  // draw on a copy so the crops below stay clean
            auto p = result_one.data;  // interleaved x0, y0, x1, y1, ...
            for (int j = 0; j < 5 + 21; j++)
            {
                float x = p[2 * j];
                float y = p[2 * j + 1];
                if (j < 5) {
                    // eyes, nose, mouth: kept to place the stage-two crops
                    som.points[j].x = x;
                    som.points[j].y = y;
                }
                else {
                    // contour: prediction in blue, label in red
                    circle(vis, Point(x * scale, y * scale), 2, Scalar(255, 0, 0));
                    circle(vis, Point(result_data[i].points[j].x * scale, result_data[i].points[j].y * scale), 2, Scalar(0, 0, 255));
                }
            }

            // Crop four 48x36 patches (left eye, right eye, nose, mouth) around the
            // stage-one points; the mouth center is the midpoint of the two mouth points.
            Mat mats[4];
            vector<Rect> rect4;
            for (int k = 0; k < 4; k++)
            {
                float x, y;
                if (k == 3) {
                    x = (som.points[3].x + som.points[4].x) / 2.0f * scale;
                    y = (som.points[3].y + som.points[4].y) / 2.0f * scale;
                }
                else {
                    x = som.points[k].x * scale;
                    y = som.points[k].y * scale;
                }
                x -= 24;                                  // center the crop horizontally
                y -= (k == 2) ? 12 : (k == 3) ? 20 : 24;  // per-region vertical offset
                checkxy(x, y);
                Rect rect(x, y, 48, 36);
                rect4.push_back(rect);
                mats[k] = img(rect);
            }

            // Stack the four 3-channel patches into one 36x48x12 input.
            Mat all_data;
            merge(mats, 4, all_data);
            cout << " width is: " << all_data.cols << " image shape is :" << all_data.channels() << endl;
            vector<Mat> dd;
            dd.push_back(all_data);
            double t3 = (double)getTickCount();

            // Stage two: regress 68 points inside the four patches.
            double t4 = (double)getTickCount();
            auto result2 = mclc.Forward(dd, net_id2);
            double t5 = (double)getTickCount();

            for (auto iter2 = result2.begin(); iter2 != result2.end(); iter2++)
            {
                cout << "key value is " << iter2->first << endl;
                DataBlob result_two = iter2->second;
                if (result_two.name != "ip2")
                    continue;
                auto q = result_two.data;
                for (int j = 0; j < 68; j++)
                {
                    // Points 0-17 come from the left-eye patch, 18-35 right eye,
                    // 36-45 nose, 46-67 mouth; add the patch origin to get 96x96 coordinates.
                    int k = (j < 18) ? 0 : (j < 36) ? 1 : (j < 46) ? 2 : 3;
                    float x = q[2 * j] + rect4[k].x;
                    float y = q[2 * j + 1] + rect4[k].y;
                    circle(vis, Point(x, y), 2, Scalar(255, 0, 0));
                    circle(vis, Point(src_point[i].points[j].x, src_point[i].points[j].y), 2, Scalar(0, 0, 255));
                }
            }

            double ms = 1000.0 / getTickFrequency();  // ticks -> milliseconds
            cout << "predict stage one, cost " << (t1 - t0) * ms << "ms" << endl;
            cout << "prepare data for stage two, cost " << (t3 - t2) * ms << "ms" << endl;
            cout << "predict stage two, cost " << (t5 - t4) * ms << "ms" << endl;
            imshow("test", vis);
            waitKey(0);
        }
    }
    return 0;
}
```

Version 2: main.cpp

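```cpp
#include <algorithm>
#include <cmath>
#include <cstdlib>
#include <iostream>
#include <string>
#include <unordered_map>
#include <vector>
#include <io.h>      // _access (Windows)
#include <direct.h>  // _mkdir (Windows)
#include <opencv2/opencv.hpp>
#include "hdf5.h"
#include "MCLC.h"
#include "../CascadRegression/util/Util.h"

using namespace caffe;
using namespace std;
using namespace cv;
using namespace glasssix;

#define DEVICE 0

// Four anchor landmarks per region (68-point indexing), used by getRect:
// brows, eyes, nose, mouth, face contour.
const int four[] = { 17,21,22,26, 36,39,42,45, 27,31,33,35, 48,51,54,57, 0,16,8,9 };

// [begin, end) landmark ranges regressed by each patch:
// brow 1, brow 2, eye 1, eye 2, nose, mouth, contour.
const int four_point[] = { 17,22, 22,27, 36,42, 42,48, 27,36, 48,68, 0,17 };

// Patch size (width, height): brow, eye, nose, mouth, face.
const float four_size[] = { 32,16, 24,16, 24,36, 48,32, 64,64 };

// Number of points regressed per patch in each region.
const int BROW_MARK = 5;
const int EYE_MARK = 6;
const int NOSE_MARK = 9;
const int MOUTH_MARK = 20;
const int FACE_MARK = 17;
int mark_num[] = { BROW_MARK, EYE_MARK, NOSE_MARK, MOUTH_MARK, FACE_MARK };

string mark_4_img_dir[] = { "brow\\", "eye\\", "nose\\", "mouth\\", "face\\" };
string mark_4_img_train[] = { "brow_train.txt", "eye_train.txt", "nose_train.txt", "mouth_train.txt", "face_train.txt" };
string mark_4_img_test[] = { "brow_test.txt", "eye_test.txt", "nose_test.txt", "mouth_test.txt", "face_test.txt" };
```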

```cpp
// Runs one forward pass on `img` and copies the first `mark_num` (x, y)
// pairs of the "ip2" output into `landmark`. Returns the forward time in ms.
double predict_four(const Mat& img, MCLC& mclc, int net_id, LandMark& landmark, int mark_num)
{
    vector<Mat> imgdata;
    imgdata.push_back(img);
    double t0 = (double)getTickCount();
    auto result = mclc.Forward(imgdata, net_id);
    double t1 = (double)getTickCount();
    double time = (t1 - t0) * 1000.0 / getTickFrequency();

    for (auto iter = result.begin(); iter != result.end(); iter++) {
        DataBlob blob = iter->second;
        if (blob.name != "ip2")
            continue;
        auto p = blob.data;  // interleaved x0, y0, x1, y1, ...
        for (int i = 0; i < mark_num; i++)
        {
            landmark.points[i].x = p[2 * i];
            landmark.points[i].y = p[2 * i + 1];
        }
    }
    return time;
}

// Full 68-point prediction (stage one).
double predict(const Mat& img, MCLC& mclc, int net_id, LandMark& landmark)
{
    return predict_four(img, mclc, net_id, landmark, LANDMARK_NUM);
}

// Debug helper: prints the forward time and the first (x, y) pair only.
double predict_1(const Mat& img, MCLC& mclc, int net_id, LandMark& /*landmark*/)
{
    vector<Mat> imgdata;
    imgdata.push_back(img);
    double t0 = (double)getTickCount();
    auto result = mclc.Forward(imgdata, net_id);
    double time = ((double)getTickCount() - t0) * 1000.0 / getTickFrequency();
    cout << "time is:" << time << " ms" << endl;
    for (auto iter = result.begin(); iter != result.end(); iter++) {
        DataBlob blob = iter->second;
        if (blob.name != "ip2")
            continue;
        cout << "found data." << endl;
        cout << "x is:" << blob.data[0] << endl;
        cout << "y is:" << blob.data[1] << endl;
    }
    return time;
}

// 33-point model on a 48x48 input: reads STAGE_ONE_MARK - 16 (= 17) points
// and scales them to 96x96 coordinates.
double predict_33(const Mat& img, MCLC& mclc, int net_id, LandMark& landmark)
{
    const int n = STAGE_ONE_MARK - 16;
    const float scale = 96 / 48.0f;
    double time = predict_four(img, mclc, net_id, landmark, n);
    for (int i = 0; i < n; i++)
    {
        landmark.points[i].x *= scale;
        landmark.points[i].y *= scale;
    }
    return time;
}
```

```cpp
// Prints per-region squared errors (summed over points, averaged over faces)
// and the error normalized by the inner-eye-corner distance (points 39 and 42).
void showError(vector<LandMark>& label, vector<LandMark>& predictRe)
{
    int size = label.size();
    float error1 = 0, error2 = 0, ave_error = 0;
    float left_error = 0, right_error = 0, mouth_error = 0, nose_error = 0, left_e = 0, right_e = 0;
    cout << label.size() << " " << predictRe.size() << endl;
    for (int i = 0; i < size; i++)
    {
        float sum1 = 0, sum2 = 0, sum3 = 0;
        float left_sum = 0, right_sum = 0, mouth_sum = 0, nose_sum = 0, left = 0, right = 0;
        for (int j = 0; j < 68; j++)
        {
            float dx = label[i].points[j].x - predictRe[i].points[j].x;
            float dy = label[i].points[j].y - predictRe[i].points[j].y;
            float e = dx * dx + dy * dy;  // squared error of point j
            if (j < 17) sum1 += e;        // contour (0-16)
            else sum2 += e;               // inner points (17-67)
            if ((j >= 17 && j < 22) || (j >= 36 && j < 42)) left_sum += e;   // brow 17-21 + eye 36-41
            if (j >= 36 && j < 42) left += e;
            if ((j >= 22 && j < 27) || (j >= 42 && j < 48)) right_sum += e;  // brow 22-26 + eye 42-47
            if (j >= 42 && j < 48) right += e;
            if (j >= 27 && j < 36) nose_sum += e;
            if (j >= 48 && j < 68) mouth_sum += e;
            sum3 += e;
        }
        float distance = sqrt(pow(label[i].points[39].x - label[i].points[42].x, 2) + pow(label[i].points[39].y - label[i].points[42].y, 2));
        ave_error += sqrt(sum3) / distance;
        error1 += sum1;
        error2 += sum2;
        left_error += left_sum;
        left_e += left;
        right_error += right_sum;
        right_e += right;
        nose_error += nose_sum;
        mouth_error += mouth_sum;
    }
    cout << "sum1 :" << error1 / size << endl;
    cout << "left_error :" << left_error / size << endl;
    cout << "left_e :" << left_e / size << endl;
    cout << "right_error :" << right_error / size << endl;
    cout << "right_e :" << right_e / size << endl;
    cout << "nose_error :" << nose_error / size << endl;
    cout << "mouth_error :" << mouth_error / size << endl;
    cout << "error2 is:" << error2 / size << endl;
    cout << "all error is:" << ave_error / size << endl;
}
```

```cpp
const int TRAIN = 9000;  // the first TRAIN faces form the training split
const int TEST = 773;
//const int FEATURE_NUM = 8704;

// Writes two matrices to an HDF5 file as 2-D float datasets named dataset1
// and dataset2 (e.g. "data" and "label"). Assumes both matrices are CV_32FC1.
void mat2hdf5(Mat &data, Mat &label, const char * filepath, string dataset1, string dataset2)
{
    hid_t file_id = H5Fcreate(filepath, H5F_ACC_TRUNC, H5P_DEFAULT, H5P_DEFAULT);
    hsize_t dims_data[2] = { (hsize_t)data.rows, (hsize_t)data.cols };
    hsize_t dims_label[2] = { (hsize_t)label.rows, (hsize_t)label.cols };
    hid_t data_id = H5Screate_simple(2, dims_data, NULL);
    hid_t label_id = H5Screate_simple(2, dims_label, NULL);
    hid_t dataset_id = H5Dcreate2(file_id, dataset1.c_str(), H5T_NATIVE_FLOAT, data_id, H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT);
    hid_t labelset_id = H5Dcreate2(file_id, dataset2.c_str(), H5T_NATIVE_FLOAT, label_id, H5P_DEFAULT, H5P_DEFAULT, H5P_DEFAULT);

    // Copy both matrices into contiguous row-major buffers
    // (std::vector releases the memory automatically).
    vector<float> data_mem(data.rows * data.cols);
    for (int j = 0; j < data.rows; j++)
        for (int i = 0; i < data.cols; i++)
            data_mem[j * data.cols + i] = data.at<float>(j, i);
    vector<float> label_mem(label.rows * label.cols);
    for (int j = 0; j < label.rows; j++)
        for (int i = 0; i < label.cols; i++)
            label_mem[j * label.cols + i] = label.at<float>(j, i);

    H5Dwrite(dataset_id, H5T_NATIVE_FLOAT, H5S_ALL, H5S_ALL, H5P_DEFAULT, data_mem.data());
    H5Dwrite(labelset_id, H5T_NATIVE_FLOAT, H5S_ALL, H5S_ALL, H5P_DEFAULT, label_mem.data());

    // Close all HDF5 handles.
    H5Sclose(data_id);
    H5Sclose(label_id);
    H5Dclose(dataset_id);
    H5Dclose(labelset_id);
    H5Fclose(file_id);
}
```

```cpp
// Computes the crop rectangle(s) for region `flag` from four anchor landmarks:
// 0 = brows and 1 = eyes (two rects each), 2 = nose, 3 = mouth, 4 = face contour.
// Rects are clipped to the cols x rows image and appended to `rects`.
void getRect(const LandMark& landmark, int flag, vector<Rect> &rects, int cols, int rows)
{
    Rect rect, rect1;
    Point p1(landmark.points[four[4 * flag]]);
    Point p2(landmark.points[four[4 * flag + 1]]);
    Point p3(landmark.points[four[4 * flag + 2]]);
    Point p4(landmark.points[four[4 * flag + 3]]);
    float x, y, width, height;
    if (flag == 0 || flag == 1)
    {
        // Two boxes (p1-p2 and p3-p4) with the aspect ratio of the patch size.
        x = p1.x;
        y = min(p1.y, p2.y);
        width = abs(p2.x - p1.x);
        height = four_size[2 * flag + 1] / four_size[2 * flag] * width;
        rect.x = max(x - width * 0.3, 0.0);
        rect.y = max(y - height * 0.6, 0.0);
        rect.width = width * 1.6;
        rect.height = height * 1.6;
        x = p3.x;
        y = min(p3.y, p4.y);
        width = abs(p4.x - p3.x);
        height = four_size[2 * flag + 1] / four_size[2 * flag] * width;
        rect1.x = max(x - width * 0.3, 0.0);
        rect1.y = max(y - height * 0.6, 0.0);
        rect1.width = width * 1.6;
        rect1.height = height * 1.6;
    }
    if (flag == 2)
    {
        // Nose: box spanned by the four anchors, enlarged.
        x = min(min(p1.x, p2.x), p3.x);
        y = p1.y;
        height = max(max(abs(p1.y - p3.y), abs(p1.y - p2.y)), abs(p1.y - p4.y));
        width = max(max(abs(p2.x - p4.x), abs(p2.x - p3.x)), abs(p4.x - p3.x));
        rect.x = max(x - width * 0.3, 0.0);
        rect.y = max(y - height * 0.2, 0.0);
        rect.width = width * 1.6;
        rect.height = height * 1.4;
    }
    if (flag == 3)
    {
        // Mouth: corners p1/p3, top p2, bottom p4; minimum size 18x12.
        x = p1.x;
        y = min(min(p1.y, p2.y), p3.y);
        width = abs(p3.x - x);
        height = max(max(abs(p4.y - y), abs(p1.y - y)), abs(p2.y - y));
        rect.x = max(x - width * 0.3, 0.0);
        rect.y = max(y - height * 0.4, 0.0);
        rect.width = max((int)(width * 1.6), 18);
        rect.height = max((int)(height * 1.8), 12);
        /*x = (p1.x + p3.x) / 2.0;
        y = (p1.y + p3.y) / 2.0;
        rect.x = max(x - rect.width * 0.5, 0.0);
        rect.y = max(y - rect.height * 0.5, 0.0);*/
    }
    if (flag == 4)
    {
        // Face contour: jaw ends p1/p2 and chin p3; minimum size 32x32.
        x = p1.x;
        y = min(p1.y, p2.y);
        width = abs(p1.x - p2.x);
        height = abs(p3.y - y);
        rect.x = max(x - width * 0.1, 0.0);
        rect.y = max(y - height * 0.1, 0.0);
        rect.width = max((int)(width * 1.2), 32);
        rect.height = max((int)(height * 1.2), 32);
    }
    // Clip to the image.
    rect.width = min(rect.width, cols - rect.x);
    rect.height = min(rect.height, rows - rect.y);
    rects.push_back(rect);
    if (flag == 0 || flag == 1)
    {
        rect1.width = min(rect1.width, cols - rect1.x);
        rect1.height = min(rect1.height, rows - rect1.y);
        rects.push_back(rect1);
    }
}
```

```cpp
// Crops region patches around the given landmarks, saves them under
// base + mark_4_img_dir[j], and writes the patch-local landmark labels
// (the first TRAIN faces to the train list, the rest to the test list).
// Note: both loops over j currently cover only the nose (j = 2); use 0..4 for all regions.
void createPatchFile(string base, vector<LandMark> data, DataPrepareUtil &dpu)
{
    vector<LandMark> landmark_four[5][2];  // [region][0 = train, 1 = test]
    int length = data.size();
    for (int i = 0; i < length; i++)
    {
        int istd = (i >= TRAIN) ? 1 : 0;
        for (int j = 2; j < 3; j++)
        {
            Mat img = imread(base + data[i].fileName);
            vector<Rect> rects;
            getRect(data[i], j, rects, img.cols, img.rows);
            for (int n = 0; n < (int)rects.size(); n++)
            {
                Mat roi;
                resize(img(rects[n]), roi, Size(four_size[2 * j], four_size[2 * j + 1]));
                string dir = base + mark_4_img_dir[j];
                if (_access(dir.c_str(), 0) == -1)
                {
                    _mkdir(dir.c_str());
                }
                imwrite(dir + to_string(n) + "_" + data[i].fileName, roi);

                // Convert the landmarks from image to patch coordinates.
                LandMark mark;
                mark.fileName = to_string(n) + "_" + data[i].fileName;
                int first, last;  // [first, last) indices covered by this patch
                if (j == 0 || j == 1) {
                    first = four_point[4 * j + 2 * n];
                    last = four_point[4 * j + 2 * n + 1];
                }
                else {
                    first = four_point[8 + 2 * (j - 2)];
                    last = four_point[8 + 2 * (j - 2) + 1];
                }
                for (int k = first, count = 0; k < last; k++, count++)
                {
                    mark.points[count].x = (data[i].points[k].x - rects[n].x) * (four_size[2 * j] / rects[n].width);
                    mark.points[count].y = (data[i].points[k].y - rects[n].y) * (four_size[2 * j + 1] / rects[n].height);
                }
                landmark_four[j][istd].push_back(mark);
            }
        }
    }
    for (int j = 2; j < 3; j++)
    {
        dpu.clearFileData(base + mark_4_img_dir[j] + mark_4_img_train[j]);
        dpu.writeDatatoFile(base + mark_4_img_dir[j] + mark_4_img_train[j], landmark_four[j][0], mark_num[j]);
        dpu.clearFileData(base + mark_4_img_dir[j] + mark_4_img_test[j]);
        dpu.writeDatatoFile(base + mark_4_img_dir[j] + mark_4_img_test[j], landmark_four[j][1], mark_num[j]);
    }
}
```

int main() {

string base = "E:\\work\\face_alignment\\model\\regression_96_68\\";

string prototext = base + "test_68.prototxt";

string mode_path = base + "save_path\\_iter_150000.caffemodel";

string img_path = "D:\\face\\face_img_96\\img\\";

string text_label = "D:\\face\\face_img_96\\img\\shutter_68_test.txt";

/*string base = "E:\\work\\face_alignment\\model\\regression_48_68\\";

string prototext = base + "test_68.prototxt";

string mode_path = base + "save_path\\_iter_20000.caffemodel";

string img_path = "D:\\face_new\\face_img_48\\";

string text_label = "D:\\face_new\\face_img_48\\shutter_68_test.txt";*/

/*string base1 = "E:\\work\\face_alignment\\model\\regression_33\\";

string prototext1 = base1 + "test_33.prototxt";

string mode_path1 = base1 + "save_path_plan1\\_iter_80000.caffemodel";

string img_path_48 = "D:\\face\\face_img_48\\img\\";

string text_label_48 = "D:\\face\\face_img_48\\img\\shutter_33_test.txt";*/

MCLC mclc;

int id_68 = mclc.AddNet(prototext, mode_path, DEVICE);

//int id_33 = mclc.AddNet(prototext1, mode_path1, 0);

DataPrepareUtil dpu;

vector data;

data = dpu.readStageOneData(text_label, LANDMARK_NUM);

//vector data_33;

//data_33 = dpu.readStageOneData(text_label_48, 33);

int length = data.size();

vector preRe;

double time1 = 0;

for (int i = 0; i < length; i++)

{

LandMark landmark;

landmark.fileName = data[i].fileName;

Mat img = imread(img_path + data[i].fileName);

time1 += predict(img, mclc, id_68, landmark);

/*Mat img_48 = imread(img_path_48 + data[i].fileName);

time2 += predict_33(img_48, mclc, id_33, landmark);*/

preRe.push_back(landmark);

/*for (int j = 0; j < LANDMARK_NUM; j++)

{

circle(img, Point(landmark.points[j].x, landmark.points[j].y), 2, Scalar(255, 0 ,0));

}

imshow("img", img);

waitKey(0);*/

}

cout << "average time1 is:" << time1 / length << " ms" << endl;

showError(data, preRe);

//createPatchFile(img_path, preRe, dpu);

/*

//create sift feateure and label

Mat data_train, label_train;

Mat data_test, label_test;

dpu.getData("D:\\face\\face_img_96\\img\\", preRe, data, data_train, label_train, data_test, label_test, TRAIN, TEST, 1);

Mat out_train, out_test;

cout << data_train.cols << " " << data_train.rows << endl;

cout << data_test.cols << " " << data_test.rows << endl;

Mat mean, eigenvectors;

dpu.PCA_Reduce(data_train, data_test, out_train, out_test, mean, eigenvectors);

string f5_path = "E:\\work\\face_alignment\\model\\stage_three\\hdf5_pca\\";

string train_data_path = f5_path + "hdf5_train.h5";

string test_data_path = f5_path + "hdf5_test.h5";

string mean_eigenv_path = f5_path + "mean_eigenv.h5";

mat2hdf5(out_train, label_train, train_data_path.c_str(), "data", "label");

mat2hdf5(out_test, label_test, test_data_path.c_str(), "data", "label");

mat2hdf5(mean, eigenvectors, mean_eigenv_path.c_str(), "mean", "eigenv");

cout << "mean is:" << mean << endl;

//cout << "eigenvectors is:" << eigenvectors << endl;

cout << "mean shape:" << mean.rows << " " << mean.cols << endl;

*/

//stage two.

string base_brow = "E:\\work\\face_alignment\\model\\regression_stage_two\\brow\\";

string prototext_brow = base_brow + "test_brow.prototxt";

string mode_path_brow = base_brow + "save_path\\_iter_100000.caffemodel";

int id_5_brow = mclc.AddNet(prototext_brow, mode_path_brow, DEVICE);

string base_eye = "E:\\work\\face_alignment\\model\\regression_stage_two\\eye\\";

string prototext_eye = base_eye + "test_eye.prototxt";

string mode_path_eye = base_eye + "save_path\\_iter_100000.caffemodel";

int id_6_eye = mclc.AddNet(prototext_eye, mode_path_eye, DEVICE);

string base_nose = "E:\\work\\face_alignment\\model\\regression_stage_two\\nose\\";

string prototext_nose = base_nose + "test_nose.prototxt";

string mode_path_nose = base_nose + "save_path\\_iter_70000.caffemodel";

int id_9_nose = mclc.AddNet(prototext_nose, mode_path_nose, DEVICE);

string base_mouth = "E:\\work\\face_alignment\\model\\regression_stage_two\\mouth\\";

string prototext_mouth = base_mouth + "test_mouth.prototxt";

string mode_path_mouth = base_mouth + "save_path\\_iter_100000.caffemodel";

int id_68_mouth = mclc.AddNet(prototext_mouth, mode_path_mouth, DEVICE);

string base_face = "E:\\work\\face_alignment\\model\\regression_stage_two\\face\\";

string prototext_face = base_face + "test_face.prototxt";

string mode_path_face = base_face + "save_path\\_iter_100000.caffemodel";

int id_17_face = mclc.AddNet(prototext_face, mode_path_face, DEVICE);

int id_four[] = { id_5_brow, id_6_eye, id_9_nose, id_68_mouth, id_17_face};

double time_four = 0;

for (int i = 0; i < length; i++)

{

LandMark landmark;

landmark.fileName = data[i].fileName;

Mat img = imread(img_path + data[i].fileName);

for (int j = 0; j < 5; j++)

{

//if (j == 2)

//{

// continue;

//}

vector rects;

getRect(data[i], j, rects, img.cols, img.rows);

for (int n = 0; n < rects.size(); n++)

{

Mat roi = img(rects[n]);

resize(roi, roi, Size(four_size[2 * j], four_size[2 * j + 1]));

time_four += predict_four(roi, mclc, id_four[j], landmark, mark_num[j]);

if (j == 0 || j == 1)

{

int count = 0;

for (int k = four_point[4 * j + 2 * n]; k < four_point[4 * j + 1 + 2*n]; k++)

{

preRe[i].points[k].x = landmark.points[count].x * (rects[n].width / four_size[2 * j]) + rects[n].x;

preRe[i].points[k].y = landmark.points[count].y * (rects[n].height / four_size[2 * j + 1]) + rects[n].y;

++count;

}

}

//for (int k = 0; k < mark_num[j]; k++)

//{

// circle(roi, Point(landmark.points[k]), 2, Scalar(255,0,0));

//}

//imshow("roi", roi);

//waitKey(0);

if (j == 2 || j == 3 || j == 4)

{

int count = 0;

for (int k = four_point[8 + 2 * (j - 2)]; k < four_point[8 + 2 * (j - 2) + 1]; k++)

{

preRe[i].points[k].x = landmark.points[count].x * ((double)rects[n].width / four_size[2 * j]) + rects[n].x;

preRe[i].points[k].y = landmark.points[count].y * ((double)rects[n].height / four_size[2 * j + 1]) + rects[n].y;

++count;

}

}

}

//for (int k = 0; k < LANDMARK_NUM; k++)

//{

// circle(img, Point(preRe[i].points[k]), 2, Scalar(255, 0, 0));

//}

//imshow("img", img);

//waitKey(0);

}

}

cout << "stage two ........................................................." << endl;

cout << "average time of stage two is: " << time_four / length << " ms" << endl;

showError(data, preRe);

// create SIFT features and labels

/*

Mat data_train, label_train;

Mat data_test, label_test;

dpu.getData(img_path, preRe, data, data_train, label_train, data_test, label_test, 0, 6, 1);

int length_1 = data_test.rows;

cout << "test count is :" << length_1 << endl;

double time2 = 0;

for (int i = 0; i < length_1; i++)

{

LandMark landmark;

Rect rect(0, i, data_test.cols, 1);

Mat feature = data_test(rect);

//Mat feature(1, 2, CV_32FC1);

//feature.at<float>(0, 0) = 7;

//feature.at<float>(0, 1) = 8;

time2 += predict(feature, mclc, id_68_1, landmark);

for (int j = 0; j < LANDMARK_NUM; j++)

{

preRe[i].points[j].x = preRe[i].points[j].x + landmark.points[j].x;

preRe[i].points[j].y = preRe[i].points[j].y + landmark.points[j].y;

}

if (i == 0)

{

for (int k = 0; k < 68; k++)

{

cout << landmark.points[k].x << " " << landmark.points[k].y << endl;

}

}

}

cout << "average time2 is: " << time2 / length_1 << " ms" << endl;

showError(data, preRe);

*/

std::system("PAUSE");

return 0;

}

util.h

#ifndef _UTIL_

#define _UTIL_

#include <climits>

#include <string>

#include <vector>

#include <opencv2/opencv.hpp>

#define LANDMARK_NUM 68

#define STAGE_ONE_MARK 33

using namespace std;

namespace glasssix

{

struct LandMark

{

std::string fileName;

cv::Point2d points[LANDMARK_NUM];

};

struct Label

{

std::string fileName;

std::string label;

};

const int HOG_FEATURE = 0;

const int SIFT_FEATURE = 1;

const int LBP_FEATURE = 2;

class DataPrepareUtil

{

public:

DataPrepareUtil() {};

std::vector<LandMark> readStageOneData(string filePath, int numMark, int count = INT_MAX);

void readLabelData(string filePath, std::vector<Label> &data, int count);

util.cpp

#include "Util.h"

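#include <iostream>

#include <fstream>

#include <string>

#include <sstream> // required for stringstream

#include <vector>

#include <climits>

#include <opencv2/opencv.hpp>

#include <opencv2/xfeatures2d.hpp>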

#include "../../../../SoftWare/libsvm-3.22/svm.h"

using namespace std;

using namespace glasssix;

using namespace cv;

const int LEFT_EYE = 0;

const int RIGHT_EYE = 9;

const int NOSE = 34;

const int LEFT_MOUTH = 46;

const int RIGHT_MOUTH = 47;

const int ALL = 95;

template <class Type>

Type stringToNum(const string& str)

{

istringstream iss(str);

Type num;

iss >> num;

return num;

}

void splitString(const string& s, vector<string>& v, const string& c)

{

string::size_type pos1, pos2;

pos2 = s.find(c);

pos1 = 0;

while (string::npos != pos2)

{

v.push_back(s.substr(pos1, pos2 - pos1));

pos1 = pos2 + c.size();

pos2 = s.find(c, pos1);

}

if (pos1 != s.length()) {

v.push_back(s.substr(pos1));

}

}

void parseData_5(string buf, LandMark& mark)

{

vector<string> result1;

splitString(buf, result1, "\t");

mark.fileName = result1[0];

vector<string> result2;

splitString(result1[1], result2, ",");

mark.points[0].x = stringToNum<double>(result2[2 * LEFT_EYE]);

mark.points[0].y = stringToNum<double>(result2[2 * LEFT_EYE + 1]);

mark.points[1].x = stringToNum<double>(result2[2 * RIGHT_EYE]);

mark.points[1].y = stringToNum<double>(result2[2 * RIGHT_EYE + 1]);

mark.points[2].x = stringToNum<double>(result2[2 * NOSE]);

mark.points[2].y = stringToNum<double>(result2[2 * NOSE + 1]);

mark.points[3].x = stringToNum<double>(result2[2 * LEFT_MOUTH]);

mark.points[3].y = stringToNum<double>(result2[2 * LEFT_MOUTH + 1]);

mark.points[4].x = stringToNum<double>(result2[2 * RIGHT_MOUTH]);

mark.points[4].y = stringToNum<double>(result2[2 * RIGHT_MOUTH + 1]);

}

void parseKey_26_Data(string buf, LandMark& mark)

{

vector<string> result1;

splitString(buf, result1, "\t");

mark.fileName = result1[0];

vector<string> result2;

splitString(result1[1], result2, ",");

mark.points[0].x = stringToNum<double>(result2[2 * LEFT_EYE]);

mark.points[0].y = stringToNum<double>(result2[2 * LEFT_EYE + 1]);

mark.points[1].x = stringToNum<double>(result2[2 * RIGHT_EYE]);

mark.points[1].y = stringToNum<double>(result2[2 * RIGHT_EYE + 1]);

mark.points[2].x = stringToNum<double>(result2[2 * NOSE]);

mark.points[2].y = stringToNum<double>(result2[2 * NOSE + 1]);

mark.points[3].x = stringToNum<double>(result2[2 * LEFT_MOUTH]);

mark.points[3].y = stringToNum<double>(result2[2 * LEFT_MOUTH + 1]);

mark.points[4].x = stringToNum<double>(result2[2 * RIGHT_MOUTH]);

mark.points[4].y = stringToNum<double>(result2[2 * RIGHT_MOUTH + 1]);

int j = 5;

for (int i = 74; i < ALL; i++)

{

if (i >= 74 && i < 95) {

mark.points[j].x = stringToNum<double>(result2[2 * i]);

mark.points[j].y = stringToNum<double>(result2[2 * i + 1]);

j++;

}

}

}

void parseKey_68_Data(string buf, LandMark& mark)

{

vector<string> result1;

splitString(buf, result1, "\t");

mark.fileName = result1[0];

vector<string> result2;

splitString(result1[1], result2, ",");

int j = 0;

// add left eye: 18 points

for (int i = 0; i < 37; i++)

{

if ((i >= 0 && i < 9) || (i >= 18 && i < 26) || (i == 36)) {

mark.points[j].x = stringToNum<double>(result2[2 * i]);

mark.points[j].y = stringToNum<double>(result2[2 * i + 1]);

j++;

}

}

// add right eye: 18 points

for (int i = 9; i < 38; i++)

{

if ((i >= 9 && i < 18) || (i >= 26 && i < 34) || (i == 37)) {

mark.points[j].x = stringToNum<double>(result2[2 * i]);

mark.points[j].y = stringToNum<double>(result2[2 * i + 1]);

j++;

}

}

// add nose: 10 points

for (int i = 34; i < 46; i++)

{

if ((i >= 34 && i < 36) || (i >= 38 && i < 46)) {

mark.points[j].x = stringToNum<double>(result2[2 * i]);

mark.points[j].y = stringToNum<double>(result2[2 * i + 1]);

j++;

}

}

// add mouth: 22 points

for (int i = 46; i < 68; i++)

{

if (i >= 46 && i < 68) {

mark.points[j].x = stringToNum<double>(result2[2 * i]);

mark.points[j].y = stringToNum<double>(result2[2 * i + 1]);

j++;

}

}

}
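parseKey_68_Data above copies 68 of the annotated points into LandMark::points in four groups. The sketch below rebuilds the same copy order as a plain index list. With it you can check two things without a data file: the grouping adds up (18 + 18 + 10 + 22 = 68), and every source index from 0 to 67 is used exactly once. `build68Order` is a hypothetical helper and is not part of the original code.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: the source-annotation indices, in the order that
// parseKey_68_Data writes them into LandMark::points.
std::vector<int> build68Order() {
    std::vector<int> order;
    // Left-eye group (18 points): 0..8, 18..25, 36
    for (int i = 0; i < 9; ++i) order.push_back(i);
    for (int i = 18; i < 26; ++i) order.push_back(i);
    order.push_back(36);
    // Right-eye group (18 points): 9..17, 26..33, 37
    for (int i = 9; i < 18; ++i) order.push_back(i);
    for (int i = 26; i < 34; ++i) order.push_back(i);
    order.push_back(37);
    // Nose group (10 points): 34, 35, 38..45
    order.push_back(34);
    order.push_back(35);
    for (int i = 38; i < 46; ++i) order.push_back(i);
    // Mouth group (22 points): 46..67
    for (int i = 46; i < 68; ++i) order.push_back(i);
    assert(order.size() == 68);
    return order;
}
```

Output position `k` in this list is `mark.points[k]`, and the stored value is the index `i` whose coordinates are read from `result2[2 * i]` and `result2[2 * i + 1]`.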

void parseData(string buf, LandMark& mark, int num_mark)

{

vector<string> result1;

splitString(buf, result1, " ");

mark.fileName = result1[0];

for (int i = 0; i < num_mark; i++)

{

mark.points[i].x = stringToNum<double>(result1[2 * i + 1]);

mark.points[i].y = stringToNum<double>(result1[2 * (i + 1)]);

}

}

void parseData(string buf, LandMark& mark)

{

vector<string> result1;

splitString(buf, result1, "\t");

mark.fileName = result1[0];

vector<string> result2;

splitString(result1[1], result2, ",");

for (int i = 0; i < LANDMARK_NUM; i++)

{

mark.points[i].x = stringToNum<double>(result2[2 * i]);

mark.points[i].y = stringToNum<double>(result2[2 * i + 1]);

}

}
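parseData above indexes `result1[1]` and `result2` without first checking that the line has a tab and 2 × LANDMARK_NUM values. A short or malformed line therefore reads past the end of the vectors. Below is a minimal sketch of the same `fileName\tx0,y0,x1,y1,...` format with those checks added. `parseLandmarkLine` and the `Point` struct are hypothetical stand-ins that avoid OpenCV, so the example compiles on its own.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

struct Point { double x; double y; };

// Parses "fileName\tx0,y0,x1,y1,..." into a file name and numPoints points.
// Returns false instead of reading out of range when the line is malformed.
bool parseLandmarkLine(const std::string& line, std::size_t numPoints,
                       std::string& fileName, std::vector<Point>& points) {
    std::size_t tab = line.find('\t');
    if (tab == std::string::npos) return false;  // no tab separator
    fileName = line.substr(0, tab);

    std::vector<double> values;
    std::istringstream coords(line.substr(tab + 1));
    std::string field;
    while (std::getline(coords, field, ',')) {
        std::istringstream num(field);
        double v;
        if (!(num >> v)) return false;  // empty or non-numeric field
        values.push_back(v);
    }
    if (values.size() < 2 * numPoints) return false;  // too few coordinates

    points.clear();
    for (std::size_t i = 0; i < numPoints; ++i) {
        points.push_back({values[2 * i], values[2 * i + 1]});
    }
    return true;
}
```

Callers can then skip or log a bad line rather than crash, much as the readers already skip empty lines.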

void DataPrepareUtil::clearFileData(string filePath)

{

if (filePath == "")

{

return;

}

ofstream in;

in.open(filePath, ios::trunc);

in.close();

}

void DataPrepareUtil::readLabelData(string filePath, std::vector<Label> &data, int count)

{

ifstream fileA(filePath);

if (!fileA)

{

cout << "Could not find the file to read: " << filePath << ". Please put the file in the specified location and run this program again." << endl << " Press any key to exit.";

return;

}

for (int i = 0; !fileA.eof() && (i < count); i++)

{

Label mark;

string buf;

getline(fileA, buf, '\n');

if (buf == "")

{

cout << "buf is empty." << endl;

continue;

}

vector<string> resu;

splitString(buf, resu, " ");

if (resu.size() < 2)

{

cout << "malformed line: " << buf << endl;

continue;

}

mark.fileName = resu[0];

mark.label = resu[1];

data.push_back(mark);

}

fileA.close();

}

vector<LandMark> DataPrepareUtil::readStageOneData(string filePath, int numMark, int count)

{

vector<LandMark> result;

ifstream fileA(filePath);

if (!fileA)

{

cout << "Could not find the file to read: " << filePath << ". Please put the file in the specified location and run this program again." << endl << " Press any key to exit.";

return result;

}

for (int i = 0; !fileA.eof() && (i < count); i++)

{

LandMark mark;

string buf;

getline(fileA, buf, '\n');

if (buf == "")

{

cout << "buf is empty." << endl;

continue;

}

parseData(buf, mark, numMark);

//cout.precision(20); // set output precision

//cout << "mark.fileName is:" << mark.fileName << " mark.Point:" << mark.points[0] << endl;

result.push_back(mark);

}

fileA.close();

return result;

}
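Both readers above loop on `!fileA.eof()`. That flag only becomes true after a read has already failed, so the last iteration sees an empty `buf` and prints "buf is empty.". In addition, because `i` is incremented on every iteration, a blank line still counts toward `count`. A common alternative is to loop on the result of `std::getline` itself. Below is a minimal sketch; `readNonEmptyLines` is a hypothetical name, and it takes any `std::istream` so it can be exercised with a string stream instead of a file.

```cpp
#include <cassert>
#include <climits>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Reads up to maxLines non-empty lines. Blank lines are skipped and do not
// count toward the limit; the loop ends as soon as getline fails (EOF).
std::vector<std::string> readNonEmptyLines(std::istream& in, int maxLines = INT_MAX) {
    std::vector<std::string> lines;
    std::string buf;
    while (static_cast<int>(lines.size()) < maxLines && std::getline(in, buf)) {
        if (!buf.empty() && buf.back() == '\r') buf.pop_back();  // tolerate CRLF files
        if (buf.empty()) continue;
        lines.push_back(buf);
    }
    return lines;
}
```

Passing an `std::ifstream` opened on `filePath` gives the same behaviour as the original loop. Each returned line can then go to `parseData(buf, mark, numMark)`.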

void DataPrepareUtil::writeDatatoFile(std::string filePath, vector<Label> & data)

{

if (filePath == "" || data.size() == 0)

{

return;

}

ofstream in;

in.open(filePath, ios::app); //ios::trunc

int length = data.size();

for (int i = 0; i < length; i++)

{

string dataline = "H:\\CASIA\\CASIA-WebFace\\" + data[i].fileName;

dataline.append(" ");

dataline.append(data[i].label);

in << dataline << "\n";

}

in.close();

}

void DataPrepareUtil::writeDatatoFile(std::string filePath, vector<LandMark> & data, int landmark)

{

if (filePath == "" || data.size() == 0)

{

return;

}

ofstream in;

in.open(filePath, ios::app);

int length = data.size();

for (int i = 0; i < length; i++)

{

string dataline = data[i].fileName;

dataline.append(" ");

for (int j = 0; j < landmark; j++)

{

dataline.append(to_string(data[i].points[j].x));

dataline.append(" ");

dataline.append(to_string(data[i].points[j].y));

if (j != (landmark - 1))

{

dataline.append(" ");

}

//cout.precision(20);

//cout << "double x:" << data[i].points[j].x << endl;

//cout.precision(20); // set output precision

//cout << "to_string():" << doubleToString(data[i].points[j].x)< & data, int landmark)

{

if (filePath == "" || data.size() == 0)

{

return;

}

ofstream in;

in.open(filePath, ios::app);

int length = data.size();

for (int i = 0; i < length; i++)

{

string dataline = data[i].fileName;

dataline.append(" ");

for (int j = 0; j < landmark; j++)

{

dataline.append(to_string(data[i].points[j].x));

dataline.append(" ");

}

for (int j = 0; j < landmark; j++)

{

dataline.append(to_string(data[i].points[j].y));

if (j != (landmark - 1))

{

dataline.append(" ");

}

}

in << dataline << "\n";

}

in.close();

}

void DataPrepareUtil::writeDatatoFile(std::string filePath, vector<LandMark> & data, int landmark, int start, int end)

{

if (filePath == "" || data.size() == 0)

{

return;

}

ofstream in;

in.open(filePath, ios::app);

int length = data.size();

if (start < 0 || start > length || end < start || end > length)

{

cout << "start or end is out of range." << endl;

in.close();

return;

}

for (int i = start; i < end; i++)

{

string dataline = data[i].fileName;

dataline.append(" ");

for (int j = 0; j < landmark; j++)

{

dataline.append(to_string(data[i].points[j].x));

dataline.append(" ");

dataline.append(to_string(data[i].points[j].y));

if (j != (landmark - 1))

{

dataline.append(" ");

}

//cout.precision(20);

//cout << "double x:" << data[i].points[j].x << endl;

//cout.precision(20); // set output precision

//cout << "to_string():" << doubleToString(data[i].points[j].x)< sift = xfeatures2d::SIFT::create(1);

std::vector<KeyPoint> keypointsa;

keypointsa.clear();

KeyPoint keyp;

keyp.pt.x = point.x;

keyp.pt.y = point.y;

keyp.size = 16;

keypointsa.push_back(keyp);

//sift->detectAndCompute(src, mask, keypointsa, a); // get keypoints and their descriptors

//drawKeypoints(src, keypointsa, src, Scalar(0,0,255)); // draw the keypoints

sift->detectAndCompute(img, Mat(), keypointsa, desc, true);

//drawKeypoints(img, keypointsa, img, Scalar(0, 0, 255));

/*imshow("src", img);

waitKey(0);*/

}

int DataPrepareUtil::getImageHogFeature(Mat &img, vector<float> & descriptors)

{

if (img.data == NULL)

{

cout << "Image does not exist." << endl;

return -1;

}

HOGDescriptor hog(Size(8, 8), Size(8, 8), Size(8, 8), Size(4, 4), 9); // winSize, blockSize, blockStride, cellSize (4x4 cells), nbins

hog.compute(img, descriptors, Size(1, 1), Size(0, 0));

return 0;

}
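getImageHogFeature above uses an 8×8 window, an 8×8 block, an 8×8 block stride, 4×4 cells and 9 bins, and it receives one 8×8 ROI per landmark. Each landmark therefore contributes exactly one window containing one block. The sketch below computes the resulting feature length with the standard HOG layout (blocks per window × cells per block × bins). `hogLength` and the other helpers are hypothetical names. The 128-dimensional descriptor is the standard SIFT size, included for comparison with the SIFT_FEATURE branch of getData.

```cpp
#include <cassert>

// Hypothetical helper: length of one HOG window descriptor for a square
// window, using the standard block/cell layout.
int hogLength(int win, int block, int blockStride, int cell, int nbins) {
    assert(block <= win && (win - block) % blockStride == 0 && block % cell == 0);
    int blocksPerSide = (win - block) / blockStride + 1;  // blocks along one axis
    int cellsPerSide = block / cell;                      // cells along one block side
    return blocksPerSide * blocksPerSide * cellsPerSide * cellsPerSide * nbins;
}

const int kLandmarks = 68;  // LANDMARK_NUM in util.h
const int kSiftDims = 128;  // standard SIFT descriptor length

// Per-image feature lengths for the two branches of getData.
int hogFeatureLength() { return kLandmarks * hogLength(8, 8, 8, 4, 9); }
int siftFeatureLength() { return kLandmarks * kSiftDims; }
```

With these settings each landmark gives 36 HOG values, or 2448 per face. The SIFT branch gives 8704 values per face, which is presumably why PCA_Reduce keeps only 1700 components.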

void check_xy(int &x, int &y, int width, int height, int stride)

{

if (x < 0)

{

x = 0;

}

if (y < 0)

{

y = 0;

}

if (x > width - stride)

{

x = width - stride;

}

if (y > height - stride)

{

y = height - stride;

}

}
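getData and getPatchData each cut a fixed-size patch around every landmark: 8×8 and 16×16 respectively. Both shift the corner back by half the patch size, then call check_xy so the patch stays inside the 96×96 face image. The sketch below does the same steps in one helper. `Box` and `centeredPatch` are hypothetical names, and plain ints replace cv::Rect so the sketch stands alone. Like check_xy, it assumes the image is at least `size` pixels on each side.

```cpp
#include <algorithm>
#include <cassert>

struct Box { int x, y, width, height; };

// Returns a size x size box centred on (cx, cy), shifted so that it lies
// entirely inside a width x height image (the same clamping as check_xy).
Box centeredPatch(double cx, double cy, int size, int width, int height) {
    assert(size <= width && size <= height);
    int x = static_cast<int>(cx) - size / 2;  // truncates, like rect.x = point.x
    int y = static_cast<int>(cy) - size / 2;
    x = std::min(std::max(x, 0), width - size);
    y = std::min(std::max(y, 0), height - size);
    return {x, y, size, size};
}
```

Near the border the patch is shifted, not shrunk, so every landmark still yields a descriptor of the same length. The trade-off is that the landmark is then off-centre inside its patch.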

void DataPrepareUtil::PCA_Reduce(cv::Mat & input_train, cv::Mat & input_test, cv::Mat & output_train, cv::Mat & output_test, Mat & mean, Mat & eigenvectors)

{

cout << "start pca" << endl;

double t0 = (double)cvGetTickCount();

PCA pca(input_train, Mat(), PCA::DATA_AS_ROW, 1700);

cout << "end pca" << endl;

double t1 = (double)cvGetTickCount();

cout << "cost time is: " << ((t1 - t0) / ((double)cvGetTickFrequency() * 1000 * 1000)) << "s" << endl;

//cout << pca.eigenvalues << endl;

//cout << pca.eigenvectors << endl;

output_train = pca.project(input_train);

output_test = pca.project(input_test);

cout << " point size :" << output_train.rows << " " << output_train.cols << endl;

//imwrite("D:\\face\\face_img_96\\img\\feature_sift\\pca\\mean.jpg", pca.mean);

//imwrite("D:\\face\\face_img_96\\img\\feature_sift\\pca\\engv.jpg", pca.eigenvectors);

mean = pca.mean;

eigenvectors = pca.eigenvectors;

}

void DataPrepareUtil::getData(std::string base, std::vector<LandMark> & really, vector<LandMark> & predict, cv::Mat & train_data, cv::Mat & train_label, cv::Mat & test_data, cv::Mat & test_label, int train_num, int test_num, int flage)

{

int length = predict.size();

cout << "train image is :" << length << endl;

int n = 0;

int featureNum = 0;

int cols = 68 * 2;

for (int i = 0; i < length; i++)

{

Mat img = imread(base + predict[i].fileName, CV_LOAD_IMAGE_COLOR);

std::vector<float> descriptors;

if (flage == HOG_FEATURE)

{

for (int j = 0; j < 68; j++)

{

std::vector<float> descriptor;

Rect rect;

rect.x = predict[i].points[j].x;

rect.y = predict[i].points[j].y;

rect.x = rect.x - 4;

rect.y = rect.y - 4;

check_xy(rect.x, rect.y, 96, 96, 8);

rect.width = 8;

rect.height = 8;

Mat roi = img(rect);

getImageHogFeature(roi, descriptor);

int le = descriptor.size();

for (int k = 0; k < le; k++)

{

descriptors.push_back(descriptor[k]);

}

}

}

else if (flage == SIFT_FEATURE)

{

for (int j = 0; j < LANDMARK_NUM; j++)

{

Mat desc;

sift_feature(Point(predict[i].points[j].x, predict[i].points[j].y), desc, img);

for (int k = 0; k < desc.cols; k++)

{

descriptors.push_back(desc.at<float>(0, k));

}

//cout << " value :" << desc << endl;

//system("PAUSE");

}

}

if (i < train_num)

{

if (i == 0)

{

featureNum = descriptors.size();

cout << "featureNum is:" << featureNum << endl;

train_label = Mat::zeros(train_num, cols, CV_32FC1); // Note the difference between the train and trainAuto interfaces; a classification label Mat must be CV_32SC1, but these labels are real-valued offsets, so CV_32FC1 is used

train_data = Mat::zeros(train_num, descriptors.size(), CV_32FC1);

}

for (int j = 0; j < cols / 2; j++)

{

train_label.at<float>(i, 2 * j) = really[i].points[j].x - predict[i].points[j].x;

train_label.at<float>(i, 2 * j + 1) = really[i].points[j].y - predict[i].points[j].y;

//cout << train_label.at<float>(i, 2 * j) << endl;

}

n = 0;

for (std::vector<float>::iterator iter = descriptors.begin(); iter != descriptors.end(); iter++)

{

train_data.at<float>(i, n) = *iter;

n++;

}

}

else

{

if (i == train_num)

{

featureNum = descriptors.size();

cout << "test featureNum is:" << featureNum << endl;

test_label = Mat::zeros(test_num, cols, CV_32FC1);

test_data = Mat::zeros(test_num, descriptors.size(), CV_32FC1);

}

for (int j = 0; j < cols / 2; j++)

{

test_label.at<float>(i - train_num, 2 * j) = really[i].points[j].x - predict[i].points[j].x;

test_label.at<float>(i - train_num, 2 * j + 1) = really[i].points[j].y - predict[i].points[j].y;

}

n = 0;

for (std::vector<float>::iterator iter = descriptors.begin(); iter != descriptors.end(); iter++)

{

test_data.at<float>(i - train_num, n) = *iter;

n++;

}

}

}

}
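In getData, each row of the label matrix holds the offset between the ground-truth shape (`really`) and the current estimate (`predict`). The offsets are interleaved as dx0, dy0, dx1, dy1, and so on. The commented-out block in main then adds the regressor's output back onto `preRe`, which is the usual cascaded-regression update. Below is a minimal sketch of both steps on plain vectors with no OpenCV. `Pt`, `residualTargets` and `applyUpdate` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { double x, y; };

// Regression target for one sample: truth minus current estimate,
// interleaved as [dx0, dy0, dx1, dy1, ...] (the layout used for train_label).
std::vector<double> residualTargets(const std::vector<Pt>& truth,
                                    const std::vector<Pt>& current) {
    assert(truth.size() == current.size());
    std::vector<double> t;
    t.reserve(2 * truth.size());
    for (std::size_t j = 0; j < truth.size(); ++j) {
        t.push_back(truth[j].x - current[j].x);
        t.push_back(truth[j].y - current[j].y);
    }
    return t;
}

// One cascade step: add a predicted (interleaved) offset to the current shape.
void applyUpdate(std::vector<Pt>& current, const std::vector<double>& delta) {
    assert(delta.size() == 2 * current.size());
    for (std::size_t j = 0; j < current.size(); ++j) {
        current[j].x += delta[2 * j];
        current[j].y += delta[2 * j + 1];
    }
}
```

A perfect regressor would return exactly these targets, and a single update would then land on the ground truth. In practice each stage removes only part of the error, which is why stages are cascaded.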

void DataPrepareUtil::getPatchData(std::string base, std::vector<LandMark> & really, vector<LandMark> & predict, std::vector<Mat> & train_data, cv::Mat & train_label, std::vector<Mat> & test_data, cv::Mat & test_label, int train_num, int test_num)

{

int length = predict.size();

cout << "train image is :" << length << endl;

int n = 0;

int featureNum = 0;

int cols = 68 * 2;

for (int i = 0; i < length; i++)

{

Mat img = imread(base + predict[i].fileName, CV_LOAD_IMAGE_COLOR);

Mat patch[68];

vector<Rect> rect;

for (int j = 0; j < 68; j++)

{

Rect re;

re.x = predict[i].points[j].x;

re.y = predict[i].points[j].y;

re.x = re.x - 8;

re.y = re.y - 8;

check_xy(re.x, re.y, 96, 96, 16);

re.width = 16;

re.height = 16;

Mat roi = img(re);

patch[j] = roi;

rect.push_back(re);

}

if (i < train_num)

{

if (i == 0)

{

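train_label = Mat::zeros(train_num, cols, CV_32FC1); // Note the difference between the train and trainAuto interfaces; a classification label Mat must be CV_32SC1, but these labels are real-valued offsets, so CV_32FC1 is used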

}

for (int j = 0; j < cols / 2; j++)

{

train_label.at<float>(i, 2 * j) = really[i].points[j].x - rect[j].x;//predict[i].points[j].x;

train_label.at<float>(i, 2 * j + 1) = really[i].points[j].y - rect[j].y;//predict[i].points[j].y;

//cout << train_label.at<float>(i, 2 * j) << endl;

}

/*Mat patch1, patch2;

for (int i = 0; i < 17; i++)

{

for (int j = 0; j < 3; j++)

{

if (j == 0)

{

hconcat(patch[i][j], patch[i][j + 1], patch1);

}

else {

hconcat(patch1, patch[i][j + 1], patch1);

}

}

if (i == 0)

{

patch2 = patch1;

}

else if (i > 0)

{

vconcat(patch1, patch2, patch2);

}

}*/

Mat dst;

merge(patch, 68, dst);

train_data.push_back(dst);

}

else

{

if (i == train_num)

{

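test_label = Mat::zeros(test_num, cols, CV_32FC1); // Note the difference between the train and trainAuto interfaces; a classification label Mat must be CV_32SC1, but these labels are real-valued offsets, so CV_32FC1 is used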

}

for (int j = 0; j < cols / 2; j++)

{

test_label.at<float>(i - train_num, 2 * j) = really[i].points[j].x - predict[i].points[j].x;

test_label.at<float>(i - train_num, 2 * j + 1) = really[i].points[j].y - predict[i].points[j].y;

}

Mat dst;

merge(patch, 68, dst);

test_data.push_back(dst);

}

}

}

svm_parameter param;

void init_param()

{

param.svm_type = EPSILON_SVR;

param.kernel_type = RBF;

param.degree = 3;

param.gamma = 0.01;

param.coef0 = 0;

param.nu = 0.5;

param.cache_size = 1000;

param.C = 20;

param.eps = 1e-6;

param.shrinking = 1;

param.probability = 0;

param.nr_weight = 0;

param.weight_label = NULL;

param.weight = NULL;

}

//void DataPrepareUtil::libSVM_Train(cv::Mat & data, cv::Mat &label, string save_path)

//{

// init_param();

// int rows = data.rows;

// int cols = data.cols;

//

// svm_problem prob;

// prob.l = rows;

//

// svm_node *x_space = new svm_node[(cols + 1)*prob.l]; // storage for the sample features

// prob.x = new svm_node *[prob.l]; // each x[i] points to one sample

// cout << "size :" << sizeof(x_space) << endl;

// prob.y = new double[prob.l];

//

// //libsvm train data prepare.

// for (int i = 0; i < rows; i++)

// {

// for (int j = 0; j < cols + 1; j++)

// {

// if (j == cols)

// {

// x_space[i*(cols + 1) + j].index = -1;

// prob.x[i] = &x_space[i * (cols + 1)];

// prob.y[i] = label.at<float>(i, 0);

// break;

// }

// x_space[i*(cols + 1) + j].index = j + 1;

// x_space[i*(cols + 1) + j].value = data.at<float>(i, j);

// }

// }

// cout << "start train svm." << endl;

// svm_model *model = svm_train(&prob, &param);

//

// cout << "save model" << endl;

// svm_save_model(save_path.c_str(), model);

// cout << "done!" << endl;

//

// delete[] x_space;

// delete[] prob.x;

// delete[] prob.y;

//}
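The commented-out libSVM_Train packs each dense feature row into libsvm's sparse format. Each feature becomes one node with a 1-based `index`, and the row ends with a sentinel node whose index is -1. Below is a self-contained sketch of that packing for a single row. `Node` has the same two fields as libsvm's `svm_node` (`int index; double value;`) but is a local stand-in, and `toSparseRow` is a hypothetical name. The optional `skipZeros` flag is an addition not found in the original. libsvm treats indices that are absent from a row as zero, so omitting zeros gives the same input.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Local stand-in with the same two fields as libsvm's svm_node.
struct Node { int index; double value; };

// Converts one dense feature row to libsvm's sparse layout:
// 1-based indices, terminated by a node with index -1.
// With skipZeros, zero-valued features are omitted (absent indices mean 0).
std::vector<Node> toSparseRow(const std::vector<double>& row, bool skipZeros = false) {
    std::vector<Node> nodes;
    for (std::size_t j = 0; j < row.size(); ++j) {
        if (skipZeros && row[j] == 0.0) continue;
        nodes.push_back({static_cast<int>(j) + 1, row[j]});
    }
    nodes.push_back({-1, 0.0});  // end-of-row sentinel
    return nodes;
}
```

Note that an EPSILON_SVR model in libsvm predicts a single value. For that reason the commented code only trains on column 0 of `label`; regressing all 136 offsets this way would need one model per column.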

//void DataPrepareUtil::libSVM_Predict(std::string mode, cv::Mat & data, cv::Mat &label)

//{

// svm_model* model = svm_load_model(mode.c_str());

// int test_cols = data.cols;

// int test_rows = data.rows;

// svm_node *test_space = new svm_node[test_cols + 1];

// //svm_problem prob_test;

// //libsvm test data prepare.

// int error = 0;

// double t0 = (double)cvGetTickCount();

// for (int i = 0; i < test_rows; i++)

// {

// for (int j = 0; j < test_cols + 1; j++)

// {

// if (j == test_cols)

// {

// test_space[j].index = -1;

// break;

// }

// test_space[j].index = j + 1;

// test_space[j].value = data.at<float>(i, j);

// }

// int d = svm_predict(model, test_space);

// if (d != label.at<float>(i, 0))

// {

// cout << "predict is :" << d << " really is :" << label.at<float>(i, 0) << endl;

// error++;

// }

// }

// double t1 = (double)cvGetTickCount();

// cout << "average time is: " << ((t1 - t0) / ((double)cvGetTickFrequency() * 1000 * 1000))*1000.0 / test_rows << "ms" << endl;

// cout << "accuracy is :" << (float)(test_rows - error) / test_rows << endl;

// delete[] test_space;

//}

If you would like to use this method, please contact: [email protected]

An introduction to the development of face alignment:

https://blog.csdn.net/chaipp0607/article/details/78836640