《利用python进行数据分析》第二版 第14章-数据分析示例 学习笔记1

文章目录

- 一、从Bitly获取1.USA.gov数据

-

- 纯python下对时区进行计数

- 利用pandas对时区进行计数

- 二、MovieLens 1M数据集

-

- 测量评分分歧

- 三、美国1880~2010年的婴儿名字

-

- 分析名字趋势

-

- 计量命名多样性的增加

- “最后一个字母”革命

- 男孩名字变成女孩名字(以及反向)

- 四、美国农业部食品数据库

- 五、2012年联邦选举委员会数据库

-

- 按职业和雇主的捐献统计

- 捐赠金额分桶

- 按州进行捐赠统计

import numpy as np

import pandas as pd

import os

import matplotlib.pyplot as plt

from numpy.random import randn

np.random.seed(123)

一、从Bitly获取1.USA.gov数据

2011 年,短服务商Bitly与美国政府网站 USA.gov 合作,提供从以. gov/. mil结尾的短网址的用户收集的匿名数据。以每小时快照为例,文件中各行的格式为 JSON(即 JavaScript Object Notation,一种常用的 Web 数据格式),该数据集共有十八个维度。若只读取某个文件中的第一行,所看到的结果如下:

path = 'datasets/bitly_usagov/example.txt'

open(path).readline()

'''

'{ "a": "Mozilla\\/5.0 (Windows NT 6.1; WOW64) AppleWebKit\\/535.11 (KHTML, like Gecko) Chrome\\/17.0.963.78 Safari\\/535.11", "c": "US", "nk": 1, "tz": "America\\/New_York", "gr": "MA", "g": "A6qOVH", "h": "wfLQtf", "l": "orofrog", "al": "en-US,en;q=0.8", "hh": "1.usa.gov", "r": "http:\\/\\/www.facebook.com\\/l\\/7AQEFzjSi\\/1.usa.gov\\/wfLQtf", "u": "http:\\/\\/www.ncbi.nlm.nih.gov\\/pubmed\\/22415991", "t": 1331923247, "hc": 1331822918, "cy": "Danvers", "ll": [ 42.576698, -70.954903 ] }\n'

'''

# 通过json.loads() 将JSON字符串逐行加载 转换成Python形式,这里为Python字典对象

import json

path = 'datasets/bitly_usagov/example.txt'

records = [json.loads(line) for line in open(path, encoding='utf-8')]

# 查看加载的数据的第一行

records[0]

'''

{'a': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.78 Safari/535.11',

'c': 'US',

'nk': 1,

'tz': 'America/New_York',

'gr': 'MA',

'g': 'A6qOVH',

'h': 'wfLQtf',

'l': 'orofrog',

'al': 'en-US,en;q=0.8',

'hh': '1.usa.gov',

'r': 'http://www.facebook.com/l/7AQEFzjSi/1.usa.gov/wfLQtf',

'u': 'http://www.ncbi.nlm.nih.gov/pubmed/22415991',

't': 1331923247,

'hc': 1331822918,

'cy': 'Danvers',

'll': [42.576698, -70.954903]}

'''

纯python下对时区进行计数

找到数据集中最常出现的时区(tz字段)

# 用列表推导式提取时区列表

# 由于并不是所有的记录都有tz时区数据,故会报错

time_zones = [rec['tz'] for rec in records]

'''

---------------------------------------------------------------------------

KeyError Traceback (most recent call last)

in

----> 1 time_zones = [rec['tz'] for rec in records]

in (.0)

----> 1 time_zones = [rec['tz'] for rec in records]

KeyError: 'tz'

'''

# 处理以上报错,在列表推导式结尾添加条件

# 但却发现有些时区是空字符串,这些其实也可以过滤掉(这里不做此项处理)

time_zones = [rec['tz'] for rec in records if 'tz' in rec]

time_zones[:10]

'''

['America/New_York',

'America/Denver',

'America/New_York',

'America/Sao_Paulo',

'America/New_York',

'America/New_York',

'Europe/Warsaw',

'',

'',

'']

'''

纯python下通过定义函数来实现计数

# 定义函数,在遍历时区时用字典来存储计数

def get_counts(sequence):

counts = {}

for x in sequence:

if x in counts:

counts[x] += 1

else:

counts[x] = 1

return counts

# 以上函数的另一种实现方式

# defaultdict()的用法

from collections import defaultdict

def get_counts2(sequence):

counts = defaultdict(int) # 值将会初始化为0

for x in sequence:

counts[x] += 1

return counts

# 传递time_zones列表给刚刚的函数,得到字典

counts = get_counts(time_zones)

# 查看tz为'America/New_York'的计数

counts['America/New_York']

'''1251'''

# 查看tz字段非缺失值的总的计数,含空值

len(time_zones)

'''3440'''

# 定义函数,获取排名前10的时区及其计数

def top_counts(count_dict, n=10):

value_key_pairs = [(count, tz) for tz, count in count_dict.items()]

value_key_pairs.sort()

return value_key_pairs[-n:]

# 传递存储时区和计数的字典给刚刚的函数,即可得到最常出现的前10个时区

top_counts(counts)

'''

[(33, 'America/Sao_Paulo'),

(35, 'Europe/Madrid'),

(36, 'Pacific/Honolulu'),

(37, 'Asia/Tokyo'),

(74, 'Europe/London'),

(191, 'America/Denver'),

(382, 'America/Los_Angeles'),

(400, 'America/Chicago'),

(521, ''),

(1251, 'America/New_York')]

'''

纯python下利用标准库collections.Counter()类实现计数

from collections import Counter

# 传递时区列表time_zones给Counter()

counts = Counter(time_zones)

counts.most_common(10)

'''

[('America/New_York', 1251),

('', 521),

('America/Chicago', 400),

('America/Los_Angeles', 382),

('America/Denver', 191),

('Europe/London', 74),

('Asia/Tokyo', 37),

('Pacific/Honolulu', 36),

('Europe/Madrid', 35),

('America/Sao_Paulo', 33)]

'''

利用pandas对时区进行计数

# 将原始记录的列表传递给pd.DataFrame()生成DataFrame

frame = pd.DataFrame(records)

frame.info()

'''

RangeIndex: 3560 entries, 0 to 3559

Data columns (total 18 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 a 3440 non-null object

1 c 2919 non-null object

2 nk 3440 non-null float64

3 tz 3440 non-null object

4 gr 2919 non-null object

5 g 3440 non-null object

6 h 3440 non-null object

7 l 3440 non-null object

8 al 3094 non-null object

9 hh 3440 non-null object

10 r 3440 non-null object

11 u 3440 non-null object

12 t 3440 non-null float64

13 hc 3440 non-null float64

14 cy 2919 non-null object

15 ll 2919 non-null object

16 _heartbeat_ 120 non-null float64

17 kw 93 non-null object

dtypes: float64(4), object(14)

memory usage: 500.8+ KB

'''

# 利用索引切片查看前10行时区数据

frame['tz'][:10]

'''

0 America/New_York

1 America/Denver

2 America/New_York

3 America/Sao_Paulo

4 America/New_York

5 America/New_York

6 Europe/Warsaw

7

8

9

Name: tz, dtype: object

'''

# 对时区进行计数,用Series 的value_counts()

tz_counts = frame['tz'].value_counts()

tz_counts[:10]

'''

America/New_York 1251

521

America/Chicago 400

America/Los_Angeles 382

America/Denver 191

Europe/London 74

Asia/Tokyo 37

Pacific/Honolulu 36

Europe/Madrid 35

America/Sao_Paulo 33

Name: tz, dtype: int64

'''

# 处理缺失值

clean_tz = frame['tz'].fillna('Missing')

# 处理空值

clean_tz[clean_tz == ''] = 'Unknown'

tz_counts = clean_tz.value_counts()

tz_counts[:10]

'''

America/New_York 1251

Unknown 521

America/Chicago 400

America/Los_Angeles 382

America/Denver 191

Missing 120

Europe/London 74

Asia/Tokyo 37

Pacific/Honolulu 36

Europe/Madrid 35

Name: tz, dtype: int64

'''

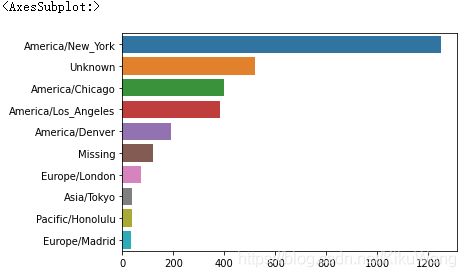

# 对处理后的前10名数据进行可视化

import seaborn as sns

%matplotlib inline

subset = tz_counts[:10]

sns.barplot(y=subset.index, x=subset.values)

# a 字段含有执行 URL 短缩操作的浏览器、设备、应用程序的相关信息

frame['a'][1]

'''

'GoogleMaps/RochesterNY'

'''

frame['a'][50]

'''

'Mozilla/5.0 (Windows NT 5.1; rv:10.0.2) Gecko/20100101 Firefox/10.0.2'

'''

# 选取a字段第52行的数据的前50个字符

frame['a'][51][:50] # long line

'''

'Mozilla/5.0 (Linux; U; Android 2.2.2; en-us; LG-P9'

'''

# 从字段a中解析出感兴趣的信息的做法

# 分离字符串中的第一个标记(大致对应于浏览器信息)

# x.split()[0]表示将a字段的信息遇到空白就分开,并选取第一个标记

results = pd.Series([x.split()[0] for x in frame.a.dropna()])

results[:5]

'''

0 Mozilla/5.0

1 GoogleMaps/RochesterNY

2 Mozilla/4.0

3 Mozilla/5.0

4 Mozilla/5.0

dtype: object

'''

results.value_counts()[:8]

'''

Mozilla/5.0 2594

Mozilla/4.0 601

GoogleMaps/RochesterNY 121

Opera/9.80 34

TEST_INTERNET_AGENT 24

GoogleProducer 21

Mozilla/6.0 5

BlackBerry8520/5.0.0.681 4

dtype: int64

'''

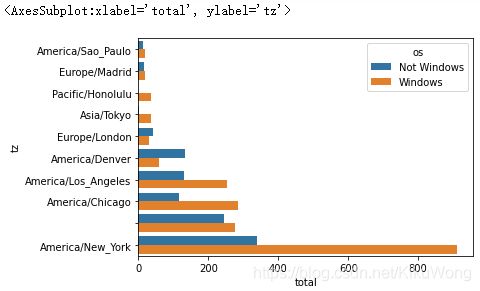

将时区计数多的时区记录分解为Windows和非Windows用户,并统计相同时区下其占比

# 处理缺失的代理字符串,直接将其排除在外

cframe = frame[frame.a.notnull()]

# 找出windows用户,并新添加一列'os'

cframe['os'] = np.where(cframe['a'].str.contains('Windows'), 'Windows', 'Not Windows')

cframe['os'][:5]

'''

0 Windows

1 Not Windows

2 Windows

3 Not Windows

4 Windows

Name: os, dtype: object

'''

# 根据时区列及新生成的操作系统列对数据分组

by_tz_os = cframe.groupby(['tz', 'os'])

# by_tz_os.size()计算每组的大小

agg_counts = by_tz_os.size().unstack().fillna(0)

agg_counts[:10]

| os | Not Windows | Windows |

|---|---|---|

| tz | ||

| 245.0 | 276.0 | |

| Africa/Cairo | 0.0 | 3.0 |

| Africa/Casablanca | 0.0 | 1.0 |

| Africa/Ceuta | 0.0 | 2.0 |

| Africa/Johannesburg | 0.0 | 1.0 |

| Africa/Lusaka | 0.0 | 1.0 |

| America/Anchorage | 4.0 | 1.0 |

| America/Argentina/Buenos_Aires | 1.0 | 0.0 |

| America/Argentina/Cordoba | 0.0 | 1.0 |

| America/Argentina/Mendoza | 0.0 | 1.0 |

# 得出总体计数最高的时区在原序列中的索引

# agg_counts.sum(axis=1)计算时区总数

# argsort()得出排序后的数据在原序列中的索引

indexer = agg_counts.sum(axis=1).argsort()

indexer[-10:]

'''

tz

Europe/Sofia 35

Europe/Stockholm 78

Europe/Uzhgorod 96

Europe/Vienna 59

Europe/Vilnius 77

Europe/Volgograd 15

Europe/Warsaw 22

Europe/Zurich 12

Pacific/Auckland 0

Pacific/Honolulu 29

dtype: int64

'''

# 用take()方法沿着指定轴返回给定索引处的元素,默认axis=0

count_subset = agg_counts.take(indexer[-10:])

count_subset

| os | Not Windows | Windows |

|---|---|---|

| tz | ||

| America/Sao_Paulo | 13.0 | 20.0 |

| Europe/Madrid | 16.0 | 19.0 |

| Pacific/Honolulu | 0.0 | 36.0 |

| Asia/Tokyo | 2.0 | 35.0 |

| Europe/London | 43.0 | 31.0 |

| America/Denver | 132.0 | 59.0 |

| America/Los_Angeles | 130.0 | 252.0 |

| America/Chicago | 115.0 | 285.0 |

| 245.0 | 276.0 | |

| America/New_York | 339.0 | 912.0 |

# 可以实现上述结果,但是返回的数据不是原序列中的格式

agg_counts.sum(1).nlargest(10)

'''

tz

America/New_York 1251.0

521.0

America/Chicago 400.0

America/Los_Angeles 382.0

America/Denver 191.0

Europe/London 74.0

Asia/Tokyo 37.0

Pacific/Honolulu 36.0

Europe/Madrid 35.0

America/Sao_Paulo 33.0

dtype: float64

'''

# 对绘图数据重新排列

count_subset = count_subset.stack()

count_subset

'''

tz os

America/Sao_Paulo Not Windows 13.0

Windows 20.0

Europe/Madrid Not Windows 16.0

Windows 19.0

Pacific/Honolulu Not Windows 0.0

Windows 36.0

Asia/Tokyo Not Windows 2.0

Windows 35.0

Europe/London Not Windows 43.0

Windows 31.0

America/Denver Not Windows 132.0

Windows 59.0

America/Los_Angeles Not Windows 130.0

Windows 252.0

America/Chicago Not Windows 115.0

Windows 285.0

Not Windows 245.0

Windows 276.0

America/New_York Not Windows 339.0

Windows 912.0

dtype: float64

'''

# 给列取名为'total',因为此时前面的为层次化索引

count_subset.name = 'total'

# 剔除层次化索引

count_subset = count_subset.reset_index()

count_subset[:10]

| tz | os | total | |

|---|---|---|---|

| 0 | America/Sao_Paulo | Not Windows | 13.0 |

| 1 | America/Sao_Paulo | Windows | 20.0 |

| 2 | Europe/Madrid | Not Windows | 16.0 |

| 3 | Europe/Madrid | Windows | 19.0 |

| 4 | Pacific/Honolulu | Not Windows | 0.0 |

| 5 | Pacific/Honolulu | Windows | 36.0 |

| 6 | Asia/Tokyo | Not Windows | 2.0 |

| 7 | Asia/Tokyo | Windows | 35.0 |

| 8 | Europe/London | Not Windows | 43.0 |

| 9 | Europe/London | Windows | 31.0 |

# 每个时区分组中,windows用户和非windows用户的数量

sns.barplot(x='total', y='tz', hue='os', data=count_subset)

# 定义函数,计算按时区分组中windows用户和非windows用户的比例;即将组百分比归一化为1

def norm_total(group):

group['normed_total'] = group.total / group.total.sum()

return group

results = count_subset.groupby('tz').apply(norm_total)

sns.barplot(x='normed_total', y='tz', hue='os', data=results)

# 以下也可用于将组百分比归一化为1的处理

g = count_subset.groupby('tz')

results2 = count_subset.total / g.total.transform('sum')

# 以下也可用于将组百分比归一化为1的处理

g = count_subset.groupby('tz')

results2 = count_subset.total / g.total.transform('sum')

二、MovieLens 1M数据集

GroupLens实验室(http://www.grouplens.org/node/73)提供了一些从MovieLens用户那收集的20世纪90年末到21世纪初的电影评分数据集。

这些数据提供了电影评分、电影元数据(风格类型和年代)、观众数据(年龄、邮编、性别和职业等)。这些数据通常会用于基于机器学习算法的推荐系统开发。虽然在本节不会详细介绍机器学习技术,但会告诉你如何将这些数据集切片并切成满足实际需要的形式。

MovieLens 1M数据集含有来自6000名用户对4000部电影的100万条评分。分为三个表:评分、用户信息和电影信息。

从ZIP文件提取数据后,可通过’pd.read_table()'将各个表分别加载到一个pandas DataFrame对象中

# 让展示的内容少一点

pd.options.display.max_rows = 10

# 加载用户信息数据

unames = ['user_id', 'gender', 'age', 'occupation', 'zip']

users = pd.read_table('datasets/movielens/users.dat', sep='::',

header=None, names=unames, engine='python')

# 加载评分信息数据

rnames = ['user_id', 'movie_id', 'rating', 'timestamp']

ratings = pd.read_table('datasets/movielens/ratings.dat', sep='::',

header=None, names=rnames, engine='python')

# 加载电影信息数据

mnames = ['movie_id', 'title', 'genres']

movies = pd.read_table('datasets/movielens/movies.dat', sep='::',

header=None, names=mnames, engine='python')

用切片查看加载的数据

users[:5]

# 结果集中'age'列被分组后用整数编码、'occupation'列被整数编码

| user_id | gender | age | occupation | zip | |

|---|---|---|---|---|---|

| 0 | 1 | F | 1 | 10 | 48067 |

| 1 | 2 | M | 56 | 16 | 70072 |

| 2 | 3 | M | 25 | 15 | 55117 |

| 3 | 4 | M | 45 | 7 | 02460 |

| 4 | 5 | M | 25 | 20 | 55455 |

ratings[:5]

| user_id | movie_id | rating | timestamp | |

|---|---|---|---|---|

| 0 | 1 | 1193 | 5 | 978300760 |

| 1 | 1 | 661 | 3 | 978302109 |

| 2 | 1 | 914 | 3 | 978301968 |

| 3 | 1 | 3408 | 4 | 978300275 |

| 4 | 1 | 2355 | 5 | 978824291 |

movies[:5]

| movie_id | title | genres | |

|---|---|---|---|

| 0 | 1 | Toy Story (1995) | Animation|Children’s|Comedy |

| 1 | 2 | Jumanji (1995) | Adventure|Children’s|Fantasy |

| 2 | 3 | Grumpier Old Men (1995) | Comedy|Romance |

| 3 | 4 | Waiting to Exhale (1995) | Comedy|Drama |

| 4 | 5 | Father of the Bride Part II (1995) | Comedy |

# 将所有表合并成单个表

# pandas的pd.merge()会根据重叠名称推断那些列用作合并的(连接)键位

data = pd.merge(pd.merge(ratings, users), movies)

data

| user_id | movie_id | rating | timestamp | gender | age | occupation | zip | title | genres | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 1193 | 5 | 978300760 | F | 1 | 10 | 48067 | One Flew Over the Cuckoo’s Nest (1975) | Drama |

| 1 | 2 | 1193 | 5 | 978298413 | M | 56 | 16 | 70072 | One Flew Over the Cuckoo’s Nest (1975) | Drama |

| 2 | 12 | 1193 | 4 | 978220179 | M | 25 | 12 | 32793 | One Flew Over the Cuckoo’s Nest (1975) | Drama |

| 3 | 15 | 1193 | 4 | 978199279 | M | 25 | 7 | 22903 | One Flew Over the Cuckoo’s Nest (1975) | Drama |

| 4 | 17 | 1193 | 5 | 978158471 | M | 50 | 1 | 95350 | One Flew Over the Cuckoo’s Nest (1975) | Drama |

| … | … | … | … | … | … | … | … | … | … | … |

| 1000204 | 5949 | 2198 | 5 | 958846401 | M | 18 | 17 | 47901 | Modulations (1998) | Documentary |

| 1000205 | 5675 | 2703 | 3 | 976029116 | M | 35 | 14 | 30030 | Broken Vessels (1998) | Drama |

| 1000206 | 5780 | 2845 | 1 | 958153068 | M | 18 | 17 | 92886 | White Boys (1999) | Drama |

| 1000207 | 5851 | 3607 | 5 | 957756608 | F | 18 | 20 | 55410 | One Little Indian (1973) | Comedy|Drama|Western |

| 1000208 | 5938 | 2909 | 4 | 957273353 | M | 25 | 1 | 35401 | Five Wives, Three Secretaries and Me (1998) | Documentary |

1000209 rows × 10 columns

data.iloc[0]

'''

user_id 1

movie_id 1193

rating 5

timestamp 978300760

gender F

age 1

occupation 10

zip 48067

title One Flew Over the Cuckoo's Nest (1975)

genres Drama

Name: 0, dtype: object

'''

获得按性别分级的每部电影的平均电影评分 mean_ratings

# 用df.pivot_table()方法,获得按性别分级的每部电影的平均电影评分

mean_ratings = data.pivot_table('rating', index='title',

columns='gender', aggfunc='mean')

mean_ratings[:5]

| gender | F | M |

|---|---|---|

| title | ||

| $1,000,000 Duck (1971) | 3.375000 | 2.761905 |

| 'Night Mother (1986) | 3.388889 | 3.352941 |

| 'Til There Was You (1997) | 2.675676 | 2.733333 |

| 'burbs, The (1989) | 2.793478 | 2.962085 |

| …And Justice for All (1979) | 3.828571 | 3.689024 |

为了从mean_ratings中 选出不少于250个评分的电影,需先获得相应的电影的索引:

# 先按标题对数据进行分组,用size()为每个标题获取一个元素是各分组大小的Series

ratings_by_title = data.groupby('title').size()

ratings_by_title[:10]

'''

title

$1,000,000 Duck (1971) 37

'Night Mother (1986) 70

'Til There Was You (1997) 52

'burbs, The (1989) 303

...And Justice for All (1979) 199

1-900 (1994) 2

10 Things I Hate About You (1999) 700

101 Dalmatians (1961) 565

101 Dalmatians (1996) 364

12 Angry Men (1957) 616

dtype: int64

'''

# 获取不少于250个评分的电影的索引

active_titles = ratings_by_title.index[ratings_by_title >= 250]

active_titles

'''

Index([''burbs, The (1989)', '10 Things I Hate About You (1999)',

'101 Dalmatians (1961)', '101 Dalmatians (1996)', '12 Angry Men (1957)',

'13th Warrior, The (1999)', '2 Days in the Valley (1996)',

'20,000 Leagues Under the Sea (1954)', '2001: A Space Odyssey (1968)',

'2010 (1984)',

...

'X-Men (2000)', 'Year of Living Dangerously (1982)',

'Yellow Submarine (1968)', 'You've Got Mail (1998)',

'Young Frankenstein (1974)', 'Young Guns (1988)',

'Young Guns II (1990)', 'Young Sherlock Holmes (1985)',

'Zero Effect (1998)', 'eXistenZ (1999)'],

dtype='object', name='title', length=1216)

'''

# 从mean_ratings中 选出不少于250个评分的电影

mean_ratings = mean_ratings.loc[active_titles]

mean_ratings

| gender | F | M |

|---|---|---|

| title | ||

| 'burbs, The (1989) | 2.793478 | 2.962085 |

| 10 Things I Hate About You (1999) | 3.646552 | 3.311966 |

| 101 Dalmatians (1961) | 3.791444 | 3.500000 |

| 101 Dalmatians (1996) | 3.240000 | 2.911215 |

| 12 Angry Men (1957) | 4.184397 | 4.328421 |

| … | … | … |

| Young Guns (1988) | 3.371795 | 3.425620 |

| Young Guns II (1990) | 2.934783 | 2.904025 |

| Young Sherlock Holmes (1985) | 3.514706 | 3.363344 |

| Zero Effect (1998) | 3.864407 | 3.723140 |

| eXistenZ (1999) | 3.098592 | 3.289086 |

1216 rows × 2 columns

# 从 不少于250个评分的电影mean_ratings 中获得女性观众的top电影

top_female_ratings = mean_ratings.sort_values(by='F', ascending=False)

top_female_ratings[:10]

| gender | F | M |

|---|---|---|

| title | ||

| Close Shave, A (1995) | 4.644444 | 4.473795 |

| Wrong Trousers, The (1993) | 4.588235 | 4.478261 |

| Sunset Blvd. (a.k.a. Sunset Boulevard) (1950) | 4.572650 | 4.464589 |

| Wallace & Gromit: The Best of Aardman Animation (1996) | 4.563107 | 4.385075 |

| Schindler’s List (1993) | 4.562602 | 4.491415 |

| Shawshank Redemption, The (1994) | 4.539075 | 4.560625 |

| Grand Day Out, A (1992) | 4.537879 | 4.293255 |

| To Kill a Mockingbird (1962) | 4.536667 | 4.372611 |

| Creature Comforts (1990) | 4.513889 | 4.272277 |

| Usual Suspects, The (1995) | 4.513317 | 4.518248 |

测量评分分歧

任务一:找到男性和女性观众之间最具分歧性的电影。

# 向 不少于250个评分的电影mean_ratings 中添加一列'diff'

# 再按'diff'排序产生评分差异最大的电影

mean_ratings['diff'] = mean_ratings['M'] - mean_ratings['F']

sorted_by_diff = mean_ratings.sort_values(by='diff')

# 相比男性,女性首选的电影

sorted_by_diff[:10]

| gender | F | M | diff |

|---|---|---|---|

| title | |||

| Dirty Dancing (1987) | 3.790378 | 2.959596 | -0.830782 |

| Jumpin’ Jack Flash (1986) | 3.254717 | 2.578358 | -0.676359 |

| Grease (1978) | 3.975265 | 3.367041 | -0.608224 |

| Little Women (1994) | 3.870588 | 3.321739 | -0.548849 |

| Steel Magnolias (1989) | 3.901734 | 3.365957 | -0.535777 |

| Anastasia (1997) | 3.800000 | 3.281609 | -0.518391 |

| Rocky Horror Picture Show, The (1975) | 3.673016 | 3.160131 | -0.512885 |

| Color Purple, The (1985) | 4.158192 | 3.659341 | -0.498851 |

| Age of Innocence, The (1993) | 3.827068 | 3.339506 | -0.487561 |

| Free Willy (1993) | 2.921348 | 2.438776 | -0.482573 |

# 转换行的顺序,即对行倒叙, 选取前10行,获得相比女性男性首选的电影

sorted_by_diff[::-1][:10]

| gender | F | M | diff |

|---|---|---|---|

| title | |||

| Good, The Bad and The Ugly, The (1966) | 3.494949 | 4.221300 | 0.726351 |

| Kentucky Fried Movie, The (1977) | 2.878788 | 3.555147 | 0.676359 |

| Dumb & Dumber (1994) | 2.697987 | 3.336595 | 0.638608 |

| Longest Day, The (1962) | 3.411765 | 4.031447 | 0.619682 |

| Cable Guy, The (1996) | 2.250000 | 2.863787 | 0.613787 |

| Evil Dead II (Dead By Dawn) (1987) | 3.297297 | 3.909283 | 0.611985 |

| Hidden, The (1987) | 3.137931 | 3.745098 | 0.607167 |

| Rocky III (1982) | 2.361702 | 2.943503 | 0.581801 |

| Caddyshack (1980) | 3.396135 | 3.969737 | 0.573602 |

| For a Few Dollars More (1965) | 3.409091 | 3.953795 | 0.544704 |

任务二:获得不依赖于性别而再观众中引起最大异议的电影

# 异议通过评分的方差/标准差来衡量

# 计算按电影标题分组的评分标准差

rating_std_by_title = data.groupby('title')['rating'].std()

# 将 不少于250个评分的电影的索引active_titles 传递给loc

# 从rating_std_by_title 选取不少于250个评分的电影

rating_std_by_title = rating_std_by_title.loc[active_titles]

# 根据标准差 对不少于250个评分的电影 降序排列 得到观众中引起最大异议的电影

rating_std_by_title.sort_values(ascending=False)[:10]

'''

title

Dumb & Dumber (1994) 1.321333

Blair Witch Project, The (1999) 1.316368

Natural Born Killers (1994) 1.307198

Tank Girl (1995) 1.277695

Rocky Horror Picture Show, The (1975) 1.260177

Eyes Wide Shut (1999) 1.259624

Evita (1996) 1.253631

Billy Madison (1995) 1.249970

Fear and Loathing in Las Vegas (1998) 1.246408

Bicentennial Man (1999) 1.245533

Name: rating, dtype: float64

'''

电影流派genres数据以‘|’分隔,若想按流派进行分析,需要做更多的工作将流派信息转化为更有用的形式。

三、美国1880~2010年的婴儿名字

美国社会保障局提供了从1880年至今的婴儿姓名频率的数据。对这些数据集可进行的分析包括:

- 根据给定名字对婴儿名字随时间的比例进行可视化

- 确定一个名字的相对排位

- 确定每年最受欢迎的名字,或流行程度最低或最高的名字

- 分析名字趋势:元音、辅音、长度、整体多样性、拼写变化、第一个/最后一个字母

- 分析额外的趋势来源:圣经中的名字、名人、人口变化等

Windows中通过’ !type 数据路径 '查看数据:

!type datasets\babynames\yob1880.txt

# 因为数据是用逗号分隔,故可用pd.read_csv()加载数据

import pandas as pd

names1880 = pd.read_csv('datasets/babynames/yob1880.txt',

names=['name', 'sex', 'births'])

names1880

| name | sex | births | |

|---|---|---|---|

| 0 | Mary | F | 7065 |

| 1 | Anna | F | 2604 |

| 2 | Emma | F | 2003 |

| 3 | Elizabeth | F | 1939 |

| 4 | Minnie | F | 1746 |

| … | … | … | … |

| 1995 | Woodie | M | 5 |

| 1996 | Worthy | M | 5 |

| 1997 | Wright | M | 5 |

| 1998 | York | M | 5 |

| 1999 | Zachariah | M | 5 |

2000 rows × 3 columns

# 由于数据集按年份分为多个文件,用pd.concat()将数据集中到一个DataFrame中

# 并增加一个年份字段

years = range(1880, 2011)

pieces = []

columns = ['name', 'sex', 'births']

for year in years:

path = 'datasets/babynames/yob%d.txt' % year

frame = pd.read_csv(path, names=columns)

frame['year'] = year

pieces.append(frame)

# 将所有内容合并成一个DataFrame

# 传递ignore_index=True,忽略原始序列中行索引

names = pd.concat(pieces, ignore_index=True)

names

| name | sex | births | year | |

|---|---|---|---|---|

| 0 | Mary | F | 7065 | 1880 |

| 1 | Anna | F | 2604 | 1880 |

| 2 | Emma | F | 2003 | 1880 |

| 3 | Elizabeth | F | 1939 | 1880 |

| 4 | Minnie | F | 1746 | 1880 |

| … | … | … | … | … |

| 1690779 | Zymaire | M | 5 | 2010 |

| 1690780 | Zyonne | M | 5 | 2010 |

| 1690781 | Zyquarius | M | 5 | 2010 |

| 1690782 | Zyran | M | 5 | 2010 |

| 1690783 | Zzyzx | M | 5 | 2010 |

1690784 rows × 4 columns

# 用pivot_table()聚合年份和性别数据

# 按性别列出每年的出生总和

# groupby也可实现统一的效果

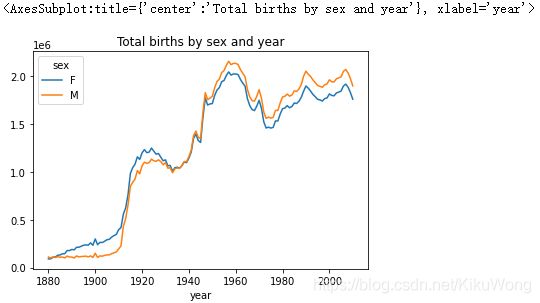

total_births = names.pivot_table('births', index='year',

columns='sex', aggfunc=sum)

total_births.tail()

| sex | F | M |

|---|---|---|

| year | ||

| 2006 | 1896468 | 2050234 |

| 2007 | 1916888 | 2069242 |

| 2008 | 1883645 | 2032310 |

| 2009 | 1827643 | 1973359 |

| 2010 | 1759010 | 1898382 |

total_births.plot(title='Total births by sex and year')

# 增加prop列,给出每个婴儿名字相对于出生总数的比例

def add_prop(group):

group['prop'] = group.births / group.births.sum()

return group

# 按年份和性别对数据进行分组,然后将新列添加到每个组

names = names.groupby(['year', 'sex']).apply(add_prop)

names

| name | sex | births | year | prop | |

|---|---|---|---|---|---|

| 0 | Mary | F | 7065 | 1880 | 0.077643 |

| 1 | Anna | F | 2604 | 1880 | 0.028618 |

| 2 | Emma | F | 2003 | 1880 | 0.022013 |

| 3 | Elizabeth | F | 1939 | 1880 | 0.021309 |

| 4 | Minnie | F | 1746 | 1880 | 0.019188 |

| … | … | … | … | … | … |

| 1690779 | Zymaire | M | 5 | 2010 | 0.000003 |

| 1690780 | Zyonne | M | 5 | 2010 | 0.000003 |

| 1690781 | Zyquarius | M | 5 | 2010 | 0.000003 |

| 1690782 | Zyran | M | 5 | 2010 | 0.000003 |

| 1690783 | Zzyzx | M | 5 | 2010 | 0.000003 |

1690784 rows × 5 columns

# 进行数据完整性检查,验证所有组中prop列总计为1

names.groupby(['year', 'sex']).prop.sum()

'''

year sex

1880 F 1.0

M 1.0

1881 F 1.0

M 1.0

1882 F 1.0

...

2008 M 1.0

2009 F 1.0

M 1.0

2010 F 1.0

M 1.0

Name: prop, Length: 262, dtype: float64

'''

提取每个性别/年份组合的前1000名,之后将在这个数据集上进行分析

# 提取每个性别/年份组和的前1000名

def get_top1000(group):

return group.sort_values(by='births', ascending=False)[:1000]

grouped = names.groupby(['year', 'sex'])

top1000 = grouped.apply(get_top1000)

# 传递drop=True,删除组索引,不需要它

top1000.reset_index(inplace=True, drop=True)

top1000

| name | sex | births | year | prop | |

|---|---|---|---|---|---|

| 0 | Mary | F | 7065 | 1880 | 0.077643 |

| 1 | Anna | F | 2604 | 1880 | 0.028618 |

| 2 | Emma | F | 2003 | 1880 | 0.022013 |

| 3 | Elizabeth | F | 1939 | 1880 | 0.021309 |

| 4 | Minnie | F | 1746 | 1880 | 0.019188 |

| … | … | … | … | … | … |

| 261872 | Camilo | M | 194 | 2010 | 0.000102 |

| 261873 | Destin | M | 194 | 2010 | 0.000102 |

| 261874 | Jaquan | M | 194 | 2010 | 0.000102 |

| 261875 | Jaydan | M | 194 | 2010 | 0.000102 |

| 261876 | Maxton | M | 193 | 2010 | 0.000102 |

261877 rows × 5 columns

分析名字趋势

# 将top1000的数据集分成男孩和女孩

boys = top1000[top1000.sex == 'M']

girls = top1000[top1000.sex == 'F']

# 按年份和名字形成出生总数的数据透视表

total_births = top1000.pivot_table('births', index='year',

columns='name', aggfunc=sum)

total_births.info()

'''

Int64Index: 131 entries, 1880 to 2010

Columns: 6868 entries, Aaden to Zuri

dtypes: float64(6868)

memory usage: 6.9 MB

'''

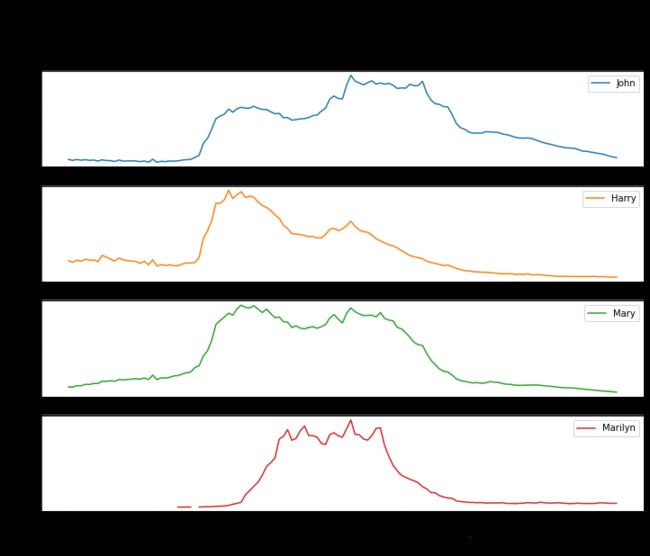

# 绘制部分名字随时间变化的趋势的数据透视表

subset = total_births[['John', 'Harry', 'Mary', 'Marilyn']]

subset.plot(subplots=True, figsize=(12, 10), grid=False,

title="Number of births per year")

'''

array([, ,

, ],

dtype=object)

'''

# 根据图发现这些名字的使用趋势下降了,可以往下分析原因

计量命名多样性的增加

名字使用趋势下降的可能解释为:越来越多的父母为他们的孩子选择常用的名字。我们在数据中对这个假设进行探索和确认。

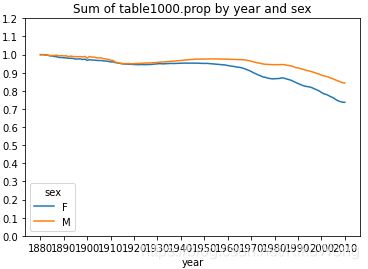

# top1000最受欢迎的名字所涵盖婴儿的出生比例,按性别和年份聚合绘图

table = top1000.pivot_table('prop', index='year',

columns='sex', aggfunc=sum)

table.plot(title='Sum of table1000.prop by year and sex',

yticks=np.linspace(0, 1.2, 13), xticks=range(1880, 2020, 10))

'''

'''

# 根据图可以发现,似乎有越来越多的名字多样性(top1000名字的总比例降低了)

# 不同名字的数量,按最高到最低的受欢迎程度在出生人数最高的50%的名字中排序

# 考虑2010年男孩的名字

df = boys[boys.year == 2010]

df

| name | sex | births | year | prop | |

|---|---|---|---|---|---|

| 260877 | Jacob | M | 21875 | 2010 | 0.011523 |

| 260878 | Ethan | M | 17866 | 2010 | 0.009411 |

| 260879 | Michael | M | 17133 | 2010 | 0.009025 |

| 260880 | Jayden | M | 17030 | 2010 | 0.008971 |

| 260881 | William | M | 16870 | 2010 | 0.008887 |

| … | … | … | … | … | … |

| 261872 | Camilo | M | 194 | 2010 | 0.000102 |

| 261873 | Destin | M | 194 | 2010 | 0.000102 |

| 261874 | Jaquan | M | 194 | 2010 | 0.000102 |

| 261875 | Jaydan | M | 194 | 2010 | 0.000102 |

| 261876 | Maxton | M | 193 | 2010 | 0.000102 |

1000 rows × 5 columns

# 按prop降序排列,弄清楚最受欢迎的前50%的男孩名字的个数

# 获取按prop排序后的累加和

prop_cumsum = df.sort_values(by='prop', ascending=False).prop.cumsum()

prop_cumsum[:10]

'''

260877 0.011523

260878 0.020934

260879 0.029959

260880 0.038930

260881 0.047817

260882 0.056579

260883 0.065155

260884 0.073414

260885 0.081528

260886 0.089621

Name: prop, dtype: float64

'''

# 调用searchsorted()方法,返回累计和中0.5的位置

prop_cumsum.values.searchsorted(0.5)

'''116'''

# 返回值116表示2010年出生人数最高的50%的男孩名字有117个

"""

a.searchsorted(v, side='left', sorter=None)用法:

找到v在a中的位置,并返回其索引值

默认sorter=None时,a必须为升序排序

默认side='left',返回第一个符合条件的元素的索引

等价于np.searchsorted(a, v, side='left', sorter=None)

"""

# 获取1900年,最受欢迎的前50%男孩的名字

df = boys[boys.year == 1900]

in1900 = df.sort_values(by='prop', ascending=False).prop.cumsum()

in1900.values.searchsorted(0.5) + 1

'''25'''

# 对比以上两个结果发现,相比之下2010年这个数量要大得多

# 将上述方法应用于每年/性别分组中,

# 用groupby按这些字段分组,

# 将返回值是每个分组计数值的函数apply到每个分组上

def get_quantile_count(group, q=0.5):

group = group.sort_values(by='prop', ascending=False)

return group.prop.cumsum().values.searchsorted(q) + 1

diversity = top1000.groupby(['year', 'sex']).apply(get_quantile_count)

diversity = diversity.unstack('sex')

diversity.head()

| sex | F | M |

|---|---|---|

| year | ||

| 1880 | 38 | 14 |

| 1881 | 38 | 14 |

| 1882 | 38 | 15 |

| 1883 | 39 | 15 |

| 1884 | 39 | 16 |

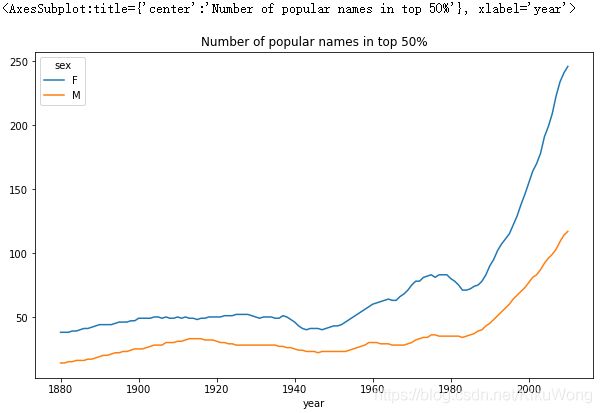

# 绘图:按年份划分的男孩名字与女孩名字多样性指标图

diversity.plot(title="Number of popular names in top 50%")

# 由图可以分析,女孩名字一直比男孩名字更加多样化,且随着时间的推移它们变得越来越多

# 进一步,还可以分析什么促成了多样性,比如增加了其他拼写方法

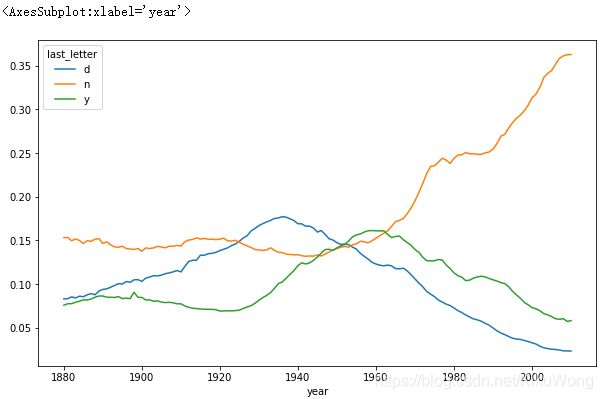

“最后一个字母”革命

# 按年龄、性别和最后一个字母汇总完整数据集中所有出生情况

# 从name 列提取最后一个字母

get_last_letter = lambda x: x[-1]

last_letters = names.name.map(get_last_letter)

last_letters.name = 'last_letter'

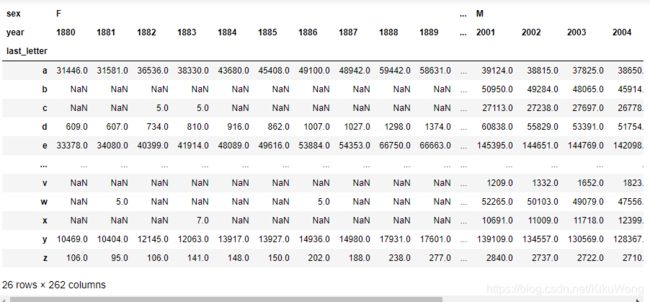

table = names.pivot_table('births', index=last_letters,

columns=['sex', 'year'], aggfunc=sum)

table

# 选出历史上3个有代表性的年份并列出前几行

subtable = table.reindex(columns=[1910, 1960, 2010], level='year')

subtable.head()

| sex | F | M | ||||

|---|---|---|---|---|---|---|

| year | 1910 | 1960 | 2010 | 1910 | 1960 | 2010 |

| last_letter | ||||||

| a | 108376.0 | 691247.0 | 670605.0 | 977.0 | 5204.0 | 28438.0 |

| b | NaN | 694.0 | 450.0 | 411.0 | 3912.0 | 38859.0 |

| c | 5.0 | 49.0 | 946.0 | 482.0 | 15476.0 | 23125.0 |

| d | 6750.0 | 3729.0 | 2607.0 | 22111.0 | 262112.0 | 44398.0 |

| e | 133569.0 | 435013.0 | 313833.0 | 28655.0 | 178823.0 | 129012.0 |

# 按出生总数对表格进行归一化处理,计算一个新表格,其中包含每个性别的每个结束字母占总出生数的比例

# 对每一列求总和,即按性别求每年的总数

subtable.sum()

'''

sex year

F 1910 396416.0

1960 2022062.0

2010 1759010.0

M 1910 194198.0

1960 2132588.0

2010 1898382.0

dtype: float64

'''

# 归一化

letter_prop = subtable / subtable.sum()

letter_prop

| sex | F | M | ||||

|---|---|---|---|---|---|---|

| year | 1910 | 1960 | 2010 | 1910 | 1960 | 2010 |

| last_letter | ||||||

| a | 0.273390 | 0.341853 | 0.381240 | 0.005031 | 0.002440 | 0.014980 |

| b | NaN | 0.000343 | 0.000256 | 0.002116 | 0.001834 | 0.020470 |

| c | 0.000013 | 0.000024 | 0.000538 | 0.002482 | 0.007257 | 0.012181 |

| d | 0.017028 | 0.001844 | 0.001482 | 0.113858 | 0.122908 | 0.023387 |

| e | 0.336941 | 0.215133 | 0.178415 | 0.147556 | 0.083853 | 0.067959 |

| … | … | … | … | … | … | … |

| v | NaN | 0.000060 | 0.000117 | 0.000113 | 0.000037 | 0.001434 |

| w | 0.000020 | 0.000031 | 0.001182 | 0.006329 | 0.007711 | 0.016148 |

| x | 0.000015 | 0.000037 | 0.000727 | 0.003965 | 0.001851 | 0.008614 |

| y | 0.110972 | 0.152569 | 0.116828 | 0.077349 | 0.160987 | 0.058168 |

| z | 0.002439 | 0.000659 | 0.000704 | 0.000170 | 0.000184 | 0.001831 |

26 rows × 6 columns

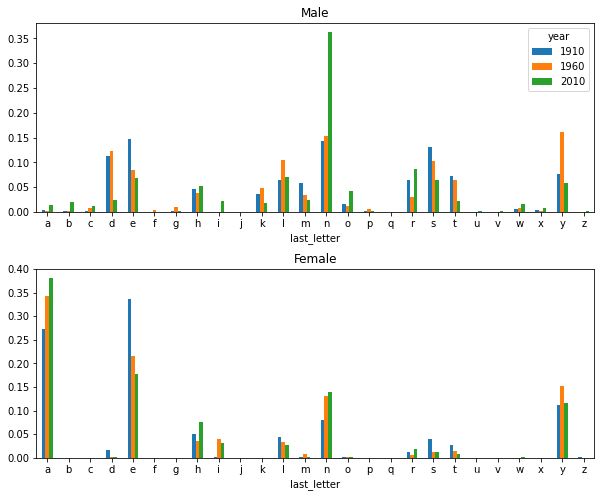

# 绘制按年份划分的每个性别的最后一个字母比例的条形图

fig, axes = plt.subplots(2, 1, figsize=(10, 8))

letter_prop['M'].plot(kind='bar', rot=0, ax=axes[0], title='Male')

letter_prop['F'].plot(kind='bar', rot=0, ax=axes[1], title='Female',legend=False)

# 调整子图间距

plt.subplots_adjust(hspace=0.3)

# 由图发现,以n结尾的男孩名字经历了显著的增长

# 按年份和性别进行标准化,并为男孩名字选择一个字母子集

letter_prop = table / table.sum()

dny_ts = letter_prop.loc[['d', 'n', 'y'], 'M'].T

dny_ts.head()

| last_letter | d | n | y |

|---|---|---|---|

| year | |||

| 1880 | 0.083055 | 0.153213 | 0.075760 |

| 1881 | 0.083247 | 0.153214 | 0.077451 |

| 1882 | 0.085340 | 0.149560 | 0.077537 |

| 1883 | 0.084066 | 0.151646 | 0.079144 |

| 1884 | 0.086120 | 0.149915 | 0.080405 |

# 随着时间推移名字以d/n/y结尾的男孩比例的变化趋势

dny_ts.plot()

男孩名字变成女孩名字(以及反向)

# 回到之前的top1000的DataFrmae,计算数据集中以'lesl'开头的名字列表

all_names = pd.Series(top1000.name.unique())

lesley_like = all_names[all_names.str.lower().str.contains('lesl')]

lesley_like

'''

632 Leslie

2294 Lesley

4262 Leslee

4728 Lesli

6103 Lesly

dtype: object

'''

# 从DataFrame中选出这些名字,并对按名字分组的出生数进行累加来看相关频率

filtered = top1000[top1000.name.isin(lesley_like)]

filtered.groupby('name').births.sum()

'''

name

Leslee 1082

Lesley 35022

Lesli 929

Leslie 370429

Lesly 10067

Name: births, dtype: int64

'''

# 按性别和年份进行聚合,并在年内进行标准化

table = filtered.pivot_table('births', index='year', columns='sex', aggfunc='sum')

table = table.div(table.sum(1), axis=0)

table.tail()

| sex | F | M |

|---|---|---|

| year | ||

| 2006 | 1.0 | NaN |

| 2007 | 1.0 | NaN |

| 2008 | 1.0 | NaN |

| 2009 | 1.0 | NaN |

| 2010 | 1.0 | NaN |

table.plot(style={'M': 'k-', 'F': 'k--'})

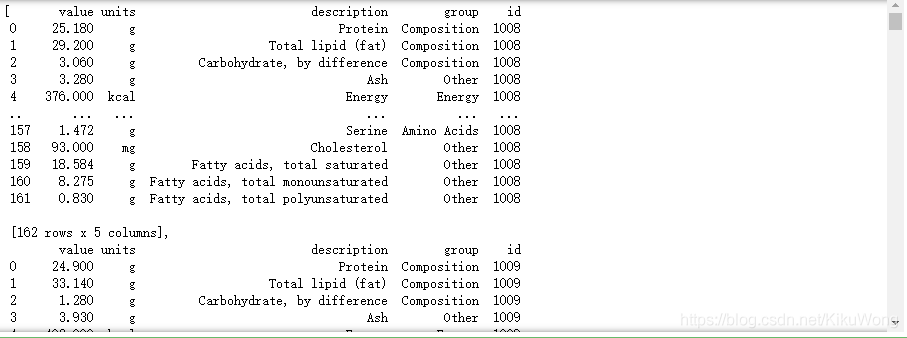

四、美国农业部食品数据库

分析以下原始数据会发现,每一笔数据是个字典,但有些字典的值又是字典所组成的list,如 “portions”、 “nutrients”,故本节主要学习如何对这样的数据进行转换以用于分析。

{

"id": 21441,

"description": "KENTUCKY FRIED CHICKEN, Fried Chicken, EXTRA CRISPY, Wing, meat and skin with breading",

"tags": ["KFC"],

"manufacturer": "Kentucky Fried Chicken",

"group": "Fast Foods",

"portions": [

{

"amount": 1,

"unit": "wing, with skin",

"grams": 68.0

},

...

],

"nutrients": [

{

"value": 20.8,

"units": "g",

"description": "Protein",

"group": "Composition"

},

...

]

}

# 用Python内置的json模块加载数据

import json

db = json.load(open('datasets/usda_food/database.json'))

len(db)

'''6636'''

db[0].keys()

'''

dict_keys(['id', 'description', 'tags', 'manufacturer', 'group', 'portions', 'nutrients'])

'''

# 查看第1笔数据中键nutrients的值的第一个元素

db[0]['nutrients'][0]

'''

{'value': 25.18,

'units': 'g',

'description': 'Protein',

'group': 'Composition'}

'''

nutrients = pd.DataFrame(db[0]['nutrients'])

nutrients[:7]

| value | units | description | group | |

|---|---|---|---|---|

| 0 | 25.18 | g | Protein | Composition |

| 1 | 29.20 | g | Total lipid (fat) | Composition |

| 2 | 3.06 | g | Carbohydrate, by difference | Composition |

| 3 | 3.28 | g | Ash | Other |

| 4 | 376.00 | kcal | Energy | Energy |

| 5 | 39.28 | g | Water | Composition |

| 6 | 1573.00 | kJ | Energy | Energy |

# 提取指定字段,组成DataFrame

info_keys = ['description', 'group', 'id', 'manufacturer']

info = pd.DataFrame(db, columns=info_keys)

info[:5]

| description | group | id | manufacturer | |

|---|---|---|---|---|

| 0 | Cheese, caraway | Dairy and Egg Products | 1008 | |

| 1 | Cheese, cheddar | Dairy and Egg Products | 1009 | |

| 2 | Cheese, edam | Dairy and Egg Products | 1018 | |

| 3 | Cheese, feta | Dairy and Egg Products | 1019 | |

| 4 | Cheese, mozzarella, part skim milk | Dairy and Egg Products | 1028 |

info.info()

'''

RangeIndex: 6636 entries, 0 to 6635

Data columns (total 4 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 description 6636 non-null object

1 group 6636 non-null object

2 id 6636 non-null int64

3 manufacturer 5195 non-null object

dtypes: int64(1), object(3)

memory usage: 207.5+ KB

'''

# 查看食物分类group的分布情况

pd.value_counts(info.group)[:10]

'''

Vegetables and Vegetable Products 812

Beef Products 618

Baked Products 496

Breakfast Cereals 403

Fast Foods 365

Legumes and Legume Products 365

Lamb, Veal, and Game Products 345

Sweets 341

Pork Products 328

Fruits and Fruit Juices 328

Name: group, dtype: int64

'''

将每种食物的营养元素组成一张大表:

nutrients = []

for rec in db: # rec表示原数据中的第几笔数据

# 将原数据中nutrients的数据的每个列表提取出来组成DataFrame

fnuts = pd.DataFrame(rec['nutrients'])

fnuts['id'] = rec['id'] # 再给每一笔数据添加上相应的id

nutrients.append(fnuts) # 将DataFrame附加到list中

# 查看list的长度是否与原始数据一致

len(nutrients)

'''6636'''

nutrients # 由DataFrame组成的list

# 将这些位于list中的DataFrame通过pd.concat()连接

nutrients = pd.concat(nutrients, ignore_index=True)

nutrients

| value | units | description | group | id | |

|---|---|---|---|---|---|

| 0 | 25.180 | g | Protein | Composition | 1008 |

| 1 | 29.200 | g | Total lipid (fat) | Composition | 1008 |

| 2 | 3.060 | g | Carbohydrate, by difference | Composition | 1008 |

| 3 | 3.280 | g | Ash | Other | 1008 |

| 4 | 376.000 | kcal | Energy | Energy | 1008 |

| … | … | … | … | … | … |

| 389350 | 0.000 | mcg | Vitamin B-12, added | Vitamins | 43546 |

| 389351 | 0.000 | mg | Cholesterol | Other | 43546 |

| 389352 | 0.072 | g | Fatty acids, total saturated | Other | 43546 |

| 389353 | 0.028 | g | Fatty acids, total monounsaturated | Other | 43546 |

| 389354 | 0.041 | g | Fatty acids, total polyunsaturated | Other | 43546 |

389355 rows × 5 columns

# 注意到表中存在重复值,重复的数量

nutrients.duplicated().sum()

'''14179'''

# 删除其中的重复值

nutrients = nutrients.drop_duplicates()

# 对group列和description列,重命名列名

# 因为info和nutrients表中都含有这两个列,故进行重命名便于之后的合并

col_mapping = {'description' : 'food', 'group' : 'fgroup'}

info = info.rename(columns=col_mapping, copy=False)

info.info()

'''

RangeIndex: 6636 entries, 0 to 6635

Data columns (total 4 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 food 6636 non-null object

1 fgroup 6636 non-null object

2 id 6636 non-null int64

3 manufacturer 5195 non-null object

dtypes: int64(1), object(3)

memory usage: 207.5+ KB

'''

col_mapping = {'description' : 'nutrient',

'group' : 'nutgroup'}

nutrients = nutrients.rename(columns=col_mapping, copy=False)

nutrients

| value | units | nutrient | nutgroup | id | |

|---|---|---|---|---|---|

| 0 | 25.180 | g | Protein | Composition | 1008 |

| 1 | 29.200 | g | Total lipid (fat) | Composition | 1008 |

| 2 | 3.060 | g | Carbohydrate, by difference | Composition | 1008 |

| 3 | 3.280 | g | Ash | Other | 1008 |

| 4 | 376.000 | kcal | Energy | Energy | 1008 |

| … | … | … | … | … | … |

| 389350 | 0.000 | mcg | Vitamin B-12, added | Vitamins | 43546 |

| 389351 | 0.000 | mg | Cholesterol | Other | 43546 |

| 389352 | 0.072 | g | Fatty acids, total saturated | Other | 43546 |

| 389353 | 0.028 | g | Fatty acids, total monounsaturated | Other | 43546 |

| 389354 | 0.041 | g | Fatty acids, total polyunsaturated | Other | 43546 |

375176 rows × 5 columns

# 将info与nutrients表合并

ndata = pd.merge(nutrients, info, on='id', how='outer')

ndata.info()

'''

Int64Index: 375176 entries, 0 to 375175

Data columns (total 8 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 value 375176 non-null float64

1 units 375176 non-null object

2 nutrient 375176 non-null object

3 nutgroup 375176 non-null object

4 id 375176 non-null int64

5 food 375176 non-null object

6 fgroup 375176 non-null object

7 manufacturer 293054 non-null object

dtypes: float64(1), int64(1), object(6)

memory usage: 25.8+ MB

'''

ndata.iloc[30000]

'''

value 0.04

units g

nutrient Glycine

nutgroup Amino Acids

id 6158

food Soup, tomato bisque, canned, condensed

fgroup Soups, Sauces, and Gravies

manufacturer

Name: 30000, dtype: object

'''

ndata

| value | units | nutrient | nutgroup | id | food | fgroup | manufacturer | |

|---|---|---|---|---|---|---|---|---|

| 0 | 25.180 | g | Protein | Composition | 1008 | Cheese, caraway | Dairy and Egg Products | |

| 1 | 29.200 | g | Total lipid (fat) | Composition | 1008 | Cheese, caraway | Dairy and Egg Products | |

| 2 | 3.060 | g | Carbohydrate, by difference | Composition | 1008 | Cheese, caraway | Dairy and Egg Products | |

| 3 | 3.280 | g | Ash | Other | 1008 | Cheese, caraway | Dairy and Egg Products | |

| 4 | 376.000 | kcal | Energy | Energy | 1008 | Cheese, caraway | Dairy and Egg Products | |

| … | … | … | … | … | … | … | … | … |

| 375171 | 0.000 | mcg | Vitamin B-12, added | Vitamins | 43546 | Babyfood, banana no tapioca, strained | Baby Foods | None |

| 375172 | 0.000 | mg | Cholesterol | Other | 43546 | Babyfood, banana no tapioca, strained | Baby Foods | None |

| 375173 | 0.072 | g | Fatty acids, total saturated | Other | 43546 | Babyfood, banana no tapioca, strained | Baby Foods | None |

| 375174 | 0.028 | g | Fatty acids, total monounsaturated | Other | 43546 | Babyfood, banana no tapioca, strained | Baby Foods | None |

| 375175 | 0.041 | g | Fatty acids, total polyunsaturated | Other | 43546 | Babyfood, banana no tapioca, strained | Baby Foods | None |

375176 rows × 8 columns

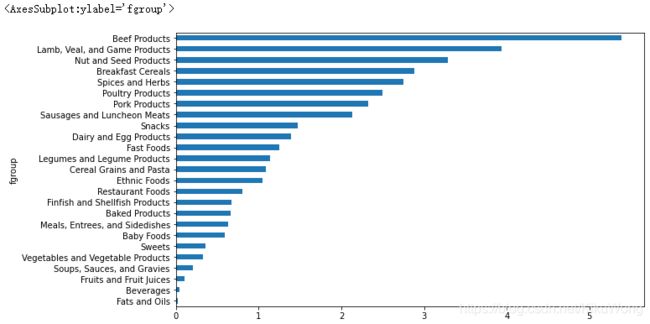

# 根据营养类型和食物组制作一个中位数图

result = ndata.groupby(['nutrient', 'fgroup'])['value'].quantile(0.5)

result

'''

nutrient fgroup

Adjusted Protein Sweets 12.900

Vegetables and Vegetable Products 2.180

Alanine Baby Foods 0.085

Baked Products 0.248

Beef Products 1.550

...

Zinc, Zn Snacks 1.470

Soups, Sauces, and Gravies 0.200

Spices and Herbs 2.750

Sweets 0.360

Vegetables and Vegetable Products 0.330

Name: value, Length: 2246, dtype: float64

'''

result['Zinc, Zn']

'''

fgroup

Baby Foods 0.590

Baked Products 0.660

Beef Products 5.390

Beverages 0.040

Breakfast Cereals 2.885

...

Snacks 1.470

Soups, Sauces, and Gravies 0.200

Spices and Herbs 2.750

Sweets 0.360

Vegetables and Vegetable Products 0.330

Name: value, Length: 25, dtype: float64

'''

# 营养素锌的食物组的中位数图

result['Zinc, Zn'].sort_values().plot(kind='barh')

找到哪种食物在哪个营养素下有最密集的营养

# 根据营养群和营养素分组

by_nutrient = ndata.groupby(['nutgroup', 'nutrient'])

get_maximum = lambda x: x.loc[x.value.idxmax()]

get_minimum = lambda x: x.loc[x.value.idxmin()]

max_foods = by_nutrient.apply(get_maximum)[['value', 'food']]

max_foods

| value | food | ||

|---|---|---|---|

| nutgroup | nutrient | ||

| Amino Acids | Alanine | 8.009 | Gelatins, dry powder, unsweetened |

| Arginine | 7.436 | Seeds, sesame flour, low-fat | |

| Aspartic acid | 10.203 | Soy protein isolate | |

| Cystine | 1.307 | Seeds, cottonseed flour, low fat (glandless) | |

| Glutamic acid | 17.452 | Soy protein isolate | |

| … | … | … | … |

| Vitamins | Vitamin D2 (ergocalciferol) | 28.100 | Mushrooms, maitake, raw |

| Vitamin D3 (cholecalciferol) | 27.400 | Fish, halibut, Greenland, raw | |

| Vitamin E (alpha-tocopherol) | 149.400 | Oil, wheat germ | |

| Vitamin E, added | 46.550 | Cereals ready-to-eat, GENERAL MILLS, Multi-Gra… | |

| Vitamin K (phylloquinone) | 1714.500 | Spices, sage, ground |

94 rows × 2 columns

# food列只取前50个

max_foods.food = max_foods.food.str[:50]

# 查看氨基酸Amino Acids营养组的食物

max_foods.loc['Amino Acids']['food']

'''

nutrient

Alanine Gelatins, dry powder, unsweetened

Arginine Seeds, sesame flour, low-fat

Aspartic acid Soy protein isolate

Cystine Seeds, cottonseed flour, low fat (glandless)

Glutamic acid Soy protein isolate

Glycine Gelatins, dry powder, unsweetened

Histidine Whale, beluga, meat, dried (Alaska Native)

Hydroxyproline KENTUCKY FRIED CHICKEN, Fried Chicken, ORIGINA...

Isoleucine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Leucine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Lysine Seal, bearded (Oogruk), meat, dried (Alaska Na...

Methionine Fish, cod, Atlantic, dried and salted

Phenylalanine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Proline Gelatins, dry powder, unsweetened

Serine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Threonine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Tryptophan Sea lion, Steller, meat with fat (Alaska Native)

Tyrosine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Valine Soy protein isolate, PROTEIN TECHNOLOGIES INTE...

Name: food, dtype: object

'''

五、2012年联邦选举委员会数据库

本节数据集是有关2012年美国总统大选的贡献的数据,包括捐赠者姓名、职业和雇主、地址和缴费金额等。

fec = pd.read_csv('datasets/fec/P00000001-ALL.csv', low_memory=False)

fec.info()

'''

RangeIndex: 1001731 entries, 0 to 1001730

Data columns (total 16 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 cmte_id 1001731 non-null object

1 cand_id 1001731 non-null object

2 cand_nm 1001731 non-null object

3 contbr_nm 1001731 non-null object

4 contbr_city 1001712 non-null object

5 contbr_st 1001727 non-null object

6 contbr_zip 1001620 non-null object

7 contbr_employer 988002 non-null object

8 contbr_occupation 993301 non-null object

9 contb_receipt_amt 1001731 non-null float64

10 contb_receipt_dt 1001731 non-null object

11 receipt_desc 14166 non-null object

12 memo_cd 92482 non-null object

13 memo_text 97770 non-null object

14 form_tp 1001731 non-null object

15 file_num 1001731 non-null int64

dtypes: float64(1), int64(1), object(14)

memory usage: 122.3+ MB

'''

# 查看其中一笔数据

fec.iloc[123456]

'''

cmte_id C00431445

cand_id P80003338

cand_nm Obama, Barack

contbr_nm ELLMAN, IRA

contbr_city TEMPE

contbr_st AZ

contbr_zip 852816719

contbr_employer ARIZONA STATE UNIVERSITY

contbr_occupation PROFESSOR

contb_receipt_amt 50

contb_receipt_dt 01-DEC-11

receipt_desc NaN

memo_cd NaN

memo_text NaN

form_tp SA17A

file_num 772372

Name: 123456, dtype: object

'''

对这些数据进行切片和切块,提取有关捐助者和竞选捐助模式的统计信息

# 获得所有候选人名单

unique_cands = fec.cand_nm.unique()

unique_cands

'''

array(['Bachmann, Michelle', 'Romney, Mitt', 'Obama, Barack',

"Roemer, Charles E. 'Buddy' III", 'Pawlenty, Timothy',

'Johnson, Gary Earl', 'Paul, Ron', 'Santorum, Rick',

'Cain, Herman', 'Gingrich, Newt', 'McCotter, Thaddeus G',

'Huntsman, Jon', 'Perry, Rick'], dtype=object)

'''

unique_cands[2]

'''

'Obama, Barack'

'''

# 手动加入候选人政党背景信息

parties = {'Bachmann, Michelle': 'Republican',

'Cain, Herman': 'Republican',

'Gingrich, Newt': 'Republican',

'Huntsman, Jon': 'Republican',

'Johnson, Gary Earl': 'Republican',

'McCotter, Thaddeus G': 'Republican',

'Obama, Barack': 'Democrat',

'Paul, Ron': 'Republican',

'Pawlenty, Timothy': 'Republican',

'Perry, Rick': 'Republican',

"Roemer, Charles E. 'Buddy' III": 'Republican',

'Romney, Mitt': 'Republican',

'Santorum, Rick': 'Republican'}

fec.cand_nm[123456:123461]

'''

123456 Obama, Barack

123457 Obama, Barack

123458 Obama, Barack

123459 Obama, Barack

123460 Obama, Barack

Name: cand_nm, dtype: object

'''

fec.cand_nm[123456:123461].map(parties)

'''

123456 Democrat

123457 Democrat

123458 Democrat

123459 Democrat

123460 Democrat

Name: cand_nm, dtype: object

'''

# 在原数据中新增一列表示政党背景

fec['party'] = fec.cand_nm.map(parties)

fec['party'].value_counts()

'''

Democrat 593746

Republican 407985

Name: party, dtype: int64

'''

# 查看捐款金额,发现有退款

(fec.contb_receipt_amt > 0).value_counts()

'''

True 991475

False 10256

Name: contb_receipt_amt, dtype: int64

'''

# 只保留捐款信息,剔除退款信息

fec = fec[fec.contb_receipt_amt > 0]

# 选取对'Obama, Barack', 'Romney, Mitt'的竞选有贡献的数据子集

fec_mrbo = fec[fec.cand_nm.isin(['Obama, Barack', 'Romney, Mitt'])]

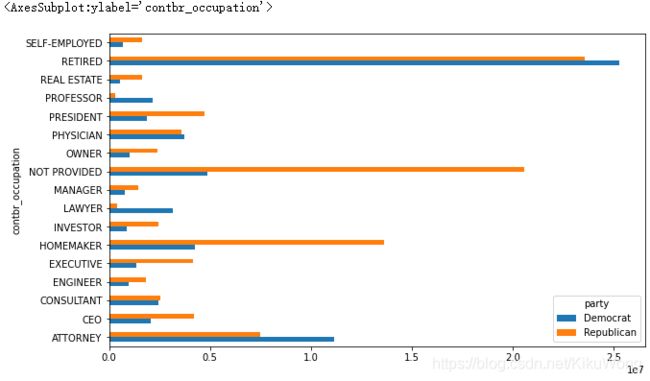

按职业和雇主的捐献统计

# 获得按职业捐献的总数

fec.contbr_occupation.value_counts()[:10]

'''

RETIRED 233990

INFORMATION REQUESTED 35107

ATTORNEY 34286

HOMEMAKER 29931

PHYSICIAN 23432

INFORMATION REQUESTED PER BEST EFFORTS 21138

ENGINEER 14334

TEACHER 13990

CONSULTANT 13273

PROFESSOR 12555

Name: contbr_occupation, dtype: int64

'''

# 将相同的职业类别合并成一条数据

occ_mapping = {

'INFORMATION REQUESTED PER BEST EFFORTS' : 'NOT PROVIDED',

'INFORMATION REQUESTED' : 'NOT PROVIDED',

'INFORMATION REQUESTED (BEST EFFORTS)' : 'NOT PROVIDED',

'C.E.O.': 'CEO'

}

# 如果没有映射,则返回x

f = lambda x: occ_mapping.get(x, x)

fec.contbr_occupation = fec.contbr_occupation.map(f)

# 对雇主职业也进行相同职业合并操作

emp_mapping = {

'INFORMATION REQUESTED PER BEST EFFORTS' : 'NOT PROVIDED',

'INFORMATION REQUESTED' : 'NOT PROVIDED',

'SELF' : 'SELF-EMPLOYED',

'SELF EMPLOYED' : 'SELF-EMPLOYED',

}

# 如果没有映射,则返回x

f = lambda x: emp_mapping.get(x, x)

fec.contbr_employer = fec.contbr_employer.map(f)

# 用pivot_table()按党派和职业聚合数据,再过滤出至少捐赠200万美元的子集

by_occupation = fec.pivot_table('contb_receipt_amt',

index='contbr_occupation',

columns='party', aggfunc='sum')

over_2mm = by_occupation[by_occupation.sum(1) > 2000000]

over_2mm

| party | Democrat | Republican |

|---|---|---|

| contbr_occupation | ||

| ATTORNEY | 11141982.97 | 7.477194e+06 |

| CEO | 2074974.79 | 4.211041e+06 |

| CONSULTANT | 2459912.71 | 2.544725e+06 |

| ENGINEER | 951525.55 | 1.818374e+06 |

| EXECUTIVE | 1355161.05 | 4.138850e+06 |

| HOMEMAKER | 4248875.80 | 1.363428e+07 |

| INVESTOR | 884133.00 | 2.431769e+06 |

| LAWYER | 3160478.87 | 3.912243e+05 |

| MANAGER | 762883.22 | 1.444532e+06 |

| NOT PROVIDED | 4866973.96 | 2.056547e+07 |

| OWNER | 1001567.36 | 2.408287e+06 |

| PHYSICIAN | 3735124.94 | 3.594320e+06 |

| PRESIDENT | 1878509.95 | 4.720924e+06 |

| PROFESSOR | 2165071.08 | 2.967027e+05 |

| REAL ESTATE | 528902.09 | 1.625902e+06 |

| RETIRED | 25305116.38 | 2.356124e+07 |

| SELF-EMPLOYED | 672393.40 | 1.640253e+06 |

over_2mm.plot(kind='barh')

# 如果对捐赠给Obama和Rommeny的顶级捐赠者的职业或顶级公司感兴趣

# 可按候选人名称进行分组

def get_top_amounts(group, key, n=5):

totals = group.groupby(key)['contb_receipt_amt'].sum()

return totals.nlargest(n)

grouped = fec_mrbo.groupby('cand_nm')

# 按职业分类

grouped.apply(get_top_amounts, 'contbr_occupation', n=7)

'''

cand_nm contbr_occupation

Obama, Barack RETIRED 25305116.38

ATTORNEY 11141982.97

INFORMATION REQUESTED 4866973.96

HOMEMAKER 4248875.80

PHYSICIAN 3735124.94

LAWYER 3160478.87

CONSULTANT 2459912.71

Romney, Mitt RETIRED 11508473.59

INFORMATION REQUESTED PER BEST EFFORTS 11396894.84

HOMEMAKER 8147446.22

ATTORNEY 5364718.82

PRESIDENT 2491244.89

EXECUTIVE 2300947.03

C.E.O. 1968386.11

Name: contb_receipt_amt, dtype: float64

'''

# 按受雇机构/雇主分类

grouped.apply(get_top_amounts, 'contbr_employer', n=10)

'''

cand_nm contbr_employer

Obama, Barack RETIRED 22694358.85

SELF-EMPLOYED 17080985.96

NOT EMPLOYED 8586308.70

INFORMATION REQUESTED 5053480.37

HOMEMAKER 2605408.54

SELF 1076531.20

SELF EMPLOYED 469290.00

STUDENT 318831.45

VOLUNTEER 257104.00

MICROSOFT 215585.36

Romney, Mitt INFORMATION REQUESTED PER BEST EFFORTS 12059527.24

RETIRED 11506225.71

HOMEMAKER 8147196.22

SELF-EMPLOYED 7409860.98

STUDENT 496490.94

CREDIT SUISSE 281150.00

MORGAN STANLEY 267266.00

GOLDMAN SACH & CO. 238250.00

BARCLAYS CAPITAL 162750.00

H.I.G. CAPITAL 139500.00

Name: contb_receipt_amt, dtype: float64

'''

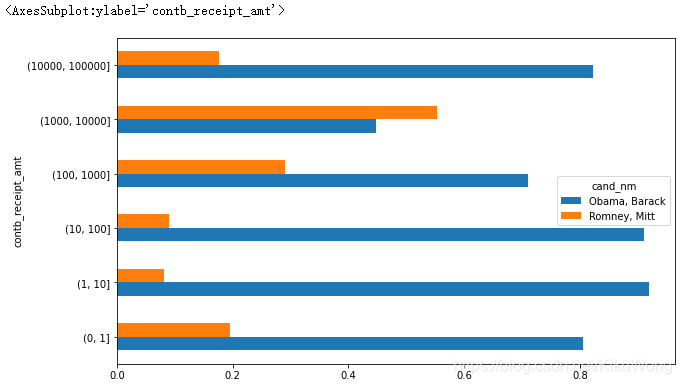

捐赠金额分桶

# 用cut() 将贡献者的数量按贡献大小离散化分桶

bins = np.array([0, 1, 10, 100, 1000, 10000,

100000, 1000000, 10000000])

labels = pd.cut(fec_mrbo.contb_receipt_amt, bins)

labels

'''

411 (10, 100]

412 (100, 1000]

413 (100, 1000]

414 (10, 100]

415 (10, 100]

...

701381 (10, 100]

701382 (100, 1000]

701383 (1, 10]

701384 (10, 100]

701385 (100, 1000]

Name: contb_receipt_amt, Length: 694282, dtype: category

Categories (8, interval[int64]): [(0, 1] < (1, 10] < (10, 100] < (100, 1000] < (1000, 10000] < (10000, 100000] < (100000, 1000000] < (1000000, 10000000]]

'''

# 将Obama和Rommeny的数据按名称和分类标签进行分组,获得捐赠规模的直方图

grouped = fec_mrbo.groupby(['cand_nm', labels])

grouped.size().unstack(0)

| cand_nm | Obama | BarackRomney |

|---|---|---|

| Mittcontb_receipt_amt | ||

| (0, 1] | 493 | 77 |

| (1, 10] | 40070 | 3681 |

| (10, 100] | 372280 | 31853 |

| (100, 1000] | 153991 | 43357 |

| (1000, 10000] | 22284 | 26186 |

| (10000, 100000] | 2 | 1 |

| (100000, 1000000] | 3 | 0 |

| (1000000, 10000000] | 4 | 0 |

# 对捐赠金额求和并在桶内进行归一化处理

bucket_sums = grouped.contb_receipt_amt.sum().unstack(0)

normed_sums = bucket_sums.div(bucket_sums.sum(axis=1), axis=0)

normed_sums

| cand_nm | Obama, Barack | Romney, Mitt |

|---|---|---|

| contb_receipt_amt | ||

| (0, 1] | 0.805182 | 0.194818 |

| (1, 10] | 0.918767 | 0.081233 |

| (10, 100] | 0.910769 | 0.089231 |

| (100, 1000] | 0.710176 | 0.289824 |

| (1000, 10000] | 0.447326 | 0.552674 |

| (10000, 100000] | 0.823120 | 0.176880 |

| (100000, 1000000] | 1.000000 | NaN |

| (1000000, 10000000] | 1.000000 | NaN |

# 不同捐赠规模的候选人收到的捐赠总额的百分比

normed_sums[:-2].plot(kind='barh')

按州进行捐赠统计

# 按照候选人和州进行统计

grouped = fec_mrbo.groupby(['cand_nm', 'contbr_st'])

totals = grouped.contb_receipt_amt.sum().unstack(0).fillna(0)

totals = totals[totals.sum(1) > 100000]

totals[:10]

| cand_nm | Obama, Barack | Romney, Mitt |

|---|---|---|

| contbr_st | ||

| AK | 281840.15 | 86204.24 |

| AL | 543123.48 | 527303.51 |

| AR | 359247.28 | 105556.00 |

| AZ | 1506476.98 | 1888436.23 |

| CA | 23824984.24 | 11237636.60 |

| CO | 2132429.49 | 1506714.12 |

| CT | 2068291.26 | 3499475.45 |

| DC | 4373538.80 | 1025137.50 |

| DE | 336669.14 | 82712.00 |

| FL | 7318178.58 | 8338458.81 |

# 每个候选人按州的捐赠总额的相对百分比

percent = totals.div(totals.sum(1), axis=0)

percent[:10]

'''

cand_nm Obama, Barack Romney, Mitt

contbr_st

AK 0.765778 0.234222

AL 0.507390 0.492610

AR 0.772902 0.227098

AZ 0.443745 0.556255

CA 0.679498 0.320502

CO 0.585970 0.414030

CT 0.371476 0.628524

DC 0.810113 0.189887

DE 0.802776 0.197224

FL 0.467417 0.532583

'''