Face Tracking on Local or RTSP Video Streams with ArcSoft Face Recognition (C++)

[Pinned] Download link for the project solution

Extraction code: demo

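Contents

- I. Preface
  - 1. Article Contents
  - 2. Key Technologies
    - 2.1 C++
    - 2.2 OpenCV
    - 2.3 ArcFace
- II. Main Content
  - 1. Preparation
    - 1.1 Create the Project Solution
    - 1.2 Copy the Required Files into the Workspace
    - 1.3 Configure Paths in the VS2017 Property Pages
    - 1.4 Add the Header Files
  - 2. Tracking All Faces in a Video
    - 2.1 Get the Device Information (for Generating the Offline Activation File)
    - 2.2 Generate the Offline Activation File
    - 2.3 Activate the Device with the License File
    - 2.4 Initialize the Engine
    - 2.5 Key Functions
    - 2.6 Get Frames from a Local Stream
    - 2.7 Process Video Frames in a Loop
    - 2.8 Complete Program
    - 2.9 Results
    - 2.10 Get Frames from an RTSP Stream
  - 3. Tracking and Recognizing a Specified Face
    - 3.1 Get the Feature of the Specified Face
    - 3.2 Feature Comparison
    - 3.3 Complete Program
    - 3.4 Results
- III. Summary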

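I. Preface

1. Article Contents

This article uses C++ and the ArcSoft face recognition SDK to track faces in local video streams or RTSP video streams.

It covers detecting faces in camera frames in real time and tracking multiple faces, as well as comparing the feature of each face in a frame against the face features in a face library, so that a specified target can be tracked.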

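2. Key Technologies

2.1 C++

C++ is often described as a mid-level language; Bjarne Stroustrup began designing and developing it at Bell Labs in 1979. C++ extends and refines the C language with support for object-oriented programming, and it runs well on many platforms, such as Windows, macOS, and the various versions of Unix.

2.2 OpenCV

OpenCV is an open-source, cross-platform computer vision library that runs on Linux, Windows, Android, and macOS. The version used here (2.4.9) provides both a C API and a C++ API, implements many common image-processing and computer-vision algorithms, and is optimized for performance.

2.3 ArcFace

ArcFace is ArcSoft's offline face recognition SDK; this article uses version 4.1.

The SDK supports multiple platforms and languages and provides face detection, face tracking, face comparison, face attribute detection, IR/RGB liveness detection, and image-quality detection.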

二、正文

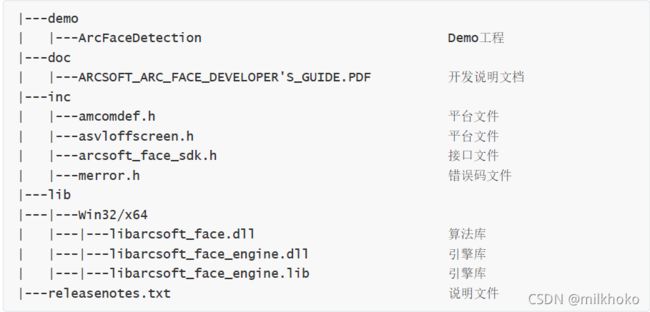

我们使用的是windows版本,直接下载虹软SDK得到一个压缩包,解压缩之后就可以得到我们需要的文件(结构如下):

1. 前期准备

需要注意的是:我们的工程是在32位(x86)环境下进行的!

1.1 新建项目解决方案

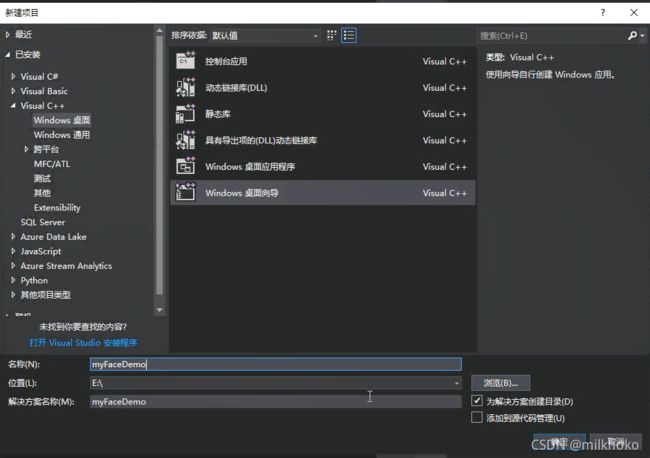

使用VS2017在E盘新建一个控制台工程(对MFC还不熟悉,仅使用控制台作为演示),名为myFaceDemo。

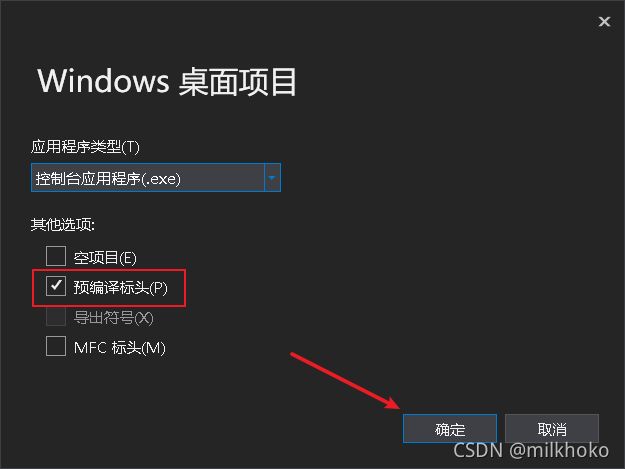

我们这里创建一个预编译头的(或者空项目皆可)解决方案

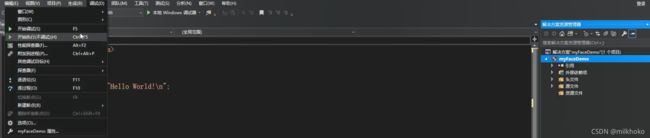

然后先在Debug x86环境下运行一次,使得解决方案生成debug文件夹目录。

1.2 拷贝所需要的文件到工作空间:

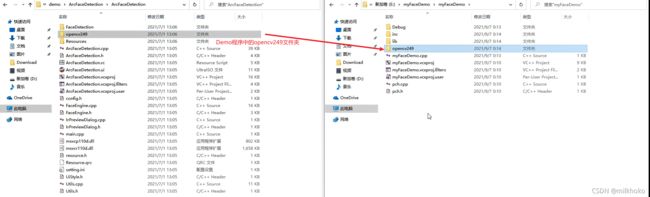

- 将sdk解压包的inc和lib文件夹复制到myFaceDemo解决方案中myFaceDemo.vcxproj文件所在目录:

- 将demo中opencv249文件夹也复制到该目录下:

- 将下图两个文件复制到解决方案生成的debug目录下:

- 将opencv249文件夹按下图路径将.dll文件也复制到debug文件夹下:

- 将sdk解压包中lib文件夹(之前复制过,但是需要将里面的dll文件复制到debug目录下)(如下图):

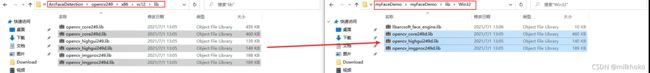

- 将opencv的lib文件复制到myFaceDemo解决方案的lib文件夹下:

- 另外这里需要将6中右图路径lib/win32路径下的所有lib文件复制到lib文件夹,而不是lib/win32文件夹(当然也可以放在win32文件夹下,但是之后添加库的时候路径要选择win32文件夹)

- 这样我们就保证将所需要的dll文件复制到debug文件夹下,将lib文件夹整理到lib文件夹下,将opencv也复制到我们的工作空间(也就是解决方案)中,便于之后我们添加路径。

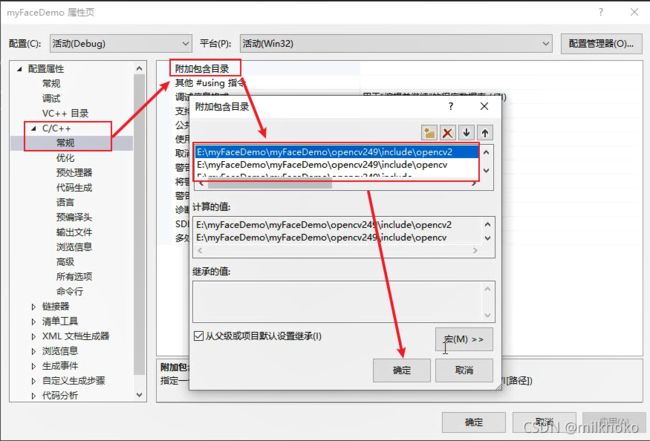

1.3 VS2017属性页配置路径

- 在附加包含目录添加下面的路径

E:\myFaceDemo\myFaceDemo\opencv249\include

E:\myFaceDemo\myFaceDemo\opencv249\include\opencv

E:\myFaceDemo\myFaceDemo\opencv249\include\opencv2

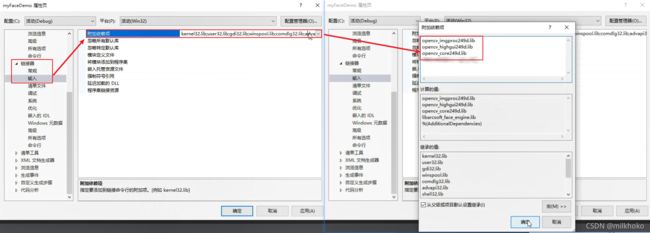

- 在链接器中的附加库目录添加下面的路径

E:\myFaceDemo\myFaceDemo\lib

E:\myFaceDemo\Debug

- 在链接器->输入->附加依赖项中添加下面内容:

opencv_imgproc249d.lib

opencv_highgui249d.lib

opencv_core249d.lib

1.4 Add the Header Files

```cpp
#include "pch.h"
#include
```

After adding the headers above, build and run again. If no errors are reported, the configuration succeeded!

2. 跟踪视频中所有人脸

2.1 获取设备信息(用于生成离线激活文件)

using namespace std;

using namespace cv;

#pragma comment(lib, "libarcsoft_face_engine.lib")

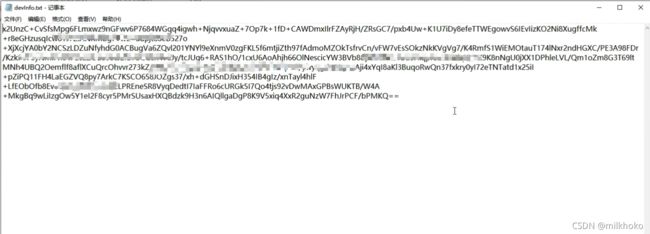

ofstream fout;//需要添加头文件- 我们首先在myFaceDemo.vcxproj所在目录下新建一个名为devInfo.txt的txt文件。

- 在上面的代码中,我们主要包装了一个用于生成设备信息并保存在本地的函数:GetDeviceInfo()

- 该函数调用ASFGetActiveDeviceInfo接口,将设备信息保存在deviceInfo中,然后通过文件操作将其值输出到文件devInfo.txt中。

- 运行上面的程序然后打开devInfo.txt文件会发现保存下来一些内容:

然后我们需要在虹软官网上使用该文件生成离线激活文件(.dat)
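The body of GetDeviceInfo() is not reproduced above. As a minimal sketch of only its file-writing half, assuming the device-information string has already been obtained from ASFGetActiveDeviceInfo, the hypothetical helpers SaveDeviceInfo() and ReadAll() below write that string to a file with std::ofstream and read it back to confirm the write:

```cpp
#include <cassert>
#include <fstream>
#include <iterator>
#include <string>

// Hypothetical helper: write an already-obtained device-info string to `path`,
// replacing any previous content. Returns false if the file cannot be opened
// or the write fails.
bool SaveDeviceInfo(const std::string& deviceInfo, const std::string& path)
{
    std::ofstream fout(path, std::ios::out | std::ios::trunc);
    if (!fout.is_open())
        return false;
    fout << deviceInfo;
    fout.flush();
    return static_cast<bool>(fout);
}

// Hypothetical helper: read a whole file into a string (used to check the write).
std::string ReadAll(const std::string& path)
{
    std::ifstream fin(path);
    return std::string(std::istreambuf_iterator<char>(fin),
                       std::istreambuf_iterator<char>());
}
```

In the real program the string passed in would be whatever ASFGetActiveDeviceInfo returned; any sample text used for testing is only a placeholder.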

2.2 生成离线激活文件

这步操作需要在虹软官网上进行。

- 进入开发者中心,找到下载sdk包的地方,点击下图所示的的查看试用码:

然后我们找到一个待激活的点击离线激活:

- 选择SDK版本(我这里是4.1版本),进入下一步:

- 点击上传设备信息,然后找到我们生成的devInfo.txt文件上传:

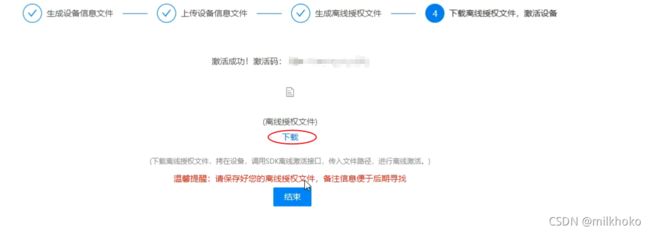

上传之后点击生成离线授权文件:

- 下载授权文件:

- 然后将该文件复制到与设备信息文件devInfo.txt同一目录下,并修改其名称为decActive.dat

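2.3 Activate the Device with the License File

Next, we wrap a function for offline device activation, **devActive()**, and call it from main():

```cpp
void devActive()
{
    char ActiveFileName[] = "decActive.dat"; // character array holding the activation file name
    MRESULT res = ASFOfflineActivation(ActiveFileName); // offline activation
    // "Already activated" and "a valid local activation file exists" also count as success
    if (MOK != res && MERR_ASF_ALREADY_ACTIVATED != res &&
        MERR_ASF_LOCAL_EXIST_USEFUL_ACTIVE_FILE != res)
    {
        cout << "ASFOfflineActivation failed: " << res << endl; // activation failed
    }
    else
        cout << "ASFOfflineActivation success" << endl; // activation succeeded
}
```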

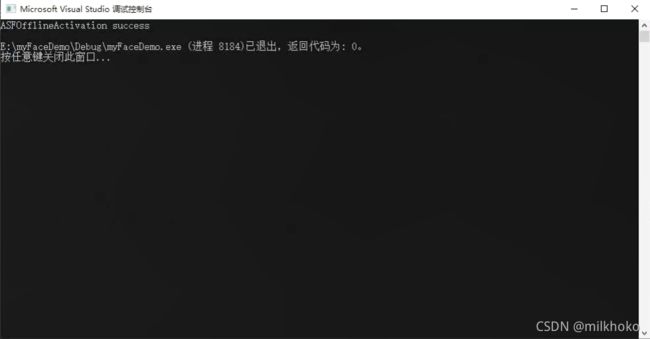

Calling this function from main() produces the output shown in the figure, which means the device was activated successfully!
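ASFOfflineActivation() reports problems only through its return code. A cheap pre-check that can make a missing or misnamed decActive.dat easier to diagnose is sketched below; ActivationFileReady() is a hypothetical helper, not part of the SDK, and it only checks that the file exists and is non-empty, not that the license itself is valid:

```cpp
#include <cassert>
#include <fstream>
#include <string>

// Hypothetical pre-check: confirm the offline activation file exists and is
// non-empty before handing its name to ASFOfflineActivation().
bool ActivationFileReady(const std::string& path)
{
    // Open in binary mode positioned at the end, so tellg() gives the file size.
    std::ifstream fin(path, std::ios::binary | std::ios::ate);
    if (!fin.is_open())
        return false;
    // Treat an empty file as "not ready".
    return static_cast<std::streamoff>(fin.tellg()) > 0;
}
```

It could be called just before devActive(), printing a hint such as "decActive.dat not found next to devInfo.txt" when it returns false.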

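2.4 Initialize the Engine

The engine is initialized with the SDK function ASFInitEngine():

```cpp
MRESULT InitEngine(ASF_DetectMode detectMode) // initialize the engine
{
    m_hEngine = NULL;
    MInt32 mask = 0;
    if (ASF_DETECT_MODE_IMAGE == detectMode)
    {
        // Image mode: additionally enable age and gender
        mask = ASF_FACE_DETECT | ASF_FACERECOGNITION | ASF_AGE | ASF_GENDER | ASF_LIVENESS | ASF_IR_LIVENESS;
    }
    else
    {
        // Feature selection; mask is passed to ASFInitEngine
        mask = ASF_FACE_DETECT | ASF_FACERECOGNITION | ASF_LIVENESS | ASF_IR_LIVENESS;
    }
    // FACENUM: maximum number of faces to detect (a macro defined in the complete program)
    MRESULT res = ASFInitEngine(detectMode, ASF_OP_ALL_OUT, FACENUM, mask, &m_hEngine);
    return res;
}
```

Here we wrap the call as MRESULT InitEngine(ASF_DetectMode detectMode), which lets us choose image mode or video mode:

- detectMode == ASF_DETECT_MODE_IMAGE selects image mode

- detectMode == ASF_DETECT_MODE_VIDEO selects video mode

In main(), the engine m_hEngine can then be initialized with the statements below.

Note that m_hEngine must be defined first (see the complete program):

```cpp
MHandle m_hEngine; // engine handle
```

Then call:

```cpp
InitEngine(ASF_DETECT_MODE_VIDEO);
```

This completes engine initialization. Later, the ArcSoft SDK functions used for recognition and tracking take the initialized engine m_hEngine as an argument. As written, InitEngine() always stores the handle in m_hEngine, so creating the separate image-mode engine m_hImgEngine used in section 3 requires passing the target handle in (or a second, similar function).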
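The mask passed to ASFInitEngine() is a bitwise OR of feature flags, and the image-mode branch simply ORs in two more. The sketch below shows that pattern with hypothetical flag names and values (kFaceDetect and so on); the real ASF_* constants and their values come from the SDK header:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-ins for the SDK's ASF_* feature flags. Only the
// one-bit-per-feature pattern matters here, not the actual values.
enum FeatureFlag : std::int32_t {
    kFaceDetect  = 1 << 0,
    kRecognition = 1 << 1,
    kAge         = 1 << 2,
    kGender      = 1 << 3,
    kLiveness    = 1 << 4,
    kIrLiveness  = 1 << 5,
};

// Mirrors the branch in InitEngine(): image mode also enables age and gender.
std::int32_t BuildMask(bool imageMode)
{
    std::int32_t mask = kFaceDetect | kRecognition | kLiveness | kIrLiveness;
    if (imageMode)
        mask |= kAge | kGender;
    return mask;
}

// True if every bit of `flag` is set in `mask`.
bool HasFeature(std::int32_t mask, std::int32_t flag)
{
    return (mask & flag) == flag;
}
```

Because each feature occupies its own bit, features can be combined in any order and tested individually with a bitwise AND.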

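2.5 Key Functions

- PicCutOut(), which crops an image

```cpp
// Crop an image: copy the region of src starting at (x, y), with dst's size, into dst
void PicCutOut(IplImage* src, IplImage* dst, int x, int y)
{
    if (!src || !dst)
    {
        return;
    }
    CvSize size = cvSize(dst->width, dst->height);             // region size
    cvSetImageROI(src, cvRect(x, y, size.width, size.height)); // set the source ROI
    cvCopy(src, dst);                                          // copy the ROI into dst
    cvResetImageROI(src);                                      // clear the ROI when done
}
```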
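PicCutOut() uses the OpenCV 1.x ROI calls (cvSetImageROI, cvCopy, cvResetImageROI). At the pixel level this amounts to copying rows of bytes while stepping through the source by its row stride (widthStep). A standard-library sketch of that copy, using a hypothetical CropRegion() helper that also rejects regions falling outside the source:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy a w x h region starting at (x, y) out of an interleaved 8-bit image.
// srcStep is the row stride in bytes (like IplImage::widthStep); it may be
// larger than srcW * channels because of row padding.
// Returns an empty vector if the region does not fit inside the source.
std::vector<std::uint8_t> CropRegion(const std::uint8_t* src, int srcW, int srcH, int srcStep,
                                     int channels, int x, int y, int w, int h)
{
    if (!src || x < 0 || y < 0 || w <= 0 || h <= 0 || x + w > srcW || y + h > srcH)
        return {};
    const std::size_t rowBytes = static_cast<std::size_t>(w) * channels;
    std::vector<std::uint8_t> dst(rowBytes * h);
    for (int row = 0; row < h; ++row) {
        const std::uint8_t* from = src + static_cast<std::size_t>(y + row) * srcStep
                                       + static_cast<std::size_t>(x) * channels;
        std::memcpy(dst.data() + row * rowBytes, from, rowBytes);
    }
    return dst; // tightly packed: the destination stride is w * channels
}
```

The bounds check plays the role that OpenCV's own ROI validation plays for cvSetImageROI.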

- ColorSpaceConversion(), which fills an ASVLOFFSCREEN structure

It stores the image information in offscreen. The pixels are not converted or copied: offscreen points directly at the image buffer, so the image must stay alive while offscreen is in use.

```cpp
// "Color space conversion": describe the image in ASVLOFFSCREEN form.
// Returns 1 on success, 0 for unsupported formats.
int ColorSpaceConversion(IplImage* image, MInt32 format, ASVLOFFSCREEN& offscreen)
{
    switch (format)
    {
    case ASVL_PAF_RGB24_B8G8R8: // packed BGR, 3 bytes per pixel
    case ASVL_PAF_GRAY:         // 8-bit grayscale, 1 byte per pixel
        offscreen.u32PixelArrayFormat = (unsigned int)format;
        offscreen.i32Width = image->width;
        offscreen.i32Height = image->height;
        offscreen.pi32Pitch[0] = image->widthStep;          // bytes per row
        offscreen.ppu8Plane[0] = (MUInt8*)image->imageData; // points at the image data (no copy)
        break;
    default:
        return 0;
    }
    return 1;
}
```
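ColorSpaceConversion() fills in a descriptor (format, width, height, pitch, plane pointer) that points at an existing buffer. The sketch below models that with a hypothetical single-plane OffscreenDesc struct and PixelFormat enum (not the SDK's ASVLOFFSCREEN or ASVL_PAF_* constants), and adds a check that the pitch is large enough for the stated width:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical pixel formats; the SDK defines its own ASVL_PAF_* constants.
enum class PixelFormat { Bgr24, Gray8, Nv21 };

// Hypothetical, simplified single-plane mirror of ASVLOFFSCREEN.
struct OffscreenDesc {
    PixelFormat format;
    int width;
    int height;
    int pitch;           // bytes per row, like IplImage::widthStep
    std::uint8_t* plane; // points at the caller's pixels; no copy is made
};

// Bytes per pixel for the packed formats handled here; 0 means unsupported.
int BytesPerPixel(PixelFormat f)
{
    switch (f) {
    case PixelFormat::Bgr24: return 3;
    case PixelFormat::Gray8: return 1;
    default:                 return 0;
    }
}

// Describe (not convert) a packed 8-bit buffer. Returns false for unsupported
// formats, bad sizes, or a pitch smaller than width * bytesPerPixel.
bool DescribePacked(PixelFormat f, int width, int height, int step,
                    std::uint8_t* data, OffscreenDesc& out)
{
    const int bpp = BytesPerPixel(f);
    if (bpp == 0 || !data || width <= 0 || height <= 0 || step < width * bpp)
        return false;
    out = OffscreenDesc{f, width, height, step, data};
    return true;
}
```

Multi-plane formats such as NV21 need more than one plane pointer, so this single-plane sketch rejects them, much as the original default branch returns 0 for formats it does not handle.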

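- PreDetectFace(), which extracts face information

Its inputs are:

- the image data, image

- the engine tempEngine. If it is omitted, the default m_hEngine is used for video recognition and tracking; pass m_hImgEngine to extract face information from a still image.

It extracts the face information in the frame and stores all detected faces in the output parameter MultiFaces. It returns MOK on success, or -1 if the image is empty, detection fails, or no face is found.

```cpp
// Extract face information.
// If no engine is passed, the video engine m_hEngine is used; all detected faces are returned in MultiFaces.
MRESULT PreDetectFace(IplImage* image, ASF_MultiFaceInfo& MultiFaces, MHandle& tempEngine = m_hEngine)
{
    if (!image) { cout << "Image is empty" << endl; return -1; }
    MultiFaces = { 0 }; // face detection result
    // Crop the width down to a multiple of 4
    IplImage* cutImg = cvCreateImage(cvSize(image->width - (image->width % 4), image->height), IPL_DEPTH_8U, image->nChannels);
    PicCutOut(image, cutImg, 0, 0);
    ASVLOFFSCREEN offscreen = { 0 };
    ColorSpaceConversion(cutImg, ASVL_PAF_RGB24_B8G8R8, offscreen);
    MRESULT res = ASFDetectFacesEx(tempEngine, &offscreen, &MultiFaces);
    cvReleaseImage(&cutImg); // release on every path (the success path leaked it before)
    if (res != MOK || MultiFaces.faceNum < 1)
        return -1;
    return res;
}
```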
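Both PreDetectFace() and PreExtractFeature() crop the frame to image->width - (image->width % 4) before passing it to the SDK, which suggests the SDK expects a width that is a multiple of 4 (check the SDK documentation for the exact requirement per pixel format). The arithmetic in isolation, with two equivalent hypothetical helpers:

```cpp
#include <cassert>

// Round a width down to the nearest multiple of 4, exactly as
// image->width - (image->width % 4) does.
int AlignWidthDown4(int width)
{
    return width - (width % 4);
}

// Equivalent bit-mask form for non-negative widths: clear the two lowest bits.
int AlignWidthDown4Mask(int width)
{
    return width & ~3;
}
```

Because the crop starts at (0, 0), face coordinates found in the cropped image are also valid in the original frame; at most the three rightmost columns are dropped.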

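- PreExtractFeature(), which extracts a face feature

Its inputs are:

- the image data, image

- the single-face information, faceRect

- the engine tempEngine, which defaults to m_hEngine (the video tracking engine)

The extracted feature is written to the output parameter feature.

Note: we extract face features with the same engine, and each extraction on an engine overwrites the previously extracted feature. The extracted feature must therefore be copied (memcpy) into our own memory; otherwise, when features are compared, both refer to the same data and the comparison result is always 1. Because the copy is allocated with malloc, the caller must free feature.feature when it is no longer needed.

```cpp
// Extract a feature. Without an explicit engine, the video engine is used (face tracking);
// otherwise a still-image feature is extracted.
MRESULT PreExtractFeature(IplImage* image, ASF_FaceFeature& feature, ASF_SingleFaceInfo& faceRect, MHandle& tempEngine = m_hEngine)
{
    if (!image || image->imageData == NULL)
        return -1;
    IplImage* cutImg = cvCreateImage(cvSize(image->width - (image->width % 4), image->height), IPL_DEPTH_8U, image->nChannels);
    if (!cutImg) return -1; // check the allocation before using it
    PicCutOut(image, cutImg, 0, 0);
    MRESULT res = MOK;
    ASF_FaceFeature detectFaceFeature = { 0 }; // feature (points into engine-owned memory)
    ASVLOFFSCREEN offscreen = { 0 };
    ColorSpaceConversion(cutImg, ASVL_PAF_RGB24_B8G8R8, offscreen);
    if (tempEngine == m_hEngine) // video engine: recognition mode (changed compared with version 3.0)
        res = ASFFaceFeatureExtractEx(tempEngine, &offscreen, &faceRect, ASF_RECOGNITION, 0, &detectFaceFeature);
    else                         // image engine: registration mode
        res = ASFFaceFeatureExtractEx(tempEngine, &offscreen, &faceRect, ASF_REGISTER, 0, &detectFaceFeature);
    cvReleaseImage(&cutImg); // release on every path
    if (MOK != res) return res;
    if (!detectFaceFeature.feature)
        return -1;
    // Deep copy, because the next extraction overwrites the engine's buffer
    feature.featureSize = detectFaceFeature.featureSize;
    feature.feature = (MByte*)malloc(detectFaceFeature.featureSize);
    if (!feature.feature) return -1;
    memcpy(feature.feature, detectFaceFeature.feature, detectFaceFeature.featureSize);
    return res;
}
```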
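The overwrite problem described in the note can be reproduced without the SDK. In the sketch below, FakeEngine is a hypothetical stand-in that, like the behavior described, hands back a pointer into one reused internal buffer; CopyFeature() makes an owning copy, playing the role of the malloc + memcpy in PreExtractFeature():

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an engine that reuses one internal buffer
// for every extraction.
struct FakeEngine {
    static const int kFeatureSize = 8;
    std::uint8_t internal[kFeatureSize] = {};

    // Returns a pointer into the engine-owned buffer; the next call overwrites it.
    const std::uint8_t* Extract(std::uint8_t seed)
    {
        for (std::uint8_t& b : internal)
            b = seed;
        return internal;
    }
};

// Owning copy of a feature, analogous to malloc + memcpy in PreExtractFeature().
std::vector<std::uint8_t> CopyFeature(const std::uint8_t* data, int size)
{
    return std::vector<std::uint8_t>(data, data + size);
}
```

std::vector frees its memory automatically; the malloc'd buffer in PreExtractFeature() must instead be released with free() by the caller.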

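2.6 Get Frames from a Local Stream

In main(), we obtain frames for detection from a local camera or a local video file:

```cpp
cv::Mat rgbFrame; // holds the current frame
cv::VideoCapture rgbCapture;
if (!rgbCapture.isOpened())
{
    bool res = rgbCapture.open("testVideo.avi"); // a local video path
    //bool res = rgbCapture.open(0);            // or the local camera
    if (res)
        cout << "Opened the local video successfully!" << endl;
    else
    {
        cout << "Failed to open the video source!" << endl;
        return 1;
    }
}
// When using a camera (rgbCapture.open(0)), the frame size can be set and checked as below.
// VIDEO_FRAME_DEFAULT_WIDTH and VIDEO_FRAME_DEFAULT_HEIGHT must be defined in the program
// (see the macro definitions in the complete program).
//if (!(rgbCapture.set(CV_CAP_PROP_FRAME_WIDTH, VIDEO_FRAME_DEFAULT_WIDTH) &&
//      rgbCapture.set(CV_CAP_PROP_FRAME_HEIGHT, VIDEO_FRAME_DEFAULT_HEIGHT))) // set width and height (640×480 here)
//{
//    cout << "Failed to initialize the RGB camera!" << endl;
//    return 1; // exit if setting the size fails
//}
```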

2.7 Process Video Frames in a Loop

```cpp
cvNamedWindow("show image"); // create the display window once, before the loop
while (true)
{
    rgbCapture >> rgbFrame;
    if (rgbFrame.empty()) // an empty frame means the video ended (or the stream failed)
    {
        cout << "Video playback finished" << endl;
        break;
    }
    ASF_MultiFaceInfo multiFaceInfo = { 0 }; // all faces detected in this frame
    IplImage rgbImage(rgbFrame); // IplImage header that shares rgbFrame's pixel data
    MRESULT detectRes = PreDetectFace(&rgbImage, multiFaceInfo); // default video engine m_hEngine
    if (MOK == detectRes) // at least one face detected
    {
        // Draw a box around every detected face
        for (int i = 0; i < multiFaceInfo.faceNum; i++)
        {
            cvRectangle(&rgbImage, cvPoint(multiFaceInfo.faceRect[i].left, multiFaceInfo.faceRect[i].top),
                cvPoint(multiFaceInfo.faceRect[i].right, multiFaceInfo.faceRect[i].bottom), cvScalar(0, 0, 255), 2);
            // Label the face ID at the top-left corner (font: a CvFont initialized earlier with cvInitFont)
            cvPutText(&rgbImage, to_string(multiFaceInfo.faceID[i]).c_str(),
                cvPoint(multiFaceInfo.faceRect[i].left, multiFaceInfo.faceRect[i].top), &font, CV_RGB(255, 0, 0));
        }
    }
    // Show the frame directly; cloning it every frame without releasing the clone leaked memory
    cvShowImage("show image", &rgbImage);
    if (waitKey(1) >= 0) // any key stops playback
        break;
}
rgbCapture.release(); // release rgbCapture after leaving the loop
```
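The loop draws boxes from the left/top/right/bottom corners of each faceRect. If an x/y/width/height rectangle is needed instead (the form cvRect and cv::Rect use), for example to crop a face, a small conversion that clamps to the frame avoids rectangles reaching outside the image. CornerRect, XYWHRect, ClampInt() and ToClampedXYWH() are hypothetical names:

```cpp
#include <cassert>

// Hypothetical mirror of the corner form used by faceRect (left, top, right, bottom).
struct CornerRect { int left, top, right, bottom; };

// x/y/width/height form, as used by cvRect / cv::Rect.
struct XYWHRect { int x, y, width, height; };

static int ClampInt(int v, int lo, int hi) { return v < lo ? lo : (v > hi ? hi : v); }

// Convert corner form to x/y/width/height, clamped to a frameW x frameH image.
// Inverted or fully clipped rectangles come back with zero width/height.
XYWHRect ToClampedXYWH(const CornerRect& r, int frameW, int frameH)
{
    const int left   = ClampInt(r.left,   0, frameW);
    const int top    = ClampInt(r.top,    0, frameH);
    const int right  = ClampInt(r.right,  0, frameW);
    const int bottom = ClampInt(r.bottom, 0, frameH);
    return XYWHRect{left, top, right > left ? right - left : 0, bottom > top ? bottom - top : 0};
}
```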

下面是完整程序!!!

2.8 完整程序

#include "pch.h"

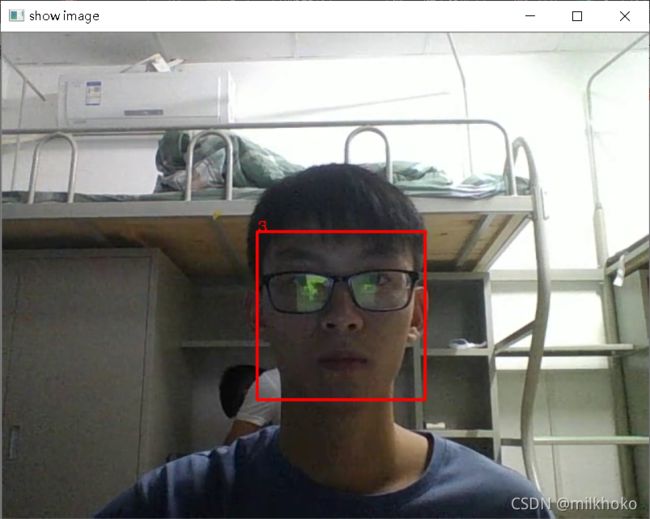

#include 2.9 运行效果

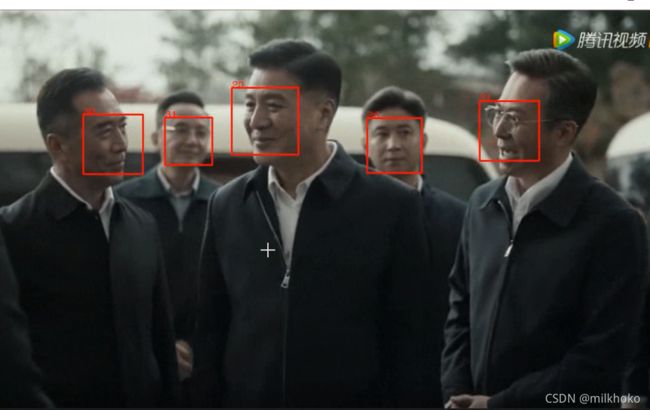

Above are several screenshots of face tracking on a local video; the tracking works well!

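2.10 Get Frames from an RTSP Stream

If we already have the URL of an RTSP stream, the change is simple; only the following line needs to be modified:

```cpp
bool res = rgbCapture.open("testVideo.avi");
```

Change it to:

```cpp
bool res = rgbCapture.open("<RTSP stream URL>");
```

This opens the corresponding stream.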
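Since only the argument to open() changes between a camera, a local file, and an RTSP stream, one option is to decide from a single input string. A sketch with a hypothetical ClassifySource() helper, assuming that an all-digit string means a camera index and a string starting with rtsp:// means a network stream:

```cpp
#include <cassert>
#include <cctype>
#include <string>

enum class SourceKind { Camera, RtspStream, LocalFile };

// Hypothetical helper: decide how a user-supplied source string should be opened.
// "0", "1", ...  -> camera index
// "rtsp://..."   -> network stream (case-sensitive prefix check)
// anything else  -> local video file path
SourceKind ClassifySource(const std::string& s)
{
    if (!s.empty()) {
        bool allDigits = true;
        for (char c : s) {
            if (!std::isdigit(static_cast<unsigned char>(c))) { allDigits = false; break; }
        }
        if (allDigits)
            return SourceKind::Camera;
    }
    if (s.compare(0, 7, "rtsp://") == 0)
        return SourceKind::RtspStream;
    return SourceKind::LocalFile;
}
```

A camera index would then be opened with rgbCapture.open(std::stoi(source)), and the other two kinds with rgbCapture.open(source).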

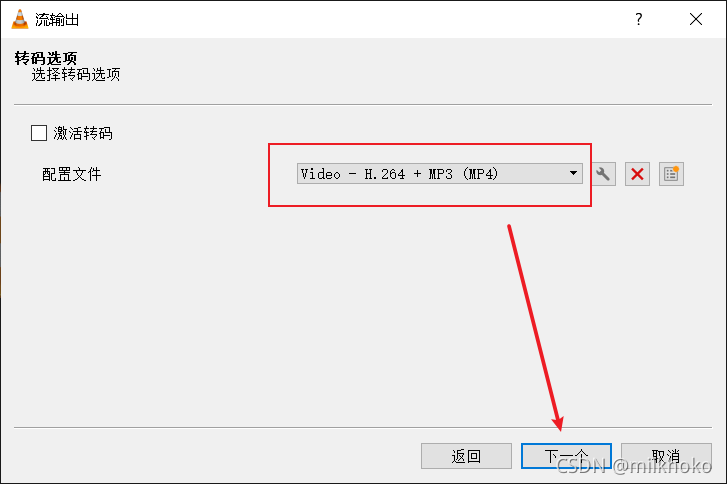

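If no ready-made URL is available, we can use VLC to generate an RTSP stream for testing!

- Download VLC (download link).

- After installing, open VLC and choose Media -> Stream in the top-left corner, then select the Capture Device tab, choose the laptop camera and audio input, and click Stream to go to the next step.

- Then follow the figure: select RTSP, click Add, and enter any path name. The generated RTSP URL is rtsp://127.0.0.1:8554/<your path name>.

My URL here is rtsp://127.0.0.1:8554/demo:

```cpp
bool res = rgbCapture.open("rtsp://127.0.0.1:8554/demo");
```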
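The URL follows the pattern rtsp://<host>:<port>/<path name>. A hypothetical BuildRtspUrl() helper that assembles it, tolerating a leading slash on the path name:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: assemble rtsp://<host>:<port>/<path>.
// A leading '/' on path is stripped so it is not doubled.
std::string BuildRtspUrl(const std::string& host, int port, std::string path)
{
    if (!path.empty() && path[0] == '/')
        path.erase(0, 1);
    return "rtsp://" + host + ":" + std::to_string(port) + "/" + path;
}
```

For the VLC setup above, BuildRtspUrl("127.0.0.1", 8554, "demo") produces the same string that is passed to rgbCapture.open().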

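3. Tracking and Recognizing a Specified Face

To track a specified face, at the start of the main program we extract the feature of the face in a specified image. When processing each video frame, we extract the features of all faces, compare each with that feature one by one, and check whether the confidence reaches the threshold THRESHOLD (0.82 here; adjust it to your scenario).

The following focuses on the face-comparison tracking part: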

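3.1 Get the Feature of the Specified Face

```cpp
string img_name;
cout << "Enter a name from the image library:" << endl;
cin >> img_name;
// Simple, direct approach; for more flexible path or file lookup, use C++ file operations
string fimgPath = filePath + img_name + ".jpg";
Mat tempImg = imread(fimgPath);
if (tempImg.empty()) { cout << "Failed to load " << fimgPath << endl; return 1; }
IplImage getImg(tempImg);
ASF_MultiFaceInfo getMultiFaceInfo = { 0 };
// Detect faces with the image engine; the results are stored in getMultiFaceInfo
if (PreDetectFace(&getImg, getMultiFaceInfo, m_hImgEngine) != MOK) { cout << "No face found in the image" << endl; return 1; }
// Copy the first face of the multi-face result into getFaceInfo for feature extraction
ASF_SingleFaceInfo getFaceInfo = { 0 };
getFaceInfo.faceRect = getMultiFaceInfo.faceRect[0];
getFaceInfo.faceOrient = getMultiFaceInfo.faceOrient[0];
getFaceInfo.faceDataInfo = getMultiFaceInfo.faceDataInfoList[0]; // new compared with version 3.1
ASF_FaceFeature faceFeature = { 0 };
PreExtractFeature(&getImg, faceFeature, getFaceInfo, m_hImgEngine);
```

The main steps of the code above are:

- First, store the image path and load the image whose name was entered.

- Detect faces in the loaded image and keep the face information in getFaceInfo for the next step. Detection returns multi-face information, while feature extraction needs single-face information, so the first face of the multi-face result is copied into getFaceInfo (the largest face could be used instead, depending on your needs).

- Extract the feature and store it in faceFeature for the later comparison.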
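The second step above notes that the largest face could be used instead of the first. A minimal sketch of that choice, with a hypothetical FaceRect struct mirroring the left/top/right/bottom fields of faceRect and a hypothetical LargestFaceIndex() helper:

```cpp
#include <cassert>

// Hypothetical mirror of the faceRect fields (left, top, right, bottom).
struct FaceRect { int left, top, right, bottom; };

// Return the index of the face with the largest box area, or -1 if there are none.
// Degenerate (inverted or empty) boxes count as area 0.
int LargestFaceIndex(const FaceRect* rects, int faceNum)
{
    int best = -1;
    long long bestArea = -1;
    for (int i = 0; i < faceNum; ++i) {
        const long long w = rects[i].right - rects[i].left;
        const long long h = rects[i].bottom - rects[i].top;
        const long long area = (w > 0 && h > 0) ? w * h : 0;
        if (area > bestArea) {
            bestArea = area;
            best = i;
        }
    }
    return best;
}
```

The returned index would then replace 0 in faceRect[0], faceOrient[0] and faceDataInfoList[0].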

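3.2 Feature Comparison

```cpp
// Inside the frame loop, after PreDetectFace() succeeds.
// tempFaceInfo (ASF_SingleFaceInfo), tempFeature (ASF_FaceFeature), confidenceLeve (MFloat)
// and font (CvFont) are declared earlier (see the complete program).
for (int i = 0; i < multiFaceInfo.faceNum; i++)
{
    // Copy the i-th face into a single-face struct for feature extraction
    tempFaceInfo.faceDataInfo = multiFaceInfo.faceDataInfoList[i];
    tempFaceInfo.faceOrient = multiFaceInfo.faceOrient[i];
    tempFaceInfo.faceRect = multiFaceInfo.faceRect[i];
    cvRectangle(&rgbImage, cvPoint(multiFaceInfo.faceRect[i].left, multiFaceInfo.faceRect[i].top),
        cvPoint(multiFaceInfo.faceRect[i].right, multiFaceInfo.faceRect[i].bottom), cvScalar(0, 0, 255), 2);
    // Extract this face's feature with the default video engine
    if (PreExtractFeature(&rgbImage, tempFeature, tempFaceInfo) != MOK)
        continue;
    // Compare with the feature of the specified face extracted in 3.1
    ASFFaceFeatureCompare(m_hEngine, &tempFeature, &faceFeature, &confidenceLeve);
    free(tempFeature.feature); // PreExtractFeature() mallocs a new copy on every call
    tempFeature.feature = NULL;
    if (confidenceLeve >= THRESHOLD)
    {
        // Label the face ID and confidence at the top-left corner of the box
        string text = to_string(multiFaceInfo.faceID[i]) + " level:" + to_string(confidenceLeve);
        cvPutText(&rgbImage, text.c_str(), cvPoint(multiFaceInfo.faceRect[i].left, multiFaceInfo.faceRect[i].top), &font, CV_RGB(255, 0, 0));
    }
}
```

- While processing each frame, store each face of the multi-face result in turn in tempFaceInfo.

- Extract that face's feature based on tempFaceInfo.

- Compare the extracted feature with the feature obtained earlier from the image.

- Store the resulting confidence in confidenceLeve and compare it with the threshold.

- If it reaches the threshold, draw the face ID and the comparison confidence on the video frame.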
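The threshold decision and the on-screen label can be isolated in a small function. MatchLabel() below is a hypothetical helper that reproduces the >= THRESHOLD check and the "<faceID> level:<confidence>" text built in the loop:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: return the label "<faceID> level:<confidence>" when the
// confidence reaches the threshold, or an empty string when the face should not
// be labelled.
std::string MatchLabel(int faceId, float confidence, float threshold)
{
    if (confidence < threshold)
        return std::string();
    return std::to_string(faceId) + " level:" + std::to_string(confidence);
}
```

std::to_string prints six decimal places, so a confidence of 0.9f is shown as 0.900000; a std::ostringstream with std::setprecision gives shorter labels if preferred.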

3.3 Complete Program

```cpp
#include "pch.h"
#include
```

3.4 Results

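We create a face_lib folder in the project folder and put images of the specified faces in it.

When the program runs, enter a name from the image library to track that person's face.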
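Section 3.1 builds the image path as filePath + img_name + ".jpg", which only works if filePath already ends with a separator. A hypothetical FaceImagePath() helper that adds one when needed, assuming the library folder holds <name>.jpg files as in 3.1:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: build "<dir>/<name>.jpg", adding '/' only when dir is
// non-empty and does not already end with '/' or '\\'.
std::string FaceImagePath(const std::string& dir, const std::string& name)
{
    std::string path = dir;
    if (!path.empty() && path.back() != '/' && path.back() != '\\')
        path += '/';
    return path + name + ".jpg";
}
```

Windows file APIs accept '/' as a separator as well as '\\', so the added '/' also works for paths such as E:\myFaceDemo\myFaceDemo\face_lib.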

The results are shown below:

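III. Summary

This was my first time using SDK 4.1; I had previously used version 3.1, and version 4.1 makes a number of changes. I developed this demo from my earlier experience without reading the change notes, which led to many detours. In the end I found each problem by debugging step by step and solved it only after rereading the developer documentation. When building a project with an SDK or any other technology, read the documentation and the change notes first; it saves time and avoids detours.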