The AlphaGo Replication Wiki

摘自:https://github.com/Rochester-NRT/RocAlphaGo/wiki/01.-Home

Contents :

- Home

- 01. Home

- 02. Code

- 03. Data

- 04. Neural Networks and Training

- 05. Supervised Policy Network (Phase I)

- 06. Reinforcement Policy Network (Phase II)

- 07. Value Network (Phase III)

- 08. Running models with GTP

- 09. Tests and Benchmarks

- 10. MCTS

01. Home

Welcome to the AlphaGo Replication Wiki

Here you can learn more about how this project is structured, and a little about how DeepMind's AlphaGo itself works.

For details, see the list of pages in the sidebar on the right.

How AlphaGo Works

https://www.deepmind.com/alpha-go.html

DeepMind's AlphaGo is a combination of Monte Carlo Tree Search (MCTS) with Convolutional Neural Networks. There are three networks actively contributing to finding a move:

- the policy network guesses where an expert would play. Think of this network as memorizing patterns that it has seen others play but without any sense of the value of playing there.

- the value network estimates the probability of winning from the current position. It is perhaps analogous to an expert's 'intuition' of preferring one position to another.

- a second fast policy network that is used to simulate playouts to the end of the game. It is not a particularly strong player, but is orders of magnitude faster than the first policy network.

Together, these can be used to choose a move - by playing out a short series of reasonable moves then evaluating the results, then picking the best option. This is what AlphaGo's MCTS does - it simply follows the search tree in the most promising directions, where how 'promising' a branch is is a combination of expert policy and estimated value.

The fast policy network is not what you would think of as a typical neural network - it is more like multinomial logistic regression to choose the next move. This rollout policy is not nearly as good as the deeply-learned policy, but it is much much faster. Since the good policy function is so slow, it is only used to search ahead about 20 moves. Normally the value function alone could evaluate how good the board looks after those 20 moves, but AlphaGo's value function is augmented using this fast rollout all the way to the end of the game and simply seeing who wins.

The networks here aren't just processing stone positions or images of boards. A given board position is preprocessed into features that the neural network can make better use of. Part of the success of AlphaGo is attributable to carefully choosing which features to use. More complex features can be informative but at the expense of having to compute them for each position in the search tree.

The other big development contributing to AlphaGo's success is how these networks are trained using a combination of supervised and reinforcement learning.

02. Code

General principles

We are using Python and emphasizing a modular/agile design so that this project stays as comprehensible as possible. The apparent lack of regard for speed should not worry you too much; see the section on benchmarking

Our implementation

GameState

We chose to create our own implementation of Go logic.This is handled by the GameState class in AlphaGo/go.py. It is nearly complete with a few minor bugs and the potential to be cleaned up or refactored. A GameState object is not meant to be much more than a represenatation of a single board position and the game logic for interacting with/updating it. These objects are the common currency that tie together the different components of the project. For example, we load SGF files into GameState objects before processing them into neural network features. A few optimizations have already been added that add a bit of bloat to the class, namely updating liberties on each move. Because some of the features AlphaGo uses depend on the game history, we also added history to the GameState class.

MCTS

A generic tree search class can be found in AlphaGo/mcts.py on the mcts branch. It has been made generic (i.e. not specific to Go or AlphaGo) so as to be easily understood, and to be easily testable in the absence of functional policy and value networks (which are still being written and will then need to be trained). There are many opportunities for benchmarking and optimizations of this file.

Neural Networks

For analogous reasons to MCTS, the policy network (see CNNPolicy in AlphaGo/models/policy.py) has been written "generically." In general, a policy is any function that maps from states to a distribution over actions. CNNPolicy is one such implementation that uses convolutional neural networks. Its eval_state function uses the feature preprocessor to convert from a state to neural network features, feeds them through a network, and converts the output to a list of (action,probability) tuples.

The value network is partially implemented. policy.py will serve as a template for value.py.

The rollout policy is not implemented yet, nor are any of the conversion functions for its features.

Network Features

In case you weren't yet sick of all the decoupling we've done already, we have separated out neural network feature processing from the policy/value models themselves. The Preprocessor class in AlphaGo/models/preprocessing.py is responsible for knowing how to convert from a GameStateinstance to some network features.

There is some debate regarding how best to define the ladder features (discussion in issues).

Features for the rollout policy are not implemented yet.

Training

Training will be implemented as a series of scripts to be run from the command line. There are multiple stages of training that go into the full training 'pipeline'. The different stages of the training pipeline loosely correspond to our development milestones (with some complications glossed over in the main body of DeepMind's paper - see their Methods section)

03. Data

Our data is managed by git-lfs in a different repository. See https://github.com/Rochester-NRT/RocAlphaGo.data

Expert data - supervised learning

We have a few datasets of professional play in SGF format, not all of which we have permission to share. The "Community" dataset has plenty of high-level games and is free to use (see the dataset's README for information on contributors). We are also experimenting with GoGoD and BadukMovies. See here for a compilation of other datasets.

Expert games are saved in the SGF format. The training pipeline begins by batch-processing a collection of SGF files into neural network features. See "phase 0" of the neural networks and training page.

Organization

The conversion and training scripts save metadata using relative paths. Using the same structure will make sharing data more easy. Keep the data repository on the same level as this repository. When we reference data as ../AlphaGo.data/ it will be consistent across contributors. See that repository for more information on the organization of subdirectories

04. Neural Networks and Training

The training pipeline

The neural networks behind AlphaGo's success are trained in a series of steps, partially described in the main body of the paper. To really understand the details, look at the paper's Methods section.

Phase 0: features

As briefly described on the home page, AlphaGo's neural networks are not processing raw board positions. Rather, they are directly being fed information like "if black plays here, how many white stones will be captured?" For a full list of features used for each network, see the Extended Data Tables in paper.

A potential take-away from this is that convolutional neural networks are very good at recognizing (and often generalizing from) spatial patterns, but they are not good at inferring arbitrary and complex rule-based relationships. Choosing which features to provide to the network is a balance of giving it good information to work with, while using features that are fast to compute and that are usable by a the network.

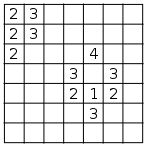

These features are encoded as "one-hot". Take the "liberties" feature for example, which simply counts the number of liberties the group a stone is connected to has. Instead of treating the number of liberties as a scalar value, the feature given to the network is an indicator bit in a binary vector, for example 10000000 if the group has one liberty, 00010000 if the group has 4 liberties, or 00000001 if the group has 8-or-more liberties. Take this miniature 7x7 board..

The number of liberties of the group connected to each stone is

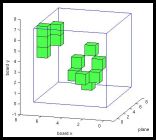

There is a one-hot (i.e. indicator bit in a binary vector) encoding at each position, so we can visualize the resulting feature in 3D (cubes as 1s and empty space as 0s)

Note: in our code base, GameState objects know nothing about one-hot encoding. Converting from a GameState object to some NN inputs is handled by the Preprocessor class. The order of the dimensions of these features is batch x depth x width x height using the Theano convention. These dimensions would need to be permuted to use TensorFlow.

Feature conversion script

To see what arguments are available, use

python -m AlphaGo.preprocessing.game_converter --help

This script generates an HDF5 File with a states dataset, an actions dataset, and a file_offsetsgroup. The states dataset has size n_data x feature_planes x board_width x board_height, storing states converted to concatenated one-hot features at each board position. The actionsdataset has size n_data x 2 and simply stores the x,y coordinate of the move made from the corresponding state.

Typically all you do with this script is specify a list of SGF files and the particular set of features you want to process. SGF files may be specified as a directory walk, a flat lookup into a directory, or by stdin.

Example 1: convert all SGFs in the tests/ directory to all available features

python -m AlphaGo.preprocessing.game_converter --features all --directory tests --recurse -o debug_feature_planes.hdf5

Example 2: convert SGFs with 'Lee-Sedol' in the name, via stdin, to first 4 features planes

find tests -iname "*Lee-Sedol*.sgf" | python -m AlphaGo.preprocessing.game_converter --features board,ones,turns_since -o debug_feature_planes.hdf5

Phase 1: supervised learning of policy networks

Training begins by teaching a convolutional network to simply predict where an expert would move. See the data page regarding where 'expert' data comes from.

Each board position and its subsequent move from the database form a training pair. Additional pairs are constructed using rotations and reflections of the board. SGD and backpropagation are used to maximize the probability of selecting the given action.

DeepMind reported achieving 57% accuracy predicting moves in a test set after this phase (Silver et al. page 2).

Training of the fast rollout policy uses the same dataset and therefore will share much of the same training code. The features and configuration of this network are very different than those of the deep policy network.

Supervised training script

To see what arguments are available, use

python -m AlphaGo.training.supervised_policy_trainer --help

This script is given a model file (a json specifying the policy network's architecture) and a HDF5 file and calls Keras' fit_generator on data extracted by shuffling the given dataset. Each time this script is run, it should be pointed to a different 'output' directory. Metadata and model weights each epoch are saved in this directory.

The output directory will be populated with:

- metadata.json - a json file that keeps a record of which model is being trained, on what dataset, and the train/val accuracy on each epoch

- shuffle.npz - shuffling indices used to index into the dataset (which itself is in contiguous blocks of individual games). Training example

iisdataset[shuffle[i]] - weights.XXXXX.hdf5 - where

XXXXXis the epoch number starting from00000

To resume training from an earlier point, use the --weights weights.#####.hdf5 argument, where ##### refers the latest epoch (if you resume training from an earlier point, the metadata will be wrong). The script will look for weights in the same output directory, i.e. os.path.join(output_dir, weights_file).

A model spec must exist before running this script. This can be done as follows:

from AlphaGo.models.policy import CNNPolicy

arch = {'filters_per_layer': 128, 'layers': 12} # args to CNNPolicy.create_network() features = ['board', 'ones', 'turns_since'] # Important! This must match args to game_converter policy = CNNPolicy(features, **arch) policy.save_model('my_model.json')

Example 1: train on mini dataset created above and save results to training_results/

python -m AlphaGo.training.supervised_policy_trainer my_model.json debug_feature_planes.hdf5 training_results/ --epochs 5 --minibatch 32 --learning-rate 0.01

Phase 2: reinforcement learning, self-play

Reinforcement learning of a policy is difficult to do from scratch. The supervised learning phase is used to initialize the reinforcement learning phase, since greedily evaluating the policy from the first phase should yield reasonable moves.

The policy learned through supervised learning then plays against itself a few million times using decent-but-random moves. Every time it wins, its weights are adjusted to make selecting those same moves more likely. Every time it loses, its weights are adjusted in the opposite direction. Importantly, the network is not always playing against the best version of itself, but instead plays against a random past version of itself. This helps to ensure that it doesn't get stuck on a single type of strategy.

Phase 3: reinforcement learning, value networks

The value network is trained to guess the outcome of games played by the policy we get from phase 2. To prevent overfitting (see the paper), this network is trained on one board position per game. Even more interestingly, there is an added element of randomness to reduce the network's bias (and possibly resulting in more creativity) at this phase; training data comes from games of self-play where, at a random point in the game, a completely random (off policy) move is made. The game is then continued past that point using the greedy policy. The value network is trained to predict the winner from the position immediately following the random move.

This network is simply trained to minimize squared error between output values and the result of the game (+1 for wins and -1 for losses).

Phase 4: revisiting phase 2

Once the value network has learned to predict game outcomes, the self-play phase is repeated, except that instead of weights being adjusted based on wins and losses, they are adjusted based on how different the outcome was from the value predicted by the value network. Presumably this phase makes the policy and value functions more mutually consistent.

05. Supervised Policy Network (Phase I)

Training begins by teaching a convolutional network to simply predict where an expert would move. See the data page regarding where 'expert' data comes from.

Each board position and its subsequent move from the database form a training pair. Additional pairs are constructed using rotations and reflections of the board. SGD and backpropagation are used to maximize the probability of selecting the given action.

DeepMind reported achieving 57% accuracy predicting moves in a test set after this phase (Silver et al. page 2).

Training of the fast rollout policy uses the same dataset and therefore will share much of the same training code. The features and configuration of this network are very different than those of the deep policy network.

Supervised training script

To see what arguments are available, use

python -m AlphaGo.training.supervised_policy_trainer --help

This script is given a model file (a json specifying the policy network's architecture) and a HDF5 file and calls Keras' fit_generator on data extracted by shuffling the given dataset. Each time this script is run, it should be pointed to a different 'output' directory. Metadata and model weights each epoch are saved in this directory.

The output directory will be populated with:

- metadata.json - a json file that keeps a record of which model is being trained, on what dataset, and the train/val accuracy on each epoch

- shuffle.npz - shuffling indices used to index into the dataset (which itself is in contiguous blocks of individual games). Training example

iisdataset[shuffle[i]] - weights.NN.hdf5 - where

NNis the epoch number starting from00

To resume training from an earlier point, use the --weights weights.##.hdf5 argument, where ##refers the latest epoch (if you resume training from an earlier point, the metadata will be wrong). The script will look for weights in the same output directory, i.e. os.path.join(output_dir, weights_file).

A model spec must exist before running this script. This can be done as follows:

from AlphaGo.models.policy import CNNPolicy

arch = {'filters_per_layer': 128, 'layers': 12} # args to CNNPolicy.create_network() features = ['board', 'ones', 'turns_since'] # must match args to game_converter policy = CNNPolicy(features, **arch) policy.save_model('my_model.json')

Example 1: train on mini dataset created above and save results to training_results/

python -m AlphaGo.training.supervised_policy_trainer my_model.json debug_feature_planes.hdf5 training_results/ --epochs 5 --minibatch 32 --learning-rate 0.0

06. Reinforcement Policy Network (Phase II)

Pages 11

- Home

- 01. Home

- 02. Code

- 03. Data

- 04. Neural Networks and Training

- 05. Supervised Policy Network (Phase I)

- 06. Reinforcement Policy Network (Phase II)

- 07. Value Network (Phase III)

- 08. Running models with GTP

- 09. Tests and Benchmarks

- 10. MCTS

Clone this wiki locally

Clone in DesktopTraining begins with the supervised policy learned in Phase I. At a basic level, this policy is simply played against itself iteratively, updating policy weights according to whether a game was won or lost. One copy of the policy is continually updated (and hopefully improved), and periodically frozen copies of itself are added to the pool of opponents. Of course, when starting out, the pool has size one, but grows over time.

Games are played in "mini-batches". In each mini-batch, the policy chooses an opponent at random from the pool, to play a set of games against. During play, state-action pairs and winners are recorded for later training. After a mini-batch has been played out, network weights for the policy are updated over all training pairs. In Google's implementation, they run mini-batches of size 128, in parallel. They play 500 mini-batches per iteration (i.e. the policy sees 500 opponents), and add a copy of the policy to the opponent pool after each iteration. Google's system was trained on 10,000 mini-batches. During the second pass through RL learning (Phase IV), they used the value network as a baseline for each training example, to reduce variance (see paper).

Here's how our implementation works.

# Set SGD and compile

sgd = SGD(lr=args.learning_rate)

player.policy.model.compile(loss='binary_crossentropy', optimizer=sgd) for i_iter in xrange(args.iterations): # Train mini-batches for i_batch in xrange(args.save_every): # Randomly choose opponent from pool opp = np.random.choice(opponents) # Make training pairs and do RL X_list, y_list, winners = make_training_pairs( player, opp, features, args.game_batch_size) player = train_batch(player, X_list, y_list, winners, args.learning_rate)

So, after initializing our Keras SGD object and compiling the policy's neural network model, we loop through the numbers of iterations and batches specified at the command line. Note the player object is an instance of ProbabilisticPolicyPlayer, which is just another layer of abstraction that offers a get_move function that returns a move when given a board state. X_listand y_list are the training states and actions, respectively. Now, let's look at what happens inside make_training_pairs.

while True:

# Cache states before moves

states_prev = [st.copy() for st in states] # Get moves (batch) moves_black = player1.get_moves(states) # Do moves (black) states, X_list, y_list = do_move(states, states_prev, moves_black, X_list, y_list, player_color) # Do moves (white) moves_white = player2.get_moves(states) states, X_list, y_list = do_move(states, states_prev, moves_white, X_list, y_list, player_color) # If all games have ended, we're done. Get winners. done = [st.is_end_of_game or st.turns_played >= 500 for st in states] if all(done): break winners = [st.get_winner() for st in states]

The while loop ensures that all games are played out until the end. states is a list of GameState objects, which keep track of each of the games (current player, turns played, board state, etc.). X_list and y_list are nested lists, where each sub-list represents training items from one of the games being played in parallel. For each iteration of the while loop, the lists corresponding to each game get appended (in do_move) as long as that game hasn't already ended. (Here, I've also added an arbitrary cap of 500 turns to all games, because some might get stuck in ko loops.) We only record an (X, y) training pair if it's the policy's move and the policy didn't choose to pass. Before the games start, the policy is randomly assigned to be either white or black. So player1 may be the policy and player2 the opponent, or vice versa in any given mini-batch. Here's what happens in do_move.

for st, st_prev, mv, X, y in zip(states, states_prev, moves, X_list,

y_list):

if not st.is_end_of_game: # Only do more moves if not end of game already st.do_move(mv) if st.current_player != player_color and mv is not go.PASS_MOVE: # Convert move to one-hot state_1hot = preprocessor.state_to_tensor(st_prev) move_1hot = np.zeros(bsize_flat) move_1hot[flatten_idx(mv, bsize)] = 1 X.append(state_1hot) y.append(move_1hot)

Here we're converting the board states and move tuples to 1-hot encoding, which is just the format needed for our neural network model. Then, we append those to training items to X_listand y_list. Later on, the sub-lists in X_list and y_list will be concatenated into a single numpy array per game in the mini-batch. Now, let's look at how train_batch works.

for X, y, winner in zip(X_list, y_list, winners):

# Update weights in + direction if player won, and - direction if player lost. # Setting learning rate negative is hack for negative weights update. if winner == -1: player.policy.model.optimizer.lr.set_value(-lr) else: player.policy.model.optimizer.lr.set_value(lr) player.policy.model.fit(X, y, nb_epoch=1, batch_size=len(X))

Note that, even though we're doing reinforcement learning, we're able to make use of Keras's backpropagation (model.fit) to update the weights, through the use of a simple hack: we can change the direction the weights are updated by setting the learning rate negative. So we set the learning rate positive if we won a game, since we want to reward this behavior, and negative if we lost, to penalize. All moves in the game leading to the reward outcome are weighted equally.

Those are the basics. You can refer to our code base for more implementation details. But remember that the code is a work in progress, so the wiki may be slow to reflect any changes.

07. Value Network (Phase III)

Pages 11

- Home

- 01. Home

- 02. Code

- 03. Data

- 04. Neural Networks and Training

- 05. Supervised Policy Network (Phase I)

- 06. Reinforcement Policy Network (Phase II)

- 07. Value Network (Phase III)

- 08. Running models with GTP

- 09. Tests and Benchmarks

- 10. MCTS

Clone this wiki locally

Clone in DesktopThe value network estimates, in reinforcement learning terms, the value of each position. That is, if I see a given board state, and played an optimal policy until the end of the game, what would be my expected reward (i.e. winning/losing)? The value network training has two phases: i) games of self-play to generate training pairs, ii) update weights using those training pairs. In broad strokes, the generation part takes the policy we learned from RL (Phase II) and plays it against itself (DeepMind played 30 million games), then in training it's basically the same thing we saw in Phase II. But there are a couple subtle nuances. First, one move (U) during each game is chosen to be played at random, where U is sampled uniformly from 1 to 450. The action is drawn from a uniform random distribution over all board positions (excluding illegal moves). All turns prior to U, both players use the SL, and afterwards they are replaced with the RL. Also, only one training tuple is collected from each game. The tuple consists of the board state just after the random move (sU+1) and the eventual winner (white or black). Additionally, the color of player who played aU+1 is recorded for use in input to the network during training. Presumably, only taking one move per game avoids the overfitting that happens if every move is taken from each game. Notice the action is not included, because this is the value of the board position, alone.

First, let's look at the script for generating the 30 million training examples.

X_list = []

winners_list = []

colors_list = []

for n in xrange(n_training_pairs / batch_size): X, winners, colors = play_batch(player_RL, player_SL, batch_size, features) X_list.append(X) winners_list.extend(winners) colors_list.extend(colors) # Concatenate over batches to make one HDF5 file X = np.concatenate(X_list, axis=0) save(out_pth, X, winners_list, colors_list)

X_list is a list of board states to train on (one per game), and winners_list and colors_list are the other training elements as described above. As is Phase II, we play games in batches (for GPU efficiency). So we loop over batches until we've played the desired number of games (i.e. n_training_pairs). When we've finished looping, we save all the data out to HDF5, which is an efficient standard for storing large files. Now, let's examine play_batch.

player = player_SL

states = [GameState() for i in xrange(batch_size)]

# Randomly choose turn to play uniform random. Move prior will be from SL # policy. Moves after will be from RL policy. i_rand_move = np.random.choice(range(450)) while True: # Do moves (black) if turn == i_rand_move: # Make random move, then switch from SL to RL policy X_list, colors, states, player = do_rand_move(states, player, player_RL) else: # Get moves (batch) moves_black = player.get_moves(states) # Do moves (black) states = do_move(states, moves_black) # Do moves (white) if turn == i_rand_move: # Make random move, then switch from SL to RL policy X_list, colors, states, player = do_rand_move(states, player, player_RL) else: moves_white = player.get_moves(states) states = do_move(states, moves_white) # If all games have ended, we're done. Get winners. done = [st.is_end_of_game or st.turns_played >= 500 for st in states] if all(done): break

This should look similar to Phase II. The major difference is that there's some extra logic to check each move whether the current turn is the one we've randomly chosen to be off-policy (uniform random). do_rand_move does the random move, then returns the training tuple data and reassigns the player to the player_RL.

Great, so now we have more training examples we could ever want. But how do we train? First, a bit about the network architecture. The value network is similar to the policy network with some subtle differences. Both networks have 48 feature planes as input, but the value has an additional binary feature plane describing the current color to play. There are some other differences, which I won't go into detail about here, but are detailed in the paper. The most notable difference is that the last layer of the value network is a single tanh unit (because it's trying to guess whether the game outcome was -1 or 1), whereas the policy network used softmax (because it was classifying). Network implementation can be found in AlphaGo/models/value.py.

Finally, the training is identical to the supervised (Phase I), except that data labels are just 1 or -1 (as opposed to flattened board states). The code in reinforcement_value_trainer.py is almost identical to supervised_policy_trainer.py, with just a few tweaks, like changing the loss to mean squared error.

08. Running models with GTP

Pages 11

- Home

- 01. Home

- 02. Code

- 03. Data

- 04. Neural Networks and Training

- 05. Supervised Policy Network (Phase I)

- 06. Reinforcement Policy Network (Phase II)

- 07. Value Network (Phase III)

- 08. Running models with GTP

- 09. Tests and Benchmarks

- 10. MCTS

Clone this wiki locally

Clone in DesktopWith the addition of the pygtp library, running a model as an opponent becomes easy once you've installed a GTP-compatible interface like GoGui.

GTP (the Go Text Protocol) is a protocol for playing a game of Go. A GTP Engine is a program that is responsible for maintaining the internal state of the game and deciding on a move when asked. A GTP Controller is a program that mediates between engines.

The function run_gtp(Player) in interface.gtp_wrapper will loop forever listening for GTP commands on stdin and writing responses to stdout. (Note that a Player object that prints debug messages to stdout will be incompatible, as these statements will be interpreted as GTP responses. Use a logging module instead). GoGui is a bundle of software that, among other things, provides a graphical interface to play against any GTP-compatible program. Note that GoGui also comes with wrappers that can extend this simple stdin/stdout interface, for example to run the protocol over the internet.

In our code, a Player is any object that implements a get_move(GameState) --> move function. Example classes include AlphaGo.ai.GreedyPolicyPlayer, AlphaGo.ai.ProbabilisticPolicyPlayer, and AlphaGo.mcts.MCTS.

Example: playing against a raw policy network

Start by making a small script "greedy_player_gtp.py" in the RocAlphaGo/ directory that loads in a policy and ultimately calls run_gtp:

from AlphaGo.ai import GreedyPolicyPlayer

from AlphaGo.models.policy import CNNPolicy

from interface.gtp_wrapper import run_gtp MODEL = 'path/to/my_model_spec.json' WEIGHTS = 'path/to/traindata/weights.00999.hdf5' policy = CNNPolicy.load_model(MODEL) policy.model.load_weights(WEIGHTS) player = GreedyPolicyPlayer(policy) run_gtp(player)

Running this script by itself, it will load the player then print "GTP engine ready" and hang, waiting for input. You can type GTP commands directly to it, for example try typing list_commands, and exit by typing quit. If you use a virtual environment, make sure you know how to run this script from outside the virtualenv, for example by using the virtualenv's python interpreter directly (i.e. /path/to/your/virtualenv/bin/python greedy_player_gtp.py should work when your virtual environment is not active).

In GoGui, you now need to create and attach this program using whatever command you use to run it, setting the "working directory" to your /path/to/RocAlphaGo.

09. Tests and Benchmarks

Pages 11

- Home

- 01. Home

- 02. Code

- 03. Data

- 04. Neural Networks and Training

- 05. Supervised Policy Network (Phase I)

- 06. Reinforcement Policy Network (Phase II)

- 07. Value Network (Phase III)

- 08. Running models with GTP

- 09. Tests and Benchmarks

- 10. MCTS

Clone this wiki locally

Clone in DesktopUnittests

We are using Python unittests to verify code. Not all existing code is tested, and not all existing tests are exhaustive. Writing new tests is a great way to become familiar with the project.

How to run all tests (starting in the root directory of the project)

python -m unittest discover

How to run a specific test file (note: in order for this to work, the test file must contain if __name__ == '__main__': unittest.main())

python -m tests.test_policy

How to run a single function within a specific test file

python -m tests.test_policy TestCNNPolicy.test_save_load

Benchmarks

"Benchmarks" refer to speed and profiling scripts. These are not run automatically. These are written and run to identify critical speed bottlenecks.

Speed problems are not always where you would expect them to be. Because readability and simplicity are goals of this project, we are avoiding premature optimization and instead opting to rely heavily on benchmarking scripts to identify slow sections of code and - importantly - to quantify the value of a proposed speedup.

As an example, see benchmarks/preprocessing_benchmark.py. It uses the cProfile module to run a function and saves the profiling results in a .prof file. Run it as

python -m benchmarks.preprocessing_benchmark

There are a number of tools for viewing and interpreting the results. See snakeviz for example.

Writing new benchmarking scripts and/or addressing major speed issues is another great way to get started contributing.

10. MCTS

Pages 11

- Home

- 01. Home

- 02. Code

- 03. Data

- 04. Neural Networks and Training

- 05. Supervised Policy Network (Phase I)

- 06. Reinforcement Policy Network (Phase II)

- 07. Value Network (Phase III)

- 08. Running models with GTP

- 09. Tests and Benchmarks

- 10. MCTS

Clone this wiki locally

Clone in DesktopDeepMind’s version of MCTS effectively reduces the depth and breadth of tree search by integrating convnets into AlphaGo: evaluating positions using a value network, and sampling actions using a policy network.

The tree is traversed by two-phase simulations: in-tree phase of each simulation begins at the root of the search tree and finishes when the simulation reaches a leaf node in L time steps, and second rollout phase of each simulation begins at leaf node and continues until the end of the game.

Each traversed node s in the search tree stores a set of statistics corresponding to a certain state, and its children nodes mapped with all legal actions with respect to that state,

{P(s, a), Nv(s, a), Nr(s, a), Wv(s, a), Wr(s, a), Q(s, a)}

which includes total leaf evaluations Wv(s, a) and rollout rewards Wr(s, a), accumulated over number of visit count N(s,a), and u(s,a) that is proportional to the prior probability P(s, a) but decays with repeated visits to encourage exploration P(s, a) / 1 + N(s, a), and Q(s, a) the overall evaluation of each state action, a weighted average of the Monte Carlo estimates, Q(s, a) = (1 − λ) Wv(s, a) / N(s, a)+ λ Wr(s, a) / N(s, a), that mixes together the value network and rollout evaluations with weighting parameter λ.

An instance of root state is created at the start of each simulation, updated implicitly by taking selected actions as search tree is traversed. and added to a queue for evaluation after in-tree phase simulation completes.

Multiple simulations are executed in parallel on separate threads proceeded in the four stages: selection, evaluation, backup and expansion. The search tree is descended in a forward pass while statistics are updated through a backward pass. Both are based on a children-parent relations(children nodes may be expanded from its ancestor, action value are updated to track the mean value of all evaluations of its children). As simulations keep running, the entire search tree structure is constructed along with all of its statistics being updated and retained.

At the end of search AlphaGo selects the action with maximum visit count(less sensitive to outliers of Q(s,a) values from one or two single simulations), the search tree is reused as game goes on. The child node corresponding to the played action becomes the new root node, the subtree below this child is retained along with all its statistics, while the remainder of the tree is garbage collected.

Selection. At each of time steps, t Evaluation. After reaching the Lth time step, the leaf state evaluation begins in two separate parts: one is to add leaf state to value network for evaluation; the other one is to keep playing until the end the game using fast rollout policy for action selections. There is no need to expand search tree or retain any statistics during rollout phase. After game terminates, the outcome is computed with final game score from the perspective of the current player at time step L: +1 for winning and −1 for losing. Backup. Two separate backward passes are initiated after leaf state evaluations complete. The statistics are updated in a backward pass through each step per simulation. At each in-tree step simulation, the rollout statistics are updated as if it has lost the games to discourage other threads from exploring the identical variation. At the end of the simulation, the rollout statistics are updated to replace the virtual losses by the outcome. Expansion. The leaf state may be expanded by adding the successor state to the search tree to update and retain all of its statistics, when the visit count of current leaf state exceeds a threshold. The threshold depends on the rate at which positions are added to the policy queue for prior probabilities evaluation matches the rate at which the GPUs evaluate the policy network.