[GNN Talks] A Roundup of Selected GNN-LOGS Talks

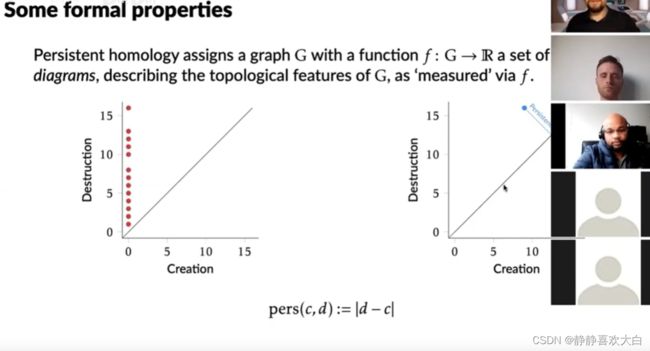

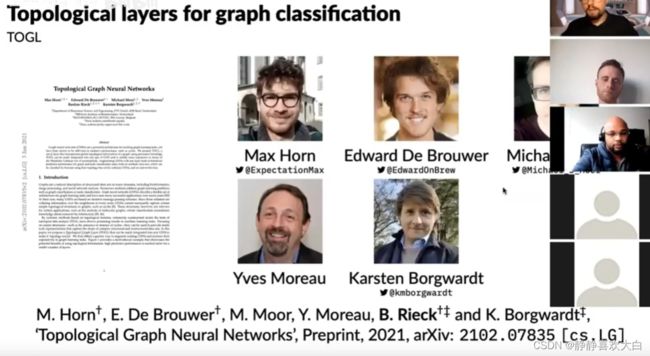

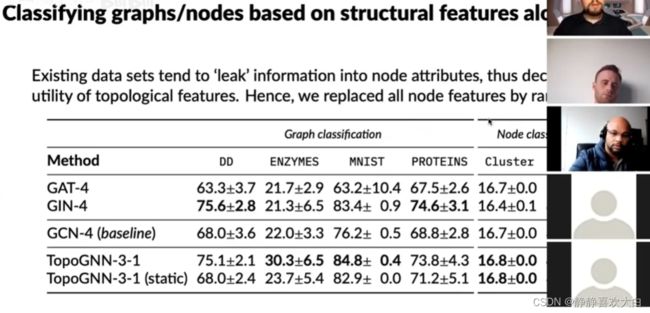

Bastian Rieck: Topology-Based Graph Representation Learning

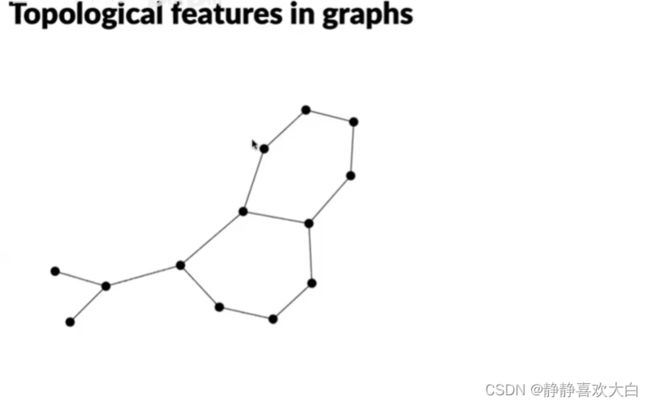

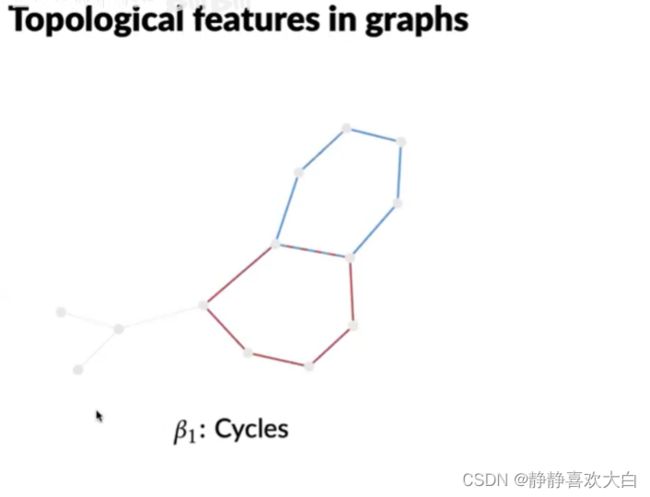

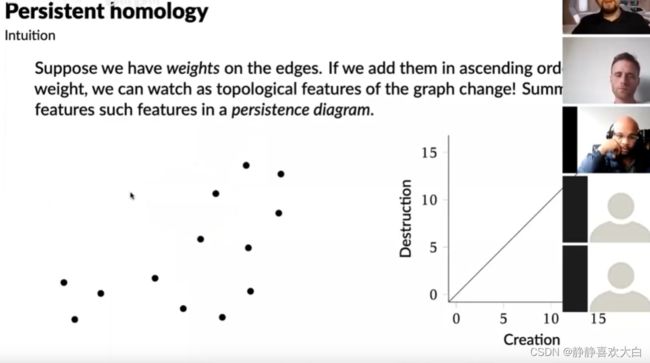

Fundamentals

Topological learning

Work based on topological learning

References

Bastian Rieck: Topology-Based Graph Representation Learning (Bilibili)

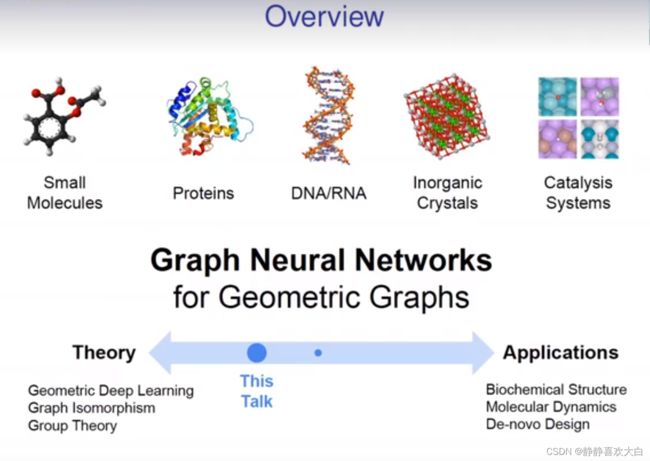

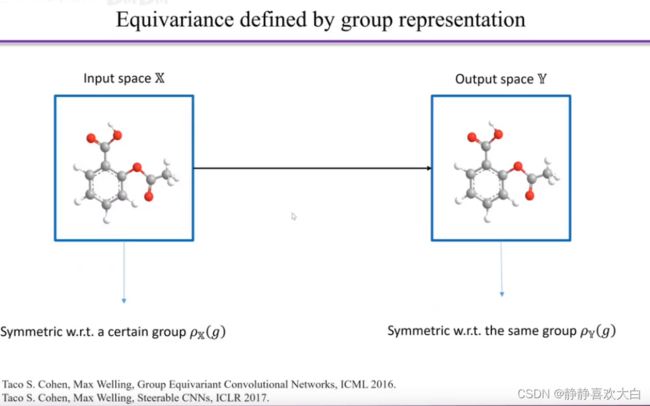

Chaitanya K. Joshi: Graph Neural Networks for Geometric Graphs

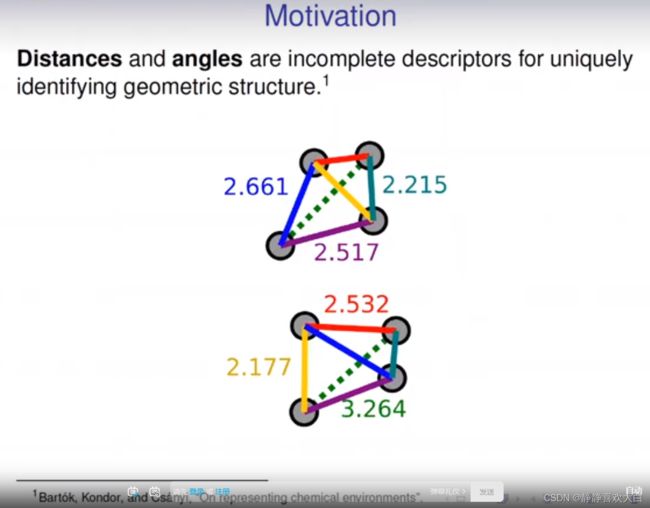

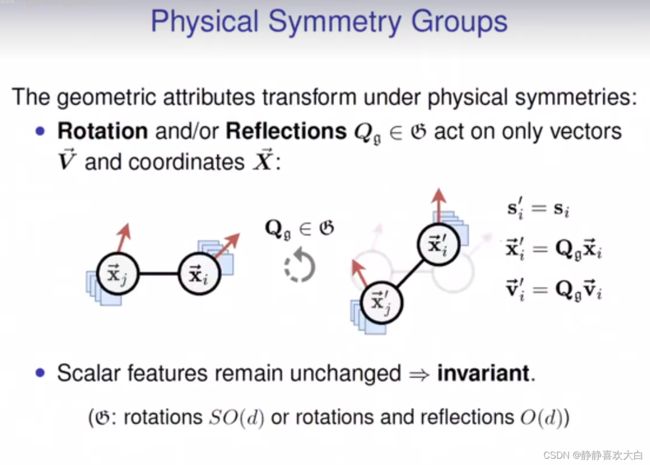

Background

Method

Geometric Graphs

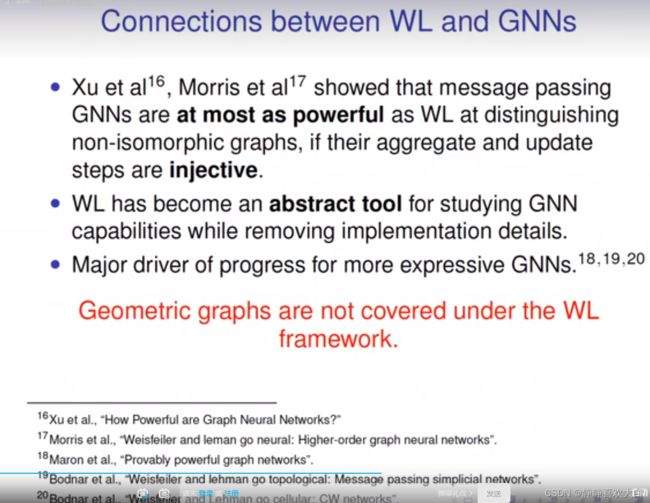

GNNs for Geometric Graphs

Universality and Discrimination

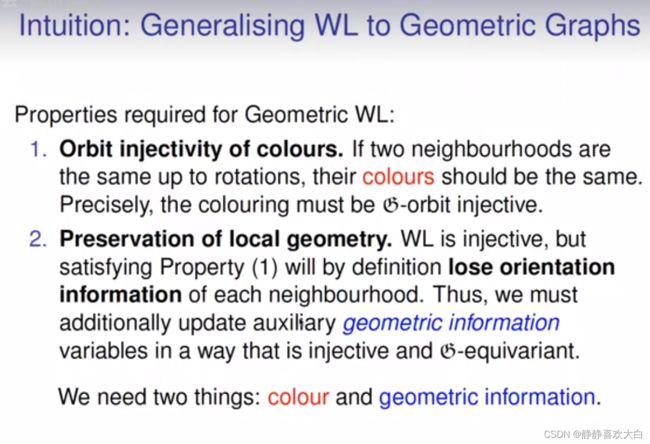

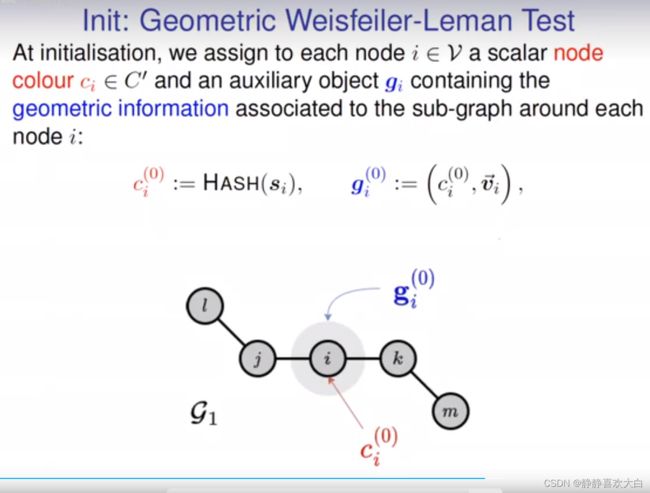

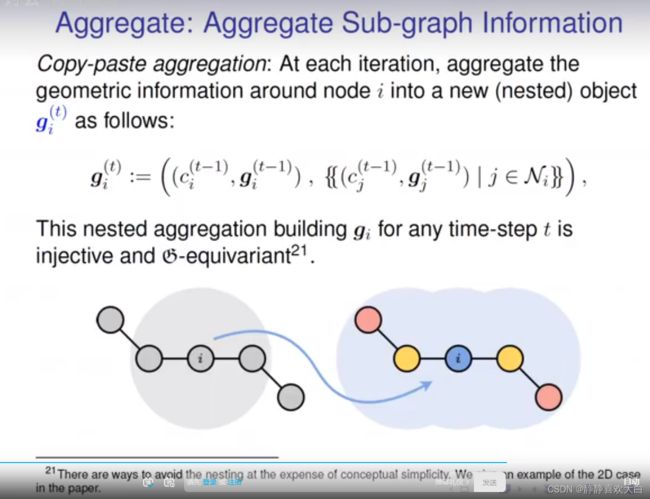

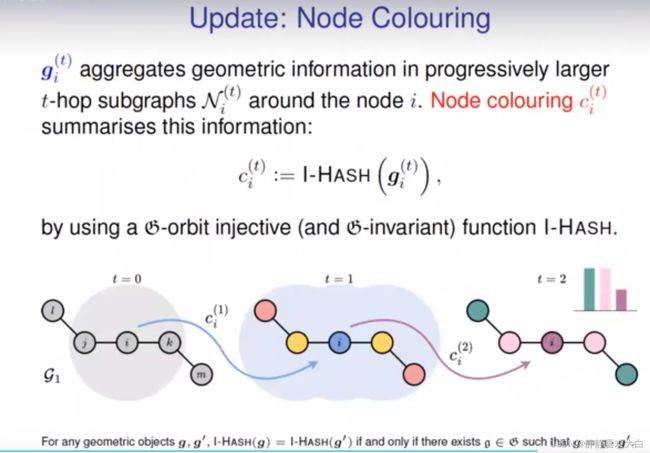

Geometric Weisfeiler-Leman Test

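Worth borrowing:

1) The citation style used in the talk

2) The color scheme of the figures

References

Graph Neural Networks for Geometric Graphs - Chaitanya K. Joshi, Simon V. Mathis (Bilibili)

Pinterest, Lingfei Wu: Deep Learning on Graphs and Natural Language Processing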

Abstract

There is a rich variety of NLP problems that are best expressed with graph structures. Owing to their power in modeling non-Euclidean data such as graphs, deep learning on graphs techniques (i.e., Graph Neural Networks (GNNs)) have opened a new door to solving challenging graph-related NLP problems and have already achieved great success. Despite this success, deep learning on graphs for NLP (DLG4NLP) still faces many challenges (e.g., automatic graph construction, graph representation learning for complex graphs, and learning mappings between complex data structures). This tutorial will cover relevant and interesting topics on applying deep learning on graphs techniques to NLP, including automatic graph construction for NLP, graph representation learning for NLP, advanced GNN-based models (e.g., graph2seq and graph2tree) for NLP, and the applications of GNNs in various NLP tasks (e.g., machine translation, natural language generation, information extraction, and semantic parsing). In addition, hands-on demonstration sessions will be included to help the audience gain practical experience in applying GNNs to challenging NLP problems using our recently developed open-source library, Graph4NLP, the first library that lets researchers and practitioners easily use GNNs for various NLP tasks.
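Automatic graph construction is the first challenge the abstract lists. As a minimal sketch of one common static heuristic (not necessarily what Graph4NLP implements), the snippet below turns a token sequence into a word co-occurrence graph by linking distinct tokens that appear within a sliding window. The function name `build_cooccurrence_graph` and its `window` parameter are hypothetical.

```python
from collections import defaultdict


def build_cooccurrence_graph(tokens, window=2):
    """Build an undirected, weighted word co-occurrence graph (hypothetical helper).

    Two distinct tokens are linked whenever they occur within `window`
    positions of each other; the edge weight counts how often that happens.
    Edges are keyed by the alphabetically sorted token pair.
    """
    edges = defaultdict(int)
    for i, w in enumerate(tokens):
        # Look ahead at most `window` positions to the right of position i.
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            u = tokens[j]
            if u != w:  # skip self-loops caused by repeated tokens
                edges[tuple(sorted((w, u)))] += 1
    return dict(edges)


tokens = "graph neural networks help natural language processing".split()
print(build_cooccurrence_graph(tokens, window=1))
```

A GNN-based model (for example a graph2seq encoder) would then consume this fixed graph; learned, or dynamic, graph construction is the main alternative to such hand-written heuristics.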

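Speaker bio

Dr. Lingfei Wu received his Ph.D. in Computer Science from the College of William & Mary, one of the so-called "Public Ivies" in the US. His research focuses on combining machine learning, representation learning, and natural language processing, in particular graph neural networks and their applications. He is currently an Engineering Manager (EM) at Pinterest, leading R&D on knowledge graphs and content understanding. Before that, he was the Principal Scientist of the JD.COM Silicon Valley Research Center, where he led a team of more than 30 machine learning/NLP scientists and software engineers building intelligent e-commerce personalization systems. He has authored a book on graph neural networks and published more than 100 papers in top conferences and journals, with nearly 3,000 Google Scholar citations (h-index 28, i10-index 68). The Graph4NLP library, whose development he led, has received 1,500+ stars and 180+ forks since its release in mid-2021 and is widely used in both academia and industry. He was previously a senior researcher at the IBM Thomas J. Watson Research Center, where he led a team of more than 10 research scientists developing cutting-edge GNN methods and systems, and he received the IBM Outstanding Technical Achievement Award three times. He is a co-inventor of more than 40 US patents; owing to their high commercial value, he received eight IBM Invention Achievement Awards and was named an IBM Master Inventor (class of 2020). He currently serves as an Associate Editor of IEEE TNNLS and ACM Transactions on Knowledge Discovery from Data, and regularly serves as SPC/AC for major AI/ML/NLP conferences, including KDD, EMNLP, IJCAI, and AAAI. Homepage: https://sites.google.com/a/email.wm.edu/teddy-lfwu/

Background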

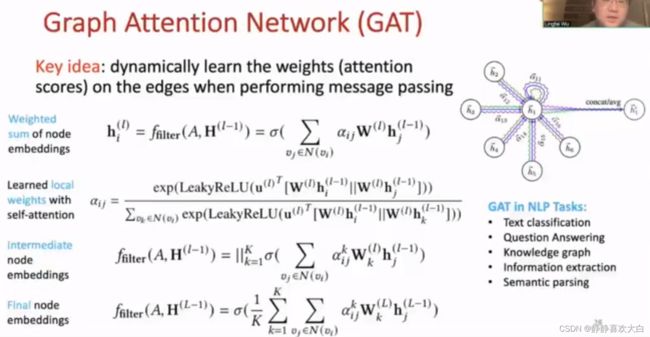

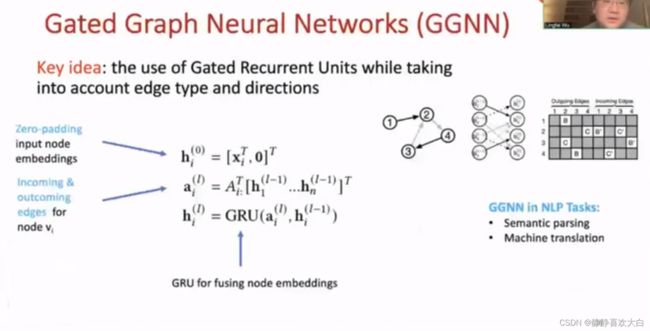

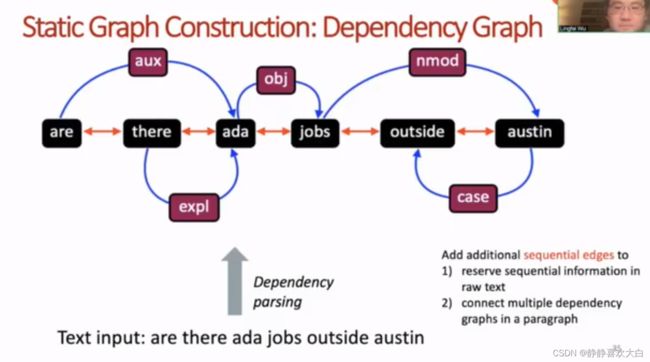

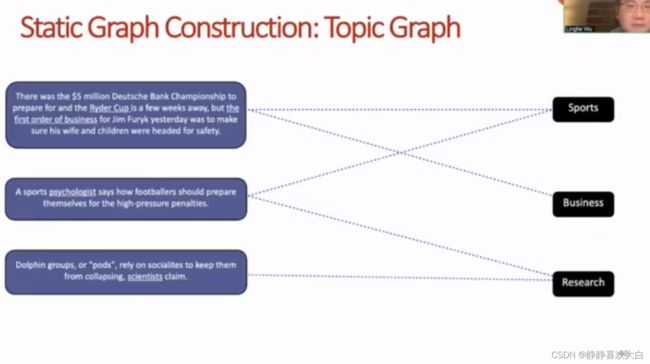

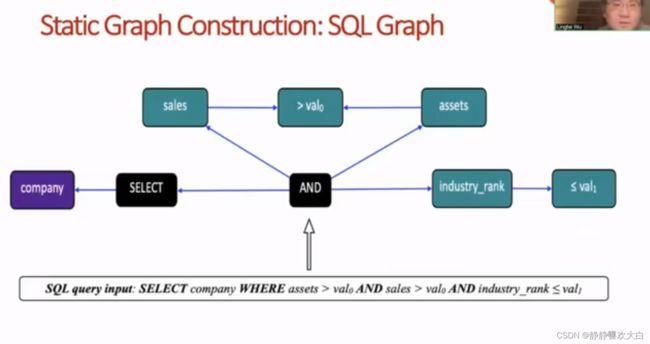

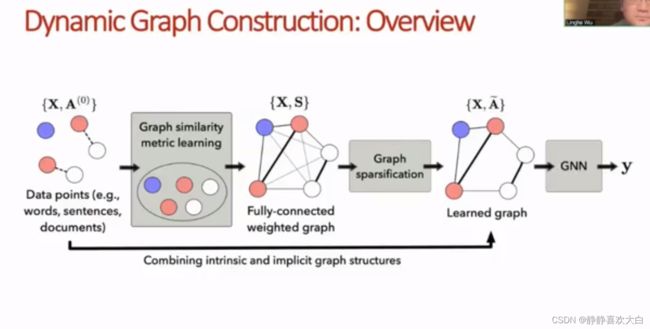

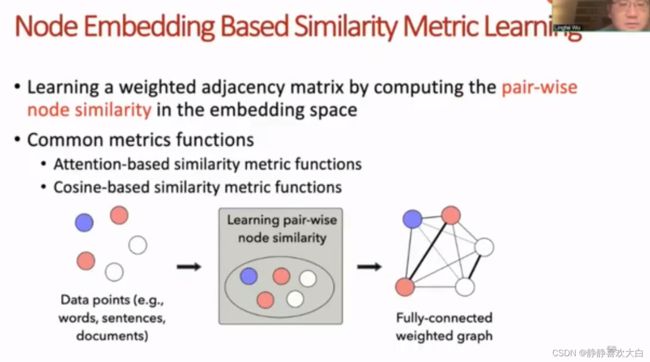

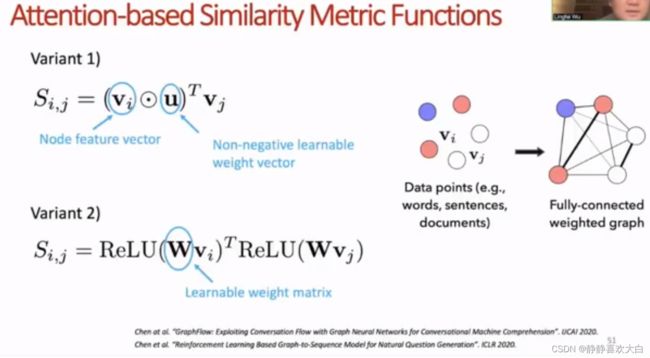

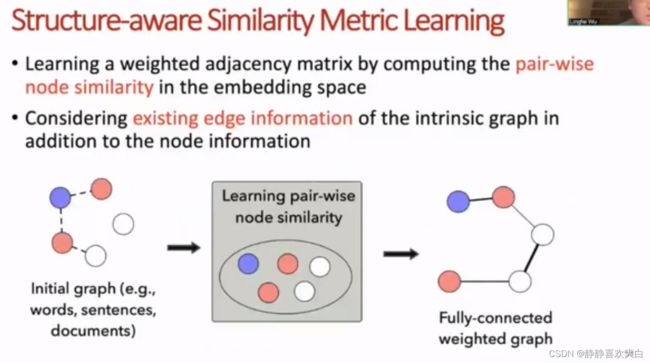

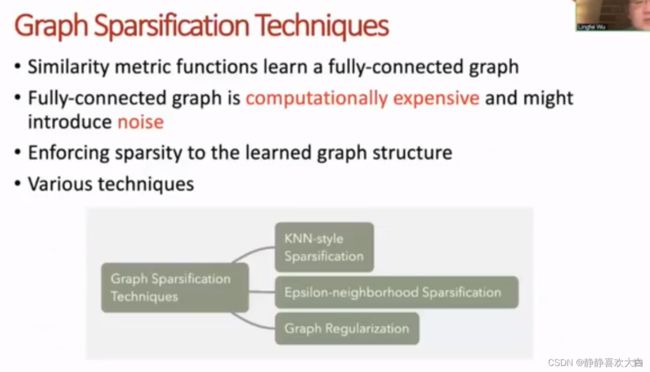

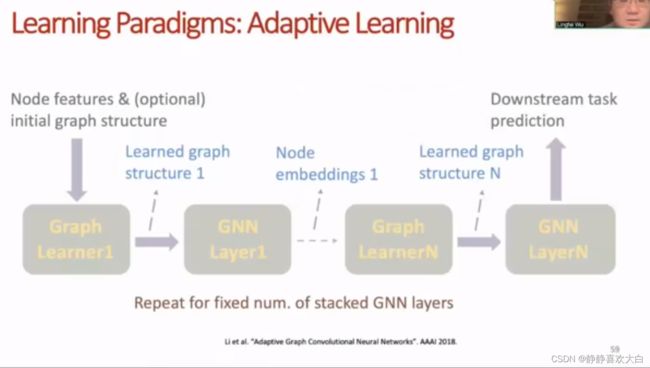

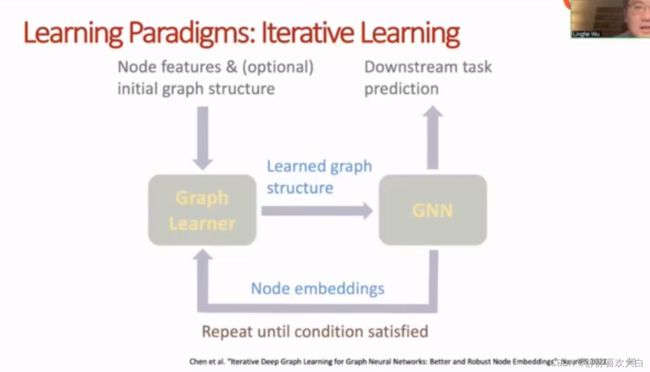

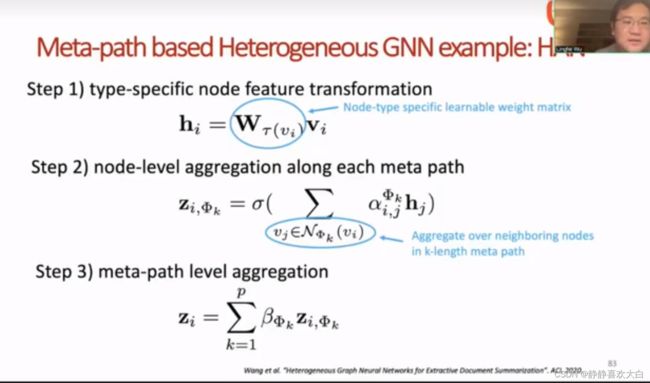

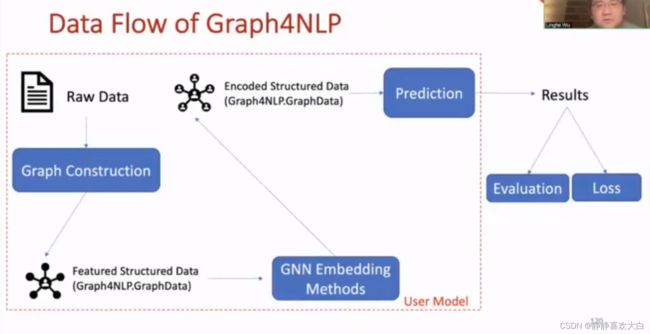

Method

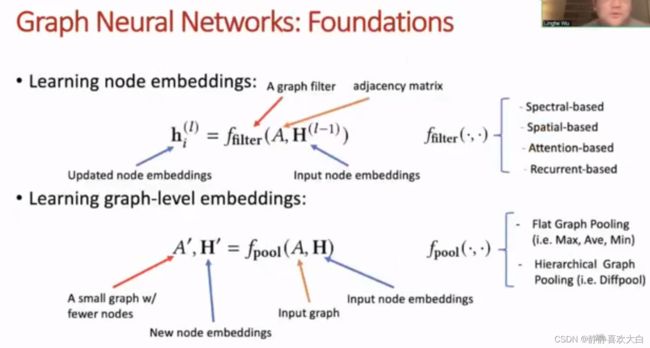

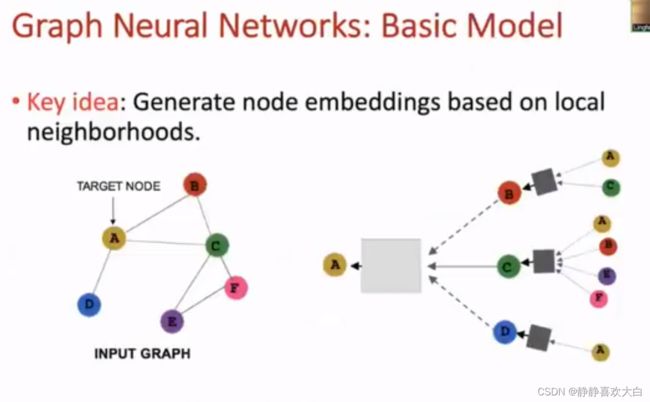

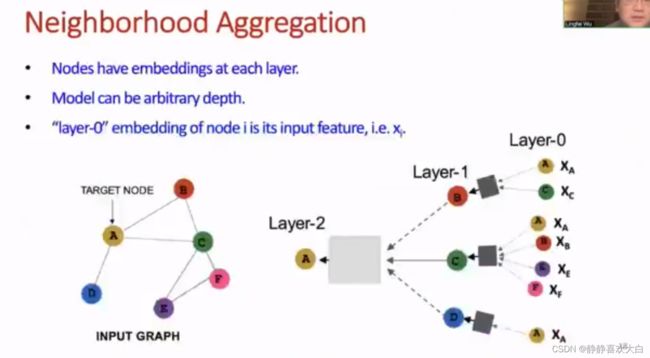

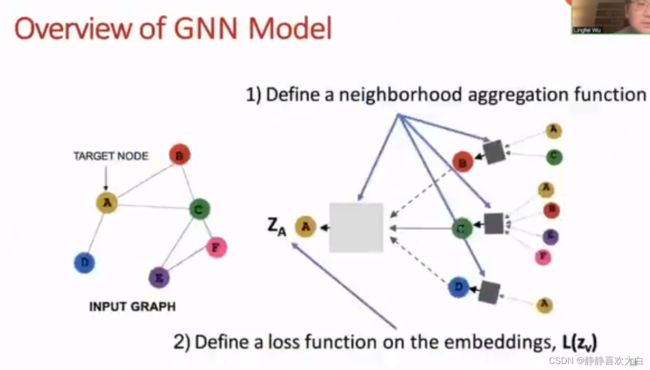

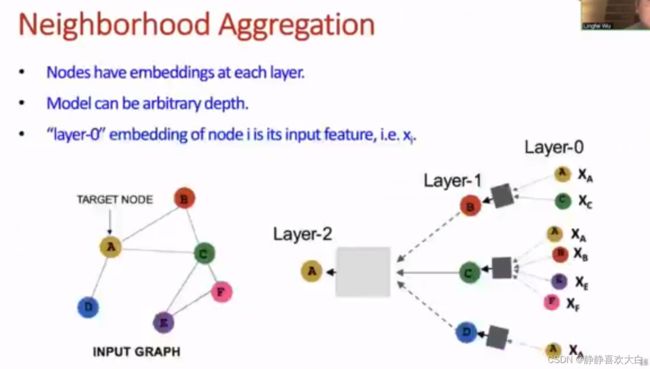

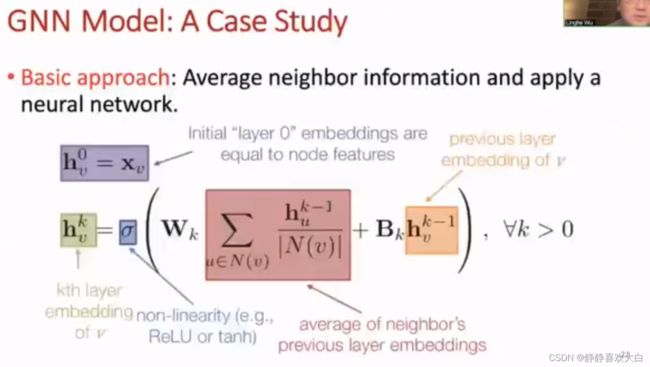

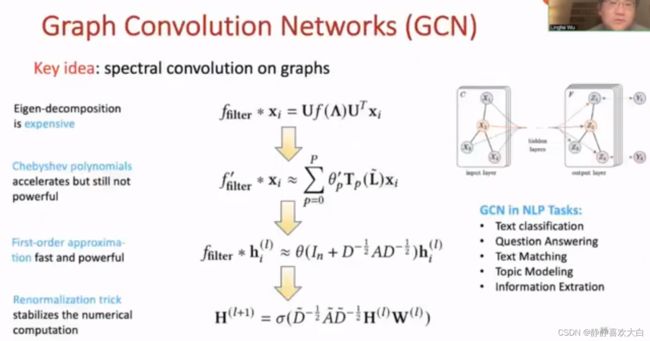

Fundamentals

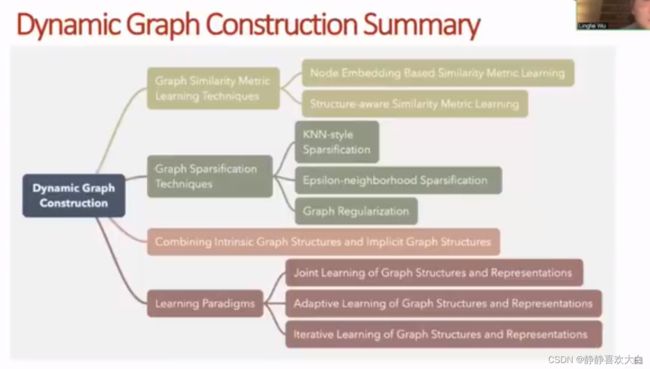

GNN4NLP

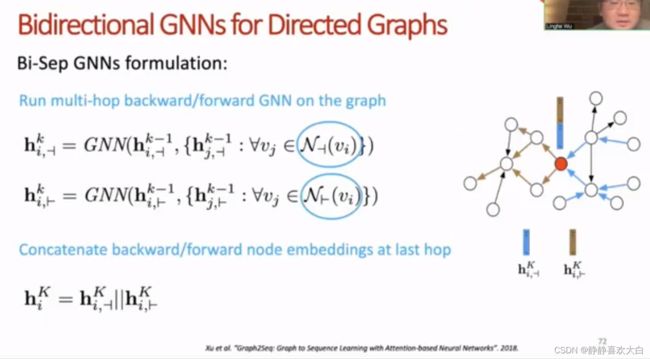

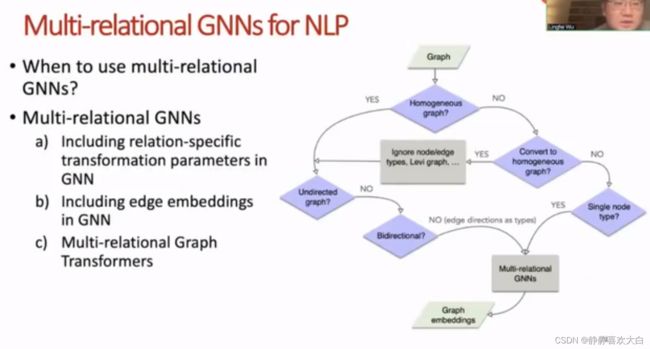

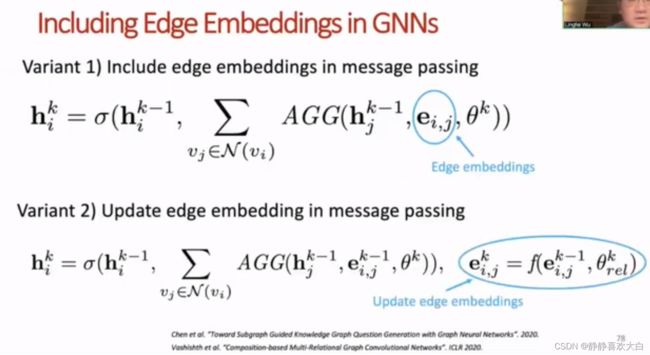

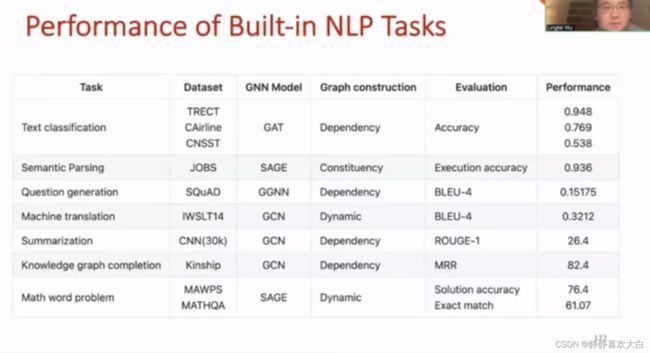

Applications

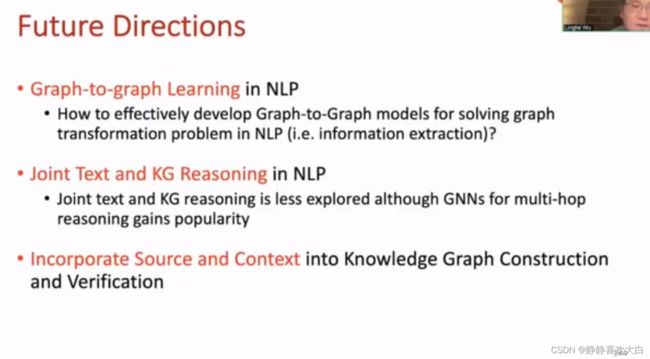

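Summary

1) A talk template worth reusing (especially the introduction and fundamentals, which can be borrowed almost wholesale)

2) Figures: timeline charts

3) Concise formulas: the Foundations section

4) Model summaries: the fundamentals section

References

LOGS Graph Learning Seminar, session 2022/11/29 || Pinterest, Lingfei Wu: Deep Learning on Graphs and Natural Language Processing (Bilibili)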

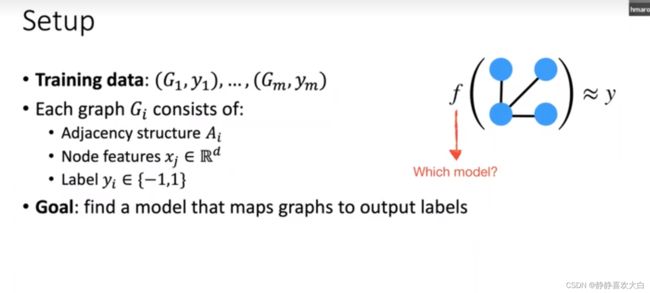

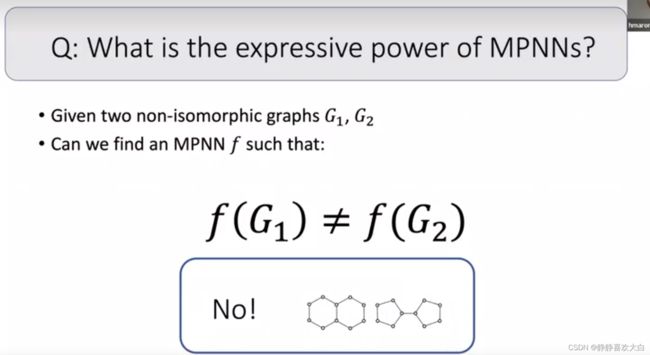

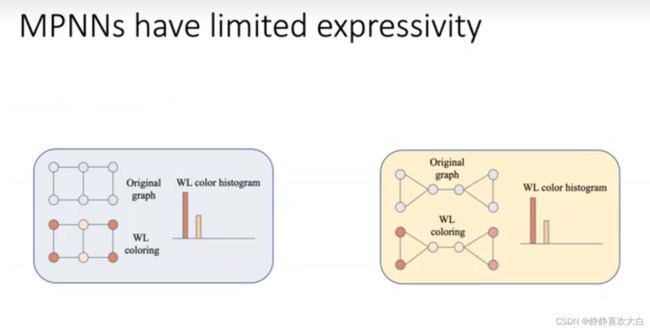

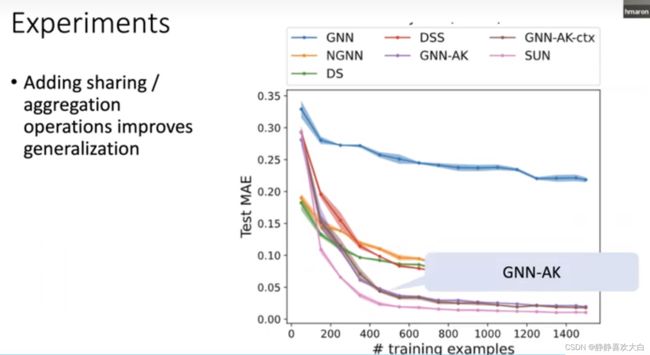

Subgraph-based expressive, efficient, and domain-independent graph learning

Abstract

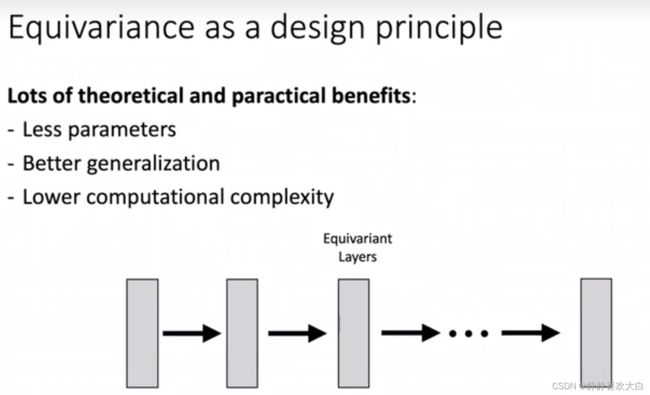

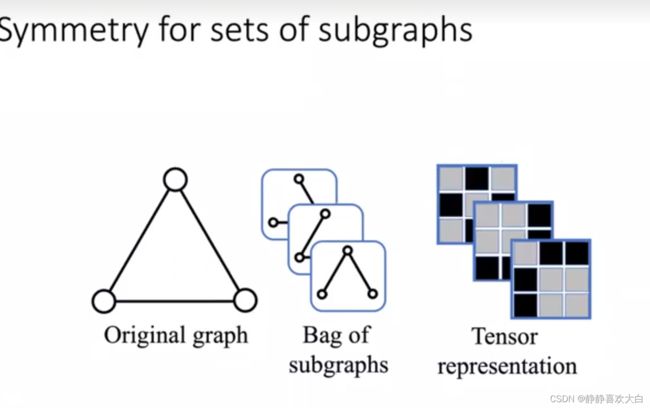

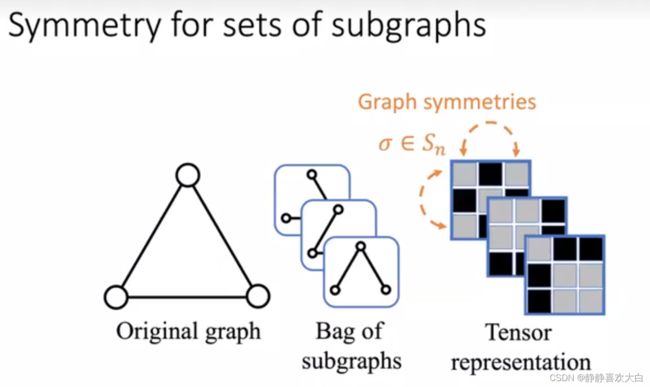

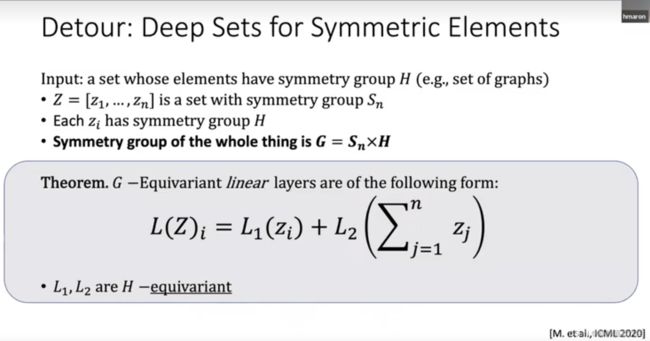

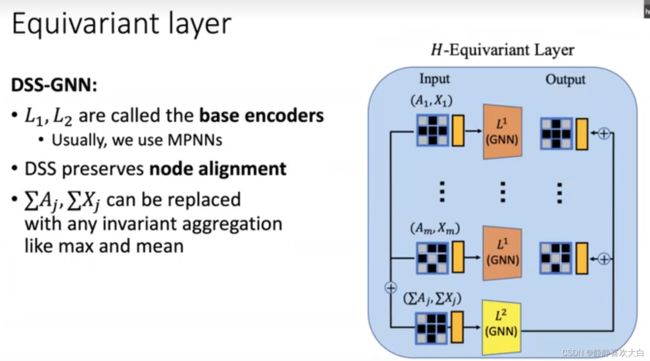

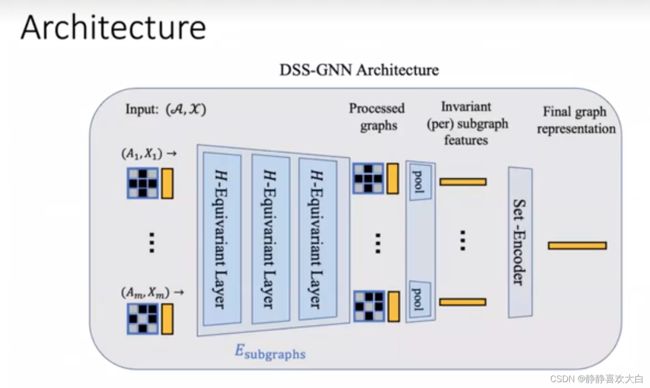

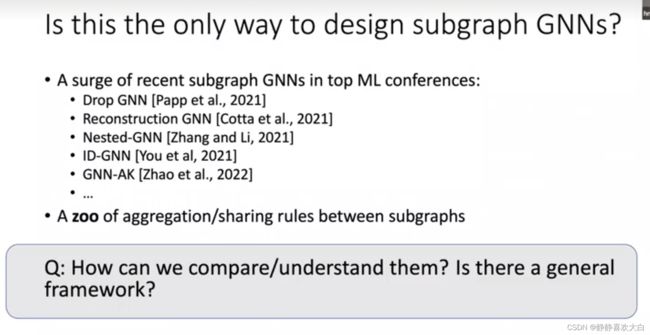

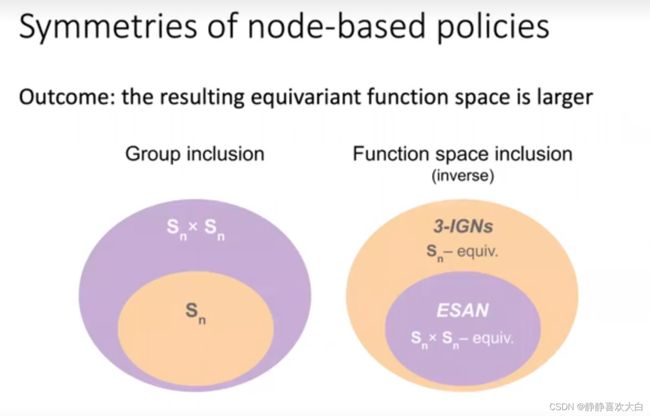

While message-passing neural networks (MPNNs) are the most popular architectures for graph learning, their expressive power is inherently limited. In order to gain increased expressive power while retaining efficiency, several recent works apply MPNNs to subgraphs of the original graph. As a starting point, the talk will introduce the Equivariant Subgraph Aggregation Networks (ESAN) architecture, which is a representative framework for this class of methods. In ESAN, each graph is represented as a set of subgraphs, selected according to a predefined policy. The sets of subgraphs are then processed using an equivariant architecture designed specifically for this purpose. I will then present a recent follow-up work that revisits the symmetry group proposed in ESAN and argues that a more precise choice can be made if we restrict our attention to a specific popular family of subgraph selection policies. We will see that, using this observation, one can draw a direct connection between subgraph GNNs and Invariant Graph Networks (IGNs), thus providing new insights into the expressive power and design space of subgraph GNNs.
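To make the subgraph idea concrete, here is a minimal sketch that assumes the node-deletion policy (one of the selection policies considered in ESAN) and uses 1-WL color refinement as a stand-in for an MPNN, since standard MPNNs are at most as expressive as 1-WL. It is not the ESAN architecture itself (there are no learned equivariant layers), and all function names are hypothetical. The example checks a classic failure case: a 6-cycle and two disjoint triangles look identical to plain 1-WL but become distinguishable once each graph is viewed as a bag of node-deleted subgraphs.

```python
from collections import Counter


def wl_invariant(adj, num_iters=3):
    """1-WL color refinement, standing in for an MPNN.

    adj maps each node to a list of neighbors. Colors are kept as nested
    tuples so they are canonical and comparable across different graphs.
    """
    colors = {v: 0 for v in adj}
    for _ in range(num_iters):
        # New color = (own color, sorted multiset of neighbor colors).
        colors = {v: (colors[v], tuple(sorted(colors[u] for u in adj[v]))) for v in adj}
    # Graph-level readout: the histogram of final node colors.
    return tuple(sorted(Counter(colors.values()).items()))


def delete_node(adj, v):
    """Node-deletion policy: remove v and every edge incident to it."""
    return {u: [w for w in nbrs if w != v] for u, nbrs in adj.items() if u != v}


def subgraph_invariant(adj, num_iters=3):
    """Run the same 'MPNN' on every node-deleted subgraph, then pool the bag."""
    return tuple(sorted(wl_invariant(delete_node(adj, v), num_iters) for v in adj))


def cycle(n, offset=0):
    """Adjacency lists of an n-cycle whose node ids start at `offset`."""
    return {offset + i: [offset + (i - 1) % n, offset + (i + 1) % n] for i in range(n)}


c6 = cycle(6)
two_triangles = {**cycle(3), **cycle(3, offset=3)}
print(wl_invariant(c6) == wl_invariant(two_triangles))              # plain 1-WL
print(subgraph_invariant(c6) == subgraph_invariant(two_triangles))  # bag of subgraphs
```

The first comparison prints True and the second False: removing a node turns the 6-cycle into a 5-node path, whereas it turns the two triangles into an edge plus a triangle, and 1-WL separates those after two refinement rounds.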

Biography

Haggai is a Senior Research Scientist at NVIDIA Research and a member of NVIDIA's TLV lab. His main field of interest is machine learning in structured domains.

In particular, he works on applying deep learning to sets, graphs, point clouds, and surfaces, usually by leveraging their symmetry structure. He completed his Ph.D. in 2019 at the Weizmann Institute of Science under the supervision of Prof. Yaron Lipman.

Haggai will be joining the Faculty of Electrical and Computer Engineering at the Technion as an Assistant Professor in 2023.

Background

Method

References

Subgraph-based expressive, efficient, and domain-independent graph learning (Bilibili)

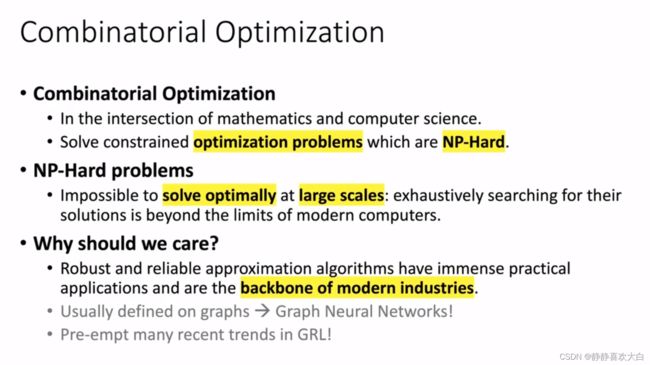

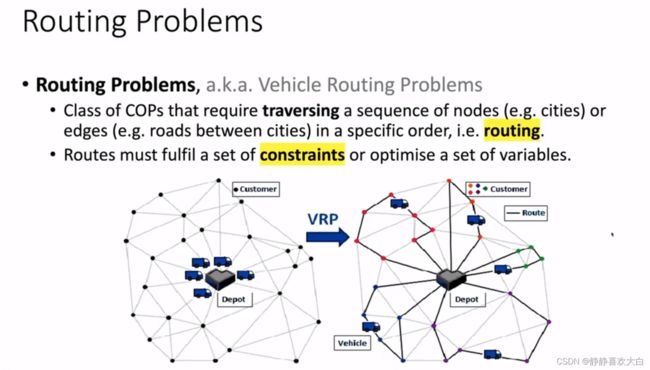

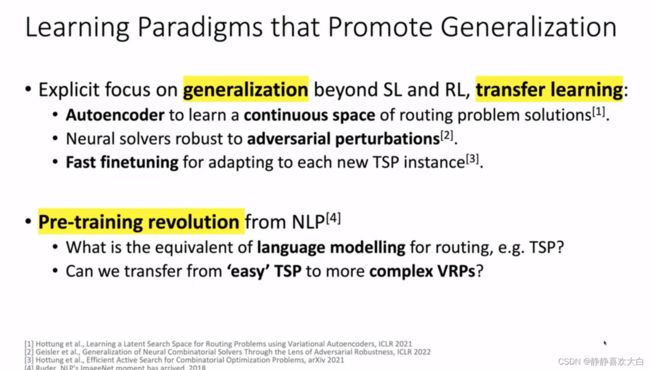

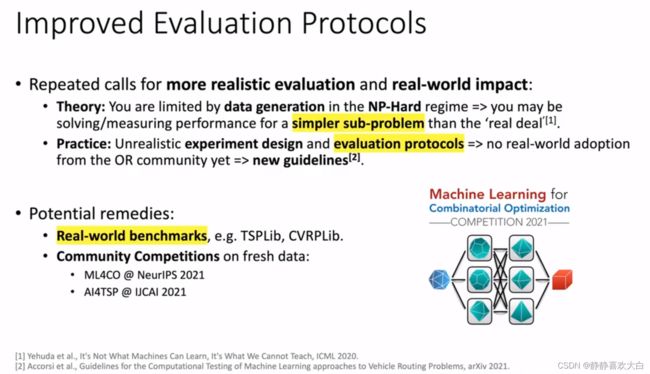

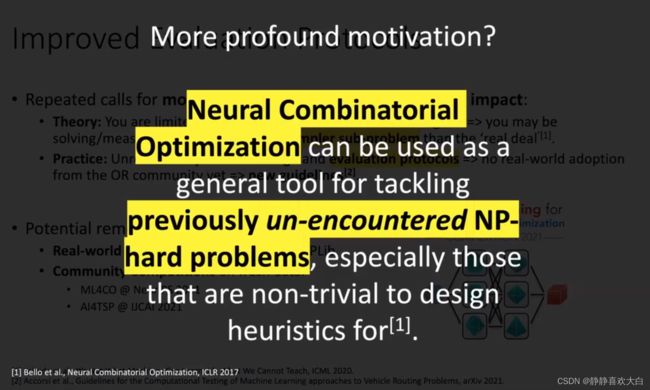

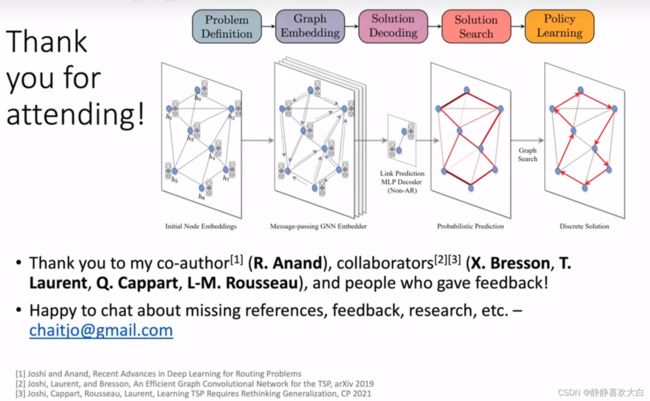

Chaitanya K. Joshi: Recent Advances in Deep Learning for Routing Problems

Background

Chaitanya K. Joshi

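Currently a Ph.D. student in Computer Science at the University of Cambridge. His main research interests are geometric deep learning, the theoretical foundations of graph neural networks, and their applications in biomedicine. The video comes from Chaitanya's YouTube channel and is shared with his permission. More of his blog posts are available on his personal website, https://www.chaitjo.com/.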

Video source: https://www.youtube.com/watch?v=KkUL0UETN0w&t=415s

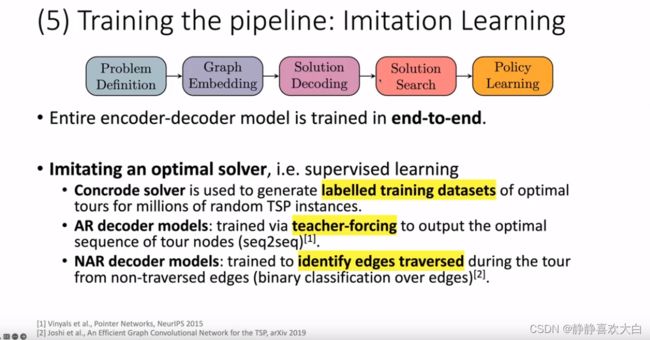

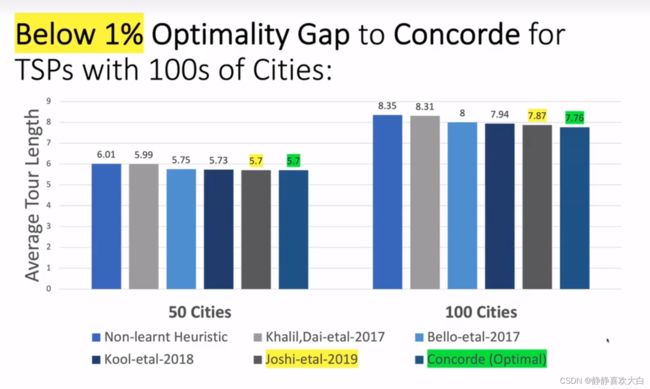

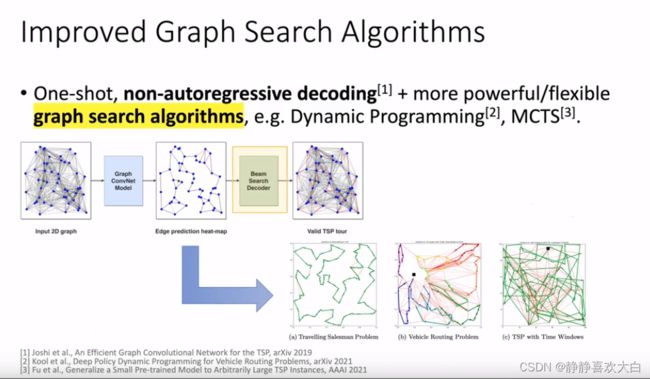

Method

Conclusions

References

Recent Advances in Deep Learning for Routing Problems, Chaitanya K. Joshi (Bilibili)

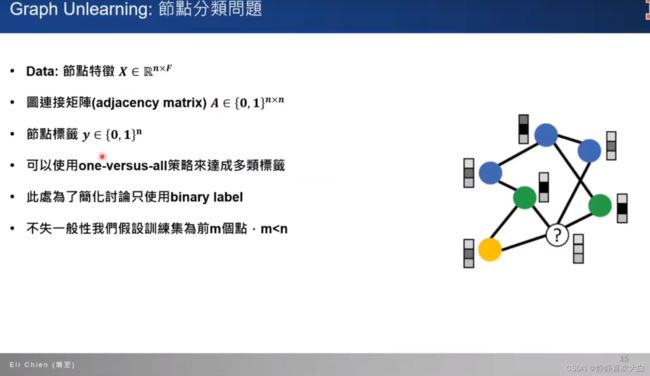

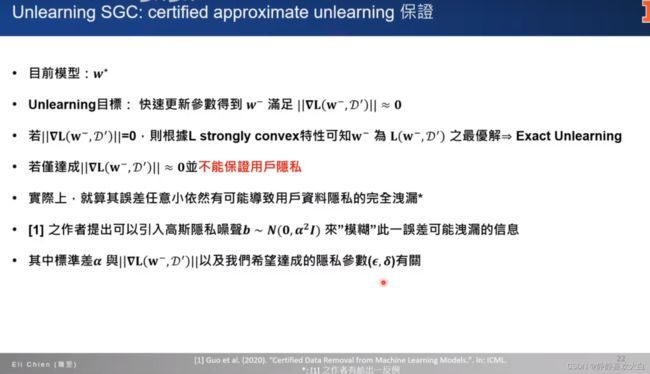

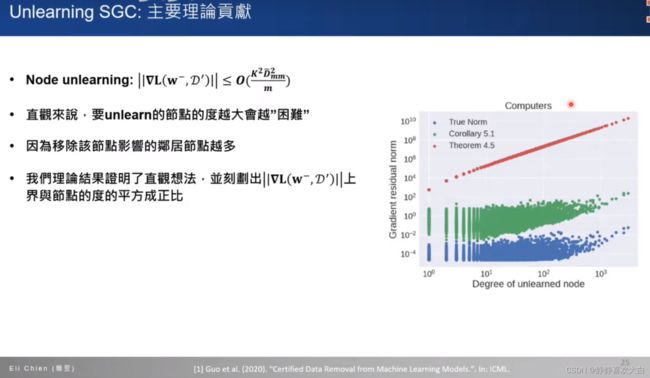

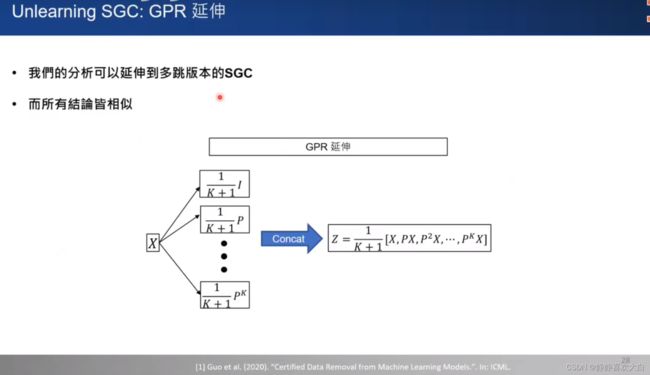

UIUC, 简翌: Graph unlearning - how to guarantee users' [right to be forgotten]

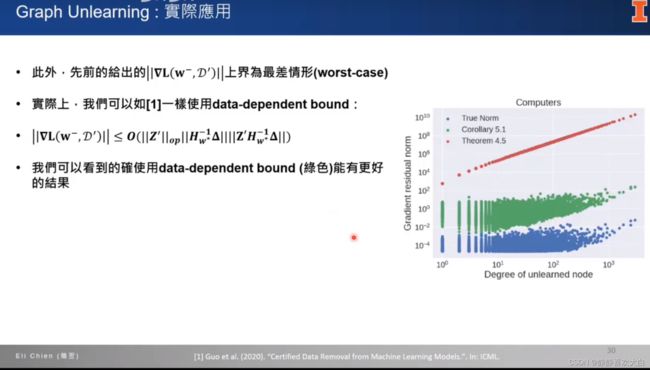

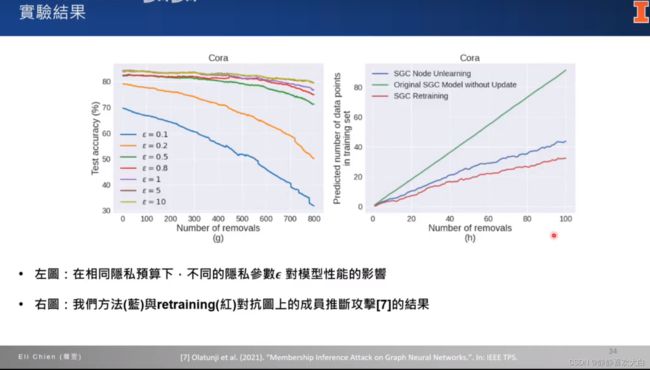

Background

Method

Conclusions

References

LOGS session 2022/11/27 || UIUC, 简翌: Graph unlearning - how to guarantee users' [right to be forgotten] (Bilibili)

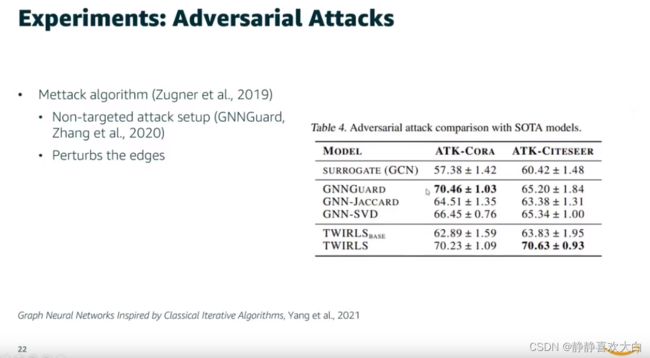

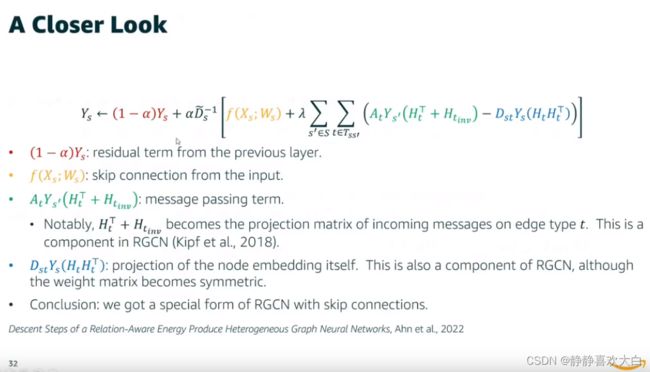

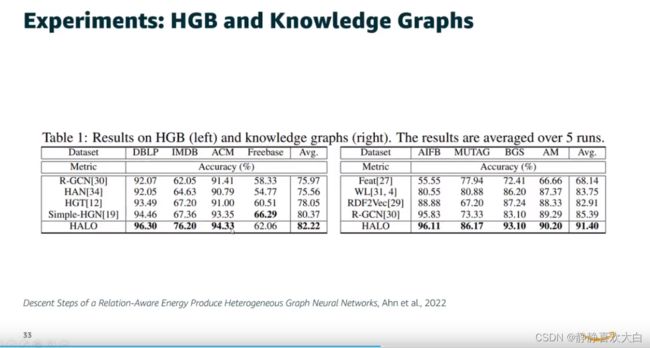

Amazon Shanghai DGL Team: From Classical Convex Optimization Algorithms to Graph Neural Networks

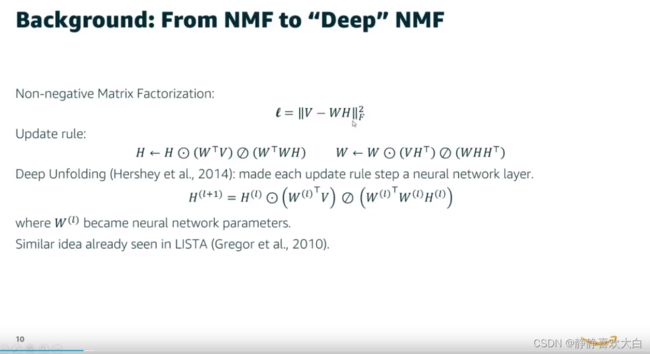

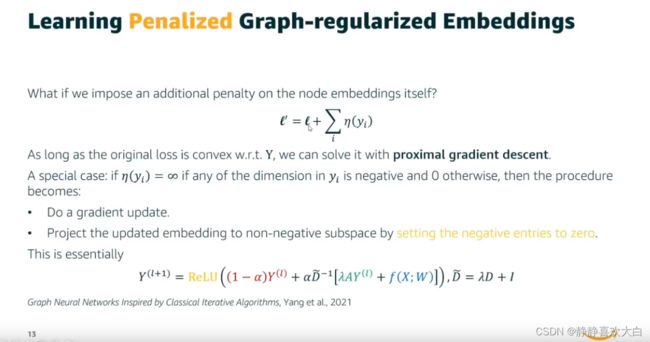

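Background

Method

Conclusions

Worth borrowing: the motivation, the mathematical derivation and summary, and the HGNN objective function

References

LOGS session 2023/01/07 || Amazon Shanghai DGL Team: From Classical Convex Optimization Algorithms to Graph Neural Networks (Bilibili)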

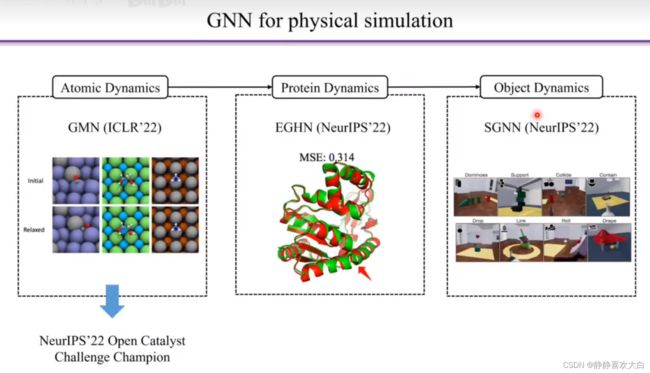

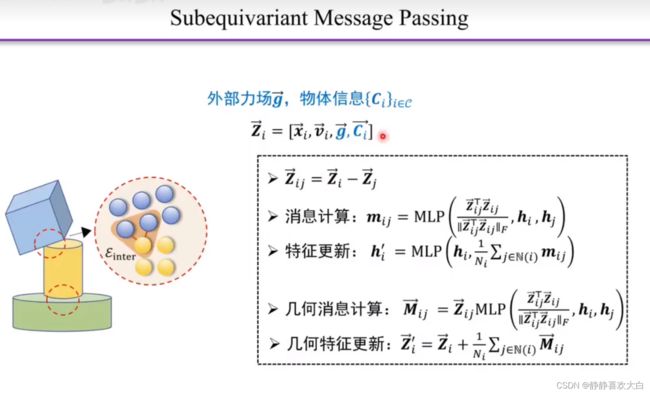

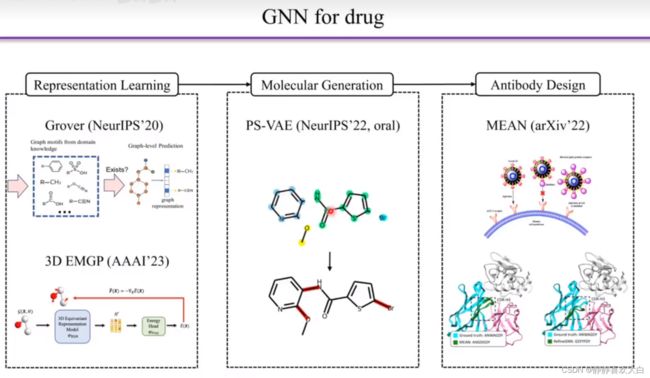

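Gaoling School of Artificial Intelligence, Renmin University of China, Wenbing Huang: Applications of Geometric Graph Neural Networks in Scientific Computing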

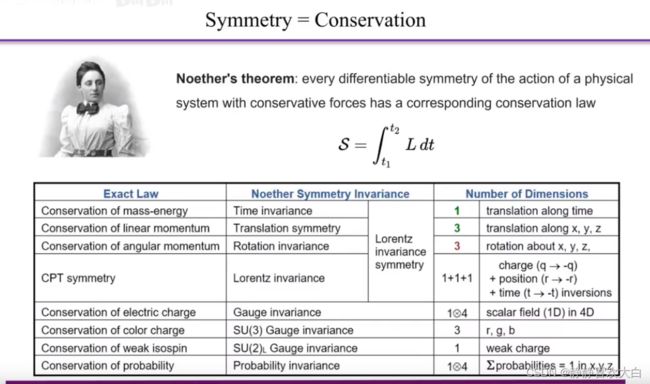

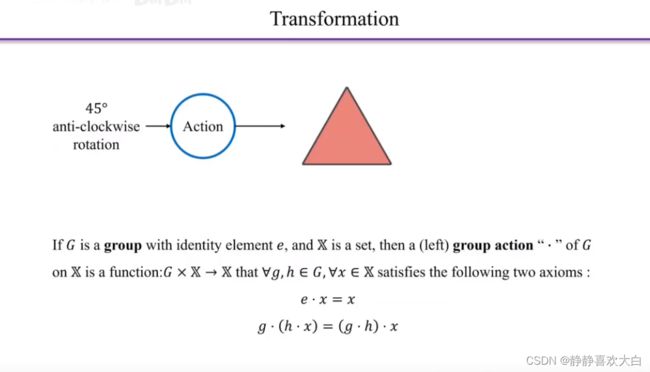

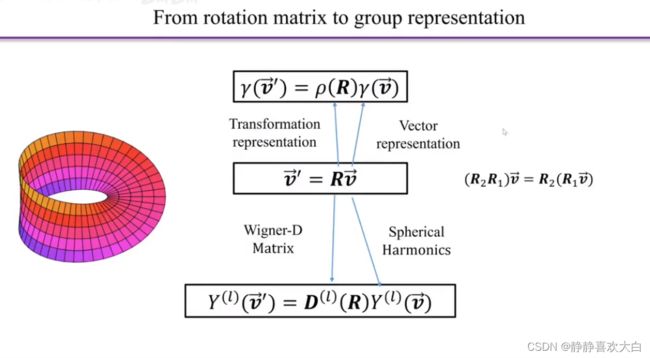

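Background

This session features Wenbing Huang from the Gaoling School of Artificial Intelligence, Renmin University of China, who gives a talk on applications of geometric graph neural networks in scientific computing.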

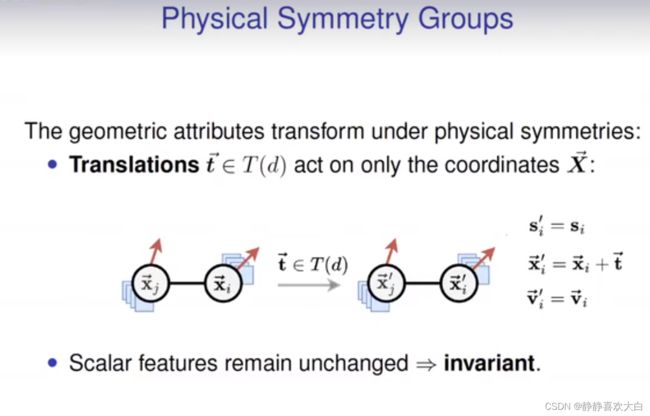

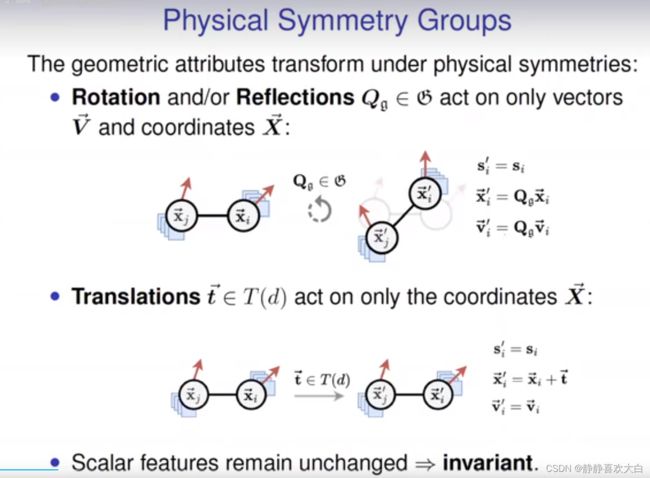

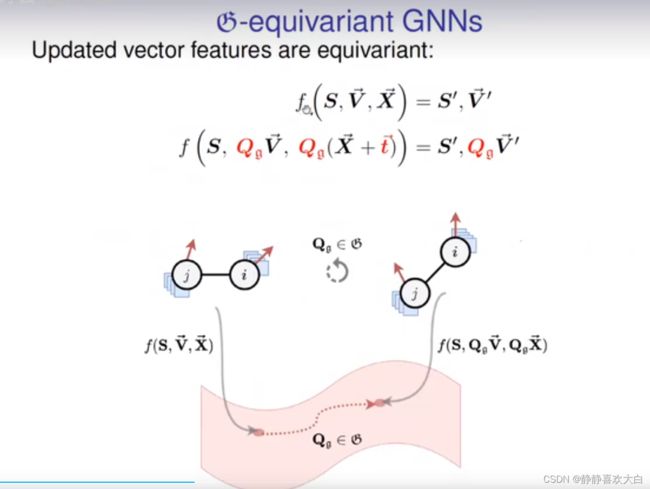

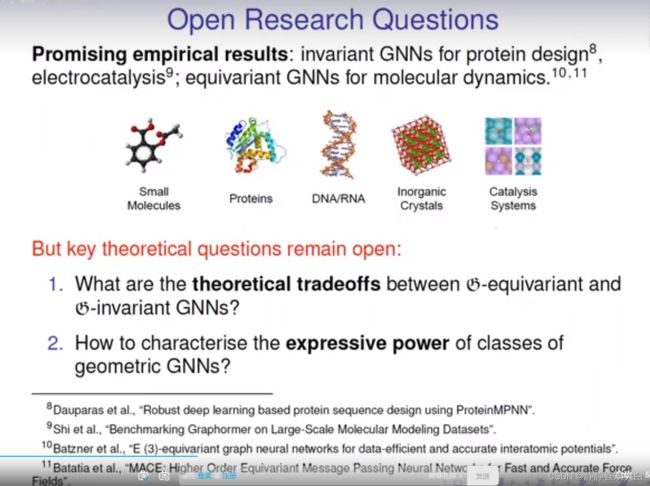

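Geometric graphs arise widely in problems across physics, chemistry, and other sciences, from molecular dynamics simulation to robot dynamics control. In recent years, more and more researchers have used equivariant graph neural networks to model and represent geometric graphs, so as to preserve the translation, rotation, and reflection symmetries of the physical world. Building on a systematic review of how equivariant GNNs have developed, this talk presents the team's applications in areas such as GNN for Physics and GNN for Drug, including modeling atomic systems, multi-body interactions, drug molecule representation learning, and drug molecule generation.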
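The symmetry requirement can be illustrated with a small numerical check. The sketch below is a single message-passing layer in the style of EGNN (Satorras et al., 2021), used here only as a well-known example of an E(3)-equivariant GNN and not as the speaker's team's model; the weight matrices are random placeholders and the name `egnn_layer` is hypothetical. Because messages see coordinates only through squared distances and positions move along relative vectors, applying a rotation or reflection plus a translation to the input transforms the output coordinates the same way and leaves the node features unchanged.

```python
import numpy as np


def egnn_layer(h, x, W_e, w_x, W_h):
    """One EGNN-style layer on a fully connected graph (illustrative sketch).

    h: (n, d) invariant node features; x: (n, 3) node coordinates.
    W_e: (2d+1, k) edge-message weights; w_x: (k, 1); W_h: (d+k, d).
    """
    n, d = h.shape
    diff = x[:, None, :] - x[None, :, :]                # (n, n, 3): x_i - x_j
    dist2 = np.sum(diff ** 2, axis=-1, keepdims=True)   # (n, n, 1): E(3)-invariant
    h_i = np.broadcast_to(h[:, None, :], (n, n, d))
    h_j = np.broadcast_to(h[None, :, :], (n, n, d))
    m = np.tanh(np.concatenate([h_i, h_j, dist2], axis=-1) @ W_e)  # (n, n, k) messages
    m = m * (1.0 - np.eye(n))[:, :, None]               # drop self-messages
    # Coordinates move along relative vectors: an E(3)-equivariant update.
    x_new = x + (diff * (m @ w_x)).sum(axis=1) / (n - 1)
    # Features aggregate only invariant messages: an E(3)-invariant update.
    h_new = h + np.tanh(np.concatenate([h, m.sum(axis=1)], axis=-1) @ W_h)
    return h_new, x_new


rng = np.random.default_rng(0)
n, d, k = 5, 4, 8
h = rng.normal(size=(n, d))
x = rng.normal(size=(n, 3))
W_e = rng.normal(size=(2 * d + 1, k))
w_x = rng.normal(size=(k, 1))
W_h = rng.normal(size=(d + k, d))

Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))  # random orthogonal matrix (rotation or reflection)
t = rng.normal(size=3)                         # random translation

h1, x1 = egnn_layer(h, x, W_e, w_x, W_h)
h2, x2 = egnn_layer(h, x @ Q.T + t, W_e, w_x, W_h)
print(np.allclose(h1, h2), np.allclose(x1 @ Q.T + t, x2))
```

Both checks hold because every quantity fed to the nonlinearities is built from pairwise distances, which orthogonal transforms and translations leave unchanged.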

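Method

...

Conclusions

References

LOGS session 2023/01/15 || Gaoling School of Artificial Intelligence, Renmin University of China, Wenbing Huang: Applications of Geometric Graph Neural Networks in Scientific Computing (Bilibili)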

CUHK MISC Lab, Yankai Chen: Graph Representation Binarization for Online Top-K Recommendation

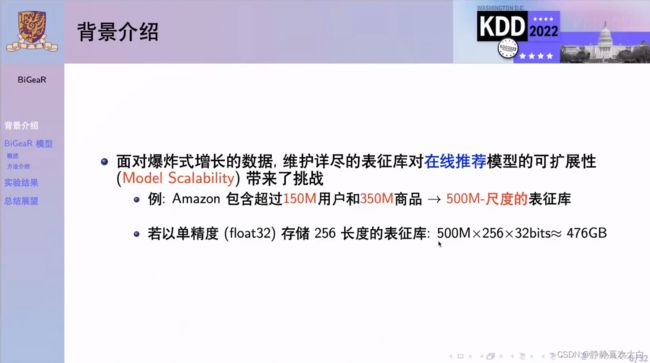

Background

Team introduction

About this talk

Topic

Graph Representation Binarization for Online Top-K Recommendation

Abstract

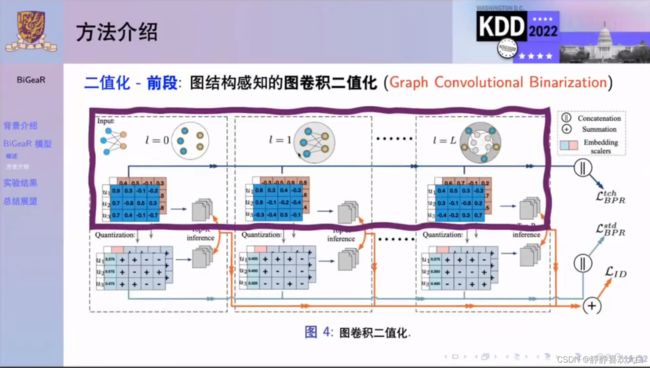

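Learning vectorized representations is at the core of many recommender-system models for user-item matching. To enable fast online inference, representation binarization, which embeds latent object features as finite sequences of binary digits, has recently shown potential for reducing memory and computation costs. However, existing work focuses only on the numerical conversion and ignores the accompanying information loss, which leads to a marked drop in model performance. To address this problem, we propose a novel and effective framework for graph representation binarization. We introduce multi-faceted quantization reinforcement techniques at the early, intermediate, and late stages of binarized representation learning, which largely preserves the information content of the binarized representations. Besides reducing the memory footprint, the framework also enables reliable online inference acceleration through bitwise operations, offering additional flexibility for real-world deployment. Empirical results on five real-world datasets show that our method achieves roughly 22% to 40% improvement over state-of-the-art binarized-representation-based recommender models. Meanwhile, compared with state-of-the-art full-precision models, the proposed method reaches about 95% to 102% of their predictive capability while reducing inference time and representation storage costs.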
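The bitwise inference speed-up mentioned above rests on a standard identity: for two codes a, b in {-1, +1}^d, the inner product equals d - 2 * Hamming(a, b), and the Hamming distance is the popcount of the XOR of their bitmasks. The sketch below assumes plain sign binarization, a deliberate simplification that is not the talk's multi-faceted quantization reinforcement framework; all function names are hypothetical.

```python
import numpy as np


def binarize(emb):
    """Sign binarization: map real-valued embeddings to {-1, +1} codes."""
    return np.where(emb >= 0, 1, -1)


def pack(code):
    """Pack a 1-D {-1, +1} code into a Python int (bit i set where code[i] == +1)."""
    bits = 0
    for i, v in enumerate(code):
        if v > 0:
            bits |= 1 << i
    return bits


def bitwise_score(a_bits, b_bits, dim):
    """Inner product of two packed codes: dim - 2 * popcount(a XOR b)."""
    return dim - 2 * bin(a_bits ^ b_bits).count("1")


def top_k(user_bits, item_bits, dim, k):
    """Rank items for one user by bitwise score; return the k best item indices."""
    scores = [bitwise_score(user_bits, b, dim) for b in item_bits]
    return sorted(range(len(item_bits)), key=lambda i: -scores[i])[:k]


rng = np.random.default_rng(0)
dim = 64
user = binarize(rng.normal(size=dim))
items = binarize(rng.normal(size=(10, dim)))

user_bits = pack(user)
item_bits = [pack(row) for row in items]
print(top_k(user_bits, item_bits, dim, k=3))
```

A deployed system would typically store codes in fixed-width machine words so that XOR and popcount map to single CPU instructions; Python ints are used here only for readability.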

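Speaker

Yankai Chen is currently a fourth-year Ph.D. student at The Chinese University of Hong Kong. His research interests focus on information extraction problems and applications related to search and recommendation, including quantization techniques for neural networks, optimization of graph-based recommender systems, and neural ranking models based on natural language understanding. He is also interested in traditional graph data mining, such as the community retrieval problem.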

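Method

Conclusions

Worth borrowing:

The slide design style; the way experimental results are described; excellent figures (both the experiments and the method framework)

References

CUHK MISC Lab, Yankai Chen: Graph Representation Binarization for Online Top-K Recommendation (Bilibili)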